Kaggle Titanic survival prediction challenge

This is one of Kaggle's Getting Started prediction competitions.

It is a simple, introductory competition aimed at beginners. My best submission reached roughly the top 8% of the leaderboard. To review and consolidate what I learned, I will split the write-up into three parts:

- **Kaggle Titanic survival prediction challenge: data analysis**

- **Kaggle Titanic survival prediction challenge: feature engineering**

- **Kaggle Titanic survival prediction challenge: model building, hyperparameter tuning, and ensembling**

Prerequisite knowledge

- numpy

- pandas

- matplotlib

- seaborn

- sklearn

**Competition page: [Titanic: Machine Learning from Disaster](https://www.kaggle.com/c/titanic)**

The sinking of the Titanic

On April 15, 1912, during her maiden voyage, the RMS Titanic, widely considered "unsinkable", sank after colliding with an iceberg.

Unfortunately, there were not enough lifeboats on board for everyone to use, resulting in the death of 1502 of the 2224 passengers and crew. Although there is some luck in surviving, it seems that some people are more likely to survive than others.

In this challenge, we ask you to build a predictive model that answers the question "what kinds of people were more likely to survive?" using passenger data (name, age, gender, socio-economic class, etc.).

Task analysis: this is a binary classification task. The model predicts whether each passenger survived.

Data set

- Training set: 891 × 12, i.e. 891 samples with 11 features plus 1 target column

- Test set: 418 × 11, i.e. 418 samples with 11 features

Overview:

- PassengerId: passenger ID. An ID number carries little information, so it is a candidate for deletion

- Survived: the target label. 1 means survived, 0 means did not survive

- Pclass: ticket class, one of 1, 2, 3

- Name: passenger name. Names carry titles that may indicate social rank, so the feature may be meaningful

- Sex: gender. Were women given priority?

- Age: age. Were younger passengers more likely to survive?

- SibSp: number of siblings / spouses aboard the Titanic. To be examined

- Parch: number of parents / children aboard the Titanic. To be examined

- Ticket: ticket number. To be examined

- Fare: ticket fare. Passengers who paid more may have been treated better

- Cabin: cabin number. Survival may differ between cabins

- Embarked: port of embarkation. C = Cherbourg; Q = Queenstown; S = Southampton

Code implementation

Import related libraries

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

import warnings

warnings.filterwarnings('ignore')

seed =2020

Data overview

1. Loading data: pd.read_csv() and pd.read_table()

   - pd.read_csv(): reads a file delimited by ',' into a DataFrame. Intended for CSV files (comma-separated values)

   - pd.read_table(): reads a file delimited by '\t' into a DataFrame. Intended for TSV files (tab-separated values)

   - The two functions are essentially interchangeable: the sep parameter selects the delimiter

   For example, to read a TSV file with read_csv(): df = pd.read_csv(file_path, sep='\t')

2. For large files or limited memory, read the file in chunks: the chunksize parameter sets the number of rows per chunk, and read_csv() then returns an iterator over DataFrames

df = pd.read_csv(file_path, chunksize=100)

for chunk in df:  ## each iteration yields a DataFrame with up to 100 rows
    print(chunk)

3. More common parameters
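
The loading options described above can be tried without any file on disk. The following is a minimal sketch on a small made-up tab-separated string (not the Titanic data); the sample values and the choice of `usecols`, `dtype` and `nrows` as examples of commonly used parameters are assumptions for illustration.

```python
import io
import pandas as pd

# Made-up tab-separated text standing in for a file on disk (hypothetical data)
tsv_text = "PassengerId\tSex\tAge\n1\tmale\t22\n2\tfemale\t\n3\tfemale\t26\n4\tmale\t35\n"

# read_csv reads tab-separated data once sep is given explicitly
df_tsv = pd.read_csv(io.StringIO(tsv_text), sep="\t")

# With chunksize, read_csv returns an iterator of DataFrames of at most 2 rows each
chunk_rows = [len(chunk) for chunk in pd.read_csv(io.StringIO(tsv_text), sep="\t", chunksize=2)]

# usecols, dtype and nrows limit which columns and rows are loaded and how they are typed
df_small = pd.read_csv(
    io.StringIO(tsv_text),
    sep="\t",
    usecols=["PassengerId", "Age"],
    dtype={"PassengerId": "int32"},
    nrows=3,
)

print(df_tsv.shape, chunk_rows, df_small.shape)
```

The empty Age field in the second data row is parsed as a missing value, which is the same mechanism that produces the missing values examined later.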

## Renaming the feature columns to Chinese when loading the data is not recommended: the labels may come out garbled in plots

train_df = pd.read_csv('data_train.csv')

test_df = pd.read_csv('data_test.csv')

## Preview the first 5 rows of the data

train_df.head()

![Output of train_df.head()](https://img-blog.csdnimg.cn/20200818202028525.png?x-oss-process=image/watermark,type_ZmFuZ3poZW5naGVpdGk,shadow_10,text_aHR0cHM6Ly9ibG9nLmNzZG4ubmV0L3dlaXhpbl80MzUzMjAwMA==,size_16,color_FFFFFF,t_70#pic_center)

test_df.head()

![Output of test_df.head()](https://img-blog.csdnimg.cn/20200818202052866.png?x-oss-process=image/watermark,type_ZmFuZ3poZW5naGVpdGk,shadow_10,text_aHR0cHM6Ly9ibG9nLmNzZG4ubmV0L3dlaXhpbl80MzUzMjAwMA==,size_16,color_FFFFFF,t_70#pic_center)

- DataFrame.info(): gives a brief overview of the data, including the number of non-null values per column, the dtype of each column, and the number of columns

- DataFrame.describe(): outputs summary statistics for the numerical features
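
To make the difference between the two methods concrete, here is a small sketch on a made-up frame (hypothetical values, not the Titanic data). It captures the `info()` report in a buffer and computes the same facts by hand, then shows that `describe()` covers only the numeric column by default.

```python
import io
import pandas as pd

# Made-up frame: one numeric and one text column, each with a missing value
toy = pd.DataFrame({
    "Age": [22.0, None, 26.0, 35.0],
    "Sex": ["male", "female", None, "male"],
})

# info() writes its report into the buffer when buf is given
buffer = io.StringIO()
toy.info(buf=buffer)
info_text = buffer.getvalue()

# The same facts computed directly
non_null_counts = toy.notnull().sum()
column_dtypes = toy.dtypes

# describe() summarises numeric columns only by default
stats = toy.describe()

print(info_text)
print(stats)
```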

train_df.info()

![Output of train_df.info()](https://img-blog.csdnimg.cn/20200818202306112.png?x-oss-process=image/watermark,type_ZmFuZ3poZW5naGVpdGk,shadow_10,text_aHR0cHM6Ly9ibG9nLmNzZG4ubmV0L3dlaXhpbl80MzUzMjAwMA==,size_16,color_FFFFFF,t_70#pic_center)

train_df.describe()

![Output of train_df.describe()](https://img-blog.csdnimg.cn/20200818202306147.png?x-oss-process=image/watermark,type_ZmFuZ3poZW5naGVpdGk,shadow_10,text_aHR0cHM6Ly9ibG9nLmNzZG4ubmV0L3dlaXhpbl80MzUzMjAwMA==,size_16,color_FFFFFF,t_70#pic_center)

test_df.info()

![Output of test_df.info()](https://img-blog.csdnimg.cn/20200818202306251.png?x-oss-process=image/watermark,type_ZmFuZ3poZW5naGVpdGk,shadow_10,text_aHR0cHM6Ly9ibG9nLmNzZG4ubmV0L3dlaXhpbl80MzUzMjAwMA==,size_16,color_FFFFFF,t_70#pic_center)

test_df.describe()

![Output of test_df.describe()](https://img-blog.csdnimg.cn/20200818202306337.png?x-oss-process=image/watermark,type_ZmFuZ3poZW5naGVpdGk,shadow_10,text_aHR0cHM6Ly9ibG9nLmNzZG4ubmV0L3dlaXhpbl80MzUzMjAwMA==,size_16,color_FFFFFF,t_70#pic_center)

Exploratory data analysis (EDA)

### You can wrap the overview in a helper function to suit your own needs
def _data_info(data, categorical_features):
    print('number of train examples = {}'.format(data.shape[0]))
    print('number of train Shape = {}'.format(data.shape))
    print('Features={}'.format(data.columns))
    print('\n--------Type of output category feature--------')
    for i in categorical_features:
        if i in list(data.columns):
            print("train: " + i + ":", list(data[i].unique()))
    print('\n--------Missing value--------')
    missing = data.isnull().sum()
    missing = missing[missing > 0]
    print(missing)
    missing.sort_values(inplace=True)
    if not missing.empty:  # nothing to plot when no column has missing values
        missing.plot.bar()
        plt.show()

def data_info(data_train, data_test, categorical_features):
    print('--------Basic overview of training set--------')
    _data_info(data_train, categorical_features)
    print('\n\n--------Basic overview of test set--------')
    _data_info(data_test, categorical_features)

Data overview, category characteristics and missing values

- Training set: 891 samples, 11 + 1 features (one is the label)

- Test set: 418 samples, 11 features (no label)

Categorical features:

1. Survived (label): values {0, 1}, meaning did not survive and survived

2. Pclass: values {1, 2, 3}, the ticket class

3. Sex: values {male, female}, the passenger's gender

4. Cabin: cabin number

5. Embarked: values {S, C, Q}, the port of embarkation

data_info(train_df,test_df,['Survived','Pclass','Sex','Cabin','Embarked','SibSp','Parch'])

![Output of data_info: training set overview](https://img-blog.csdnimg.cn/20200818202818845.png?x-oss-process=image/watermark,type_ZmFuZ3poZW5naGVpdGk,shadow_10,text_aHR0cHM6Ly9ibG9nLmNzZG4ubmV0L3dlaXhpbl80MzUzMjAwMA==,size_16,color_FFFFFF,t_70#pic_center)

![Output of data_info](https://img-blog.csdnimg.cn/20200818202830237.png?x-oss-process=image/watermark,type_ZmFuZ3poZW5naGVpdGk,shadow_10,text_aHR0cHM6Ly9ibG9nLmNzZG4ubmV0L3dlaXhpbl80MzUzMjAwMA==,size_16,color_FFFFFF,t_70#pic_center)

![Output of data_info](https://img-blog.csdnimg.cn/20200818202843572.png?x-oss-process=image/watermark,type_ZmFuZ3poZW5naGVpdGk,shadow_10,text_aHR0cHM6Ly9ibG9nLmNzZG4ubmV0L3dlaXhpbl80MzUzMjAwMA==,size_16,color_FFFFFF,t_70#pic_center)

![Output of data_info](https://img-blog.csdnimg.cn/20200818202843569.png?x-oss-process=image/watermark,type_ZmFuZ3poZW5naGVpdGk,shadow_10,text_aHR0cHM6Ly9ibG9nLmNzZG4ubmV0L3dlaXhpbl80MzUzMjAwMA==,size_16,color_FFFFFF,t_70#pic_center)

Missing values

Once the missing values are understood, they can be filled in with simple strategies

1. Missing values in the training set (891 samples):

   - Age: 177

   - Cabin: 687

   - Embarked: 2

2. Missing values in the test set (418 samples):

   - Age: 86

   - Fare: 1

   - Cabin: 327
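
As a quick worked calculation, the counts listed above can be turned into missing rates over the combined 891 + 418 samples. This is only arithmetic on the numbers reported in this post; the zeros for columns not listed in one of the sets are implied rather than stated.

```python
# Missing counts as reported above: (training set, test set)
missing_counts = {
    "Age": (177, 86),
    "Cabin": (687, 327),
    "Embarked": (2, 0),
    "Fare": (0, 1),
}
n_train, n_test = 891, 418
n_total = n_train + n_test

# Fraction of missing values over the combined data
missing_rate = {
    name: (in_train + in_test) / n_total
    for name, (in_train, in_test) in missing_counts.items()
}

# Print from most to least affected feature
for name, rate in sorted(missing_rate.items(), key=lambda item: item[1], reverse=True):
    print("{:<9s}{:.2%}".format(name, rate))
```

Cabin is missing for roughly three quarters of the passengers and Age for about a fifth, while Embarked and Fare are almost complete, which motivates treating each feature differently.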

# Combine the training and test sets so they are processed together; a 'train' flag column distinguishes the two

train_df['train'] = 1

test_df['train'] = 0

data_df = pd.concat([train_df,test_df],sort=True).reset_index(drop=True)

## Delete PassengerId feature

data_df.drop('PassengerId',inplace=True,axis=1)

## First encode the non-numeric categorical features as integers

from sklearn import preprocessing

ler_sex = preprocessing.LabelEncoder()

ler_sex.fit(data_df['Sex'])

data_df['Sex'] = ler_sex.transform(data_df['Sex'])

Embarked

Only a few values are missing, so fill them with the mode (the most frequent value)

data_df['Embarked'] = data_df['Embarked'].fillna(data_df['Embarked'].mode()[0])

## After filling Embarked, encode it as integers like the other non-numeric categorical features

ler_Embarked = preprocessing.LabelEncoder()

ler_Embarked.fit(data_df['Embarked'])

data_df['Embarked'] = ler_Embarked.transform(data_df['Embarked'])
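
It helps to know which integer each category receives, because later plots refer to the encoded values. `LabelEncoder` assigns codes in sorted order of the class labels. The sketch below reproduces that ordering with `pd.factorize(sort=True)` instead of calling scikit-learn, on a made-up column (hypothetical values), and includes the mode fill from the step above.

```python
import pandas as pd

# Made-up values standing in for the Embarked column (hypothetical data)
embarked = pd.Series(["S", "C", None, "Q", "S", "S"])

# Fill the gap with the most frequent value
filled = embarked.fillna(embarked.mode()[0])

# Sorted integer codes: the same ordering LabelEncoder uses for its classes
codes, classes = pd.factorize(filled, sort=True)
mapping = {label: code for code, label in enumerate(classes)}

print(mapping)
```

Under this ordering C maps to 0, Q to 1 and S to 2; by the same rule female maps to 0 and male to 1 for Sex.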

Age

$$\frac{177 + 86}{891 + 418} \approx 20\%$$

The missing rate is about 20%. With this many gaps, filling every missing value with a single statistic computed over the whole data set may not work well

Instead, try filling Age using statistics grouped by another feature. The correlation analysis shows that Age is most strongly correlated with Pclass

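# numeric_only=True skips text columns such as Name and Ticket (required in pandas 2.x)
data_df.corr(numeric_only=True)['Age'].abs().sort_values(ascending=False)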

Age 1.000000

Pclass 0.408106

SibSp 0.243699

Fare 0.178740

Parch 0.150917

Embarked 0.080195

Survived 0.077221

Sex 0.063645

train 0.018528
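
The ranking above uses the absolute value of the correlation so that strong negative relationships rank as high as strong positive ones. A minimal sketch of the same idea on made-up numbers (hypothetical data, including a deliberately uncorrelated `Noise` column) is shown below; it selects the numeric columns explicitly so that the text column does not interfere.

```python
import pandas as pd

# Made-up data: Pclass falls as Age rises, Noise is unrelated to Age
toy = pd.DataFrame({
    "Age": [10, 20, 30, 40, 50],
    "Pclass": [3, 3, 2, 1, 1],
    "Noise": [1, -1, 1, -1, 1],
    "Name": ["a", "b", "c", "d", "e"],  # text column, excluded below
})

# Keep numeric columns only, then rank features by |correlation with Age|
numeric = toy.select_dtypes(include="number")
ranking = numeric.corr()["Age"].abs().sort_values(ascending=False)

print(ranking)
```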

Age distribution

y = data_df['Age']

plt.figure(1)
plt.title('Distribution of Age')
# sns.distplot is deprecated since seaborn 0.11; sns.histplot(y, kde=True) is its replacement
sns.distplot(y, kde=True)

## The age distributions of the two sexes are nearly the same
plt.figure(2)
Age_Sex0 = data_df.loc[data_df['Sex']==0, 'Age']
Age_Sex1 = data_df.loc[data_df['Sex']==1, 'Age']
plt.title('Distribution of Age in Sex')
sns.distplot(Age_Sex0, kde=True)
sns.distplot(Age_Sex1, kde=True)
# Add the legend after plotting so that there are curves for it to label
plt.legend(['Sex0', 'Sex1'])

![Distribution of Age, overall and by Sex](https://img-blog.csdnimg.cn/20200818203517835.png?x-oss-process=image/watermark,type_ZmFuZ3poZW5naGVpdGk,shadow_10,text_aHR0cHM6Ly9ibG9nLmNzZG4ubmV0L3dlaXhpbl80MzUzMjAwMA==,size_16,color_FFFFFF,t_70#pic_center)

There are some differences in the age distribution in different pclasses

Age_p1 = data_df.loc[data_df['Pclass']==1,'Age']

Age_p2 = data_df.loc[data_df['Pclass']==2,'Age']

Age_p3 = data_df.loc[data_df['Pclass']==3,'Age']

sns.distplot(Age_p1,kde=True,color='b')

sns.distplot(Age_p2,kde=True,color='green')

sns.distplot(Age_p3,kde=True,color='grey')

plt.title('Distribution of Age in Pclass')

plt.legend(['p1','p2','p3'])

![Distribution of Age in Pclass](https://img-blog.csdnimg.cn/20200818203618723.png?x-oss-process=image/watermark,type_ZmFuZ3poZW5naGVpdGk,shadow_10,text_aHR0cHM6Ly9ibG9nLmNzZG4ubmV0L3dlaXhpbl80MzUzMjAwMA==,size_16,color_FFFFFF,t_70#pic_center)

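# Select the Age column before aggregating so that text columns are not involved
Age_Pclass = data_df.groupby('Pclass')['Age'].median()

for pclass in range(1, 4):
    print('Median age of Pclass {}: {}'.format(pclass, Age_Pclass[pclass]))

print('Median age of all passengers: {}'.format(data_df['Age'].median()))

# Fill missing Age values with the median age of the passenger's Pclass
# (transform keeps the original row index, so the result aligns with data_df)
data_df['Age'] = data_df.groupby('Pclass')['Age'].transform(lambda x: x.fillna(x.median()))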
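
The group-wise fill can be checked by hand on a tiny example. The sketch below uses made-up ages (hypothetical data) with one gap per class and fills each gap with the median of its own class via `groupby(...).transform(...)`, which returns a result aligned with the original rows.

```python
import numpy as np
import pandas as pd

# Made-up data: one missing age in class 1 and one in class 3
toy = pd.DataFrame({
    "Pclass": [1, 1, 1, 3, 3, 3],
    "Age": [40.0, np.nan, 50.0, 20.0, np.nan, 30.0],
})

# Median age per class, ignoring the missing values
group_median = toy.groupby("Pclass")["Age"].median()

# Fill each gap with the median of the row's own class
toy["Age_filled"] = toy.groupby("Pclass")["Age"].transform(lambda s: s.fillna(s.median()))

print(group_median)
print(toy)
```

The class 1 gap becomes 45 and the class 3 gap becomes 25, whereas a single global median would have put the same value in both places.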

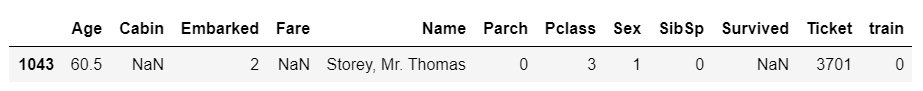

Fare

Fare has only one missing sample, so it could be filled directly with a statistic of the whole data set

However, SibSp and Parch both equal to 0 means the passenger bought a single ticket, and Pclass gives the ticket class, so a better estimate comes from aggregating Fare over these attributes

# View the sample with the missing Fare

data_df[data_df['Fare'].isnull()]

## Pclass has a strong relationship with the price

data_df.corr(numeric_only=True)['Fare'].abs().sort_values(ascending=False)

Fare 1.000000

Pclass 0.558629

Survived 0.257307

Embarked 0.238005

Parch 0.221539

Age 0.202512

Sex 0.185523

SibSp 0.160238

train 0.030831

## Aggregate Fare over the related attributes
## The key (3, 0, 0, 0) means Pclass=3, Parch=0, SibSp=0 and encoded Embarked=0;
## check the key against the row with the missing Fare shown above
fare_groups = data_df.groupby(['Pclass', 'Parch', 'SibSp', 'Embarked'])['Fare']
key = (3, 0, 0, 0)

print(fare_groups.max().loc[key])     # 18.7875
print(fare_groups.min().loc[key])     # 4.0125
print(fare_groups.median().loc[key])  # 7.2292
print(fare_groups.mean().loc[key])    # 7.923984210526318

## Fill with the median
data_df['Fare'] = data_df['Fare'].fillna(fare_groups.median().loc[key])
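
The lookup of one group's statistic can be illustrated on a small made-up frame (hypothetical fares, and only three grouping columns to keep it short). The sketch shows both the tuple-key lookup on the grouped result and a variant, not used in this post, that fills every gap from its own row's group.

```python
import numpy as np
import pandas as pd

# Made-up data: one missing fare for a third-class passenger travelling alone
toy = pd.DataFrame({
    "Pclass": [3, 3, 3, 1, 1],
    "Parch":  [0, 0, 0, 0, 0],
    "SibSp":  [0, 0, 0, 0, 1],
    "Fare":   [7.0, 8.0, np.nan, 80.0, 120.0],
})

group_cols = ["Pclass", "Parch", "SibSp"]
median_by_group = toy.groupby(group_cols)["Fare"].median()

# Look up the statistic of one specific group with a tuple key
fare_3_0_0 = median_by_group.loc[(3, 0, 0)]

# Variant: fill each gap with the median of the row's own group
toy["Fare_filled"] = toy["Fare"].fillna(toy.groupby(group_cols)["Fare"].transform("median"))

print(fare_3_0_0)
print(toy)
```

The variant avoids hard-coding the key, at the cost of leaving a gap unfilled if a group has no observed fare at all.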

Cabin

Cabin has a large number of missing values. Without a good way to fill them in, it is simplest to drop the feature.

data_df.drop('Cabin',inplace=True,axis=1)

Missing data fill complete

Continue to analyze the data

# Recover the training set from data_df (copy so that later edits do not touch data_df)
train_data = data_df[data_df.train==1].copy()
train_data['Survived'] = train_df['Survived']
train_data.drop('train', axis=1, inplace=True)

# Recover the test set from data_df
test_data = data_df[data_df.train==0].copy()
test_data.drop(['Survived', 'train'], axis=1, inplace=True)
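
The combine, process, split pattern used throughout this section can be seen end to end on a tiny example. The frames below are made up (hypothetical values); the sketch also shows why the label column is reassigned after the split: the test rows have no label, so the combined column becomes floating point.

```python
import pandas as pd

# Made-up training and test frames; only the training frame has a label
train = pd.DataFrame({"Age": [22.0, 38.0, 26.0], "Survived": [0, 1, 1]})
test = pd.DataFrame({"Age": [34.0, 47.0]})

# Flag the origin of each row, then stack the two frames
train["train"] = 1
test["train"] = 0
combined = pd.concat([train, test], sort=True).reset_index(drop=True)

# Survived is NaN for the test rows, so the combined column is float
survived_dtype = str(combined["Survived"].dtype)

# Split back; copy so that edits do not affect the combined frame
train_part = combined[combined["train"] == 1].copy()
train_part["Survived"] = train["Survived"]  # restore integer labels (row indexes 0..2 align)
train_part = train_part.drop(columns="train")

test_part = combined[combined["train"] == 0].drop(columns=["Survived", "train"])

print(survived_dtype, train_part.shape, test_part.shape)
```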

Feature correlation analysis

Training set

train_data.corr(numeric_only=True)

![Correlation matrix of the training set](https://img-blog.csdnimg.cn/20200818204410386.png?x-oss-process=image/watermark,type_ZmFuZ3poZW5naGVpdGk,shadow_10,text_aHR0cHM6Ly9ibG9nLmNzZG4ubmV0L3dlaXhpbl80MzUzMjAwMA==,size_16,color_FFFFFF,t_70#pic_center)

### Survival is negatively correlated with Sex (male is encoded as 1, so men survived less often)

### Survival is negatively correlated with Pclass

### Survival is positively correlated with Fare

train_data.corr(numeric_only=True)['Survived'].sort_values(ascending=False)

Survived 1.000000

Fare 0.257307

Parch 0.081629

SibSp -0.035322

Age -0.046230

Embarked -0.167675

Pclass -0.338481

Sex -0.543351
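
The sign of the Sex correlation depends only on which category was encoded as 1. The sketch below demonstrates this on made-up labels (hypothetical data, not the Titanic records): flipping the encoding flips the sign and leaves the magnitude unchanged.

```python
import pandas as pd

# Made-up data in which women survive more often than men
toy = pd.DataFrame({
    "Sex": ["male", "male", "male", "female", "female", "female"],
    "Survived": [0, 0, 1, 1, 1, 0],
})

# Two encodings of the same column
male_as_1 = toy["Sex"].map({"female": 0, "male": 1})
female_as_1 = toy["Sex"].map({"female": 1, "male": 0})

corr_male_as_1 = male_as_1.corr(toy["Survived"])
corr_female_as_1 = female_as_1.corr(toy["Survived"])

print(corr_male_as_1, corr_female_as_1)
```

For a binary feature, the negative coefficient therefore says nothing more than "the category encoded as 1 survived less often".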

Feature correlation heat map

plt.figure(figsize=(10, 10))

plt.title('Train Set Correlation HeatMap ', y=1, size=16)

sns.heatmap(train_data.corr(numeric_only=True), square=True, vmax=0.7, annot=True, cmap='Accent')

![Train set correlation heat map](https://img-blog.csdnimg.cn/20200818204623929.png?x-oss-process=image/watermark,type_ZmFuZ3poZW5naGVpdGk,shadow_10,text_aHR0cHM6Ly9ibG9nLmNzZG4ubmV0L3dlaXhpbl80MzUzMjAwMA==,size_16,color_FFFFFF,t_70#pic_center)

Survival

# sort_index() orders the counts as 0, 1 so that they match the bar labels
plt.bar(['Not Survived', 'Survived'], train_data['Survived'].value_counts().sort_index().values)

plt.title('Train_Set_Survived')

![Survival counts in the training set](https://img-blog.csdnimg.cn/20200818204701726.png?x-oss-process=image/watermark,type_ZmFuZ3poZW5naGVpdGk,shadow_10,text_aHR0cHM6Ly9ibG9nLmNzZG4ubmV0L3dlaXhpbl80MzUzMjAwMA==,size_16,color_FFFFFF,t_70#pic_center)

Test set

test_data.corr(numeric_only=True)

![Correlation matrix of the test set](https://img-blog.csdnimg.cn/20200818204925171.png?x-oss-process=image/watermark,type_ZmFuZ3poZW5naGVpdGk,shadow_10,text_aHR0cHM6Ly9ibG9nLmNzZG4ubmV0L3dlaXhpbl80MzUzMjAwMA==,size_16,color_FFFFFF,t_70#pic_center)

plt.figure(figsize=(10, 10))

plt.title('Test Set Correlation HeatMap ', y=1, size=16)

sns.heatmap(test_data.corr(numeric_only=True), square=True, vmax=0.7, annot=True, cmap='Accent')

![Test set correlation heat map](https://img-blog.csdnimg.cn/20200818205004615.png?x-oss-process=image/watermark,type_ZmFuZ3poZW5naGVpdGk,shadow_10,text_aHR0cHM6Ly9ibG9nLmNzZG4ubmV0L3dlaXhpbl80MzUzMjAwMA==,size_16,color_FFFFFF,t_70#pic_center)

Continuous data distribution

The survival distributions of Age and Fare show that:

- Age: younger passengers appear to have a higher survival rate, and the rate differs between age groups, so binning Age is worth considering later

- Fare: the survival rate is higher for higher fares

The distributions of Age and Fare are consistent between the training set and the test set
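
Binning a continuous feature such as Age can be sketched with `pd.cut`. The data, the bin edges and the bin labels below are all illustrative assumptions, not the values used in the later feature engineering part.

```python
import pandas as pd

# Made-up ages and outcomes (hypothetical data)
toy = pd.DataFrame({
    "Age": [5, 10, 25, 30, 60, 70],
    "Survived": [1, 1, 0, 1, 0, 0],
})

# Bin edges and labels are assumptions chosen for illustration;
# intervals are right-inclusive: (0, 18], (18, 50], (50, 100]
toy["AgeBin"] = pd.cut(toy["Age"], bins=[0, 18, 50, 100], labels=["child", "adult", "senior"])

# Survival rate inside each bin
rate_by_bin = toy.groupby("AgeBin", observed=True)["Survived"].mean()

print(rate_by_bin)
```

Equal-frequency bins via `pd.qcut` are an alternative when the values are strongly skewed, as Fare is.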

continue_features = ['Age', 'Fare']
survived = train_data['Survived'] == 1

fig, axs = plt.subplots(ncols=2, nrows=2, figsize=(20, 20))
plt.subplots_adjust(right=1.5)

for i, feature in enumerate(continue_features):
    # Top row: distribution of the feature for non-survivors and survivors
    sns.distplot(train_data[~survived][feature], label='Not Survived', hist=True, color='#e74c3c', ax=axs[0][i])
    sns.distplot(train_data[survived][feature], label='Survived', hist=True, color='#2ecc71', ax=axs[0][i])

    # Bottom row: distribution of the feature in the training set and the test set
    sns.distplot(train_data[feature], label='Training Set', hist=False, color='#e74c3c', ax=axs[1][i])
    sns.distplot(test_data[feature], label='Test Set', hist=False, color='#2ecc71', ax=axs[1][i])

    axs[0][i].set_xlabel('')
    axs[1][i].set_xlabel('')

    for j in range(2):
        axs[i][j].tick_params(axis='x', labelsize=20)
        axs[i][j].tick_params(axis='y', labelsize=20)

    axs[0][i].legend(loc='upper right', prop={'size': 20})
    axs[1][i].legend(loc='upper right', prop={'size': 20})
    axs[0][i].set_title('Distribution of Survival in {}'.format(feature), size=20, y=1.05)

axs[1][0].set_title('Distribution of {} Feature'.format('Age'), size=20, y=1.05)
axs[1][1].set_title('Distribution of {} Feature'.format('Fare'), size=20, y=1.05)

plt.show()

![Distributions of Age and Fare by survival and by data set](https://img-blog.csdnimg.cn/20200818205408975.png?x-oss-process=image/watermark,type_ZmFuZ3poZW5naGVpdGk,shadow_10,text_aHR0cHM6Ly9ibG9nLmNzZG4ubmV0L3dlaXhpbl80MzUzMjAwMA==,size_16,color_FFFFFF,t_70#pic_center)

Distribution of categorical features

- Embarked: the survival rate is high when the value is 0 (C, Cherbourg, after encoding)

- Sex: the survival rate is high when the value is 0 (female after encoding)

- Pclass: the survival rate decreases from class 1 to class 3

- SibSp and Parch show roughly the same survival pattern, so they can be combined into a single Family feature
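
One possible way to combine SibSp and Parch is sketched below on made-up rows (hypothetical data). The `+ 1`, which counts the passenger themselves so that Family is the size of the travelling group, is an assumption; the actual definition belongs to the feature engineering part.

```python
import pandas as pd

# Made-up rows (hypothetical data)
toy = pd.DataFrame({
    "SibSp": [1, 0, 0, 3, 1],
    "Parch": [0, 0, 2, 1, 1],
    "Survived": [1, 0, 1, 0, 1],
})

# Family size: relatives aboard plus the passenger (the + 1 is an assumption)
toy["Family"] = toy["SibSp"] + toy["Parch"] + 1

# Survival rate for each family size
rate_by_family = toy.groupby("Family")["Survived"].mean()

print(toy)
print(rate_by_family)
```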

Categorical_features = ['Embarked', 'Parch', 'SibSp', 'Sex', 'Pclass']

# Count of survivors and non-survivors for each category
fig, axs = plt.subplots(ncols=2, nrows=3, figsize=(20, 20))
plt.subplots_adjust(right=1.5, top=1.25)

for i, feature in enumerate(Categorical_features, 1):
    plt.subplot(2, 3, i)
    sns.countplot(x=feature, hue='Survived', data=train_data)
    plt.tick_params(axis='x', labelsize=20)
    plt.tick_params(axis='y', labelsize=20)
    plt.xlabel('{}'.format(feature), size=20, labelpad=15)
    plt.ylabel('Passenger Count', size=20, labelpad=15)
    plt.legend(['Not Survived', 'Survived'], loc='upper center')
    plt.title('Count of Survival in {} Feature'.format(feature), size=20, y=1.05)

plt.show()

# Survival rate (mean of Survived) for each category
fig, axs = plt.subplots(ncols=2, nrows=3, figsize=(15, 15))
plt.subplots_adjust(right=1.5, top=1.25)

for i, feature in enumerate(Categorical_features, 1):
    plt.subplot(2, 3, i)
    # x must be passed by keyword in recent seaborn versions
    sns.pointplot(x=feature, y='Survived', data=train_data)
    plt.tick_params(axis='x', labelsize=20)
    plt.tick_params(axis='y', labelsize=20)
    plt.xlabel('{}'.format(feature), size=20, labelpad=15)
    plt.ylabel('Survival Rate', size=20, labelpad=15)
    plt.title('Rate of Survival in {} Feature'.format(feature), size=20, y=1.05)

plt.show()
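
The numbers behind the two kinds of plot can also be computed directly. The count plot corresponds to a cross-tabulation of category against outcome, and the point plot (whose default estimator is the mean) corresponds to the mean of Survived per category. A minimal sketch on made-up rows (hypothetical data):

```python
import pandas as pd

# Made-up rows (hypothetical data)
toy = pd.DataFrame({
    "Pclass": [1, 1, 2, 2, 3, 3, 3, 3],
    "Survived": [1, 1, 1, 0, 0, 0, 1, 0],
})

# What the count plot shows: passengers per (category, outcome) pair
counts = pd.crosstab(toy["Pclass"], toy["Survived"])

# What the point plot shows: mean of Survived per category, i.e. the survival rate
rates = toy.groupby("Pclass")["Survived"].mean()

print(counts)
print(rates)
```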

![Count and rate of survival for the categorical features](https://img-blog.csdnimg.cn/20200818205830444.png?x-oss-process=image/watermark,type_ZmFuZ3poZW5naGVpdGk,shadow_10,text_aHR0cHM6Ly9ibG9nLmNzZG4ubmV0L3dlaXhpbl80MzUzMjAwMA==,size_16,color_FFFFFF,t_70#pic_center)

![Count and rate of survival for the categorical features](https://img-blog.csdnimg.cn/20200818205830455.png?x-oss-process=image/watermark,type_ZmFuZ3poZW5naGVpdGk,shadow_10,text_aHR0cHM6Ly9ibG9nLmNzZG4ubmV0L3dlaXhpbl80MzUzMjAwMA==,size_16,color_FFFFFF,t_70#pic_center)

![Count and rate of survival for the categorical features](https://img-blog.csdnimg.cn/20200818205830525.png?x-oss-process=image/watermark,type_ZmFuZ3poZW5naGVpdGk,shadow_10,text_aHR0cHM6Ly9ibG9nLmNzZG4ubmV0L3dlaXhpbl80MzUzMjAwMA==,size_16,color_FFFFFF,t_70#pic_center)

![Count and rate of survival for the categorical features](https://img-blog.csdnimg.cn/20200818205830500.png?x-oss-process=image/watermark,type_ZmFuZ3poZW5naGVpdGk,shadow_10,text_aHR0cHM6Ly9ibG9nLmNzZG4ubmV0L3dlaXhpbl80MzUzMjAwMA==,size_16,color_FFFFFF,t_70#pic_center)

Save csv file

train_data.to_csv('./train.csv',index=False)

test_data.to_csv('./test.csv',index=False)
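
Passing `index=False` keeps the row index out of the file, so reading the file back yields the same columns without an extra unnamed index column. A small round-trip sketch on a made-up frame (hypothetical values), using an in-memory buffer in place of a file:

```python
import io
import pandas as pd

# Made-up frame (hypothetical data)
toy = pd.DataFrame({"Age": [22.0, 38.0], "Sex": [1, 0]})

# Write without the row index, into a buffer instead of a file on disk
buffer = io.StringIO()
toy.to_csv(buffer, index=False)
csv_text = buffer.getvalue()

# Read it back and compare with the original
restored = pd.read_csv(io.StringIO(csv_text))

print(csv_text)
print(restored.equals(toy))
```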