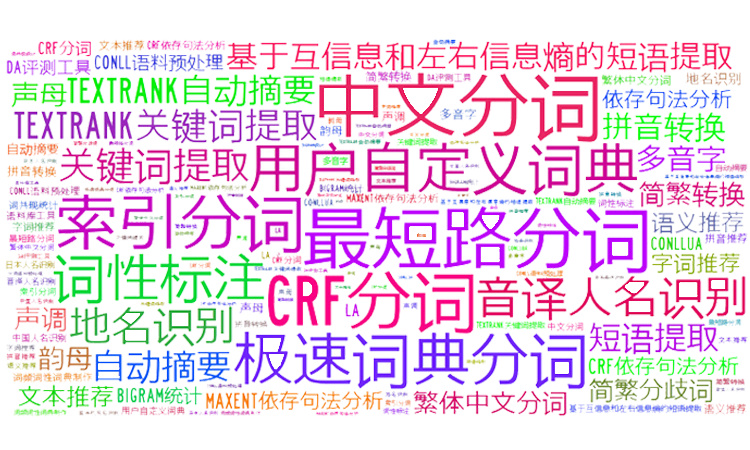

HanLP natural language processing

Introduction

HanLP is a Java toolkit composed of a series of models and algorithms. Its goal is to promote the use of natural language processing in production environments. HanLP is feature-complete, efficient, cleanly architected, trained on up-to-date corpora, and customizable.

Open source resources

Official website: https://www.hanlp.com/

Open source address:

Author's blog (码农场): http://www.hankcs.com/nlp/hanlp.html

GitHub: https://github.com/hankcs/HanLP/releases

Using HanLP in Java

Through Maven's pom.xml

Maven dependency: for convenience, HanLP provides a portable version with a built-in data package. You only need to add the following to pom.xml (the latest version at the time of writing is 1.8.2):

    <dependency>
        <groupId>com.hankcs</groupId>
        <artifactId>hanlp</artifactId>
        <version>portable-1.8.2</version>
    </dependency>

The portable version needs zero configuration and supports all the basic functions (except CRF word segmentation and dependency parsing). The price is that it ships with a small 1998 dictionary, which has limited support for modern Chinese, so it is not recommended for production environments. For production, use HanLP together with the data package.

Using HanLP with the data package

1. Download the data package (HanLP version 1.7.5)

https://file.hankcs.workers.dev/hanlp/data-for-1.7.5.zip

2. Configuration file: hanlp.properties (official version)

    #The root directory for the paths in this configuration file: root directory + other path = full path (relative paths are supported, see https://github.com/hankcs/HanLP/pull/254 )
    #Note to Windows users: always use / as the path separator
    root=E:/hanlp/data-for-1.7.5/

    #The line above is the only part that must be modified. The configuration items below can be uncommented and edited as needed.
    #Core dictionary path
    #CoreDictionaryPath=data/dictionary/CoreNatureDictionary.txt
    #Bigram dictionary path
    #BiGramDictionaryPath=data/dictionary/CoreNatureDictionary.ngram.txt
    #Custom dictionary path; separate multiple custom dictionaries with ;. A leading space means the file is in the same directory as the previous one. The form "file name part-of-speech" makes that part of speech the default for the dictionary. Priority decreases from left to right.
    #All dictionaries are UTF-8 encoded, one word per line, in the format [word] [part of speech A] [frequency of A] [part of speech B] [frequency of B] ... If the part of speech is omitted, the dictionary's default part of speech is used.
    CustomDictionaryPath=data/dictionary/custom/CustomDictionary.txt; 现代汉语补充词库.txt; 全国地名大全.txt ns; 人名词典.txt; 机构名词典.txt; 上海地名.txt ns;data/dictionary/person/nrf.txt nrf;
    #Stop word dictionary path
    #CoreStopWordDictionaryPath=data/dictionary/stopwords.txt
    #Synonym dictionary path
    #CoreSynonymDictionaryDictionaryPath=data/dictionary/synonym/CoreSynonym.txt
    #Person name dictionary path
    #PersonDictionaryPath=data/dictionary/person/nr.txt
    #Person name dictionary transition matrix path
    #PersonDictionaryTrPath=data/dictionary/person/nr.tr.txt
    #Traditional/Simplified Chinese dictionary root directory
    #tcDictionaryRoot=data/dictionary/tc
    #HMM word segmentation model
    #HMMSegmentModelPath=data/model/segment/HMMSegmentModel.bin
    #Whether segmentation results show the part of speech
    #ShowTermNature=true
    #IO adapter: implement the com.hankcs.hanlp.corpus.io.IIOAdapter interface to run HanLP on different platforms (Hadoop, Redis, etc.)
    #The default IO adapter is shown below; it is based on the ordinary file system.
    #IOAdapter=com.hankcs.hanlp.corpus.io.FileIOAdapter
    #Perceptron lexical analyzer
    #PerceptronCWSModelPath=data/model/perceptron/pku1998/cws.bin
    #PerceptronPOSModelPath=data/model/perceptron/pku1998/pos.bin
    #PerceptronNERModelPath=data/model/perceptron/pku1998/ner.bin
    #CRF lexical analyzer
    #CRFCWSModelPath=data/model/crf/pku199801/cws.txt
    #CRFPOSModelPath=data/model/crf/pku199801/pos.txt
    #CRFNERModelPath=data/model/crf/pku199801/ner.txt
    #For more configuration items, refer to https://github.com/hankcs/HanLP/blob/master/src/main/java/com/hankcs/hanlp/HanLP.java#L59 and add them yourself
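The CustomDictionaryPath rules described in the comments (items separated by `;`, a leading space meaning "same directory as the previous file", and an optional default part of speech after the file name) are easier to follow with a small example. The following is only a sketch of those rules, not HanLP's own loader: the class name `CustomDictionaryPathSketch`, the `Entry` type, and the sample file names are hypothetical, and it assumes file names contain no spaces.

```java
import java.util.ArrayList;
import java.util.List;

class CustomDictionaryPathSketch {
    /** One resolved dictionary: full path plus optional default part of speech. */
    static final class Entry {
        final String path;
        final String defaultNature; // null when the item names no part of speech

        Entry(String path, String defaultNature) {
            this.path = path;
            this.defaultNature = defaultNature;
        }

        @Override
        public String toString() {
            return defaultNature == null ? path : path + " [" + defaultNature + "]";
        }
    }

    /** Resolves a CustomDictionaryPath-style value against the root directory. */
    static List<Entry> parse(String root, String value) {
        List<Entry> entries = new ArrayList<>();
        String previousDir = root;
        for (String item : value.split(";")) {
            if (item.trim().isEmpty()) {
                continue; // tolerate empty items such as a doubled separator
            }
            boolean sameDirectory = item.startsWith(" ");
            String[] parts = item.trim().split("\\s+");
            String nature = parts.length > 1 ? parts[1] : null;
            String path;
            if (sameDirectory) {
                // Leading space: reuse the directory of the previous full path.
                path = previousDir + parts[0];
            } else {
                // Otherwise the path is relative to root.
                path = root + parts[0];
                int slash = path.lastIndexOf('/');
                if (slash != -1) {
                    previousDir = path.substring(0, slash + 1);
                }
            }
            entries.add(new Entry(path, nature));
        }
        return entries;
    }

    public static void main(String[] args) {
        // Hypothetical file names, used only to exercise the three rules.
        String value = "data/dictionary/custom/CustomDictionary.txt; places.txt ns;data/dictionary/person/nrf.txt nrf;";
        for (Entry entry : parse("E:/hanlp/data-for-1.7.5/", value)) {
            System.out.println(entry);
        }
    }
}
```

Earlier items in the list have higher priority, which is why the sketch keeps them in the order they were written.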

Put hanlp.properties on the classpath, for example in src/main/resources of a Maven project, or next to the jar package if that directory is on the classpath.
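If HanLP cannot find its data, two things are worth checking: whether hanlp.properties is really visible on the classpath, and what full path the root setting produces. The sketch below uses only the JDK (`java.util.Properties`) and is not HanLP code; the class name `ClasspathConfigSketch` and its helper methods are hypothetical, and the "root + relative path" rule is taken from the comment in the configuration file above.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

class ClasspathConfigSketch {
    /** Parses properties text; '#' lines are treated as comments by Properties. */
    static Properties loadFromString(String text) {
        Properties properties = new Properties();
        try {
            properties.load(new StringReader(text));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return properties;
    }

    /** Applies the rule "root directory + other path = full path". */
    static String fullPath(Properties properties, String key, String defaultRelativePath) {
        String root = properties.getProperty("root", "");
        if (!root.isEmpty() && !root.endsWith("/")) {
            root += "/";
        }
        return root + properties.getProperty(key, defaultRelativePath);
    }

    public static void main(String[] args) {
        // Check whether the real file is visible to the class loader.
        boolean found = ClasspathConfigSketch.class.getClassLoader().getResource("hanlp.properties") != null;
        System.out.println(found ? "hanlp.properties found on the classpath"
                                 : "hanlp.properties is NOT on the classpath");

        // Forward slashes matter: the properties format treats '\' as an escape character.
        Properties properties = loadFromString("root=E:/hanlp/data-for-1.7.5\n");
        System.out.println(fullPath(properties, "CoreDictionaryPath",
                "data/dictionary/CoreNatureDictionary.txt"));
    }
}
```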

3. Java usage

(1) First Demo

System.out.println(HanLP.segment("Hello, welcome HanLP Chinese processing package!"));

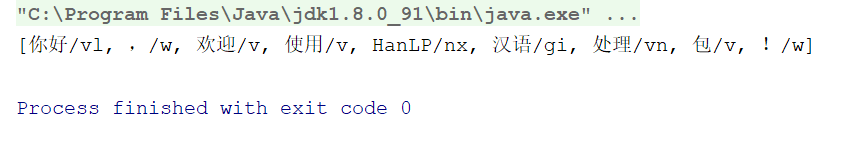

result:

(2) Standard word segmentation, Demo

List<Term> termList = StandardTokenizer.segment("Goods and services");

System.out.println(termList);

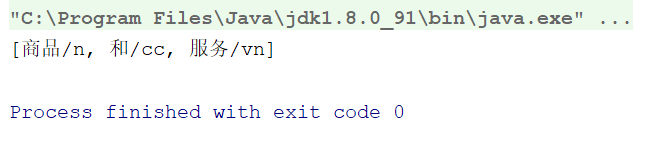

result:

Note: HanLP provides a series of "out of the box" static tokenizers whose names end with Tokenizer. More of them are introduced in the following examples.

HanLP.segment is actually a wrapper around StandardTokenizer.segment.

The segmentation result includes parts of speech. For the meaning of each tag, refer to the HanLP part-of-speech tag set.

(3) NLP word segmentation, Demo

List<Term> termList = NLPTokenizer.segment("Professor Zong Chengqing of the Institute of computing technology of the Chinese Academy of Sciences is teaching natural language processing");

System.out.println(termList);

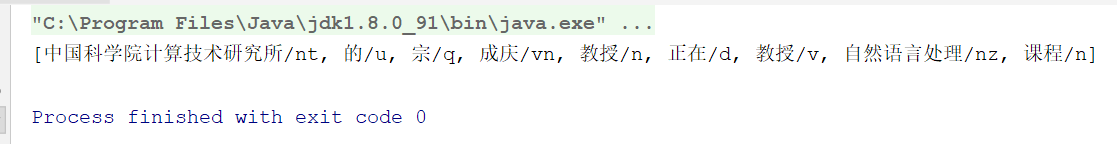

result:

Note: NLP word segmentation (NLPTokenizer) performs full named entity recognition and part-of-speech tagging.


(4) Index word segmentation, Demo

List<Term> termList = IndexTokenizer.segment("Main and non-staple food");

for (Term term : termList)

{

System.out.println(term + " [" + term.offset + ":" + (term.offset + term.word.length()) + "]");

}

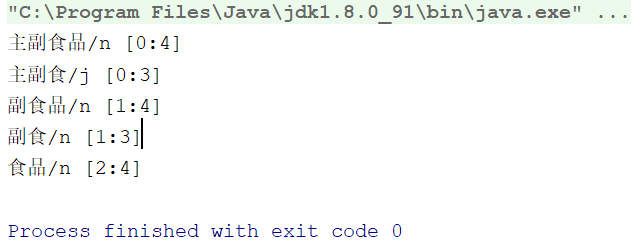

result:

Note: index word segmentation (IndexTokenizer) is a tokenizer for search engines that fully segments long words. In addition, term.offset gives the offset of each word in the text.
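To show what "fully segmenting long words" with offsets means, here is a toy sketch that does not use HanLP at all. It takes tokens that are already coarsely segmented, and for each one also emits every shorter dictionary word found inside it, each with a [begin:end] offset. The class name `IndexSegmentSketch`, the dictionary, and the minimum sub-word length are assumptions for the example, and an ASCII compound stands in for a long Chinese word.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

class IndexSegmentSketch {
    /** Emits each coarse token plus every shorter dictionary word inside it, with offsets. */
    static List<String> indexSegment(List<String> coarseTokens, Set<String> dictionary, int minLength) {
        List<String> out = new ArrayList<>();
        int offset = 0; // offset of the current token in the original text
        for (String token : coarseTokens) {
            out.add(format(token, offset));
            for (int begin = 0; begin < token.length(); begin++) {
                for (int end = begin + minLength; end <= token.length(); end++) {
                    String sub = token.substring(begin, end);
                    // Skip the token itself; it has already been emitted.
                    if (sub.length() < token.length() && dictionary.contains(sub)) {
                        out.add(format(sub, offset + begin));
                    }
                }
            }
            offset += token.length();
        }
        return out;
    }

    static String format(String word, int offset) {
        return word + " [" + offset + ":" + (offset + word.length()) + "]";
    }

    public static void main(String[] args) {
        Set<String> dictionary = new HashSet<>(Arrays.asList("sun", "flower", "oil"));
        for (String line : indexSegment(Arrays.asList("sunflower", "oil"), dictionary, 2)) {
            System.out.println(line);
        }
    }
}
```

A search engine can index all of the emitted words, so a query for a short word still matches text that contains the long compound.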

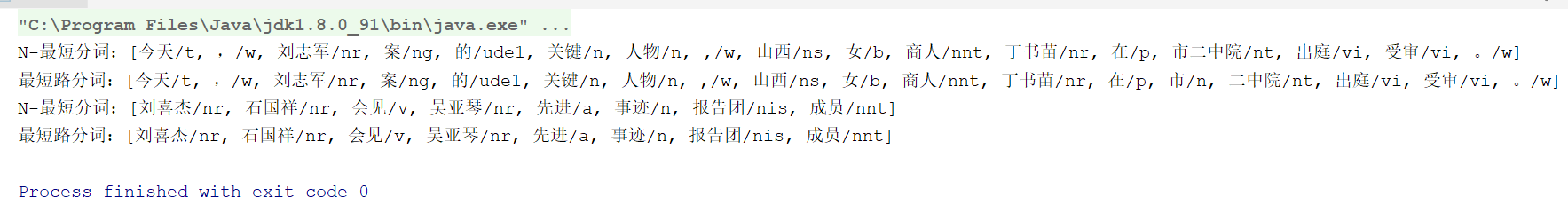

(5) N-shortest path word segmentation, Demo

Segment nShortSegment = new NShortSegment().enableCustomDictionary(false).enablePlaceRecognize(true).enableOrganizationRecognize(true);

Segment shortestSegment = new DijkstraSegment().enableCustomDictionary(false).enablePlaceRecognize(true).enableOrganizationRecognize(true);

String[] testCase = new String[]{

"Today, the key figure in the Liu Zhijun Case,Ding Shumiao, a businesswoman from Shanxi, appeared in court at the second municipal people's court.",

"Liu Xijie and Shi Guoxiang meet with members of Wu Yaqin's advanced deeds report team",

};

for (String sentence : testCase)

{

System.out.println("N-shortest path segmentation: " + nShortSegment.seg(sentence) + "\nShortest path segmentation: " + shortestSegment.seg(sentence));

}

result:

Note: N-shortest path segmentation (NShortSegment) is slower than shortest path segmentation, but the results are slightly better and it recognizes named entities more reliably.

In general, shortest path segmentation is accurate enough and several times faster than N-shortest path segmentation. Choose according to your needs.
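The idea behind shortest path segmentation can be sketched without HanLP: treat every position between characters as a node, every dictionary word as an edge, and pick the cheapest path from the start of the text to the end. The code below is a simplified illustration, not HanLP's DijkstraSegment. It uses dynamic programming over the word graph instead of Dijkstra's algorithm (the graph is acyclic, so both find the same cheapest path), and the class name, cost formula, and frequency table are assumptions for the example. ASCII letters stand in for Chinese characters.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class ShortestPathSegmentSketch {
    /** Returns the cheapest segmentation of text under a toy frequency-based cost. */
    static List<String> segment(String text, Map<String, Integer> frequency) {
        int n = text.length();
        double total = 0;
        for (int f : frequency.values()) {
            total += f;
        }
        // Unknown single characters cost more than any dictionary word.
        double unknownCost = Math.log(total + 1) + 1;

        double[] best = new double[n + 1]; // best[i]: cheapest cost to reach position i
        int[] previous = new int[n + 1];   // previous[i]: start of the last word on that path
        Arrays.fill(best, Double.POSITIVE_INFINITY);
        best[0] = 0;

        for (int begin = 0; begin < n; begin++) {
            for (int end = begin + 1; end <= n; end++) {
                Integer f = frequency.get(text.substring(begin, end));
                double cost;
                if (f != null) {
                    cost = Math.log(total) - Math.log(f); // frequent words are cheap
                } else if (end == begin + 1) {
                    cost = unknownCost; // fallback edge keeps every position reachable
                } else {
                    continue; // not a word, no edge
                }
                if (best[begin] + cost < best[end]) {
                    best[end] = best[begin] + cost;
                    previous[end] = begin;
                }
            }
        }

        // Walk back from the end to recover the words on the cheapest path.
        List<String> words = new ArrayList<>();
        for (int end = n; end > 0; end = previous[end]) {
            words.add(text.substring(previous[end], end));
        }
        Collections.reverse(words);
        return words;
    }

    public static void main(String[] args) {
        Map<String, Integer> frequency = new HashMap<>();
        frequency.put("the", 50);
        frequency.put("table", 20);
        frequency.put("tab", 5);
        frequency.put("he", 10);
        frequency.put("le", 3);
        System.out.println(segment("thetable", frequency));
    }
}
```

An N-shortest path variant would keep the N cheapest paths at each position instead of one, which costs more time but leaves more candidates for later steps such as named entity recognition.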

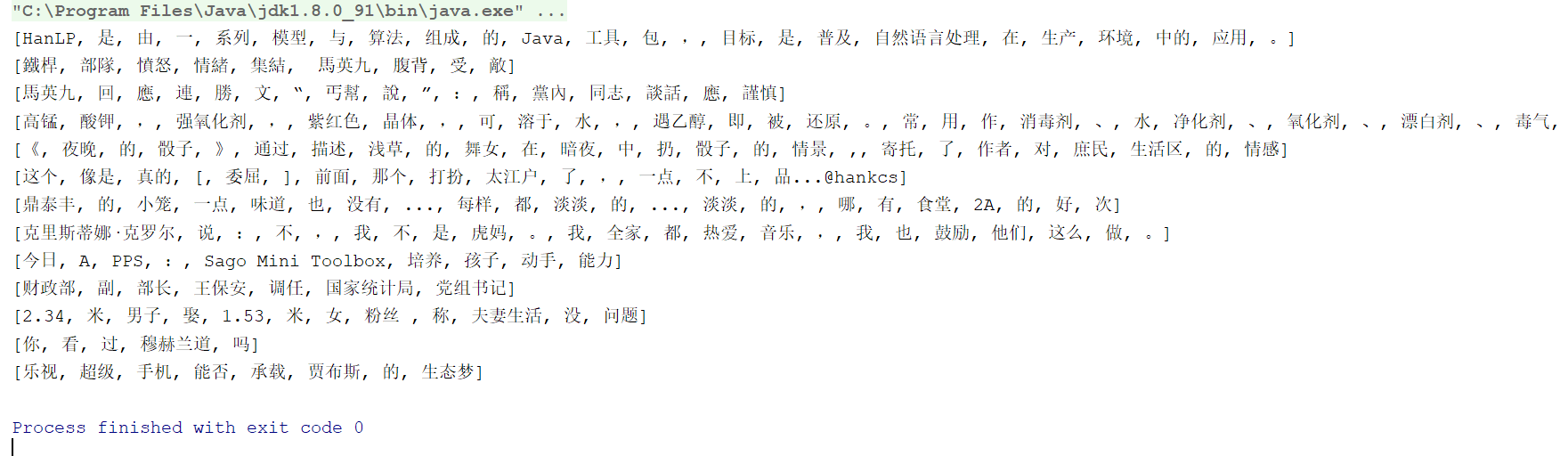

(6) CRF word segmentation, Demo

HanLP.Config.ShowTermNature = false; // Turn off part of speech display

Segment segment = new CRFLexicalAnalyzer(); // The older CRFSegment is deprecated; this constructor declares IOException

String[] sentenceArray = new String[]

{

"HanLP is a Java toolkit composed of a series of models and algorithms; its goal is to popularize the application of natural language processing in production environments.",

"The ironclad troops gathered in anger, and Ma Ying Jeou was attacked from behind", // Traditional Chinese is no problem

"Ma Ying Jeou responded to Lian Shengwen's \"beggar's sect theory\": he said that comrades in the party should be cautious in talking",

"Potassium permanganate, strong oxidant, purplish red crystal, soluble in water and reduced in ethanol. Commonly used as disinfectant, water purifier, oxidant, bleach, poison gas absorbent, carbon dioxide refining agent, etc.", // Technical terms are recognized to some extent

"\"Dice at Night\" describes Asakusa dancers throwing dice in the dark of night, expressing the author's feelings for the common people's district", // Non-news corpus

"This seems true[Grievance]The one in front is too dressed up in Edo. It's not top-grade at all...@hankcs", // Weibo (microblog) text

"Dingtaifeng's cage has no taste at all...Everything is light...Light, where is the canteen 2 A Good times",

"Cristina·Kroll said: No, I'm not a tiger mother. My family loves music and I encourage them to do so.",

"today APPS: Sago Mini Toolbox Cultivate children's practical ability",

"Vice Minister of Finance Wang Baoan was transferred to Secretary of the Party group of the National Bureau of statistics",

"A 2.34 m man marries a 1.53 m female fan and says married life is no problem",

"Have you seen Mulholland road",

"Can LETV super phone carry jabbs's ecological dream"

};

for (String sentence : sentenceArray)

{

List<Term> termList = segment.seg(sentence);

System.out.println(termList);

}

result:

Note: CRF is good at recognizing new words, but it cannot use the user-defined dictionary.
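One reason character-based models such as CRF can find new words is that they are commonly framed as tagging every character rather than looking words up in a dictionary. A widespread scheme uses the tags B (begin), M (middle), E (end), and S (single-character word). The text above does not describe the tag set, so treat this as background and as an assumption. The sketch below only shows the last step, turning a tag sequence into words; the class name `BmesDecodeSketch` is hypothetical, the tags are written by hand rather than predicted by a model, and ASCII letters stand in for Chinese characters.

```java
import java.util.ArrayList;
import java.util.List;

class BmesDecodeSketch {
    /** Cuts text into words according to one B/M/E/S tag per character. */
    static List<String> toWords(String text, String tags) {
        if (text.length() != tags.length()) {
            throw new IllegalArgumentException("expected exactly one tag per character");
        }
        List<String> words = new ArrayList<>();
        StringBuilder current = new StringBuilder();
        for (int i = 0; i < text.length(); i++) {
            current.append(text.charAt(i));
            char tag = tags.charAt(i);
            if (tag == 'E' || tag == 'S') {
                // E closes a multi-character word, S is a complete word by itself.
                words.add(current.toString());
                current.setLength(0);
            }
        }
        if (current.length() > 0) {
            words.add(current.toString()); // tolerate a sequence that ends without E or S
        }
        return words;
    }

    public static void main(String[] args) {
        // Tags written by hand for illustration; a trained model would predict them.
        System.out.println(toWords("hanlpisfast", "BMMMEBEBMME"));
    }
}
```

Because the model decides the tags from character context, a word that was never in any dictionary can still be cut out correctly.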

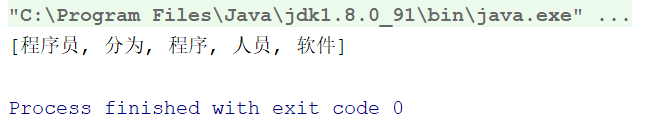

(7) Keyword extraction, Demo

String content = "A programmer (English: Programmer) is a professional engaged in program development and maintenance. Programmers are generally divided into program designers and program coders, but the boundary between the two is not very clear, especially in China. Software practitioners are divided into four categories: junior programmers, senior programmers, system analysts and project managers.";

List<String> keywordList = HanLP.extractKeyword(content, 5);

System.out.println(keywordList);

result:

Note: TextRankKeyword is used internally. Users can also call TextRankKeyword.getKeywordList(document, size) directly.

Algorithm details: "Java implementation of the TextRank algorithm for keyword extraction"
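For readers who want a feel for TextRank without opening the library, here is a minimal sketch of the general algorithm. It is not HanLP's TextRankKeyword: the class name `TextRankSketch`, the window size, the damping factor of 0.85, and the fixed 50 iterations are all assumptions for the example, and the input is expected to be already segmented with stop words removed. Words that appear near each other are linked, and each word's score is repeatedly redistributed to its neighbors, much like PageRank.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

class TextRankSketch {
    /** Ranks words by TextRank score and returns the top `size` of them. */
    static List<String> topKeywords(List<String> words, int window, int size) {
        // Link each word to the different words that follow it within the window.
        Map<String, Set<String>> neighbors = new LinkedHashMap<>();
        for (int i = 0; i < words.size(); i++) {
            String a = words.get(i);
            neighbors.computeIfAbsent(a, k -> new LinkedHashSet<>());
            for (int j = i + 1; j < Math.min(words.size(), i + window); j++) {
                String b = words.get(j);
                if (a.equals(b)) {
                    continue;
                }
                neighbors.get(a).add(b);
                neighbors.computeIfAbsent(b, k -> new LinkedHashSet<>()).add(a);
            }
        }

        final double damping = 0.85;
        final Map<String, Double> score = new HashMap<>();
        for (String word : neighbors.keySet()) {
            score.put(word, 1.0);
        }
        for (int iteration = 0; iteration < 50; iteration++) {
            Map<String, Double> next = new HashMap<>();
            for (Map.Entry<String, Set<String>> entry : neighbors.entrySet()) {
                double sum = 0;
                for (String other : entry.getValue()) {
                    // Each neighbor shares its score equally among its own neighbors.
                    sum += score.get(other) / neighbors.get(other).size();
                }
                next.put(entry.getKey(), (1 - damping) + damping * sum);
            }
            score.putAll(next);
        }

        List<String> ranked = new ArrayList<>(neighbors.keySet());
        ranked.sort((x, y) -> Double.compare(score.get(y), score.get(x)));
        return new ArrayList<>(ranked.subList(0, Math.min(size, ranked.size())));
    }

    public static void main(String[] args) {
        List<String> words = Arrays.asList(
                "segmentation", "dictionary", "segmentation", "model", "segmentation", "corpus");
        System.out.println(topKeywords(words, 2, 3));
    }
}
```

In a real pipeline the word list would come from a tokenizer such as the ones shown in the demos above, and iteration would normally stop once the scores change by less than a small threshold.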

End of Demo

HanLP combines many lexical analysis algorithms and covers most common needs in text processing and analysis. More demos are available at http://www.hankcs.com/nlp/hanlp.html and https://github.com/hankcs/HanLP/releases