Understanding volatile in depth: false sharing and instruction reordering in concurrent programming

1, Memory barrier

What is a memory barrier

A memory barrier, also known as a memory fence or barrier instruction, is a kind of synchronization instruction. It marks a synchronization point in the memory accesses performed by the CPU or the compiler: all reads and writes issued before the point must complete before any operation after the point may begin (from Baidu Encyclopedia).

There are two types of memory barriers:

- A read (load) memory barrier covers memory reads only. Inserting a Load Barrier before a read instruction invalidates the data in the cache and forces it to be reloaded from main memory. It also prevents the CPU from reordering instructions across it.

- A write (store) memory barrier covers memory writes only. Inserting a Store Barrier after a write instruction flushes the latest updates in the write buffer to main memory, making them visible to other threads. Because the write-back is forced by an explicit call, the CPU cannot reorder instructions across it.

On common x86/x64 hardware, this is usually implemented with a lock-prefixed instruction applied to a no-op (for example, lock addl $0x0,(%rsp)).

Relationship between volatile and memory barrier

A write to a variable declared with the volatile keyword is compiled to code that carries a "lock" prefix. Lock is not itself a memory barrier, but it achieves a similar effect. It locks the CPU bus or the cache, and can be understood as a lock at the CPU instruction level.

In terms of execution, it first locks the bus or cache and then executes the instruction that follows. While the bus is locked, read and write requests from other CPUs are blocked until the lock is released. Once it is released, the data in the cache is flushed back to main memory, and that write-back invalidates the copies of the same address cached by other CPUs.

Look at the following code:

    public class Test001 {
        private static volatile boolean a = false;

        public static void main(String[] args) {
            a = true;
        }
    }

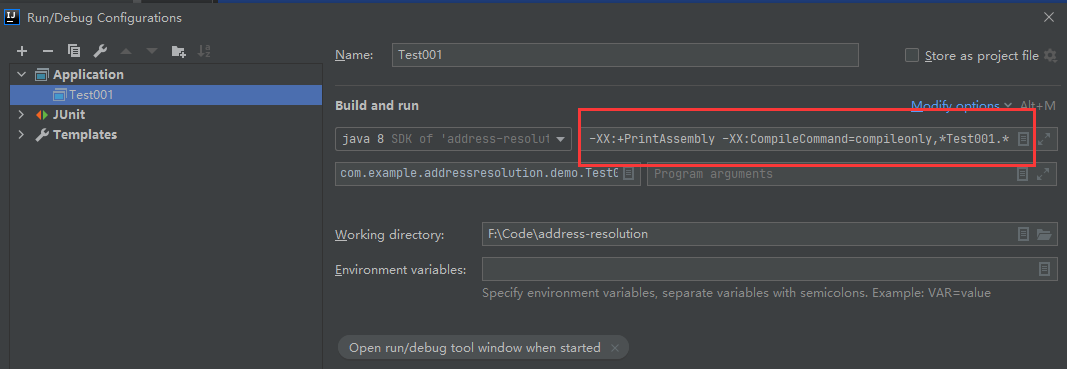

Configure the JVM options as follows:

    -server -Xcomp -XX:+UnlockDiagnosticVMOptions -XX:+PrintAssembly -XX:CompileCommand=compileonly,*Test001.*

Here Test001 is the name of the test class. Download hsdis-amd64.dll from the Internet and put it in C:\java8\jdk\jre\bin\server, otherwise an error will be reported. Then add the options above to the VM options of the run configuration:

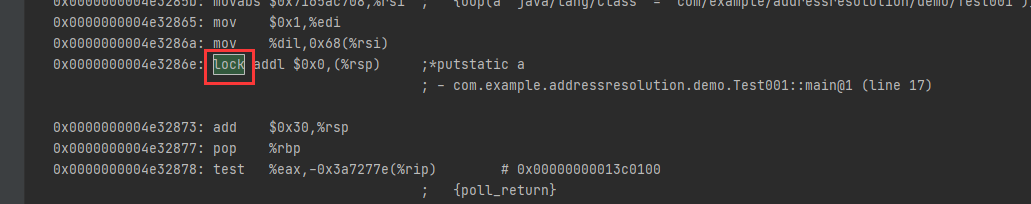

Run the code to get the assembly generated by the JVM. You can see that in main, the write to the shared variable a comes with a lock-prefixed instruction.

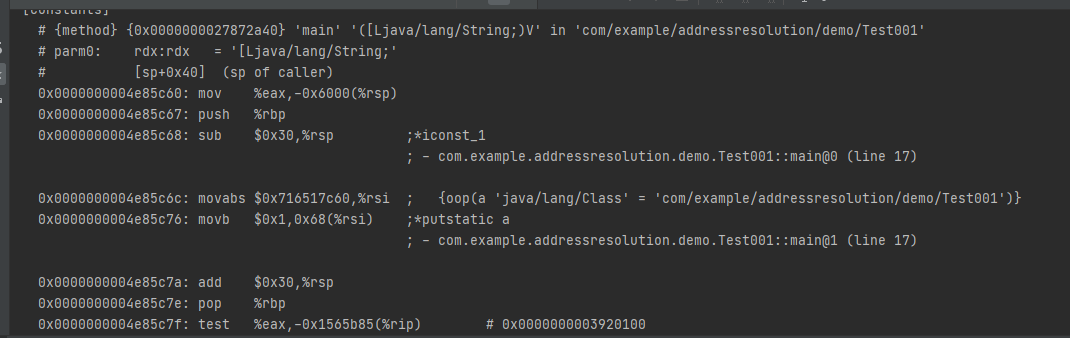

Then remove the volatile keyword and run it again. A search of the whole output no longer finds lock, and there is no lock at the corresponding location either.

In short, declaring a variable volatile does not insert a dedicated memory barrier instruction; it produces an effect similar to a memory barrier.
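
The barrier-like effect can also be observed from plain Java, without reading assembly. The following is a minimal sketch rather than code from the original example: the class VolatilePublication and its fields data and ready are hypothetical names. The writer stores to a plain field and then to a volatile flag; a reader that sees the flag set is guaranteed by the Java memory model to see the earlier plain write as well.

```java
final class VolatilePublication {
    private static int data = 0;                    // plain field, written before the flag
    private static volatile boolean ready = false;  // volatile flag that publishes the write

    /** Starts a reader that waits for the flag, publishes from the caller, returns what the reader saw. */
    static int publishAndRead() {
        data = 0;
        ready = false;
        final int[] seen = new int[1];
        Thread reader = new Thread(() -> {
            while (!ready) {
                Thread.yield();      // spin until the volatile write becomes visible
            }
            seen[0] = data;          // ordered after the volatile read of ready
        });
        reader.start();

        data = 42;                   // plain write ...
        ready = true;                // ... made visible by the volatile write that follows it

        try {
            reader.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return seen[0];
    }

    public static void main(String[] args) {
        System.out.println(publishAndRead()); // prints 42
    }
}
```

If ready were not volatile, the reader would be allowed to spin forever or to read a stale value of data.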

2, How volatile prevents reordering by means of memory barriers

What is reordering

Instruction reordering means that the Java memory model allows the compiler and the processor to reorder instructions in order to run more efficiently. Only instructions that have no data dependency on each other are reordered. In a single thread, reordering guarantees that the final result is the same as if the program had executed in program order.

Causes of reordering

When a CPU core writes to the cache and finds that the cache line is currently held by another core (so this can only happen on a multi-core processor), it may, to improve throughput, go ahead and execute the subsequent cache reads first.

Reordering must respect as-if-serial semantics:

As-if-serial: no matter how the compiler and processor reorder instructions to improve parallelism, the result of a single-threaded program must not change. In other words, the compiler and processor do not reorder operations that have a data dependency between them.

As-if-serial only guarantees the result of a single-threaded program. On multiple cores with multiple threads, the hardware cannot tell which instructions in different threads are causally related, so operations may take effect out of order and the program may produce a wrong result.

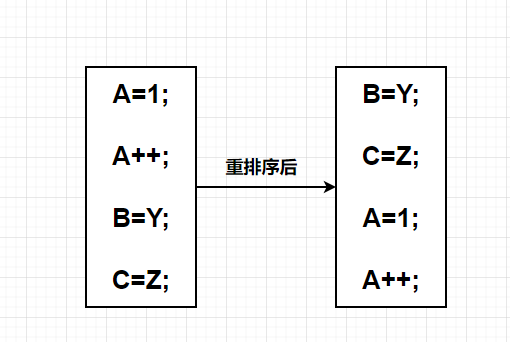

Reordering example

a++ depends on a = 1, so these two will not be reordered. b = y and c = z do not depend on them. Because a++ involves a write, the CPU may reorder b = y and c = z to execute ahead of it. On a single-core CPU running a single thread, of course, this reordering causes no problem.

Take another look at the code under multithreading:

    public class ReorderThread {
        // Globally shared variables
        private static int a = 0, b = 0;
        private static int x = 0, y = 0;

        public static void main(String[] args) throws InterruptedException {
            int i = 0;
            while (true) {
                // Reset the variables at the start of every round
                i++;
                a = 0; b = 0; x = 0; y = 0;
                Thread thread1 = new Thread(new Runnable() {
                    @Override
                    public void run() {
                        a++;
                        x = b;
                        // If the result does not reproduce, repeat the assignment a few more times;
                        // it does not affect the analysis.
                        x = b; x = b; x = b; x = b;
                    }
                });
                Thread thread2 = new Thread(new Runnable() {
                    @Override
                    public void run() {
                        b++;
                        y = a;
                    }
                });
                thread1.start();
                thread2.start();
                // Wait for both child threads to finish before the main thread continues
                thread1.join();
                thread2.join();
                System.out.println("Round " + i + " (" + x + "," + y + ")");
                if (x == 0 && y == 0) {
                    break;
                }
            }
        }
    }

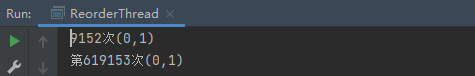

Let's analyze the possible outcomes of the code above:

- Scenario 1: thread 1 runs first and thread 2 runs afterwards. The output is x = 0 and y = 1.

- Scenario 2: thread 2 runs first and thread 1 runs afterwards. The output is x = 1 and y = 0.

- Scenario 3: the two threads run at the same time, and the output is x = 1 and y = 1. This does not show up in the output above, but it is conceivable: both assignments happen after a++ and b++ have executed.

- Scenario 4: x = 0 and y = 0. If each thread ran its statements in program order this could never happen, which is why the loop exits only when x == 0 && y == 0. Yet the loop does eventually exit. Why? Because the instructions were reordered. There is no dependency between a++ and x = b, so they may be reordered: x = b executes first and a++ afterwards. The same applies to thread 2, and the two threads then run at the same time. This may also be why scenario 3 was not observed: with the code reordered, it may never appear in practice.
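
The claim that x = 0 and y = 0 cannot occur without reordering can be checked mechanically. The sketch below is an illustration added for this analysis, and the class name InterleavingEnumerator is hypothetical. It simulates every interleaving of the two threads in which each thread keeps its own program order (a++ before x = b, b++ before y = a) and collects the resulting (x,y) pairs.

```java
import java.util.Set;
import java.util.TreeSet;

final class InterleavingEnumerator {
    /** Returns every (x,y) outcome reachable when both threads run their statements in program order. */
    static Set<String> sequentialOutcomes() {
        Set<String> outcomes = new TreeSet<>();
        // Bit i of mask decides which thread executes step i; each thread gets exactly two steps.
        for (int mask = 0; mask < 16; mask++) {
            if (Integer.bitCount(mask) != 2) {
                continue;
            }
            int a = 0, b = 0, x = 0, y = 0;
            int pc1 = 0, pc2 = 0; // index of the next statement of thread 1 and thread 2
            for (int step = 0; step < 4; step++) {
                boolean thread1Runs = ((mask >> step) & 1) == 1;
                if (thread1Runs) {
                    if (pc1++ == 0) a++; else x = b;
                } else {
                    if (pc2++ == 0) b++; else y = a;
                }
            }
            outcomes.add("(" + x + "," + y + ")");
        }
        return outcomes;
    }

    public static void main(String[] args) {
        System.out.println(sequentialOutcomes()); // prints [(0,1), (1,0), (1,1)]
    }
}
```

Six interleavings exist and none of them yields (0,0), so observing (0,0) in the real program means the statements did not take effect in program order.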

volatile keyword prevents code reordering

Look at the code with volatile keyword:

    public class ReorderThread {
        // Globally shared variables
        private static int a = 0, b = 0;
        private static volatile int x = 0, y = 0;

        public static void main(String[] args) throws InterruptedException {
            int i = 0;
            while (true) {
                i++;
                a = 0;
                b = 0;
                x = 0;
                y = 0;
                Thread thread1 = new Thread(new Runnable() {
                    @Override
                    public void run() {
                        a++;
                        x = b;
                        x = b;
                        x = b;
                        x = b;
                        x = b;
                    }
                });
                Thread thread2 = new Thread(new Runnable() {
                    @Override
                    public void run() {
                        b++;
                        y = a;
                        y = a;
                        y = a;
                        y = a;
                        y = a;
                    }
                });
                thread1.start();
                thread2.start();
                thread1.join();
                thread2.join();
                System.out.println("Round " + i + " (" + x + "," + y + ")");
                if (x == 0 && y == 0) {
                    break;
                }
            }
        }
    }

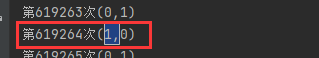

With this version, x = 0 and y = 0 does not appear. This suggests that the volatile keyword prevents instruction reordering.

Recall the earlier discussion: a volatile assignment is compiled together with a lock-prefixed instruction that acts like a memory barrier, and for an assignment this is a write memory barrier.

A write (store) memory barrier covers memory writes only. Inserting a Store Barrier after a write instruction flushes the latest updates in the write buffer to main memory, making them visible to other threads. Because the write-back is forced by an explicit call, the CPU cannot reorder instructions across it.

Note the last sentence: forcing the write to memory does not allow the CPU to reorder instructions across it. Each thread has only two distinct statements. The second cannot be moved up and the first cannot be moved down, so the CPU ends up executing them in the order we wrote.
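
The experiment above places volatile on x and y. As a complementary sketch under a different assumption, the variant below places volatile on the operands a and b instead, which is the form for which the Java memory model directly rules out x = 0 and y = 0: accesses to volatile variables behave as if they were executed in one global order that respects each thread's program order. The class name VolatileOperands is hypothetical, and a = 1 stands in for a++ to keep the volatile accesses simple.

```java
final class VolatileOperands {
    // Assumption of this sketch: the operands are volatile, the results are plain fields.
    private static volatile int a, b;
    private static int x, y;

    /** Runs the two-thread experiment the given number of times and counts how often (0,0) shows up. */
    static int countZeroZero(int rounds) {
        int hits = 0;
        for (int i = 0; i < rounds; i++) {
            a = 0; b = 0; x = 0; y = 0;
            Thread thread1 = new Thread(() -> { a = 1; x = b; });
            Thread thread2 = new Thread(() -> { b = 1; y = a; });
            thread1.start();
            thread2.start();
            try {
                // join() makes the plain writes to x and y visible to the main thread
                thread1.join();
                thread2.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
            if (x == 0 && y == 0) {
                hits++;
            }
        }
        return hits;
    }

    public static void main(String[] args) {
        System.out.println("(0,0) observed " + countZeroZero(10_000) + " times");
    }
}
```

With a and b volatile, at least one of the two threads must read the other's write, so the count stays at zero regardless of timing.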

Why the double-checked locking singleton needs volatile

The singleton code from before:

    public class Singleton03 {
        private static volatile Singleton03 singleton03;

        public static Singleton03 getInstance() {
            // First check, without holding the lock
            if (singleton03 == null) {
                synchronized (Singleton03.class) {
                    // Second check, while holding the lock
                    if (singleton03 == null) {
                        singleton03 = new Singleton03();
                    }
                }
            }
            return singleton03;
        }

        public static void main(String[] args) {
            Singleton03 instance1 = Singleton03.getInstance();
            Singleton03 instance2 = Singleton03.getInstance();
            System.out.println(instance1 == instance2);
        }
    }

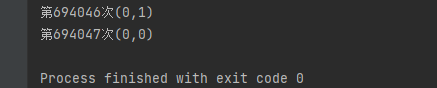

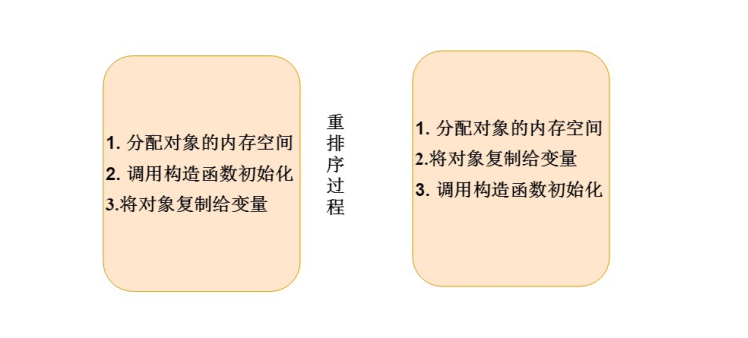

At first glance there is nothing wrong with it, but a closer look at how objects are created reveals a problem. Creating an object with new is not an atomic operation. In the compiled code, new is a three-step process: (1) allocate memory for the object, (2) call the constructor to initialize it, and (3) assign the object's reference to the variable.

Consider the possible reorderings of these three steps:

Recall the reordering example above. With multiple threads, steps 2 and 3 may be reordered: the reference may be assigned to the variable first and the constructor called afterwards. Another thread can then see that the object is not null although the constructor has not yet run, and it fails because it has obtained an incompletely initialized object. The probability is very low, but once such a bug appears it is very hard to track down, so it deserves attention. Declaring the field volatile forbids this reordering.
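
To exercise the pattern under contention, a small harness can call getInstance() from many threads at once. This is a self-contained sketch: LazySingleton, payload and distinctInstances are hypothetical names that mirror Singleton03 above. The harness checks that every thread receives the same object; it cannot prove the absence of the reordering bug, which the volatile field is there to prevent.

```java
import java.util.Collections;
import java.util.IdentityHashMap;
import java.util.Set;
import java.util.concurrent.CountDownLatch;

final class LazySingleton {
    // volatile: the reference must not become visible before the constructor has finished
    private static volatile LazySingleton instance;
    private int payload; // deliberately non-final, set in the constructor

    private LazySingleton() {
        payload = 42;
    }

    static LazySingleton getInstance() {
        if (instance == null) {                      // first check, no lock
            synchronized (LazySingleton.class) {
                if (instance == null) {              // second check, under the lock
                    instance = new LazySingleton();
                }
            }
        }
        return instance;
    }

    int payload() {
        return payload;
    }

    /** Calls getInstance() from many threads at once and returns how many distinct objects were seen. */
    static int distinctInstances(int threadCount) {
        Set<LazySingleton> seen = Collections.synchronizedSet(
                Collections.newSetFromMap(new IdentityHashMap<LazySingleton, Boolean>()));
        CountDownLatch startSignal = new CountDownLatch(1);
        Thread[] workers = new Thread[threadCount];
        for (int i = 0; i < threadCount; i++) {
            workers[i] = new Thread(() -> {
                try {
                    startSignal.await();             // release all workers together
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    return;
                }
                seen.add(getInstance());
            });
            workers[i].start();
        }
        startSignal.countDown();
        for (Thread worker : workers) {
            try {
                worker.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return seen.size();
    }

    public static void main(String[] args) {
        System.out.println(distinctInstances(16));    // prints 1
        System.out.println(getInstance().payload());  // prints 42
    }
}
```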

3, The false sharing problem of volatile

What is false sharing

The CPU reads data from main memory in units of cache lines. The size of a cache line is a power of two in bytes; on the 64-bit machines in common use today it is typically 64 bytes.

For example: in Java, a long takes up 8 bytes and a cache line is 64 bytes, so a line never holds just one long. Suppose that 6 variables of type long (A, B, C, ... 6 variables) are stored in one line (the object header also takes up space, so it is 6 rather than 8; the JVM details are analyzed later). Because of the cache coherence protocol, when variable A, which is declared volatile, is modified, other threads must re-synchronize A. Since the CPU reads data a whole cache line at a time, all six variables are re-synchronized together. As a result, an update to any one of the six variables invalidates the cache line in the other cores, which then have to load it again. This defeats much of the purpose of per-thread working copies in the JMM and severely hurts performance. In addition, C may not be a shared variable at all, yet it is refreshed together with A as if it were shared.
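
The arithmetic behind "6 rather than 8" can be written out as follows. This is only a sketch: the 64-byte line size and the 16-byte object header are assumptions (the header size depends on the JVM and its settings), and the class name CacheLineMath is hypothetical.

```java
final class CacheLineMath {
    static final int CACHE_LINE_BYTES = 64; // assumption: typical line size on x86-64

    /** How many long fields fit in what remains of one cache line after the object header. */
    static int longsThatFit(int headerBytes) {
        return (CACHE_LINE_BYTES - headerBytes) / Long.BYTES;
    }

    public static void main(String[] args) {
        System.out.println(longsThatFit(0));   // prints 8: raw longs per cache line
        System.out.println(longsThatFit(16));  // prints 6: with an assumed 16-byte object header
    }
}
```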

Look at the following code:

    public class Test001 {
        private static boolean a = false;
        private static boolean b = false;

        static class Thread001 extends Thread {
            @Override
            public void run() {
                while (true) {
                    if (a) {
                        System.out.println("here a is true");
                    }
                    if (b) {
                        System.out.println("here b is true");
                    }
                }
            }
        }

        public static void main(String[] args) {
            Thread001 thread001 = new Thread001();
            thread001.start();
            try {
                Thread.sleep(1000);
            } catch (InterruptedException e) {
                e.printStackTrace();
            }
            b = true;
            a = true;
            System.out.println("End of main thread");
        }
    }

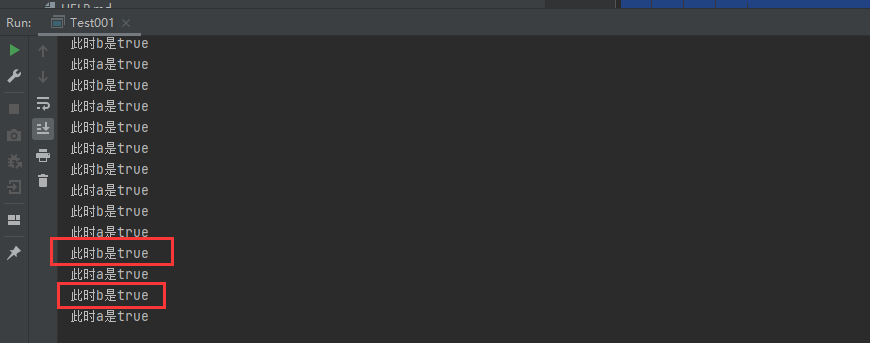

The result is that the statement guarded by the b check is not printed, which indicates that updates to a and b are not kept consistent across threads.

Now look at the following code, in which a is declared volatile:

    public class Test001 {
        private static volatile boolean a = false;
        private static boolean b = false;

        static class Thread001 extends Thread {
            @Override
            public void run() {
                while (true) {
                    if (a) {
                        System.out.println("here a is true");
                    }
                    if (b) {
                        System.out.println("here b is true");
                    }
                }
            }
        }

        public static void main(String[] args) {
            Thread001 thread001 = new Thread001();
            thread001.start();
            try {
                Thread.sleep(1000);
            } catch (InterruptedException e) {
                e.printStackTrace();
            }
            b = true;
            a = true;
            System.out.println("End of main thread");
        }
    }

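Take a look at the execution results: a and b are now both consistent. We only declared a volatile, so why is b consistent as well? This is the false sharing effect at work: a and b are placed in the same cache line, so refreshing a refreshes b too. The Java memory model also guarantees this outcome on its own terms: b is written before the volatile write to a, so a thread that reads a == true is guaranteed to see b == true afterwards.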

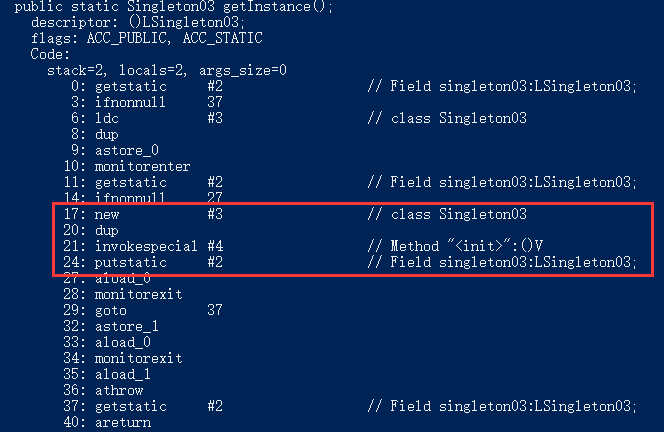

How to avoid false sharing

- In Java 6, you manually add unused fields to pad the object out to 64 bytes. The example uses long, because padding with boolean would take far too many fields.

    public final static class VolatileLong {
        public volatile long value = 0L;
        public long p1, p2, p3, p4, p5, p6;
    }

- In Java 7, the JVM may optimize such unused fields away, so the padding fields are moved into a separate class that the value class inherits from.

    public final static class VolatileLong extends AbstractPaddingObject {
        // The padding fields are inherited from AbstractPaddingObject
        public volatile long value = 0L;
    }

    public class AbstractPaddingObject {
        public long p1, p2, p3, p4, p5, p6;
    }

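- Java 8 added the @Contended annotation. With this annotation in place, the JVM pads the cache line automatically. (In Java 8 the annotation is sun.misc.Contended, and for application classes it only takes effect when the JVM is started with -XX:-RestrictContended.)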

    public final static class Test {
        public VolatileLong value;
    }

    @Contended
    public class VolatileLong {
        public volatile long value = 0L;
    }
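
The cost of false sharing can be measured with a small experiment. The sketch below is illustrative only: FalseSharingDemo, Counter and PaddedCounter are hypothetical names, the padding follows the inheritance idea above in reverse (padding fields in the subclass), and the actual field layout is decided by the JVM. Two threads each increment their own counter, and the run is timed once with unpadded and once with padded counters.

```java
final class FalseSharingDemo {
    /** A counter whose neighbours in memory may share its cache line. */
    static class Counter {
        volatile long value;
    }

    /** Same counter followed by 56 bytes of padding, so two instances are unlikely to share a line. */
    static class PaddedCounter extends Counter {
        long p1, p2, p3, p4, p5, p6, p7;
    }

    /** Lets two threads increment one counter each and returns the elapsed time in nanoseconds. */
    static long timeIncrements(Counter first, Counter second, long iterations) {
        Thread t1 = new Thread(() -> {
            for (long i = 0; i < iterations; i++) first.value++;   // single writer per counter
        });
        Thread t2 = new Thread(() -> {
            for (long i = 0; i < iterations; i++) second.value++;
        });
        long start = System.nanoTime();
        t1.start();
        t2.start();
        try {
            t1.join();
            t2.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return System.nanoTime() - start;
    }

    public static void main(String[] args) {
        long iterations = 50_000_000L;
        long plain = timeIncrements(new Counter(), new Counter(), iterations);
        long padded = timeIncrements(new PaddedCounter(), new PaddedCounter(), iterations);
        System.out.println("unpadded: " + plain / 1_000_000 + " ms");
        System.out.println("padded:   " + padded / 1_000_000 + " ms");
    }
}
```

On many multi-core machines the padded run is noticeably faster, but the numbers depend on the hardware, the JVM and where the objects happen to be allocated, so treat any single measurement with caution.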

Content source: Ant classroom