The most detailed Hive article series in the whole network, it is strongly recommended to collect and pay attention!

Later, the updated articles will list the catalogue of historical articles to help you review the key points of knowledge.

catalogue

Hive database and table operations

2. Create the database and specify the hdfs storage location

1. Create database table syntax

2. Hive field types when creating tables

Series of historical articles

2021 big data Hive (II): Hive's three installation modes are used with MySQL

2021 big data Hive (I): Hive basic concept

preface

The most detailed big data notes of the whole network in 2021 can easily take you from introduction to mastery. This column is updated every day to summarize and share knowledge

Hive database and table operations

1, Database operation

1. Create database

create database if not exists myhive; use myhive;

Note: the table storage location mode of hive is determined by hive site Specified by an attribute in XML

<name>hive.metastore.warehouse.dir</name> <value>/user/hive/warehouse</value>

2. Create the database and specify the hdfs storage location

create database myhive2 location '/myhive2';

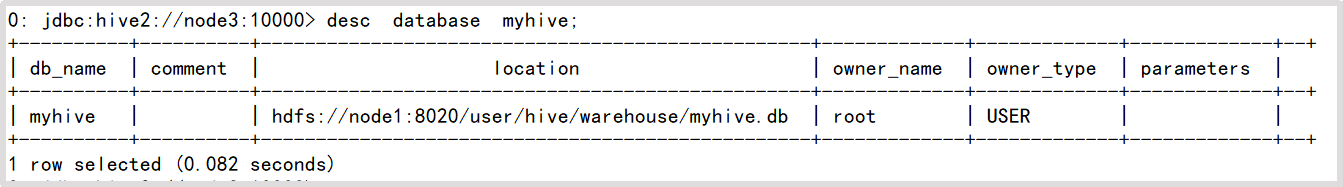

3. View database details

View basic database information

desc database myhive;

4. Delete database

Delete an empty database. If there is a data table under the database, an error will be reported

drop database myhive;

Forcibly delete the database, including the tables below the database

drop database myhive2 cascade;

2, Database table operation

1. Create database table syntax

CREATE [EXTERNAL] TABLE [IF NOT EXISTS] table_name [(col_name data_type [COMMENT col_comment], ...)] [COMMENT table_comment] [PARTITIONED BY (col_name data_type [COMMENT col_comment], ...)] [CLUSTERED BY (col_name, col_name, ...) [SORTED BY (col_name [ASC|DESC], ...)] INTO num_buckets BUCKETS] [ROW FORMAT row_format] [STORED AS file_format] [LOCATION hdfs_path]

explain:

1. CREATE TABLE creates a table with the specified name. If a table with the same name already exists, an exception is thrown; The user can ignore this exception with the IF NOT EXISTS option.

2. EXTERNAL keyword allows users to create an EXTERNAL table and specify a LOCATION to the actual data while creating the table. When Hive creates an internal table, it will move the data to the path pointed by the data warehouse; If an EXTERNAL table is created, only the path of the data is recorded without any change to the LOCATION of the data. When deleting a table, the metadata and data of the internal table will be deleted together, while the EXTERNAL table only deletes the metadata and does not delete the data.

3. LIKE allows users to copy existing table structures, but not data.

4. ROW FORMAT DELIMITED can be used to specify the row separator

5. STORED AS | SEQUENCEFILE|TEXTFILE|RCFILE to specify the storage format of the table data. In hive, the default storage format of the table is TextFile.

6. CLUSTERED BY: divide each table into buckets (partitions in MapReduce). Buckets are more fine-grained data range divisions. Hive is also a bucket organization for a column. Hive determines which bucket the record is stored in by hashing the column value and dividing it by the number of buckets.

7. LOCATION specifies the storage LOCATION of the table on HDFS.

2. Field type when Hive creates a table

| classification | type | describe | Literal example |

| Original type | BOOLEAN | true/false | TRUE |

|

| TINYINT | Signed integer of 1 byte - 128 ~ 127 | 1Y |

|

| SMALLINT | Signed integer of 2 bytes, - 32768 ~ 32767 | 1S |

|

| INT | 4-byte signed integer (- 2147483648 ~ 2147483647) | 1 |

|

| BIGINT | 8-byte signed integer | 1L |

|

| FLOAT | 4-byte single precision floating point number 1.0 |

|

|

| DOUBLE | 8-byte double precision floating-point number | 1.0 |

|

| DEICIMAL | Signed decimal of arbitrary precision | 1.0 |

|

| STRING | String, variable length | "a",'b' |

|

| VARCHAR | Variable length string | "a",'b' |

|

| CHAR | Fixed length string | "a",'b' |

|

| BINARY | Byte array | Cannot represent |

|

| TIMESTAMP | Timestamp, millisecond value, precision | 122327493795 |

|

| DATE | date | '2016-03-29' |

|

| Time | Hour, minute and second | '12:35:46' |

|

| DateTime | Year month day hour minute second |

|

| Complex type | ARRAY | An ordered collection of the same type | ["beijing","shanghai","tianjin","hangzhou"] |

| MAP | Key value, key must be the original type, and value can be of any type | {"Mathematics": 80, "Chinese": 89, "English": 95} | |

| STRUCT | Field collection, which can be of different types | struct('1',1,1.0) |

3. Internal table operation

What is not modified by external is the managed table, which is also called the management table. The data storage location of the internal table is determined by hive metastore. warehouse. The dir parameter determines (default: / user/hive/warehouse). Deleting an internal table will directly delete metadata and stored data. Therefore, the internal table is not suitable for sharing data with other tools.

1. hive watch building experience

create database myhive; use myhive; create table stu(id int,name string); insert into stu values (1,"zhangsan"); select * from stu;

2. Create a table and specify the separator between fields

create table if not exists stu3(id int ,name string) row format delimited fields terminated by '\t';

3. Create a table according to the query results

create table stu3 as select * from stu2;

4. Create a table based on the existing table structure

create table stu4 like stu2;

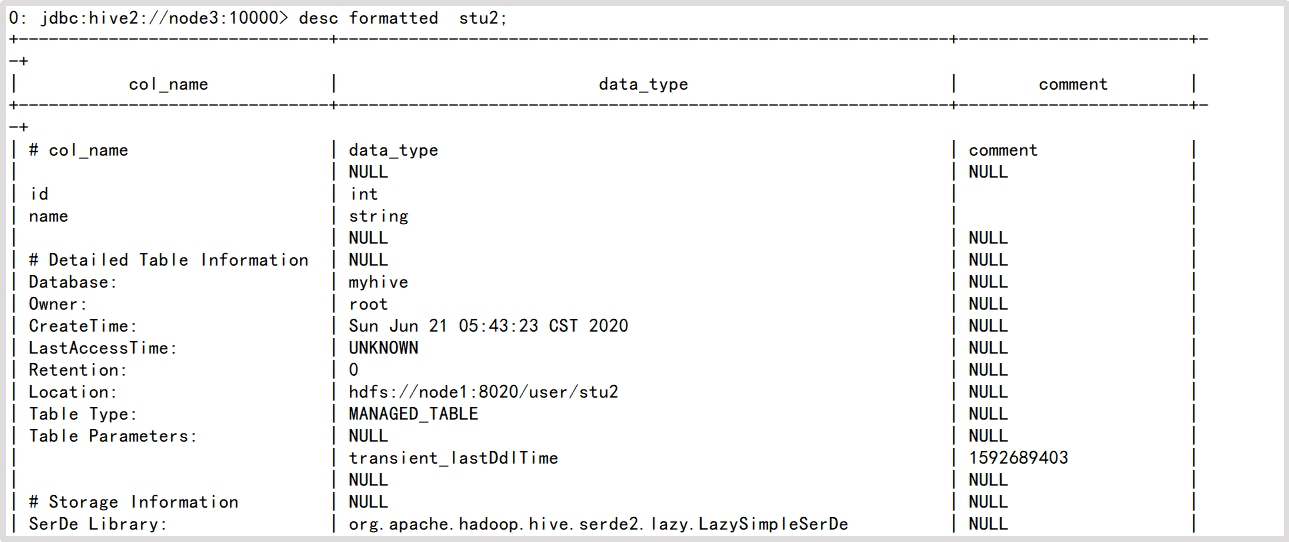

5. Type of query table

desc formatted stu2;

6. Delete table

drop table stu2;

Check the database and HDFS and find that after deleting the internal table, all contents are deleted

4. External table operation

When creating a table, you can specify the external keyword to create an external table. The file corresponding to the external table is stored in the hdfs directory specified by location. When adding a new file to the directory, the table will also read the file (of course, the file format must be consistent with the table definition).

Because the external table loads data from other hdfs paths into the table, the hive table will think that it does not completely monopolize the data. Therefore, when deleting the hive external table, the data is still stored in hdfs and will not be deleted.

1. Data loading command Load

The Load command is used to Load external data into the Hive table

Syntax:

load data [local] inpath '/export/data/datas/student.txt' [overwrite] | into table student [partition (partcol1=val1,...)];

Parameters:

- load data: indicates loading data

- Local: indicates loading data from local to hive table; Otherwise, load data from HDFS to hive table

- inpath: indicates the path to load data

- Overwrite: it means to overwrite the existing data in the table, otherwise it means to append

- into table: indicates which table to load

- student: represents a specific table

- Partition: indicates to upload to the specified partition

2. Operation cases

Create external tables of teacher and student tables respectively, and load data into the tables

The source data is as follows:

student.txt

01 Zhao Lei 1990-01-01 male

02 QianDian 1990-12-21 male

03 sun Feng 1990-05-20 male

04 Li Yun 1990-08-06 male

Zhou Mei, female, December 1, 1991

06 Wu Lan 1992-03-01 female

07 Zheng Zhu 1989-07-01 female

08 Wang Ju 1990-01-20 female

teacher.txt

01 Zhang San

02 Li Si

03 Wang Wu

- Create teacher table:

create external table teacher (tid string,tname string) row format delimited fields terminated by '\t';

- Create student table:

create external table student (sid string,sname string,sbirth string , ssex string ) row format delimited fields terminated by '\t';

- Load data from the local file system into the table

load data local inpath '/export/data/hivedatas/student.txt' into table student;

- Load data and overwrite existing data

load data local inpath '/export/data/hivedatas/student.txt' overwrite into table student;

- Load data from hdfs file system to table

In fact, it is an operation of moving files

You need to upload the data to the hdfs file system in advance,

hadoop fs -mkdir -p /hivedatas cd /export/data/hivedatas hadoop fs -put teacher.csv /hivedatas/ load data inpath '/hivedatas/teacher.csv' into table teacher;

Note that if the teacher table is deleted, the data in hdfs still exists, and after the table is re created, the data in the table will exist directly, because our student table uses an external table. After the drop table, the data in the table will still remain on hdfs

5. Complex type operation

1. Array type

Array is an array type, which stores data of the same type

Source data:

Description: name and locations are separated by tabs, and elements in locations are separated by commas

zhangsan beijing,shanghai,tianjin,hangzhou

wangwu changchun,chengdu,wuhan,beijin

Create table statement

create external table hive_array(name string, work_locations array<string>) row format delimited fields terminated by '\t' collection items terminated by ',';

Import data (import from local, also support import from HDFS)

load data local inpath '/export/data/hivedatas/work_locations.txt' overwrite into table hive_array;

Common query:

-- Query all data select * from hive_array; -- query loction First element in array select name, work_locations[0] location from hive_array; -- query location Number of elements in the array select name, size(work_locations) location from hive_array; -- query location Array contains tianjin Information select * from hive_array where array_contains(work_locations,'tianjin');

6. Partition table

Partition is not an independent table model. It should be combined with internal or external tables:

Internal partition table

External partition table

1. Basic operation

In big data, the most commonly used idea is divide and conquer. The partition table is actually an independent folder corresponding to the hdfs file system. Under this folder are all the data files in the partition.

Partition can be understood as classification, which puts different types of data into different directories.

The classification standard is the partition field, which can be one or more.

The significance of partitioned tables is to optimize queries. Try to use partition fields when querying. If the partition field is not used, it will be all scanned.

In the query, the where clause is used to specify the required partition.

In hive, partitions are folders

Create partition table syntax

create table score(sid string,cid string, sscore int) partitioned by (month string) row format delimited fields terminated by '\t';

Create a watch band with multiple partitions

create table score2 (sid string,cid string, sscore int) partitioned by (year string,month string,day string) row format delimited fields terminated by '\t';

Load data into partition table

load data local inpath '/export/data/hivedatas/score.csv' into table score partition (month='202006');

Load data into a multi partition table

load data local inpath '/export/data/hivedatas/score.csv' into table score2 partition(year='2020',month='06',day='01');

Multi partition joint query is implemented by union # all

select * from score where month = '202006' union all select * from score where month = '202007';

View partition

show partitions score;

Add a partition

alter table score add partition(month='202008');

Add multiple partitions at the same time

alter table score add partition(month='202009') partition(month = '202010');

Note: after adding partitions, you can see an additional folder under the table in the hdfs file system

delete a partition

alter table score drop partition(month = '202010');

7. Barrel separation table

Bucket division is to divide data into different files, which is actually the partition of MapReduce

1. Basic operation

Divide the data into multiple buckets according to the specified fields. In other words, divide the data according to the fields. You can divide the data into multiple files according to the fields

Turn on hive's bucket list function (if an error is reported when executing this command, it means that this version of hive has automatically turned on the bucket sorting function, then proceed to the next step directly)

set hive.enforce.bucketing=true;

Set the number of reduce

set mapreduce.job.reduces=3;

Create bucket table

create table course (cid string,c_name string,tid string) clustered by(cid) into 3 buckets row format delimited fields terminated by '\t';

The data loading of bucket table can only be done through insert overwrite because it is difficult to load the data of bucket table through hdfs # dfs - put file or load # data

Create a common table and load the data of the common table into the bucket table through query through insert overwrite

To create a normal table:

create table course_common (cid string,c_name string,tid string) row format delimited fields terminated by '\t';

Load data in normal table

load data local inpath '/export/data/hivedatas/course.csv' into table course_common;

Load data into the bucket table through insert overwrite

insert overwrite table course select * from course_common cluster by(cid);

8. Modify table

1. Table rename

Basic syntax:

alter table old_table_name rename to new_table_name;

-- Put the watch score3 Modified into score4 alter table score3 rename to score4;

2. Add / modify column information

-- 1:Query table structure desc score4; -- 2:Add column alter table score4 add columns (mycol string, mysco string); -- 3:Query table structure desc score4; -- 4:Update column alter table score4 change column mysco mysconew int; -- 5:Query table structure desc score4;

3. Delete table

drop table score4;

4. Clear table data

You can only empty the management table, that is, the internal table

truncate table score4;

9. Load data in hive table

1. Insert data directly into the partitioned table

Load data through insert into

create table score3 like score;

insert into table score3 partition(month ='202007') values ('001','002',100);Load data by query

create table score4 like score; insert overwrite table score4 partition(month = '202006') select sid,cid,sscore from score;

2. Insert data through query

Load data through load mode

create table score5 like score; load data local inpath '/export/data/hivedatas/score.csv' overwrite into table score5 partition(month='202006');

- Multi insert mode

It is often used in the actual production environment to split a table into two or more parts

Load data into the score table

load data local inpath '/export/data/hivedatas/score.csv' overwrite into table score partition(month='202006');

Create the first part table:

create table score_first( sid string,cid string) partitioned by (month string) row format delimited fields terminated by '\t' ;

Create part II table:

create table score_second(cid string,sscore int) partitioned by (month string) row format delimited fields terminated by '\t';

Load data for the first and second part tables respectively

from score insert overwrite table score_first partition(month='202006') select sid,cid insert overwrite table score_second partition(month = '202006') select cid,sscore;

- Create tables and load data in query statements (as # select)

Save the query results to a table

create table score5 as select * from score;

- When creating a table, specify the loading data path through location

1. Create a table and specify the location on hdfs

create external table score6 (sid string,cid string,sscore int) row format delimited fields terminated by '\t' location '/myscore6';

2. Upload data to hdfs

hadoop fs -mkdir -p /myscore6 hadoop fs -put score.csv/myscore6;

3. Query data

select * from score6;

10. Data export in hive table

Export the data in hive table to any other directory, such as linux local disk, such as hdfs, such as mysql, etc

1. insert export

1) Export query results to local

insert overwrite local directory '/export/data/exporthive' select * from score;

2) Format and export the query results to local

insert overwrite local directory '/export/data/exporthive' row format delimited fields terminated by '\t' collection items terminated by '#' select * from student;

3) Export the query results to HDFS (no local)

insert overwrite directory '/exporthive' row format delimited fields terminated by '\t' select * from score;

2. hive shell command export

Basic syntax: (hive -f/-e execute statement or script > file)

bin/hive -e "select * from myhive.score;" > /export/data/exporthive/score.txt

3. export to HDFS

export table score to '/export/exporthive/score';

4. sqoop export

Due to the limited space, a series of articles on the actual combat of the project are introduced in detail

This blog's big data series articles will be updated every day. Remember to collect and pay attention