Author: York zhou

preface

Following a typical requirements-driven development process, this article walks through an effective scheme for "capturing the real-time audio stream while a video plays on an Android device", from requirements and analysis to implementation and summary.

demand

In-car products have the following requirement. Suppose I connect my Android phone to the car with a USB cable. I then want whatever I do on the phone to be mirrored on the car's large screen.

Many head units today run Android, and dedicated screen-projection software such as CarPlay and CarLife exists for them. However, some cars still have head units running Linux. How can screen content be synchronized between an Android device and a Linux device in that case?

This article covers only one scenario: when the phone plays a video, the video picture and its sound are synchronized to a Linux head unit. Within that scenario, it covers only audio synchronization.

analysis

Audio synchronization between two devices means sending the audio data of one device to the other. One side is the sender and the other is the receiver. The sender continuously sends the audio stream, and the receiver receives it and plays it in real time, which gives the effect we want.

When it comes to communication between devices, many readers will think of TCP and UDP. TCP delivers data in order, while UDP does not guarantee ordering. The audio data we transmit must arrive in order, so we use TCP for all audio transmission.
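To make the sender/receiver model concrete, here is a minimal loopback sketch in Java, the language of the receiver code later in this article. The class `TcpOrderDemo` is hypothetical, and so is the use of an OS-assigned port on 127.0.0.1. A sender thread writes several chunks. The receiver reads until the connection closes and gets the bytes back in the order they were written.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.net.InetAddress;
import java.net.ServerSocket;
import java.net.Socket;

// Hypothetical demo of the sender/receiver model over a loopback TCP connection.
final class TcpOrderDemo {

    // Sends each chunk from a sender thread and returns all bytes the receiver read, in order.
    static byte[] roundTrip(byte[][] chunks) {
        InetAddress loopback = InetAddress.getLoopbackAddress();
        // Port 0: let the OS pick a free port (the article's code uses fixed ports instead).
        try (ServerSocket server = new ServerSocket(0, 1, loopback)) {
            int port = server.getLocalPort();
            Thread sender = new Thread(() -> {
                try (Socket s = new Socket(loopback, port);
                     OutputStream out = s.getOutputStream()) {
                    for (byte[] chunk : chunks) {
                        out.write(chunk); // TCP delivers these bytes in the order they are written
                    }
                } catch (IOException e) {
                    throw new RuntimeException(e);
                }
            });
            sender.start();

            ByteArrayOutputStream received = new ByteArrayOutputStream();
            try (Socket client = server.accept();
                 InputStream in = client.getInputStream()) {
                byte[] buf = new byte[1024];
                int n;
                while ((n = in.read(buf)) != -1) { // -1: the sender closed its end
                    received.write(buf, 0, n);
                }
            }
            sender.join();
            return received.toByteArray();
        } catch (IOException | InterruptedException e) {
            throw new RuntimeException(e);
        }
    }
}
```

TCP preserves byte order but not chunk boundaries. The receiver may see the writes merged or split differently, so the comparison is made on the concatenated bytes.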

Next, let's look at how sound is played on Android. Understanding this is essential for capturing the audio stream during video playback.

First, let's look at video playback and recording. Which APIs does Android provide for them?

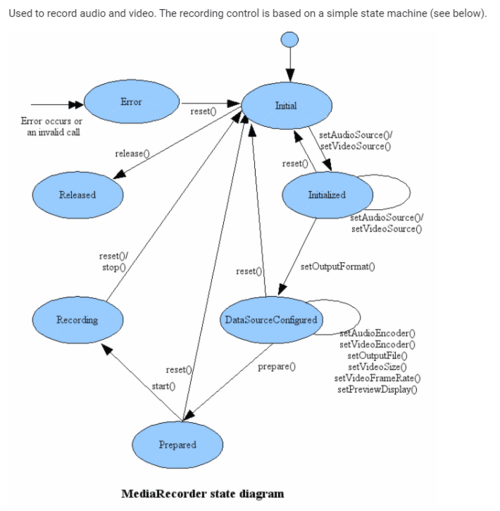

MediaRecorder

Readers who have worked with video and audio recording on Android will already know the MediaRecorder API. On Android, it makes video and audio recording easy to implement. The following is the MediaRecorder state diagram. For details on usage, see the official Android documentation (https://developer.android.google.cn/guide/topics/media/mediarecorder?hl=zh_cn).

MediaPlayer

For video playback, Android provides the MediaPlayer interface (https://developer.android.google.cn/guide/topics/media/mediaplayer?hl=en).

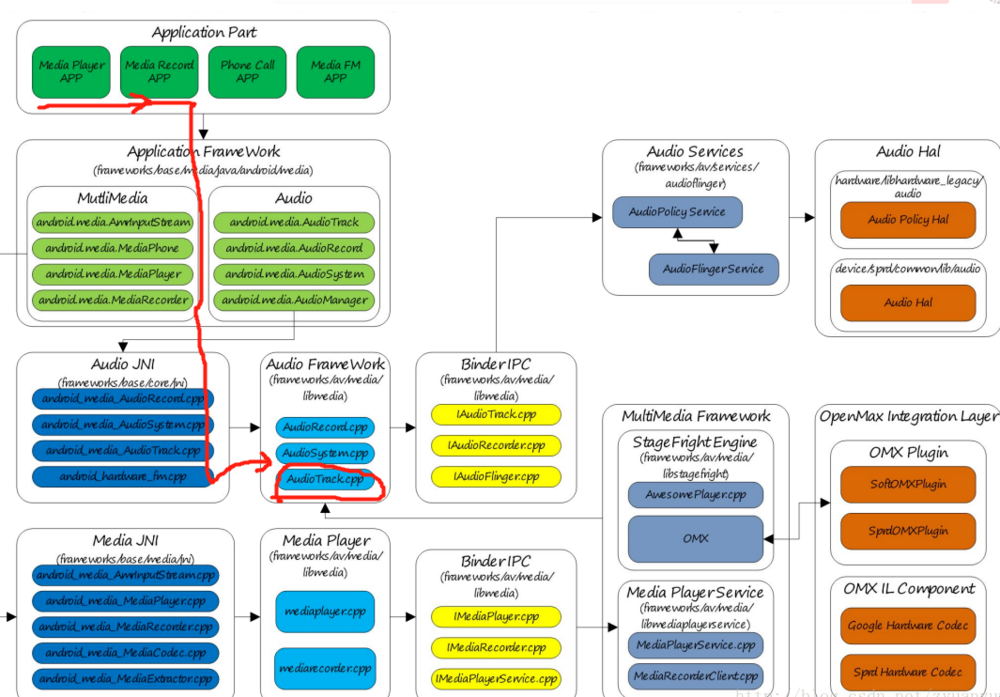

With these two APIs covered, let's look at the architecture diagram of the Android audio system.

The audio system architecture diagram above (see the part marked with the red line) shows the call path. Applications call MediaPlayer to play and MediaRecorder to record. On the playback path, the audio data is ultimately written through AudioTrack, which is implemented in the framework-layer file AudioTrack.cpp.

Back to the focus of this article: we need to intercept the video's audio stream in real time during playback. This interception can be done in AudioTrack.cpp.

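Let's look at an important method in AudioTrack.cpp, AudioTrack::write(). The listing below already includes the lines added for this scheme: the copy into mBuffer and the call to onSocketSendData().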

```cpp
ssize_t AudioTrack::write(const void* buffer, size_t userSize, bool blocking)
{
    if (mTransfer != TRANSFER_SYNC) {
        return INVALID_OPERATION;
    }

    if (isDirect()) {
        AutoMutex lock(mLock);
        int32_t flags = android_atomic_and(
                ~(CBLK_UNDERRUN | CBLK_LOOP_CYCLE | CBLK_LOOP_FINAL | CBLK_BUFFER_END),
                &mCblk->mFlags);
        if (flags & CBLK_INVALID) {
            return DEAD_OBJECT;
        }
    }

    if (ssize_t(userSize) < 0 || (buffer == NULL && userSize != 0)) {
        // Sanity-check: user is most-likely passing an error code, and it would
        // make the return value ambiguous (actualSize vs error).
        ALOGE("AudioTrack::write(buffer=%p, size=%zu (%zd)", buffer, userSize, userSize);
        return BAD_VALUE;
    }

    size_t written = 0;
    Buffer audioBuffer;

    while (userSize >= mFrameSize) {
        audioBuffer.frameCount = userSize / mFrameSize;

        status_t err = obtainBuffer(&audioBuffer,
                blocking ? &ClientProxy::kForever : &ClientProxy::kNonBlocking);
        if (err < 0) {
            if (written > 0) {
                break;
            }
            if (err == TIMED_OUT || err == -EINTR) {
                err = WOULD_BLOCK;
            }
            return ssize_t(err);
        }

        size_t toWrite = audioBuffer.size;
        memcpy(audioBuffer.i8, buffer, toWrite);

        // Added for this scheme: copy the chunk and send it over the socket.
        // Allocate only when sending, because onSocketSendData() is what frees mBuffer.
        if (mCurrentPlayMusicStream && mSocketHasInit) {
            mBuffer = malloc(toWrite);
            if (mBuffer != NULL) {
                memcpy(mBuffer, buffer, toWrite);
                onSocketSendData(toWrite);
            }
        }

        buffer = ((const char *) buffer) + toWrite;
        userSize -= toWrite;
        written += toWrite;

        releaseBuffer(&audioBuffer);
    }

    if (written > 0) {
        mFramesWritten += written / mFrameSize;
    }
    return written;
}
```
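The core of `write()` is the `while (userSize >= mFrameSize)` loop. It consumes data in whole frames, and the lines added for this scheme copy each chunk out to the socket. The Java model below shows that arithmetic. It uses a hypothetical class `FrameWriteModel` and assumes PCM where a frame is `channels * (bitsPerSample / 8)` bytes. The `maxFramesPerChunk` parameter is an assumption that stands in for however many frames `obtainBuffer()` returns on each pass.

```java
import java.io.ByteArrayOutputStream;
import java.util.Arrays;
import java.util.function.Consumer;

// Hypothetical Java model of the AudioTrack::write() loop with the added "send a copy" tap.
final class FrameWriteModel {
    private final int frameSize;          // bytes per frame = channels * bytes per sample
    private final int maxFramesPerChunk;  // stands in for what obtainBuffer() returns per pass
    private final ByteArrayOutputStream sink = new ByteArrayOutputStream(); // models the track buffer
    private final Consumer<byte[]> tap;   // models onSocketSendData()

    FrameWriteModel(int channels, int bitsPerSample, int maxFramesPerChunk, Consumer<byte[]> tap) {
        this.frameSize = channels * (bitsPerSample / 8);
        this.maxFramesPerChunk = maxFramesPerChunk;
        this.tap = tap;
    }

    int frameSize() {
        return frameSize;
    }

    // Returns the number of bytes accepted; a trailing partial frame is not written.
    int write(byte[] buffer) {
        int offset = 0;
        int userSize = buffer.length;
        int written = 0;
        while (userSize >= frameSize) {
            int frames = Math.min(userSize / frameSize, maxFramesPerChunk);
            int toWrite = frames * frameSize;
            sink.write(buffer, offset, toWrite);                          // the memcpy into audioBuffer
            tap.accept(Arrays.copyOfRange(buffer, offset, offset + toWrite)); // the copy sent to the socket
            offset += toWrite;
            userSize -= toWrite;
            written += toWrite;
        }
        return written;
    }

    byte[] played() {
        return sink.toByteArray();
    }
}
```

With stereo 16-bit PCM (4-byte frames), a 19-byte write is accepted as 16 bytes. The trailing 3 bytes do not form a whole frame, so they are not written, just as in the native loop.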

implementation

After the analysis above, the scheme is clear. In the framework layer's AudioTrack.cpp, the sender transmits the audio stream in real time over a socket.
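The sender forwards raw, uncompressed PCM, so the link between the devices must sustain the stream's full bit rate. The article does not state the sample format. The hypothetical helper `PcmRate` below therefore assumes common values such as 44.1 kHz, stereo, 16-bit to estimate the rate.

```java
// Hypothetical helper: bandwidth of an uncompressed PCM stream.
final class PcmRate {
    // Bytes per second = sampleRate * channels * (bitsPerSample / 8).
    static long bytesPerSecond(int sampleRate, int channels, int bitsPerSample) {
        return (long) sampleRate * channels * (bitsPerSample / 8);
    }

    // The same rate in kilobits per second (1 kbit = 1000 bits).
    static double kilobitsPerSecond(int sampleRate, int channels, int bitsPerSample) {
        return bytesPerSecond(sampleRate, channels, bitsPerSample) * 8 / 1000.0;
    }
}
```

For 44.1 kHz stereo 16-bit PCM, this gives 176,400 bytes per second, about 1.41 Mbit/s, sent continuously for as long as the video plays.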

The other side is the receiver, which continuously receives the data sent over the socket. This data is the real-time PCM stream. The receiver plays the stream as it arrives, which achieves real-time audio synchronization.
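TCP is a byte stream, so a single read on the receiver can return a byte count that is not a multiple of the frame size. The `while (userSize >= mFrameSize)` loop in AudioTrack::write() above stops when less than one frame remains. A chunk that ends mid-frame may therefore lose its tail bytes and shift sample alignment. The hypothetical helper `FrameAligner` carries leftover bytes over to the next read, so only whole frames go to the player. It assumes the frame size is known in advance (for example, 4 bytes for stereo 16-bit PCM).

```java
import java.util.Arrays;

// Hypothetical helper: turns arbitrary TCP read sizes into frame-aligned PCM chunks.
final class FrameAligner {
    private final int frameSize;
    private byte[] pending = new byte[0]; // leftover bytes from the previous read (< frameSize)

    FrameAligner(int frameSize) {
        if (frameSize <= 0) {
            throw new IllegalArgumentException("frameSize must be positive");
        }
        this.frameSize = frameSize;
    }

    // Appends the first n bytes of data and returns the largest frame-aligned prefix (may be empty).
    byte[] push(byte[] data, int n) {
        byte[] all = new byte[pending.length + n];
        System.arraycopy(pending, 0, all, 0, pending.length);
        System.arraycopy(data, 0, all, pending.length, n);
        int aligned = (all.length / frameSize) * frameSize;
        pending = Arrays.copyOfRange(all, aligned, all.length); // keep the partial frame for next time
        return Arrays.copyOf(all, aligned);
    }

    int pendingBytes() {
        return pending.length;
    }
}
```

On the receiver, each buffer returned by `push()` could be passed to `AudioTrack.write()` in place of the raw read.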

As for the video stream, can you guess how it could be synchronized in a similar way?

1) Code implementation in AudioTrack.cpp

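```cpp
#include <arpa/inet.h>
#include <assert.h>
#include <errno.h>
#include <netinet/in.h>
#include <stdlib.h>
#include <string.h>
#include <sys/socket.h>

#define DEST_PORT 5046
#define DEST_IP_ADDRESS "192.168.7.6"

int mSocket = -1;
bool mSocketHasInit = false;
bool mCurrentPlayMusicStream = false;
struct sockaddr_in mRemoteAddr;

// Declarations of mBuffer, onSocketSendData() and initTcpSocket() are not shown in the article.

ssize_t AudioTrack::write(const void* buffer, size_t userSize, bool blocking)
{
    ......
        size_t toWrite = audioBuffer.size;
        memcpy(audioBuffer.i8, buffer, toWrite);

        // The code we added: send the audio stream in real time.
        // Allocate only when sending, because onSocketSendData() is what frees mBuffer.
        if (mCurrentPlayMusicStream && mSocketHasInit) {
            mBuffer = malloc(toWrite);
            if (mBuffer != NULL) {
                memcpy(mBuffer, buffer, toWrite);
                onSocketSendData(toWrite);
            }
        }
    ......
}

int AudioTrack::onSocketSendData(uint32_t len)
{
    assert(NULL != mBuffer);
    assert(len > 0);

    if (!mSocketHasInit) {
        initTcpSocket(); // the author's helper; its body is not shown in the article
    }

    // send() may write fewer bytes than requested, so loop until the whole chunk is sent.
    // MSG_NOSIGNAL: if the receiver has gone away, fail with EPIPE instead of raising SIGPIPE.
    const char* p = (const char*) mBuffer;
    size_t remaining = len;
    int result = 0;
    while (remaining > 0) {
        ssize_t ret = send(mSocket, p, remaining, MSG_NOSIGNAL);
        if (ret < 0) {
            if (errno == EINTR) {
                continue; // interrupted by a signal: retry
            }
            result = -1;  // e.g. the receiver closed the connection
            break;
        }
        p += ret;
        remaining -= (size_t) ret;
    }

    free(mBuffer);
    mBuffer = NULL;
    return result;
}
```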

2) Code at the receiving end

(I use an Android device for debugging here. On a Linux system, the approach is the same.)

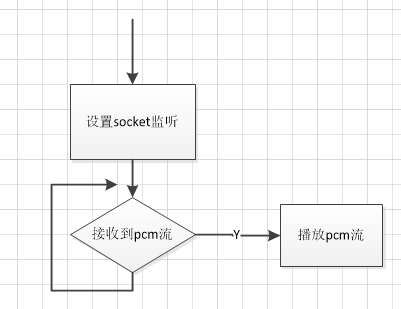

The processing flow of the receiving end is as follows:

1. Set up socket listening;
2. Loop, monitoring the socket port for data;
3. Receive the PCM stream;
4. Play the PCM stream.
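Steps 2 to 4 amount to a read loop per connection. The article's `TcpServerThread` is not shown, so `PcmReceiveLoop` below is a hypothetical stand-in, not the author's class. It takes an `InputStream` (on a device, `socket.getInputStream()`) and a callback that plays the role of `ITcpSocketListener.onRec()`.

```java
import java.io.IOException;
import java.io.InputStream;
import java.util.Arrays;
import java.util.function.Consumer;

// Hypothetical receive loop: read until the sender closes the stream, handing each chunk on.
final class PcmReceiveLoop implements Runnable {
    private final InputStream in;
    private final int bufferSize;
    private final Consumer<byte[]> onPcm;

    PcmReceiveLoop(InputStream in, int bufferSize, Consumer<byte[]> onPcm) {
        this.in = in;
        this.bufferSize = bufferSize;
        this.onPcm = onPcm;
    }

    @Override
    public void run() {
        byte[] buf = new byte[bufferSize];
        try {
            int n;
            // Step 2: keep reading until the sender closes the connection (read() returns -1).
            while ((n = in.read(buf)) != -1) {
                if (n > 0) {
                    // Steps 3 and 4: pass on a copy, because buf is reused by the next read.
                    onPcm.accept(Arrays.copyOf(buf, n));
                }
            }
        } catch (IOException e) {
            // The socket was closed or reset (for example by stopTcpService()): stop receiving.
        }
    }
}
```

Copying each chunk matters when the callback only posts the array to another thread, as the Handler-based code below does.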

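```java
// ---------- PlayActivity.java ------------
import android.media.AudioTrack;
import android.os.Handler;
import android.os.Looper;
import android.os.Message;
import android.util.Log;

import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.ServerSocket;
import java.net.Socket;
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;

// Members of PlayActivity. TAG, mAudioTrack, HANDLER_MSG_PLAY_PCM, sendHandlerMsg(),
// AndroidBoxProtocol, ITcpSocketListener and TcpServerThread are not shown in the article.

private volatile ServerSocket mTcpServerSocket = null;
// Written by the accept thread and iterated by the stop thread, so use a thread-safe list.
private final List<Socket> mSocketList = new CopyOnWriteArrayList<>();
private MyTcpListener mTcpListener = null;
// volatile: cleared on one thread and read by the accept loop on another.
private volatile boolean isAccept = true;

/**
 * Set socket listening
 */
public void startTcpService() {
    Log.v(TAG, "startTcpService();");
    if (mTcpListener == null) {
        mTcpListener = new MyTcpListener();
    }
    new Thread() {
        @Override
        public void run() {
            try {
                mTcpServerSocket = new ServerSocket();
                mTcpServerSocket.setReuseAddress(true);
                InetSocketAddress socketAddress =
                        new InetSocketAddress(AndroidBoxProtocol.TCP_AUDIO_STREAM_PORT);
                mTcpServerSocket.bind(socketAddress);
                while (isAccept) {
                    Socket socket = mTcpServerSocket.accept();
                    mSocketList.add(socket);
                    // Start a new thread to receive socket data
                    new Thread(new TcpServerThread(socket, mTcpListener)).start();
                }
            } catch (Exception e) {
                // accept() throws once stopTcpService() closes the server socket.
                Log.e("TcpServer", "" + e.toString());
            }
        }
    }.start();
}

/**
 * Stop socket listening
 */
private void stopTcpService() {
    isAccept = false;
    final ServerSocket serverSocket = mTcpServerSocket;
    if (serverSocket != null) {
        new Thread() {
            @Override
            public void run() {
                // Closing a socket makes any thread blocked reading from it throw SocketException.
                for (Socket socket : mSocketList) {
                    try {
                        socket.close();
                    } catch (IOException e) {
                        e.printStackTrace();
                    }
                }
                mSocketList.clear();
                try {
                    serverSocket.close(); // unblocks accept()
                } catch (IOException e) {
                    e.printStackTrace();
                }
            }
        }.start();
    }
}

/**
 * Play pcm real-time stream
 * @param buffer PCM bytes received from the socket
 */
private void playPcmStream(byte[] buffer) {
    if (mAudioTrack != null && buffer != null) {
        // Start playback once instead of calling play() for every chunk.
        if (mAudioTrack.getPlayState() != AudioTrack.PLAYSTATE_PLAYING) {
            mAudioTrack.play();
        }
        mAudioTrack.write(buffer, 0, buffer.length);
    }
}

// Bound explicitly to the main looper (the no-argument Handler() constructor is deprecated).
// Note that AudioTrack.write() in streaming mode can block, and here it runs on the UI thread.
private final Handler mUiHandler = new Handler(Looper.getMainLooper()) {
    @Override
    public void handleMessage(Message msg) {
        super.handleMessage(msg);
        switch (msg.what) {
            case HANDLER_MSG_PLAY_PCM:
                playPcmStream((byte[]) msg.obj);
                break;
            default:
                break;
        }
    }
};

private class MyTcpListener implements ITcpSocketListener {
    @Override
    public void onRec(Socket socket, byte[] buffer) {
        sendHandlerMsg(HANDLER_MSG_PLAY_PCM, 0, buffer);
    }
}
```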
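The code above posts every received chunk to the UI thread, where a blocking `AudioTrack.write()` competes with UI work. One possible alternative, offered here as an assumption and not the article's design, is a single dedicated playback thread that drains a queue in arrival order. In the sketch, `PcmPlaybackQueue` is hypothetical. Its `player` callback would wrap `mAudioTrack.write(chunk, 0, chunk.length)` on a device.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.function.Consumer;

// Hypothetical alternative to the UI-thread Handler: one worker thread plays chunks in order.
final class PcmPlaybackQueue {
    private static final byte[] STOP = new byte[0]; // sentinel that ends the worker loop
    private final BlockingQueue<byte[]> queue = new LinkedBlockingQueue<>();
    private final Thread worker;

    PcmPlaybackQueue(Consumer<byte[]> player) {
        worker = new Thread(() -> {
            try {
                while (true) {
                    byte[] chunk = queue.take(); // waits until a chunk arrives
                    if (chunk == STOP) {
                        break;
                    }
                    player.accept(chunk); // on a device: audioTrack.write(chunk, 0, chunk.length)
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }, "pcm-playback");
        worker.start();
    }

    // Called from the receiving thread, e.g. from onRec().
    void offer(byte[] chunk) {
        queue.add(chunk);
    }

    // Plays everything already queued, then stops the worker; returns true if it finished in time.
    boolean finish(long timeoutMs) {
        queue.add(STOP);
        try {
            worker.join(timeoutMs);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return !worker.isAlive();
    }
}
```

The queue here is unbounded. If the receiver consistently gets data faster than it plays, latency grows, so a real implementation might cap or trim it.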

summary

When I first received this requirement, I spent a long time thinking about the scheme. It confirmed once again that familiarity with the framework layer gives us many ideas for solving implementation problems. We ran into many problems during debugging, but I'm glad the results were good and everything worked end to end in the end.

I have run the scheme on Android 5.0 and Android 7.0, and it passed testing on both. I hope it helps you.
