Summary of performance test steps

- 1. Observe and run for at least one day to see how slow the production is

- 2. Start the slow query log and set the threshold. For example, slow SQL is used for more than 5 seconds, and grab it for processing. You can see the SQL statements that return the most recordsets

- 3.explain + slow SQL analysis

- 4.show profile

- 5. Optimize the parameters of SQL database server (O & M or DBA)

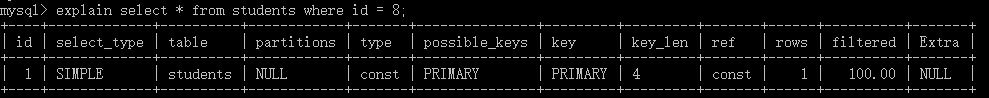

1, explain ---- performance analysis of single SQL statement

(1) Introduction

definition

- Use the explain keyword to simulate the optimizer to execute SQL query statements, so as to know how MySQL handles your SQL statements

- You can observe the query optimizer to see how SQL executes statements

- Test the performance of single SQL statement

What can explain do

- Read order of table: id

- Operation type of data reading operation: select_type

- Which indexes can be used: possible_keys

- Which indexes are actually used: key

- References between tables: table

- How many rows per table are queried by the optimizer: rows

Open explain

- explain + SQL statement [\ G: vertical arrangement result]

- After the normal statement, you can directly analyze the performance of this statement

(2) Interpretation of results

id - reading order of the table

- The sequence number of the select query, including a group of numbers, indicates the order in which the select sentence or operation table is executed in the query. The first row is the drive table

- Two cases

- 1. The ID is the same, and the execution sequence is from top to bottom

- 2. The id is different. If it is a sub query, the id sequence number will increase. The larger the id value, the higher the priority, and the earlier it will be executed

- 3.id is the same but different. The larger the id value, the higher the priority, and the earlier it is executed

select_type operation type

| value | describe |

|---|---|

| SIMPLE | Simple SELECT statement (excluding UNION operation or subquery operation) |

| PRIMARY | SELECT the outermost layer in the query (for example, two tables are UNOIN or there are sub queries. The outer table operation is the primary and the inner operation is UNION) |

| UNION | In the UNION operation, the SELECT in the inner layer is queried, that is, the selected by the UNION |

| SUBQUERY | SELECT of subquery |

| DERIVED | SELECT query contained in FROM clause |

| UNION RESULT | The result of UNION. At this time, the id is NULL |

table

- Which table is the data of this row displayed

partitions

- Query access partition

type

-

From good to bad

- system,const, eq_ret, ref, range, index, ALL

-

Various meanings

- System: indicates that there is only one row of records (equal to the system table). This is a special case of const type and is unlikely to be implemented

- const: indicates that it can be found once through the index. const is used to compare the primary key, which is unlikely to be realized. eg: specify a unique index value

- eq_ret: unique index scanning. For each index key, only one record matches it. Excellent

- ref: non unique index scanning, which returns all rows matching an individual value. In essence, it is also an index access. It returns all rows matching an individual value. It can be multiple rows and should reach the level. eg: joint index

- Range: only retrieve rows in a given range, and use an index to select rows. eg: use between, in, > for index fields

- Index: the difference between index and all is that index type only traverses the index tree

- all: the whole table will be traversed to find matching rows. If the data volume of the database reaches millions, an index can be added to avoid full table scanning

possible_keys

- Possible indexes

- The display may apply one or more indexes similar to those in this table

key

- The index actually used. If null, no index is used

- If an overlay index is used in the query, the index will only appear in the key list

- The index used shall be subject to this. If there is a large amount of data, the effect of adding the index can be seen through this to see whether the index is used

key_len

-

Indicates the number of bytes used in the index. The index length used in the query can be calculated through this column. It is observed that the number of indexes used

-

The shorter the length, the better without losing accuracy

-

key_ The value displayed by Len is the maximum possible length of the index field, not the actual length, i.e. key_len is calculated according to the table definition, not retrieved from the table

-

key_ Length calculation formula of len:

- varchr(24) variable length field and allow null = 24 * (character set: utf8 = 3, GBK = 2, Latin1 = 1) + 1 (null) + 2 (variable length field)

- varchr(10) variable length field and null = 10 * (character set: utf8 = 3, GBK = 2, Latin1 = 1) + 2 (variable length field)

- char(10) fixed field and null = 10 * (character set: utf8=3,gbk=2,latin1=1)+1(NULL)

- char(10) fixed field and null = 10 * is not allowed (character set: utf8=3,gbk=2,latin1=1)

ref

- The column showing the index is used

rows

- According to table statistics and index selection, roughly estimate the number of rows to be read to find the required records

extra

- Contains additional information that is not suitable for display in other columns but is important

- Using FileSort: it indicates that MySQL will use an external index to sort the data instead of reading according to the index order in the table. The sort that cannot be completed by using the index in MySQL is called "file sort". It is not good and needs to be optimized

- Using temporary table: temporary table is used to save intermediate results. MYSQL uses temporary table when sorting query results. It is common in sorting OrderBy and grouping query GroupBy. It is not good

- Using Index: index is used to avoid full table scanning

- Using Where: where is used

- Using Join Buffer: connection cache is used (there are too many join tables, and the value of JoinBuffer in the configuration file can be increased)

- Impossible Where: an impossible condition. The where condition cannot be true. No line is returned

2, showprofile ---- analyze statement resource consumption

(1) Introduction

definition

- It is a measurement provided by MySQL that can be used to analyze the resource consumption of statement execution in the current session and can be used for SQL tuning

- Analyze the resource consumption and execution process of each executed sql statement

- By default, the parameters are turned off and the results of the last 15 runs are maintained

open

- 1. Check whether the current MySQL version supports:

- 2. Turn on function. It is off by default. It needs to be turned on before use

# Query whether to open or close show varibales like'profiling' # The setting is enabled. It is invalid after mysql is restarted. You need to restart it set profiling=on

(2) Result analysis

type

| type | meaning |

|---|---|

| all | Display all overhead information |

| block io | Display block IO related information |

| cpu | Display cpu related information |

| ipc | Display cost information related to sending and receiving |

| memory | Display memory related overhead information |

| page faults | Display overhead information related to page errors |

``` # Displays all executed. 15 by default show profiles; # Query the details of an item according to queryID show profile cpu,block io for query2 ```

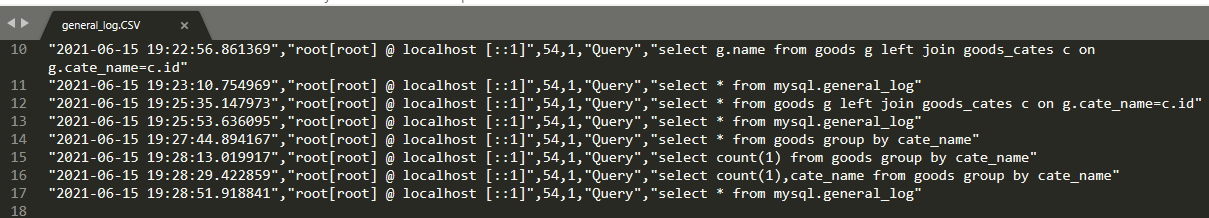

3, Global query log, slow query log - save executed statements

(1) Global query log - records every SQL statement written

be careful

- Compare consumption performance. Record every SQL statement written

- Don't open it easily, open it in case of problems, don't open it in the production environment, open it in the test environment

Enable global query log

-- Open command,Close is=0 set global general_log=1; -- take SQL Statement is written in the table - set global log_output='TABLE'; -- What you wrote SQL Statement will be recorded MySQL Curry's genderal_log surface select * from mysql.general_log;

SQL needs to be modified and dangerous

- converting HEAP TO MYISAM tour results are too large, memory is not enough, and Wang disk is moved

- creating tmp TABLE creating a temporary table

- copying TO tmp TABLE ON DISK is dangerous

- locked lock

(2) Slow query log - records SQL statements that have timed out

definition

- The slow query log of MySQL is a kind of log record provided by mysql. It is used to record the statements whose response time exceeds the threshold value in MySQL. Specifically, it refers to the statements whose running time exceeds long_ query_ If time is worth SQL, it will be recorded in the slow query log

- Specifically, the running time exceeds long_ query_ If time is worth SQL, it will be recorded in the slow query log. long_ query_ The default value of time is 10, which means to run statements for more than 10 seconds.

- Let him check the SQL that exceeds our maximum endurance time. For example, if an SQL is executed for more than 5 seconds, we are even slow SQL. We hope to collect SQL that exceeds 5 seconds and conduct a comprehensive analysis in combination with the previous explain

Open in mysql

- By default, MySQL database does not enable slow query log, so * * we need to set this parameter manually

- Of course, if it is not necessary for tuning, it is generally not recommended to start this parameter, because starting the slow query log will more or less have a certain performance impact. Slow query log supports writing log records to files

-- Check whether the slow query log is enabled and the save path of the slow query log show variables like '%slow_query_log%'; -- Check the threshold (default 10) show variables like 'long_query_time%'; -- Set threshold, restart mysql Will take effect set global long_query_time=3; set global slow_query_log_file=C:\ProgramData\MySQL\MySQL Server 8.0\Data\slow.log -- If it is invalid, it can be in the file my.ini File settings global long_query_time=3 global slow_query_log_file=C:\ProgramData\MySQL\MySQL Server 8.0\Data\slow.log -- test select sleep(5); --stay slow_log.txt You can see this statement, query time and statement

Open in linux

- With the help of the slow query log tool mysqldumpslow

- parameter information

- s: Indicates how to sort

c: Number of visits

l: Lock time

r: Return record

t: Query time

al: average locking time

ar: average number of returned records

t: That is to return the previous data-- see mysqldumpslow What's the function mysqldumpslow --help -- Get up to 10 returned recordsets SQL mysqldumpslow -s r -t 10 D:/phpStudy/PHPTutorial/MySQL/slow_log.txt -- Get the top 10 visits SQL mysqldumpslow -s c -t 10 D:/phpStudy/PHPTutorial/MySQL/slow_log.txt

- s: Indicates how to sort

4, Benchmark - test the performance of the system - evaluate the processing capacity of the server

(1) Benchmark definition

What is benchmarking

- Benchmarking is an activity of measuring and evaluating software performance indicators. It is used to establish the performance benchmark at a certain time, so that when the software and hardware of the system change, the benchmarking can be carried out again to evaluate the impact of the change on performance

- Benchmark is a kind of stress test set for the system

Benchmark features

- It is direct, simple and easy to compare. It is used to evaluate the processing capacity of the server

- It may not be related to the business logic. The query used and the authenticity of the business may not be related to the business environment

Stress test features

- Test the real business data to obtain the pressure that the real system can withstand

- Different topics need to be targeted, and the data and queries used are also real

- Benchmarking is a simplified stress test

Purpose of benchmarking

- Establish the performance baseline of MySQL server, determine the current operation of MySQL server, and determine the effect after optimization

- Simulate the higher load than the current system, find out the expansion bottleneck of the system, increase the database concurrency, observe the changes of QPS (queries processed per second) and TPS (transactions processed per second), and determine the relationship between concurrency and optimal performance

- Test different hardware, software and operating system configurations

(2) How to conduct basic test

Benchmark the whole system

- advantage

- It can test the performance of the whole system, including web server cache, database and so on

- MySQL is not always the bottleneck of performance problems. If you only focus on MySQL, you may ignore other problems, which can reflect the performance problems between the interfaces of various components in the system and reflect the real performance status

- shortcoming

- The most important thing of benchmarking is simplicity. It is possible to test different schemes and find the best scheme. The time of benchmarking must be short, otherwise it will take a lot of time to conduct benchmarking

- The test design is complex and takes a long time

Benchmark MySQL

-

advantage

- The test design is simple and the time is short

-

shortcoming

- Unable to fully understand the performance benchmark of the whole system

Common indicators of MySQL benchmark

- Number of transactions processed per unit time (TPS)

- Number of queries processed per unit time (QPS)

MySQL benchmark tool mysqlslap

- You can simulate the server load and output relevant statistics

- Used outside mysql

Description of common parameters

| parameter | Parameter description |

|---|---|

| –auto-generate-sql | The system automatically generates SQL script for testing |

| –auto-generate-sql-load-type | Type of test statement. Specifies whether the test environment is a read operation, a write operation, or a mixture of the two. Values include: read, key, write, update and mixed (default). |

| –auto-generate-sql-write-number | Specifies the amount of data generated when initializing data. |

| –auto-generate-sql-add-auto-increment | Auto increment ID is automatically added to the generated table |

| –number-char-cols | Specify the number of vachar types in the test table and the number of character type columns in the automatically generated test table. The default is 1 |

| –number-int-cols | Specify the number of INT type columns in the test table and the number of numeric type columns in the automatically generated test table. The default is 1 |

| –number-of-queries | Formulate the number of queries executed by each thread and the total number of test queries (number of concurrent customers) × Number of queries per customer) |

| –query | It is used to specify a custom SQL script and use the custom script to execute the test. For example, you can call a custom stored procedure or SQL statement to execute the test. |

| –create-schema | Specifies the name of the database used to execute the test. schema is database. It will be deleted after the test |

| –concurrency | Specify the number of concurrent threads, that is, how many clients are simulated to execute select at the same time. Multiple values can be specified, with a comma or the value specified by the – delimiter parameter as the separator. For example: – concurrency = 100200500. |

| –engine | Specify the engine to be tested. There can be multiple engines separated by commas. For example: – engines=myisam,innodb. |

| –no-drop | Specifies not to clean up test data |

| –iterations | Specify the number of test runs, which represents the number of tests to be run in different concurrent environments. This is specified. No drop cannot be specified |

| –only-print | Only print test statements without actually executing them. |

| –debug-info, -T | Specify to output additional memory and CPU statistics, and print information related to memory and CPU. |

# give an example mysqlslap --concurrency=1,50,100,200 --iterations=3 --number-int-cols=5 --number-char-cols=5 -- auto-generate-sql --auto-generate-sql-add-autoincrement --engine=myisam,innodb --number-of- queries=10 --create-schema=test -uroot -p root --auto-generate-sql-load-type=read,write

- During this test, there is no innodb block in the read + write operation time of myisam

- -uroot -p root here you need to fill in the user name and password

- – auto generate SQL load type = read, write. The operation filled in here is read + write, which is used for testing

5, trace ---- view how the MySQL optimizer selects the execution plan

introduce

- From the above, explain can view the SQL execution plan, but you can't know why it makes this decision. If you want to determine how to choose between multiple index schemes or what sort method to choose when sorting, is there any good way?

- Trace can see why MySQL chooses this execution plan. Through trace, we can further understand why the optimizer chooses A to execute instead of B, or know the sorting method used for A sort. Help us better understand optimizer behavior

definition

- trace: see how the optimizer selects the tool to execute the plan

open

- From mysql5 Starting from 6, trace is supported to view how the optimizer selects the execution plan

- Enabling this function will affect MySQL performance, so it is temporarily enabled when analyzing problems

-- Indicates on trace. JSON Output format set session optimizer_trace='enabled=on' ,end_markers_in_json=on -- Execute after opening SQL sentence -- see trace Analysis results select *from information_schema.OPTIMISER_TRACE\G -- close trace set session optimizer_trace='enabled=off'

case analysis

- Execute statement

CREATE TABLE `test_trace` ( `id` INT(11) NOT NULL AUTO_INCREMENT, `a` INT(11) DEFAULT NULL, `b` INT(11) DEFAULT NULL, `create_time` DATETIME NOT NULL DEFAULT CURRENT_TIMESTAMP COMMENT 'Record creation time', `update_time` DATETIME NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP COMMENT 'Record update time', PRIMARY KEY (`id`), KEY `idx_a` (`a`), KEY `idx_b` (`b`) ) ENGINE=INNODB DEFAULT CHARSET=utf8mb4;

- Output results

QUERY: select * from t1 where a >900 and b > 910 order by a --SQL sentence

TRACE: {

"steps": [

{

"join_preparation": { --SQL Preparation stage

"select#": 1,

"steps": [

{

"expanded_query": "/* select#1 */ select `t1`.`id` AS `id`,`t1`.`a` AS `a`,`t1`.`b` AS `b`,`t1`.`create_time` AS `create_time`,`t1`.`update_time` AS `update_time` from `t1` where ((`t1`.`a` > 900) and (`t1`.`b` > 910)) order by `t1`.`a`"

}

] /* steps */

} /* join_preparation */

},

{

"join_optimization": { --SQL Optimization stage

"select#": 1,

"steps": [

{

"condition_processing": { --Conditional processing

"condition": "WHERE",

"original_condition": "((`t1`.`a` > 900) and (`t1`.`b` > 910))", --Original condition

"steps": [

{

"transformation": "equality_propagation",

"resulting_condition": "((`t1`.`a` > 900) and (`t1`.`b` > 910))" --Equivalent transfer transformation

},

{

"transformation": "constant_propagation",

"resulting_condition": "((`t1`.`a` > 900) and (`t1`.`b` > 910))" --Constant transfer conversion

},

{

"transformation": "trivial_condition_removal",

"resulting_condition": "((`t1`.`a` > 900) and (`t1`.`b` > 910))" --Structure after removing no conditions

}

] /* steps */

} /* condition_processing */

},

{

"substitute_generated_columns": {

} /* substitute_generated_columns */ --Replace virtual build column

},

{

"table_dependencies": [ --Table dependency details

{

"table": "`t1`",

"row_may_be_null": false,

"map_bit": 0,

"depends_on_map_bits": [

] /* depends_on_map_bits */

}

] /* table_dependencies */

},

{

"ref_optimizer_key_uses": [

] /* ref_optimizer_key_uses */

},

{

"rows_estimation": [ --Estimated table access costs

{

"table": "`t1`",

"range_analysis": {

"table_scan": {

"rows": 1000, --Number of scan lines

"cost": 207.1 --cost

} /* table_scan */,

"potential_range_indexes": [ --Analyze possible indexes

{

"index": "PRIMARY",

"usable": false, --by false,Description the primary key index is not available

"cause": "not_applicable"

},

{

"index": "idx_a", --Index may be used idx_a

"usable": true,

"key_parts": [

"a",

"id"

] /* key_parts */

},

{

"index": "idx_b", --Index may be used idx_b

"usable": true,

"key_parts": [

"b",

"id"

] /* key_parts */

}

] /* potential_range_indexes */,

"setup_range_conditions": [

] /* setup_range_conditions */,

"group_index_range": {

"chosen": false,

"cause": "not_group_by_or_distinct"

} /* group_index_range */,

"analyzing_range_alternatives": { --Analyze the cost of each index

"range_scan_alternatives": [

{

"index": "idx_a", --Use index idx_a Cost of

"ranges": [

"900 < a" --Use index idx_a Scope of

] /* ranges */,

"index_dives_for_eq_ranges": true, --Whether to use index dive(For detailed description, please see the knowledge extension below)

"rowid_ordered": false, --Whether the records obtained by using this index are sorted by primary key

"using_mrr": false, --Whether to use mrr

"index_only": false, --Use overlay index

"rows": 100, --The number of records obtained using this index

"cost": 121.01, --Cost of using this index

"chosen": true --The index may be selected

},

{

"index": "idx_b", --Use index idx_b Cost of

"ranges": [

"910 < b"

] /* ranges */,

"index_dives_for_eq_ranges": true,

"rowid_ordered": false,

"using_mrr": false,

"index_only": false,

"rows": 90,

"cost": 109.01,

"chosen": true --The index may also be selected

}

] /* range_scan_alternatives */,

"analyzing_roworder_intersect": { --Analyze the cost of using index consolidation

"usable": false,

"cause": "too_few_roworder_scans"

} /* analyzing_roworder_intersect */

} /* analyzing_range_alternatives */,

"chosen_range_access_summary": { --Confirm the optimal method

"range_access_plan": {

"type": "range_scan",

"index": "idx_b",

"rows": 90,

"ranges": [

"910 < b"

] /* ranges */

} /* range_access_plan */,

"rows_for_plan": 90,

"cost_for_plan": 109.01,

"chosen": true

} /* chosen_range_access_summary */

} /* range_analysis */

}

] /* rows_estimation */

},

{

"considered_execution_plans": [ --Implementation plan considered

{

"plan_prefix": [

] /* plan_prefix */,

"table": "`t1`",

"best_access_path": { --Optimal access path

"considered_access_paths": [ --Determine the access path

{

"rows_to_scan": 90, --Number of rows scanned

"access_type": "range", --Access type: Yes range

"range_details": {

"used_index": "idx_b" --The index used is: idx_b

} /* range_details */,

"resulting_rows": 90, --Number of result rows

"cost": 127.01, --cost

"chosen": true, --Confirm selection

"use_tmp_table": true

}

] /* considered_access_paths */

} /* best_access_path */,

"condition_filtering_pct": 100,

"rows_for_plan": 90,

"cost_for_plan": 127.01,

"sort_cost": 90,

"new_cost_for_plan": 217.01,

"chosen": true

}

] /* considered_execution_plans */

},

{

"attaching_conditions_to_tables": { --Try adding some other query criteria

"original_condition": "((`t1`.`a` > 900) and (`t1`.`b` > 910))",

"attached_conditions_computation": [

] /* attached_conditions_computation */,

"attached_conditions_summary": [

{

"table": "`t1`",

"attached": "((`t1`.`a` > 900) and (`t1`.`b` > 910))"

}

] /* attached_conditions_summary */

} /* attaching_conditions_to_tables */

},

{

"clause_processing": {

"clause": "ORDER BY",

"original_clause": "`t1`.`a`",

"items": [

{

"item": "`t1`.`a`"

}

] /* items */,

"resulting_clause_is_simple": true,

"resulting_clause": "`t1`.`a`"

} /* clause_processing */

},

{

"reconsidering_access_paths_for_index_ordering": {

"clause": "ORDER BY",

"index_order_summary": {

"table": "`t1`",

"index_provides_order": false,

"order_direction": "undefined",

"index": "idx_b",

"plan_changed": false

} /* index_order_summary */

} /* reconsidering_access_paths_for_index_ordering */

},

{

"refine_plan": [ --Improved implementation plan

{

"table": "`t1`",

"pushed_index_condition": "(`t1`.`b` > 910)",

"table_condition_attached": "(`t1`.`a` > 900)"

}

] /* refine_plan */

}

] /* steps */

} /* join_optimization */

},

{

"join_execution": { --SQL Execution phase

"select#": 1,

"steps": [

{

"filesort_information": [

{

"direction": "asc",

"table": "`t1`",

"field": "a"

}

] /* filesort_information */,

"filesort_priority_queue_optimization": {

"usable": false, --Priority queue optimization sorting is not used

"cause": "not applicable (no LIMIT)" --The reason why priority queue sorting is not used is that there is no limit

} /* filesort_priority_queue_optimization */,

"filesort_execution": [

] /* filesort_execution */,

"filesort_summary": { --Sort details

"rows": 90,

"examined_rows": 90, --Number of rows participating in sorting

"number_of_tmp_files": 0, --Number of temporary files used during sorting

"sort_buffer_size": 115056,

"sort_mode": "<sort_key, additional_fields>" --Sorting mode (see knowledge expansion below for details)

} /* filesort_summary */

}

] /* steps */

} /* join_execution */

}

] /* steps */

}

MISSING_BYTES_BEYOND_MAX_MEM_SIZE: 0 --This field indicates the size of text bytes discarded in the analysis process. In this example, it is 0, indicating that no text is discarded

INSUFFICIENT_PRIVILEGES: 0 --see trace Is your permission insufficient? 0 means you have permission to view trace details

1 row in set (0.00 sec)

Three processes of outputting result text

- Preparation stage: corresponding to the join in the text_ preparation

- Optimization stage: corresponding to the join in the text_ optimization

- Execution phase: the join in the corresponding text_ execution

When using, focus on the optimization stage and execution stage

Our attention

- "rows_estimation": -- estimated table access cost

- analyzing_range_alternatives ": -- analyze the cost of each index

- Alayzing in trace results_ range_ The item of alternatives can be seen: use the index idx_ The cost of a is 121.01 using IDX_ The cost of B is 109.01, obviously using index idx_ The cost of B is lower, so the optimizer chooses idx_b index