### Foreword

The CI/CD setup at my workplace is currently a mess, so on a whim I decided to overhaul it and built a simple Jenkins pipeline. Here is an introduction to it.

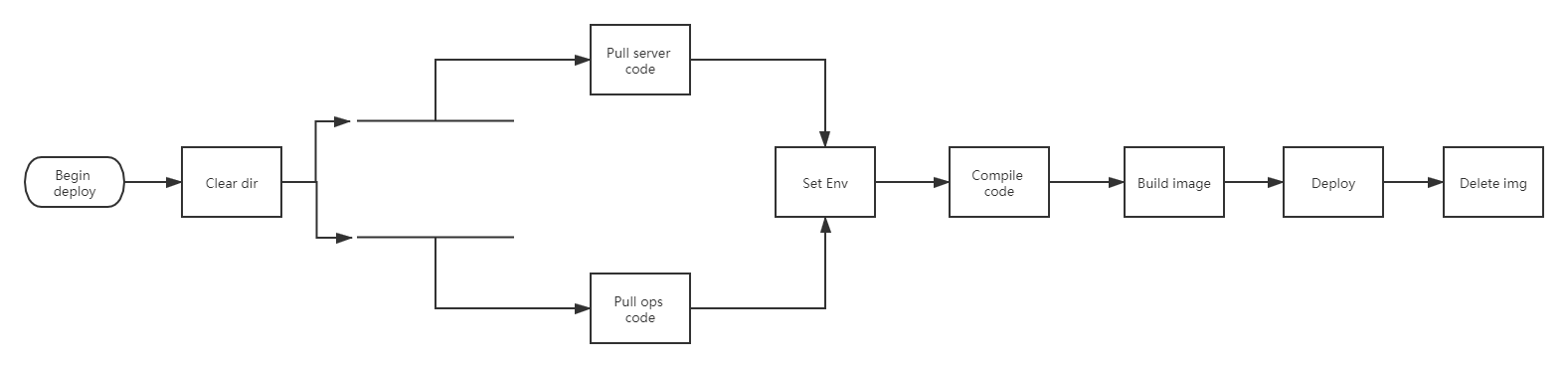

### Write a simple pipeline

The process roughly looks like the figure above: pull the code, compile it, build the image, push the image, and deploy to k8s. The pipeline below follows this main flow, with extra steps added where needed.

```groovy
pipeline {
    agent { label 'pdc&&jdk8' }

    environment {
        git_addr       = "Code repository address"
        git_auth       = "ID of the credential used to pull the code"
        pom_dir        = "Directory of the pom file (relative path)"
        server_name    = "Service name"
        namespace_name = "Namespace the service runs in"
        img_domain     = "Image registry address"
        img_addr       = "${img_domain}/cloudt-safe/${server_name}"
        // cluster_name = "cluster name"
        // Branch, env_name and cluster_name are used below but not defined here,
        // so they must be supplied elsewhere, e.g. as build parameters of the job
    }

    stages {
        stage('Clear dir') {
            steps {
                deleteDir()
            }
        }

        stage('Pull server code and ops code') {
            parallel {
                stage('Pull server code') {
                    steps {
                        script {
                            checkout([
                                $class: 'GitSCM',
                                branches: [[name: '${Branch}']],
                                userRemoteConfigs: [[credentialsId: "${git_auth}", url: "${git_addr}"]]
                            ])
                        }
                    }
                }

                stage('Pull ops code') {
                    steps {
                        script {
                            checkout([
                                $class: 'GitSCM',
                                branches: [[name: 'pipeline-0.0.1']], // branch of the build-script repository
                                doGenerateSubmoduleConfigurations: false,
                                extensions: [[$class: 'RelativeTargetDirectory', relativeTargetDir: 'DEPLOYJAVA']], // check the scripts out into DEPLOYJAVA/
                                userRemoteConfigs: [[credentialsId: 'chenf-o', url: 'Repository address of the build scripts']]
                            ])
                        }
                    }
                }
            }
        }

        stage('Set Env') {
            steps {
                script {
                    // Image tag = build time + short commit ID of the service code
                    date_time      = sh(script: "date +%Y%m%d%H%M", returnStdout: true).trim()
                    git_cm_id      = sh(script: "git rev-parse --short HEAD", returnStdout: true).trim()
                    whole_img_addr = "${img_addr}:${date_time}_${git_cm_id}"
                }
            }
        }

        stage('Compile Code') {
            steps {
                script {
                    withMaven(maven: 'maven_latest_linux') {
                        sh "mvn -U package -am -amd -P${env_name} -pl ${pom_dir}"
                    }
                }
            }
        }

        stage('Build image') {
            steps {
                script {
                    dir("${env.WORKSPACE}/${pom_dir}") {
                        // The base image has been optimized (described in detail later), so one FROM line is all the Dockerfile needs
                        sh "echo 'FROM Base image address' > Dockerfile"

                        withCredentials([usernamePassword(credentialsId: 'faabc5e8-9587-4679-8c7e-54713ab5cd51', passwordVariable: 'img_pwd', usernameVariable: 'img_user')]) {
                            // Single quotes: the shell, not Groovy, expands the secrets, and the password goes in on stdin
                            sh 'echo "$img_pwd" | docker login -u "$img_user" --password-stdin "$img_domain"'
                            sh """
                                docker build -t ${whole_img_addr} .
                                docker push ${whole_img_addr}
                            """
                        }
                    }
                }
            }
        }

        stage('Deploy img to K8S') {
            steps {
                script {
                    dir('DEPLOYJAVA/deploy') {
                        // Run the deployment script
                        sh "/usr/local/python3/bin/python3 deploy.py -n ${server_name} -s ${namespace_name} -i ${whole_img_addr} -c ${cluster_name}"
                    }
                }
            }
            // If the deployment script fails, delete the locally built image
            post {
                failure {
                    sh "docker rmi -f ${whole_img_addr}"
                }
            }
        }

        stage('Clean up') {
            steps {
                script {
                    // Delete the local copy of the image (it has already been pushed)
                    sh "docker rmi -f ${whole_img_addr}"
                }
            }
            post {
                success {
                    // Delete the workspace directory if this stage succeeded
                    deleteDir()
                }
            }
        }
    }
}
```
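The Set Env stage tags every image with the build time and the short commit ID, so each tag is unique and can be traced back to a commit. As a rough illustration outside Jenkins, the Python sketch below reproduces the same naming scheme, `${img_addr}:${date_time}_${git_cm_id}`. The function name `build_image_ref` and the registry `registry.example.com` are hypothetical; only the format comes from the pipeline.

```python
from datetime import datetime


def build_image_ref(img_domain: str, server_name: str, commit_id: str,
                    now: datetime, project: str = "cloudt-safe") -> str:
    """Return '<img_domain>/<project>/<server_name>:<yyyyMMddHHmm>_<commit>'.

    Mirrors img_addr and whole_img_addr in the pipeline; the default
    project 'cloudt-safe' is taken from the pipeline's img_addr.
    """
    img_addr = f"{img_domain}/{project}/{server_name}"
    # Same format as `date +%Y%m%d%H%M` in the Set Env stage
    date_time = now.strftime("%Y%m%d%H%M")
    return f"{img_addr}:{date_time}_{commit_id}"


if __name__ == "__main__":
    ref = build_image_ref("registry.example.com", "demo-service", "3f2a1bc",
                          datetime(2021, 1, 26, 15, 30))
    print(ref)  # registry.example.com/cloudt-safe/demo-service:202101261530_3f2a1bc
```

Because the timestamp comes first and has a fixed width, tags of the same service also sort chronologically.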

### Optimize image builds

The pipeline above contains a command that generates the Dockerfile, and this is where most of the optimization happens. Although my Dockerfile contains only a single FROM line, a series of further steps still runs after it. Compare it with the unoptimized Dockerfile:

```dockerfile
FROM Base image address
RUN mkdir xxxxx
COPY *.jar /usr/app/app.jar
ENTRYPOINT java -jar app.jar
```

Optimized:

```dockerfile
FROM Base image address
```

That single line is the entire optimized Dockerfile. What makes it work is the ONBUILD instruction, briefly introduced below.

ONBUILD can be understood like this. Take the image used here, which is for Java services. It consists of two parts: a base image A that contains the JDK, and an image B that contains the jar package. A comes first and B is built on top of it; in other words, B depends on A.

Consider a complete Java CI/CD flow: we pull the code, compile it, build the image, push the image and update the pod. When building the image, we have to COPY the compiled jar into the base image, so we end up writing a Dockerfile like this:

```dockerfile
FROM jdk base image
COPY xxx.jar /usr/bin/app.jar
ENTRYPOINT java -jar app.jar
```

That looks fine: it only takes three lines. But why use three lines when one will do?

```dockerfile
FROM jdk base image
ONBUILD COPY target/*.jar /usr/bin/app.jar
CMD ["/start.sh"]
```

Build this as an image, named for example `java-service:jdk1.8`. The instruction after ONBUILD is not executed when this image itself is built; it runs later, when another build references the image in its FROM line:

```dockerfile
FROM java-service:jdk1.8
```

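That one line is all a service needs. It is more concise, it keeps the pipeline uniform, and every Java service can use the same one-line Dockerfile. Because the pipeline runs `docker build` in the module directory (`pom_dir`), `target/*.jar` in the ONBUILD COPY refers to that module's build output.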
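To make the trigger behaviour concrete, here is a toy Python model of it. It is a simplification written for this article, not how Docker is implemented: "building" an image records its ONBUILD instructions as triggers instead of running them, and a child build that starts FROM that image applies the inherited triggers right after its FROM line, before its own instructions. Docker clears the triggers once they have run, so grandchild images do not inherit them, and the model reflects that. The `images` store, the `build` function and the `jdk-base` image name are hypothetical.

```python
from typing import Dict, List

# Hypothetical image store: image name -> ONBUILD triggers recorded for its children
images: Dict[str, List[str]] = {}


def build(name: str, dockerfile: List[str]) -> List[str]:
    """Toy 'docker build': return the instructions applied in this build."""
    applied: List[str] = []
    triggers: List[str] = []
    for line in dockerfile:
        keyword, _, rest = line.partition(" ")
        if keyword == "FROM":
            applied.append(line)
            # The parent's triggers fire right after FROM, before this file's own instructions
            applied.extend(images.get(rest, []))
        elif keyword == "ONBUILD":
            # Stored for child images instead of being executed now
            triggers.append(rest)
        else:
            applied.append(line)
    # Only this image's own ONBUILD lines are recorded, so grandchildren do not inherit them
    images[name] = triggers
    return applied


if __name__ == "__main__":
    print(build("java-service:jdk1.8", [
        "FROM jdk-base",
        "ONBUILD COPY target/*.jar /usr/bin/app.jar",
        'CMD ["/start.sh"]',
    ]))  # ['FROM jdk-base', 'CMD ["/start.sh"]']: the COPY did not run
    print(build("my-service", ["FROM java-service:jdk1.8"]))
    # ['FROM java-service:jdk1.8', 'COPY target/*.jar /usr/bin/app.jar']
```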

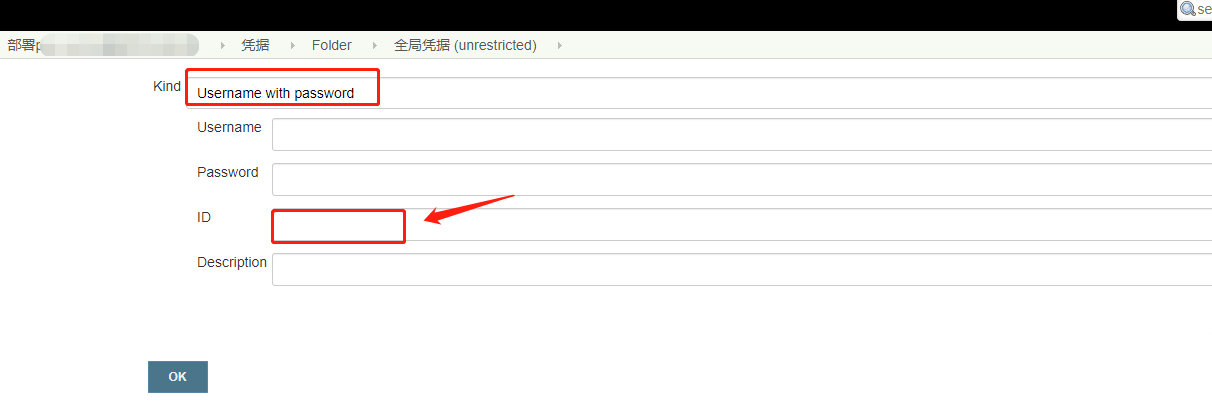

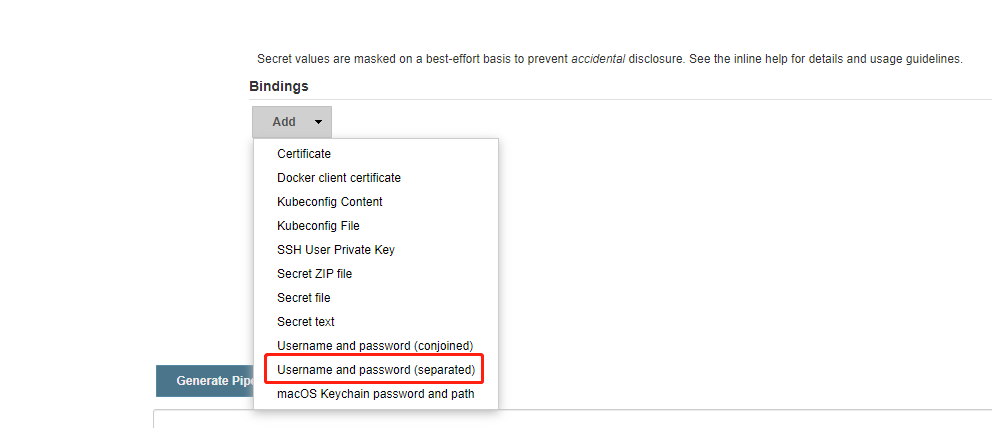

### Use credentials

Pushing an image with docker usually requires authentication. Writing the username and password directly into the pipeline is not safe, so the secrets should be kept out of it, and that is what Jenkins credentials are for. Adding a credential is simple: since we only need to store a username and password, choose the Username with password kind, as shown below.

Pulling code from the Git repository, for example, also needs a credential. Once it has been added, it is used in this part of the pipeline:

```groovy
stage('Pull server code') {
    steps {
        script {
            checkout([
                $class: 'GitSCM',
                branches: [[name: '${Branch}']],
                userRemoteConfigs: [[credentialsId: "${git_auth}", url: "${git_addr}"]]
            ])
        }
    }
}
```

The variable `${git_auth}` holds the ID entered when the credential was added. If no ID is entered, Jenkins generates a random one.

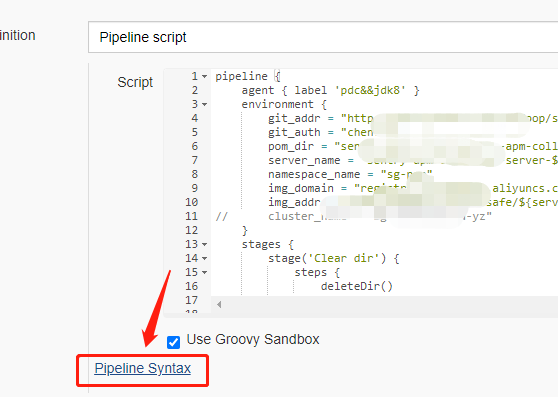

`docker push` needs a credential as well. It is added the same way as above, and the code that uses it can be generated with the Pipeline Syntax tool: click Pipeline Syntax.

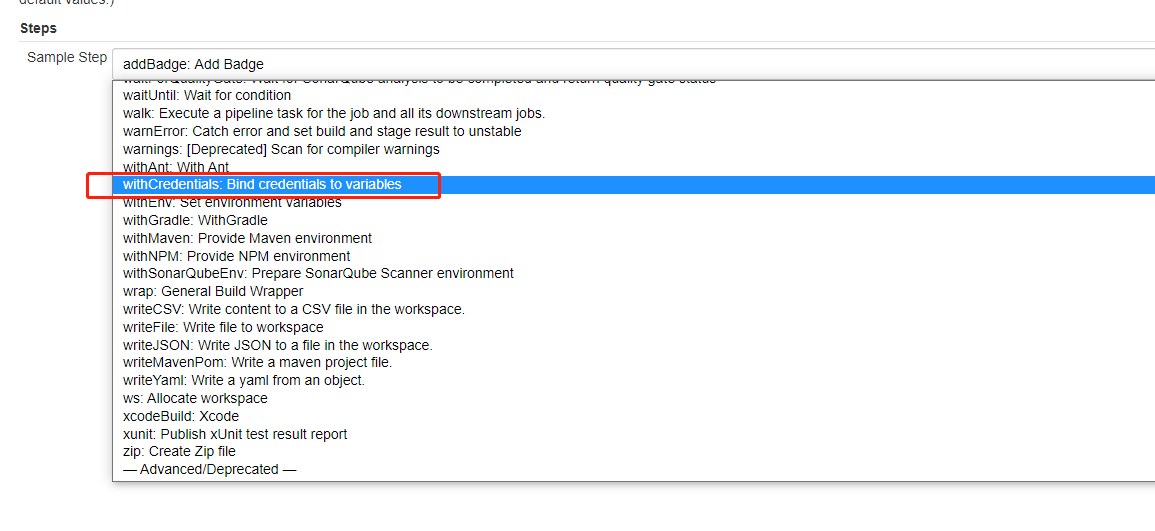

Select withCredentials: Bind credentials to variables

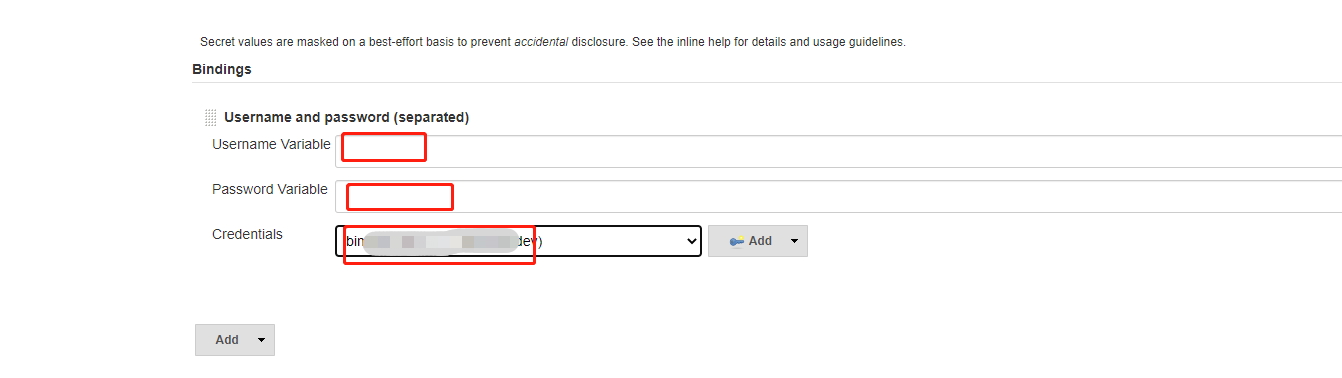

Then bind with the previously added credentials. Here, select the type: Username and password (separated)

Set the variable name of user name and password, and then select the credentials just added

Click generate, which is the following paragraph in the pipeline above:

```groovy
withCredentials([usernamePassword(credentialsId: 'faabc5e8-9587-4679-8c7e-54713ab5cd51', passwordVariable: 'img_pwd', usernameVariable: 'img_user')]) {
    // Single quotes: the shell, not Groovy, expands the secrets, and the password goes in on stdin
    sh 'echo "$img_pwd" | docker login -u "$img_user" --password-stdin "$img_domain"'
    sh """
        docker build -t ${whole_img_addr} .
        docker push ${whole_img_addr}
    """
}
```

`credentialsId` is the ID that Jenkins randomly generated for this credential.
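A related detail of the login step: passing the password with `docker login -p` puts it on the command line, where it can show up in the process list, and the Docker CLI itself warns about this and recommends `--password-stdin`. The Python sketch below applies the same idea to a script that would run inside the `withCredentials` block and read the bound `img_user` / `img_pwd` environment variables. The helper names `login_command` and `docker_login` are hypothetical, and actually running `docker_login` requires the Docker CLI on the agent.

```python
import os
import subprocess
from typing import List, Mapping, Tuple


def login_command(env: Mapping[str, str]) -> Tuple[List[str], str]:
    """Build a docker login call that keeps the password out of argv.

    Assumes img_user / img_pwd were bound by withCredentials and img_domain
    comes from the pipeline's environment block.
    """
    argv = ["docker", "login", "-u", env["img_user"],
            "--password-stdin", env["img_domain"]]
    return argv, env["img_pwd"]


def docker_login(env: Mapping[str, str] = os.environ) -> None:
    argv, password = login_command(env)
    # The password is written to the child process's stdin, never to its arguments
    subprocess.run(argv, input=password, text=True, check=True)
```

With `check=True`, a failed login raises `CalledProcessError`, so a script built on this would stop before trying to push.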

### Run a script to update the image

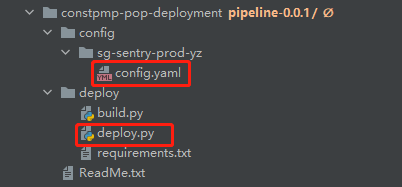

This is a small Python script that calls the Kubernetes API to perform a patch operation. The figure above shows its directory structure:

Core code: `deploy.py`

Core file: `config.yaml`, the kubeconfig file used to authenticate with Kubernetes (one per cluster, under `config/<cluster name>/`)

Here is the content of `deploy.py`:

```python
import os
import sys
import argparse

from kubernetes import client, config


class deployServer:
    def __init__(self, kubeconfig):
        self.kubeconfig = kubeconfig
        # Authenticate against the cluster with the given kubeconfig file
        config.load_kube_config(config_file=self.kubeconfig)
        self._AppsV1Api = client.AppsV1Api()

    def deploy_deploy(self, deploy_namespace, deploy_name, deploy_img=None, deploy_which=1):
        try:
            old_deploy = self._AppsV1Api.read_namespaced_deployment(
                name=deploy_name,
                namespace=deploy_namespace,
            )
            old_deploy_container = old_deploy.spec.template.spec.containers
            container_num = len(old_deploy_container)
            if deploy_which == 1:
                container_name = old_deploy_container[0].name
                old_img = old_deploy_container[0].image
                print("Information about the previous version:\n")
                print("The current Deployment has {} container(s); the first is: {}\n".format(container_num, container_name))
                print("Image of the previous version: {}\n".format(old_img))
                print("Image of this build: {}\n".format(deploy_img))
                print("Replacing the image of the current service...\n")
                old_deploy_container[deploy_which - 1].image = deploy_img
            else:
                print("Only the image of the first container can be replaced")
                sys.exit(1)
            # Send the modified Deployment back as a patch; only the image has changed
            new_deploy = self._AppsV1Api.patch_namespaced_deployment(
                name=deploy_name,
                namespace=deploy_namespace,
                body=old_deploy
            )
            print("The image has been replaced\n")
            return new_deploy
        except Exception as e:
            print(e)
            # Exit non-zero so that the Jenkins stage fails and its post { failure } block runs
            sys.exit(1)


def run():
    parser = argparse.ArgumentParser()
    parser.add_argument('-n', '--name', required=True, help="Name of the service to deploy")
    parser.add_argument('-s', '--namespace', required=True, help="Namespace of the service to deploy")
    parser.add_argument('-i', '--img', required=True, help="Image address of this build")
    parser.add_argument('-c', '--cluster', required=True,
                        help="Name of the Rancher cluster the service runs in")
    args = parser.parse_args()

    if not os.path.exists('../config/' + args.cluster):
        print("The cluster name is not set or is incorrect: {}".format(args.cluster))
        sys.exit(1)

    kubeconfig_file = '../config/' + args.cluster + '/' + 'config.yaml'
    if os.path.exists(kubeconfig_file):
        cli = deployServer(kubeconfig_file)
        cli.deploy_deploy(
            deploy_namespace=args.namespace,
            deploy_name=args.name,
            deploy_img=args.img
        )
    else:
        print("The kubeconfig for cluster {0} does not exist. Please put config.yaml under ../config/{0}/ "
              "(note: the file name config.yaml is fixed and must not be changed)".format(args.cluster))
        sys.exit(1)


if __name__ == '__main__':
    run()
```
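`deploy.py` resolves `../config/<cluster>/config.yaml` relative to the current working directory, which is why the pipeline first changes into `DEPLOYJAVA/deploy`. If the script should also work when started from another directory, the path can be resolved relative to the script file instead. A small sketch with a hypothetical `kubeconfig_path` helper; the directory layout is the one the script already expects.

```python
from pathlib import Path
from typing import Optional


def kubeconfig_path(cluster: str, script_dir: Optional[Path] = None) -> Path:
    """Return <script_dir>/../config/<cluster>/config.yaml.

    script_dir defaults to the directory containing this file, so the result
    no longer depends on the current working directory.
    """
    base = script_dir if script_dir is not None else Path(__file__).resolve().parent
    path = base.parent / "config" / cluster / "config.yaml"
    if not path.is_file():
        raise FileNotFoundError(
            "kubeconfig for cluster {} not found at {}".format(cluster, path))
    return path
```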

The script is simple. The key part is:

```python
new_deploy = self._AppsV1Api.patch_namespaced_deployment(
    name=deploy_name,
    namespace=deploy_namespace,
    body=old_deploy
)
```

This call performs the patch, sending the Deployment with the new image address back to the cluster.
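Sending the whole Deployment back works, but a patch body only needs the fields that change. Because a strategic merge patch merges the `containers` list by container `name`, a body that names just the container and its new image is enough. A minimal sketch, assuming the same `AppsV1Api` client as in `deploy.py` and assuming the client sends a dict body as a strategic merge patch (its usual default for non-list bodies; worth checking against the client version in use). `image_patch` is a hypothetical helper, not part of the script above.

```python
from typing import Any, Dict


def image_patch(container_name: str, image: str) -> Dict[str, Any]:
    """Strategic-merge-patch body that changes one container's image.

    Containers are matched by name, so other containers and fields stay untouched.
    """
    return {
        "spec": {
            "template": {
                "spec": {
                    "containers": [{"name": container_name, "image": image}]
                }
            }
        }
    }


# Hypothetical usage inside deployServer.deploy_deploy (requires cluster access):
# self._AppsV1Api.patch_namespaced_deployment(
#     name=deploy_name, namespace=deploy_namespace,
#     body=image_patch(container_name, deploy_img))
```

A smaller body also avoids sending back fields the script never meant to change.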

After that, all the pipeline has to do is run the script.

### Other notes

Note that the pipeline also handles failures, as shown below:

```groovy
post {
    success {
        // If the stage above succeeded, delete the current workspace directory
        deleteDir()
    }
}
```

Declarative and scripted pipelines handle failures differently. A declarative pipeline uses `post` blocks with conditions such as `success` and `failure`, which is fairly simple, while a scripted pipeline uses Groovy `try`/`catch`. See the official Jenkins documentation for details.
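A `post { failure { ... } }` block only runs if something in the stage fails, and an `sh` step fails when its command exits with a non-zero status. An exception that a script catches and merely prints therefore leaves the stage green, so a script called from `sh` has to report errors through its exit status. The pattern, sketched in Python with a hypothetical `do_deploy` callable standing in for the real work:

```python
import sys
from typing import Callable


def main(do_deploy: Callable[[], None]) -> int:
    """Run the deployment and map the outcome to a process exit status."""
    try:
        do_deploy()
    except Exception as exc:  # report the error instead of swallowing it
        print("Deployment failed: {}".format(exc), file=sys.stderr)
        return 1  # non-zero: the sh step fails, so post { failure } runs
    return 0
```

At the bottom of a script this would be used as `sys.exit(main(real_deploy))`, where `real_deploy` is whatever function does the actual work.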

In addition, parallel execution is used in one place: the service code and the build-script code are pulled at the same time, which speeds up the whole pipeline, as shown below:

```groovy
parallel {
    stage('Pull server code') {
        steps {
            script {
                checkout([
                    $class: 'GitSCM',
                    branches: [[name: '${Branch}']],
                    userRemoteConfigs: [[credentialsId: "${git_auth}", url: "${git_addr}"]]
                ])
            }
        }
    }

    stage('Pull ops code') {
        steps {
            script {
                checkout([
                    $class: 'GitSCM',
                    branches: [[name: 'pipeline-0.0.1']], // branch of the build-script repository
                    doGenerateSubmoduleConfigurations: false,
                    extensions: [[$class: 'RelativeTargetDirectory', relativeTargetDir: 'DEPLOYJAVA']], // check the scripts out into DEPLOYJAVA/
                    userRemoteConfigs: [[credentialsId: 'chenf-o', url: 'Repository address of the build scripts']]
                ])
            }
        }
    }
}
```
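The same idea, running two independent checkouts concurrently instead of one after the other, can be sketched in Python with `concurrent.futures`. The `clone` helper and its arguments are hypothetical stand-ins for the two checkout stages; with real `git` commands the total time is roughly that of the slower checkout rather than the sum of both.

```python
import subprocess
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict


def clone(url: str, branch: str, target: str) -> str:
    """Hypothetical checkout step: shallow-clone one branch into target."""
    subprocess.run(["git", "clone", "--depth", "1", "--branch", branch, url, target],
                   check=True)
    return target


def run_parallel(tasks: Dict[str, Callable[[], str]]) -> Dict[str, str]:
    """Run independent tasks concurrently and collect their results by name."""
    with ThreadPoolExecutor(max_workers=max(1, len(tasks))) as pool:
        futures = {name: pool.submit(task) for name, task in tasks.items()}
        # result() waits for each task; if one raised, its exception propagates
        # here (leaving the with block still waits for the remaining tasks)
        return {name: f.result() for name, f in futures.items()}


# Hypothetical usage mirroring the two parallel stages:
# run_parallel({
#     "server": lambda: clone("<service repo URL>", "<branch>", "server"),
#     "ops": lambda: clone("<build script repo URL>", "pipeline-0.0.1", "DEPLOYJAVA"),
# })
```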

That's it: a simple pipeline is complete. If you want to set up CI/CD quickly with a Jenkins pipeline, this article may serve as a reference.

### Closing remarks

If anything is unclear, feel free to leave a comment below.

Thanks for reading.

If you found this article helpful, please follow me and give it a like!

Author: fei

Link: https://juejin.cn/post/6922388073456074766

### Finally

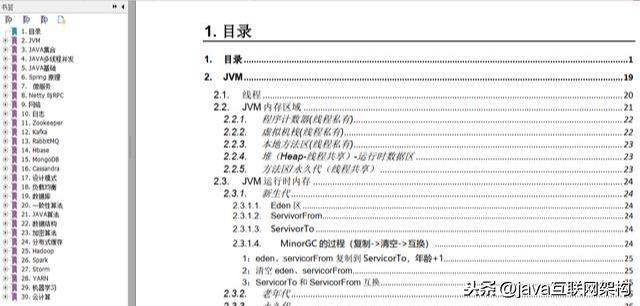

I have also compiled a set of core Java interview topics for interview preparation, which I am offering to my readers for free:

Contents:

Core knowledge points of Java interview

There are 30 topics in total, enough to prepare for interviews and save you the time of searching for and organizing the material yourself!
