Preface

I have written articles about Redis data structures before. This article builds on that material and studies some other features of Redis, so that we can gain a more complete understanding of how to use it.

This article summarizes and discusses the Redis I/O communication model, Redis persistence, and some precautions, methods and principles for running Redis in a distributed deployment. We also look at how Redis can be used as a distributed lock. The content may be a little scattered.

Redis I/O model

How Redis establishes a client connection

Before introducing the Redis I/O model, let's first look at how Redis establishes a connection with a client. Redis accepts client connections by listening on a TCP port or on a Unix socket. When a new client connection is accepted, Redis performs the following operations:

1. Because Redis uses non-blocking I/O multiplexing, the client socket is put into non-blocking mode.

2. The TCP_NODELAY option is set on the socket (disabling Nagle's algorithm) to ensure there are no delays on the connection.

3. A readable file event is created for the socket, so that Redis can read the client's queries as soon as new data is available.

Once the client has been initialized, Redis checks that the number of connected clients does not exceed the configured maximum (set by the maxclients directive). If the limit has already been reached, Redis sends an error to the new client and closes the connection.
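The accept-time steps and the connection-limit check can be mimicked with Java NIO. This is a minimal analogue under stated assumptions, not Redis's own C code: `AcceptSketch`, `onAccept` and the `maxClients` field (a stand-in for the `maxclients` directive) are hypothetical names, and the clients are loopback connections opened by the sketch itself.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.InetAddress;
import java.net.InetSocketAddress;
import java.net.StandardSocketOptions;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.channels.ServerSocketChannel;
import java.nio.channels.SocketChannel;
import java.util.ArrayList;
import java.util.List;

public class AcceptSketch {
    private final int maxClients;  // hypothetical stand-in for the maxclients directive
    private int connected = 0;

    AcceptSketch(int maxClients) {
        this.maxClients = maxClients;
    }

    /** Applies the accept-time steps to a new client; returns false if it was rejected. */
    boolean onAccept(SocketChannel client, Selector selector) throws IOException {
        client.configureBlocking(false);                            // 1. non-blocking socket
        client.setOption(StandardSocketOptions.TCP_NODELAY, true);  // 2. disable Nagle's algorithm
        client.register(selector, SelectionKey.OP_READ);            // 3. watch for readable events
        if (connected >= maxClients) {                              // connection-limit check
            client.close();  // real Redis also sends an error reply before closing
            return false;
        }
        connected++;
        return true;
    }

    /** Connects n loopback clients to a listener limited to maxClients; returns how many were kept. */
    static int acceptAll(int n, int maxClients) {
        AcceptSketch server = new AcceptSketch(maxClients);
        List<SocketChannel> open = new ArrayList<>();
        try (Selector selector = Selector.open();
             ServerSocketChannel listener = ServerSocketChannel.open()) {
            listener.bind(new InetSocketAddress(InetAddress.getLoopbackAddress(), 0));
            InetSocketAddress addr = (InetSocketAddress) listener.getLocalAddress();
            int kept = 0;
            for (int i = 0; i < n; i++) {
                open.add(SocketChannel.open(addr));          // the "client" side
                SocketChannel accepted = listener.accept();  // blocking accept on the server side
                open.add(accepted);
                if (server.onAccept(accepted, selector)) {
                    kept++;
                }
            }
            return kept;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } finally {
            for (SocketChannel ch : open) {
                try { ch.close(); } catch (IOException ignored) { }
            }
        }
    }

    public static void main(String[] args) {
        System.out.println(acceptAll(3, 2));  // prints 2
    }
}
```

With a limit of 2, the third connection goes through the same three steps and is then closed, mirroring the order described above.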

How Redis processes I/O

In everyday use we have all heard that Redis is a single-process, single-threaded application, and also that its I/O is very efficient. How does Redis achieve both at once? Let's first review some I/O basics.

An I/O operation is generally divided into two stages: in the first, the data is prepared (for a read, the kernel waits for the data to arrive); in the second, the data is copied from kernel space into user space. Discussions of I/O usually involve blocking I/O, non-blocking I/O, synchronous I/O, asynchronous I/O and I/O multiplexing.

With blocking I/O, the calling thread is suspended after it issues a request and does no further work until the data is ready and has been copied. With non-blocking I/O, the call returns immediately if the data is not ready, and the caller checks again periodically; once the data is ready, however, the copy from kernel space to user space still blocks, just as it does with blocking I/O. Synchronous and asynchronous I/O are distinguished by whether the caller blocks at any point: if blocking occurs during the I/O operation, it is synchronous I/O; if it does not, it is asynchronous I/O. By this definition, both blocking and non-blocking I/O are synchronous.

Redis uses non-blocking sockets combined with I/O multiplexing. With multiplexing, a single thread monitors many client sockets at once through one system call (such as select, epoll or kqueue) and handles whichever sockets are ready, instead of dedicating a thread to each client. These calls take a timeout: the Redis thread blocks inside the call until at least one socket becomes readable or writable, or until the timeout expires.

During this process, Redis keeps a queue of incoming commands for each client socket, processes the commands in order, and places the results in that client's output queue.
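A single-threaded loop of this kind can be sketched with a Java NIO `Selector`. It is an illustration only, not Redis's event loop (Redis uses its own `ae` event library on top of epoll, kqueue or select). Assumptions: two `java.nio.channels.Pipe` source channels stand in for client sockets, and the class name `MultiplexSketch`, the newline-terminated protocol and the toy `SET`/`GET` handling are hypothetical.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.ByteBuffer;
import java.nio.channels.Pipe;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.charset.StandardCharsets;
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.HashMap;
import java.util.Iterator;
import java.util.List;
import java.util.Map;

public class MultiplexSketch {
    /** Per-client state: unparsed input, a command queue and an output (reply) queue. */
    static final class Client {
        final StringBuilder partial = new StringBuilder();
        final Deque<String> commands = new ArrayDeque<>();
        final List<String> replies = new ArrayList<>();
    }

    private final Map<String, String> store = new HashMap<>();
    private final Selector selector;

    MultiplexSketch(Selector selector) {
        this.selector = selector;
    }

    /** Registers a non-blocking channel, standing in for a client socket. */
    Client register(Pipe.SourceChannel channel) throws IOException {
        channel.configureBlocking(false);
        Client client = new Client();
        channel.register(selector, SelectionKey.OP_READ, client);
        return client;
    }

    /** One loop iteration: block (up to timeoutMs) until channels are ready, then serve each. */
    void pollOnce(long timeoutMs) throws IOException {
        selector.select(timeoutMs);
        Iterator<SelectionKey> it = selector.selectedKeys().iterator();
        while (it.hasNext()) {
            SelectionKey key = it.next();
            it.remove();
            Client client = (Client) key.attachment();
            ByteBuffer buf = ByteBuffer.allocate(256);
            int n = ((Pipe.SourceChannel) key.channel()).read(buf);
            if (n > 0) {
                client.partial.append(new String(buf.array(), 0, n, StandardCharsets.UTF_8));
            }
            int nl;
            while ((nl = client.partial.indexOf("\n")) >= 0) {  // queue each complete command
                client.commands.add(client.partial.substring(0, nl));
                client.partial.delete(0, nl + 1);
            }
            while (!client.commands.isEmpty()) {                 // run commands, queue replies
                client.replies.add(execute(client.commands.poll()));
            }
        }
    }

    private String execute(String cmd) {
        String[] p = cmd.trim().split("\\s+");
        switch (p[0].toUpperCase()) {
            case "SET": store.put(p[1], p[2]); return "OK";
            case "GET": return store.getOrDefault(p[1], "(nil)");
            default: return "ERR unknown command";
        }
    }

    private static void send(Pipe pipe, String line) throws IOException {
        pipe.sink().write(ByteBuffer.wrap((line + "\n").getBytes(StandardCharsets.UTF_8)));
    }

    /** Serves two "clients" from one thread and returns client b's replies. */
    static List<String> demo() {
        try (Selector selector = Selector.open()) {
            MultiplexSketch server = new MultiplexSketch(selector);
            Pipe a = Pipe.open();
            Pipe b = Pipe.open();
            Client clientA = server.register(a.source());
            Client clientB = server.register(b.source());
            send(a, "SET k 1");
            while (clientA.replies.isEmpty()) server.pollOnce(1000);
            send(b, "GET k");
            while (clientB.replies.isEmpty()) server.pollOnce(1000);
            for (Pipe p : new Pipe[] {a, b}) {
                p.sink().close();
                p.source().close();
            }
            return clientB.replies;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println(demo());  // prints [1]
    }
}
```

Because one thread runs every command against the same store, client b sees client a's write without any locking; the price is that a slow command delays every other client.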

Redis distributed locks

As usual, before getting to the topic, let's introduce some basic concepts of distributed systems. A distributed system is a group of computers that communicate over a network and coordinate to complete tasks together; its purpose is to use multiple computers to complete tasks that a single computer cannot. When talking about distributed systems, we often mention the CAP theorem. The CAP theorem describes three properties of a distributed system, consistency, availability and partition tolerance, and states that a distributed system cannot guarantee all three at the same time. In practice, when we develop a system we usually prioritize partition tolerance and availability. Giving up the guarantee of strong consistency does not mean that consistency is not needed; rather, we use technical means to ensure that the data eventually becomes consistent. This property is generally called eventual consistency.

So how does the distributed lock relate to this theory? In ordinary programming, to keep data consistent we use locks so that data can be modified exclusively for a period of time. A distributed lock serves the same purpose across machines: it ensures that only one process, on whichever machine, can modify the data at a time, which keeps the data consistent.

How to implement it

We have covered the motivation and background for distributed locks. Now let's see how to implement one with Redis. Before designing a lock, we must first decide what properties it should guarantee. Taking the lock implementations in the Java language as a reference, we arrive at the following points:

- Mutual exclusion: the process that holds the lock has exclusive access to the resources the lock protects, and it releases the lock once its work is finished.

- Reentrancy: the same process should be able to acquire a lock it already holds.

- Performance: acquiring and releasing the lock should be fast.

- No deadlock: the lock must never be held forever, for example when its holder crashes.

Next, let's look at how to implement a distributed lock. We can design it as follows:

① To acquire the lock, we set a key with Redis's SETNX command. If the key does not exist, SETNX creates it, assigns the value and returns 1; if the key already exists, it returns 0 and leaves the key untouched. The value is a randomly generated UUID, so that each lock holder can be uniquely identified.

② After the lock is created, we set a timeout on it, so that the lock is released automatically when it expires. Because SETNX and EXPIRE are two separate commands, a client that crashes between them would leave a lock with no expiry; the code below guards against this by checking the key's TTL while waiting.

③ The acquisition itself also has a timeout: if the lock cannot be obtained within that time, we give up.

④ To release the lock, we compare the value stored in the key with the UUID returned when the lock was acquired. Only if they match do we delete the key with the DEL command.
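To check the four steps without a running server, here is a single-threaded sketch in which a hypothetical in-memory `FakeRedis` class (with a manually advanced logical clock) stands in for the handful of Redis commands involved. It only models the protocol logic; in real Redis the compare-and-delete of step ④ must be atomic, which is why the implementation code that follows uses WATCH and MULTI/EXEC.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.UUID;

public class LockProtocolSketch {
    /** Hypothetical single-threaded stand-in for the few Redis commands the steps need. */
    static final class FakeRedis {
        private final Map<String, String> values = new HashMap<>();
        private final Map<String, Long> expireAt = new HashMap<>();
        long now = 0;  // logical clock in ms, advanced by the caller instead of real time

        private void evictIfExpired(String key) {
            Long t = expireAt.get(key);
            if (t != null && now >= t) {
                values.remove(key);
                expireAt.remove(key);
            }
        }

        long setnx(String key, String value) {  // 1 if created, 0 if the key already exists
            evictIfExpired(key);
            if (values.containsKey(key)) return 0;
            values.put(key, value);
            return 1;
        }

        void pexpire(String key, long ms) {
            if (values.containsKey(key)) expireAt.put(key, now + ms);
        }

        String get(String key) {
            evictIfExpired(key);
            return values.get(key);
        }

        void del(String key) {
            values.remove(key);
            expireAt.remove(key);
        }
    }

    /** Steps 1-3: SETNX a random UUID, give it a TTL, retry until the acquire timeout. */
    static String acquire(FakeRedis r, String name, long lockTtlMs, long acquireTimeoutMs) {
        String key = "lock:" + name;
        String id = UUID.randomUUID().toString();
        long end = r.now + acquireTimeoutMs;
        while (r.now < end) {
            if (r.setnx(key, id) == 1) {
                r.pexpire(key, lockTtlMs);
                return id;
            }
            r.now += 10;  // stands in for Thread.sleep(10)
        }
        return null;
    }

    /** Step 4: delete the key only if it still holds our UUID. */
    static boolean release(FakeRedis r, String name, String id) {
        String key = "lock:" + name;
        if (id != null && id.equals(r.get(key))) {
            r.del(key);
            return true;
        }
        return false;
    }

    public static void main(String[] args) {
        FakeRedis r = new FakeRedis();
        String a = acquire(r, "order", 1000, 50);    // succeeds at t=0, expires at t=1000
        String b = acquire(r, "order", 1000, 50);    // gives up at t=50
        String c = acquire(r, "order", 1000, 2000);  // succeeds once a's lock has expired
        System.out.println(b == null);               // true
        System.out.println(release(r, "order", a));  // false: the key now holds c's UUID
        System.out.println(release(r, "order", c));  // true
    }
}
```

The last two calls show why step ④ checks the UUID: a holder whose lock has already expired must not delete a lock that now belongs to someone else.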

Implementation code

```java
// DistributeLock.java: lock-related code
import redis.clients.jedis.Jedis;
import redis.clients.jedis.JedisPool;
import redis.clients.jedis.Transaction;
import redis.clients.jedis.exceptions.JedisException;

import java.util.List;
import java.util.UUID;

public class DistributeLock {

    private final JedisPool jedisPool;

    public DistributeLock(JedisPool jedisPool) {
        this.jedisPool = jedisPool;
    }

    /**
     * Acquires the lock: stores a key-value pair with the lock name as key and a UUID as value.
     * If SETNX succeeds, the caller holds the lock and continues with its logic; otherwise the
     * thread sleeps briefly and retries, waiting for the current holder to finish.
     *
     * @param lockName       name of the lock, used to build the Redis key
     * @param acquireTimeOut how long to keep trying to acquire the lock, in milliseconds
     * @param timeout        how long the lock lives before it expires, in milliseconds
     * @return the lock identifier, or null if the lock could not be acquired in time
     */
    public String lockWithTimeOut(String lockName, long acquireTimeOut, long timeout) {
        Jedis conn = null;
        String resIdentifier = null;
        try {
            conn = jedisPool.getResource();
            String identifier = UUID.randomUUID().toString();
            String lockKey = "lock:" + lockName;
            // EXPIRE takes whole seconds; an expiry of 0 would delete the key immediately.
            int lockExpire = (int) Math.max(1, timeout / 1000);
            long end = System.currentTimeMillis() + acquireTimeOut;
            while (System.currentTimeMillis() < end) {
                if (conn.setnx(lockKey, identifier) == 1) {
                    // SETNX and EXPIRE are separate commands, so this pair is not atomic.
                    conn.expire(lockKey, lockExpire);
                    resIdentifier = identifier;
                    return resIdentifier;
                }
                // Repair a lock left without a TTL (holder crashed between SETNX and EXPIRE).
                if (conn.ttl(lockKey) == -1) {
                    conn.expire(lockKey, lockExpire);
                }
                try {
                    Thread.sleep(10);
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    break;  // stop retrying once the thread is interrupted
                }
            }
        } catch (JedisException e) {
            e.printStackTrace();
        } finally {
            if (conn != null) {
                conn.close();
            }
        }
        return resIdentifier;
    }

    /**
     * Releases the lock. WATCH monitors the key: if it is modified before EXEC, the
     * transaction is aborted and the loop retries. Otherwise the key is deleted inside a
     * MULTI/EXEC transaction, but only if it still holds this caller's identifier.
     */
    public boolean releaseLock(String lockName, String identifier) {
        if (identifier == null) {
            return false;  // the lock was never acquired
        }
        Jedis conn = null;
        String lockKey = "lock:" + lockName;  // must match the key used in lockWithTimeOut
        boolean retFlag = false;
        try {
            conn = jedisPool.getResource();
            while (true) {
                conn.watch(lockKey);
                if (identifier.equals(conn.get(lockKey))) {
                    Transaction transaction = conn.multi();
                    transaction.del(lockKey);
                    List<Object> results = transaction.exec();
                    if (results == null) {
                        continue;  // the key changed after WATCH; try again
                    }
                    retFlag = true;
                }
                conn.unwatch();
                break;
            }
        } catch (JedisException e) {
            e.printStackTrace();
        } finally {
            if (conn != null) {
                conn.close();
            }
        }
        return retFlag;
    }
}
```

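```java
// Service.java
import redis.clients.jedis.JedisPool;
import redis.clients.jedis.JedisPoolConfig;

import java.util.concurrent.atomic.AtomicInteger;

public class Service {

    private static JedisPool pool = null;

    static {
        JedisPoolConfig config = new JedisPoolConfig();
        config.setMaxTotal(200);              // maximum number of connections
        config.setMaxIdle(8);                 // maximum number of idle connections
        config.setMaxWaitMillis(1000 * 100);  // maximum wait for a free connection, in ms
        config.setTestOnBorrow(false);        // do not validate connections when borrowing
        pool = new JedisPool(config, "127.0.0.1", 6379, 3000);
    }

    DistributeLock lock = new DistributeLock(pool);

    AtomicInteger n = new AtomicInteger(50);

    public void kill() {
        // Try for up to 5 s to get the lock; the lock itself expires after 1 s.
        String identifier = lock.lockWithTimeOut("killLock", 5000, 1000);
        if (identifier == null) {
            System.out.println(Thread.currentThread().getName() + " failed to get the lock");
            return;
        }
        try {
            System.out.println(Thread.currentThread().getName() + " got the lock");
            System.out.println(n.decrementAndGet());
        } finally {
            lock.releaseLock("killLock", identifier);
        }
    }
}
```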

```java
// TestThread.java
public class TestThread implements Runnable {

    private Service service;

    public TestThread(Service service) {
        this.service = service;
    }

    @Override
    public void run() {
        service.kill();
    }
}
```

```java
// Test.java
public class Test {

    public static void main(String[] args) {
        Service service = new Service();
        for (int i = 0; i < 50; i++) {
            TestThread thread = new TestThread(service);
            Thread t = new Thread(thread);
            t.start();
        }
    }
}
```

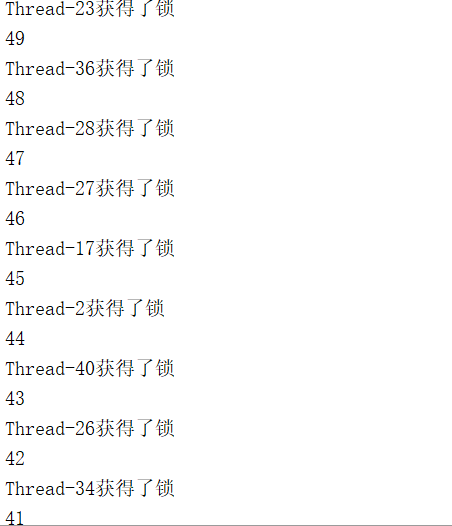

The execution results are as follows. It can be seen that each thread has successfully obtained the lock and executed.

Redis partitioning

Redis partitioning is the process of distributing data across multiple Redis instances, so that each instance holds only a subset of all keys. Partitioning lets Redis use the combined memory of many machines; without it, Redis is limited to the memory of a single machine. Partitioning also spreads the computing load across multiple machines, which increases Redis's total processing power and network bandwidth.

① Partitioning methods

For example, suppose we need to store the keys user:1 to user:N across multiple Redis instances. We have the following partitioning schemes:

1. The simplest method is range partitioning by user id: each Redis instance stores the data of a subset of users. For example, one instance stores users 1-1000 and another stores users 1001-2000. This makes it easy to spread data across instances, but it has an obvious drawback: we need a table that maps each range to its Redis instance, and maintaining that table adds overhead and considerably increases the complexity of the scheme.

2. Another way to partition is hash partitioning. A hash function converts the key name into a number, and that number modulo the number of Redis instances gives the index of the instance that stores the key.

3. Consistent hashing: each cache server is placed on a hash ring of 2^32 positions using a 32-bit hash (for example, of its address). Each key is hashed onto the same ring and stored on the nearest server node found by walking the ring clockwise from the key's position.

4. Although consistent hashing balances the data load across Redis instances well, Redis Cluster takes a different approach called hash slots. A Redis cluster has 16384 hash slots, and every key belongs to exactly one of them. The cluster computes a key's slot as CRC16(key) % 16384, and each node in the cluster is responsible for a subset of the slots.
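The four schemes above can be sketched, assuming client-side partitioning, in one hypothetical class, `PartitionSketch`. The instance names, the use of CRC32 as the 32-bit hash for schemes 2 and 3, and the absence of virtual nodes on the ring are illustrative assumptions. Only the slot function follows the Redis Cluster specification (CRC16, XMODEM variant, modulo 16384), and even it ignores hash tags.

```java
import java.nio.charset.StandardCharsets;
import java.util.Map;
import java.util.TreeMap;
import java.util.zip.CRC32;

public class PartitionSketch {
    // 1. Range partitioning: the mapping table (lower bound of each id range -> instance).
    static String byRange(TreeMap<Long, String> table, long userId) {
        Map.Entry<Long, String> e = table.floorEntry(userId);
        if (e == null) throw new IllegalArgumentException("no range covers user " + userId);
        return e.getValue();
    }

    // 32-bit hash of a string; CRC32 is an assumed choice, any good 32-bit hash works.
    static long hash32(String s) {
        CRC32 crc = new CRC32();
        crc.update(s.getBytes(StandardCharsets.UTF_8));
        return crc.getValue();
    }

    // 2. Hash partitioning: hash the key name, then take it modulo the instance count.
    static int byModulo(String key, int instanceCount) {
        return (int) (hash32(key) % instanceCount);
    }

    // 3. Consistent hashing: nodes sit on a ring of 2^32 positions; walk clockwise from the key.
    static final class Ring {
        private final TreeMap<Long, String> ring = new TreeMap<>();

        void add(String node) { ring.put(hash32(node), node); }

        void remove(String node) { ring.remove(hash32(node)); }

        String nodeFor(String key) {
            if (ring.isEmpty()) throw new IllegalStateException("empty ring");
            Map.Entry<Long, String> e = ring.ceilingEntry(hash32(key));
            return (e != null ? e : ring.firstEntry()).getValue();  // wrap around the ring
        }
    }

    // 4. Redis Cluster hash slots: CRC16 (XMODEM: poly 0x1021, init 0) modulo 16384.
    static int crc16(byte[] data) {
        int crc = 0;
        for (byte b : data) {
            crc ^= (b & 0xFF) << 8;
            for (int i = 0; i < 8; i++) {
                crc = (crc & 0x8000) != 0 ? (crc << 1) ^ 0x1021 : crc << 1;
                crc &= 0xFFFF;
            }
        }
        return crc;
    }

    // Ignores hash tags ({...}), which real Redis Cluster also takes into account.
    static int slot(String key) {
        return crc16(key.getBytes(StandardCharsets.UTF_8)) % 16384;
    }

    public static void main(String[] args) {
        TreeMap<Long, String> table = new TreeMap<>();
        table.put(1L, "redis-A");     // users 1-1000 (hypothetical instance name)
        table.put(1001L, "redis-B");  // users 1001-2000
        System.out.println(byRange(table, 1500));  // redis-B
        System.out.println(byModulo("user:1", 4));
        Ring ring = new Ring();
        for (String n : new String[] {"10.0.0.1:6379", "10.0.0.2:6379", "10.0.0.3:6379"}) {
            ring.add(n);
        }
        System.out.println(ring.nodeFor("user:1"));
        System.out.printf("%04X%n", crc16("123456789".getBytes(StandardCharsets.US_ASCII)));  // 31C3
        System.out.println(slot("user:1"));
    }
}
```

Changing the instance count passed to `byModulo` remaps most keys, whereas removing a node from `Ring` only moves the keys that node owned; that difference is the main motivation for consistent hashing.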

② Where partitioning is implemented

Partitioning can be implemented at different layers of the software stack:

① Client-side partitioning: the client itself decides which Redis node a piece of data is written to or read from.

② Proxy-assisted partitioning: the client sends its requests to a proxy, and the proxy decides which node to write the data to or read it from.

③ Query routing: the client sends a request to any Redis instance, and that instance makes sure the request reaches the correct Redis node. Redis Cluster uses a hybrid form of this: instead of forwarding the request itself, the node redirects the client to the correct node.