Articles in this series

Chapter 1: Getting started with Gin

Chapter 2: Setting up the API

Chapter 3: Data persistence with MongoDB

Note:

- This series consists of my learning notes on the English book mentioned above

- My own practice code, with comments, is on my Gitee; stars are welcome

- All content may be reproduced. If anything infringes the book's copyright, please contact me and I will remove it

- These notes are updated continually

Data persistence using MongoDB

Preface

This chapter uses Docker to deploy MongoDB and Redis, implements CRUD operations, introduces the standard directory structure of a Go project, speeds up API responses with caching, and measures website performance.

Using MongoDB from Go

- Add the driver as a project dependency:

```bash
go get go.mongodb.org/mongo-driver/mongo
```

This downloads the driver into the Go module cache (under GOPATH) and records it as a dependency in the go.mod file.

- Connect to MongoDB

- Run MongoDB with Docker:

```bash
docker run -d --name mongodb -e MONGO_INITDB_ROOT_USERNAME=admin -e MONGO_INITDB_ROOT_PASSWORD=password -p 27017:27017 mongo:4.4.3
```

- Or use a free MongoDB Atlas database

- Database connection and test code:

```go
package main

import (
	"context"
	"fmt"
	"log"

	"go.mongodb.org/mongo-driver/mongo"
	"go.mongodb.org/mongo-driver/mongo/options"
	"go.mongodb.org/mongo-driver/mongo/readpref"
)

var ctx context.Context
var err error
var client *mongo.Client

// The database address can also be read from an environment variable:
//var uri = os.Getenv("MONGO_URI")
var uri = "mongodb+srv://root:123456lp@cluster0.9aooq.mongodb.net/test?retryWrites=true&w=majority"

func init() {
	ctx = context.Background()
	// Connect fails on an invalid URI or options; check the error
	// before using client
	client, err = mongo.Connect(ctx, options.Client().ApplyURI(uri))
	if err != nil {
		log.Fatal(err)
	}
	// Ping the primary to verify that the server is actually reachable
	if err = client.Ping(ctx, readpref.Primary()); err != nil {
		log.Fatal(err)
	}
	fmt.Println("Connected to MongoDB")
}

func main() {
}
```

- Initialize the database with the data from the previous chapter:

```go
func init() {
	recipes = make([]model.Recipe, 0)
	// Read the file contents
	file, err := os.ReadFile("recipes.json")
	if err != nil {
		log.Fatal(err)
	}
	// Parse the contents into Recipe entities
	if err = json.Unmarshal(file, &recipes); err != nil {
		log.Fatal(err)
	}
	...
	// InsertMany takes its documents as a []interface{}
	var listOfRecipes []interface{}
	for _, recipe := range recipes {
		listOfRecipes = append(listOfRecipes, recipe)
	}
	collection := client.Database(database_name).Collection(collection_name)
	insertManyResult, err := collection.InsertMany(ctx, listOfRecipes)
	if err != nil {
		log.Fatal(err)
	}
	log.Println("Inserted recipes: ", len(insertManyResult.InsertedIDs))
}
```

When data is inserted, MongoDB automatically creates the database and the collection if they do not exist yet (in MongoDB, a table is called a collection and each inserted record is called a document).

collection.InsertMany accepts a slice of interface{}, so the loop above copies each element of the recipes slice into the listOfRecipes slice.
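The copy is needed because Go never converts a []model.Recipe to a []interface{} implicitly, and in the v1 driver used here InsertMany's documents parameter is typed []interface{}. A minimal sketch of a generic helper that does the copy for any element type (the name `toDocuments` and the trimmed `Recipe` type are hypothetical; generics need Go 1.18+):

```go
package main

import "fmt"

// toDocuments copies each element of items into a []interface{},
// the argument type collection.InsertMany expects in the v1 driver.
// Hypothetical helper; requires Go 1.18+ for generics.
func toDocuments[T any](items []T) []interface{} {
	docs := make([]interface{}, 0, len(items))
	for _, item := range items {
		docs = append(docs, item)
	}
	return docs
}

// Recipe is a trimmed-down stand-in for model.Recipe.
type Recipe struct {
	Name string
}

func main() {
	recipes := []Recipe{{Name: "Homemade Pizza"}, {Name: "Pasta"}}
	docs := toDocuments(recipes)
	fmt.Println(len(docs)) // prints 2
}
```

With it, the loop collapses to `collection.InsertMany(ctx, toDocuments(recipes))`.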

The recipes.json file has the following contents:

```json
[
  {
    "id": "c80e1msc3g21dn3s62e0",
    "name": "Homemade Pizza",
    "tags": [
      "italian",
      "pizza",
      "dinner"
    ],
    "ingredients": [
      "1 1/2 cups (355 ml) warm water (105°F-115°F)",
      "1 package (2 1/4 teaspoons) of active dry yeast",
      "3 3/4 cups (490 g) bread flour",
      "feta cheese, firm mozzarella cheese, grated"
    ],
    "instructions": [
      "Step 1.",
      "Step 2.",
      "Step 3."
    ],
    "PublishedAt": "2022-02-07T17:05:31.9985752+08:00"
  }
]
```
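Before inserting, the file has to decode cleanly into Go structs. A minimal sketch that decodes one entry of this format with the standard library and returns the error instead of discarding it. Assumptions: the struct and `parseRecipes` are illustrative, and ID is kept as a plain string here because "c80e1msc3g21dn3s62e0" is not a 24-character hex ObjectID; PublishedAt decodes into time.Time because the value is RFC 3339.

```go
package main

import (
	"encoding/json"
	"fmt"
	"time"
)

// Recipe mirrors the fields of recipes.json (illustrative, ID kept as string).
type Recipe struct {
	ID           string    `json:"id"`
	Name         string    `json:"name"`
	Tags         []string  `json:"tags"`
	Ingredients  []string  `json:"ingredients"`
	Instructions []string  `json:"instructions"`
	PublishedAt  time.Time `json:"PublishedAt"`
}

// parseRecipes decodes a JSON array of recipes and reports any error.
func parseRecipes(data []byte) ([]Recipe, error) {
	recipes := make([]Recipe, 0)
	if err := json.Unmarshal(data, &recipes); err != nil {
		return nil, err
	}
	return recipes, nil
}

const sample = `[{"id":"c80e1msc3g21dn3s62e0","name":"Homemade Pizza",
"tags":["italian","pizza","dinner"],"ingredients":[],"instructions":["Step 1."],
"PublishedAt":"2022-02-07T17:05:31.9985752+08:00"}]`

func main() {
	recipes, err := parseRecipes([]byte(sample))
	if err != nil {
		fmt.Println("decode failed:", err)
		return
	}
	fmt.Println(recipes[0].Name, recipes[0].PublishedAt.Year()) // Homemade Pizza 2022
}
```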

You can also use mongoimport to import the serialized data (the JSON file) and initialize the collection:

```bash
mongoimport --username admin --password password --authenticationDatabase admin --db demo --collection recipes --file recipes.json --jsonArray
```

CRUD examples

Query all records

```go
// collection is the handle for the database collection:
// collection = client.Database(database_name).Collection(collection_name)
func ListRecipesHandler(c *gin.Context) {
	// Get a cursor over the query results;
	// cur is effectively a stream of documents
	cur, err := collection.Find(ctx, bson.M{})
	if err != nil {
		c.JSON(http.StatusInternalServerError,
			gin.H{"error": err.Error()})
		return
	}
	defer cur.Close(ctx)
	recipes := make([]model.Recipe, 0)
	for cur.Next(ctx) {
		var recipe model.Recipe
		// Decode the current document into a Recipe struct
		if err := cur.Decode(&recipe); err != nil {
			c.JSON(http.StatusInternalServerError,
				gin.H{"error": err.Error()})
			return
		}
		recipes = append(recipes, recipe)
	}
	// Err reports any error encountered while iterating
	if err := cur.Err(); err != nil {
		c.JSON(http.StatusInternalServerError,
			gin.H{"error": err.Error()})
		return
	}
	c.JSON(http.StatusOK, recipes)
}
```

Insert a record

```go
func NewRecipesHandler(c *gin.Context) {
	var recipe model.Recipe
	// Bind the JSON body of the POST request to recipe
	if err := c.ShouldBindJSON(&recipe); err != nil {
		c.JSON(http.StatusBadRequest, gin.H{
			"error": err.Error()})
		return
	}
	// Assign a new ObjectID and the publication time on the server side
	recipe.ID = primitive.NewObjectID()
	recipe.PublishedAt = time.Now()
	_, err := collection.InsertOne(ctx, recipe)
	if err != nil {
		fmt.Println(err)
		c.JSON(http.StatusInternalServerError, gin.H{
			"error": "Error while inserting a new recipe"})
		return
	}
	c.JSON(http.StatusOK, recipe)
}
```

Change the type of the ID field to primitive.ObjectID and add bson tags to the struct fields:

```go
// swagger:parameters recipes newRecipe
type Recipe struct {
	// swagger:ignore
	ID           primitive.ObjectID `json:"id" bson:"_id"`
	Name         string             `json:"name" bson:"name"`
	Tags         []string           `json:"tags" bson:"tags"`
	Ingredients  []string           `json:"ingredients" bson:"ingredients"`
	Instructions []string           `json:"instructions" bson:"instructions"`
	PublishedAt  time.Time          `json:"PublishedAt" bson:"publishedAt"`
}
```

Update a record

```go
func UpdateRecipeHandler(c *gin.Context) {
	// Read the path parameter from host/recipes/{id}
	id := c.Param("id")
	var recipe model.Recipe
	// Bind the JSON request body to recipe
	if err := c.ShouldBindJSON(&recipe); err != nil {
		c.JSON(http.StatusBadRequest, gin.H{
			"error": err.Error()})
		return
	}
	// Convert the hex ID string into an ObjectID and reject
	// IDs that are not valid 24-character hex strings
	objectId, err := primitive.ObjectIDFromHex(id)
	if err != nil {
		c.JSON(http.StatusBadRequest, gin.H{
			"error": "Invalid recipe ID"})
		return
	}
	// $set overwrites only the listed fields of the matching document
	_, err = collection.UpdateOne(ctx, bson.M{
		"_id": objectId}, bson.D{{"$set", bson.D{
		{"name", recipe.Name},
		{"instructions", recipe.Instructions},
		{"ingredients", recipe.Ingredients},
		{"tags", recipe.Tags}}}})
	if err != nil {
		fmt.Println(err)
		c.JSON(http.StatusInternalServerError, gin.H{
			"error": err.Error()})
		return
	}
	c.JSON(http.StatusOK, gin.H{"message": "Recipe has been updated"})
}
```

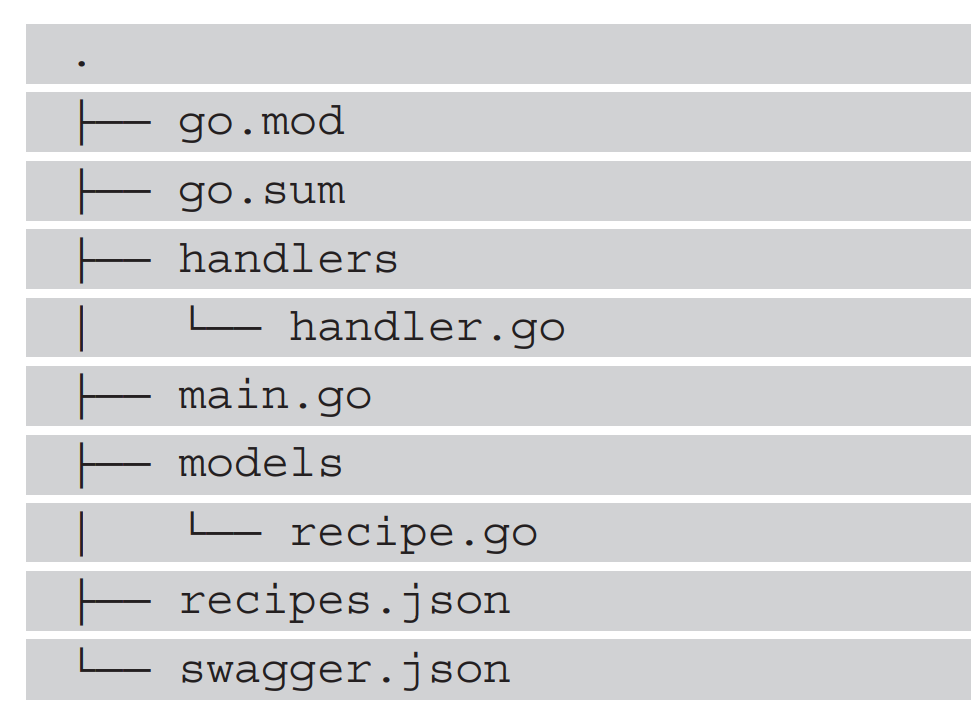
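If the error from primitive.ObjectIDFromHex is discarded, a malformed id turns into the zero ObjectID, UpdateOne matches no document, and the client still receives a success message. An ObjectID is 12 bytes, so its hex form must be exactly 24 hex digits. A minimal sketch of that rule (the helper `isObjectIDHex` is hypothetical; in handler code, simply checking the error returned by ObjectIDFromHex is enough):

```go
package main

import (
	"encoding/hex"
	"fmt"
)

// isObjectIDHex reports whether s has the shape ObjectIDFromHex accepts:
// exactly 24 characters that decode as hex (12 bytes).
// Hypothetical helper for illustration only.
func isObjectIDHex(s string) bool {
	if len(s) != 24 {
		return false
	}
	_, err := hex.DecodeString(s)
	return err == nil
}

func main() {
	fmt.Println(isObjectIDHex("507f1f77bcf86cd799439011")) // true
	fmt.Println(isObjectIDHex("c80e1msc3g21dn3s62e0"))     // false: only 20 characters
}
```

The second call uses the id from recipes.json, which is why records imported from that file cannot be addressed by an ObjectID route.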

Designing the project's layered structure

Splitting the project into subdirectories and files makes it easier to manage and keeps the code clear and easy to use:

- recipe.go in the models directory defines the data model

- handler.go in the handlers directory defines the route handler functions

handler.go design

- Put the context and the database collection that the handler functions need into a struct:

```go
type RecipesHandler struct {
	collection *mongo.Collection
	ctx        context.Context
}

// NewRecipesHandler builds a RecipesHandler from the context and the
// database collection that the handler functions need
func NewRecipesHandler(ctx context.Context, collection *mongo.Collection) *RecipesHandler {
	return &RecipesHandler{
		collection: collection,
		ctx:        ctx,
	}
}
```

- Turn each handler function into a method of RecipesHandler:

```go
func (handler *RecipesHandler) ListRecipesHandler(c *gin.Context) {
	// Same body as before, but using handler.collection and handler.ctx
}
```

- main.go holds the code that authenticates and connects to the database:

```go
func init() {
	ctx := context.Background()
	client, err := mongo.Connect(ctx, options.Client().ApplyURI(uri))
	if err != nil {
		log.Fatal(err)
	}
	if err = client.Ping(ctx, readpref.Primary()); err != nil {
		log.Fatal(err)
	}
	log.Println("Connected to MongoDB")
	// Handle for the database collection
	collection := client.Database(database_name).Collection(collection_name)
	// recipesHandler is a package-level variable used when registering routes
	recipesHandler = handlers.NewRecipesHandler(ctx, collection)
}
```

Current project directory structure

The program reads its settings from environment variables:

```go
var uri = os.Getenv("MONGO_URI")
```

and the values are passed on the command line:

```bash
MONGO_URI="mongodb://admin:password@localhost:27017/test?authSource=admin" MONGO_DATABASE=demo go run *.go
```

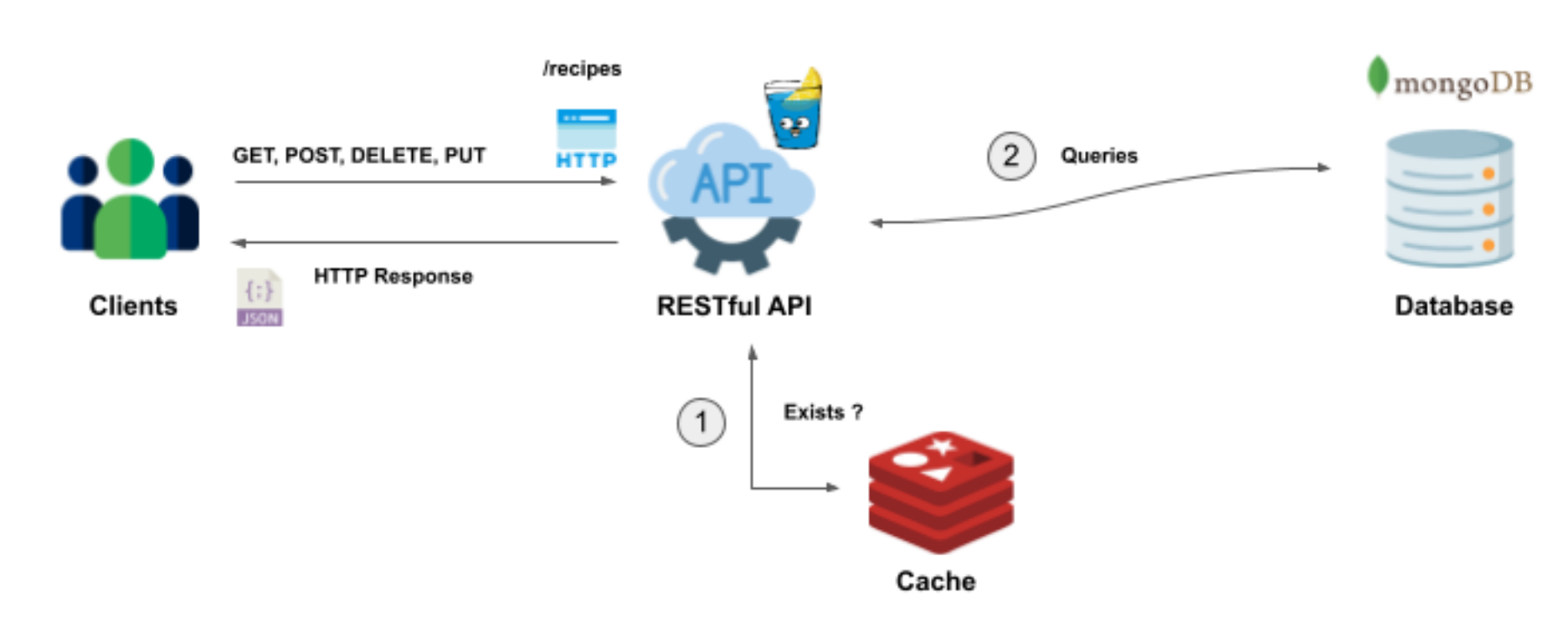

Caching API responses with Redis

This section adds a Redis caching layer to the API.

Once a program is running, the frequently queried data is usually only a small fraction of everything in the database. Caching that data in an in-memory store such as Redis avoids a database call on every request, which greatly reduces the query load on the database and speeds up responses. Because Redis keeps its data in memory, it is also faster than a disk-based database and adds little overhead.

A query takes one of these paths:

- Cache hit: the data is found in the cache and returned directly

- Cache miss: the data is not in the cache, so it is requested from the database

- The data is then returned to the client and stored in the cache
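This hit/miss flow is the cache-aside pattern. A minimal in-memory sketch, assuming a map in place of Redis and a hypothetical `load` function in place of the MongoDB query (`CacheAside` is an illustrative name, not part of the project). For simplicity the mutex is held during `load`, which serializes concurrent misses:

```go
package main

import (
	"fmt"
	"sync"
)

// CacheAside is a hypothetical in-memory stand-in for Redis in front of a
// slower data source; load plays the role of the MongoDB query.
type CacheAside struct {
	mu   sync.Mutex
	data map[string]string
	load func(key string) (string, error)
}

func NewCacheAside(load func(string) (string, error)) *CacheAside {
	return &CacheAside{data: make(map[string]string), load: load}
}

// Get returns the cached value on a hit; on a miss it loads the value,
// stores it in the cache, and returns it. The bool reports a cache hit.
func (c *CacheAside) Get(key string) (string, bool, error) {
	c.mu.Lock()
	defer c.mu.Unlock()
	if v, ok := c.data[key]; ok {
		return v, true, nil // cache hit
	}
	v, err := c.load(key) // cache miss: query the data source
	if err != nil {
		return "", false, err
	}
	c.data[key] = v
	return v, false, nil
}

func main() {
	dbCalls := 0
	cache := NewCacheAside(func(key string) (string, error) {
		dbCalls++
		return "[...recipes...]", nil
	})
	_, hit1, _ := cache.Get("recipes")
	_, hit2, _ := cache.Get("recipes")
	fmt.Println(hit1, hit2, dbCalls) // false true 1
}
```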

Run Redis with Docker:

```bash
docker run -d --name redis -p 6379:6379 redis:6.0
```

View the logs:

```bash
docker logs -f <container ID>
```

Edit redis.conf to set the eviction policy, here the LRU (least recently used) algorithm, and a memory limit:

```
maxmemory-policy allkeys-lru
maxmemory 512mb
```

To use the local configuration file, mount it into the container and start Redis with it:

```bash
docker run -v D:/redis/conf/redis.conf:/usr/local/etc/redis/redis.conf --name redis_1 -p 6379:6379 redis:6.0 redis-server /usr/local/etc/redis/redis.conf
```
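To see which key LRU evicts, here is a minimal exact LRU cache built on container/list. It is a simplification: Redis's allkeys-lru is an approximation that samples keys and is bounded by maxmemory bytes, while this sketch (hypothetical `LRU` type) counts entries and only illustrates the eviction order:

```go
package main

import (
	"container/list"
	"fmt"
)

// LRU is an exact least-recently-used cache bounded by entry count.
type LRU struct {
	capacity int
	order    *list.List               // front = most recently used
	items    map[string]*list.Element // key -> element holding *entry
}

type entry struct {
	key   string
	value string
}

// NewLRU creates a cache holding at most capacity entries (minimum 1).
func NewLRU(capacity int) *LRU {
	if capacity < 1 {
		capacity = 1
	}
	return &LRU{capacity: capacity, order: list.New(), items: make(map[string]*list.Element)}
}

// Get returns the value and marks the key as most recently used.
func (c *LRU) Get(key string) (string, bool) {
	el, ok := c.items[key]
	if !ok {
		return "", false
	}
	c.order.MoveToFront(el)
	return el.Value.(*entry).value, true
}

// Set inserts or updates key, evicting the least recently used key when full.
func (c *LRU) Set(key, value string) {
	if el, ok := c.items[key]; ok {
		el.Value.(*entry).value = value
		c.order.MoveToFront(el)
		return
	}
	if c.order.Len() >= c.capacity {
		oldest := c.order.Back()
		c.order.Remove(oldest)
		delete(c.items, oldest.Value.(*entry).key)
	}
	c.items[key] = c.order.PushFront(&entry{key: key, value: value})
}

func main() {
	c := NewLRU(2)
	c.Set("a", "1")
	c.Set("b", "2")
	c.Get("a")      // "a" is now the most recently used key
	c.Set("c", "3") // the cache is full, so "b" is evicted
	_, ok := c.Get("b")
	fmt.Println(ok) // false
}
```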

Configure Redis

```go
import "github.com/go-redis/redis"
```

- Configure Redis in the init() function of main.go:

```go
redisClient := redis.NewClient(&redis.Options{
	Addr:     "localhost:6379",
	Password: "",
	DB:       0,
})
// Ping returns a *redis.StatusCmd; printing it shows the command and its reply
status := redisClient.Ping()
fmt.Println(status)
```

- Modify handler.go:

```go
type RecipesHandler struct {
	collection  *mongo.Collection
	ctx         context.Context
	redisClient *redis.Client
}

// NewRecipesHandler builds a RecipesHandler from the context, the MongoDB
// collection and the Redis client that the handler functions need
func NewRecipesHandler(ctx context.Context, collection *mongo.Collection, redisClient *redis.Client) *RecipesHandler {
	return &RecipesHandler{
		collection:  collection,
		ctx:         ctx,
		redisClient: redisClient,
	}
}
```

Caching the data with Redis:

```go
func (handler *RecipesHandler) ListRecipesHandler(c *gin.Context) {
	var recipes []models.Recipe
	val, err := handler.redisClient.Get("recipes").Result()
	// redis.Nil means the key does not exist in Redis: a cache miss
	if err == redis.Nil {
		log.Printf("Request to MongoDB")
		// Get a cursor over the query results;
		// cur is effectively a stream of documents
		cur, err := handler.collection.Find(handler.ctx, bson.M{})
		if err != nil {
			c.JSON(http.StatusInternalServerError,
				gin.H{"error": err.Error()})
			return
		}
		defer cur.Close(handler.ctx)
		recipes = make([]models.Recipe, 0)
		for cur.Next(handler.ctx) {
			var recipe models.Recipe
			// Decode the current document into a Recipe struct
			if err := cur.Decode(&recipe); err != nil {
				c.JSON(http.StatusInternalServerError,
					gin.H{"error": err.Error()})
				return
			}
			recipes = append(recipes, recipe)
		}
		// Store the result in Redis as a JSON string under the key "recipes";
		// an expiration of 0 means the key never expires
		data, _ := json.Marshal(recipes)
		handler.redisClient.Set("recipes", string(data), 0)
	} else if err != nil {
		// Any other Redis error: respond once and stop here
		c.JSON(http.StatusInternalServerError, gin.H{
			"error": err.Error()})
		return
	} else {
		// Cache hit: decode the cached JSON string
		log.Printf("Request to Redis")
		recipes = make([]models.Recipe, 0)
		json.Unmarshal([]byte(val), &recipes)
	}
	c.JSON(http.StatusOK, recipes)
}
```

Check directly whether the cache entry exists:

- Open redis-cli

- Run EXISTS recipes (it returns 1 if the key exists and 0 otherwise)

View the Redis cache with a web tool (RedisInsight):

```bash
docker run -d --name redisinsight --link redis_1 -p 8001:8001 redislabs/redisinsight
```

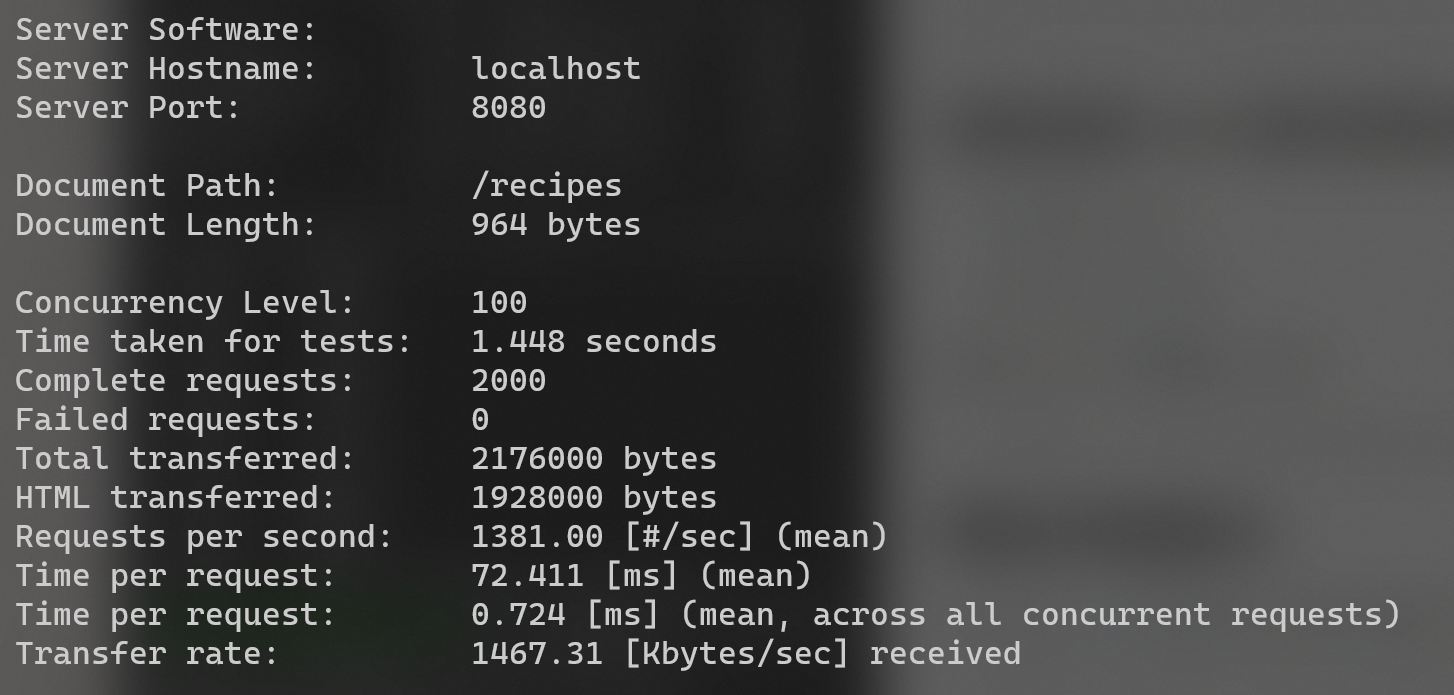

Website performance score

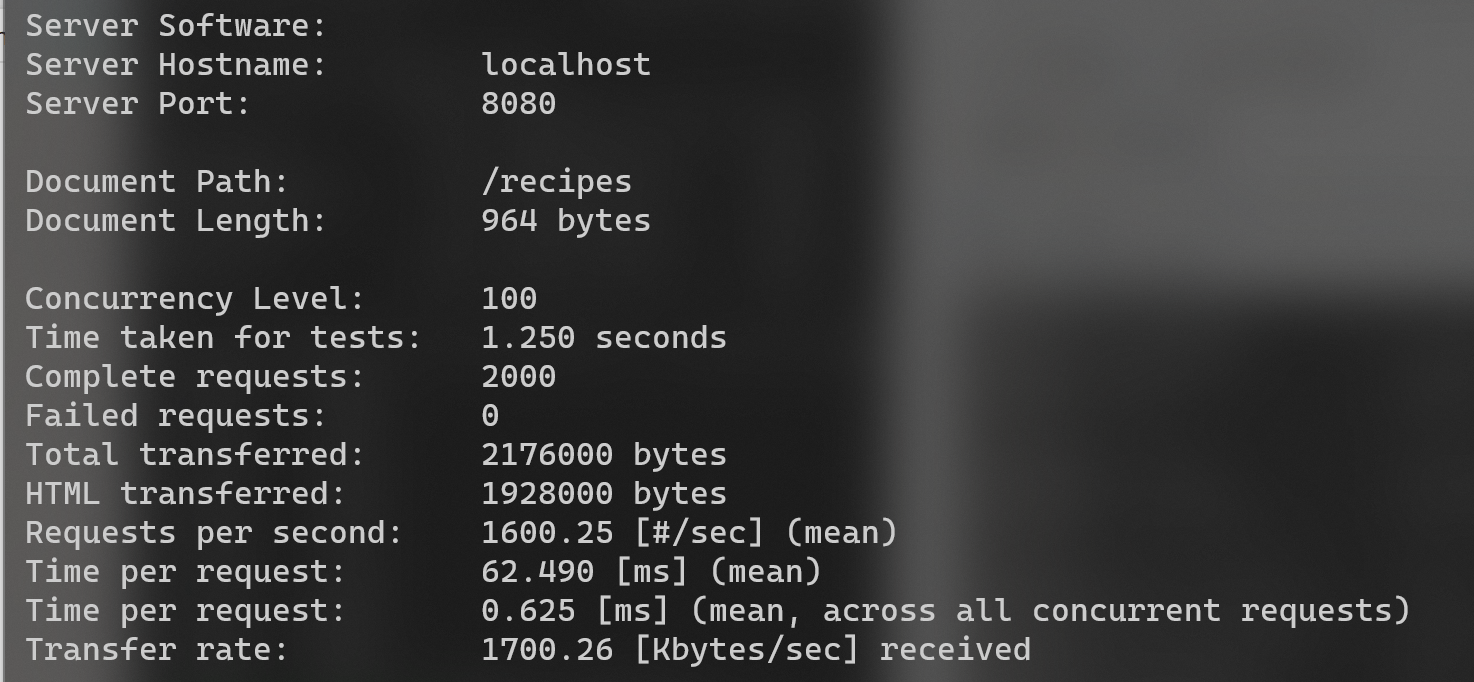

Stress-test the API with ab (ApacheBench) from the apache2-utils package (on Windows, ab ships with Apache httpd):

```bash
ab.exe -n 2000 -c 100 -g without-cache.data http://localhost:8080/recipes
```

-n 2000 sends 2000 requests in total and -c 100 keeps 100 of them in flight concurrently; -g writes the measurements to a gnuplot-format data file.

Run the test once for each setup:

- With the cache disabled: -g without-cache.data

- With the Redis cache enabled: -g with-cache.data

Compare the Time taken for tests and Time per request figures of the two runs.