We will use a series of articles to walk through the complete practice of microservices: from requirements to launch, from code to k8s deployment, from logging to monitoring.

The whole project is built from microservices developed with go-zero. The technology stack consists almost entirely of go-zero itself plus middleware written by the go-zero team.

Actual project address: github.com/Mikaelemmmm/go-zero-loo...

1. Overview

There are many message queues to choose from, including commonly used ones such as rabbitmq, rocketmq and kafka. go-queue (https://github.com/zeromicro/go-queue) is the message queue component officially developed by the go-zero team. It has two parts: kq, a message queue based on kafka, and dq, a delay queue based on beanstalkd. However, go-queue does not support scheduled tasks. I have written a separate tutorial on go-queue, so I won't elaborate here.

The project uses go-queue as its message queue and asynq as its delay queue and scheduled task queue.

There are several reasons for using asynq:

- It is built directly on redis. Most projects already run redis, so using asynq means one less piece of middleware to maintain.

- It supports message queues, delay queues and scheduled task scheduling. We want the project to support scheduled tasks, and asynq supports them out of the box.

- It has a web UI in which tasks can be paused, archived, viewed and monitored.

Since asynq also supports message queues, why still use go-queue?

- kafka is known for its throughput. If traffic is small in the early stage, you can use asynq alone.

- No particular reason; I simply want to show you go-queue.

goctl brings us great convenience when using go-zero, but at present it only generates api and rpc services. Many readers ask how to generate scheduled tasks, delay queues and message queues, and how to organize the directory structure for them. go-zero has in fact already designed for this case: serviceGroup. Use serviceGroup to manage your services.

2. How to use

We have already demonstrated scenarios such as orders and messages in earlier parts; here we cover the queue side separately.

Let's take the order mq as an example. Generating an api or rpc service with goctl is obviously not what we want here, so we adapt the layout ourselves using serviceGroup. The directory structure is basically the same as that of an api service; we just change handler to listen and logic to mqs.

2.1 the code in main is as follows

    var configFile = flag.String("f", "etc/order.yaml", "Specify the config file")

    func main() {
        flag.Parse()

        var c config.Config
        conf.MustLoad(*configFile, &c)

        // log, prometheus, trace, metricsUrl
        if err := c.SetUp(); err != nil {
            panic(err)
        }

        serviceGroup := service.NewServiceGroup()
        defer serviceGroup.Stop()

        for _, mq := range listen.Mqs(c) {
            serviceGroup.Add(mq)
        }

        serviceGroup.Start()
    }

First, we need to define the configuration and parse the configuration file.

Second, why do we call SetUp here when api and rpc services do not need to? For api and rpc services, the framework performs this step inside MustNewServer. Here we manage the services with serviceGroup instead, so we call SetUp manually. This method sets up log, prometheus, trace and metricsUrl in one call, which saves a lot of work: we can enable logging, monitoring and link tracing just by editing the configuration file.

Next comes go-zero's serviceGroup, which manages a group of services. A service is simply an interface, defined as follows.

Service (the code is in go-zero/core/service/servicegroup.go)

    // Service is the interface that groups Start and Stop methods.
    Service interface {
        Starter // Start
        Stopper // Stop
    }

Therefore, as long as your service implements these two interfaces, it can join the serviceGroup for unified management.

We implement this interface for every mq, collect them in listen.Mqs, and then simply start the group.
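
To make the idea concrete, here is a minimal sketch of the pattern using only the standard library. The `Service` interface is redeclared locally to mirror the shape shown above, and `miniGroup` and `demoConsumer` are hypothetical names invented for this illustration; go-zero's real ServiceGroup does more than this.

```go
package main

import (
	"fmt"
	"sync"
)

// Service mirrors the shape of the interface shown above (Start plus Stop).
// It is redeclared here only to keep the sketch free of external modules.
type Service interface {
	Start()
	Stop()
}

// demoConsumer is a hypothetical consumer that records its lifecycle.
type demoConsumer struct {
	name    string
	started bool
	stopped bool
}

func (c *demoConsumer) Start() { c.started = true }
func (c *demoConsumer) Stop()  { c.stopped = true }

// miniGroup is a simplified stand-in for a service group: it starts every
// service in its own goroutine and waits until all Start calls return.
type miniGroup struct {
	services []Service
}

func (g *miniGroup) Add(s Service) { g.services = append(g.services, s) }

func (g *miniGroup) Start() {
	var wg sync.WaitGroup
	for _, s := range g.services {
		wg.Add(1)
		go func(s Service) {
			defer wg.Done()
			s.Start()
		}(s)
	}
	wg.Wait()
}

// Stop stops the services in the order they were added.
func (g *miniGroup) Stop() {
	for _, s := range g.services {
		s.Stop()
	}
}

func main() {
	kq := &demoConsumer{name: "kq"}
	delay := &demoConsumer{name: "asynq"}

	g := &miniGroup{}
	g.Add(kq)
	g.Add(delay)
	g.Start()
	g.Stop()

	for _, c := range []*demoConsumer{kq, delay} {
		fmt.Printf("%s started=%v stopped=%v\n", c.name, c.started, c.stopped)
	}
}
```

In this sketch Start returns immediately. A real consumer usually blocks inside Start until the process shuts down, which is why each service gets its own goroutine.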

2.2 mq classified management

The code is in the go-zero-looklook/app/order/cmd/mq/internal/listen directory.

The code in this directory manages the different types of mq in a unified way. We need to manage kq and asynq now, and possibly rabbitmq, rocketmq and others in the future, so we classify them here to make maintenance easier.

The unified entry point is go-zero-looklook/app/order/cmd/mq/internal/listen/listen.go; main calls listen.Mqs to get all the mqs and start them together.

    // Return all consumers
    func Mqs(c config.Config) []service.Service {
        svcContext := svc.NewServiceContext(c)
        ctx := context.Background()

        var services []service.Service

        // kq: message queue
        services = append(services, KqMqs(c, ctx, svcContext)...)

        // asynq: delay queue, scheduled task
        services = append(services, AsynqMqs(c, ctx, svcContext)...)

        // other mq ....

        return services
    }

The asynq consumers are defined in go-zero-looklook/app/order/cmd/mq/internal/listen/asynqMqs.go

    // asynq
    // Scheduled task, delayed task
    func AsynqMqs(c config.Config, ctx context.Context, svcContext *svc.ServiceContext) []service.Service {
        return []service.Service{
            // Listening delay queue
            deferMq.NewAsynqTask(ctx, svcContext),

            // Monitor scheduled tasks
        }
    }

The kq consumers (kafka, via go-queue) are defined in go-zero-looklook/app/order/cmd/mq/internal/listen/kqMqs.go

    // kq
    // Message queue
    func KqMqs(c config.Config, ctx context.Context, svcContext *svc.ServiceContext) []service.Service {
        return []service.Service{
            // Monitor consumption flow status change
            kq.MustNewQueue(c.PaymentUpdateStatusConf, kqMq.NewPaymentUpdateStatusMq(ctx, svcContext)),

            // .....
        }
    }

2.3 actual business

The actual business is written in go-zero-looklook/app/order/cmd/mq/internal/mqs, which is also split by type for easier maintenance:

- deferMq: delay queue

- kq: message queue

2.3.1 delay queue

    // Monitor closed orders
    type AsynqTask struct {
        ctx    context.Context
        svcCtx *svc.ServiceContext
    }

    func NewAsynqTask(ctx context.Context, svcCtx *svc.ServiceContext) *AsynqTask {
        return &AsynqTask{
            ctx:    ctx,
            svcCtx: svcCtx,
        }
    }

    func (l *AsynqTask) Start() {
        fmt.Println("AsynqTask start ")

        srv := asynq.NewServer(
            asynq.RedisClientOpt{Addr: l.svcCtx.Config.Redis.Host, Password: l.svcCtx.Config.Redis.Pass},
            asynq.Config{
                Concurrency: 10,
                Queues: map[string]int{
                    "critical": 6,
                    "default":  3,
                    "low":      1,
                },
            },
        )

        mux := asynq.NewServeMux()

        // Close home stay order task
        mux.HandleFunc(asynqmq.TypeHomestayOrderCloseDelivery, l.closeHomestayOrderStateMqHandler)

        if err := srv.Run(mux); err != nil {
            log.Fatalf("could not run server: %v", err)
        }
    }

    func (l *AsynqTask) Stop() {
        fmt.Println("AsynqTask stop")
    }

Because asynq needs a server to be started and a route to be registered for each task type, we do the unified route management in asynqtask.go. Each business then gets its own file under the deferMq folder (for example closeHomestayOrderState.go for delayed order closing). One file per business is easy to maintain, just like the logic files of go-zero's api and rpc services.

closeHomestayOrderState.go: close order logic

    package deferMq

    import (
        "context"
        "encoding/json"

        "looklook/app/order/cmd/rpc/order"
        "looklook/app/order/model"
        "looklook/common/asynqmq"
        "looklook/common/xerr"

        "github.com/hibiken/asynq"
        "github.com/pkg/errors"
    )

    func (l *AsynqTask) closeHomestayOrderStateMqHandler(ctx context.Context, t *asynq.Task) error {
        var p asynqmq.HomestayOrderCloseTaskPayload
        if err := json.Unmarshal(t.Payload(), &p); err != nil {
            return errors.Wrapf(xerr.NewErrMsg("analysis asynq task payload err"), "closeHomestayOrderStateMqHandler payload err:%v, payLoad:%s", err, t.Payload())
        }

        resp, err := l.svcCtx.OrderRpc.HomestayOrderDetail(ctx, &order.HomestayOrderDetailReq{
            Sn: p.Sn,
        })
        // Check err on its own first: resp may be nil when the rpc call fails.
        if err != nil {
            return errors.Wrapf(xerr.NewErrMsg("Failed to get order"), "closeHomestayOrderStateMqHandler Failed to get order err:%v, sn:%s", err, p.Sn)
        }
        if resp.HomestayOrder == nil {
            return errors.Wrapf(xerr.NewErrMsg("Failed to get order"), "closeHomestayOrderStateMqHandler Order does not exist, sn:%s", p.Sn)
        }

        // Only orders that are still waiting for payment are cancelled.
        if resp.HomestayOrder.TradeState == model.HomestayOrderTradeStateWaitPay {
            _, err := l.svcCtx.OrderRpc.UpdateHomestayOrderTradeState(ctx, &order.UpdateHomestayOrderTradeStateReq{
                Sn:         p.Sn,
                TradeState: model.HomestayOrderTradeStateCancel,
            })
            if err != nil {
                return errors.Wrapf(xerr.NewErrMsg("Failed to close order"), "closeHomestayOrderStateMqHandler Failed to close order err:%v, sn:%s ", err, p.Sn)
            }
        }

        return nil
    }

2.3.2 kq message queue

Look at the go-zero-looklook/app/order/cmd/mq/internal/mqs/kq folder. kq differs from asynq: it is built on go-zero's Service and already implements the Starter and Stopper interfaces. So in go-zero-looklook/app/order/cmd/mq/internal/listen/kqMqs.go we simply define a go-queue consumer, hand it to serviceGroup, and let main start it. Our business code only needs to implement go-queue's consumer interface and contain our own logic.

1) go-zero-looklook/app/order/cmd/mq/internal/listen/kqMqs.go

    func KqMqs(c config.Config, ctx context.Context, svcContext *svc.ServiceContext) []service.Service {
        return []service.Service{
            // Monitor consumption flow status change
            kq.MustNewQueue(c.PaymentUpdateStatusConf, kqMq.NewPaymentUpdateStatusMq(ctx, svcContext)),

            // .....
        }
    }

You can see that kq.MustNewQueue returns a queue.MessageQueue, and queue.MessageQueue implements Start and Stop.

2) In business

go-zero-looklook/app/order/cmd/mq/internal/mqs/kq/paymentUpdateStatus.go

    func (l *PaymentUpdateStatusMq) Consume(_, val string) error {
        fmt.Printf(" PaymentUpdateStatusMq Consume val : %s \n", val)

        // Parse data
        var message kqueue.ThirdPaymentUpdatePayStatusNotifyMessage
        if err := json.Unmarshal([]byte(val), &message); err != nil {
            // Errorf is needed here because the message uses format verbs.
            logx.WithContext(l.ctx).Errorf("PaymentUpdateStatusMq->Consume Unmarshal err : %v , val : %s", err, val)
            return err
        }

        // Execute business
        if err := l.execService(message); err != nil {
            logx.WithContext(l.ctx).Errorf("PaymentUpdateStatusMq->execService err : %v , val : %s , message:%+v", err, val, message)
            return err
        }

        return nil
    }

In paymentUpdateStatus.go we only need to implement the Consume method to receive the kafka messages delivered by kq, and then process our business inside it.
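
The snippet above shows only the Consume method. As a rough, dependency-free sketch of the whole consumer shape: the interface below is redeclared locally to mirror the `Consume(key, val string) error` signature used in this article, and `paymentStatusMessage`, its fields and `paymentStatusConsumer` are hypothetical stand-ins, not the project's real types.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// consumeHandler mirrors the method signature used by the consumer in this
// article. It is redeclared locally so the sketch needs no external modules.
type consumeHandler interface {
	Consume(key, val string) error
}

// paymentStatusMessage is a hypothetical message body.
type paymentStatusMessage struct {
	OrderSn   string `json:"orderSn"`
	PayStatus int64  `json:"payStatus"`
}

// paymentStatusConsumer is a hypothetical consumer that keeps every message
// it has handled, standing in for the real business logic.
type paymentStatusConsumer struct {
	handled []paymentStatusMessage
}

// Compile-time check that the consumer satisfies the interface.
var _ consumeHandler = (*paymentStatusConsumer)(nil)

// Consume decodes the message value and hands it to the business logic.
// Returning an error reports the message as failed to the caller.
func (c *paymentStatusConsumer) Consume(_, val string) error {
	var msg paymentStatusMessage
	if err := json.Unmarshal([]byte(val), &msg); err != nil {
		return fmt.Errorf("unmarshal message %q: %w", val, err)
	}
	c.handled = append(c.handled, msg)
	return nil
}

func main() {
	c := &paymentStatusConsumer{}
	fmt.Println("valid message error:", c.Consume("", `{"orderSn":"SN1001","payStatus":1}`))
	fmt.Println("invalid message error:", c.Consume("", "not json"))
	fmt.Println("handled messages:", len(c.handled))
}
```

The compile-time assertion is a cheap way to catch a signature mismatch before the consumer is ever wired into a queue.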

3. Scheduled task

go-zero-looklook does not use scheduled tasks at present, but I will explain them here as well.

- For simple cases, use cron directly (available on both bare metal and k8s).

- For slightly more complex cases, use the https://github.com/robfig/cron package and define the schedule in code.

- Integrate a distributed scheduled task system such as xxl-job or gocron.

- Use asynq's scheduler.

Because the project already uses asynq, let me demonstrate asynq's scheduler.

It is split into a client and a server. The client defines the schedule; when the time comes, it sends a task to the server, which executes the business logic we wrote. The actual business therefore lives in the server, while the client only defines when it runs.

asynqtest/docker-compose.yml

    version: '3'

    services:
      # asynqmon: asynq web UI for the delay queue and scheduled tasks
      asynqmon:
        image: hibiken/asynqmon:latest
        container_name: asynqmon_asynq
        ports:
          - 8980:8080
        command:
          - '--redis-addr=redis:6379'
          - '--redis-password=G62m50oigInC30sf'
        restart: always
        networks:
          - asynqtest_net
        depends_on:
          - redis

      # redis container
      redis:
        image: redis:6.2.5
        container_name: redis_asynq
        ports:
          - 63779:6379
        environment:
          # Time zone Shanghai
          TZ: Asia/Shanghai
        volumes:
          # data file
          - ./data/redis/data:/data:rw
        command: "redis-server --requirepass G62m50oigInC30sf --appendonly yes"
        privileged: true
        restart: always
        networks:
          - asynqtest_net

    networks:
      asynqtest_net:
        driver: bridge
        ipam:
          config:
            - subnet: 172.22.0.0/16

asynqtest/shedule/client/client.go

    package main

    import (
        "asynqtest/tpl"
        "encoding/json"
        "log"

        "github.com/hibiken/asynq"
    )

    const redisAddr = "127.0.0.1:63779"
    const redisPwd = "G62m50oigInC30sf"

    func main() {
        // Periodic tasks
        scheduler := asynq.NewScheduler(
            asynq.RedisClientOpt{
                Addr:     redisAddr,
                Password: redisPwd,
            }, nil)

        payload, err := json.Marshal(tpl.EmailPayload{Email: "546630576@qq.com", Content: "Send email"})
        if err != nil {
            log.Fatal(err)
        }

        task := asynq.NewTask(tpl.EMAIL_TPL, payload)

        // Sync every 1 minute
        entryID, err := scheduler.Register("*/1 * * * *", task)
        if err != nil {
            log.Fatal(err)
        }
        log.Printf("registered an entry: %q\n", entryID)

        if err := scheduler.Run(); err != nil {
            log.Fatal(err)
        }
    }

asynqtest/shedule/server/server.go

    package main

    import (
        "context"
        "encoding/json"
        "fmt"
        "log"

        "asynqtest/tpl"

        "github.com/hibiken/asynq"
    )

    func main() {
        srv := asynq.NewServer(
            asynq.RedisClientOpt{Addr: "127.0.0.1:63779", Password: "G62m50oigInC30sf"},
            asynq.Config{
                Concurrency: 10,
                Queues: map[string]int{
                    "critical": 6,
                    "default":  3,
                    "low":      1,
                },
            },
        )

        mux := asynq.NewServeMux()

        // Scheduled email task
        mux.HandleFunc(tpl.EMAIL_TPL, emailMqHandler)

        if err := srv.Run(mux); err != nil {
            log.Fatalf("could not run server: %v", err)
        }
    }

    func emailMqHandler(ctx context.Context, t *asynq.Task) error {
        var p tpl.EmailPayload
        if err := json.Unmarshal(t.Payload(), &p); err != nil {
            return fmt.Errorf("emailMqHandler err:%+v", err)
        }

        fmt.Printf("p : %+v \n", p)

        return nil
    }

asynqtest/tpl/tpl.go

    package tpl

    const EMAIL_TPL = "schedule:email"

    type EmailPayload struct {
        Email   string
        Content string
    }

Start server.go and client.go.

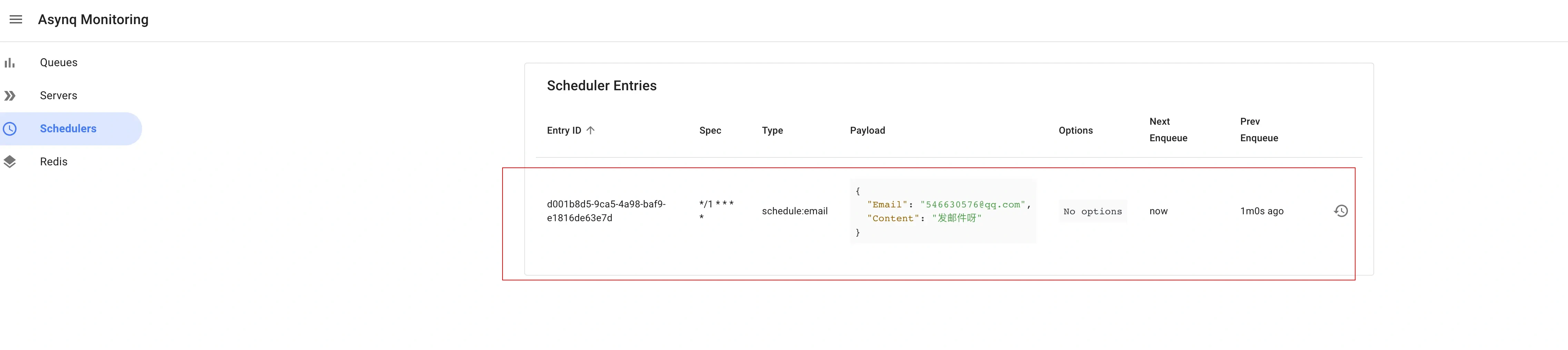

Open http://127.0.0.1:8980/schedulers in a browser to see all the tasks defined by the client.

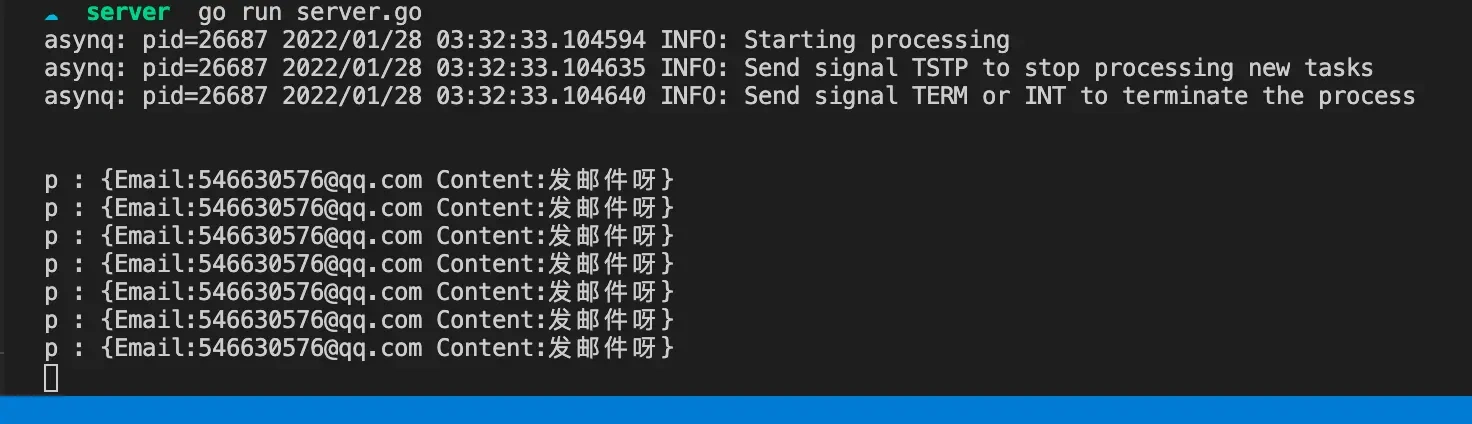

Open http://127.0.0.1:8980/ in a browser to see the tasks consumed by our server.

Console consumption

Finally, an idea for integrating asynq's scheduler into the project: run one separate service as the scheduling client, which defines and manages all of the system's scheduled tasks, and define the server side inside the asynq part of each business's own mq.

4. Ending

In this section we learned how to use message queues and delay queues. kafka can be inspected with its management tools. For asynq, use the web UI: asynqmon is already started in go-zero-looklook/docker-compose-env.yml, so you can open 127.0.0.1:8980 directly.
