The depth map returned by AREngine is of fairly low quality. It is most likely the raw ToF depth map passed through directly, and it still falls well short of the depth map returned by ARCore. Our current AR cloud rendering project relies on the depth map returned by AREngine, so we need to improve its quality.

So we began to study how to optimize the AREngine depth map. This post describes a conventional method for filling holes in depth maps.

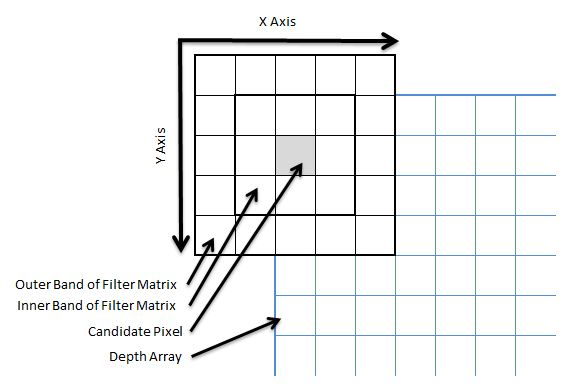

Two-band statistical algorithm: first, traverse the depth map and find the "white point" pixels (the candidate pixels, i.e. the holes), then examine the pixels surrounding each candidate. The filter divides the neighborhood of a candidate into two "bands", an inner band and an outer band, and counts the non-"white point" pixels in each band. It also builds a frequency distribution of the depth values found among those neighbors. The per-band counts are then compared with the threshold of each band to decide whether the candidate should be filled. If the threshold of either band is met, the candidate is assigned the depth value that occurs most often (the statistical mode).
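The steps above can be sketched on the CPU as a reference for checking the shader output. This is a minimal sketch, not the project's implementation: the `HOLE` value, the default thresholds and the function name `two_band_fill` are assumptions made for illustration, and depth values are matched by exact equality instead of the small tolerance a shader would use.

```python
from collections import Counter

HOLE = 1.0  # assumed "white point" value; the real value depends on the depth encoding


def two_band_fill(depth, inner_threshold=3, outer_threshold=6, rad=2, band=2, eps=1e-5):
    """Return a copy of `depth` (list of rows) with holes filled by the neighborhood mode.

    The thresholds are illustrative defaults, not values taken from the project.
    """
    h, w = len(depth), len(depth[0])
    out = [row[:] for row in depth]
    for r in range(h):
        for c in range(w):
            if abs(depth[r][c] - HOLE) >= eps:
                continue  # not a hole: keep the original depth
            freq = Counter()  # depth value -> number of occurrences
            inner = outer = 0
            for dy in range(-rad, rad + 1):
                for dx in range(-rad, rad + 1):
                    if dx == 0 and dy == 0:
                        continue
                    rr, cc = r + dy, c + dx
                    if not (0 <= rr < h and 0 <= cc < w):
                        continue  # neighbors outside the image are skipped
                    v = depth[rr][cc]
                    if abs(v - HOLE) < eps:
                        continue  # holes do not vote
                    freq[v] += 1
                    # With rad=2 and band=2 the inner band is the 8 adjacent
                    # pixels and the outer band is the 16 pixels around them.
                    if abs(dx) < band and abs(dy) < band:
                        inner += 1
                    else:
                        outer += 1
            if freq and (inner >= inner_threshold or outer >= outer_threshold):
                out[r][c] = freq.most_common(1)[0][0]  # statistical mode
    return out


# Usage: a 5x5 patch with a single hole in the middle
patch = [[0.5] * 5 for _ in range(5)]
patch[2][2] = HOLE
filled = two_band_fill(patch)
```

The sketch reads from the input and writes to a copy, so a filled hole never influences its neighbors within the same pass, which mirrors a shader reading one texture and writing another.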

Note that we do not assign the average of the non-zero neighbors to the candidate pixel. Looking at the values in the filter matrix, we can often recognize the edge of an object that passes through it. Applying the average of all these values to the candidate would remove noise from the X, Y perspective but introduce noise along the Z, Y perspective, because it places the candidate's depth partway between two separate features. By using the statistical mode instead, we can mostly ensure that the candidate is assigned a depth that matches the most dominant feature in the filter matrix.
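A small numeric illustration of this point, using made-up depth values: suppose the neighbors of a hole straddle an object edge, with five of them on a near object and three on the background. The helper names below are hypothetical.

```python
from collections import Counter
from statistics import mean

# Hypothetical neighbors of one hole pixel lying on an object edge:
# five samples on a near object (0.30) and three on the background (0.90).
neighbors = [0.30, 0.30, 0.30, 0.30, 0.30, 0.90, 0.90, 0.90]


def fill_with_mean(values):
    """Average of all neighbors: lands between the two surfaces."""
    return mean(values)


def fill_with_mode(values):
    """Most frequent neighbor value: snaps to the dominant surface."""
    return Counter(values).most_common(1)[0][0]


mean_depth = fill_with_mean(neighbors)  # about 0.525, a depth no surface has
mode_depth = fill_with_mode(neighbors)  # 0.30, the near object
```

The averaged value sits roughly halfway between the object and the background, so the filled pixel would float in empty space when the depth map is viewed from the side, while the mode keeps it on one of the real surfaces.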

OpenGL (GLSL) implementation:

    vec4 twoBandFilter(vec2 texCoords) {
        // Get the texture size and the UV step of one texel
        ivec2 size = ivec2(0);
        if (isSrcTexture) {
            size = textureSize(srcTexture, 0);
        } else {
            size = textureSize(inTexture, 0);
        }
        vec2 tex_offset = 1.0 / vec2(size);

        // Frequency table: [i][0] holds a depth value, [i][1] its occurrence count
        const int rad = 2;
        int count = (rad * 2 + 1) * (rad * 2 + 1) - 1;
        // Note: the number of rows in filterCollection must be kept equal to count (24 for rad = 2).
        float filterCollection[24][2];
        // Local arrays are not zero-initialized in GLSL; clear the table so that
        // a depth of 0.0 reliably marks an unused slot
        for (int i = 0; i < count; i++) {
            filterCollection[i][0] = 0.0;
            filterCollection[i][1] = 0.0;
        }
        int bandPartion = 2;

        // The inner and outer band counts are later compared against the thresholds
        int innerBandCount = 0;
        int outerBandCount = 0;
        float curDepth = getDepth(texCoords);
        float retDepth = curDepth;

        // Only white-point (hole) pixels are processed; any other pixel keeps its original depth
        if (abs(curDepth - FAR) < bias) {
            // Scan the (2 * rad + 1) x (2 * rad + 1) neighborhood around the hole
            for (int x = -rad; x <= rad; ++x) {
                for (int y = -rad; y <= rad; ++y) {
                    if (x == 0 && y == 0) {
                        continue;
                    }
                    // Neighbors outside the texture are skipped
                    vec2 curUv = texCoords + vec2(x, y) * tex_offset;
                    if (any(lessThan(curUv, vec2(0.0, 0.0)))) {
                        continue;
                    }
                    if (any(greaterThan(curUv, vec2(1.0, 1.0)))) {
                        continue;
                    }
                    float tempDepth = getDepth(curUv);
                    // Only values that are not white points are counted
                    if (abs(tempDepth - FAR) < bias) {
                        continue;
                    }
                    // Increment the matching entry, or claim the first unused slot
                    for (int i = 0; i < count; i++) {
                        if (abs(filterCollection[i][0] - tempDepth) < 0.00001) {
                            filterCollection[i][1]++;
                            break;
                        } else if (abs(filterCollection[i][0] - 0.0) < 0.00001) {
                            filterCollection[i][0] = tempDepth;
                            filterCollection[i][1]++;
                            break;
                        }
                    }
                    if (y < bandPartion && y > -1 * bandPartion && x < bandPartion && x > -1 * bandPartion)
                        innerBandCount++;
                    else
                        outerBandCount++;
                }
            }

            // Once we have determined our inner and outer band non-zero counts, and
            // accumulated all of those values, we can compare them against the thresholds
            // to determine if our candidate pixel will be changed to the
            // statistical mode of the non-zero surrounding pixels.
            if (innerBandCount >= innerBandThreshold || outerBandCount >= outerBandThreshold) {
                float frequency = 0.0;
                float depth = 0.0;
                // This loop determines the statistical mode of the
                // surrounding pixels for assignment to the candidate.
                for (int i = 0; i < count; i++) {
                    // This means we have reached the end of our frequency
                    // distribution and can break out of the loop to save time.
                    if (abs(filterCollection[i][0] - 0.0) < 0.00001)
                        break;
                    // Keep the value with the highest count seen so far
                    if (filterCollection[i][1] > frequency) {
                        depth = filterCollection[i][0];
                        frequency = filterCollection[i][1];
                    }
                }
                retDepth = depth;
            }
        }
        return encodeFloatRGBA(retDepth);
    }

AREngine depth map optimization series:

AREngine depth map optimization, part 1: hole filling based on the maximum / minimum / average value

AREngine depth maps are visualized in a rainbow view

Smoothing the ToF depth image with Gaussian blur (Gaussian filter) in OpenGL

Huawei AREngine obtains depth information according to the depth map

References:

https://www.codeproject.com/Articles/317974/KinectDepthSmoothing