Problem Description

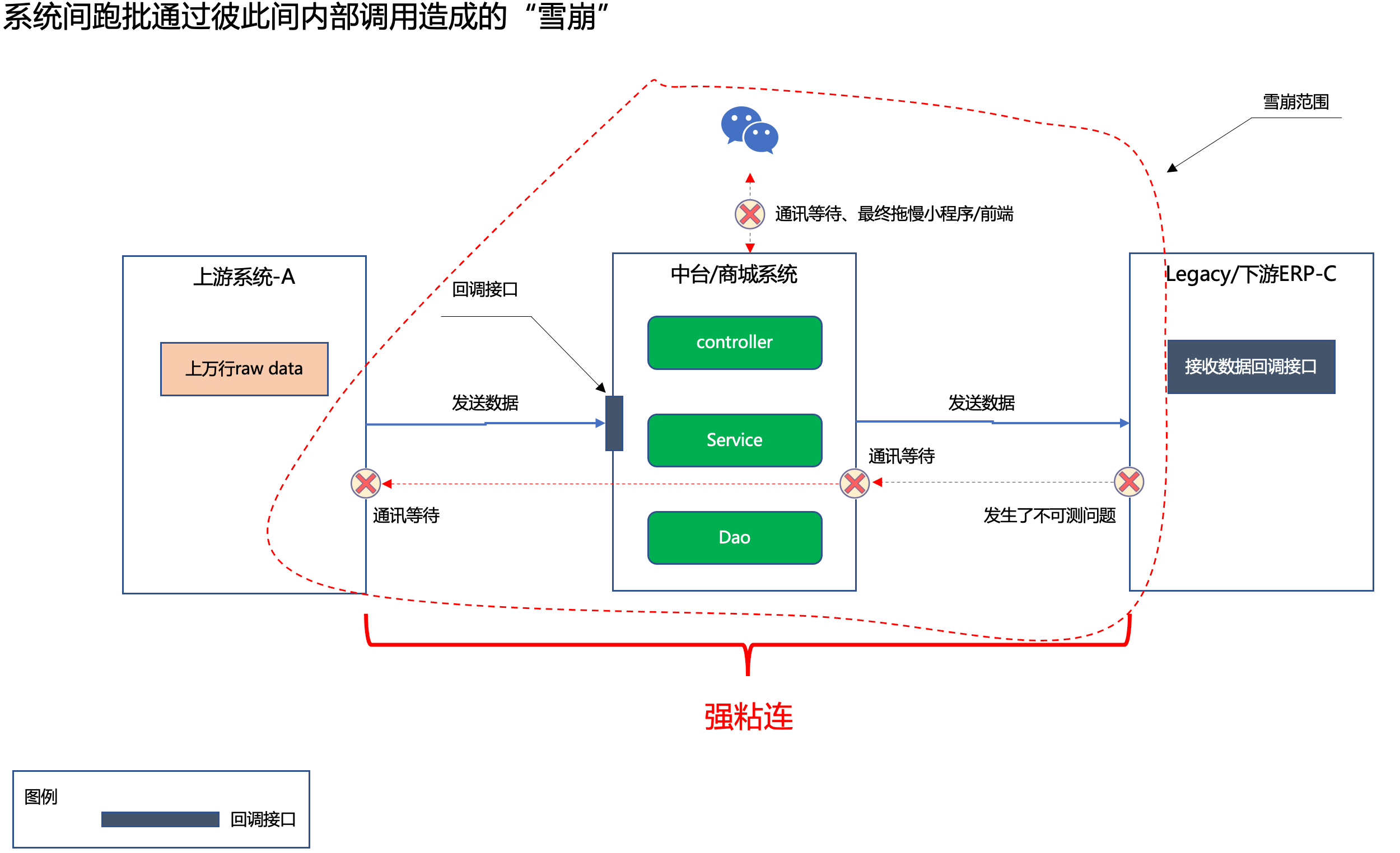

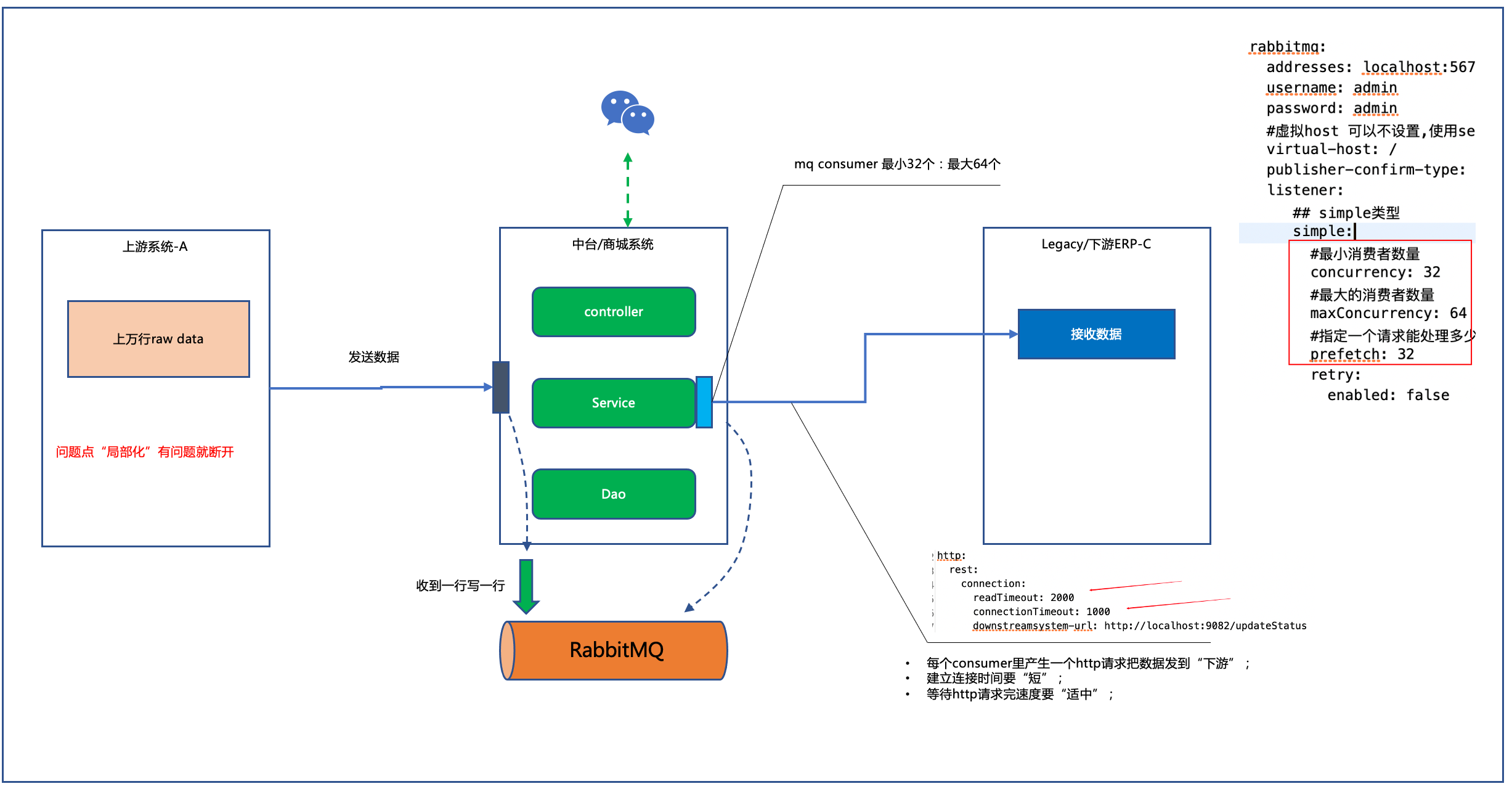

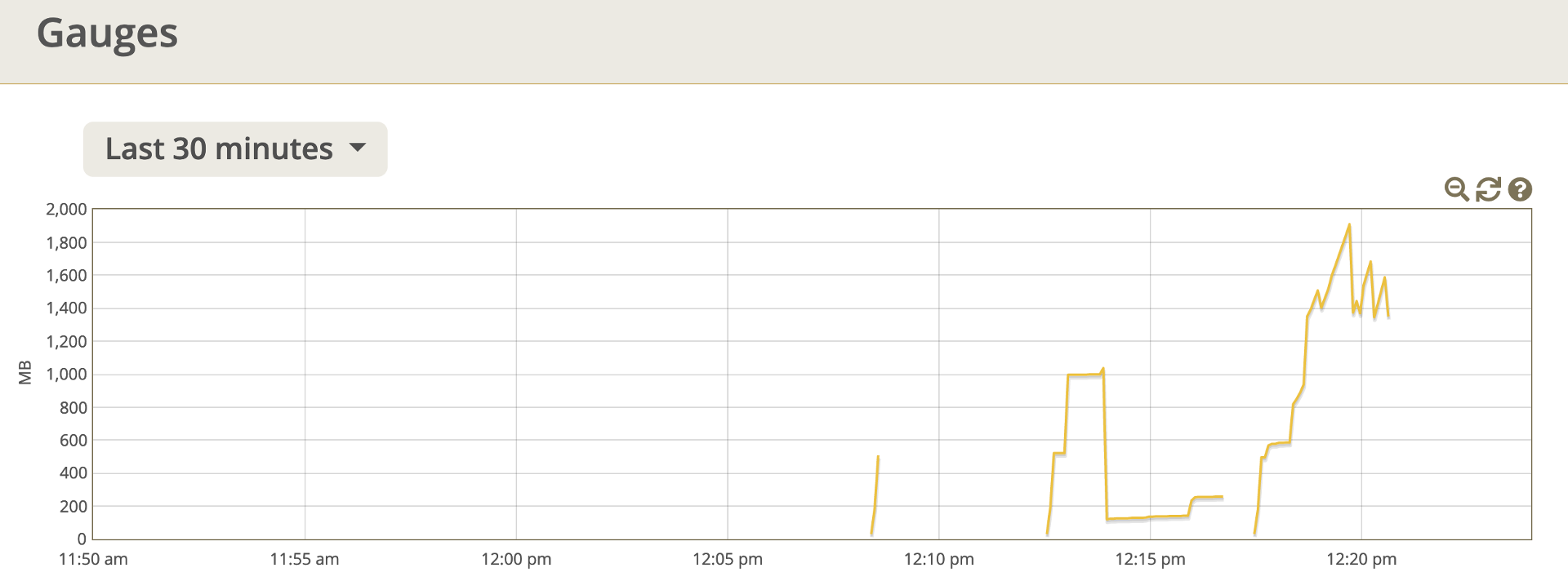

The figures above come from a real production incident.

This "avalanche" happened entirely among the internal systems of one enterprise.

We all know how an avalanche happens with external HTTP calls, including RESTful calls, SOAP requests, and any other HTTP-based calls. Take a call chain a->b->c->d in which system B is an "intermediate segment". Suppose your HTTP timeouts are set too long, or there is no connection timeout at all, and there is no circuit breaker. Then any error further down the chain backs up all the way and ends up "jamming" system B. This is what we call an avalanche.
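The paragraph above names two safeguards that stop this kind of avalanche: bounded timeouts and a circuit breaker. The code below is a minimal, hypothetical circuit breaker in plain Java. The class `SimpleCircuitBreaker` and its parameters are illustrative only and are not the API of any library such as Resilience4j or Hystrix. After `failureThreshold` consecutive failures the breaker opens and answers from a fallback immediately, so callers in system B stop queuing behind a dead system C. After `openMillis` it lets a trial call through. The clock is passed in explicitly so the behaviour is deterministic.

```java
import java.util.function.Supplier;

/** Hypothetical minimal circuit breaker: CLOSED -> OPEN after N failures -> HALF_OPEN after a cool-down. */
final class SimpleCircuitBreaker {
    enum State { CLOSED, OPEN, HALF_OPEN }

    private final int failureThreshold; // consecutive failures that trip the breaker
    private final long openMillis;      // cool-down before a trial call is allowed
    private State state = State.CLOSED;
    private int consecutiveFailures = 0;
    private long openedAt = 0L;

    SimpleCircuitBreaker(int failureThreshold, long openMillis) {
        this.failureThreshold = failureThreshold;
        this.openMillis = openMillis;
    }

    synchronized State state() {
        return state;
    }

    /** Runs action unless the breaker is open; a failure or an open breaker yields the fallback. */
    <T> T call(Supplier<T> action, Supplier<T> fallback, long nowMillis) {
        boolean rejected;
        synchronized (this) {
            rejected = state == State.OPEN && nowMillis - openedAt < openMillis;
            if (state == State.OPEN && !rejected) {
                state = State.HALF_OPEN; // simplification: concurrent callers may all get a trial call
            }
        }
        if (rejected) {
            return fallback.get(); // fail fast instead of tying up a thread on a sick downstream
        }
        try {
            T result = action.get();
            onSuccess();
            return result;
        } catch (RuntimeException e) {
            onFailure(nowMillis);
            return fallback.get();
        }
    }

    private synchronized void onSuccess() {
        consecutiveFailures = 0;
        state = State.CLOSED;
    }

    private synchronized void onFailure(long nowMillis) {
        consecutiveFailures++;
        if (state == State.HALF_OPEN || consecutiveFailures >= failureThreshold) {
            state = State.OPEN; // a failed trial call re-opens immediately
            openedAt = nowMillis;
        }
    }
}
```

In production you would normally use a maintained library rather than write this yourself. The point is only that an open breaker turns a slow failure into a fast one.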

However, the same "inter-system avalanche" also occurs among our internal systems, especially in batch scenarios. For example:

- The counter receipt system sends QR-code purchase and face-to-face transaction data to the middle platform (the trading system in the middle);

- The middle platform then forwards this data, second-hand, to a third-party analysis engine;

- Once the third-party analysis engine fails, we get exactly the chain shown above: "the third-party analysis engine gets stuck -> the middle platform gets stuck -> the counter receipt system gets stuck".

Then comes the usual chaos ("chickens flying and dogs jumping"). Adding memory, adding CPU, and restarting again and again do not solve the problem. The business owner keeps demanding a fix, and a group of people who cannot find the root cause start saying things like:

- "Hey, send us less data!"

- "Hey, I'm only sending you data that you can't digest. How is that my fault?"

- "Hey, there's nothing wrong with our system, and you shouldn't be able to affect us, so you're the one who has to change!"

And so the problem gets kicked around like a football. What a commotion! What a scramble!

The theoretical basis of the solution

We know that avalanches cascade across external systems. Systems on an enterprise intranet have avalanches too, and they are even more prone to them.

As illustrated above, a system that sits between two legacy systems is in an "uncomfortable" position, because legacy systems are usually either ERP or traditional C/S (client/server) architectures.

Legacy systems inside an enterprise emphasize strong transactional consistency, so almost all of them use DB-based pessimistic locks for their transactions. For such systems, even 150 concurrent requests is a myth. Now you start a batch run: a big for loop. A medium or large enterprise has at least 150-300 kinds of batch jobs, each with a daily transaction volume of 10,000-30,000. In banking and finance the figure is around 100,000. Multiply 100,000 transaction records by channel and account type, and the resulting Cartesian product puts millions of records into circulation.

Those millions of records are wrapped in a for loop and sent downstream to the legacy system. For a slightly more "advanced" middle trading system, multithreading is no problem: it starts hundreds of threads with one click, and each thread sends out thousands of records.

Oh dear... within one minute, the downstream system jams.

Once the downstream system crashes, it drags down the middle-tier trading system and produces exactly this kind of avalanche. The symptom is that the front-end app or mini-program suddenly slows down.

Then the blame game described above begins.

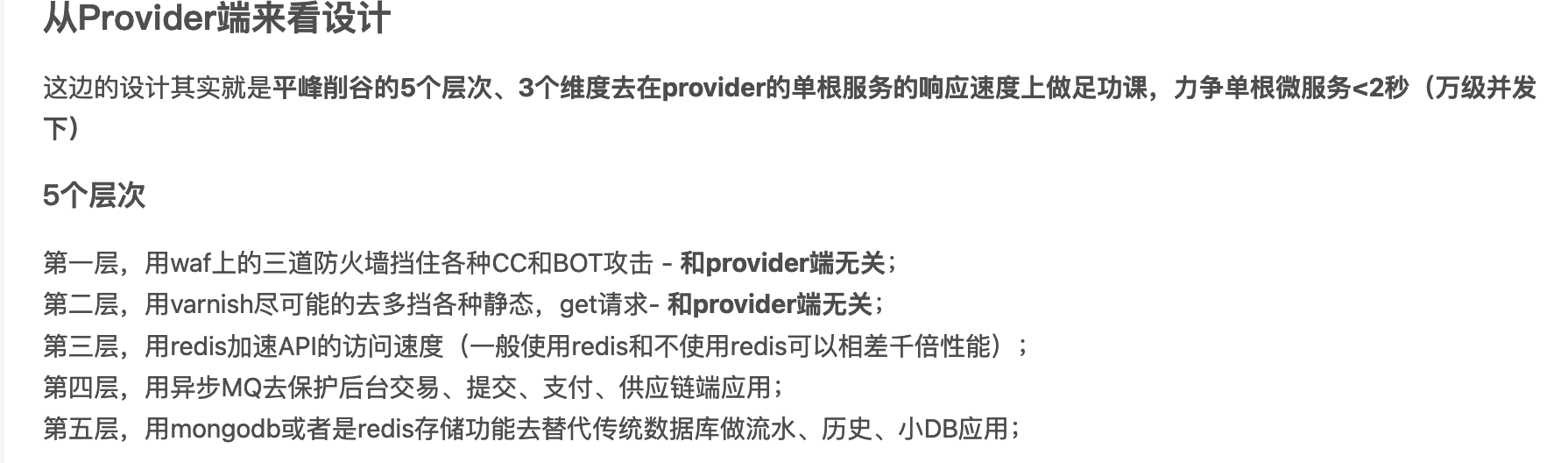

Therefore, to solve this kind of problem we need to follow a set of design principles. These are exactly what some leading tech companies expect to hear from candidates when interviewing at the P6/P7 level or above:

- Threads must be cleanly isolated from each other, with no shared mutable state;

- Support re-running and retries, and record serious errors;

- Keep the number of threads constant and controllable, even if the batch takes slightly longer. Nobody cares about a few extra seconds of batch time, and a small, well-used set of threads can even be thousands of times better than a blindly designed one with hundreds or thousands of threads;

- For distributed computing, use elastic horizontal scaling (more application instances) rather than simply increasing the number of threads;

- Use as few resources as possible throughout the job run: memory utilization < 10%, CPU utilization < 10%, and open TCP connections (file handles) < 10% or even 5% of the OS total;

- Make full use of the CPU's time-slice scheduling, parallel computing, caching, memory, and asynchrony.
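The "constant and controllable number of threads" principle maps directly onto a fixed-size `ThreadPoolExecutor` with a bounded queue. The code below uses only the JDK. The class `BoundedBatchPool` and the sizes used are hypothetical; in the Spring Boot project, the same settings would presumably go on its thread-pool bean. Core size equals max size, so the pool never grows. The `ArrayBlockingQueue` caps the backlog. `CallerRunsPolicy` makes the submitting thread run a task itself when the queue is full, which slows the producer down instead of spawning more threads or dropping work.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

final class BoundedBatchPool {
    /** Fixed-size pool: core == max, bounded backlog, back-pressure instead of unbounded growth. */
    static ThreadPoolExecutor create(int threads, int queueCapacity) {
        return new ThreadPoolExecutor(
                threads, threads,                                 // constant thread count
                0L, TimeUnit.MILLISECONDS,
                new ArrayBlockingQueue<Runnable>(queueCapacity), // bounded queue, bounded memory
                new ThreadPoolExecutor.CallerRunsPolicy());       // full queue: the submitter runs the task itself
    }

    /** Runs `tasks` short jobs and returns the highest number observed running at the same time. */
    static int peakConcurrency(int threads, int queueCapacity, int tasks) {
        ThreadPoolExecutor pool = create(threads, queueCapacity);
        AtomicInteger running = new AtomicInteger();
        AtomicInteger peak = new AtomicInteger();
        AtomicInteger done = new AtomicInteger();
        for (int i = 0; i < tasks; i++) {
            pool.execute(() -> {
                int now = running.incrementAndGet();
                peak.accumulateAndGet(now, Math::max);
                try {
                    Thread.sleep(1); // stand-in for one downstream call
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                } finally {
                    running.decrementAndGet();
                    done.incrementAndGet();
                }
            });
        }
        pool.shutdown();
        try {
            pool.awaitTermination(1, TimeUnit.MINUTES);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        if (done.get() != tasks) {
            throw new IllegalStateException("not all tasks completed");
        }
        return peak.get();
    }
}
```

With 4 worker threads, the measured peak should never exceed 5: the 4 workers plus the submitting thread when `CallerRunsPolicy` kicks in.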

So "batch" looks simple, but it touches everything. Designing and building it well requires highly integrated professional knowledge of computer science.

By the way, this also shows that the quick-money approach of "training classes that only teach CRUD" cannot teach you what a real computer science programmer needs to know. The lack of this core knowledge has earned 90% of the "code monkeys" on the market the label "code farmers" ("X nong"). It is also why the fundamentals that every system should be built around, and that basically have to be done, never get done. As a result, most of our systems have functional defects or outright failures and blow up as soon as a few more stores or customers are added. Many do not survive half a year in production. (It is no exaggeration to say that outside the big tech companies in Beijing, Shanghai, and Guangzhou, most domestic systems have no performance design at all.) These fundamentals are:

- HTTP = TCP = OS file handles (taking Unix/Linux as an example). The open-file limit (ulimit -n) is commonly set to at most 65,535. Other applications on the OS also use file handles, so a web application can typically be given about half of them, roughly 32,000 concurrently open HTTP connections. A large for loop or an uncontrolled number of threads can easily hit this threshold within one minute. Once it is reached, the applications on that OS are "stuck";

- When CPU utilization exceeds 80%, all the applications on your OS are effectively "frozen". Try it yourself: configure 10,000 concurrent threads in JMeter, run them for one minute, and watch your computer hang;

- When memory usage exceeds 90%, the effect is the same as CPU utilization above 80%;

- This is especially true when looping over 10,000, 100,000, or millions of records. If you never sleep or wait, the CPU will "burst". It is like asking someone who runs 100 meters in 11 seconds to cover 45 kilometers at that pace: before long their lungs burst. You have to let it "take a breath", which means yielding the CPU so that time-slice rotation can serve other work. Many people never think of sleeping when they write batches like this;

- Front-end apps and mini-programs often get stuck while batches are running. Many people think the front end and back end are separated, but the front-end APIs actually land on the back-end Spring Boot application. Suppose the back end and the batch job happen to be the same application, and the batch is consuming its HTTP connections at that moment. Front-end access is then bound to suffer: the To-B batches have used up the available HTTP connections, so how can front-end users get in? Everyone thinks front end and back end are separated, yet the front end still makes API requests. Only a purely static application with no API requests would be unaffected even if the back end shut down. Many IT teams, and even CTOs, at traditional Party-A (client-side) companies never expect this. It is no exaggeration to say that about 90% of Party-A IT nationwide currently thinks this way;

- Change "strong coupling between systems" into "weak coupling", confine problems to the smallest possible point and scope, and use "asynchronous" architecture more, so that an avalanche cannot spread. If your upstream or downstream crashes, that only exposes problems in their own system. If everything crashes together, the whole thing becomes a muddy mess when a problem occurs. With weak coupling, one failing system out of three does not take the other two down, and investigation can pinpoint the exact system. Instead you are "stirring everything together"... that is "dying together with others". How friendly of you!

- Use traditional OLTP databases less, or avoid them where possible. A single database row-level lock can jam your whole system. Used properly, NoSQL stores such as MongoDB and Redis can be hundreds or even thousands of times more efficient than a traditional DB for the same amount of data;

- Compare looping 1,000 times with one update or insert per iteration against assembling the 1,000 statements and submitting them at once. The performance can differ by tens or even thousands of times, because databases are much happier with one batched submission than with row-by-row submissions. The same is true of network transfers. Uploading 30,000 files of only 20 KB each to Baidu Netdisk one by one may take several hours, while uploading them as a single ZIP package takes no more than 15 minutes, because frequent small network I/O operations are extremely inefficient;

- HTTP calls, and any other connections, must have a timeout, and it should be as short as possible. Every connection, including an HTTP connection, has two timeouts: createConnectionTimeout and readTimeout.
  - createConnectionTimeout is the time it takes to establish a connection channel between two systems. On an intranet, if this takes more than 2 seconds, one of the two systems is already "hung". Say 4 seconds at the very most, and even that is generous.
  - readTimeout is the HTTP request->response time, i.e. the time of one "transaction". In To-C internet microservices we even require readTimeout to be as low as 100 milliseconds, so you can imagine how little time that leaves for a transaction. Why so strict? Let a transaction take more than 10 seconds and see what happens. The avalanche runs through a whole chain of system modules, components, and even systems, turns into a global avalanche, and ends with a white-screened, frozen mini-program/app. Really "fun"! So let's cap readTimeout at 4 seconds.
  - The two parameters must satisfy readTimeout > createConnectionTimeout, and in most reasonable systems createConnectionTimeout does not exceed 2 seconds;
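Both timeouts described above can be set explicitly on any HTTP client. The code below uses the JDK's `HttpURLConnection` so it runs without extra dependencies. In the author's Spring Boot stack, the equivalent settings would presumably go on the `RestTemplate`'s request factory, for example `SimpleClientHttpRequestFactory.setConnectTimeout`/`setReadTimeout`. The helper name `TimeoutHttp` is hypothetical, and the 2 s / 4 s values are taken from the guidance in the text. The guard rejects 0 because `HttpURLConnection` treats a zero timeout as "wait forever".

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.HttpURLConnection;
import java.net.URL;

final class TimeoutHttp {
    static final int CONNECT_TIMEOUT_MS = 2_000; // intranet connect taking > 2 s usually means the peer is unhealthy
    static final int READ_TIMEOUT_MS = 4_000;    // budget for one request -> response "transaction"

    /** Opens (but does not yet connect) an HTTP connection with both timeouts set explicitly. */
    static HttpURLConnection open(String url, int connectMs, int readMs) {
        if (connectMs <= 0 || readMs <= 0) {
            // HttpURLConnection interprets 0 as an infinite timeout: exactly what lets calls pile up
            throw new IllegalArgumentException("timeouts must be positive");
        }
        if (readMs <= connectMs) {
            throw new IllegalArgumentException("readTimeout should be greater than connectTimeout");
        }
        try {
            HttpURLConnection conn = (HttpURLConnection) new URL(url).openConnection();
            conn.setConnectTimeout(connectMs);
            conn.setReadTimeout(readMs);
            return conn;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

When the peer does not answer in time, a later call such as `conn.getResponseCode()` fails with a `SocketTimeoutException` instead of blocking the calling thread indefinitely.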

Those are the nine big principles. Following them, how does our latest design achieve "a constant, controllable number of threads, business idempotency, no loss of efficiency (in fact, higher efficiency), and lower system-resource overhead"?

Proposed solution

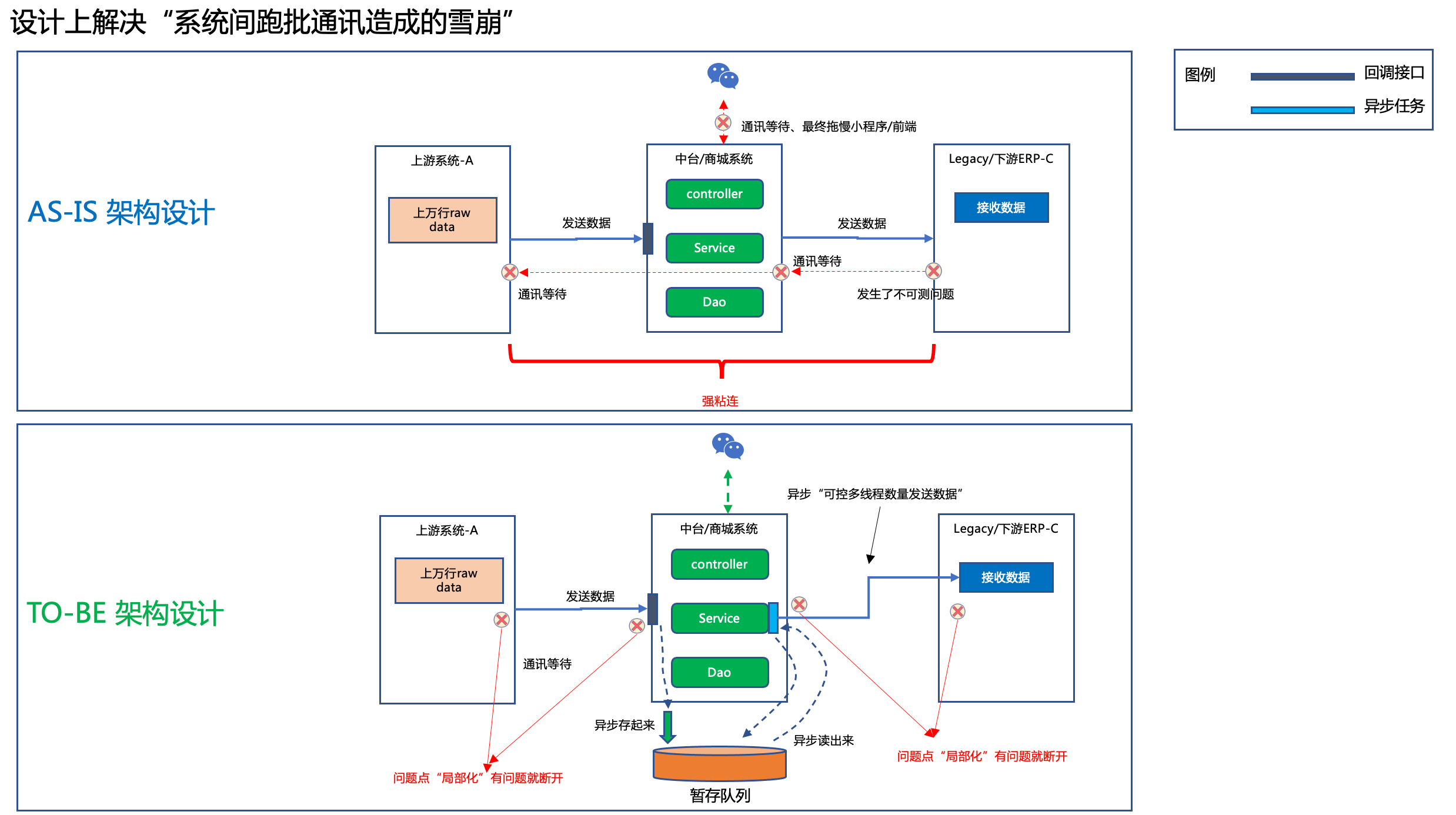

AS-IS & TO-B is a common architecture design method for TOGAF enterprise architecture.

- AS-IS representative: own/existing design;

- TO-B stands for: future, goal, what we want to design;

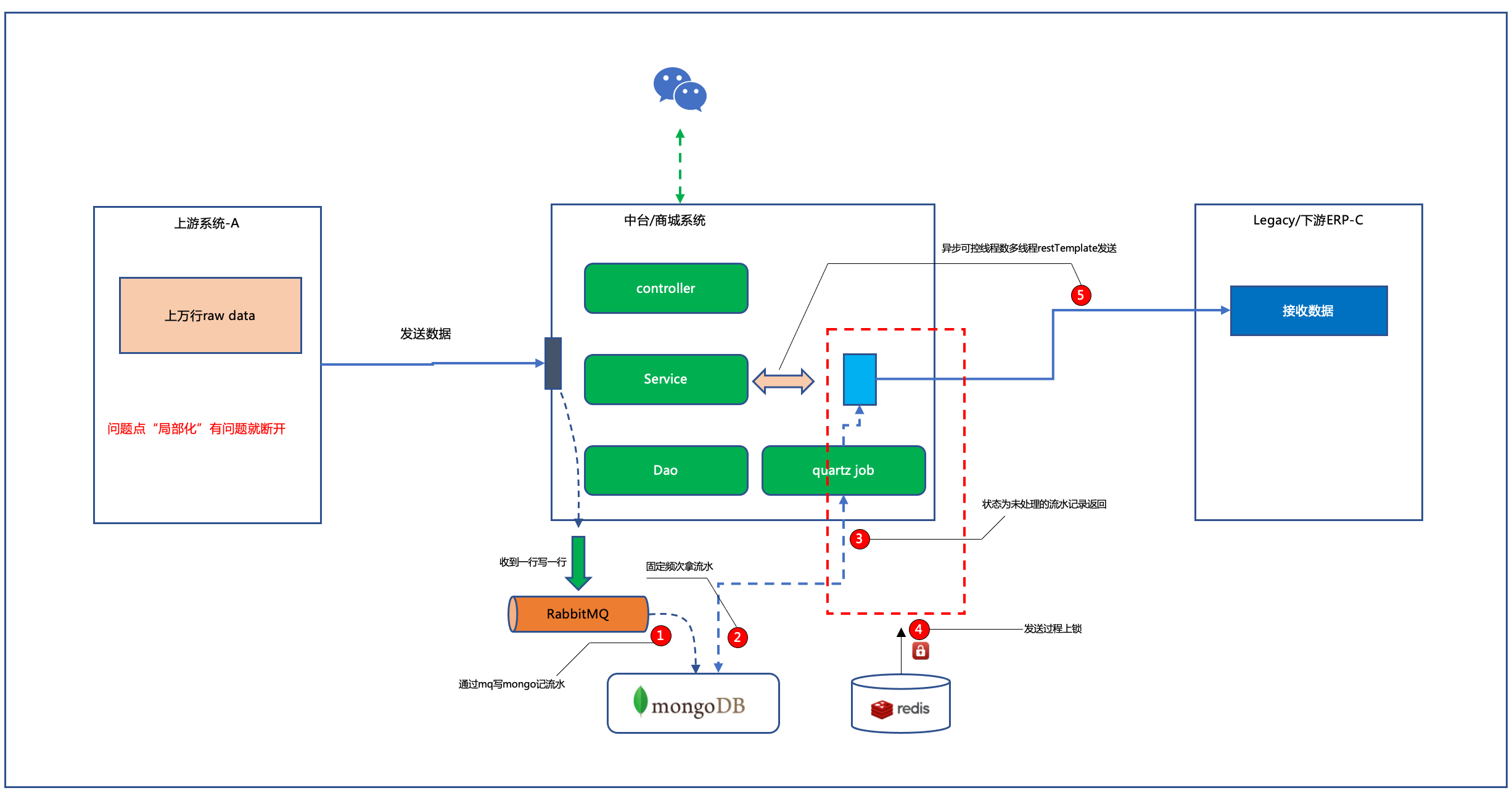

In TO-B architecture design, we can see the following design goals:

- Running batches between A-B-C systems are restricted from large-scale avalanches caused by strong adhesion to small-scale avalanches.

- In order to keep the TO C-end applet/APP from getting stuck, we also control the avalanche in the background and do not "bounce/bounce" to the front, so we use asynchronous;

- If you want to be asynchronous, you have to use a temporary queue, which can either use mq or make asynchronous job s by itself using "Pipeline Table + With Status Field". The design is broken down into later detailed designs to explain.

- The upstream side sends data one by one, and the trading system eats it down and returns it directly to the response. This request readTimeout does not exceed one second.

- After saving, use an asynchronous job to read the running water out and send it to the downstream system "throw" in an asynchronous mechanism that controls the number of threads.
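The "pipeline table + status field" option can be illustrated with an in-memory stand-in. This is a hypothetical sketch: `PipelineTable`, its statuses and `maxAttempts` are illustrative and are not the author's schema. In the real design the rows would live in MongoDB or MySQL, and `claim` would be an atomic conditional update along the lines of "set status = SENDING where status = NEW". The request thread only calls `accept` and returns at once. A separate job calls `runOnce` to send a bounded batch downstream and records SENT or FAILED on each row. Failed rows are retried later, and a duplicate upstream submission is ignored. A real table would also need a timeout to reclaim rows left in SENDING by a crashed job.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Predicate;

/** Hypothetical in-memory stand-in for a "pipeline table + status field". */
final class PipelineTable {
    enum Status { NEW, SENDING, SENT, FAILED }

    static final class Row {
        final int payId;
        Status status = Status.NEW;
        int attempts = 0;

        Row(int payId) {
            this.payId = payId;
        }
    }

    private final Map<Integer, Row> rows = new LinkedHashMap<>(); // insertion order = arrival order
    private final int maxAttempts;

    PipelineTable(int maxAttempts) {
        this.maxAttempts = maxAttempts;
    }

    /** Request thread: store the record and return at once; a duplicate payId is ignored (idempotent). */
    synchronized boolean accept(int payId) {
        return rows.putIfAbsent(payId, new Row(payId)) == null;
    }

    /** Claims up to `limit` rows that are NEW, or FAILED with attempts left, and marks them SENDING. */
    synchronized List<Row> claim(int limit) {
        List<Row> batch = new ArrayList<>();
        for (Row r : rows.values()) {
            if (batch.size() >= limit) {
                break;
            }
            boolean retryable = r.status == Status.FAILED && r.attempts < maxAttempts;
            if (r.status == Status.NEW || retryable) {
                r.status = Status.SENDING;
                r.attempts++;
                batch.add(r);
            }
        }
        return batch;
    }

    /** Async job step: send one bounded batch downstream and record the outcome on every row. */
    void runOnce(int limit, Predicate<List<Integer>> sendDownstream) {
        List<Row> batch = claim(limit);
        if (batch.isEmpty()) {
            return;
        }
        List<Integer> ids = new ArrayList<>();
        for (Row r : batch) {
            ids.add(r.payId);
        }
        boolean ok;
        try {
            ok = sendDownstream.test(ids);
        } catch (RuntimeException e) {
            ok = false;
        }
        synchronized (this) {
            for (Row r : batch) {
                r.status = ok ? Status.SENT : Status.FAILED;
            }
        }
    }

    synchronized long count(Status s) {
        long n = 0;
        for (Row r : rows.values()) {
            if (r.status == s) {
                n++;
            }
        }
        return n;
    }
}
```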

This is our design idea: complete decoupling.

Two reasons explained

These two reasons are very important. Once you understand them, the core idea of the whole design becomes clear, so we explain them in depth here.

Why must we focus on "sending threads are controllable and constant"?

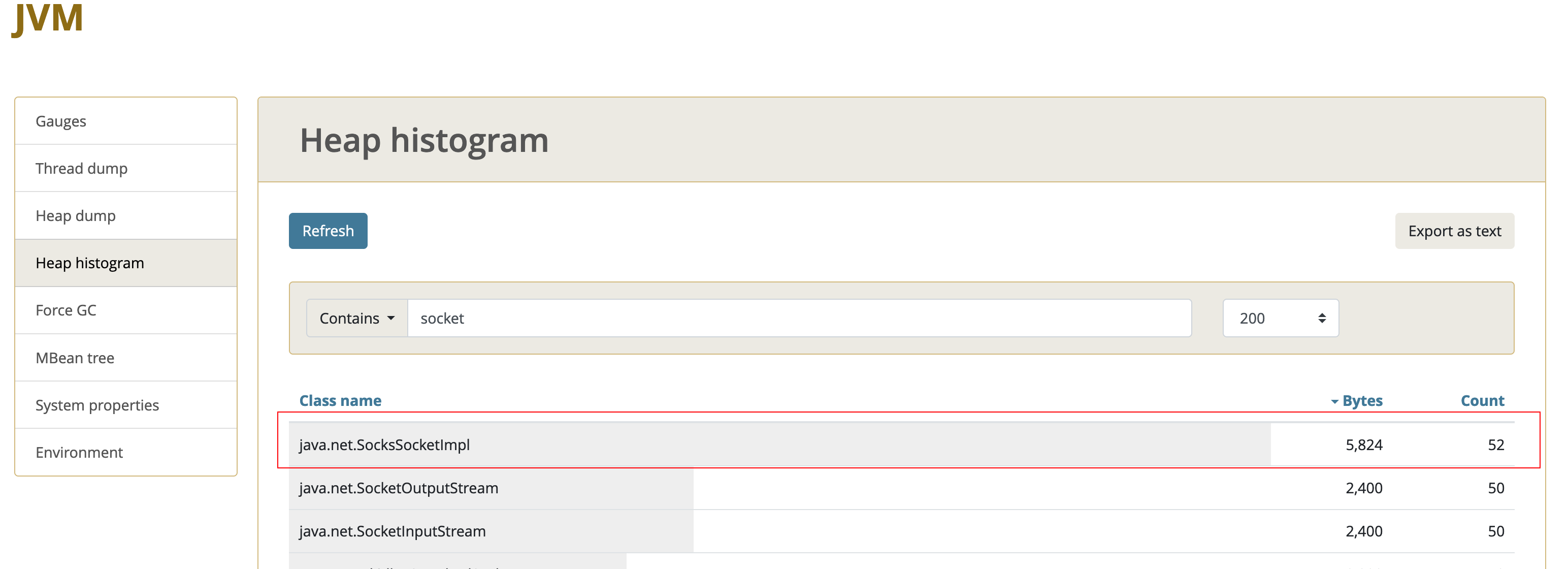

In this design the sending threads run in one JVM, and their number is constant and controllable. Generally it should not be set above 50. As I have always said, upstream and downstream systems are usually legacy. It is not that these legacy systems are "delicate"; they really cannot handle even 150 concurrent requests. They are "strongly transactional", which is simply the result of technology stacks designed on average 20 years ago, and they are built on DB pessimistic locks. So we have a "responsibility" to protect them.

The number of requests we send to these systems must be controlled so that we don't kill them. For the enterprise's IT as a whole, only a "win-win" counts as winning; a win for a single department is not a win. That is why this design matters: don't just think about making your own system "cool".
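One way to control how many requests reach a legacy system at the call site, independent of how many threads exist, is a counting semaphore in front of the downstream call. The code below is hypothetical: `DownstreamGuard`, `maxInFlight` and `waitMillis` are illustrative names. At most `maxInFlight` calls are outstanding at once. A caller that cannot get a permit within `waitMillis` receives a "busy" result instead of piling onto the legacy system. In the asynchronous design, that result would simply leave the record for a later retry.

```java
import java.util.concurrent.Semaphore;
import java.util.concurrent.TimeUnit;
import java.util.function.Supplier;

/** Hypothetical guard: at most maxInFlight concurrent calls reach the legacy downstream system. */
final class DownstreamGuard {
    private final Semaphore permits;
    private final long waitMillis;

    DownstreamGuard(int maxInFlight, long waitMillis) {
        this.permits = new Semaphore(maxInFlight, true); // fair: waiting callers get permits in arrival order
        this.waitMillis = waitMillis;
    }

    /** Runs the call if a permit frees up within waitMillis; otherwise returns whenBusy without calling. */
    <T> T call(Supplier<T> downstreamCall, Supplier<T> whenBusy) {
        boolean acquired = false;
        try {
            acquired = permits.tryAcquire(waitMillis, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        if (!acquired) {
            return whenBusy.get(); // e.g. leave the record for a later retry instead of piling on
        }
        try {
            return downstreamCall.get();
        } finally {
            permits.release(); // always give the permit back, even if the call throws
        }
    }

    int available() {
        return permits.availablePermits();
    }
}
```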

Why do we have to use asynchronous temporary storage?

We can control how much we send out so that we don't kill others. But how do we make sure others don't kill us? As mentioned above, these legacy systems were designed long ago. They may not even be able to use multithreading; many banks and financial enterprises even built them with single-chip computers and C. In their day these systems were very advanced, but they have not kept up with the times and are being phased out as technology evolves.

The data they send you is very likely to arrive as "uncontrolled threads flooding requests" into your system. So your system needs the ability to "absorb" these legacy flows: take them in first and "digest" them a little later.

That is why asynchrony, temporary storage, and queuing are all needed here.
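The "absorb now, digest later" idea can be sketched with a bounded `BlockingQueue`. The request thread only enqueues and returns, and a worker drains the queue in batches at its own pace. `InboundBuffer` and its parameters are hypothetical. In the author's design the buffer is RabbitMQ or a pipeline table rather than an in-process queue, which also has the advantage of surviving restarts. `offer` returns false when the buffer is full, so the caller can answer "retry later" instead of blocking.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.TimeUnit;
import java.util.function.Consumer;

/** Hypothetical in-process buffer: accept fast on the request thread, drain in batches on a worker. */
final class InboundBuffer {
    private final BlockingQueue<Integer> queue;

    InboundBuffer(int capacity) {
        this.queue = new ArrayBlockingQueue<>(capacity); // bounded: memory use cannot grow without limit
    }

    /** Request thread: enqueue and return at once; false means "full, ask the upstream to retry later". */
    boolean accept(int payId) {
        return queue.offer(payId);
    }

    /** Worker thread: wait up to waitMillis for the first item, then take up to maxBatch items as one batch. */
    int drainOnce(int maxBatch, long waitMillis, Consumer<List<Integer>> sink) {
        List<Integer> batch = new ArrayList<>(maxBatch);
        try {
            Integer first = queue.poll(waitMillis, TimeUnit.MILLISECONDS);
            if (first == null) {
                return 0; // nothing arrived; the worker simply loops again
            }
            batch.add(first);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return 0;
        }
        queue.drainTo(batch, maxBatch - 1); // non-blocking: whatever else is already waiting
        sink.accept(batch);
        return batch.size();
    }

    int backlog() {
        return queue.size();
    }
}
```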

Detailed design

To implement the core principle of "absorb on the inbound side, control the outbound side", there are two ways to land our design:

- Use MQ + Consumer + RestTemplate, controlling the number of MQ consumers;

- Use MQ + MongoDB as the pipeline table + Spring Boot thread pool + Redisson self-renewing (watchdog) lock + RestTemplate;

Both approaches involve the following technologies.

I make a habit of getting the whole technology stack ready before a design lands. Have you ever been to Tianjin and eaten the snacks there?

Early in the morning in Tianjin, while it is still dark, you will find stalls in the small lanes whose owners boiled the water and prepared the ingredients at 4 or 5 a.m. A small dish of salt, a small dish of garlic, a small dish of peanuts, a small dish of sesame seeds, shallots, sauce, and dried shrimp are all laid out on a table.

A guest arrives: "Boss, a bowl of old noodle tea!"

The owner's hands fly between the plates, picking up this one and that one and quickly sprinkling the prepared ingredients into a big bowl. Then he takes a big ladle, dips it into the already hot soup pot, pours it into the bowl, and calls out: "Here you go, enjoy it slowly!"

And the guest? A fried dough stick in one hand and a spoon in the other, sipping a bowl of old noodle tea like that. Now that is what you call fragrant.

So we prepare all our "little dishes" in advance:

| No. | Technology stack |
| --- | --- |
| 1 | Spring Boot 2.4.2 |
| 2 | WebFlux for our controllers |
| 3 | Spring Boot thread pool |
| 4 | Quartz for Spring Boot |
| 5 | Spring Boot Redisson self-renewing lock |
| 6 | Spring Boot RabbitTemplate |
| 7 | Spring Boot MongoTemplate |
| 8 | MySQL 5.7 |
| 9 | MongoDB 3.2.x |
| 10 | RabbitMQ 3.8.x |
| 11 | Redis 6.x |
| 12 | Apache JMeter 5.3 |

These technologies are split across two Spring Boot projects:

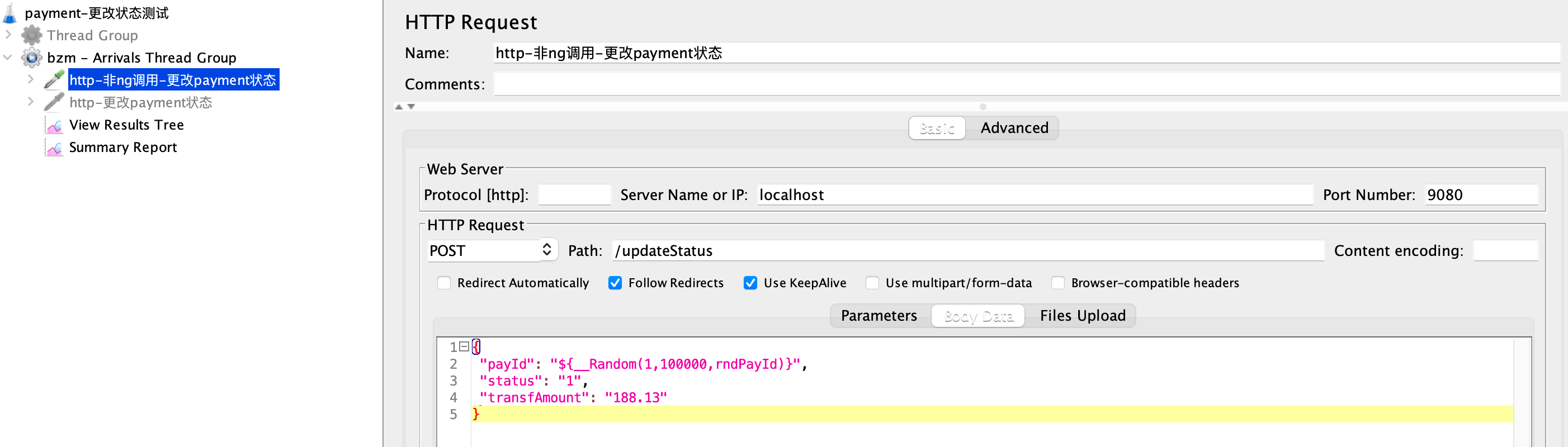

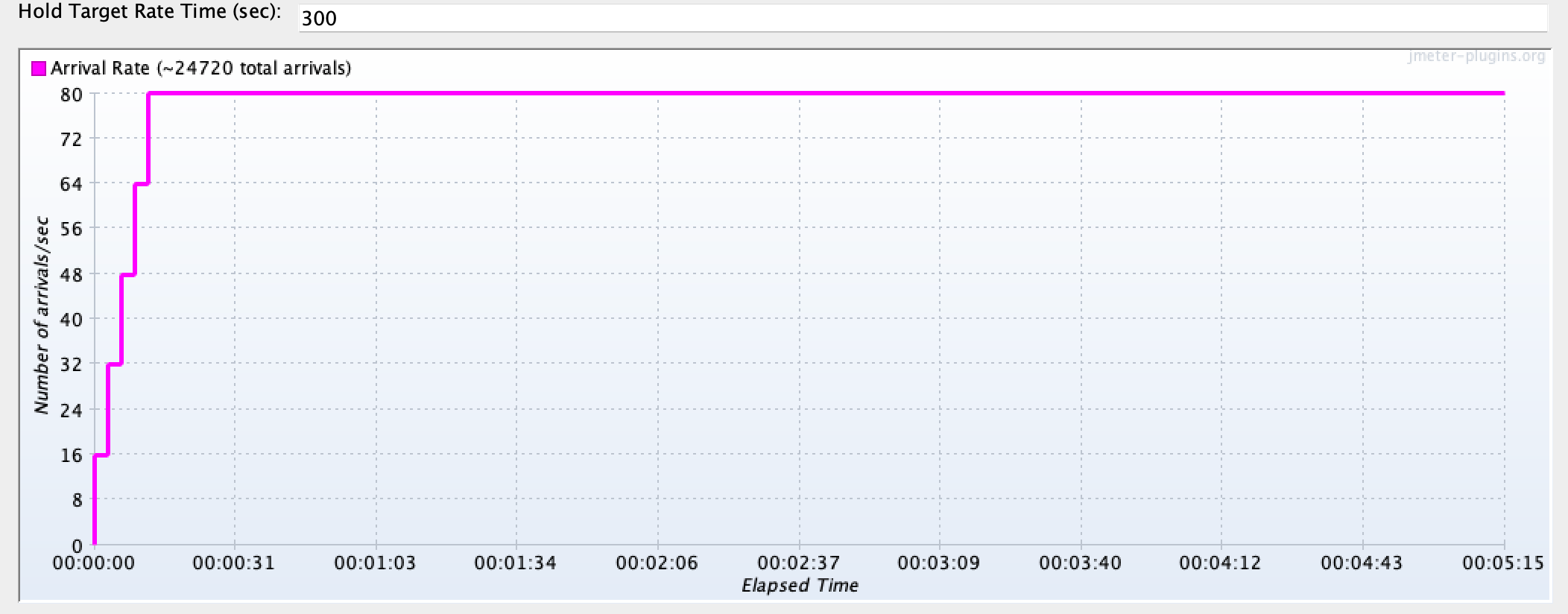

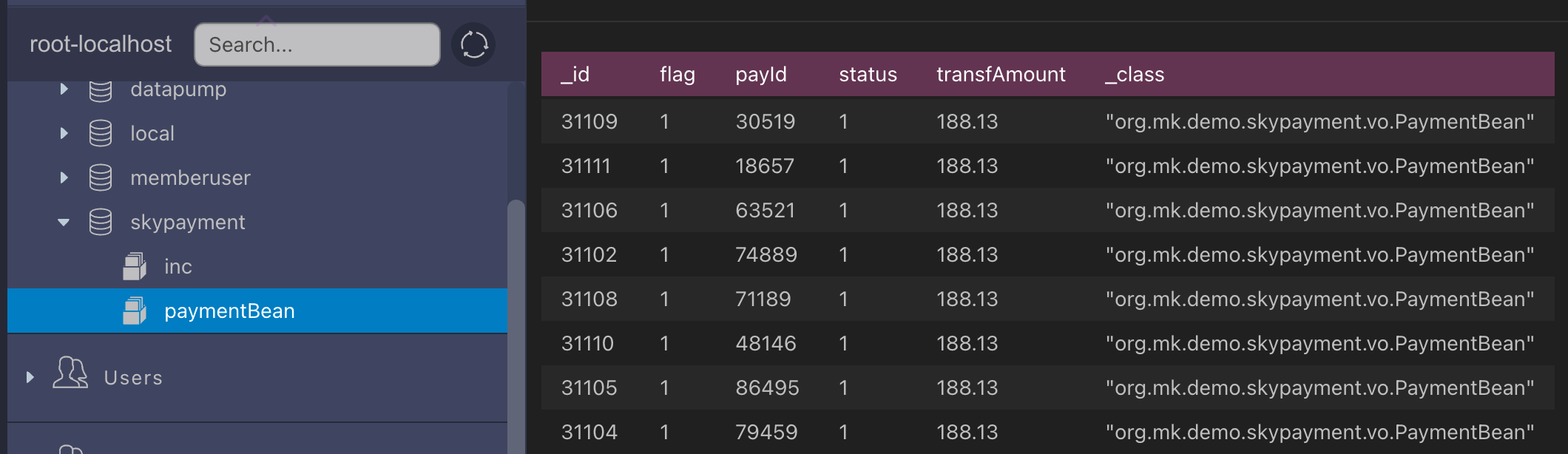

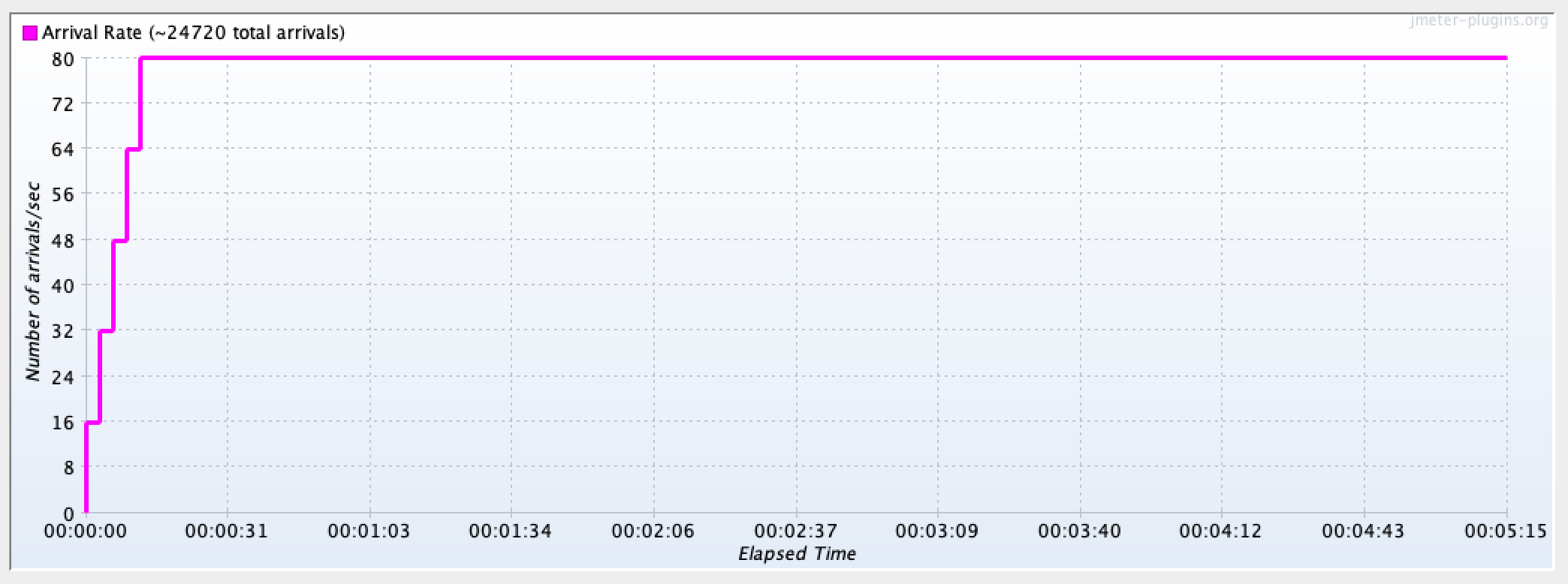

- SkyPayment, which is used to simulate our core platform for broadcasting/e-commerce, provides a callback interface that accepts an http request that can reach tens of thousands of requests to simulate the upstream side feeding huge amounts of data into the SkyPayment project, where we use jmeter's Arrival Threads to simulate this high concurrent amount;

- SkyDownStream System, used to simulate downstream systems. That is, when an upstream (jmeter) feeds a large amount of highly concurrent data to the SkyPayment system, SkyPayment passes this data as a "second-hand" to the SkyDownStreamSystem. SkyDownStreamSystem receives the data SkyPayment passes over and modifies the status of the payment table in the database to simulate the actions of "entering the database" and "accounting";

System design requirements:

- When these two projects are running, how much data must be pushed in and how much data must be processed in the face of tens of thousands of levels of instantaneous flow pressure from start to end;

- Any data processing error system can repeat, compensate for processing;

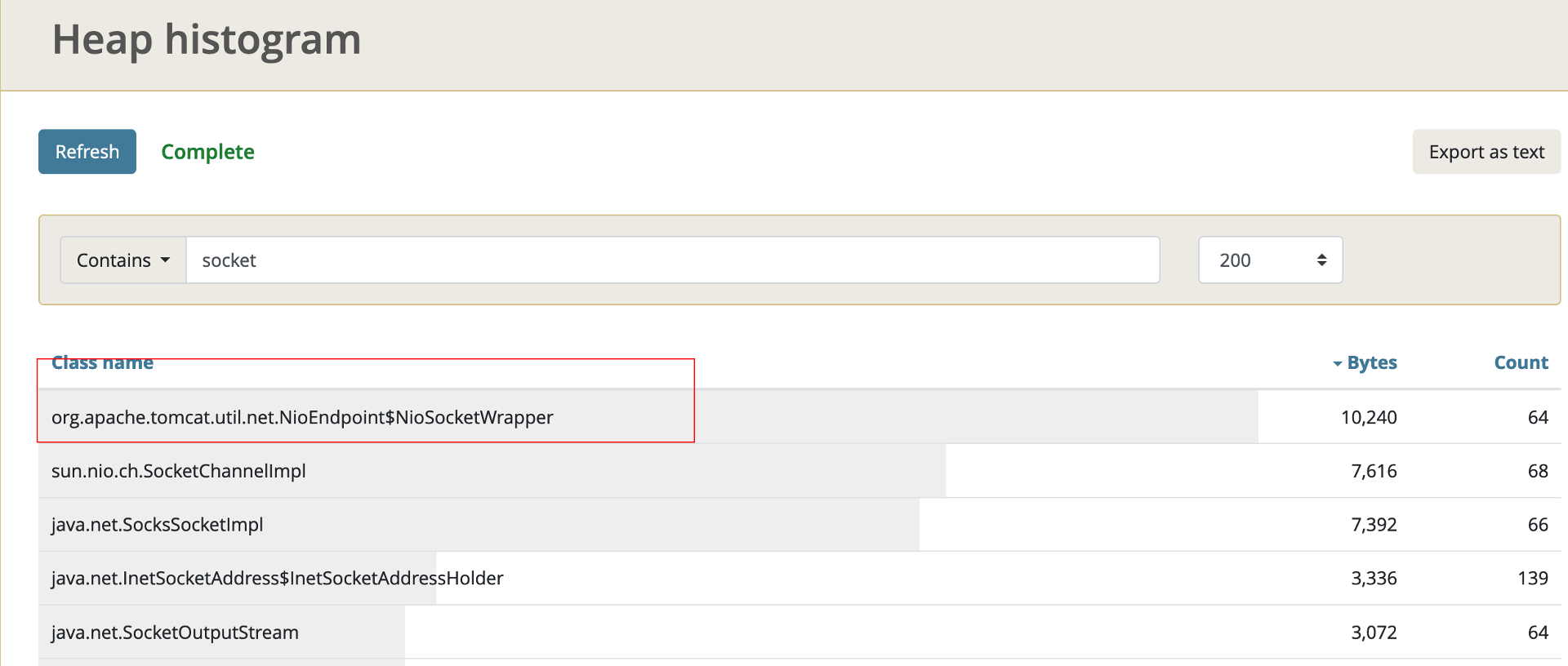

- The number of http connections in both systems must be controlled within a small number of concurrencies so that no system can be exploded;

- While meeting the above three points, it is also necessary to complete all tens of thousands of data processing within one minute of SkyPayment end transmission when the large amount of data has been sent out completely.

- Memory usage for each project during the entire process is not more than 2G, and CPU is not more than 10%.
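The requirement that any failed record can be reprocessed implies a retry policy that does not hammer an already struggling downstream. A common choice is a bounded number of attempts with exponential backoff. The helper below is a hypothetical sketch (`Retry.withBackoff`, `maxAttempts` and `baseDelayMs` are illustrative). After the last attempt it rethrows, so the caller can mark the record FAILED for later compensation.

```java
import java.util.function.Supplier;

/** Hypothetical retry helper: bounded attempts with exponential backoff between them. */
final class Retry {
    static <T> T withBackoff(Supplier<T> action, int maxAttempts, long baseDelayMs) {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be >= 1");
        }
        RuntimeException last = null;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                return action.get();
            } catch (RuntimeException e) {
                last = e;
                if (attempt == maxAttempts) {
                    break; // out of attempts: give up below
                }
                // base, 2x base, 4x base, ... (exponent capped so the shift cannot overflow)
                long delay = baseDelayMs * (1L << Math.min(attempt - 1, 20));
                try {
                    Thread.sleep(delay);
                } catch (InterruptedException ie) {
                    Thread.currentThread().interrupt();
                    break;
                }
            }
        }
        throw last; // caller marks the record FAILED so it can be compensated later
    }
}
```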

Let's get started.

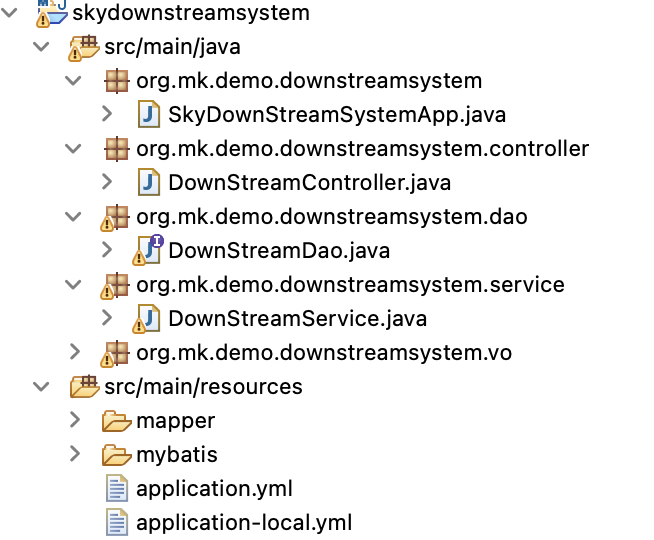

Detailed design - SkyDownStream project structure used to simulate downstream systems

The controller that receives the data and writes it to the database

// Receives a batch of payments and updates all of their statuses in one call
@PostMapping(value = "/updateStatusInBatch", produces = "application/json")
@ResponseBody
public Mono<ResponseBean> updateStatusInBatch(@RequestBody List<PaymentBean> paymentList) {
    ResponseBean resp;
    try {
        downStreamService.updatePaymentStatusByList(paymentList);
        resp = new ResponseBean(ResponseCodeEnum.SUCCESS.getCode(), "success");
    } catch (Exception e) {
        resp = new ResponseBean(ResponseCodeEnum.FAIL.getCode(), "system error");
        logger.error(">>>>>>updateStatusInBatch error: " + e.getMessage(), e);
    }
    return Mono.just(resp);
}

DownStreamService.java

// The whole batch runs in one transaction and is rolled back on any exception
@TimeLogger
@Transactional(rollbackFor = Exception.class)
public void updatePaymentStatusByList(List<PaymentBean> paymentList) throws Exception {
    downStreamDao.updatePaymentStatusByList(paymentList);
}

DownStreamDao.java

public void updatePaymentStatusByList(List<PaymentBean> paymentList);

MyBatis mapper XML

<?xml version="1.0" encoding="UTF-8"?>
<!DOCTYPE mapper PUBLIC "-//mybatis.org//DTD Mapper 3.0//EN" "http://mybatis.org/dtd/mybatis-3-mapper.dtd">
<mapper namespace="org.mk.demo.downstreamsystem.dao.DownStreamDao">
    <resultMap id="paymentResult" type="org.mk.demo.downstreamsystem.vo.PaymentBean">
        <id column="pay_id" property="payId" javaType="Integer" />
        <result column="status" property="status" javaType="Integer" />
        <result column="transf_amount" property="transfAmount" javaType="Double" />
    </resultMap>
    <!-- single-row update by primary key -->
    <update id="updatePaymentStatusById" parameterType="org.mk.demo.downstreamsystem.vo.PaymentBean">
        update sky_payment set status=#{status} where pay_id=#{payId}
    </update>
    <!-- batch update in one round trip; the ";"-separated statements need allowMultiQueries=true on the JDBC URL -->
    <update id="updatePaymentStatusByList">
        <foreach collection="list" item="payment" separator=";">
            update sky_payment set status=#{payment.status} where pay_id=#{payment.payId}
        </foreach>
    </update>
</mapper>

As you can see, there are two DAO statements: updatePaymentStatusById updates a single row by pay_id, and updatePaymentStatusByList is the batch-update method.

Why do I emphasize batchUpdate here? Recall the point among the nine fundamentals above: writing 1,000 rows to the database one at a time, versus submitting all 1,000 statements at once, can differ in performance by tens or even thousands of times. So in this POC code I did more than implement RabbitMQ and use NoSQL for controllable, asynchronous, multithreaded, distributed computing. I also implemented both row-by-row and bulk insert/update so the two can be compared, to verify just how dramatic the efficiency gap between them is.
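Batching also needs an upper bound. A single request carrying millions of `UPDATE` statements would hold locks for a long time, and with `allowMultiQueries` the whole statement string travels as one packet that can exceed MySQL's `max_allowed_packet`. A simple way to keep each call to `updateStatusInBatch` bounded is to partition the records before sending. The helper below is a hypothetical plain-Java sketch; the project already depends on Guava, whose `Lists.partition` does the same job. A chunk size of 500 is only an example, not a measured optimum.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

/** Hypothetical helper: split a list into consecutive chunks of at most `size` elements. */
final class Chunks {
    static <T> List<List<T>> of(List<T> items, int size) {
        if (size < 1) {
            throw new IllegalArgumentException("size must be >= 1");
        }
        List<List<T>> out = new ArrayList<>();
        for (int from = 0; from < items.size(); from += size) {
            int to = Math.min(from + size, items.size());
            // copy so each chunk stays valid even if the source list changes later
            out.add(Collections.unmodifiableList(new ArrayList<>(items.subList(from, to))));
        }
        return out;
    }
}
```

Each chunk would then become one `updateStatusInBatch` request, so a single call never carries more than `size` statements.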

PaymentBean.java

/**
 * System project name org.mk.demo.downstreamsystem.vo PaymentBean.java
 *
 * Feb 1, 2022-11:12:17 PM 2022XX Company-Copyright
 */
package org.mk.demo.downstreamsystem.vo;

import java.io.Serializable;

/**
 * PaymentBean: one row of the sky_payment table (pay_id, status, transfer amount).
 *
 * Feb 1, 2022 11:12:17 PM
 *
 * @version 1.0.0
 */
public class PaymentBean implements Serializable {

    private int payId;

    private int status;

    private double transfAmount = 0;

    public int getPayId() {
        return payId;
    }

    public void setPayId(int payId) {
        this.payId = payId;
    }

    public int getStatus() {
        return status;
    }

    public void setStatus(int status) {
        this.status = status;
    }

    public double getTransfAmount() {
        return transfAmount;
    }

    public void setTransfAmount(double transfAmount) {
        this.transfAmount = transfAmount;
    }
}

application.yml of SkyDownStreamSystem

mysql:
  datasource:
    db:
      type: com.alibaba.druid.pool.DruidDataSource
      driverClassName: com.mysql.jdbc.Driver
      minIdle: 50
      initialSize: 50
      maxActive: 300
      maxWait: 1000
      testOnBorrow: false
      testOnReturn: true
      testWhileIdle: true
      validationQuery: select 1
      validationQueryTimeout: 1
      timeBetweenEvictionRunsMillis: 5000
      ConnectionErrorRetryAttempts: 3
      NotFullTimeoutRetryCount: 3
      numTestsPerEvictionRun: 10
      minEvictableIdleTimeMillis: 480000
      maxEvictableIdleTimeMillis: 480000
      keepAliveBetweenTimeMillis: 480000
      keepalive: true
      poolPreparedStatements: true
      maxPoolPreparedStatementPerConnectionSize: 512
      maxOpenPreparedStatements: 512
    master: #master db
      type: com.alibaba.druid.pool.DruidDataSource
      driverClassName: com.mysql.jdbc.Driver
      url: jdbc:mysql://localhost:3306/ecom?useUnicode=true&characterEncoding=utf-8&useSSL=false&useAffectedRows=true&autoReconnect=true&allowMultiQueries=true
      username: root
      password: 111111
    slaver: #slaver db
      type: com.alibaba.druid.pool.DruidDataSource
      driverClassName: com.mysql.jdbc.Driver
      url: jdbc:mysql://localhost:3307/ecom?useUnicode=true&characterEncoding=utf-8&useSSL=false&useAffectedRows=true&autoReconnect=true&allowMultiQueries=true
      username: root
      password: 111111
server:
  port: 9082
  servlet:
    context-path: /skydownstreamsystem
  tomcat:
    max-http-post-size: -1
    accept-count: 1000
    max-threads: 1000
    min-spare-threads: 150
    max-connections: 10000
    max-http-header-size: 10240000
spring:
  application:
    name: skydownstreamsystem
  servlet:
    multipart:
      max-file-size: 10MB
      max-request-size: 10MB

SkyDownStream's pom.xml (its parent pom.xml is shared with the SkyPayment project)

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<parent>

<groupId>org.mk.demo</groupId>

<artifactId>springboot-demo</artifactId>

<version>0.0.1</version>

</parent>

<artifactId>skydownstreamsystem</artifactId>

<dependencies>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web</artifactId>

<version>${spring-boot.version}</version>

<exclusions>

<exclusion>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-log4j12</artifactId>

</exclusion>

<exclusion>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-logging</artifactId>

</exclusion>

</exclusions>

</dependency>

<!-- mq must -->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-amqp</artifactId>

<exclusions>

<exclusion>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-logging</artifactId>

</exclusion>

</exclusions>

</dependency>

<!-- mysql must -->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-jdbc</artifactId>

<exclusions>

<exclusion>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-logging</artifactId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

</dependency>

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>druid</artifactId>

</dependency>

<!-- redis must -->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-data-redis</artifactId>

<exclusions>

<exclusion>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-logging</artifactId>

</exclusion>

<exclusion>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-log4j12</artifactId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.mybatis.spring.boot</groupId>

<artifactId>mybatis-spring-boot-starter</artifactId>

</dependency>

<!-- jedis must -->

<dependency>

<groupId>redis.clients</groupId>

<artifactId>jedis</artifactId>

</dependency>

<!--redisson must start -->

<dependency>

<groupId>org.redisson</groupId>

<artifactId>redisson-spring-boot-starter</artifactId>

<version>3.13.6</version>

<exclusions>

<exclusion>

<groupId>org.redisson</groupId>

<artifactId>redisson-spring-data-23</artifactId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.apache.commons</groupId>

<artifactId>commons-lang3</artifactId>

</dependency>

<!--redisson must end -->

<dependency>

<groupId>org.redisson</groupId>

<artifactId>redisson-spring-data-21</artifactId>

<version>3.13.1</version>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-test</artifactId>

<scope>test</scope>

<exclusions>

<exclusion>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-logging</artifactId>

</exclusion>

<exclusion>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-log4j12</artifactId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-log4j2</artifactId>

<exclusions>

<exclusion>

<groupId>org.apache.logging.log4j</groupId>

<artifactId>log4j-core</artifactId>

</exclusion>

<exclusion>

<groupId>org.apache.logging.log4j</groupId>

<artifactId>log4j-api</artifactId>

</exclusion>

</exclusions>

</dependency>

<!-- Exclude the old log4j2 bundled with the starter and upgrade to ${log4j2.version} (2.17.1): start -->

<dependency>

<groupId>org.apache.logging.log4j</groupId>

<artifactId>log4j-api</artifactId>

<version>${log4j2.version}</version>

</dependency>

<dependency>

<groupId>org.apache.logging.log4j</groupId>

<artifactId>log4j-core</artifactId>

<version>${log4j2.version}</version>

</dependency>

<!-- log4j2 upgrade to ${log4j2.version}: end -->

<dependency>

<groupId>org.aspectj</groupId>

<artifactId>aspectjweaver</artifactId>

</dependency>

<dependency>

<groupId>com.lmax</groupId>

<artifactId>disruptor</artifactId>

</dependency>

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>fastjson</artifactId>

</dependency>

<dependency>

<groupId>com.fasterxml.jackson.core</groupId>

<artifactId>jackson-databind</artifactId>

</dependency>

<dependency>

<groupId>com.google.guava</groupId>

<artifactId>guava</artifactId>

</dependency>

<!-- Project Common Framework -->

<dependency>

<groupId>org.mk.demo</groupId>

<artifactId>common-util</artifactId>

<version>${common-util.version}</version>

</dependency>

<dependency>

<groupId>org.mk.demo</groupId>

<artifactId>db-common</artifactId>

<version>${db-common.version}</version>

</dependency>

</dependencies>

</project>

skyDownStream - how to call the downstream system while it is running

Sample Request Message

[

{

"payId": "1",

"status": "6"

},

{

"payId": "2",

"status": "6"

},

{

"payId": "3",

"status": "6"

},

{

"payId": "4",

"status": "6"

},

{

"payId": "5",

"status": "6"

},

{

"payId": "10",

"status": "6"

}

]

sky_payment table structure

CREATE TABLE `sky_payment` (
  `pay_id` int(11) NOT NULL AUTO_INCREMENT,
  `status` tinyint(3) DEFAULT NULL,
  `transf_amount` varchar(45) DEFAULT NULL,
  `created_date` timestamp NULL DEFAULT CURRENT_TIMESTAMP,
  `updated_date` timestamp NULL DEFAULT NULL ON UPDATE CURRENT_TIMESTAMP,
  PRIMARY KEY (`pay_id`)
) ENGINE=InnoDB AUTO_INCREMENT=1 DEFAULT CHARSET=utf8mb4;

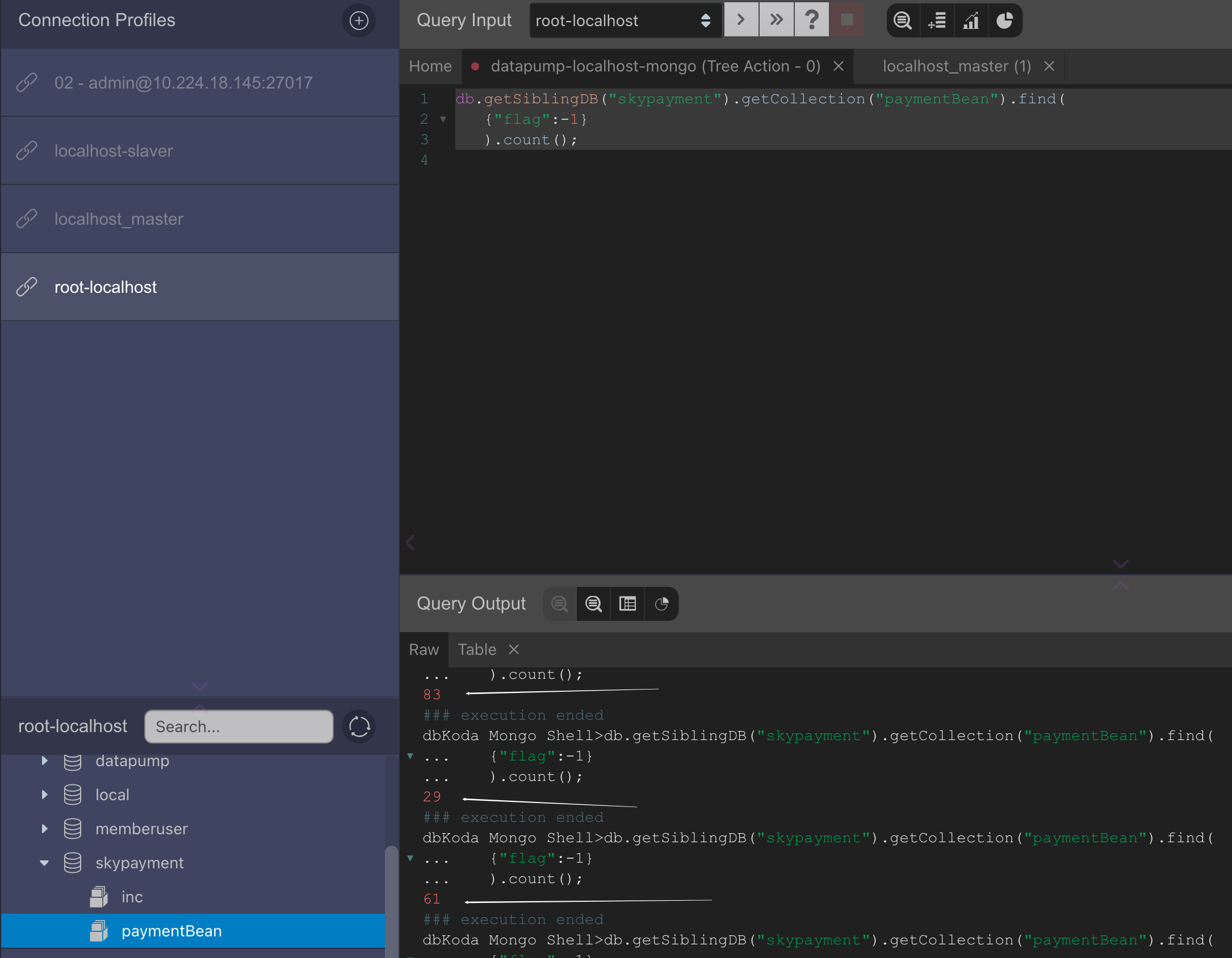

I wrote a stored procedure and fed more than 8 million rows into this table. Once a table holds more than about 5 million rows, badly done batch operations like these easily cause database deadlocks, master-slave replication lag, and long-running transactions. This setup can therefore fully simulate the online production environment.

In fact, the production tables involved in these batch runs hold less data than I simulated here.
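With 8 million rows, one way to avoid long transactions and lock contention is to walk the table in primary-key ranges and commit each range separately. For example, you could run `UPDATE sky_payment SET status = ? WHERE pay_id BETWEEN ? AND ?` once per range. The helper below only computes the ranges. `IdRanges` and the step of 10,000 are hypothetical, and gaps in `pay_id` simply make some ranges contain fewer rows.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper: split [minId, maxId] into inclusive primary-key ranges of at most `step` ids. */
final class IdRanges {
    static List<long[]> split(long minId, long maxId, long step) {
        if (step < 1) {
            throw new IllegalArgumentException("step must be >= 1");
        }
        List<long[]> ranges = new ArrayList<>();
        for (long lo = minId; lo <= maxId; lo += step) {
            long hi = Math.min(lo + step - 1, maxId);
            // each range would become one short transaction, e.g.
            // UPDATE sky_payment SET status = ? WHERE pay_id BETWEEN lo AND hi
            ranges.add(new long[] {lo, hi});
            if (hi == maxId) {
                break; // last range done
            }
        }
        return ranges;
    }
}
```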

Detailed design - RabbitMQ solution

The SkyDownStream downstream system is done. The focus now is SkyPayment, which simulates our middle-platform/e-commerce trading system.

Let's start with RabbitMQ + controlled consumption + controlled threads.

Start with the parent pom shared by the SkyPayment and SkyDownStream systems.

parent pom.xml

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>org.mk.demo</groupId>

<artifactId>springboot-demo</artifactId>

<version>0.0.1</version>

<packaging>pom</packaging>

<properties>

<java.version>1.8</java.version>

<jacoco.version>0.8.3</jacoco.version>

<aldi-sharding.version>0.0.1</aldi-sharding.version>

<spring-boot.version>2.4.2</spring-boot.version>

<!-- <spring-boot.version>2.3.1.RELEASE</spring-boot.version> -->

<!-- spring-boot.version>2.0.6.RELEASE</spring-boot.version> <spring-cloud-zk-discovery.version>2.1.3.RELEASE</spring-cloud-zk-discovery.version -->

<zookeeper.version>3.4.13</zookeeper.version>

<!-- <spring-cloud.version>Greenwich.SR5</spring-cloud.version> -->

<spring-cloud.version>2020.0.1</spring-cloud.version>

<dubbo.version>2.7.3</dubbo.version>

<curator-framework.version>4.0.1</curator-framework.version>

<curator-recipes.version>2.8.0</curator-recipes.version>

<!-- druid.version>1.1.20</druid.version -->

<druid.version>1.2.6</druid.version>

<guava.version>27.0.1-jre</guava.version>

<fastjson.version>1.2.59</fastjson.version>

<dubbo-registry-nacos.version>2.7.3</dubbo-registry-nacos.version>

<nacos-client.version>1.1.4</nacos-client.version>

<!-- mysql-connector-java.version>8.0.13</mysql-connector-java.version -->

<mysql-connector-java.version>5.1.46</mysql-connector-java.version>

<disruptor.version>3.4.2</disruptor.version>

<aspectj.version>1.8.13</aspectj.version>

<spring.data.redis>1.8.14-RELEASE</spring.data.redis>

<seata.version>1.0.0</seata.version>

<netty.version>4.1.42.Final</netty.version>

<nacos.spring.version>0.1.4</nacos.spring.version>

<lombok.version>1.16.22</lombok.version>

<javax.servlet.version>3.1.0</javax.servlet.version>

<mybatis.version>2.1.0</mybatis.version>

<pagehelper-mybatis.version>1.2.3</pagehelper-mybatis.version>

<spring.kafka.version>1.3.10.RELEASE</spring.kafka.version>

<kafka.client.version>1.0.2</kafka.client.version>

<shardingsphere.jdbc.version>4.0.0</shardingsphere.jdbc.version>

<xmemcached.version>2.4.6</xmemcached.version>

<swagger.version>2.9.2</swagger.version>

<swagger.bootstrap.ui.version>1.9.6</swagger.bootstrap.ui.version>

<swagger.model.version>1.5.23</swagger.model.version>

<swagger-annotations.version>1.5.22</swagger-annotations.version>

<swagger-models.version>1.5.22</swagger-models.version>

<swagger-bootstrap-ui.version>1.9.5</swagger-bootstrap-ui.version>

<sky-sharding-jdbc.version>0.0.1</sky-sharding-jdbc.version>

<cxf.version>3.1.6</cxf.version>

<jackson-databind.version>2.11.1</jackson-databind.version>

<gson.version>2.8.6</gson.version>

<groovy.version>2.5.8</groovy.version>

<logback-ext-spring.version>0.1.4</logback-ext-spring.version>

<jcl-over-slf4j.version>1.7.25</jcl-over-slf4j.version>

<spock-spring.version>2.0-M2-groovy-2.5</spock-spring.version>

<xxljob.version>2.2.0</xxljob.version>

<java-jwt.version>3.10.0</java-jwt.version>

<commons-lang.version>2.6</commons-lang.version>

<hutool-crypto.version>5.0.0</hutool-crypto.version>

<maven.compiler.source>${java.version}</maven.compiler.source>

<maven.compiler.target>${java.version}</maven.compiler.target>

<compiler.plugin.version>3.8.1</compiler.plugin.version>

<war.plugin.version>3.2.3</war.plugin.version>

<jar.plugin.version>3.1.1</jar.plugin.version>

<quartz.version>2.2.3</quartz.version>

<h2.version>1.4.197</h2.version>

<zkclient.version>3.4.14</zkclient.version>

<httpcore.version>4.4.10</httpcore.version>

<httpclient.version>4.5.6</httpclient.version>

<mockito-core.version>3.0.0</mockito-core.version>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

<project.reporting.outputEncoding>UTF-8</project.reporting.outputEncoding>

<oseq-aldi.version>2.0.22-RELEASE</oseq-aldi.version>

<poi.version>4.1.0</poi.version>

<poi-ooxml.version>4.1.0</poi-ooxml.version>

<poi-ooxml-schemas.version>4.1.0</poi-ooxml-schemas.version>

<dom4j.version>1.6.1</dom4j.version>

<xmlbeans.version>3.1.0</xmlbeans.version>

<nacos-discovery.version>2.2.5.RELEASE</nacos-discovery.version>

<spring-cloud-alibaba.version>2.2.1.RELEASE</spring-cloud-alibaba.version>

<redission.version>3.16.1</redission.version>

<log4j2.version>2.17.1</log4j2.version>

<redis-common.version>0.0.1</redis-common.version>

<common-util.version>0.0.1</common-util.version>

<db-common.version>0.0.1</db-common.version>

<httptools-common.version>0.0.1</httptools-common.version>

</properties>

<dependencyManagement>

<dependencies>

<!-- redis -->

<!-- Redisson (required) -->

<dependency>

<groupId>org.redisson</groupId>

<artifactId>redisson-spring-boot-starter</artifactId>

<version>${redission.version}</version>

<!-- <exclusions> <exclusion> <groupId>org.redisson</groupId> <artifactId>redisson-spring-data-23</artifactId>

</exclusion> </exclusions> -->

</dependency>

<dependency>

<groupId>org.redisson</groupId>

<artifactId>redisson-spring-data-21</artifactId>

<version>${redission.version}</version>

</dependency>

<dependency>

<groupId>com.alibaba.cloud</groupId>

<artifactId>spring-cloud-alibaba-dependencies</artifactId>

<version>${spring-cloud-alibaba.version}</version>

<type>pom</type>

<scope>import</scope>

</dependency>

<dependency>

<groupId>com.alibaba.cloud</groupId>

<artifactId>spring-cloud-starter-alibaba-nacos-discovery</artifactId>

<version>${nacos-discovery.version}</version>

</dependency>

<dependency>

<groupId>com.aldi.jdbc</groupId>

<artifactId>sharding</artifactId>

<version>${aldi-sharding.version}</version>

</dependency>

<!-- jwt -->

<dependency>

<groupId>com.auth0</groupId>

<artifactId>java-jwt</artifactId>

<version>${java-jwt.version}</version>

</dependency>

<dependency>

<groupId>cn.hutool</groupId>

<artifactId>hutool-crypto</artifactId>

<version>${hutool-crypto.version}</version>

</dependency>

<!-- POI (required): start -->

<dependency>

<groupId>org.apache.poi</groupId>

<artifactId>poi</artifactId>

<version>${poi.version}</version>

</dependency>

<dependency>

<groupId>org.apache.poi</groupId>

<artifactId>poi-ooxml</artifactId>

<version>${poi-ooxml.version}</version>

</dependency>

<dependency>

<groupId>org.apache.poi</groupId>

<artifactId>poi-ooxml-schemas</artifactId>

<version>${poi-ooxml-schemas.version}</version>

</dependency>

<dependency>

<groupId>dom4j</groupId>

<artifactId>dom4j</artifactId>

<version>${dom4j.version}</version>

</dependency>

<dependency>

<groupId>org.apache.xmlbeans</groupId>

<artifactId>xmlbeans</artifactId>

<version>${xmlbeans.version}</version>

</dependency>

<!-- POI (required): end -->

<dependency>

<groupId>com.odianyun.architecture</groupId>

<artifactId>oseq-aldi</artifactId>

<version>${oseq-aldi.version}</version>

</dependency>

<dependency>

<groupId>org.apache.httpcomponents</groupId>

<artifactId>httpcore</artifactId>

<version>${httpcore.version}</version>

</dependency>

<dependency>

<groupId>org.apache.httpcomponents</groupId>

<artifactId>httpclient</artifactId>

<version>${httpclient.version}</version>

</dependency>

<dependency>

<groupId>org.apache.zookeeper</groupId>

<artifactId>zookeeper</artifactId>

<version>${zkclient.version}</version>

<exclusions>

<exclusion>

<artifactId>log4j</artifactId>

<groupId>log4j</groupId>

</exclusion>

<exclusion>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-log4j12</artifactId>

</exclusion>

</exclusions>

</dependency>

<!-- Quartz dependencies -->

<dependency>

<groupId>org.quartz-scheduler</groupId>

<artifactId>quartz</artifactId>

<version>${quartz.version}</version>

</dependency>

<dependency>

<groupId>org.quartz-scheduler</groupId>

<artifactId>quartz-jobs</artifactId>

<version>${quartz.version}</version>

</dependency>

<!-- spring cloud base -->

<dependency>

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-dependencies</artifactId>

<version>Hoxton.SR7</version>

<type>pom</type>

<scope>import</scope>

</dependency>

<!-- Use mockito -->

<dependency>

<groupId>org.mockito</groupId>

<artifactId>mockito-core</artifactId>

<version>${mockito-core.version}</version>

<scope>test</scope>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-actuator</artifactId>

<version>${spring-boot.version}</version>

</dependency>

<!-- Logback -->

<dependency>

<groupId>org.logback-extensions</groupId>

<artifactId>logback-ext-spring</artifactId>

<version>${logback-ext-spring.version}</version>

</dependency>

<dependency>

<groupId>org.slf4j</groupId>

<artifactId>jcl-over-slf4j</artifactId>

<version>${jcl-over-slf4j.version}</version>

</dependency>

<!-- Database for unit testing -->

<dependency>

<groupId>com.h2database</groupId>

<artifactId>h2</artifactId>

<version>${h2.version}</version>

</dependency>

<!-- xxl-job-core -->

<dependency>

<groupId>com.xuxueli</groupId>

<artifactId>xxl-job-core</artifactId>

<version>${xxljob.version}</version>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-test</artifactId>

<version>${spring-boot.version}</version>

<scope>test</scope>

<exclusions>

<exclusion>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-logging</artifactId>

</exclusion>

<exclusion>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-log4j12</artifactId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.spockframework</groupId>

<artifactId>spock-core</artifactId>

<version>1.3-groovy-2.4</version>

<scope>test</scope>

</dependency>

<dependency>

<groupId>org.spockframework</groupId>

<artifactId>spock-spring</artifactId>

<version>1.3-RC1-groovy-2.4</version>

<scope>test</scope>

</dependency>

<dependency>

<groupId>org.codehaus.groovy</groupId>

<artifactId>groovy-all</artifactId>

<version>2.4.6</version>

</dependency>

<dependency>

<groupId>com.google.code.gson</groupId>

<artifactId>gson</artifactId>

<version>${gson.version}</version>

</dependency>

<dependency>

<groupId>com.fasterxml.jackson.core</groupId>

<artifactId>jackson-databind</artifactId>

<version>${jackson-databind.version}</version>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web-services</artifactId>

<version>${spring-boot.version}</version>

</dependency>

<dependency>

<groupId>org.apache.cxf</groupId>

<artifactId>cxf-rt-frontend-jaxws</artifactId>

<version>${cxf.version}</version>

</dependency>

<dependency>

<groupId>org.apache.cxf</groupId>

<artifactId>cxf-rt-transports-http</artifactId>

<version>${cxf.version}</version>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-security</artifactId>

<version>${spring-boot.version}</version>

</dependency>

<dependency>

<groupId>io.github.swagger2markup</groupId>

<artifactId>swagger2markup</artifactId>

<version>1.3.1</version>

</dependency>

<dependency>

<groupId>io.springfox</groupId>

<artifactId>springfox-swagger2</artifactId>

<version>${swagger.version}</version>

</dependency>

<dependency>

<groupId>io.springfox</groupId>

<artifactId>springfox-swagger-ui</artifactId>

<version>${swagger.version}</version>

</dependency>

<dependency>

<groupId>com.github.xiaoymin</groupId>

<artifactId>swagger-bootstrap-ui</artifactId>

<version>${swagger-bootstrap-ui.version}</version>

</dependency>

<dependency>

<groupId>io.swagger</groupId>

<artifactId>swagger-annotations</artifactId>

<version>${swagger-annotations.version}</version>

</dependency>

<dependency>

<groupId>io.swagger</groupId>

<artifactId>swagger-models</artifactId>

<version>${swagger-models.version}</version>

</dependency>

<dependency>

<groupId>org.sky</groupId>

<artifactId>sky-sharding-jdbc</artifactId>

<version>${sky-sharding-jdbc.version}</version>

</dependency>

<dependency>

<groupId>com.googlecode.xmemcached</groupId>

<artifactId>xmemcached</artifactId>

<version>${xmemcached.version}</version>

</dependency>

<dependency>

<groupId>org.apache.shardingsphere</groupId>

<artifactId>sharding-jdbc-core</artifactId>

<version>${shardingsphere.jdbc.version}</version>

</dependency>

<!-- <dependency> <groupId>org.springframework.kafka</groupId> <artifactId>spring-kafka</artifactId>

<version>${spring.kafka.version}</version> </dependency> <dependency> <groupId>org.apache.kafka</groupId>

<artifactId>kafka-clients</artifactId> <version>${kafka.client.version}</version>

</dependency> -->

<dependency>

<groupId>org.springframework.kafka</groupId>

<artifactId>spring-kafka</artifactId>

<version>${spring.kafka.version}</version>

</dependency>

<dependency>

<groupId>org.mybatis.spring.boot</groupId>

<artifactId>mybatis-spring-boot-starter</artifactId>

<version>${mybatis.version}</version>

</dependency>

<dependency>

<groupId>com.github.pagehelper</groupId>

<artifactId>pagehelper-spring-boot-starter</artifactId>

<version>${pagehelper-mybatis.version}</version>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web</artifactId>

<version>${spring-boot.version}</version>

<exclusions>

<exclusion>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-log4j12</artifactId>

</exclusion>

<exclusion>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-logging</artifactId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-dependencies</artifactId>

<version>${spring-boot.version}</version>

<type>pom</type>

<scope>import</scope>

</dependency>

<dependency>

<groupId>org.apache.dubbo</groupId>

<artifactId>dubbo-spring-boot-starter</artifactId>

<version>${dubbo.version}</version>

<exclusions>

<exclusion>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-log4j12</artifactId>

</exclusion>

<exclusion>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-logging</artifactId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.apache.dubbo</groupId>

<artifactId>dubbo</artifactId>

<version>${dubbo.version}</version>

<exclusions>

<exclusion>

<groupId>javax.servlet</groupId>

<artifactId>servlet-api</artifactId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.apache.curator</groupId>

<artifactId>curator-framework</artifactId>

<version>${curator-framework.version}</version>

</dependency>

<dependency>

<groupId>org.apache.curator</groupId>

<artifactId>curator-recipes</artifactId>

<version>${curator-recipes.version}</version>

</dependency>

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

<version>${mysql-connector-java.version}</version>

</dependency>

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>druid</artifactId>

<version>${druid.version}</version>

</dependency>

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>druid-spring-boot-starter</artifactId>

<version>${druid.version}</version>

</dependency>

<dependency>

<groupId>com.lmax</groupId>

<artifactId>disruptor</artifactId>

<version>${disruptor.version}</version>

</dependency>

<dependency>

<groupId>com.google.guava</groupId>

<artifactId>guava</artifactId>

<version>${guava.version}</version>

</dependency>

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>fastjson</artifactId>

<version>${fastjson.version}</version>

</dependency>

<dependency>

<groupId>org.apache.dubbo</groupId>

<artifactId>dubbo-registry-nacos</artifactId>

<version>${dubbo-registry-nacos.version}</version>

</dependency>

<dependency>

<groupId>com.alibaba.nacos</groupId>

<artifactId>nacos-client</artifactId>

<version>${nacos-client.version}</version>

</dependency>

<dependency>

<groupId>org.aspectj</groupId>

<artifactId>aspectjweaver</artifactId>

<version>${aspectj.version}</version>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-data-redis</artifactId>

<version>${spring-boot.version}</version>

</dependency>

<dependency>

<groupId>io.seata</groupId>

<artifactId>seata-all</artifactId>

<version>${seata.version}</version>

</dependency>

<dependency>

<groupId>io.netty</groupId>

<artifactId>netty-all</artifactId>

<version>${netty.version}</version>

</dependency>

<dependency>

<groupId>org.projectlombok</groupId>

<artifactId>lombok</artifactId>

<version>${lombok.version}</version>

</dependency>

<!-- https://mvnrepository.com/artifact/com.alibaba.boot/nacos-config-spring-boot-starter -->

<dependency>

<groupId>com.alibaba.boot</groupId>

<artifactId>nacos-config-spring-boot-starter</artifactId>

<version>${nacos.spring.version}</version>

<exclusions>

<exclusion>

<artifactId>nacos-client</artifactId>

<groupId>com.alibaba.nacos</groupId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>net.sourceforge.groboutils</groupId>

<artifactId>groboutils-core</artifactId>

<version>5</version>

</dependency>

<dependency>

<groupId>commons-lang</groupId>

<artifactId>commons-lang</artifactId>

<version>${commons-lang.version}</version>

</dependency>

</dependencies>

</dependencyManagement>

<modules>

<module>redis-common</module>

<module>common-util</module>

<module>db-common</module>

<module>skypayment</module>

<module>skydownstreamsystem</module>

<module>httptools-common</module>

</modules>

</project>

SkyPayment's pom.xml file

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<parent>

<groupId>org.mk.demo</groupId>

<artifactId>springboot-demo</artifactId>

<version>0.0.1</version>

</parent>

<artifactId>skypayment</artifactId>

<dependencies>

<dependency>

<groupId>io.netty</groupId>

<artifactId>netty-all</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web</artifactId>

<version>${spring-boot.version}</version>

<exclusions>

<exclusion>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-log4j12</artifactId>

</exclusion>

<exclusion>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-logging</artifactId>

</exclusion>

</exclusions>

</dependency>

<!-- quartz dependencies -->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-quartz</artifactId>

<exclusions>

<exclusion>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-logging</artifactId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.quartz-scheduler</groupId>

<artifactId>quartz</artifactId>

</dependency>

<dependency>

<groupId>org.quartz-scheduler</groupId>

<artifactId>quartz-jobs</artifactId>

</dependency>

<!-- RabbitMQ (required) -->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-amqp</artifactId>

<exclusions>

<exclusion>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-logging</artifactId>

</exclusion>

</exclusions>

</dependency>

<!-- MySQL (required) -->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-jdbc</artifactId>

<exclusions>

<exclusion>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-logging</artifactId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

</dependency>

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>druid</artifactId>

</dependency>

<!-- Redis (required) -->

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-data-redis</artifactId>

<exclusions>

<exclusion>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-logging</artifactId>

</exclusion>

<exclusion>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-log4j12</artifactId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.mybatis.spring.boot</groupId>

<artifactId>mybatis-spring-boot-starter</artifactId>

</dependency>

<!-- Jedis (required) -->

<dependency>

<groupId>redis.clients</groupId>

<artifactId>jedis</artifactId>

</dependency>

<!-- Redisson (required) -->

<dependency>

<groupId>org.redisson</groupId>

<artifactId>redisson-spring-boot-starter</artifactId>

<version>${redission.version}</version>

<!-- <exclusions> <exclusion> <groupId>org.redisson</groupId> <artifactId>redisson-spring-data-23</artifactId>

</exclusion> </exclusions> -->

</dependency>

<dependency>

<groupId>org.redisson</groupId>

<artifactId>redisson-spring-data-21</artifactId>

<version>${redission.version}</version>

</dependency>

<dependency>

<groupId>org.apache.commons</groupId>

<artifactId>commons-lang3</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-test</artifactId>

<scope>test</scope>

<exclusions>

<exclusion>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-logging</artifactId>

</exclusion>

<exclusion>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-log4j12</artifactId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-log4j2</artifactId>

<exclusions>

<exclusion>

<groupId>org.apache.logging.log4j</groupId>

<artifactId>log4j-core</artifactId>

</exclusion>

<exclusion>

<groupId>org.apache.logging.log4j</groupId>

<artifactId>log4j-api</artifactId>

</exclusion>

</exclusions>

</dependency>

<!-- The log4j2 bundled with the starter is excluded above; upgrade log4j-api and log4j-core to 2.17.1 -->

<dependency>

<groupId>org.apache.logging.log4j</groupId>

<artifactId>log4j-api</artifactId>

<version>${log4j2.version}</version>

</dependency>

<dependency>

<groupId>org.apache.logging.log4j</groupId>

<artifactId>log4j-core</artifactId>

<version>${log4j2.version}</version>

</dependency>

<!-- End of log4j2 upgrade -->

<dependency>

<groupId>org.aspectj</groupId>

<artifactId>aspectjweaver</artifactId>

</dependency>

<dependency>

<groupId>com.lmax</groupId>

<artifactId>disruptor</artifactId>

</dependency>

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>fastjson</artifactId>

</dependency>

<dependency>

<groupId>com.fasterxml.jackson.core</groupId>

<artifactId>jackson-databind</artifactId>

</dependency>

<dependency>

<groupId>com.google.guava</groupId>

<artifactId>guava</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-data-mongodb</artifactId>

</dependency>

<!-- Project Common Framework -->

<dependency>

<groupId>org.mk.demo</groupId>

<artifactId>redis-common</artifactId>

<version>${redis-common.version}</version>

</dependency>

<dependency>

<groupId>org.mk.demo</groupId>

<artifactId>common-util</artifactId>

<version>${common-util.version}</version>

</dependency>

<dependency>

<groupId>org.mk.demo</groupId>

<artifactId>db-common</artifactId>

<version>${db-common.version}</version>

</dependency>

<dependency>

<groupId>org.mk.demo</groupId>

<artifactId>httptools-common</artifactId>

<version>${httptools-common.version}</version>

</dependency>

</dependencies>

</project>

PaymentController.java

This controller exposes the callback endpoint that receives the large volume of concurrent requests pushed by JMeter.

/**

* System project name org.mk.demo.skypayment.controller PaymentController.java

*

* Feb 3, 2022-12:08:59 AM 2022XX Company-Copyright

*

*/

package org.mk.demo.skypayment.controller;

import javax.annotation.Resource;

import org.apache.logging.log4j.LogManager;

import org.apache.logging.log4j.Logger;

import org.mk.demo.util.response.ResponseBean;

import org.mk.demo.util.response.ResponseCodeEnum;

import org.mk.demo.skypayment.service.Publisher;

import org.mk.demo.skypayment.vo.PaymentBean;

import org.springframework.web.bind.annotation.PostMapping;

import org.springframework.web.bind.annotation.RequestBody;

import org.springframework.web.bind.annotation.ResponseBody;

import org.springframework.web.bind.annotation.RestController;

import reactor.core.publisher.Mono;

/**

*

* PaymentController

*

*

* Feb 3, 2022 12:08:59 AM

*

* @version 1.0.0

*

*/

@RestController

public class PaymentController {

private Logger logger = LogManager.getLogger(this.getClass());

@Resource

private Publisher publisher;

@PostMapping(value = "/updateStatus", produces = "application/json")

@ResponseBody

public Mono<ResponseBean> updateStatus(@RequestBody PaymentBean payment) {

ResponseBean resp = new ResponseBean();

try {

publisher.publishPaymentStatusChange(payment);

resp = new ResponseBean(ResponseCodeEnum.SUCCESS.getCode(), "success");

} catch (Exception e) {

resp = new ResponseBean(ResponseCodeEnum.FAIL.getCode(), "system error");

logger.error(">>>>>>updateStatus error: " + e.getMessage(), e);

}

return Mono.just(resp);

}

}

This endpoint receives the burst of data that JMeter pushes in.

Publisher.java

As the controller shows, every incoming request writes one message into MQ (RabbitMQ).

/**

* System project name org.mk.demo.rabbitmqdemo.service Publisher.java

*

* Nov 19, 2021-11:38:39 AM 2021XX Company-Copyright

*

*/

package org.mk.demo.skypayment.service;

import org.mk.demo.skypayment.config.mq.RabbitMqConfig;

import org.mk.demo.skypayment.vo.PaymentBean;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.springframework.amqp.rabbit.core.RabbitTemplate;

import org.springframework.amqp.support.converter.Jackson2JsonMessageConverter;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.stereotype.Component;

/**

*

* Publisher

*

*

* Nov 19, 2021 11:38:39 AM

*

* @version 1.0.0

*

*/

@Component

public class Publisher {

private Logger logger = LoggerFactory.getLogger(this.getClass());

@Autowired

private RabbitTemplate rabbitTemplate;

public void publishPaymentStatusChange(PaymentBean payment) {

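try {

// The RabbitTemplate bean defined in RabbitMqConfig already uses Jackson2JsonMessageConverter,
// so the shared template is not reconfigured on every publish.
rabbitTemplate.convertAndSend(RabbitMqConfig.PAYMENT_EXCHANGE, RabbitMqConfig.PAYMENT_QUEUE, payment);

} catch (RuntimeException ex) {

logger.error(">>>>>>publish exception: " + ex.getMessage(), ex);

// Rethrow so that PaymentController returns "system error" instead of "success".
throw ex;

}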

}

}
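The controller only enqueues and returns; the consumers drain the queue at their own pace. That separation is what keeps a downstream stall from reaching the HTTP callers. Below is a minimal in-memory sketch of the idea. It assumes a bounded `ArrayBlockingQueue` as a stand-in for `payment.queue`, and `PeakShavingSketch`, `accept` and `drainWith` are hypothetical names, not project code. The fixed capacity is also an assumption: the project's queue sets no length limit.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical stand-in for controller -> payment.queue -> consumers (not the project's code).
public class PeakShavingSketch {
    private final BlockingQueue<Integer> buffer;            // plays the role of payment.queue
    private final AtomicInteger processed = new AtomicInteger();

    public PeakShavingSketch(int capacity) {
        this.buffer = new ArrayBlockingQueue<>(capacity);
    }

    /** Like the controller: enqueue and return at once; false means the buffer is full. */
    public boolean accept(int paymentId) {
        return buffer.offer(paymentId);
    }

    /** Like the listener container: a fixed number of workers drain the buffer at their own pace. */
    public int drainWith(int workers) throws InterruptedException {
        ExecutorService pool = Executors.newFixedThreadPool(workers);
        for (int i = 0; i < workers; i++) {
            pool.submit(() -> {
                while (buffer.poll() != null) {
                    processed.incrementAndGet();            // the downstream call would happen here
                }
            });
        }
        pool.shutdown();
        pool.awaitTermination(10, TimeUnit.SECONDS);
        return processed.get();
    }

    public static void main(String[] args) throws InterruptedException {
        PeakShavingSketch sketch = new PeakShavingSketch(1_000);
        int accepted = 0;
        for (int i = 0; i < 1_200; i++) {                    // a burst larger than the buffer
            if (sketch.accept(i)) {
                accepted++;
            }
        }
        System.out.println("accepted=" + accepted + ", processed=" + sketch.drainWith(4));
    }
}
```

The burst is absorbed instantly on the accepting side. How fast messages leave the buffer depends only on the number of workers, not on how fast requests arrive.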

The corresponding RabbitTemplate configuration:

RabbitMqConfig.java

/**

* System project name org.mk.demo.rabbitmqdemo.config RabbitMqConfig.java

*

* Nov 19, 2021-11:30:43 AM 2021XX Company-Copyright

*

*/

package org.mk.demo.skypayment.config.mq;

import org.springframework.amqp.core.Binding;

import org.springframework.amqp.core.BindingBuilder;

import org.springframework.amqp.core.ExchangeBuilder;

import org.springframework.amqp.core.FanoutExchange;

import org.springframework.amqp.core.Queue;

import org.springframework.amqp.core.QueueBuilder;

import org.springframework.amqp.core.TopicExchange;

import org.springframework.amqp.rabbit.connection.ConnectionFactory;

import org.springframework.amqp.rabbit.core.RabbitTemplate;

import org.springframework.amqp.support.converter.Jackson2JsonMessageConverter;

import org.springframework.beans.factory.annotation.Value;

import org.springframework.context.annotation.Bean;

import org.springframework.stereotype.Component;

/**

*

* RabbitMqConfig

*

*

* Nov 19, 2021 11:30:43 AM

*

* @version 1.0.0

*

*/

@Component

public class RabbitMqConfig {

/**

* This setup mainly exercises a dead-letter queue, which is used here to achieve delayed consumption. A message is first sent to a normal queue that has a TTL; once the TTL expires, the broker automatically routes the message to the dead-letter queue, and a consumer then consumes it from there.

*/

public static final String PAYMENT_EXCHANGE = "payment.exchange";

public static final String PAYMENT_DL_EXCHANGE = "payment.dl.exchange";

public static final String PAYMENT_QUEUE = "payment.queue";

public static final String PAYMENT_DEAD_QUEUE = "payment.queue.dead";

public static final String PAYMENT_FANOUT_EXCHANGE = "paymentFanoutExchange";

/**

* Message TTL (x-message-ttl) of the normal queue, in milliseconds.

*/

@Value("${queue.expire:5000}")

private long queueExpire;

/**

* Create the regular exchange.

*/

@Bean

public TopicExchange paymentExchange() {

return (TopicExchange)ExchangeBuilder.topicExchange(PAYMENT_EXCHANGE).durable(true).build();

}

/**

* Create the dead-letter exchange.

*/

@Bean

public TopicExchange paymentExchangeDl() {

return (TopicExchange)ExchangeBuilder.topicExchange(PAYMENT_DL_EXCHANGE).durable(true).build();

}

/**

* Create a regular queue.

*/

@Bean

public Queue paymentQueue() {

return QueueBuilder.durable(PAYMENT_QUEUE).withArgument("x-dead-letter-exchange", PAYMENT_DL_EXCHANGE) // Set the dead-letter exchange

.withArgument("x-message-ttl", queueExpire).withArgument("x-dead-letter-routing-key", PAYMENT_DEAD_QUEUE) // Set the dead-letter routing key

.build();

}

/**

* Create a dead letter queue.

*/

@Bean

public Queue paymentDelayQueue() {

return QueueBuilder.durable(PAYMENT_DEAD_QUEUE).build();

}

/**

* Bind the dead-letter queue to the dead-letter exchange.

*/

@Bean

public Binding bindDeadBuilders() {

return BindingBuilder.bind(paymentDelayQueue()).to(paymentExchangeDl()).with(PAYMENT_DEAD_QUEUE);

}

/**

* Bind the regular queue to the regular exchange.

*

* @return

*/

@Bean

public Binding bindBuilders() {

return BindingBuilder.bind(paymentQueue()).to(paymentExchange()).with(PAYMENT_QUEUE);

}

/**

* Broadcast (fanout) exchange.

*

* @return

*/

@Bean

public FanoutExchange fanoutExchange() {

return new FanoutExchange(PAYMENT_FANOUT_EXCHANGE);

}

@Bean

public RabbitTemplate rabbitTemplate(final ConnectionFactory connectionFactory) {

final RabbitTemplate rabbitTemplate = new RabbitTemplate(connectionFactory);

rabbitTemplate.setMessageConverter(producerJackson2MessageConverter());

return rabbitTemplate;

}

@Bean

public Jackson2JsonMessageConverter producerJackson2MessageConverter() {

return new Jackson2JsonMessageConverter();

}

}
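The TTL and dead-letter routing described in the comment above can be modelled in plain Java to see which messages end up where. This is a hypothetical simulation, not how RabbitMQ is implemented. It assumes a single queue-wide TTL, as with `x-message-ttl` on `payment.queue`, and treats a message whose age is at least the TTL as expired. Timestamps are passed in explicitly so the result is deterministic.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

// Hypothetical in-memory model of a queue with x-message-ttl and a dead-letter target.
// It mirrors the observable routing only; it is not RabbitMQ's implementation.
public class TtlDeadLetterSketch {
    private static final class Entry {
        final String body;
        final long enqueuedAtMs;

        Entry(String body, long enqueuedAtMs) {
            this.body = body;
            this.enqueuedAtMs = enqueuedAtMs;
        }
    }

    private final long ttlMs;                                   // same unit as x-message-ttl: milliseconds
    private final Deque<Entry> normalQueue = new ArrayDeque<>();
    private final List<String> deadLetterQueue = new ArrayList<>();

    public TtlDeadLetterSketch(long ttlMs) {
        this.ttlMs = ttlMs;
    }

    public void publish(String body, long nowMs) {
        normalQueue.addLast(new Entry(body, nowMs));
    }

    /** With one TTL for the whole queue, messages expire in FIFO order, so checking the head is enough. */
    public void expire(long nowMs) {
        while (!normalQueue.isEmpty() && nowMs - normalQueue.peekFirst().enqueuedAtMs >= ttlMs) {
            deadLetterQueue.add(normalQueue.pollFirst().body);  // rerouted to the dead-letter queue
        }
    }

    /** A consumer on the normal queue: expired messages are no longer there to be consumed. */
    public String consume(long nowMs) {
        expire(nowMs);
        Entry head = normalQueue.pollFirst();
        return head == null ? null : head.body;
    }

    public List<String> deadLetters() {
        return deadLetterQueue;
    }

    public static void main(String[] args) {
        TtlDeadLetterSketch q = new TtlDeadLetterSketch(5_000); // the queue.expire default
        q.publish("payment-1", 0);
        q.publish("payment-2", 3_000);
        System.out.println(q.consume(6_000));                   // payment-2
        System.out.println(q.deadLetters());                    // [payment-1]
    }
}
```

At t = 6000 ms, `payment-1` is 6 s old and has already been dead-lettered. `payment-2` is only 3 s old, so the normal consumer still receives it.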

RabbitMqListenerConfig.java

/**

* System project name org.mk.demo.skypayment.config.mq RabbitMqListenerConfig.java

*

* Feb 3, 2022-12:35:21 AM 2022XX Company-Copyright

*

*/

package org.mk.demo.skypayment.config.mq;

import org.springframework.amqp.rabbit.annotation.RabbitListenerConfigurer;

import org.springframework.amqp.rabbit.listener.RabbitListenerEndpointRegistrar;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

import org.springframework.messaging.converter.MappingJackson2MessageConverter;

import org.springframework.messaging.handler.annotation.support.DefaultMessageHandlerMethodFactory;

import org.springframework.messaging.handler.annotation.support.MessageHandlerMethodFactory;

/**

*

* RabbitMqListenerConfig

*

*

* Feb 3, 2022 12:35:21 AM

*

* @version 1.0.0

*

*/

@Configuration

public class RabbitMqListenerConfig implements RabbitListenerConfigurer {

/* (non-Javadoc)

* @see org.springframework.amqp.rabbit.annotation.RabbitListenerConfigurer#configureRabbitListeners(org.springframework.amqp.rabbit.listener.RabbitListenerEndpointRegistrar)

*/

@Override

public void configureRabbitListeners(RabbitListenerEndpointRegistrar registrar) {

registrar.setMessageHandlerMethodFactory(messageHandlerMethodFactory());

}

@Bean

MessageHandlerMethodFactory messageHandlerMethodFactory() {

DefaultMessageHandlerMethodFactory messageHandlerMethodFactory = new DefaultMessageHandlerMethodFactory();

messageHandlerMethodFactory.setMessageConverter(consumerJackson2MessageConverter());

return messageHandlerMethodFactory;

}

@Bean

public MappingJackson2MessageConverter consumerJackson2MessageConverter() {

return new MappingJackson2MessageConverter();

}

}

RabbitMQ consumer: Subscriber.java

/**

* System project name org.mk.demo.rabbitmqdemo.service Subscriber.java

*

* Nov 19, 2021-11:47:02 AM 2021XX Company-Copyright

*

*/

package org.mk.demo.skypayment.service;

import javax.annotation.Resource;

import org.mk.demo.skypayment.config.mq.RabbitMqConfig;

import org.mk.demo.skypayment.vo.PaymentBean;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.springframework.amqp.rabbit.annotation.RabbitListener;

import org.springframework.stereotype.Component;

/**

*

* Subscriber

*

*

* Nov 19, 2021 11:47:02 AM

*

* @version 1.0.0

*

*/

@Component

public class Subscriber {

private Logger logger = LoggerFactory.getLogger(this.getClass());

@Resource

private PaymentService paymentService;

@RabbitListener(queues = RabbitMqConfig.PAYMENT_QUEUE)

public void receiveDL(PaymentBean payment) {

try {

if (payment != null) {

paymentService.UpdateRemotePaymentStatusById(payment, false);

}

} catch (Exception ex) {

>>>>>Subscriber from dead queue">
logger.error(">>>>>>Subscriber exception: " + ex.getMessage(), ex);

}

}

}

Our core settings are on the consumer side, configured in application.yml as follows:

rabbitmq:

publisher-confirm-type: CORRELATED

listener:

## simple type

simple:

#Minimum number of consumers

concurrency: 32

#Maximum number of consumers

maxConcurrency: 64

#Specifies how many messages a request can handle and, if there are transactions, must be greater than or equal to the number of transactions

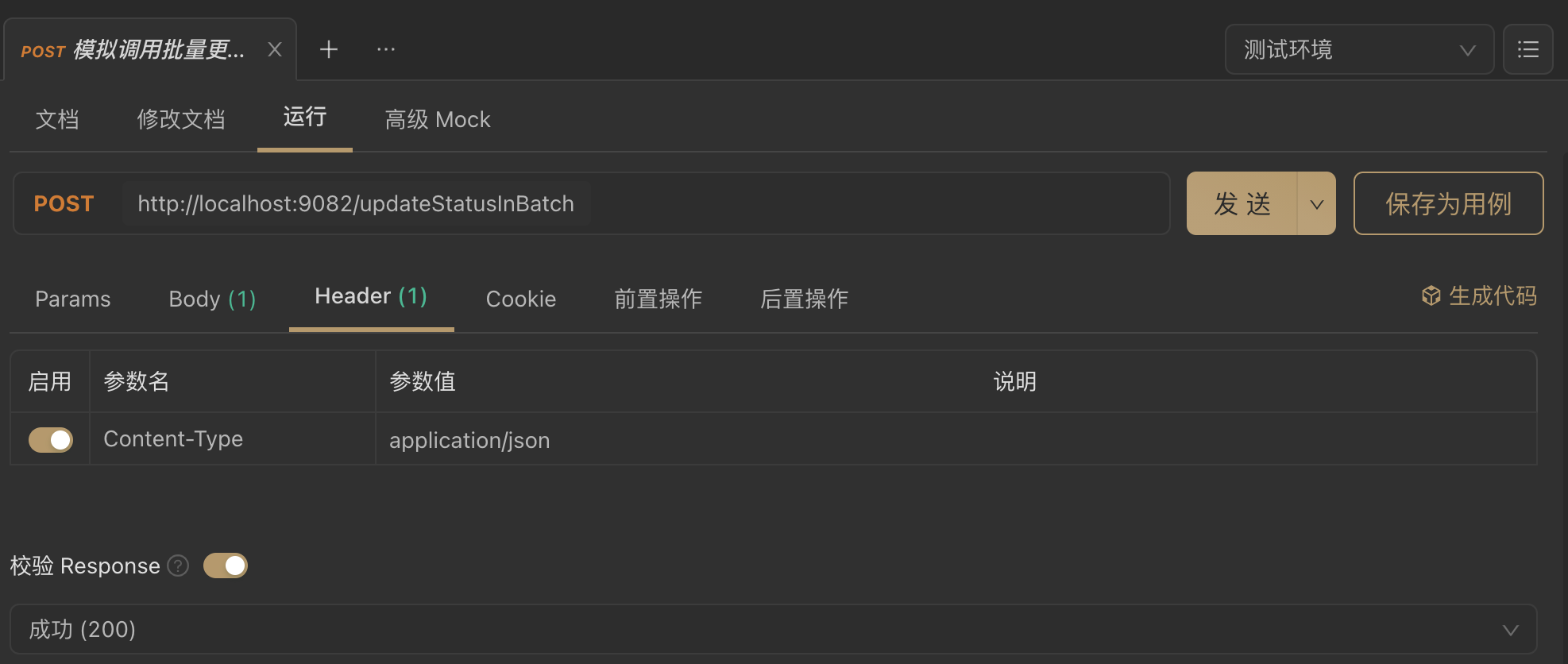

prefetch: 32So this configuration determines that our largest consumer is 64 per second.
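`maxConcurrency` limits how many messages are processed at the same time. The per-second rate also depends on how long each message takes. Little's law gives a rough estimate: throughput ≈ concurrent workers / average processing time. The calculation below is illustrative only. The 200 ms and 2000 ms latencies and the 1000 msg/s target are assumed figures, not measurements from this project.

```java
// Illustrative Little's-law arithmetic; the latencies and target rate are assumed figures.
public class ConsumerThroughput {
    /** Throughput (messages per second) = concurrent consumers / average seconds per message. */
    static double messagesPerSecond(int concurrentConsumers, int avgLatencyMs) {
        return concurrentConsumers * 1000.0 / avgLatencyMs;
    }

    /** Consumers needed to sustain a target rate at a given average latency (rounded up). */
    static int consumersNeeded(int targetPerSecond, int avgLatencyMs) {
        return (int) Math.ceil(targetPerSecond * (double) avgLatencyMs / 1000.0);
    }

    public static void main(String[] args) {
        System.out.println(messagesPerSecond(64, 200));   // 320.0
        System.out.println(messagesPerSecond(64, 2000));  // 32.0
        System.out.println(consumersNeeded(1_000, 200));  // 200
    }
}
```

If the downstream system slows from 200 ms to 2 s per call, the same 64 consumers drop from about 320 to 32 messages per second. The backlog then waits in the queue (or expires into the dead-letter queue) instead of piling up as blocked threads upstream.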

Each consumer then sends its own /updateStatusInBatch request to SkyDownStream, so at most 64 RestTemplate requests are in flight to the downstream system at any one time, no matter how many messages are waiting in the queue.
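To see why the number of consumers, rather than the length of the queue, bounds the downstream load, here is a plain-JDK sketch that simulates the listener container with a fixed thread pool. It is not Spring AMQP: the class and method names are hypothetical, `Thread.sleep` stands in for the blocking restTemplate call, and unlike `SimpleMessageListenerContainer` the pool does not scale between 32 and 64 consumers.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical simulation: a fixed pool of "consumers", each making one blocking
// downstream call per message, in the spirit of the listener container above.
final class BoundedConsumerSketch {

    // Runs `messages` simulated calls on `consumers` threads and returns the
    // highest number of calls that were in flight at the same moment.
    static int peakInFlight(int consumers, int messages, long callMillis) {
        AtomicInteger inFlight = new AtomicInteger();
        AtomicInteger peak = new AtomicInteger();
        ExecutorService pool = Executors.newFixedThreadPool(consumers);
        for (int i = 0; i < messages; i++) {
            pool.execute(() -> {
                int now = inFlight.incrementAndGet();
                peak.accumulateAndGet(now, Math::max);
                try {
                    Thread.sleep(callMillis); // stands in for the blocking restTemplate call
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                } finally {
                    inFlight.decrementAndGet();
                }
            });
        }
        pool.shutdown();
        try {
            pool.awaitTermination(1, TimeUnit.MINUTES);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return peak.get();
    }

    // Little's law ceiling: calls per second <= concurrency / latency.
    static int maxCallsPerSecond(int concurrency, int latencyMillis) {
        return concurrency * 1000 / latencyMillis;
    }
}
```

Pushing 500 messages through 64 consumers never yields more than 64 simultaneous calls. Throughput is a separate question: with a hypothetical 200 ms downstream latency, `maxCallsPerSecond(64, 200)` gives a ceiling of 320 calls per second, and if every call runs into the 4-second read timeout the same 64 consumers fall to 16 calls per second, yet they still never exceed 64 concurrent requests.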

RestTemplate Configuration

HttpConfig.java

package org.mk.demo.config.http;

import java.util.ArrayList;

import java.util.List;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.springframework.boot.context.properties.ConfigurationProperties;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

import org.springframework.http.client.BufferingClientHttpRequestFactory;

import org.springframework.http.client.ClientHttpRequestFactory;

import org.springframework.http.client.ClientHttpRequestInterceptor;

import org.springframework.http.client.SimpleClientHttpRequestFactory;

import org.springframework.web.client.RestTemplate;

@Configuration

@ConfigurationProperties(prefix = "http.rest.connection")

public class HttpConfig {

private Logger logger = LoggerFactory.getLogger(this.getClass());

public int getConnectionTimeout() {

return connectionTimeout;

}

public void setConnectionTimeout(int connectionTimeout) {

this.connectionTimeout = connectionTimeout;

}

public int getReadTimeout() {

return readTimeout;

}

public void setReadTimeout(int readTimeout) {

this.readTimeout = readTimeout;

}

private int connectionTimeout;

private int readTimeout;

@Bean

public RestTemplate restTemplate(ClientHttpRequestFactory factory) {

RestTemplate restTemplate = new RestTemplate(new BufferingClientHttpRequestFactory(factory));

List<ClientHttpRequestInterceptor> interceptors = new ArrayList<>();

interceptors.add(new LoggingRequestInterceptor());

restTemplate.setInterceptors(interceptors);

return restTemplate;

}

@Bean

public ClientHttpRequestFactory simpleClientHttpRequestFactory() {

SimpleClientHttpRequestFactory factory = new SimpleClientHttpRequestFactory();

// By default this uses the JDK's HttpURLConnection; if needed, switch to another HTTP library

// (such as Apache HttpComponents, Netty or OkHttp) by supplying a different ClientHttpRequestFactory.

logger.info(">>>>>>http.rest.connection.readTimeout set to->{}", readTimeout);

logger.info(">>>>>>http.rest.connection.connectionTimeout set to->{}", connectionTimeout);

factory.setReadTimeout(readTimeout);// Unit is ms

factory.setConnectTimeout(connectionTimeout);// Unit is ms

return factory;

}

}

LoggingRequestInterceptor.java

package org.mk.demo.config.http;

import java.io.BufferedReader;

import java.io.IOException;

import java.io.InputStreamReader;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.springframework.http.HttpRequest;

import org.springframework.http.client.ClientHttpRequestExecution;

import org.springframework.http.client.ClientHttpRequestInterceptor;

import org.springframework.http.client.ClientHttpResponse;

public class LoggingRequestInterceptor implements ClientHttpRequestInterceptor {

private Logger logger = LoggerFactory.getLogger(this.getClass());

@Override

public ClientHttpResponse intercept(HttpRequest request, byte[] body, ClientHttpRequestExecution execution)

throws IOException {

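// Build the trace output only when DEBUG is enabled; traceResponse reads the whole response body

if (logger.isDebugEnabled()) {

traceRequest(request, body);

}

ClientHttpResponse response = execution.execute(request, body);

// Reading the body here is safe only because HttpConfig wraps the factory in BufferingClientHttpRequestFactory

if (logger.isDebugEnabled()) {

traceResponse(response);

}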

return response;

}

private void traceRequest(HttpRequest request, byte[] body) throws IOException {

logger.debug("===========================request begin================================================");

logger.debug("URI : {}", request.getURI());

logger.debug("Method : {}", request.getMethod());

logger.debug("Headers : {}", request.getHeaders());

logger.debug("Request body: {}", new String(body, "UTF-8"));

logger.debug("==========================request end================================================");

}

private void traceResponse(ClientHttpResponse response) throws IOException {

StringBuilder inputStringBuilder = new StringBuilder();

BufferedReader bufferedReader = new BufferedReader(new InputStreamReader(response.getBody(), "UTF-8"));

String line = bufferedReader.readLine();

while (line != null) {

inputStringBuilder.append(line);

inputStringBuilder.append('\n');

line = bufferedReader.readLine();

}

logger.debug("============================response begin==========================================");

logger.debug("Status code : {}", response.getStatusCode());

logger.debug("Status text : {}", response.getStatusText());

logger.debug("Headers : {}", response.getHeaders());

logger.debug("Response body: {}", inputStringBuilder.toString());

logger.debug("=======================response end=================================================");

}

}

Core configuration of RestTemplate

http:
  rest:
    connection:
      readTimeout: 4000
      connectionTimeout: 2000
downstreamsystem-url: http://localhost:9082/updateStatus
downstreamsystem-batch-url: http://localhost:9082/updateStatusInBatch

As the configuration above shows, the read timeout is 4 seconds and the connection timeout is 2 seconds, so that even when the downstream system has a problem, the caller "disconnects" in time instead of waiting indefinitely.

Setting a very long wait time is the most common way programmers fool themselves, like "plugging your ears while stealing a bell". Microservice practice follows a one-second principle: any request that takes 1 second or more is slow, whether the system serves external traffic or handles 100,000 to 1 million concurrent requests per second. Rather than fooling ourselves with long timeouts, we should concentrate on compressing transaction response times; that is the programmer's real job.
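The effect of the read timeout can be checked without Spring. `SimpleClientHttpRequestFactory` delegates to the JDK's `HttpURLConnection` and applies the configured values through `setConnectTimeout` and `setReadTimeout`, so the sketch below calls those setters directly against a local server (built on the JDK's `com.sun.net.httpserver`) that deliberately stalls. The path `/updateStatusInBatch` only mirrors the article's endpoint; the server, class and method names are hypothetical.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.net.HttpURLConnection;
import java.net.InetSocketAddress;
import java.net.SocketTimeoutException;
import java.net.URI;
import java.nio.charset.StandardCharsets;

final class ReadTimeoutSketch {

    // Calls a local test server that waits serverDelayMillis before answering.
    // Returns -1 if the response arrived in time, otherwise how many ms the
    // client waited before the read timeout fired.
    static long callWithTimeouts(int serverDelayMillis, int connectTimeoutMillis, int readTimeoutMillis) {
        HttpServer server;
        try {
            server = HttpServer.create(new InetSocketAddress("127.0.0.1", 0), 0);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        // Hypothetical stand-in for a stuck SkyDownStream endpoint.
        server.createContext("/updateStatusInBatch", exchange -> {
            try {
                Thread.sleep(serverDelayMillis);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
            byte[] body = "ok".getBytes(StandardCharsets.UTF_8);
            exchange.sendResponseHeaders(200, body.length);
            try (OutputStream out = exchange.getResponseBody()) {
                out.write(body);
            }
        });
        server.start();
        long start = System.nanoTime();
        try {
            String url = "http://127.0.0.1:" + server.getAddress().getPort() + "/updateStatusInBatch";
            HttpURLConnection conn = (HttpURLConnection) URI.create(url).toURL().openConnection();
            conn.setConnectTimeout(connectTimeoutMillis); // same setters SimpleClientHttpRequestFactory applies
            conn.setReadTimeout(readTimeoutMillis);
            try (InputStream in = conn.getInputStream()) {
                in.readAllBytes();
                return -1;
            }
        } catch (SocketTimeoutException e) {
            return (System.nanoTime() - start) / 1_000_000; // gave up instead of hanging
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } finally {
            server.stop(0); // may wait for the stalled handler to finish
        }
    }
}
```

With a 2-second stall and a 300 ms read timeout the client gives up after roughly 300 ms, while a server that answers immediately returns normally. Note that `setReadTimeout` bounds each blocking read rather than the whole exchange, so a downstream that trickles bytes slowly can still hold a consumer longer than the configured 4 seconds.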

Full application.yml of the SkyPayment project:

mysql:
  datasource:
    db:
      type: com.alibaba.druid.pool.DruidDataSource
      driverClassName: com.mysql.jdbc.Driver
      minIdle: 50
      initialSize: 50
      maxActive: 300
      maxWait: 1000
      testOnBorrow: false
      testOnReturn: true
      testWhileIdle: true
      validationQuery: select 1
      validationQueryTimeout: 1
      timeBetweenEvictionRunsMillis: 5000
      ConnectionErrorRetryAttempts: 3
      NotFullTimeoutRetryCount: 3
      numTestsPerEvictionRun: 10
      minEvictableIdleTimeMillis: 480000
      maxEvictableIdleTimeMillis: 480000
      keepAliveBetweenTimeMillis: 480000
      keepalive: true
      poolPreparedStatements: true
      maxPoolPreparedStatementPerConnectionSize: 512
      maxOpenPreparedStatements: 512
    master: #master db
      type: com.alibaba.druid.pool.DruidDataSource
      driverClassName: com.mysql.jdbc.Driver
      url: jdbc:mysql://localhost:3306/ecom?useUnicode=true&characterEncoding=utf-8&useSSL=false&useAffectedRows=true&autoReconnect=true&allowMultiQueries=true
      username: root
      password: 111111
    slaver: #slaver db
      type: com.alibaba.druid.pool.DruidDataSource
      driverClassName: com.mysql.jdbc.Driver
      url: jdbc:mysql://localhost:3307/ecom?useUnicode=true&characterEncoding=utf-8&useSSL=false&useAffectedRows=true&autoReconnect=true&allowMultiQueries=true
      username: root
      password: 111111
server:
  port: 9080
  tomcat:
    max-http-post-size: -1
    max-http-header-size: 10240000

spring:

application:

name: skypayment

servlet:

multipart:

max-file-size: 10MB

max-request-size: 10MB

context-path: /skypayment

data:

mongodb:

uri: mongodb://skypayment:111111@localhost:27017,localhost:27018/skypayment

quartz: