Template matching

Template matching is widely used in image processing to find the region of an image that matches a given template image. It is also used for localization: template matching finds the position of interest, and subsequent processing is then carried out at that position.

The principle of template matching is similar to that of convolution. The template slides across the original image, starting at the origin, and at each position the difference between the template and the image region it covers is calculated. OpenCV provides six methods for calculating this difference. The result for each position is stored in a matrix, which is returned as the output. If the original image has size A×B and the template has size a×b, the output matrix has size (A-a+1)×(B-b+1).
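The output size follows from counting how many positions the template can occupy along each axis. A minimal sketch, assuming uppercase A, B for the image size and lowercase a, b for the template size (the helper name `result_shape` is hypothetical, not an OpenCV function):

```python
def result_shape(image_shape, template_shape):
    """Return (rows, cols) of the score matrix for a template slid over an image."""
    A, B = image_shape      # image height and width
    a, b = template_shape   # template height and width
    if a > A or b > B:
        raise ValueError("template must not be larger than the image")
    # The template's top-left corner can sit at A-a+1 rows and B-b+1 columns.
    return (A - a + 1, B - b + 1)


print(result_shape((512, 512), (100, 80)))  # (413, 433)
```

A template the same size as the image gives a 1×1 result, since there is only one position to test.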

Template matching starts with a template. The template is usually a region cropped from the original image, and it must not be larger than the image being searched.

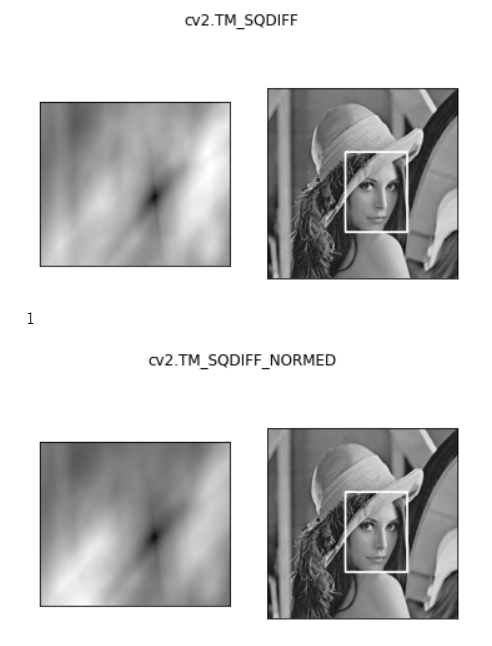

- TM_SQDIFF: computes the squared difference. The smaller the value, the better the match

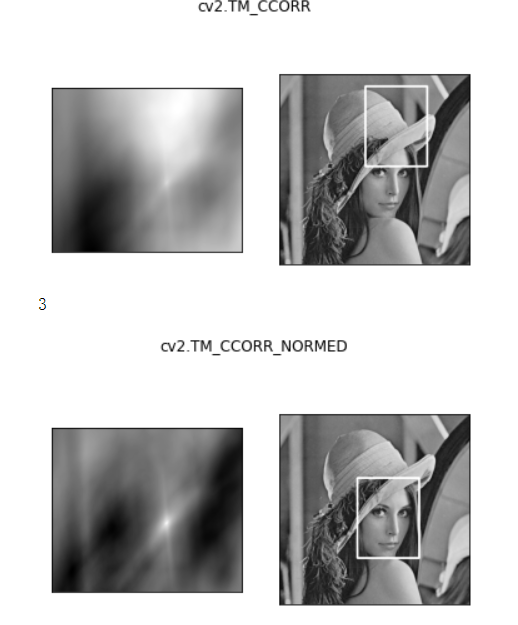

- TM_CCORR: computes the cross-correlation. The larger the value, the better the match

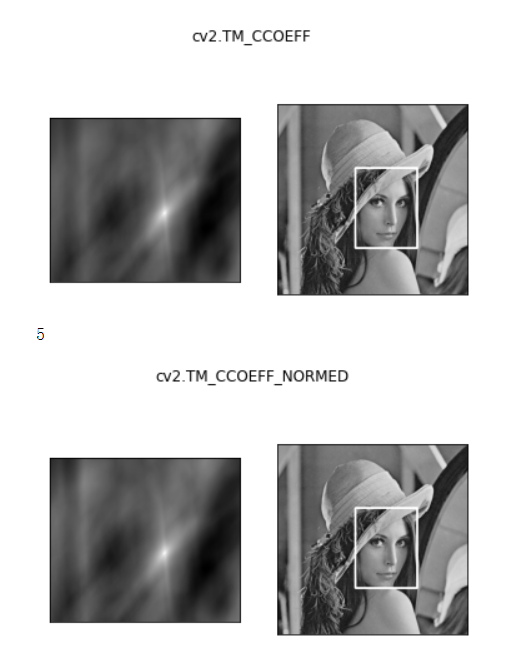

- TM_CCOEFF: computes the correlation coefficient. The larger the value, the better the match

- TM_SQDIFF_NORMED: computes the normalized squared difference. The closer the value is to 0, the better the match

- TM_CCORR_NORMED: computes the normalized cross-correlation. The closer the value is to 1, the better the match

- TM_CCOEFF_NORMED: computes the normalized correlation coefficient. The closer the value is to 1, the better the match
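To make the six scores concrete, here is a rough sketch that scores a single image patch against a template of the same size, with both given as flat lists of pixel values. It follows the formulas as I understand them from the OpenCV documentation. The function name `window_scores` and the zero-denominator handling are assumptions made for illustration, not OpenCV behavior.

```python
import math


def window_scores(patch, template):
    """Score one image patch against a same-sized template (flat pixel lists)."""
    n = len(template)
    t_mean = sum(template) / n
    p_mean = sum(patch) / n
    t_c = [t - t_mean for t in template]  # mean-subtracted template
    p_c = [p - p_mean for p in patch]     # mean-subtracted patch

    sqdiff = sum((t - p) ** 2 for t, p in zip(template, patch))
    ccorr = sum(t * p for t, p in zip(template, patch))
    ccoeff = sum(t * p for t, p in zip(t_c, p_c))

    norm = math.sqrt(sum(t * t for t in template) * sum(p * p for p in patch))
    norm_c = math.sqrt(sum(t * t for t in t_c) * sum(p * p for p in p_c))

    def safe_div(num, den):
        # Assumption for this sketch: report 0.0 when the denominator is zero.
        return num / den if den else 0.0

    return {
        "TM_SQDIFF": sqdiff,
        "TM_SQDIFF_NORMED": safe_div(sqdiff, norm),
        "TM_CCORR": ccorr,
        "TM_CCORR_NORMED": safe_div(ccorr, norm),
        "TM_CCOEFF": ccoeff,
        "TM_CCOEFF_NORMED": safe_div(ccoeff, norm_c),
    }


template = [1, 2, 3, 4]
same = window_scores([1, 2, 3, 4], template)
inverted = window_scores([4, 3, 2, 1], template)
print(same["TM_SQDIFF"], round(same["TM_CCOEFF_NORMED"], 6))  # 0 1.0
print(round(inverted["TM_CCOEFF_NORMED"], 6))                 # -1.0
```

An identical patch gives a squared difference of 0 and a normalized coefficient of 1, while an inverted patch drives the normalized coefficient to -1.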

Whichever method is used, the procedure is the same: slide the template over the image to be matched and, at each position, compute a score (squared difference, correlation, and so on) between the template and the region it covers. Record the score for every position, then look for the minimum or maximum value, depending on the method. The position of that value is the matching position.
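The sliding procedure can be sketched in plain Python for the squared-difference case. This is an illustrative version using nested lists, not how OpenCV implements it. The names `match_sqdiff` and `min_location` are hypothetical, and the location is returned in (x, y) order to mirror `cv2.minMaxLoc`.

```python
def match_sqdiff(image, template):
    """Slide template over image (2D lists) and return the squared-difference scores."""
    A, B = len(image), len(image[0])
    a, b = len(template), len(template[0])
    result = []
    for y in range(A - a + 1):
        row = []
        for x in range(B - b + 1):
            # Sum of squared differences over the region covered by the template.
            score = sum(
                (template[j][i] - image[y + j][x + i]) ** 2
                for j in range(a)
                for i in range(b)
            )
            row.append(score)
        result.append(row)
    return result


def min_location(result):
    """Return the (x, y) position of the smallest score."""
    best = min(
        ((score, x, y) for y, row in enumerate(result) for x, score in enumerate(row)),
        key=lambda item: item[0],
    )
    return best[1], best[2]


image = [
    [0, 0, 0, 0],
    [0, 9, 8, 0],
    [0, 7, 6, 0],
]
template = [[9, 8], [7, 6]]
scores = match_sqdiff(image, template)
print(min_location(scores))  # (1, 1)
```

For the correlation-based methods, the same loop applies with a different score and a search for the maximum instead of the minimum.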

    # Template matching
    import cv2
    import matplotlib.pyplot as plt

    # Original image; the flag 0 loads it as grayscale
    img = cv2.imread('lena.jpg', 0)
    # Template to match
    template = cv2.imread('face.jpg', 0)
    # Height and width of the template
    h, w = template.shape[:2]

    methods = ['TM_CCOEFF', 'TM_CCOEFF_NORMED', 'TM_CCORR',
               'TM_CCORR_NORMED', 'TM_SQDIFF', 'TM_SQDIFF_NORMED']

    for meth in methods:
        img2 = img.copy()
        # Look up the OpenCV constant for this method name
        method = getattr(cv2, meth)
        res = cv2.matchTemplate(img, template, method)
        min_val, max_val, min_loc, max_loc = cv2.minMaxLoc(res)

        # For TM_SQDIFF and TM_SQDIFF_NORMED the best match is the minimum;
        # for all other methods it is the maximum
        if method in [cv2.TM_SQDIFF, cv2.TM_SQDIFF_NORMED]:
            top_left = min_loc
        else:
            top_left = max_loc
        # Bottom-right corner of the matched region
        bottom_right = (top_left[0] + w, top_left[1] + h)

        # Draw a rectangle around the match
        cv2.rectangle(img2, top_left, bottom_right, 255, 2)

        plt.subplot(121), plt.imshow(res, cmap='gray')
        plt.xticks([]), plt.yticks([])  # Hide axes
        plt.subplot(122), plt.imshow(img2, cmap='gray')
        plt.xticks([]), plt.yticks([])
        plt.suptitle(meth)
        plt.show()

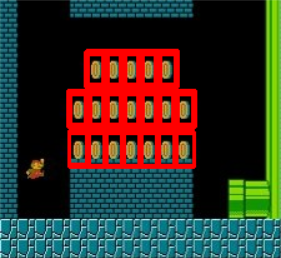

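The figure shows the results of the six matching methods. In each pair, the left panel is the score matrix, where the extreme value marks the detected face position, and the right panel shows the match drawn on the image. The normalized methods generally work well.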

Matching multiple occurrences of a template

Original image

Matching template

    import cv2
    import numpy as np

    img_rgb = cv2.imread('mario.jpg')
    img_gray = cv2.cvtColor(img_rgb, cv2.COLOR_BGR2GRAY)
    template = cv2.imread('mario_coin.jpg', 0)
    h, w = template.shape[:2]

    res = cv2.matchTemplate(img_gray, template, cv2.TM_CCOEFF_NORMED)
    threshold = 0.8
    # Keep the coordinates whose match score is at least 80%
    loc = np.where(res >= threshold)
    # np.where returns (rows, cols); [::-1] reverses this to (cols, rows),
    # and * unpacks the two arrays so that zip yields (x, y) points
    for pt in zip(*loc[::-1]):
        top_left = (int(pt[0]), int(pt[1]))
        bottom_right = (top_left[0] + w, top_left[1] + h)
        cv2.rectangle(img_rgb, top_left, bottom_right, (0, 0, 255), 2)

    cv2.imshow('img_rgb', img_rgb)
    cv2.waitKey(0)

Running this draws a red rectangle around every location whose match score reaches the threshold.
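The thresholding step can be illustrated without NumPy. The sketch below is a plain-Python stand-in for `np.where(res >= threshold)` followed by `zip(*loc[::-1])`. The helper name `threshold_locations` and the score values are made up for illustration.

```python
def threshold_locations(result, threshold):
    """Return the (x, y) top-left corners whose score is at least `threshold`."""
    return [
        (x, y)
        for y, row in enumerate(result)      # y is the row index
        for x, score in enumerate(row)       # x is the column index
        if score >= threshold
    ]


scores = [
    [0.10, 0.85, 0.20],
    [0.95, 0.30, 0.81],
]
print(threshold_locations(scores, 0.8))  # [(1, 0), (0, 1), (2, 1)]
```

Each returned point is in (x, y) order, which is the order `cv2.rectangle` expects for its corner arguments.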