U-Net and PSPNet image segmentation algorithms for road surface segmentation based on PaddlePaddle 2.1

Introduction to the data set used in the learning process

- The data provided in the 7-day camp tutorial is not used. This version uses the Road Traversing Knowledge (RTK) dataset

- Dataset features:

- This dataset provides images taken by a low-cost camera (HP Webcam HD-4110)

- It covers roads with different surface types: variations of asphalt, other pavement types, and even unpaved roads

- It also includes road damage, such as potholes

- The label table is as follows (mask value 8 is not used):

| Category | Mask value |
|---|---|
| Background | 0 |
| Asphalt | 1 |
| Paved | 2 |
| Unpaved | 3 |
| Road markings | 4 |
| Speed bump | 5 |
| Cat's eye | 6 |
| Storm drain | 7 |
| Patch | 9 |
| Water puddle | 10 |
| Pothole | 11 |
| Cracks | 12 |

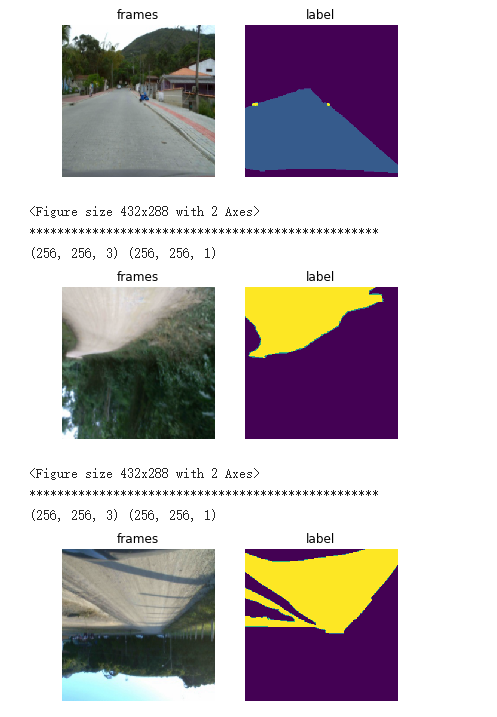

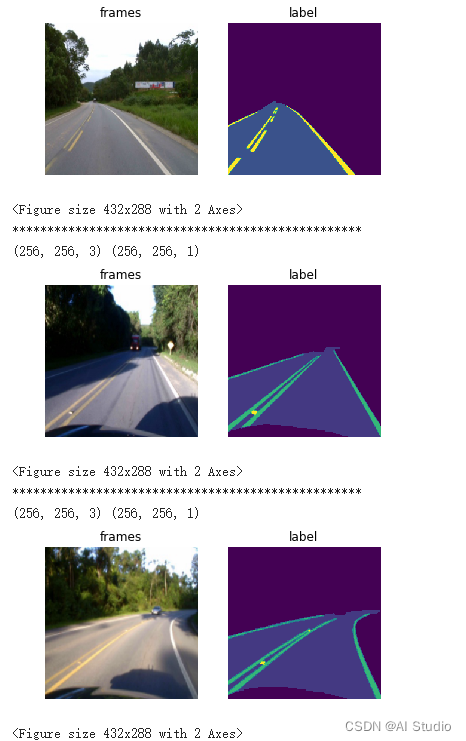

- Examples are as follows:

- During training, the mask image, whose pixel values lie between 0 and 12, is used as the label. The color image on the right of the figure above is only for visualization

- Note: the dataset has 12 classes including the background. Mask value 8 is unused, so label values run from 0 to 12 and the models are built with 13 output channels
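The relation between a class's position in the label list and the pixel value stored in the mask can be sketched as follows. This is a minimal illustration that assumes, as the label table and the later code in this tutorial suggest, that mask value 8 is skipped; the helper name `label_index_to_mask_value` is hypothetical.

```python
# Class names in the order used by this tutorial's `labels` list.
LABELS = ['Background', 'Asphalt', 'Paved', 'Unpaved',
          'Markings', 'Speed-Bump', 'Cats-Eye', 'Storm-Drain',
          'Patch', 'Water-Puddle', 'Pothole', 'Cracks']


def label_index_to_mask_value(index):
    """Map a position in LABELS to the pixel value stored in the mask.

    Hypothetical helper: assumes mask value 8 is never used,
    so list indices 8..11 correspond to mask values 9..12.
    """
    return index + 1 if index >= 8 else index


mask_values = {name: label_index_to_mask_value(i) for i, name in enumerate(LABELS)}
print(mask_values)
```

Because the largest mask value is 12, a network that predicts the mask value directly needs 13 output channels, even though only 12 of them correspond to real classes.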

1, Dataset preparation

# Unzip the files into the dataset folder

!mkdir work/dataset

!unzip -q data/data71331/RTK_Segmentation.zip -d work/dataset/

!unzip -q data/data71331/tests.zip -d work/dataset/

# Create the validation set folders

!mkdir work/dataset/val_frames

!mkdir work/dataset/val_colors

!mkdir work/dataset/val_masks

# Move the first 51 samples (sorted by file name, indices 0 to 50) to the validation set

import os

import shutil

import re

def moveImgDir(color_dir, newcolor_dir, mask_dir, newmask_dir, frames_dir, newframes_dir):

filenames = os.listdir(color_dir)

filenames.sort()

for index, filename in enumerate(filenames):

src = os.path.join(color_dir,filename)

dst = os.path.join(newcolor_dir,filename)

shutil.move(src, dst)

# File names in the colors folder carry an extra 'GT' suffix, so strip it to get the mask and frame names

new_filename = re.sub('GT', '', filename)

src = os.path.join(mask_dir, new_filename)

dst = os.path.join(newmask_dir, new_filename)

shutil.move(src, dst)

src = os.path.join(frames_dir, new_filename)

dst = os.path.join(newframes_dir, new_filename)

shutil.move(src, dst)

if index == 50:

break

moveImgDir(r"work/dataset/colors", r"work/dataset/val_colors",

r"work/dataset/masks", r"work/dataset/val_masks",

r"work/dataset/frames", r"work/dataset/val_frames")
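The loop in `moveImgDir` breaks only after the file with index 50 has been moved, so 51 files end up in the validation set, and they are the first ones in sorted order rather than a random sample. The sketch below reproduces just that selection logic on hypothetical file names (no files are touched); `first_n_until_index` is an illustrative helper, not part of the tutorial code.

```python
import re


def first_n_until_index(filenames, stop_index):
    """Mimic `for index, name in enumerate(...)` with a break at index == stop_index."""
    selected = []
    for index, name in enumerate(sorted(filenames)):
        selected.append(name)
        if index == stop_index:
            break
    return selected


# Hypothetical file names following the 000000000GT.png pattern.
color_names = ['{:09d}GT.png'.format(i) for i in range(100)]
val_colors = first_n_until_index(color_names, 50)

# Mask and frame files share the name without the 'GT' suffix.
val_masks = [re.sub('GT', '', name) for name in val_colors]
print(len(val_colors), val_colors[-1], val_masks[-1])
```

This matches the validation logs later in the tutorial, which list files 000000000.png through 000000050.png.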

# View the label mapping between the mask image and the color image and save it as a json file

import os

import cv2

import numpy as np

import re

import json

labels = ['Background', 'Asphalt', 'Paved', 'Unpaved',

'Markings', 'Speed-Bump', 'Cats-Eye', 'Storm-Drain',

'Patch', 'Water-Puddle', 'Pothole', 'Cracks']

label_color_dict = {}

mask_dir = r"work/dataset/masks"

color_dir = r"work/dataset/colors"

mask_names = [f for f in os.listdir(mask_dir) if f.endswith('png')]

color_names = [f for f in os.listdir(color_dir) if f.endswith('png')]

for index, label in enumerate(labels):

if index>=8:

index += 1

for color_name in color_names:

color = cv2.imread(os.path.join(color_dir, color_name), -1)

color = cv2.cvtColor(color, cv2.COLOR_BGR2RGB)

mask_name = re.sub('GT', '', color_name)

mask = cv2.imread(os.path.join(mask_dir, mask_name), -1)

mask_color = color[np.where(mask == index)]

if len(mask_color)!= 0:

label_color_dict[label] = list(mask_color[0].astype(float))

break

with open(r"work/dataset/mask2color.json", "w", encoding='utf-8') as f:

# json.dump(dict_, f) # Write as one line

json.dump(label_color_dict, f, indent=2, sort_keys=True, ensure_ascii=False) # Write as multiple lines

2, Data set class definition (training set, test set)

1. Data conversion

- The data transformation step modifies the training data as it is read, for example by rotation, padding, center cropping and normalization. This augments and standardizes the data and improves the segmentation quality of the model. The code is saved in the work/Class3/data_transform.py file. It is fairly simple and is not shown here

2. Definition of training set class and test set class

- When reading data, the training set class applies several transformations, while the test set class only resizes and normalizes the data

- The training set class reads the data and returns the transformed data and labels. Both the data and the labels are .png files. In addition to the data and labels, the test set class also returns the path of the data, which makes it easier to visualize the results later

# Preparation for the dataset class definitions

import os

import numpy as np

import cv2

import paddle

from paddle.io import Dataset, DataLoader

from work.Class3.data_transform import Compose, Normalize, RandomSacle, RandomFlip,ConvertDataType,Resize

IMG_EXTENSIONS = ['.jpg', '.JPG', '.jpeg', '.JPEG', '.png', '.PNG', '.ppm', '.PPM', '.bmp', '.BMP']

def is_image_file(filename):

return any(filename.endswith(extension) for extension in IMG_EXTENSIONS)

def get_paths_from_images(path):

"""get image path list from image folder"""

assert os.path.isdir(path), '{:s} is not a valid directory'.format(path)

images = []

for dirpath, _, fnames in sorted(os.walk(path)):

for fname in sorted(fnames):

if is_image_file(fname):

img_path = os.path.join(dirpath, fname)

images.append(img_path)

assert images, '{:s} has no valid image file'.format(path)

return images

# Training set class

class BasicDataset(Dataset):

'''

Reads the data and returns the transformed data and labels. Both the data and the labels are .png files

'''

def __init__(self, image_folder, label_folder, size):

super(BasicDataset, self).__init__()

self.image_folder = image_folder

self.label_folder = label_folder

self.path_Img = get_paths_from_images(image_folder)

if label_folder is not None:

self.path_Label = get_paths_from_images(label_folder)

self.size = size

self.transform = Compose(

[RandomSacle(),

RandomFlip(),

Resize(self.size),

ConvertDataType(),

Normalize(0,1)

]

)

def preprocess(self, data, label):

h,w,c=data.shape

h_gt, w_gt=label.shape

assert h==h_gt, "error"

assert w==w_gt, "error"

data, label=self.transform(data, label)

label=label[:,:,np.newaxis]

return data, label

def __getitem__(self,index):

Img_path, Label_path = None, None

Img_path = self.path_Img[index]

Label_path = self.path_Label[index]

data = cv2.imread(Img_path , cv2.IMREAD_COLOR)

data = cv2.cvtColor(data, cv2.COLOR_BGR2RGB)

label = cv2.imread(Label_path, cv2.IMREAD_GRAYSCALE)

data,label = self.preprocess(data, label)

return {'Image': data, 'Label': label}

def __len__(self):

return len(self.path_Img)

# Test set class definition

class Basic_ValDataset(Dataset):

'''

Reads the data and returns the transformed data, the labels, and the path of the image

'''

def __init__(self, image_folder, label_folder, size):

super(Basic_ValDataset, self).__init__()

self.image_folder = image_folder

self.label_folder = label_folder

self.path_Img = get_paths_from_images(image_folder)

if label_folder is not None:

self.path_Label = get_paths_from_images(label_folder)

self.size = size

self.transform = Compose(

[Resize(size),

ConvertDataType(),

Normalize(0,1)

]

)

def preprocess(self, data, label):

h,w,c=data.shape

h_gt, w_gt=label.shape

assert h==h_gt, "error"

assert w==w_gt, "error"

data, label=self.transform(data, label)

label=label[:,:,np.newaxis]

return data, label

def __getitem__(self,index):

Img_path, Label_path = None, None

Img_path = self.path_Img[index]

Label_path = self.path_Label[index]

data = cv2.imread(Img_path , cv2.IMREAD_COLOR)

data = cv2.cvtColor(data, cv2.COLOR_BGR2RGB)

label = cv2.imread(Label_path, cv2.IMREAD_GRAYSCALE)

data,label = self.preprocess(data, label)

return {'Image': data, 'Label': label, 'Path':Img_path}

def __len__(self):

return len(self.path_Img)

# Test the dataset class to see if it works normally

%matplotlib inline

import matplotlib.pyplot as plt

paddle.device.set_device("cpu")

with paddle.no_grad():

dataset = BasicDataset("work/dataset/frames", "work/dataset/masks", 256)

dataloader = DataLoader(dataset, batch_size = 1, shuffle = True, num_workers = 0)

for index, traindata in enumerate(dataloader):

image = traindata["Image"]

image = np.asarray(image)[0]

label = traindata["Label"]

label = np.asarray(label)[0]

print(image.shape, label.shape)

plt.subplot(1,2,1), plt.title('frames')

plt.imshow(image), plt.axis('off')

plt.subplot(1,2,2), plt.title('label')

plt.imshow(label.squeeze()), plt.axis('off')

plt.show()

print(50*'*')

if index == 5:

break

(256, 256, 3) (256, 256, 1)

**************************************************

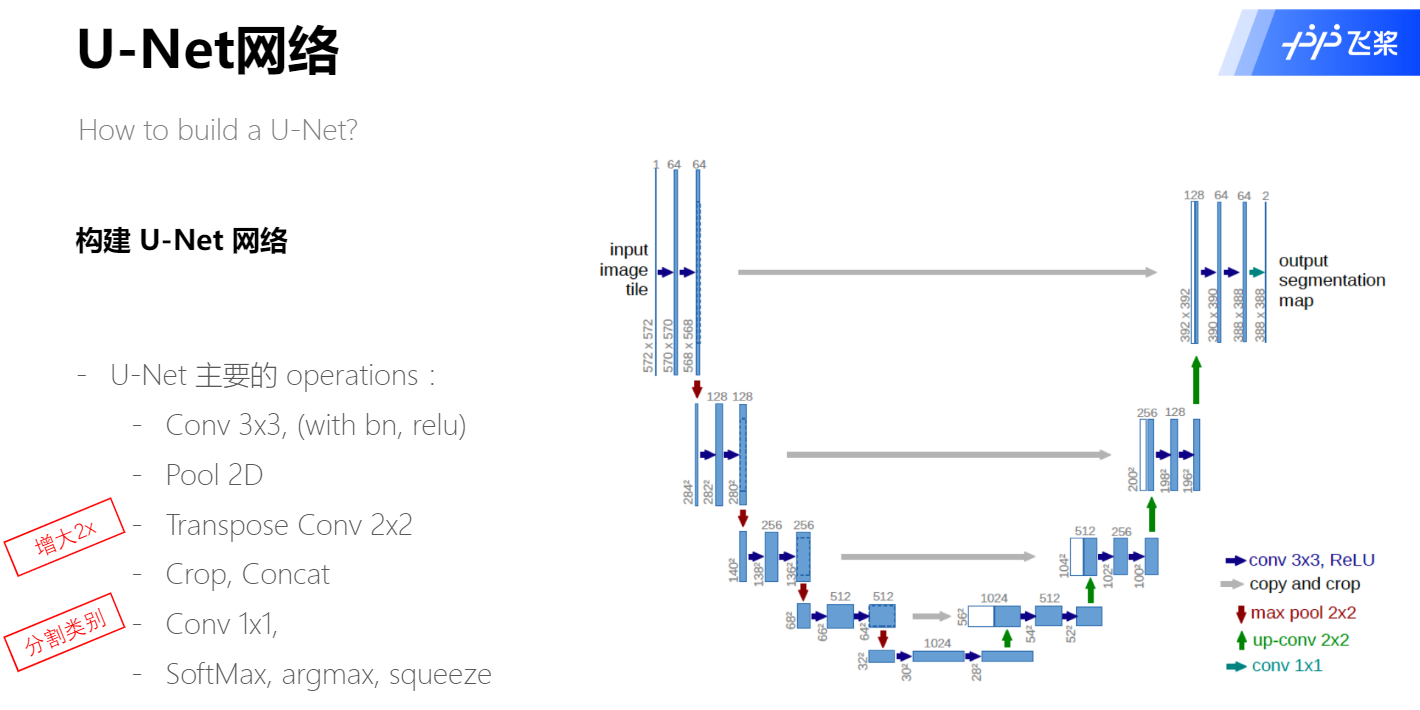

3, U-Net model networking

- U-Net is a U-shaped network that can be viewed as two stages:

- The Encoder first downsamples the image to obtain a high-level semantic feature map

- The Decoder then upsamples the feature map to restore it to the resolution of the original image

- The U-Net networking is therefore done in three steps: define the Encoder, define the Decoder, and then assemble the two into the U-Net network

- To reduce the number of trainable parameters in the convolutions and improve performance, a SeparableConv2D class is also defined

- The assembled UNet is saved in the unet.py file under the Class3 folder

1. Define SeparableConv2D class

- The idea is to split a Conv2D with a filter_size * filter_size * num_filters kernel into two sub-Conv2D operations

- First, each channel of the input is convolved with its own filter_size * filter_size * 1 kernel, so the number of output channels equals the number of input channels

- Then a 1 * 1 * num_filters convolution kernel is applied
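To see how much the split saves, the parameter counts of a standard convolution and of the separable version can be compared directly. This is a back-of-the-envelope sketch; the function names are hypothetical, and the separable count assumes, as in the class defined in this section, that the depthwise step has no bias and the pointwise step has one.

```python
def conv2d_params(in_channels, out_channels, kernel_size):
    """Weights plus bias of a standard convolution."""
    return kernel_size * kernel_size * in_channels * out_channels + out_channels


def separable_conv2d_params(in_channels, out_channels, kernel_size):
    """Depthwise weights (no bias) plus pointwise weights and bias."""
    depthwise = kernel_size * kernel_size * in_channels
    pointwise = in_channels * out_channels + out_channels
    return depthwise + pointwise


# First Encoder block of this tutorial: 32 -> 64 channels with a 3 x 3 kernel.
standard = conv2d_params(32, 64, 3)
separable = separable_conv2d_params(32, 64, 3)
print(standard, separable)
```

For this layer the separable form needs 2,400 parameters instead of 18,496, roughly an eightfold reduction.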

import paddle

import paddle.nn as nn

from paddle.nn import functional as F

import numpy as np

class SeparableConv2D(paddle.nn.Layer):

def __init__(self,

in_channels,

out_channels,

kernel_size,

stride=1,

padding=0,

dilation=1,

groups=None,

weight_attr=None,

bias_attr=None,

data_format="NCHW"):

super(SeparableConv2D, self).__init__()

self._padding = padding

self._stride = stride

self._dilation = dilation

self._in_channels = in_channels

self._data_format = data_format

# First convolution parameter, no bias parameter

filter_shape = [in_channels, 1] + self.convert_to_list(kernel_size, 2, 'kernel_size')

self.weight_conv = self.create_parameter(shape=filter_shape, attr=weight_attr)

# Second convolution parameter

filter_shape = [out_channels, in_channels] + self.convert_to_list(1, 2, 'kernel_size')

self.weight_pointwise = self.create_parameter(shape=filter_shape, attr=weight_attr)

self.bias_pointwise = self.create_parameter(shape=[out_channels],

attr=bias_attr,

is_bias=True)

def convert_to_list(self, value, n, name, dtype=int):  # np.int was removed in NumPy 1.24; the built-in int is equivalent

if isinstance(value, dtype):

return [value, ] * n

else:

try:

value_list = list(value)

except TypeError:

raise ValueError("The " + name +

"'s type must be list or tuple. Received: " + str(

value))

if len(value_list) != n:

raise ValueError("The " + name + "'s length must be " + str(n) +

". Received: " + str(value))

for single_value in value_list:

try:

dtype(single_value)

except (ValueError, TypeError):

raise ValueError(

"The " + name + "'s type must be a list or tuple of " + str(

n) + " " + str(dtype) + " . Received: " + str(

value) + " "

"including element " + str(single_value) + " of type" + " "

+ str(type(single_value)))

return value_list

def forward(self, inputs):

conv_out = F.conv2d(inputs,

self.weight_conv,

padding=self._padding,

stride=self._stride,

dilation=self._dilation,

groups=self._in_channels,

data_format=self._data_format)

out = F.conv2d(conv_out,

self.weight_pointwise,

bias=self.bias_pointwise,

padding=0,

stride=1,

dilation=1,

groups=1,

data_format=self._data_format)

return out

2. Define Encoder

- The downsampling steps of the Encoder are encapsulated in a Layer so they can be reused and less code needs to be written

- Downsampling corresponds to the descending left side of the U. A unit structure is repeated along it: the number of channels keeps increasing, the spatial size keeps shrinking, and a residual connection is added

- All of this is abstracted into a single Encoder layer

class Encoder(paddle.nn.Layer):

def __init__(self, in_channels, out_channels):

super(Encoder, self).__init__()

self.relus = paddle.nn.LayerList(

[paddle.nn.ReLU() for i in range(2)])

self.separable_conv_01 = SeparableConv2D(in_channels,

out_channels,

kernel_size=3,

padding='same')

self.bns = paddle.nn.LayerList(

[paddle.nn.BatchNorm2D(out_channels) for i in range(2)])

self.separable_conv_02 = SeparableConv2D(out_channels,

out_channels,

kernel_size=3,

padding='same')

self.pool = paddle.nn.MaxPool2D(kernel_size=3, stride=2, padding=1)

self.residual_conv = paddle.nn.Conv2D(in_channels,

out_channels,

kernel_size=1,

stride=2,

padding='same')

def forward(self, inputs):

previous_block_activation = inputs

y = self.relus[0](inputs)

y = self.separable_conv_01(y)

y = self.bns[0](y)

y = self.relus[1](y)

y = self.separable_conv_02(y)

y = self.bns[1](y)

y = self.pool(y)

residual = self.residual_conv(previous_block_activation)

y = paddle.add(y, residual)

return y

3. Define Decoder

- Once the number of channels has reached its maximum and the high-level semantic feature map has been obtained, the network starts decoding

- With each upsampling step the number of channels decreases and the feature map grows, until the original image size is restored

- This is again done by repeating a residual block with the same structure. To reduce code, the block is defined as a Layer that is reused when assembling the model

class Decoder(paddle.nn.Layer):

def __init__(self, in_channels, out_channels):

super(Decoder, self).__init__()

self.relus = paddle.nn.LayerList(

[paddle.nn.ReLU() for i in range(2)])

self.conv_transpose_01 = paddle.nn.Conv2DTranspose(in_channels,

out_channels,

kernel_size=3,

padding=1)

self.conv_transpose_02 = paddle.nn.Conv2DTranspose(out_channels,

out_channels,

kernel_size=3,

padding=1)

self.bns = paddle.nn.LayerList(

[paddle.nn.BatchNorm2D(out_channels) for i in range(2)]

)

self.upsamples = paddle.nn.LayerList(

[paddle.nn.Upsample(scale_factor=2.0) for i in range(2)]

)

self.residual_conv = paddle.nn.Conv2D(in_channels,

out_channels,

kernel_size=1,

padding='same')

def forward(self, inputs):

previous_block_activation = inputs

y = self.relus[0](inputs)

y = self.conv_transpose_01(y)

y = self.bns[0](y)

y = self.relus[1](y)

y = self.conv_transpose_02(y)

y = self.bns[1](y)

y = self.upsamples[0](y)

residual = self.upsamples[1](previous_block_activation)

residual = self.residual_conv(residual)

y = paddle.add(y, residual)

return y

4. U-Net networking

- The network is assembled following the U shape: a stride-2 stem convolution and three Encoder blocks downsample the input, and four Decoder blocks upsample it back to the input size
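The channel counts and feature map sizes at each stage can be traced without running the model. The sketch below is only arithmetic and assumes the configuration used in this section (a stride-2 stem with 32 channels, Encoder channels 64, 128, 256, Decoder channels 256, 128, 64, 32) and an input size divisible by 16; `unet_shape_trace` is a hypothetical helper.

```python
def unet_shape_trace(height, width):
    """Return (stage, channels, height, width) for each stage of the U-Net."""
    trace = []
    # Stem: the stride-2 convolution halves the spatial size.
    channels, height, width = 32, height // 2, width // 2
    trace.append(('stem', channels, height, width))
    # Each Encoder ends with a stride-2 max pool, halving the size again.
    for channels in [64, 128, 256]:
        height, width = height // 2, width // 2
        trace.append(('encoder', channels, height, width))
    # Each Decoder upsamples by a factor of 2.
    for channels in [256, 128, 64, 32]:
        height, width = height * 2, width * 2
        trace.append(('decoder', channels, height, width))
    return trace


for stage in unet_shape_trace(256, 256):
    print(stage)
```

For a 256 x 256 input the smallest feature map is 16 x 16 with 256 channels, and the last Decoder returns to 256 x 256 with 32 channels before the output convolution maps them to the class scores.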

class UNet(paddle.nn.Layer):

def __init__(self, num_classes):

super(UNet, self).__init__()

self.conv_1 = paddle.nn.Conv2D(3, 32,

kernel_size=3,

stride=2,

padding='same')

self.bn = paddle.nn.BatchNorm2D(32)

self.relu = paddle.nn.ReLU()

in_channels = 32

self.encoders = []

self.encoder_list = [64, 128, 256]

self.decoder_list = [256, 128, 64, 32]

# Create the Encoder sub-layers in a loop over the downsampling configuration to avoid repeating the same code

for out_channels in self.encoder_list:

block = self.add_sublayer('encoder_{}'.format(out_channels),

Encoder(in_channels, out_channels))

self.encoders.append(block)

in_channels = out_channels

self.decoders = []

# Create the Decoder sub-layers in a loop over the upsampling configuration to avoid repeating the same code

for out_channels in self.decoder_list:

block = self.add_sublayer('decoder_{}'.format(out_channels),

Decoder(in_channels, out_channels))

self.decoders.append(block)

in_channels = out_channels

self.output_conv = paddle.nn.Conv2D(in_channels,

num_classes,

kernel_size=3,

padding='same')

def forward(self, inputs):

y = self.conv_1(inputs)

y = self.bn(y)

y = self.relu(y)

for encoder in self.encoders:

y = encoder(y)

for decoder in self.decoders:

y = decoder(y)

y = self.output_conv(y)

return y

4, PSPNet networking

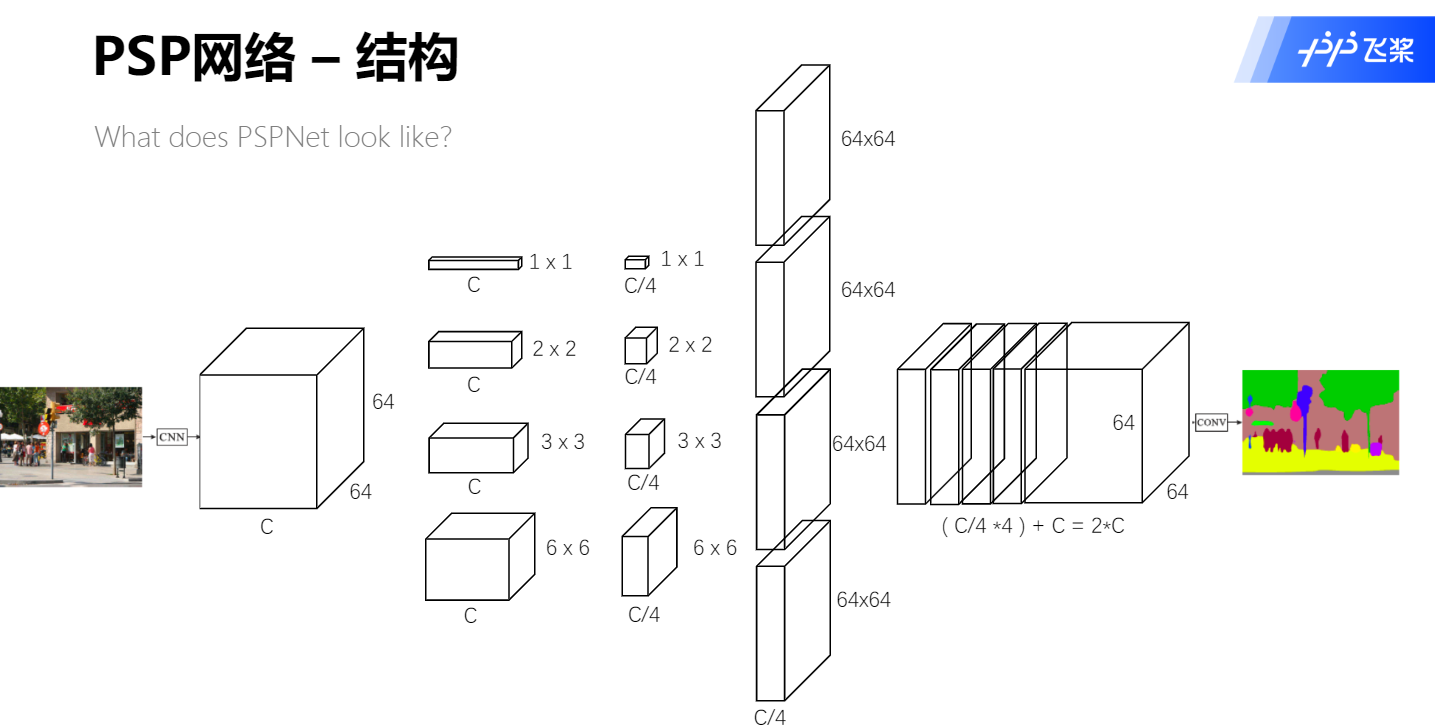

- The network structure of PSPNet is clear, as shown in the figure above. It consists of a backbone, a PSPModule and a classifier

- The CNN module in the figure is the backbone of the network. PSPNet uses either ResNet50_vd or ResNet101_vd. To make it easy to load a pretrained model, this tutorial uses ResNet instead of ResNet_vd

- PSPModule: the feature map produced by the backbone enters four parallel branches, as shown in the figure above. In each branch the feature map is resized by adaptive pooling (adaptive_pool), its number of channels is changed by a convolution, and it is then upsampled back to the size it had when leaving the backbone. Finally, the outputs of all branches are concatenated with the feature map from the backbone

- Classifier module: the last conv module in the figure. A stack of convolution layers classifies each pixel

- To build PSPNet, first define the overall network structure and then define the PSPModule. The code is in the pspnet.py file under the Class3 folder
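The channel bookkeeping of the PSPModule explains why the classifier in this section receives twice as many channels as the backbone produces. The sketch below is plain arithmetic under the settings used here (2048 backbone channels, bin sizes 1, 2, 3, 6); `psp_channel_plan` is a hypothetical helper, and the factor of 32 for the backbone stride is an assumption about a standard, non-dilated ResNet.

```python
def psp_channel_plan(num_channels, bin_size_list):
    """Channels per pyramid branch and after concatenation in the PSPModule."""
    branch_channels = num_channels // len(bin_size_list)
    # The backbone feature map is concatenated with one output per branch.
    concat_channels = num_channels + branch_channels * len(bin_size_list)
    return branch_channels, concat_channels


branch, total = psp_channel_plan(2048, [1, 2, 3, 6])
print(branch, total)

# Assumption: the ResNet backbone reduces the resolution by a factor of 32,
# so a 256 x 256 input gives an 8 x 8 backbone feature map.
feature_size = 256 // 32
print(feature_size)
```

Each of the four branches outputs 512 channels, so the concatenated map has 2048 + 4 * 512 = 4096 channels, which is the `num_channels *= 2` step in the PSPNet constructor.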

1.PSPNet networking

import paddle

import paddle.nn as nn

import paddle.nn.functional as F

from paddle.vision.models import resnet50, resnet101

class PSPNet(nn.Layer):

def __init__(self, num_classes=13, backbone_name = "resnet50"):

super(PSPNet, self).__init__()

if backbone_name == "resnet50":

backbone = resnet50(pretrained=True)

if backbone_name == "resnet101":

backbone = resnet101(pretrained=True)

#self.layer0 = nn.Sequential(*[backbone.conv1, backbone.bn1, backbone.relu, backbone.maxpool])

self.layer0 = nn.Sequential(*[backbone.conv1, backbone.maxpool])

self.layer1 = backbone.layer1

self.layer2 = backbone.layer2

self.layer3 = backbone.layer3

self.layer4 = backbone.layer4

num_channels = 2048

self.pspmodule = PSPModule(num_channels, [1, 2, 3, 6])

num_channels *= 2

self.classifier = nn.Sequential(*[

nn.Conv2D(num_channels, 512, 3, padding = 1),

nn.BatchNorm2D(512),

nn.ReLU(),

nn.Dropout2D(0.1),

nn.Conv2D(512, num_classes, 1)

])

def forward(self, inputs):

x = self.layer0(inputs)

x = self.layer1(x)

x = self.layer2(x)

x = self.layer3(x)

x = self.layer4(x)

x = self.pspmodule(x)

x = self.classifier(x)

out = F.interpolate(

x,

paddle.shape(inputs)[2:],

mode='bilinear',

align_corners=True)

return out

2. Definition of PSPModule

class PSPModule(nn.Layer):

def __init__(self, num_channels, bin_size_list):

super(PSPModule, self).__init__()

self.bin_size_list = bin_size_list

num_filters = num_channels // len(bin_size_list)

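self.features = nn.LayerList()  # a plain Python list would not register the branches as sub-layers, so their parameters would not be trained, saved, or switched by eval()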

for i in range(len(bin_size_list)):

self.features.append(nn.Sequential(*[

nn.Conv2D(num_channels, num_filters, 1),

nn.BatchNorm2D(num_filters),

nn.ReLU()

]))

def forward(self, inputs):

out = [inputs]

for idx, f in enumerate(self.features):

pool = paddle.nn.AdaptiveAvgPool2D(self.bin_size_list[idx])

x = pool(inputs)

x = f(x)

x = F.interpolate(x, paddle.shape(inputs)[2:], mode="bilinear", align_corners=True)

out.append(x)

out = paddle.concat(out, axis=1)

return out

5, Model training

- The previous sections defined the data processing and the model networking. Training additionally involves the loss function, the optimization algorithm, resource allocation and breakpoint recovery. To judge the quality of the model, an evaluation step is also needed

- Loss function: U-Net and PSPNet classify the feature map pixel by pixel, so the loss is the softmax cross-entropy computed for each pixel. The loss function is defined in the work/Class3/basic_seg_loss.py file

- Optimization algorithm: SGD is used

- Resource allocation: AI Studio only offers single-card training, so multi-card training is not considered

- Breakpoint recovery: the model parameters and optimizer parameters are saved, and loaded again when training is resumed. The training code is in work/Class3/train.py, and you can run the following statement to start training

- Effect evaluation: accuracy and IoU are used to evaluate the segmentation quality. The code is defined in work/Class3/utils.py
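The per-pixel softmax cross-entropy mentioned above can be written out in a few lines of plain Python. This is a minimal sketch of the formula on toy values, not the contents of basic_seg_loss.py, which is not shown in this tutorial and may differ (for example in how it handles ignored labels); both function names are hypothetical.

```python
import math


def softmax_cross_entropy(logits, label):
    """Cross-entropy of one pixel given its class scores and true class index."""
    max_logit = max(logits)  # subtract the max for numerical stability
    log_sum_exp = max_logit + math.log(sum(math.exp(v - max_logit) for v in logits))
    # -log(softmax(logits)[label]) == logsumexp(logits) - logits[label]
    return log_sum_exp - logits[label]


def mean_pixel_loss(pixel_logits, pixel_labels):
    """Average the per-pixel losses over all pixels."""
    losses = [softmax_cross_entropy(l, y) for l, y in zip(pixel_logits, pixel_labels)]
    return sum(losses) / len(losses)


# Two pixels, three classes (toy values).
loss = mean_pixel_loss([[2.0, 0.0, 0.0], [0.0, 0.0, 0.0]], [0, 2])
print(loss)
```

A pixel with uniform scores over three classes contributes log(3) to the loss, while a pixel whose correct class has a clearly higher score contributes much less.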

# Train the model; remember to set the use_gpu parameter in train.py to True

!python work/Class3/train.py

6, Prediction results

import paddle

from paddle.io import DataLoader

import work.Class3.utils as utils

import cv2

import numpy as np

import os

from work.Class3.unet import UNet

from work.Class3.pspnet import PSPNet

# Load model function

def loadModel(net, model_path):

if net == 'unet':

model = UNet(13)

if net == 'pspnet':

model = PSPNet()

params_dict = paddle.load(model_path)

model.set_state_dict(params_dict)

return model

# Validation function; change the net argument to select the model to validate

def Val(net = 'unet'):

image_folder = r"work/dataset/val_frames"

label_folder = r"work/dataset/val_masks"

model_path = r"work/Class3/output/{}_epoch200.pdparams".format(net)

output_dir = r"work/Class3/val_result"

if not os.path.isdir(output_dir):

os.mkdir(output_dir)

model = loadModel(net, model_path)

model.eval()

dataset = Basic_ValDataset(image_folder, label_folder, 256) # size 256

dataloader = DataLoader(dataset, batch_size = 1, shuffle = False, num_workers = 1)

result_dict = {}

val_acc_list = []

val_iou_list = []

for index, data in enumerate(dataloader):

image = data["Image"]

label = data["Label"]

imgPath = data["Path"][0]

image = paddle.transpose(image, [0, 3, 1, 2])

pred = model(image)

label_pred = np.argmax(pred.numpy(), 1)

# Calculate acc and iou indicators

label_true = label.numpy()

acc, acc_cls, mean_iu, fwavacc = utils.label_accuracy_score(label_true, label_pred, n_class=13)

filename = imgPath.split('/')[-1]

print('{}, acc:{}, iou:{}, acc_cls{}'.format(filename, acc, mean_iu, acc_cls))

val_acc_list.append(acc)

val_iou_list.append(mean_iu)

result = label_pred[0]

cv2.imwrite(os.path.join(output_dir, filename), result)

val_acc, val_iou = np.mean(val_acc_list), np.mean(val_iou_list)

print('val_acc:{}, val_iou{}'.format(val_acc, val_iou))

Val(net = 'unet') #Verify U-Net

000000000.png, acc:0.9532470703125, iou:0.21740302272507017, acc_cls0.24366524880119939 000000001.png, acc:0.9403533935546875, iou:0.21750944976291642, acc_cls0.2397760950676889 000000002.png, acc:0.8805084228515625, iou:0.20677225948304948, acc_cls0.22165470417461045 000000003.png, acc:0.910186767578125, iou:0.5374669697784406, acc_cls0.6002255939000143 000000004.png, acc:0.9135894775390625, iou:0.49426367831723594, acc_cls0.5440891190528987 000000005.png, acc:0.9084930419921875, iou:0.5620866142956834, acc_cls0.6502875734164735 000000006.png, acc:0.9343414306640625, iou:0.6045398696214368, acc_cls0.6824411153037097 000000007.png, acc:0.86566162109375, iou:0.13596347532565847, acc_cls0.15904775159141893 000000008.png, acc:0.92608642578125, iou:0.6066313636385289, acc_cls0.686377579583622 000000009.png, acc:0.9074554443359375, iou:0.14527489862487447, acc_cls0.19636295563014194 000000010.png, acc:0.978912353515625, iou:0.41013007889626, acc_cls0.5309096608495731 000000011.png, acc:0.8917388916015625, iou:0.12945535175493833, acc_cls0.15509463825018682 000000012.png, acc:0.88568115234375, iou:0.12710576977334995, acc_cls0.13982065622130674 000000013.png, acc:0.8527374267578125, iou:0.12052946145058839, acc_cls0.15498666764101443 000000014.png, acc:0.855865478515625, iou:0.11997121417720466, acc_cls0.1300705914081646 000000015.png, acc:0.8303680419921875, iou:0.11224693229386994, acc_cls0.15063578243606204 000000016.png, acc:0.81634521484375, iou:0.12238405158883152, acc_cls0.1552799892215321 000000017.png, acc:0.8724517822265625, iou:0.14832212341894052, acc_cls0.15794770487704352 000000018.png, acc:0.973236083984375, iou:0.15880628364464697, acc_cls0.16694603198365687 000000019.png, acc:0.9571380615234375, iou:0.15820230152015433, acc_cls0.16694095946803228 000000020.png, acc:0.9492950439453125, iou:0.15843530267671513, acc_cls0.17159408155183756 000000021.png, acc:0.9835205078125, iou:0.5169552867224676, acc_cls0.5293089391662275 000000022.png, 
acc:0.93670654296875, iou:0.14081470138925065, acc_cls0.14986690818069334 000000023.png, acc:0.9805908203125, iou:0.15547314332955006, acc_cls0.16389840413777204 000000024.png, acc:0.973480224609375, iou:0.15124500798277063, acc_cls0.21221830238297923 000000025.png, acc:0.8671112060546875, iou:0.1320808783138103, acc_cls0.18834871622115743 000000026.png, acc:0.8807220458984375, iou:0.12551283537045949, acc_cls0.160127612253545 000000027.png, acc:0.8549346923828125, iou:0.1256957684753734, acc_cls0.1947882072082881 000000028.png, acc:0.7188873291015625, iou:0.09553027193514095, acc_cls0.13162457606165895 000000029.png, acc:0.6623687744140625, iou:0.08602672874865583, acc_cls0.1448762024148364 000000030.png, acc:0.6565704345703125, iou:0.07762716768192297, acc_cls0.14779997114294124 000000031.png, acc:0.668609619140625, iou:0.08023237592062181, acc_cls0.15122971232487634 000000032.png, acc:0.982666015625, iou:0.531055672795304, acc_cls0.551112678117131 000000033.png, acc:0.6807403564453125, iou:0.08217231354625484, acc_cls0.12642576184992113 000000034.png, acc:0.7405242919921875, iou:0.09975355253896562, acc_cls0.14102723936071107 000000035.png, acc:0.7180633544921875, iou:0.08998982428014987, acc_cls0.12264189412001483 000000036.png, acc:0.7132110595703125, iou:0.09494116642949992, acc_cls0.15935831094464523 000000037.png, acc:0.74932861328125, iou:0.10875879497291979, acc_cls0.15855171662196246 000000038.png, acc:0.8556671142578125, iou:0.12793083621254434, acc_cls0.15536618070094121 000000039.png, acc:0.8833160400390625, iou:0.12986589591132633, acc_cls0.15274859248800776 000000040.png, acc:0.8965606689453125, iou:0.13013244391447568, acc_cls0.14474484569354112 000000041.png, acc:0.9409332275390625, iou:0.6847726608359286, acc_cls0.7734320143470286 000000042.png, acc:0.94476318359375, iou:0.13839467135688366, acc_cls0.14964636561620268 000000043.png, acc:0.9872589111328125, iou:0.533262521084918, acc_cls0.5504109781551652 000000044.png, acc:0.88800048828125, 
iou:0.1229156693978053, acc_cls0.1447694124446469 000000045.png, acc:0.881378173828125, iou:0.12194908345030431, acc_cls0.14572180611905405 000000046.png, acc:0.851593017578125, iou:0.11931384553390069, acc_cls0.13810932798358022 000000047.png, acc:0.8807220458984375, iou:0.12388368769572063, acc_cls0.1413720889511982 000000048.png, acc:0.8756103515625, iou:0.12691871866741045, acc_cls0.17894228937721984 000000049.png, acc:0.92852783203125, iou:0.1372728846966373, acc_cls0.1531958262879321 000000050.png, acc:0.927459716796875, iou:0.13396655339308303, acc_cls0.14496793246471645 val_acc:0.8728141036688113, val_iou0.21211657716377352

Val(net='pspnet') #Verify PSPNet

/opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/nn/layer/norm.py:641: UserWarning: When training, we now always track global mean and variance. "When training, we now always track global mean and variance.") 000000000.png, acc:0.9158935546875, iou:0.1577242942255457, acc_cls0.17173641185720198 000000001.png, acc:0.910797119140625, iou:0.16134327173108523, acc_cls0.1762481738122499 000000002.png, acc:0.890045166015625, iou:0.15437460034040562, acc_cls0.17223887049440076 000000003.png, acc:0.8770599365234375, iou:0.32909551160641853, acc_cls0.3755967387939404 000000004.png, acc:0.896759033203125, iou:0.4255025049622586, acc_cls0.47846308530983944 000000005.png, acc:0.874267578125, iou:0.4274390621432923, acc_cls0.4899027661840728 000000006.png, acc:0.8851470947265625, iou:0.43181422085059556, acc_cls0.47451645143452004 000000007.png, acc:0.8247833251953125, iou:0.10052916219690879, acc_cls0.1383570399712419 000000008.png, acc:0.8719635009765625, iou:0.38244574435993944, acc_cls0.43329535485474685 000000009.png, acc:0.8692779541015625, iou:0.13464066456750332, acc_cls0.18448936562578214 000000010.png, acc:0.9468536376953125, iou:0.44863223039889155, acc_cls0.46856662893925427 000000011.png, acc:0.941162109375, iou:0.13867667780473572, acc_cls0.18118463243502247 000000012.png, acc:0.945068359375, iou:0.1389176838363624, acc_cls0.15043614809102962 000000013.png, acc:0.9033355712890625, iou:0.12846794742347878, acc_cls0.14400104197131805 000000014.png, acc:0.8373565673828125, iou:0.11054204416461214, acc_cls0.13875799125240074 000000015.png, acc:0.86090087890625, iou:0.1264827994115744, acc_cls0.16435776274153532 000000016.png, acc:0.7948760986328125, iou:0.11874856076666136, acc_cls0.15379384017006992 000000017.png, acc:0.8228759765625, iou:0.12823383572579636, acc_cls0.1570891982493681 000000018.png, acc:0.9229583740234375, iou:0.1302237343290557, acc_cls0.13985282348585362 000000019.png, acc:0.914764404296875, iou:0.12877152670350586, 
acc_cls0.14030697926333272 000000020.png, acc:0.906524658203125, iou:0.12558250297736512, acc_cls0.13805377313078535 000000021.png, acc:0.9603271484375, iou:0.4624199768708489, acc_cls0.47837019179957135 000000022.png, acc:0.9090728759765625, iou:0.12669000770141225, acc_cls0.13971059069788844 000000023.png, acc:0.9561920166015625, iou:0.1401867698842562, acc_cls0.14819146018309393 000000024.png, acc:0.958831787109375, iou:0.14103439018664546, acc_cls0.1490837315909282 000000025.png, acc:0.8876800537109375, iou:0.12239545261262934, acc_cls0.13539270176077994 000000026.png, acc:0.897247314453125, iou:0.12537335704194594, acc_cls0.13749971322365556 000000027.png, acc:0.905670166015625, iou:0.1277774355920293, acc_cls0.13915315931355438 000000028.png, acc:0.8493804931640625, iou:0.1123330103897112, acc_cls0.12825470143791537 000000029.png, acc:0.74676513671875, iou:0.08918434684919538, acc_cls0.11052698832946779 000000030.png, acc:0.662445068359375, iou:0.07431417013700324, acc_cls0.13493026187817742 000000031.png, acc:0.675506591796875, iou:0.07255593791925741, acc_cls0.1490838030416825 000000032.png, acc:0.9351348876953125, iou:0.4372444574056901, acc_cls0.4611648985543674 000000033.png, acc:0.723907470703125, iou:0.08647248810649447, acc_cls0.1537256973411532 000000034.png, acc:0.6313934326171875, iou:0.06857098073634199, acc_cls0.1488324367913469 000000035.png, acc:0.804412841796875, iou:0.10820015109697752, acc_cls0.13811802302293585 000000036.png, acc:0.808563232421875, iou:0.10711285792361261, acc_cls0.12773659704192822 000000037.png, acc:0.73858642578125, iou:0.09494626712583215, acc_cls0.15144985295885574 000000038.png, acc:0.8296051025390625, iou:0.10950276111431888, acc_cls0.13514122161203976 000000039.png, acc:0.8893280029296875, iou:0.12520038793252025, acc_cls0.15648624414517176 000000040.png, acc:0.8923492431640625, iou:0.12395896040329932, acc_cls0.14618784019861522 000000041.png, acc:0.8879852294921875, iou:0.3993106317304327, 
acc_cls0.4364105449925553 000000042.png, acc:0.9210662841796875, iou:0.12852261270994678, acc_cls0.143167733626099 000000043.png, acc:0.9283599853515625, iou:0.4298842882667755, acc_cls0.4555886592291649 000000044.png, acc:0.9065093994140625, iou:0.12527710447115725, acc_cls0.14299767782824516 000000045.png, acc:0.8910980224609375, iou:0.12194565425367523, acc_cls0.14454145715166625 000000046.png, acc:0.881591796875, iou:0.12085055326063152, acc_cls0.140775084335062 000000047.png, acc:0.8845977783203125, iou:0.12193075116381968, acc_cls0.13955108498475677 000000048.png, acc:0.8610992431640625, iou:0.11238279829752305, acc_cls0.12585970329906584 000000049.png, acc:0.8972930908203125, iou:0.12274889202392533, acc_cls0.13700648812518762 000000050.png, acc:0.8695068359375, iou:0.11524267939015634, acc_cls0.12829112730315914 val_acc:0.8667485854204964, val_iou0.17807370025733443

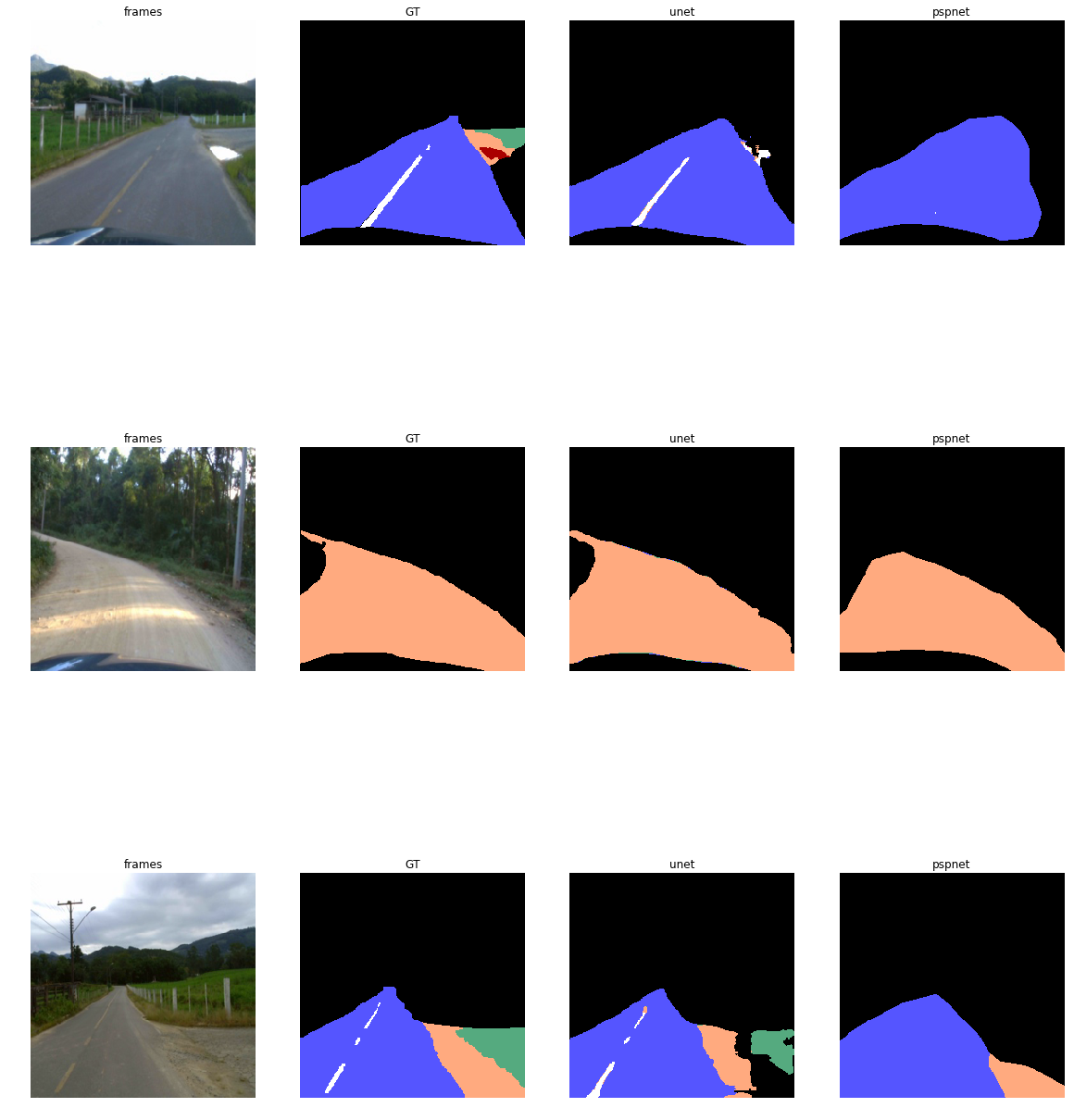

7, Prediction results and visualization

- U-Net after 200 epochs of training: ACC 87.28%, IoU 21.21%

- PSPNet after 200 epochs of training: ACC 86.09%, IoU 17.60%
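To make the two reported metrics concrete, here is a minimal sketch of how pixel accuracy, mean per-class accuracy and mean IoU can be derived from a confusion matrix. It is a generic NumPy formulation, not necessarily the exact formula that produced the numbers above. The function names and the `num_classes` parameter are assumptions made for this illustration, and predictions are assumed to lie in the range `0..num_classes-1`.

```python
import numpy as np

def confusion_matrix(pred, label, num_classes):
    """Count pixels for every (true class, predicted class) pair."""
    pred = np.asarray(pred).ravel().astype(np.int64)
    label = np.asarray(label).ravel().astype(np.int64)
    # Ignore pixels whose label falls outside the valid range
    valid = (label >= 0) & (label < num_classes)
    idx = num_classes * label[valid] + pred[valid]
    counts = np.bincount(idx, minlength=num_classes ** 2)
    return counts.reshape(num_classes, num_classes)

def segmentation_scores(cm):
    """Return (pixel accuracy, mean class accuracy, mean IoU)."""
    tp = np.diag(cm).astype(np.float64)   # correctly classified pixels per class
    gt = cm.sum(axis=1)                   # ground-truth pixels per class
    pr = cm.sum(axis=0)                   # predicted pixels per class
    acc = tp.sum() / cm.sum()
    present = gt > 0                      # classes that occur in the ground truth
    acc_cls = np.mean(tp[present] / gt[present])
    union = gt + pr - tp
    seen = union > 0                      # classes that occur in label or prediction
    miou = np.mean(tp[seen] / union[seen])
    return acc, acc_cls, miou

# Tiny hand-made example (hypothetical data, 3 classes)
label = np.array([[0, 0], [1, 1]])
pred = np.array([[0, 1], [1, 1]])
acc, acc_cls, miou = segmentation_scores(confusion_matrix(pred, label, 3))
print(acc, acc_cls, miou)
```

In this sketch, classes that appear in neither the label nor the prediction are left out of the IoU average. An implementation that averages over all classes, including absent ones, would report lower IoU values for the same predictions.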

Visual comparison between U-Net and PSPNet

# Convert the prediction results to color images
import json
import os

import cv2
import numpy as np
from PIL import Image

labels = ['Background', 'Asphalt', 'Paved', 'Unpaved',
          'Markings', 'Speed-Bump', 'Cats-Eye', 'Storm-Drain',
          'Patch', 'Water-Puddle', 'Pothole', 'Cracks']

# Convert a label mask to a color image
def mask2color(mask, labels):
    # mask2color.json maps each label name to its color
    with open(r"work/dataset/mask2color.json") as f:
        jsonfile = json.load(f)
    h, w = mask.shape[:2]
    color = np.zeros([h, w, 3])
    for index in range(len(labels)):
        # Mask value 8 is unused, so labels from 'Patch' onward are stored as index + 1
        mask_index = index + 1 if index >= 8 else index
        if mask_index in mask:
            color[np.where(mask == mask_index)] = np.asarray(jsonfile[labels[index]])
    return color

# Save the converted color images to the work/Class3/{net}_color_result folder
def save_color(net):
    save_dir = r"work/Class3/{}_color_result".format(net)
    os.makedirs(save_dir, exist_ok=True)
    # Masks are always read from val_result, whatever `net` is, so this folder
    # must hold the predictions of the matching network when the function is called
    mask_dir = r"work/Class3/val_result"
    mask_names = [f for f in os.listdir(mask_dir) if f.endswith('.png')]
    for maskname in mask_names:
        mask = cv2.imread(os.path.join(mask_dir, maskname), -1)  # -1: read the file unchanged
        color = mask2color(mask, labels)
        result = Image.fromarray(np.uint8(color))
        result.save(os.path.join(save_dir, maskname))

save_color('pspnet')  # Convert the PSPNet prediction results to color images and save them
save_color('unet')  # Convert the U-Net prediction results to color images and save them

import matplotlib.pyplot as plt
from PIL import Image

# Show three validation samples: input frame, ground truth, U-Net and PSPNet predictions
newsize = (256, 256)
sample_ids = ['000000000', '000000032', '000000041']

plt.figure(figsize=(20, 24))  # Set the figure size
for row, sample_id in enumerate(sample_ids):
    images = [
        ('frames', Image.open("work/dataset/val_frames/{}.png".format(sample_id)).resize(newsize)),
        ('GT', Image.open("work/dataset/val_colors/{}GT.png".format(sample_id)).resize(newsize)),
        ('unet', Image.open("work/Class3/unet_color_result/{}.png".format(sample_id))),
        ('pspnet', Image.open("work/Class3/pspnet_color_result/{}.png".format(sample_id))),
    ]
    for col, (title, image) in enumerate(images):
        plt.subplot(3, 4, row * 4 + col + 1)
        plt.title(title)
        plt.imshow(image)
        plt.axis('off')
plt.show()
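The per-class loop in `mask2color` can also be written as a single lookup-table operation, which may be faster when many masks are converted. The sketch below is an alternative under stated assumptions, not the method used in this tutorial: `mask_to_color_lut` and the `palette` dictionary are hypothetical names, the palette maps raw mask values (not label names) to RGB triples, and the colors shown are placeholders rather than the contents of `mask2color.json`.

```python
import numpy as np

def mask_to_color_lut(mask, palette):
    """Colorize an integer mask with one lookup-table indexing step.

    mask    : 2-D uint8 array of mask values
    palette : dict mapping mask value -> (R, G, B); hypothetical structure
    """
    lut = np.zeros((256, 3), dtype=np.uint8)  # unmapped values stay black
    for value, rgb in palette.items():
        lut[value] = rgb
    # Integer-array indexing turns the (h, w) mask into an (h, w, 3) image
    return lut[mask]

# Placeholder colors for illustration only
palette = {1: (255, 0, 0), 9: (0, 0, 255)}
mask = np.array([[0, 1], [9, 1]], dtype=np.uint8)
color = mask_to_color_lut(mask, palette)
print(color.shape)
```

Because the table is indexed by the raw mask value, the gap at value 8 needs no special handling: that row simply stays black.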