This article draws on the content of the course "Introduction to Python Programming and data science" by Mr. Hu Junfeng.

Concepts:

- Concurrency: multiple tasks make progress during the same period of time, but not necessarily at the same instant.

- Parallelism: multiple tasks make progress during the same period of time and are also executed at the same instant.

- Parallelism is a subset of concurrency. A single-core CPU that alternates between multiple tasks is concurrent but not parallel; a multi-core CPU that executes multiple tasks simultaneously is both concurrent and parallel.

When is concurrency needed?

- Multiple tasks need to be processed during the same period

- Tasks often need to wait for resources

- Multiple subtasks cooperate with each other

Computer task execution mechanism:

- The operating system kernel is responsible for suspending and waking up tasks (i.e. processes / threads) and for allocating resources (such as which memory addresses a program can access).

- A process is the smallest unit of resource allocation. Different processes do not share resources (such as the accessible memory area). A process can be subdivided into threads, which share most of its resources.

Because threads share resources while processes do not, switching between threads (i.e. suspending one and waking up another) is faster than switching between processes.

A thread is the smallest unit of scheduling and execution. This means that the operating system kernel is responsible for executing multiple threads concurrently.
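The relationship between a process and its threads can be observed directly. The following is a minimal sketch (the names records, barrier and report are made up for illustration): three threads report the process id and their own thread id, and they all append to one shared list.

```python
import os
import threading

records = []                      # shared list: every thread appends to the same object
barrier = threading.Barrier(3)    # keeps all three threads alive at the same time

def report():
    barrier.wait()                # wait until all three threads are running
    records.append((os.getpid(), threading.get_ident()))

threads = [threading.Thread(target=report) for _ in range(3)]
for t in threads:
    t.start()
for t in threads:
    t.join()

pids = {pid for pid, _ in records}
thread_ids = {tid for _, tid in records}
print(len(pids), len(thread_ids))  # one process id, three thread ids
```

All threads see the same process id and the same records list, which is what "threads share most of the resources of their process" means in practice.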

Comparison between multiprocessing and multithreading

Multiprocessing:

- Split the task into multiple processes

- It is up to the kernel to decide whether they run in parallel or merely concurrently.

- Memory is not shared between processes

- Advantages: if one process crashes, the others are not affected

- Disadvantages: switching between processes is time-consuming, and communication between processes is inconvenient

Multithreading:

- Split the task into multiple threads within one process

- It is up to the kernel to decide whether they run in parallel or merely concurrently.

- The CPython interpreter has a global interpreter lock (GIL), because of which multiple threads can only run concurrently, not in parallel (multiple processes can run in parallel).

- Memory is shared between threads

- Advantages: switching is fast and communication is convenient

- Disadvantages: a lock mechanism is needed when threads access global variables concurrently

Lock mechanism: before a thread uses a global variable, it waits for the variable's lock to be released (by other threads), acquires the lock, uses the variable, and then releases the lock.

If locks are not used: after 100 threads that each execute a+=1 have finished (initially a=0), a may be less than 100.

In data science, we may choose not to use locks in order to improve efficiency, but we must then tolerate the errors this causes.
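The wait / lock / use / unlock sequence can be sketched with threading.Lock as follows. The names counter, counter_lock and add_many are illustrative assumptions; the with statement acquires the lock on entry and releases it on exit.

```python
import threading

counter = 0
counter_lock = threading.Lock()

def add_many(times):
    global counter
    for _ in range(times):
        with counter_lock:   # acquire before touching the shared variable, release afterwards
            counter += 1

threads = [threading.Thread(target=add_many, args=(1000,)) for _ in range(100)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(counter)  # 100000
```

With the lock in place, every increment is applied, so the final value equals the number of threads times the number of increments per thread.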

Multiprocessing: mechanism and code implementation

Almost every potentially blocking call described below accepts an optional timeout parameter. This will not be mentioned again.
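As a rough illustration of such timeout parameters, the sketch below stays inside a single process and triggers three timeouts on purpose. Which calls are shown is my own selection; each one reports the timeout in its own way (a False return value or a queue.Empty exception).

```python
import queue
from multiprocessing import Lock, Pipe, Queue

# Lock.acquire(timeout=...) returns False instead of blocking forever
lock = Lock()
lock.acquire()
got_lock = lock.acquire(timeout=0.1)   # the lock is already held, so this gives up
lock.release()

# Queue.get(timeout=...) raises queue.Empty when nothing arrives in time
q = Queue()
try:
    q.get(timeout=0.1)
    queue_timed_out = False
except queue.Empty:
    queue_timed_out = True

# Connection.poll(timeout) returns False when no data arrives in time
conn_a, conn_b = Pipe()
has_data = conn_b.poll(0.1)

print(got_lock, queue_timed_out, has_data)  # False True False
```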

Basic Usage

from multiprocessing import Process
import os
import time

def task(duration, base_time):
    pid = os.getpid()
    print(f'son process id {pid} starts at {"%.6f" % (time.perf_counter()-base_time)}s with parameter {duration}')
    time.sleep(duration)
    print(f'son process id {pid} ends at {"%.6f" % (time.perf_counter()-base_time)}s')

if __name__ == '__main__':
    pid = os.getpid()
    base_time = time.perf_counter()
    print(f'main process id {pid} starts at {"%.6f" % (time.perf_counter()-base_time)}s')
    p1 = Process(target=task, args=(1,base_time)) # a process that executes task(1,base_time); currently not running
    p2 = Process(target=task, args=(2,base_time)) # a process that executes task(2,base_time); currently not running
    p1.start()
    p2.start()
    print(p1.is_alive(), p2.is_alive()) # whether they are running
    print('main process can proceed while son processes are running')
    p1.join() # wait until p1 finishes executing (the main process will pause on this command in the meantime) and kill it after it finishes
    p2.join() # wait until p2 finishes executing (the main process will pause on this command in the meantime) and kill it after it finishes
    print(f'main process id {pid} ends at {"%.6f" % (time.perf_counter()-base_time)}s')

Output:

main process id 3316 starts at 0.000001s
True True
main process can proceed while son processes are running
son process id 15640 starts at 0.002056s with parameter 1
son process id 10716 starts at 0.003030s with parameter 2
son process id 15640 ends at 1.002352s
son process id 10716 ends at 2.017861s
main process id 3316 ends at 2.114324s

If p1.join() and p2.join() are removed, the main process finishes within a short time while the child processes are still running. The output is as follows:

main process id 11564 starts at 0.000001s
True True
main process can proceed while son processes are running
main process id 11564 ends at 0.011759s
son process id 13500 starts at 0.004392s with parameter 1
son process id 11624 starts at 0.003182s with parameter 2
son process id 13500 ends at 1.009420s
son process id 11624 ends at 2.021817s

Why is an event at 0.004 seconds output before an event at 0.003 seconds?

1. Because the time consumed by the print statement itself is not included

2. Because, under Windows, the times given by perf_counter() are not completely synchronized between different processes.

It should be noted that a child process is not removed from the system as soon as it finishes running; only after it has been joined (join()) is it officially dead (i.e. removed from the system).

About if __name__ == '__main__':

- In Python, you can obtain the code in other files through import. Importing file A at the beginning of file B (the same is true for other locations) is roughly equivalent to running the code of A at the beginning of B.

- If we want some code in A (such as test statements) to be skipped when A is imported, we can wrap that code in A with if __name__ == '__main__':.

- In code imported from elsewhere, the system variable __name__ equals the name of the source file (or the module name; the difference does not matter here); in the file that is run directly, __name__ equals '__main__'.

- For certain reasons, under Windows, a file that uses multiple processes implicitly imports itself (one or more times). Putting all zero-indentation code inside if __name__ == '__main__': avoids the problem of repeated execution (if this is not done, the imported copy imports itself again, resulting in infinite recursive imports and an error).

- For the time being, this measure can be considered to completely eliminate the effects of the "implicit self-import".
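The effect of the guard can be checked without creating any process. In the sketch below, a tiny stand-in for "file A" is written to a temporary folder and executed twice with the standard runpy module, once under an ordinary module name and once as the main script. The file name module_a.py and the variable guard_ran are made up for illustration, and runpy is used here only to imitate the two situations.

```python
import os
import runpy
import tempfile

# A tiny stand-in for "file A": it records whether its guarded block ran.
source = (
    "guard_ran = False\n"
    "if __name__ == '__main__':\n"
    "    guard_ran = True\n"
)

with tempfile.TemporaryDirectory() as folder:
    path = os.path.join(folder, "module_a.py")
    with open(path, "w") as f:
        f.write(source)

    # Executed under an ordinary module name, as an import would do
    as_import = runpy.run_path(path, run_name="module_a")
    # Executed as the main script, as "python module_a.py" would do
    as_script = runpy.run_path(path, run_name="__main__")

print(as_import["__name__"], as_import["guard_ran"])   # module_a False
print(as_script["__name__"], as_script["guard_ran"])   # __main__ True
```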

Process replication

from multiprocessing import Process
import os

pid = os.getpid()

def task():
    global pid
    print(pid)
    pid = 1
    print(pid)

if __name__ == '__main__':
    p = Process(target=task)
    p.start()
    p.join()
    print(pid)

Output under Windows:

4836
1
2944

Output under Linux:

511
1
511

The first two numbers are output by the child process, and the third number is output by the parent process.

- Note that although pid is assigned 1 in the child process, it is not 1 in the parent process. This shows that modifications the child's target function makes to its running environment do not affect the running environment of the parent process. The converse also holds (the parent does not affect the child). In other words, once the running environment of the child process has been created, the running environments of the parent and the child are completely independent.

- Because of this independence, the results computed by a child process cannot be fed back to the parent process directly. Two solutions are introduced later: 1. interprocess communication; 2. the return value of the process pool's apply method.

- Note that the first and third lines of output differ under Windows but are the same under Linux. This shows that under Linux the value of the global variable pid in the child process is obtained by directly copying the value of pid in the parent process, whereas under Windows it is obtained by re-running pid = os.getpid(). More generally, there are two facts:

- Under Windows, the child process created by Process(target) starts as a blank sheet (that is, its running environment is empty). When start() is called, it first executes the entire code file of the parent process through an import (thus creating a new running environment), and then starts running the target function. Therefore, code wrapped in if __name__ == '__main__': is executed only by the parent process, while zero-indentation code that is not wrapped is also executed by every child process (in its own running environment). This is the mechanism behind the "implicit self-import" mentioned earlier.

- Under Linux, the child process created by Process(target) completely copies the running environment of the parent process instead of re-creating it. The copied running environment of the child is completely independent of that of the parent.

from multiprocessing import Process
import os

def task():
    pass

if __name__ == '__main__':
    p = Process(target=task)
    print('son process created')
    p.start()
    print('son process starts')
    p.join()
    print('son process ends')

print('gu?')

Output under Windows:

son process created
son process starts
gu?
son process ends
gu?

It can be seen that when the Windows child process (during initialization) executes the code file of the parent process, even content located after p.start() in that file, such as print('gu?'), is executed.
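The multiprocessing module calls these two behaviours start methods: "spawn" matches what is described here for Windows, and "fork" matches the Linux behaviour. The sketch below, which is an illustration and not part of the original course material, requests "spawn" explicitly through get_context, so the Windows-style behaviour can also be observed on other systems. The names pid_at_import and show are made up.

```python
import multiprocessing as mp
import os

pid_at_import = os.getpid()   # module-level code: re-run by "spawn", copied by "fork"

def show():
    # Under "spawn" the two numbers are equal, because the line above was re-run in the child
    print(pid_at_import, os.getpid())

available = mp.get_all_start_methods()   # start methods supported on this platform

if __name__ == '__main__':
    ctx = mp.get_context('spawn')        # request the Windows-style start method explicitly
    p = ctx.Process(target=show)
    p.start()
    p.join()
```

Replacing 'spawn' with 'fork' (where the platform offers it) makes the child print the parent's pid first, since the variable is then copied rather than recomputed.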

Process pool

If many tasks need to run at the same time, starting one process per task is inefficient (creating and destroying processes is expensive, the processes cannot all run in parallel, and they are hard for the kernel to schedule) and may not even be allowed by the kernel.

Solution: use a process pool. The pool contains several processes (generally not many more than the number of CPU cores). When a new task arrives, it is assigned to an idle process; if no process is idle, the task waits. The disadvantage is this waiting when no process is idle, so a pool cannot be regarded as fully concurrent.

Basic usage of process pool

from multiprocessing import Pool
import os, time

def task(duration, base_time, task_name):
    pid = os.getpid()
    print(f'son process id {pid} starts working on {task_name} at {"%.6f" % (time.perf_counter()-base_time)}s with parameter {duration}')
    time.sleep(duration)
    print(f'son process id {pid} ends working on {task_name} at {"%.6f" % (time.perf_counter()-base_time)}s')

if __name__ == '__main__':
    pid = os.getpid()
    base_time = time.perf_counter()
    print(f'main process id {pid} starts at {"%.6f" % (time.perf_counter()-base_time)}s')
    pool = Pool(3) # a pool containing 3 subprocesses
    print('start assigning tasks')
    for i in range(4):
        pool.apply_async(task, args=(1, base_time, "TaskNo."+str(i+1))) # assign task to some process in pool and start running
        # if all son processes are busy, wait until one is free and then start
    pool.close() # no longer accepting new tasks, but already applied ones (including those that are waiting) keeps running.
    print('all tasks assigned; wait for son processes to finish')
    pool.join() # wait until all tasks are done, and then the pool is dead. `join()` can be called only if `close()` has already been called
    print(f'all tasks finished at {"%.6f" % (time.perf_counter()-base_time)}s')
    print(f'main process id {pid} ends at {"%.6f" % (time.perf_counter()-base_time)}s')

Output: (the output under Win and Linux is similar)

main process id 5236 starts at 0.000002s
start assigning tasks
all tasks assigned; wait for son processes to finish
son process id 8724 starts working on TaskNo.1 at 0.030557s with parameter 1
son process id 14584 starts working on TaskNo.2 at 0.037581s with parameter 1
son process id 10028 starts working on TaskNo.3 at 0.041210s with parameter 1
son process id 14584 ends working on TaskNo.2 at 1.042662s
son process id 8724 ends working on TaskNo.1 at 1.040211s
son process id 14584 starts working on TaskNo.4 at 1.044109s with parameter 1
son process id 10028 ends working on TaskNo.3 at 1.054017s
son process id 14584 ends working on TaskNo.4 at 2.055515s
all tasks finished at 2.214534s
main process id 5236 ends at 2.214884s

When a task is added with apply_async ("asynchronous call"), the main process continues to run while the child process executes the task; when a task is added with apply ("synchronous call"), the main process pauses ("blocks") until the task is completed. Generally, use apply_async rather than apply.

Process replication in process pool

from multiprocessing import Pool
import os, time

all_tasks_on_this_son_process = []

def task(duration, base_time, task_name):
    global all_tasks_on_this_son_process
    pid = os.getpid()
    print(f'son process id {pid} starts working on {task_name} at {"%.6f" % (time.perf_counter()-base_time)}s with parameter {duration}, this process already executed',all_tasks_on_this_son_process)
    time.sleep(duration)
    print(f'son process id {pid} ends working on {task_name} at {"%.6f" % (time.perf_counter()-base_time)}s')
    all_tasks_on_this_son_process += [task_name]

if __name__ == '__main__':
    pid = os.getpid()
    base_time = time.perf_counter()
    print(f'main process id {pid} starts at {"%.6f" % (time.perf_counter()-base_time)}s')
    pool = Pool(3)
    print('start assigning tasks')
    for i in range(4):
        pool.apply_async(task, args=(1, base_time, "TaskNo."+str(i+1)))
    pool.close()
    print('all tasks assigned; wait for son processes to finish')
    pool.join()
    print(f'all tasks finished at {"%.6f" % (time.perf_counter()-base_time)}s')
    print(f'main process id {pid} ends at {"%.6f" % (time.perf_counter()-base_time)}s')

print('gu?')

Output under Windows:

main process id 6116 starts at 0.000001s
start assigning tasks
all tasks assigned; wait for son processes to finish
gu?
gu?
gu?
son process id 16028 starts working on TaskNo.1 at 0.037577s with parameter 1, this process already executed []
son process id 11696 starts working on TaskNo.2 at 0.041393s with parameter 1, this process already executed []
son process id 5400 starts working on TaskNo.3 at 0.038409s with parameter 1, this process already executed []
son process id 11696 ends working on TaskNo.2 at 1.041521s
son process id 16028 ends working on TaskNo.1 at 1.038722s
son process id 11696 starts working on TaskNo.4 at 1.042543s with parameter 1, this process already executed ['TaskNo.2']
son process id 5400 ends working on TaskNo.3 at 1.052573s
son process id 11696 ends working on TaskNo.4 at 2.053483s
all tasks finished at 2.167447s
main process id 6116 ends at 2.167904s
gu?

Under Windows, each process in the pool runs the code of the parent process once to build its running environment when it starts up, before executing its first task (and only then). Changes that a task makes to the running environment of a process are visible to the next task run by that process (that is, when accepting a new task, the process simply continues to use the running environment left by the previous task).

Output under Linux:

main process id 691 starts at 0.000001s
all tasks assigned; wait for son processes to finish
son process id 692 starts working on TaskNo.1 at 0.104757s with parameter 1, this process already executed []
son process id 693 starts working on TaskNo.2 at 0.104879s with parameter 1, this process already executed []
son process id 694 starts working on TaskNo.3 at 0.105440s with parameter 1, this process already executed []
son process id 692 ends working on TaskNo.1 at 1.106427s
son process id 693 ends working on TaskNo.2 at 1.106426s
son process id 694 ends working on TaskNo.3 at 1.107157s
son process id 692 starts working on TaskNo.4 at 1.107560s with parameter 1, this process already executed ['TaskNo.1']
son process id 692 ends working on TaskNo.4 at 2.110033s
all tasks finished at 2.117158s
main process id 691 ends at 2.117452s
gu?

Under Linux, each process in the pool completely copies the running environment of the parent process when it is created, before executing its first task (and only then). Changes that a task makes to the running environment of a process are visible to the next task run by that process (that is, when accepting a new task, the process simply continues to use the running environment left by the previous task).

from multiprocessing import Pool
import os, time

all_tasks_on_this_son_process = []

def init(init_name):
    global all_tasks_on_this_son_process
    all_tasks_on_this_son_process += [init_name]

def task(duration, base_time, task_name):
    global all_tasks_on_this_son_process
    pid = os.getpid()
    print(f'son process id {pid} starts working on {task_name} at {"%.6f" % (time.perf_counter()-base_time)}s with parameter {duration}, this process already executed',all_tasks_on_this_son_process)
    time.sleep(duration)
    print(f'son process id {pid} ends working on {task_name} at {"%.6f" % (time.perf_counter()-base_time)}s')
    all_tasks_on_this_son_process += [task_name]

if __name__ == '__main__':
    pid = os.getpid()
    base_time = time.perf_counter()
    print(f'main process id {pid} starts at {"%.6f" % (time.perf_counter()-base_time)}s')
    pool = Pool(3, initializer=init, initargs=('init',)) # look here
    print('start assigning tasks')
    for i in range(4):
        pool.apply_async(task, args=(1, base_time, "TaskNo."+str(i+1)))
    pool.close()
    print('all tasks assigned; wait for son processes to finish')
    pool.join()
    print(f'all tasks finished at {"%.6f" % (time.perf_counter()-base_time)}s')
    print(f'main process id {pid} ends at {"%.6f" % (time.perf_counter()-base_time)}s')

Output (similar under Windows and Linux):

main process id 18416 starts at 0.000004s
start assigning tasks
all tasks assigned; wait for son processes to finish
son process id 10052 starts working on TaskNo.1 at 0.053483s with parameter 1, this process already executed ['init']
son process id 17548 starts working on TaskNo.2 at 0.040412s with parameter 1, this process already executed ['init']
son process id 10124 starts working on TaskNo.3 at 0.049992s with parameter 1, this process already executed ['init']
son process id 10124 ends working on TaskNo.3 at 1.054387s
son process id 17548 ends working on TaskNo.2 at 1.044956s
son process id 10052 ends working on TaskNo.1 at 1.062396s
son process id 10124 starts working on TaskNo.4 at 1.055888s with parameter 1, this process already executed ['init', 'TaskNo.3']
son process id 10124 ends working on TaskNo.4 at 2.060094s
all tasks finished at 2.443017s
main process id 18416 ends at 2.444705s

Using the return values of child processes in the process pool

from multiprocessing import Pool
import time

def task(duration, base_time, task_name):
    time.sleep(duration)
    return f'{task_name} finished at {"%.6f" % (time.perf_counter()-base_time)}s'

if __name__ == '__main__':
    base_time = time.perf_counter()
    pool = Pool(2)
    return_values = []
    return_values.append(pool.apply(task, args=(1,base_time,'TaskNo.1_sync')))
    print('at time {}, r_v is {}'.format(time.perf_counter() - base_time, return_values))
    return_values.append(pool.apply_async(task, args=(2,base_time,'TaskNo.2_async')))
    print('at time {}, r_v is {}'.format(time.perf_counter() - base_time, return_values))
    pool.close()
    pool.join()
    print(f'all tasks finished at {"%.6f" % (time.perf_counter()-base_time)}s')
    assert return_values[1].ready() == True
    return_values[1] = return_values[1].get() # from ApplyResult to true return value
    print('results:', return_values)

Output:

at time 1.2109459, r_v is ['TaskNo.1_sync finished at 1.027223s']
at time 1.2124976, r_v is ['TaskNo.1_sync finished at 1.027223s', <multiprocessing.pool.ApplyResult object at 0x0000016D24D79AC0>]
all tasks finished at 3.258190s
results: ['TaskNo.1_sync finished at 1.027223s', 'TaskNo.2_async finished at 3.041053s']

Here result.get() is called after pool.join(), so the return value of the function executed by the child process is available immediately. If result.get() is called before the corresponding child process has returned, the main process blocks until the child process returns and then obtains the return value. result.ready() returns a bool indicating whether the corresponding child process has returned.

In addition, result.wait() blocks until the child process returns, but does not fetch the return value.

An ApplyResult instance can call get() multiple times, that is, the return value can be fetched multiple times.
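The behaviour of ready(), wait() and get() can be tried out in a few lines. The sketch below makes one simplifying assumption: it uses multiprocessing.pool.ThreadPool instead of Pool. ThreadPool offers the same apply_async interface and the same kind of result object, but runs tasks in threads, which keeps the example short. slow_square is a hypothetical task.

```python
import time
from multiprocessing import TimeoutError
from multiprocessing.pool import ThreadPool

def slow_square(x, delay):
    time.sleep(delay)
    return x * x

with ThreadPool(2) as pool:
    result = pool.apply_async(slow_square, args=(7, 0.2))

    try:
        result.get(timeout=0.01)      # the task needs 0.2 s, so this times out
        timed_out = False
    except TimeoutError:
        timed_out = True

    result.wait()                     # block until the task has returned; no value is fetched
    ready_after_wait = result.ready()
    first = result.get()              # returns immediately now
    second = result.get()             # get() may be called again and gives the same value

print(timed_out, ready_after_wait, first, second)  # True True 49 49
```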

Interprocess communication

Any object transferred across processes can be regarded as being deep-copied during the transfer.

Pipe

from multiprocessing import Process, Pipe
import time

def send_through_pipe(conn, pipe_name, sender_name, content, base_time):
    print(sender_name, 'tries to send', content, 'through', pipe_name, 'at', '%.6f'%(time.perf_counter()-base_time))
    conn.send(content)
    print(sender_name, 'successfully finishes sending at', '%.6f'%(time.perf_counter()-base_time))

def receive_from_pipe(conn, pipe_name, receiver_name, base_time):
    print(receiver_name, 'tries to receive content from', pipe_name, 'at', '%.6f'%(time.perf_counter()-base_time))
    content = conn.recv()
    print(receiver_name, 'successfully receives', content, 'at', '%.6f'%(time.perf_counter()-base_time))
    return content

def task(conn, pipe_name, process_name, base_time):
    receive_from_pipe(conn, pipe_name, process_name, base_time)
    time.sleep(1)
    send_through_pipe(conn, pipe_name, process_name, 142857, base_time)

if __name__ == '__main__':
    base_time = time.perf_counter()
    conn_A, conn_B = Pipe() # two endpoints of the pipe
    p1 = Process(target=task, args=(conn_B,'pipe','son',base_time))
    p1.start()
    time.sleep(1)
    send_through_pipe(conn_A, 'pipe', 'main', ['hello','hello','hi'], base_time) # any object can be sent
    receive_from_pipe(conn_A, 'pipe', 'main', base_time)
    p1.join()

Output:

son tries to receive content from pipe at 0.036439
main tries to send ['hello', 'hello', 'hi'] through pipe at 1.035570
main successfully finishes sending at 1.037174
main tries to receive content from pipe at 1.037318
son successfully receives ['hello', 'hello', 'hi'] at 1.037794
son tries to send 142857 through pipe at 2.039058
son successfully finishes sending at 2.040158
main successfully receives 142857 at 2.040441

In addition, conn.poll() (which returns a bool) tells whether conn holds unread data sent from the opposite end.
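poll() and the copying of transferred objects can both be observed with a single Pipe. The sketch below keeps both endpoints in one process purely for brevity (an assumption of this example; normally each endpoint belongs to a different process).

```python
from multiprocessing import Pipe

conn_a, conn_b = Pipe()

empty_before = conn_b.poll()        # nothing has been sent yet

message = ['hello', 'hi']
conn_a.send(message)                # the list is serialized at this point
message.append('added later')       # changing the original afterwards does not affect the copy

ready_after = conn_b.poll(1.0)      # waits up to 1 s, returns as soon as data is available
received = conn_b.recv()

print(empty_before, ready_after)    # False True
print(received)                     # ['hello', 'hi']
print(received is message)          # False
```

The received list is a separate object holding the contents as they were at the moment of send(), which is the "deep copy" behaviour in practice.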

from multiprocessing import Process, Pipe
import time

def send_through_pipe(conn, pipe_name, sender_name, content, base_time):
    print(sender_name, 'tries to send', content, 'through', pipe_name, 'at', '%.6f'%(time.perf_counter()-base_time))
    conn.send(content)
    print(sender_name, 'successfully finishes sending at', '%.6f'%(time.perf_counter()-base_time))

def receive_from_pipe(conn, pipe_name, receiver_name, base_time):
    print(receiver_name, 'tries to receive content from', pipe_name, 'at', '%.6f'%(time.perf_counter()-base_time))
    content = conn.recv()
    print(receiver_name, 'successfully receives', content, 'at', '%.6f'%(time.perf_counter()-base_time))
    return content

def task1(conn, pipe_name, process_name, base_time):
    receive_from_pipe(conn, pipe_name, process_name, base_time)
    time.sleep(1)
    send_through_pipe(conn, pipe_name, process_name, 'greetings from ' + process_name, base_time)

def task2(conn, pipe_name, process_name, base_time):
    time.sleep(1)
    send_through_pipe(conn, pipe_name, process_name, 'greetings from ' + process_name, base_time)
    time.sleep(2)
    receive_from_pipe(conn, pipe_name, process_name, base_time)

if __name__ == '__main__':
    base_time = time.perf_counter()
    conn_A, conn_B = Pipe()
    p1 = Process(target=task1, args=(conn_A,'pipe','son1',base_time))
    p2 = Process(target=task2, args=(conn_B,'pipe','son2',base_time))
    p1.start()
    p2.start()
    p1.join()
    p2.join()

Output:

son1 tries to receive content from pipe at 0.033372
son2 tries to send greetings from son2 through pipe at 1.058998
son2 successfully finishes sending at 1.060660
son1 successfully receives greetings from son2 at 1.061171
son1 tries to send greetings from son1 through pipe at 2.062389
son1 successfully finishes sending at 2.063290
son2 tries to receive content from pipe at 3.061378
son2 successfully receives greetings from son1 at 3.061843

It can be seen that:

- A Pipe can temporarily store data, and the stored data follows FIFO order. However, the area a Pipe uses to store data temporarily is relatively small (the specific size depends on the OS). If this area is full, send() blocks until recv() on the opposite end makes room.

- The two endpoints of a Pipe can be given to any two processes.

- Assigning the same endpoint to multiple processes is not recommended, as it may be risky; if this is necessary, use a Queue.

Queue

A Queue is essentially a queue that can be used across processes.

An operation on a Queue takes about twice as long as the corresponding operation on a Pipe.

from multiprocessing import Process, Queue
import time

def put_into_queue(q, queue_name, putter_name, content, base_time):
    print(putter_name, 'tries to put', content, 'into', queue_name, 'at', '%.6f'%(time.perf_counter()-base_time))
    q.put(content)
    print(putter_name, 'successfully finishes putting at', '%.6f'%(time.perf_counter()-base_time))

def get_from_queue(q, queue_name, getter_name, base_time):
    print(getter_name, 'tries to receive content from', queue_name, 'at', '%.6f'%(time.perf_counter()-base_time))
    content = q.get()
    print(getter_name, 'successfully gets', content, 'at', '%.6f'%(time.perf_counter()-base_time))
    return content

def task1(q, delay, queue_name, process_name, base_time):
    time.sleep(delay)
    put_into_queue(q, queue_name, process_name, 'christmas card from ' + process_name, base_time)
    time.sleep(5)
    get_from_queue(q, queue_name, process_name, base_time)

def task2(q, delay, queue_name, process_name, base_time):
    time.sleep(delay)
    get_from_queue(q, queue_name, process_name, base_time)
    time.sleep(5)
    put_into_queue(q, queue_name, process_name, 'christmas card from ' + process_name, base_time)

if __name__ == '__main__':
    base_time = time.perf_counter()
    q = Queue()
    put_and_get_1 = Process(target=task1, args=(q,0,'queue','putAndGet_No.1',base_time))
    get_and_put_1 = Process(target=task2, args=(q,1,'queue','getAndPut_No.1',base_time))
    get_and_put_2 = Process(target=task2, args=(q,2,'queue','getAndPut_No.2',base_time))
    put_and_get_1.start()
    get_and_put_1.start()
    get_and_put_2.start()
    put_and_get_1.join()
    get_and_put_1.join()
    get_and_put_2.join()

Output:

putAndGet_No.1 tries to put christmas card from putAndGet_No.1 into queue at 0.077883
putAndGet_No.1 successfully finishes putting at 0.079291
getAndPut_No.1 tries to receive content from queue at 1.104196
getAndPut_No.1 successfully gets christmas card from putAndGet_No.1 at 1.105489
getAndPut_No.2 tries to receive content from queue at 2.126434
putAndGet_No.1 tries to receive content from queue at 5.081044
getAndPut_No.1 tries to put christmas card from getAndPut_No.1 into queue at 6.106381
getAndPut_No.1 successfully finishes putting at 6.107820
getAndPut_No.2 successfully gets christmas card from getAndPut_No.1 at 6.108565
getAndPut_No.2 tries to put christmas card from getAndPut_No.2 into queue at 11.109579
getAndPut_No.2 successfully finishes putting at 11.112493
putAndGet_No.1 successfully gets christmas card from getAndPut_No.2 at 11.113546

In addition, if the Queue grows so large that it reaches its upper limit, put() also blocks. However, it should be hard for a queue to get that big.
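The blocking of put() is easiest to observe when the upper limit is set explicitly through the maxsize argument of Queue. The following sketch uses a limit of 1 and a timeout so that the blocked put() gives up instead of waiting forever; it runs in a single process for brevity.

```python
import queue
from multiprocessing import Queue

q = Queue(maxsize=1)     # maxsize is an explicit upper limit on the number of items
q.put('first')           # fits

try:
    q.put('second', timeout=0.1)   # the queue is full; without the timeout this would block
    second_put_failed = False
except queue.Full:
    second_put_failed = True

first_item = q.get(timeout=1)      # taking an item out makes room again
q.put('second', timeout=1)
second_item = q.get(timeout=1)

print(second_put_failed, first_item, second_item)  # True first second
```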

Multithreading

The basic syntax is similar to multiprocessing, but there are important differences in mechanism. Due to the existence of global interpreter lock, Python multithreading is not practical. Here is only a brief introduction.

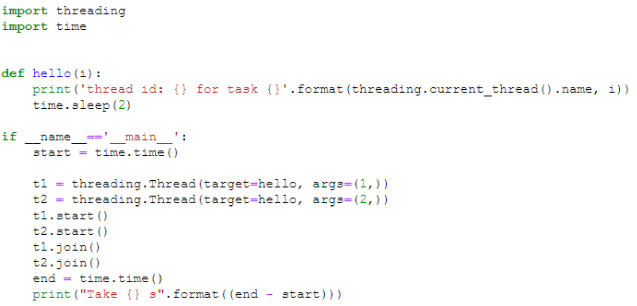

As can be seen from the figure below, the basic code of multithreading is completely consistent with that of multiprocessing. The code in the following figure will run for about 3s in the CPython interpreter.

In addition, this if is not required in multithreading__ name__ == '__ main__': Your judgment.
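For readers without access to the figure, here is a small sketch in the same spirit. It is not the code from the figure; the task function and the durations are assumptions chosen to keep the run short. Two threads sleep at the same time, so the total running time is close to the longer sleep rather than to the sum of both.

```python
import threading
import time

def task(duration, finished):
    time.sleep(duration)          # sleeping releases the GIL, so the other thread can run
    finished.append(duration)

finished = []
start = time.perf_counter()
t1 = threading.Thread(target=task, args=(0.2, finished))
t2 = threading.Thread(target=task, args=(0.4, finished))
t1.start()
t2.start()
t1.join()
t2.join()
elapsed = time.perf_counter() - start

print(finished)   # [0.2, 0.4]
```

Apart from the import and the class name Thread, the start() / join() calls are the same as for Process.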

Variable mechanism of multithreading

import threading

lock_n = threading.Lock()
n = 0

def inc_n(m):
    global n
    lock_n.acquire(blocking=True)
    n += m
    lock_n.release()

threads = [threading.Thread(target=inc_n, args=(i,)) for i in range(1,11)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(n)

Output:

55

- As can be seen from the above, different threads share the running environment (such as the variable n above).

- lock.acquire(blocking=True) blocks until the lock is free; once it is free, the calling thread acquires (locks) it.
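For comparison, the sketch below shows the non-blocking form acquire(blocking=False), which returns at once with True or False, and the with statement, which pairs acquire() and release() automatically. The variable names are illustrative only.

```python
import threading

lock = threading.Lock()

first = lock.acquire(blocking=False)    # the lock is free, so it is taken: True
second = lock.acquire(blocking=False)   # already held, returns immediately: False
held = lock.locked()                    # True
lock.release()

with lock:                              # acquire() on entry, release() on exit
    inside = lock.locked()              # True
after = lock.locked()                   # False

print(first, second, held, inside, after)  # True False True True False
```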

Coroutines

Different coroutines are executed alternately within the same thread. While a coroutine is running it has exclusive use of the resources; after running for a while it suspends itself and waits to be woken up by external controlling code (such as the main function).

Compared with multithreading, coroutines have the advantages of being lightweight (they are implemented at the interpreter level, without kernel-level switching) and of being practically unlimited in number.

Like threads, different coroutines share a common running environment.

Using a simple generator to implement a coroutine

def running_sum(init):  # renamed from sum to avoid shadowing the built-in
    s = init
    while True:
        delta = yield s # output s, and then input delta
        s += delta

g = running_sum(0)
print(next(g)) # run until receiving the first output
print(g.send(1)) # send the first input, and then get the second output
print(g.send(2)) # send the second input, and then get the third output

Output:

0
1
3

The above example only demonstrates how a generator suspends itself and how it interacts with its caller.

Going further, you can define multiple generators that carry out different procedures and schedule them in the main function (for example, by implementing a task queue), thereby implementing coroutines.
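One possible shape of such a scheduler is sketched below: a round-robin task queue built on collections.deque. The names countdown and run_all are hypothetical, and this is only one of many ways to schedule generators.

```python
from collections import deque

def countdown(name, n, log):
    while n > 0:
        log.append(f'{name}:{n}')
        n -= 1
        yield                 # suspend and hand control back to the scheduler

def run_all(generators):
    tasks = deque(generators)         # the task queue
    while tasks:
        task = tasks.popleft()
        try:
            next(task)                # resume the generator until its next yield
        except StopIteration:
            continue                  # this generator has finished, drop it
        tasks.append(task)            # otherwise put it at the back of the queue

log = []
run_all([countdown('A', 2, log), countdown('B', 3, log)])
print(log)  # ['A:2', 'B:3', 'A:1', 'B:2', 'B:1']
```

The two generators take turns at every yield, all within a single thread.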

Using a callback function to turn an ordinary function into a coroutine

def calc(n, callback):
    r = 0
    for i in range(n):
        r += i
        yield from callback()  # delegate to the callback so that its yield suspends calc
    return r

def pause():
    print('pause')
    yield # just pause, do not return anything

g = calc(10, pause)

Implementing coroutines with async/await

Advantages over the generator implementation: while waiting for IO / network communication, it can suspend the current coroutine and execute other coroutines (without interrupting the pending IO / communication), thereby saving time (which cannot be achieved with generators alone); it is also more flexible and convenient to use.

- Multithreading actually has the former advantage as well. Therefore, multithreading under CPython is not useless, but what it is useful for is a subset of what coroutines are useful for.

- One note: to speed up IO with coroutines, you must use an asynchronous IO library in Python.

Basic use

import time

start = time.perf_counter()

def sayhi(delay):
    time.sleep(delay)
    print(f'hi! at {time.perf_counter() - start}')

def main():
    sayhi(1)
    sayhi(2)

main()

Output:

hi! at 1.0040732999914326
hi! at 3.015253899997333

import time
import asyncio

start = time.perf_counter()

async def sayhi(delay):
    await asyncio.sleep(delay)
    print(f'hi! at {time.perf_counter() - start}')

async def main():
    sayhi1 = asyncio.create_task(sayhi(1))
    sayhi2 = asyncio.create_task(sayhi(2))
    await sayhi1
    await sayhi2

asyncio.run(main()) # use outside IPython
# await main() # use inside IPython instead

Output:

hi! at 1.0037910000100965
hi! at 2.0026504999987083

In the above program:

- async declares that the current function is a coroutine. This declaration makes it possible to use create_task and await in the function body, and also allows the function itself to be passed to create_task and await.

Once a function f is declared as a coroutine, calling f() no longer runs f; it only creates a coroutine object and returns it (this object does not run automatically). You need the tools in asyncio to run this object.

- asyncio.run(main()) starts the execution of the coroutine main(). main() must be a "main coroutine", that is, the entry point of all coroutines in the whole program (similar to the main function). asyncio.run(main()) should be called only once in a program, and should not be used to run any coroutine other than main().

- The concurrency of the coroutines started by asyncio.run(main()) appears only inside main(). From the outside, it is an ordinary black-box call.

- The job of asyncio.run() is to start the environment required to run coroutines (and to close it after main() has finished). IPython, however, starts this environment automatically, so there you can use await directly (and you do not have to put all the coroutines under a single main one; you can spread them throughout the program).

- create_task(sayhi(1)) creates a task for the coroutine sayhi(1) in a "task pool" and schedules it for execution. The return value is a handle to the task, a kind of "remote control".

- Tasks in the task pool execute concurrently. The points at which a task can be suspended so that another task can run are specified by await.

- await sayhi1 has two meanings:

  - Block the current coroutine (the one containing the statement, here main()) until the task sayhi1 is completed (similar to Process.join()).

  - Tell the interpreter that the current coroutine is now blocked, so it can switch to another coroutine.

If the operand of await here were not sayhi1 but, for example, an operation that accepts an HTTP request, the waiting for the request would not be affected after the interpreter switches coroutines. This is the power of await.

This is reflected in await asyncio.sleep(delay): asyncio.sleep() has the property that switching coroutines does not affect the waiting.
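The points above about coroutine objects and task scheduling can be observed with a small sketch that records the order of events. It is written as a plain script (hence asyncio.run()); the names child and events are hypothetical.

```python
import asyncio

events = []

async def child(name):
    events.append(f'{name} started')
    await asyncio.sleep(0)      # a switch point: lets other tasks run
    events.append(f'{name} finished')

async def main():
    coro = child('A')           # only creates a coroutine object; nothing runs yet
    events.append(f'is coroutine object: {asyncio.iscoroutine(coro)}')
    task = asyncio.create_task(coro)  # scheduled, but it has not started yet
    events.append('task created')
    await task                  # main() is suspended here, so the task can run
    events.append('main resumed')

asyncio.run(main())
print(events)
# ['is coroutine object: True', 'task created', 'A started', 'A finished', 'main resumed']
```

Note that 'task created' is recorded before 'A started': the task only begins to run once main() reaches an await and gives up control.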

- A few things about await:

1. The operand of await does not have to be a created task; it can also be a coroutine object (such as await sayhi(1)). In that case it is not added to the task pool but starts executing directly (of course, control may be switched to another coroutine as soon as it reaches an await of its own), and the current coroutine is blocked until it completes. As a result, sayhi(1) cannot take part in concurrency as a task on an equal footing with other tasks, but it still takes part indirectly, through the suspension and resumption of its parent coroutine (here main()).

2. Not only coroutine objects and task handles but any awaitable object can be awaited, that is, any object that implements the __await__ method. This method tells the interpreter how to suspend and switch coroutines once the object starts executing, without affecting any waiting or other operations that may be in progress inside it.

3. An await statement can only suspend and switch coroutines right where its operand starts executing. Any other points at which execution can be suspended are determined by the await statements used inside the operand, not by the await statement that invokes it.

import time
import asyncio

start = time.perf_counter()

async def sayhi(delay):
    await asyncio.sleep(delay)
    print(f'hi! at {time.perf_counter() - start}')

async def main():
    await sayhi(1)
    await sayhi(2)

# asyncio.run(main()) # use outside IPython
await main() # use inside IPython

hi! at 1.0072715999995125
hi! at 3.0168006000021705
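As a sketch of the point that any object implementing __await__ can be awaited, here is a minimal custom awaitable. The class Pause is hypothetical and simply delegates to asyncio.sleep(); the script form uses asyncio.run() and is meant to be run outside IPython.

```python
import asyncio

class Pause:
    """Hypothetical awaitable: awaiting it waits `delay` seconds, then gives `value`."""

    def __init__(self, delay, value):
        self.delay = delay
        self.value = value

    def __await__(self):
        # __await__ must return an iterator. Delegating to the __await__()
        # of an existing awaitable is a simple way to obtain one.
        yield from asyncio.sleep(self.delay).__await__()
        return self.value       # becomes the value of the await expression

async def main():
    return await Pause(0.1, 'done')

result = asyncio.run(main())
print(result)  # done
```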

wait_for()

Change await A (A is arbitrary) to await asyncio.wait_for(A, timeout) to give the await operation a timeout in seconds. If the await operation has not finished after that many seconds, the execution of A is interrupted and asyncio.TimeoutError is thrown.

Don't worry about what wait_for is; you only need to remember the statement await asyncio.wait_for(A, timeout). Apart from the timeout, it can be considered no different from await A. Here is an example.

import time
import asyncio

async def eternity():
    await asyncio.sleep(3600)
    print('yay!')

async def main():
    try:
        await asyncio.wait_for(eternity(), timeout=1.0)
    except asyncio.TimeoutError:
        print('timeout!')

# asyncio.run(main()) # use outside IPython
await main() # use inside IPython

timeout!

import time
import asyncio

start = time.perf_counter()

async def sayhi(delay):
    await asyncio.sleep(delay)
    print(f'hi! at {time.perf_counter() - start}')

async def main():
    sayhi1 = asyncio.create_task(sayhi(1))
    sayhi2 = asyncio.create_task(sayhi(2))
    await asyncio.wait_for(sayhi1,1.05)
    await asyncio.wait_for(sayhi2,1.05)

# asyncio.run(main()) # use outside IPython
await main() # use inside IPython

hi! at 1.0181081000046106
hi! at 2.0045300999918254

import time
import asyncio

start = time.perf_counter()

async def sayhi(delay):
    await asyncio.sleep(delay)
    print(f'hi! at {time.perf_counter() - start}')

async def main():
    sayhi1 = asyncio.create_task(sayhi(1))
    sayhi2 = asyncio.create_task(sayhi(2))
    await asyncio.wait_for(sayhi1,0.95)
    await asyncio.wait_for(sayhi2,1.05)

# asyncio.run(main()) # use outside IPython
await main() # use inside IPython

---------------------------------------------------------------------------
TimeoutError                              Traceback (most recent call last)
<ipython-input-89-7f639d54114e> in <module>
     15
     16 # asyncio.run(main()) # use outside IPython
---> 17 await main() # use inside IPython

<ipython-input-89-7f639d54114e> in main()
     11     sayhi1 = asyncio.create_task(sayhi(1))
     12     sayhi2 = asyncio.create_task(sayhi(2))
---> 13     await asyncio.wait_for(sayhi1,0.95)
     14     await asyncio.wait_for(sayhi2,1.05)
     15

~\anaconda3\lib\asyncio\tasks.py in wait_for(fut, timeout, loop)
    488             # See https://bugs.python.org/issue32751
    489             await _cancel_and_wait(fut, loop=loop)
--> 490             raise exceptions.TimeoutError()
    491         finally:
    492             timeout_handle.cancel()

TimeoutError:

hi! at 2.0194762000028277

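In addition, notice that even though waiting for sayhi1 timed out and the exception propagated out of main(), the task sayhi2 still ran to completion and printed its message. This works here because the event loop in IPython keeps running after main() fails; under asyncio.run(), the remaining tasks would be cancelled when main() exits. It can be seen that different tasks are independent of one another to a certain degree.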
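If the parent coroutine should keep going after a timeout, the exception can be caught inside it. The following is a minimal sketch with shortened delays, written as a plain script (hence asyncio.run()); the variable names are illustrative.

```python
import asyncio

async def sayhi(delay):
    await asyncio.sleep(delay)
    return f'hi after {delay}'

async def main():
    slow = asyncio.create_task(sayhi(0.5))
    other = asyncio.create_task(sayhi(0.2))
    try:
        await asyncio.wait_for(slow, timeout=0.1)
    except asyncio.TimeoutError:
        timed_out = True          # wait_for has cancelled `slow` by now
    else:
        timed_out = False
    other_result = await other    # the other task is not affected
    return timed_out, slow.cancelled(), other_result

outcome = asyncio.run(main())
print(outcome)  # (True, True, 'hi after 0.2')
```

The timed-out task is cancelled by wait_for, while the other task finishes normally and main() continues past the timeout.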

Implementing producer-consumer cooperation

To do this, you need to use asyncio.Queue. It differs from an ordinary queue in that its put/get operations wait when they cannot be carried out immediately (much like multiprocessing.Queue), and in that these operations are coroutines (note that calling them therefore only returns a coroutine object without actually executing anything), so they can be awaited.

import time
import asyncio

start = time.perf_counter()

async def producer(q):
    for i in range(5):
        await asyncio.sleep(1) # producing takes 1 sec
        await q.put(i) # will wait if q is full
        print(f'put {i} at {time.perf_counter() - start}')
    await q.join() # will wait until all objects produced are **taken out** and **consumed**.

async def consumer(q):
    for i in range(5):
        item = await q.get() # will wait if q is empty. BTW we see that "await XXX" is an expression not a command.
        print(f'get {item} at {time.perf_counter() - start}')
        await asyncio.sleep(1) # consuming takes 1 sec
        q.task_done() # tells the queue that [the object just taken out] has been consumed. just taking out is not enough!
        print(f'consumed {item} at {time.perf_counter() - start}')

async def main():
    q = asyncio.Queue()
    P = asyncio.create_task(producer(q))
    C = asyncio.create_task(consumer(q))
    await P
    await C
    print(f'done at {time.perf_counter() - start}')

# asyncio.run(main()) # use outside IPython
await main() # use inside IPython

put 0 at 1.0108397000003606
get 0 at 1.0112231999955839
put 1 at 2.017216499996721
consumed 0 at 2.0176210000063293
get 1 at 2.0177472999930615
put 2 at 3.0279211000015493
consumed 1 at 3.0283254999958444
get 2 at 3.028457599997637
put 3 at 4.039952199993422
consumed 2 at 4.041183299996192
get 3 at 4.041302300000098
put 4 at 5.0465819999953965
consumed 3 at 5.04690839999239
get 4 at 5.047016099997563
consumed 4 at 6.047789799995371
done at 6.048323099996196
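The queue in the example above is unbounded, so q.put() never actually has to wait. A minimal sketch of the "queue is full" case, assuming a queue created with maxsize=1 (the log list is only there to record what happened, and the script form uses asyncio.run()):

```python
import asyncio

async def producer(q, log):
    for i in range(3):
        await q.put(i)            # suspends while the queue is full
        log.append(f'put {i}')

async def consumer(q, log):
    for _ in range(3):
        item = await q.get()      # suspends while the queue is empty
        log.append(f'got {item}')
        q.task_done()

async def main():
    log = []
    q = asyncio.Queue(maxsize=1)  # at most one item may sit in the queue
    P = asyncio.create_task(producer(q, log))
    C = asyncio.create_task(consumer(q, log))
    await P
    await C
    return log

log = asyncio.run(main())
print(log)
```

Because the queue holds only one item, the producer cannot run ahead of the consumer: each put has to wait until the previous item has been taken out.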

import time
import asyncio

start = time.perf_counter()

async def sleep_and_put(q):
    await asyncio.sleep(1)
    await q.put(1)

async def main():
    q = asyncio.Queue()
    C = asyncio.create_task(q.get())
    P = asyncio.create_task(sleep_and_put(q))
    await C
    await P
    print(f'finished at {time.perf_counter() - start}')

# asyncio.run(main()) # use outside IPython
await main() # use inside IPython

finished at 1.01112650000141

As the above example shows, Queue.get() (like Queue.put() and Queue.join()) is a coroutine, so you can also create tasks from it to run it concurrently.
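Since q.get() returns an ordinary coroutine object, it can also be combined with wait_for() like any other awaitable. A minimal sketch, in which the helper get_or_default is hypothetical and the script is run with asyncio.run() outside IPython:

```python
import asyncio

async def get_or_default(q, timeout, default=None):
    """Hypothetical helper: wait up to `timeout` seconds for an item."""
    try:
        return await asyncio.wait_for(q.get(), timeout)
    except asyncio.TimeoutError:
        return default

async def main():
    q = asyncio.Queue()
    first = await get_or_default(q, 0.1, default='nothing')  # queue is empty
    await q.put(42)
    second = await get_or_default(q, 0.1)                    # item is available
    return first, second

results = asyncio.run(main())
print(results)  # ('nothing', 42)
```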