Preface

Hello, everyone. I'm Sea Monster.

Recently, one of my projects needed to record audio on a web page. After some searching, I found the react-media-recorder library. Today, let's study its source code and build audio recording, video recording, and screen recording for React from scratch.

The complete project code is on GitHub.

Requirements and ideas

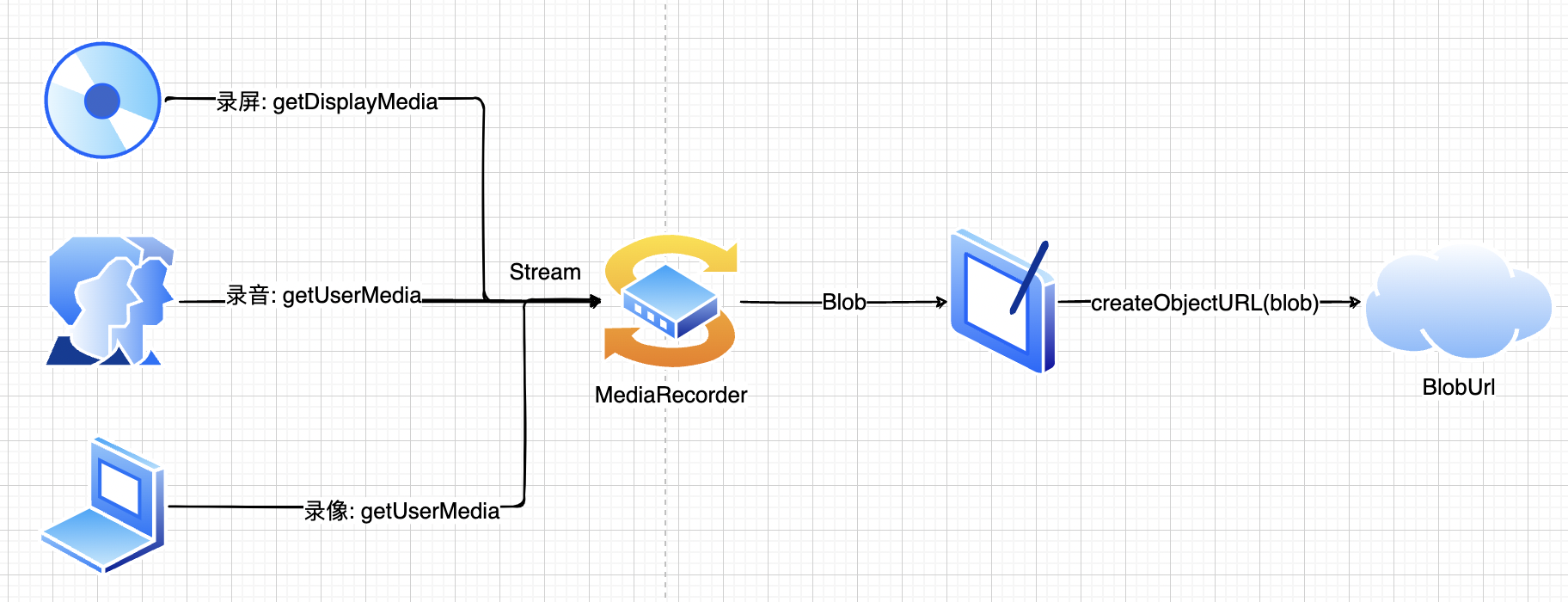

First, let's clarify what we want to build: audio recording, video recording, and screen recording.

The principle behind recording a media stream is actually very simple.

Just remember: store the data from the input stream in a list of blobs (blobList), then generate a blob URL (blobUrl) from that list for preview.
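The idea can be tried out without any recording hardware. The sketch below uses a hypothetical ChunkCollector class (my own name, not something from react-media-recorder) to show the three steps: collect chunks, merge them into one Blob, and create a preview URL. It assumes an environment where Blob and URL.createObjectURL are available, such as a browser.

```typescript
// Hypothetical helper that mirrors the "stream -> blobList -> blobUrl" idea.
class ChunkCollector {
  private chunks: Blob[] = [];

  // Would be called from MediaRecorder's `ondataavailable` handler.
  push(chunk: Blob): void {
    // Skip empty chunks so they do not pile up in the list.
    if (chunk.size > 0) this.chunks.push(chunk);
  }

  // Would be called from `onstop`: merge every chunk into a single Blob.
  toBlob(type: string): Blob {
    return new Blob(this.chunks, { type });
  }

  // Create a URL that an <audio> or <video> element can use as `src`.
  toUrl(type: string): string {
    return URL.createObjectURL(this.toBlob(type));
  }

  // Forget the collected chunks before the next recording.
  reset(): void {
    this.chunks = [];
  }
}
```

In the real flow, the chunks come from the recorder's ondataavailable events instead of being created by hand.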

Basic functions

With this simple idea in mind, we can start with a basic audio recording feature.

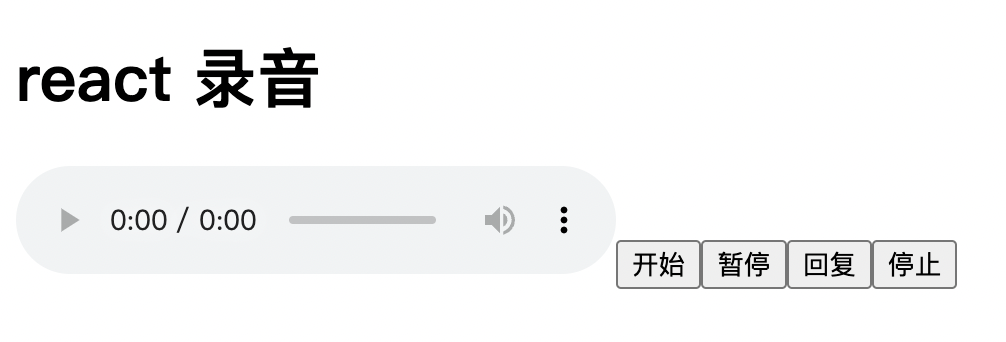

Here is the basic HTML structure:

import { useState } from 'react';

const App = () => {
  // Blob URL of the finished recording, used as the <audio> source
  const [audioUrl, setAudioUrl] = useState<string>('');

  const startRecord = async () => {}
  const stopRecord = async () => {}

  return (
    <div>
      <h1>React audio recording</h1>
      <audio src={audioUrl} controls />
      <button onClick={startRecord}>start</button>
      <button>pause</button>
      <button>resume</button>
      <button onClick={stopRecord}>stop</button>
    </div>
  );
}

There are four buttons: start, pause, resume, and stop, plus an <audio> element for playing back the result.

Next, implement start and stop:

// Inside App (useRef is imported from 'react')
const mediaStream = useRef<MediaStream>();
const mediaRecorder = useRef<MediaRecorder>();
const mediaBlobs = useRef<Blob[]>([]);

// Start
const startRecord = async () => {
  // Discard chunks left over from a previous recording
  mediaBlobs.current = [];
  // Read the input stream
  mediaStream.current = await navigator.mediaDevices.getUserMedia({ audio: true, video: false });
  // Create the MediaRecorder object
  mediaRecorder.current = new MediaRecorder(mediaStream.current);
  // Store each recorded chunk as a blob
  mediaRecorder.current.ondataavailable = (blobEvent) => {
    mediaBlobs.current.push(blobEvent.data);
  }
  // When stopping, generate the blob URL for preview
  mediaRecorder.current.onstop = () => {
    // Browsers usually record WebM, Ogg, or MP4 rather than WAV,
    // so reuse the MIME type reported by the recorder itself
    const blob = new Blob(mediaBlobs.current, { type: mediaRecorder.current?.mimeType })
    const mediaUrl = URL.createObjectURL(blob);
    setAudioUrl(mediaUrl);
  }
  mediaRecorder.current.start();
}

// Stop: not only stop the MediaRecorder, but also stop all tracks
const stopRecord = async () => {
  mediaRecorder.current?.stop()
  mediaStream.current?.getTracks().forEach((track) => track.stop());
}

As the code shows, we first obtain the input stream mediaStream from getUserMedia. Setting video: true as well would capture the video stream at the same time.

Then we pass mediaStream to the MediaRecorder and store the blob data it produces from the stream through ondataavailable.

The last step is to call URL.createObjectURL to generate the preview link. This API is very useful on the front end. For example, when uploading pictures, you can call it to preview a picture locally without first sending it to the back end.
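One caveat about the getUserMedia step: navigator.mediaDevices is only exposed in secure contexts (HTTPS or localhost), and older browsers may lack MediaRecorder. Below is a minimal feature-detection sketch. The names detectCaptureSupport, NavigatorLike, and CaptureSupport are hypothetical, and the navigator shape is deliberately reduced so the check can also run outside a browser.

```typescript
// Reduced shape of the parts of `navigator` that this check reads
// (an assumption made for illustration; a real `navigator` also fits it).
interface NavigatorLike {
  mediaDevices?: {
    getUserMedia?: unknown;
    getDisplayMedia?: unknown;
  };
}

interface CaptureSupport {
  userMedia: boolean;
  displayMedia: boolean;
  recorder: boolean;
}

// Reports which capture features look usable in the current environment.
function detectCaptureSupport(nav: NavigatorLike, hasMediaRecorder: boolean): CaptureSupport {
  return {
    userMedia: typeof nav.mediaDevices?.getUserMedia === 'function',
    displayMedia: typeof nav.mediaDevices?.getDisplayMedia === 'function',
    recorder: hasMediaRecorder,
  };
}
```

In a browser, this could be called as detectCaptureSupport(navigator, typeof MediaRecorder !== 'undefined') before enabling the start button.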

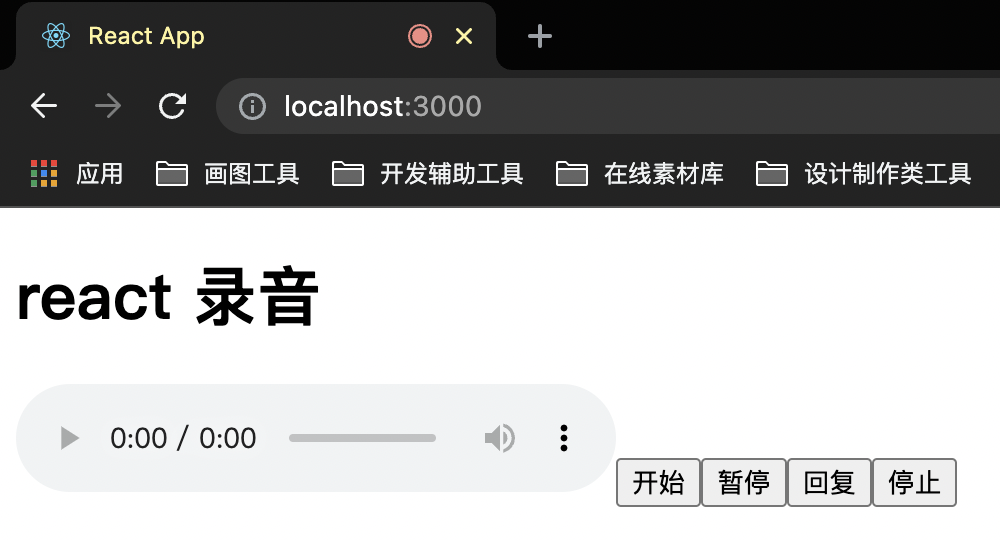

After clicking start, you can see that the current web page is recording:

Now implement the remaining pause and resume:

const pauseRecord = async () => {
  mediaRecorder.current?.pause();
}

const resumeRecord = async () => {
  mediaRecorder.current?.resume();
}
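One detail worth knowing: per the MediaRecorder specification, calling pause() or resume() while the recorder is inactive (for example, after stop) throws an InvalidStateError. Here is a sketch of guarded versions that check the recorder's state first. The names safePause, safeResume, and PausableRecorder are hypothetical.

```typescript
// Reduced shape of MediaRecorder used here; a real MediaRecorder fits it.
interface PausableRecorder {
  state: string; // 'inactive' | 'recording' | 'paused'
  pause(): void;
  resume(): void;
}

// Pauses only while recording. Returns whether pause() was actually called.
function safePause(recorder: PausableRecorder | undefined): boolean {
  if (!recorder || recorder.state !== 'recording') return false;
  recorder.pause();
  return true;
}

// Resumes only while paused. Returns whether resume() was actually called.
function safeResume(recorder: PausableRecorder | undefined): boolean {
  if (!recorder || recorder.state !== 'paused') return false;
  recorder.resume();
  return true;
}
```

With this guard, clicking pause before start, or after stop, becomes a no-op instead of an exception.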

Hooks

After implementing the basic functions, let's encapsulate them in a React Hook. First move all of this logic into one function, then return the API:

import { useRef, useState } from 'react';

const useMediaRecorder = () => {
  const [mediaUrl, setMediaUrl] = useState<string>('');

  const mediaStream = useRef<MediaStream>();
  const mediaRecorder = useRef<MediaRecorder>();
  const mediaBlobs = useRef<Blob[]>([]);

  const startRecord = async () => {
    // Start every recording with an empty chunk list
    mediaBlobs.current = [];
    mediaStream.current = await navigator.mediaDevices.getUserMedia({ audio: true, video: false });
    mediaRecorder.current = new MediaRecorder(mediaStream.current);
    mediaRecorder.current.ondataavailable = (blobEvent) => {
      mediaBlobs.current.push(blobEvent.data);
    }
    mediaRecorder.current.onstop = () => {
      // Reuse the recorder's real MIME type (browsers rarely produce WAV)
      const blob = new Blob(mediaBlobs.current, { type: mediaRecorder.current?.mimeType })
      const url = URL.createObjectURL(blob);
      setMediaUrl(url);
    }
    mediaRecorder.current.start();
  }

  const pauseRecord = async () => {
    mediaRecorder.current?.pause();
  }

  const resumeRecord = async () => {
    mediaRecorder.current?.resume()
  }

  const stopRecord = async () => {
    // onstop fires asynchronously, so the chunks are cleared in startRecord, not here
    mediaRecorder.current?.stop()
    mediaStream.current?.getTracks().forEach((track) => track.stop());
  }

  return {
    mediaUrl,
    startRecord,
    pauseRecord,
    resumeRecord,
    stopRecord,
  }
}

In App.tsx, just use the returned values:

const App = () => {
  const { mediaUrl, startRecord, resumeRecord, pauseRecord, stopRecord } = useMediaRecorder();

  return (
    <div>
      <h1>React audio recording</h1>
      <audio src={mediaUrl} controls />
      <button onClick={startRecord}>start</button>
      <button onClick={pauseRecord}>pause</button>
      <button onClick={resumeRecord}>resume</button>
      <button onClick={stopRecord}>stop</button>
    </div>
  );
}

After encapsulation, you can now add more functions to this Hook.

Clear data

When generating the blob URL, we called the URL.createObjectURL API. The generated URL looks like this:

blob:http://localhost:3000/e571f5b7-13bd-4c93-bc53-0c84049deb0a

Each call to URL.createObjectURL creates a URL -> Blob reference. The reference keeps the Blob in memory for as long as it exists, so we can provide a method to destroy it.

const useMediaRecorder = () => {
  const [mediaUrl, setMediaUrl] = useState<string>('');

  ...

  return {
    ...
    // Release the URL -> Blob reference and reset the preview URL
    clearBlobUrl: () => {
      if (mediaUrl) {
        URL.revokeObjectURL(mediaUrl);
      }
      setMediaUrl('');
    }
  }
}
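A related detail: when the user records several times, each new recording replaces mediaUrl while the previous URL stays alive until it is revoked. The sketch below shows one way to revoke the stale URL whenever a new one is created. PreviewUrlHolder is a hypothetical name, and the class assumes Blob and URL.createObjectURL are available in the environment.

```typescript
// Hypothetical holder that keeps at most one live blob URL at a time.
class PreviewUrlHolder {
  private current = '';

  // Revoke the previous URL (if any), then create a URL for the new blob.
  set(blob: Blob): string {
    this.clear();
    this.current = URL.createObjectURL(blob);
    return this.current;
  }

  // Destroy the URL -> Blob reference and reset to the empty state.
  clear(): void {
    if (this.current) URL.revokeObjectURL(this.current);
    this.current = '';
  }

  get url(): string {
    return this.current;
  }
}
```

Inside the Hook, the same idea would mean revoking the old mediaUrl right before calling setMediaUrl with the new one.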

Screen recording

The audio and video recording above are implemented with getUserMedia, while screen recording requires getDisplayMedia.

To distinguish between these cases, we can give developers three parameters (audio, video, and screen) that tell us which interface to call to obtain the corresponding input stream:

import { useCallback, useRef, useState } from 'react';

const useMediaRecorder = (params: Params) => {
  const {
    audio = true,
    video = false,
    screen = false,
    askPermissionOnMount = false,
    // Assumed to be an optional callback passed in through params
    onStop,
  } = params;

  const [mediaUrl, setMediaUrl] = useState<string>('');

  const mediaStream = useRef<MediaStream>();
  const audioStream = useRef<MediaStream>();
  const mediaRecorder = useRef<MediaRecorder>();
  const mediaBlobs = useRef<Blob[]>([]);

  const getMediaStream = useCallback(async () => {
    if (screen) {
      // Screen recording interface
      mediaStream.current = await navigator.mediaDevices.getDisplayMedia({ video: true });
      // Stop recording when the user ends screen sharing from the browser UI
      mediaStream.current?.getTracks()[0].addEventListener('ended', () => {
        stopRecord()
      })
      if (audio) {
        // Add the audio input stream
        audioStream.current = await navigator.mediaDevices.getUserMedia({ audio: true })
        audioStream.current?.getAudioTracks().forEach(audioTrack => mediaStream.current?.addTrack(audioTrack));
      }
    } else {
      // Ordinary video and audio stream
      mediaStream.current = await navigator.mediaDevices.getUserMedia({ video, audio })
    }
  }, [screen, video, audio])

  // Start recording
  const startRecord = async () => {
    // Start every recording with an empty chunk list
    mediaBlobs.current = [];
    // Get the stream
    await getMediaStream();

    mediaRecorder.current = new MediaRecorder(mediaStream.current!);
    mediaRecorder.current.ondataavailable = (blobEvent) => {
      mediaBlobs.current.push(blobEvent.data);
    }
    mediaRecorder.current.onstop = () => {
      const [chunk] = mediaBlobs.current;
      // Prefer the MIME type the recorder actually produced;
      // otherwise fall back to a default based on the recording mode
      const blobProperty: BlobPropertyBag = {
        type: chunk?.type || (video || screen ? 'video/mp4' : 'audio/wav'),
      };
      const blob = new Blob(mediaBlobs.current, blobProperty)
      const url = URL.createObjectURL(blob);
      setMediaUrl(url);
      onStop?.(url, mediaBlobs.current);
    }

    mediaRecorder.current.start();
  }

  ...
}

Since users can now record both video and audio, we should also set the matching blobProperty so that the blob URL is generated with the correct media type.
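The type a browser can actually record differs between browsers, so it can help to ask before recording rather than guess afterwards. The sketch below picks the first supported candidate. pickMimeType is a hypothetical helper, and the support check is injected as a parameter so the logic can run anywhere. In a browser that check would be MediaRecorder.isTypeSupported.

```typescript
// Returns the first candidate MIME type that passes the support check,
// or '' to signal "let the browser use its default".
function pickMimeType(
  candidates: string[],
  isSupported: (type: string) => boolean,
): string {
  return candidates.find((type) => isSupported(type)) ?? '';
}
```

A possible usage in the browser, with an example candidate list: const mimeType = pickMimeType(['video/webm', 'video/mp4'], (t) => MediaRecorder.isTypeSupported(t)), followed by new MediaRecorder(stream, mimeType ? { mimeType } : undefined).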

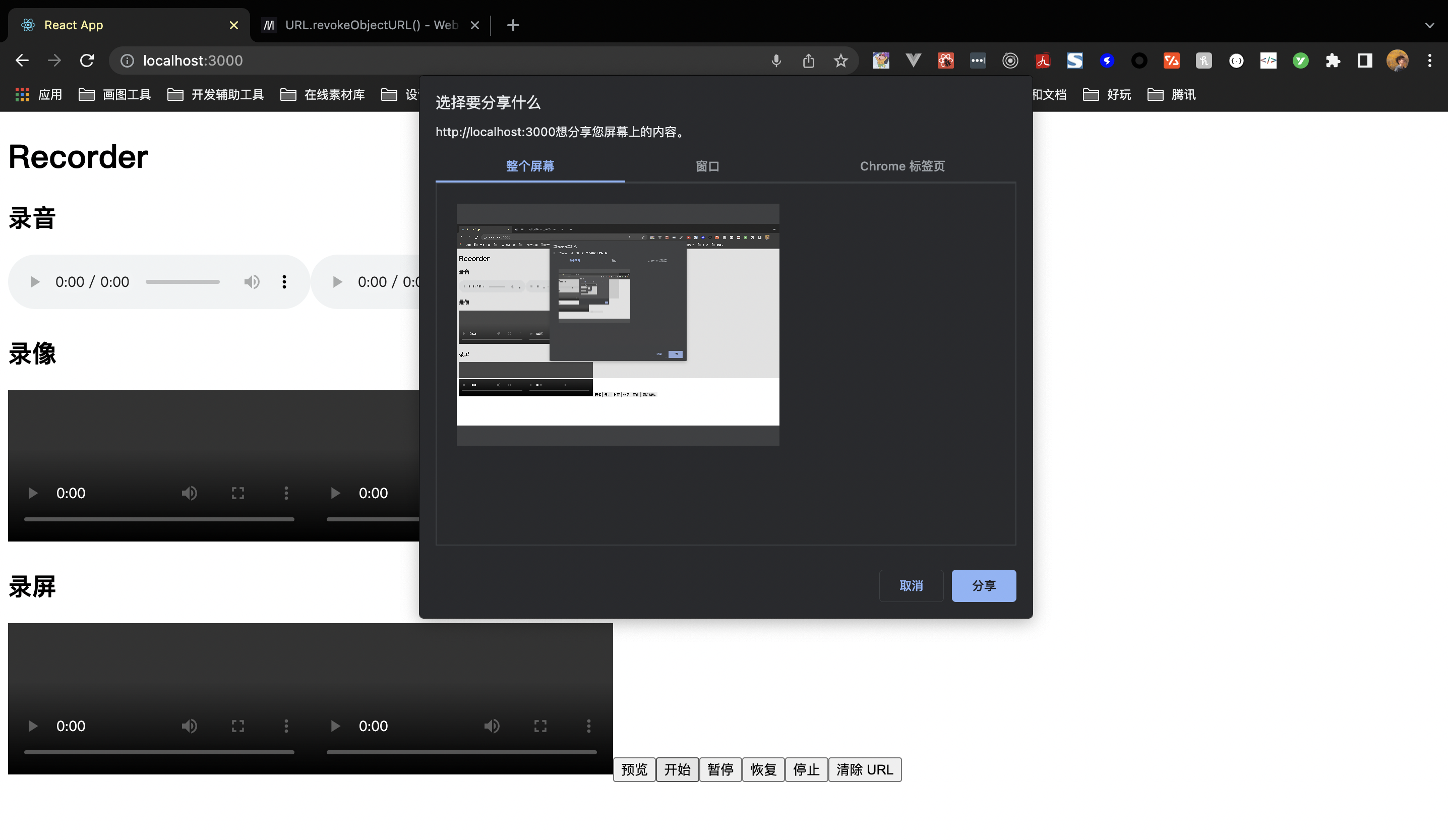

Finally, when calling hook, pass in screen: true to enable the screen recording function:
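To make the effect of each option concrete, here is a small sketch that mirrors the branching in getMediaStream as a pure function. planCapture, CaptureOptions, and CapturePlan are hypothetical names used only for illustration. The function describes which interface would be called and makes no browser calls itself.

```typescript
interface CaptureOptions {
  audio?: boolean;
  video?: boolean;
  screen?: boolean;
}

interface CapturePlan {
  api: 'getDisplayMedia' | 'getUserMedia';
  constraints: { audio?: boolean; video?: boolean };
  // Whether a separate microphone stream is merged into the screen stream
  addMicrophone: boolean;
}

// Same defaults as the Hook: audio on, video off, screen off.
function planCapture({ audio = true, video = false, screen = false }: CaptureOptions = {}): CapturePlan {
  if (screen) {
    return { api: 'getDisplayMedia', constraints: { video: true }, addMicrophone: audio };
  }
  return { api: 'getUserMedia', constraints: { audio, video }, addMicrophone: false };
}
```

So screen: true takes priority over video, and audio decides whether the microphone is added on top of the screen stream.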

Note: audio recording, video recording, and screen recording all rely on system capabilities. The web page only asks the browser for access, which assumes the browser itself has already been granted permission by the operating system. So the browser must be allowed these permissions in the system settings before it can record the screen.
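When a permission is missing, getUserMedia and getDisplayMedia reject with a DOMException whose name hints at the cause. Here is a sketch of turning those names into messages. describeCaptureError and the message texts are hypothetical, and which name a browser reports for a system-level block can vary, so treat the mapping as a starting point.

```typescript
// Maps a rejected capture request to a human-readable hint.
function describeCaptureError(error: unknown): string {
  const name = (error as { name?: string } | null)?.name;
  switch (name) {
    case 'NotAllowedError':
      return 'Permission was denied by the user, the browser, or the operating system.';
    case 'NotFoundError':
      return 'No matching microphone or camera was found.';
    case 'NotReadableError':
      return 'The device exists but could not be read (it may be in use by another application).';
    default:
      return 'Recording could not be started.';
  }
}
```

It could be used in a try/catch around the getMediaStream call to show the hint next to the start button.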

Moving the stream acquisition logic into getMediaStream also makes it easy to request permissions up front. To ask for camera, microphone, and screen recording permissions as soon as the component loads, call it in useEffect:

useEffect(() => {
  if (askPermissionOnMount) {
    // Requesting the stream triggers the permission prompt;
    // log failures (for example, a denied permission) instead of leaving the promise unhandled
    getMediaStream().catch((error) => console.error(error));
  }
}, [audio, screen, video, getMediaStream, askPermissionOnMount])
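One thing to keep in mind with this approach: a stream acquired on mount stays live, and the browser keeps showing its recording indicator, until its tracks are stopped. Below is a sketch of a cleanup helper that could be called from the effect's cleanup function. releaseStreams and StoppableStream are hypothetical names.

```typescript
// Reduced shape of MediaStream used here; a real MediaStream fits it.
interface StoppableStream {
  getTracks(): Array<{ stop(): void }>;
}

// Stops every track of every given stream and returns how many were stopped.
function releaseStreams(...streams: Array<StoppableStream | undefined>): number {
  let stopped = 0;
  for (const stream of streams) {
    for (const track of stream?.getTracks() ?? []) {
      track.stop();
      stopped += 1;
    }
  }
  return stopped;
}
```

In the Hook this might look like returning () => releaseStreams(mediaStream.current, audioStream.current) from the effect.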

Preview

Video recording only requires passing { video: true } to getUserMedia. To let users watch the result while recording, we can also return the media streams to them:

return {
  ...
  // Expose the live streams so callers can preview them
  getMediaStream: () => mediaStream.current,
  getAudioStream: () => audioStream.current
}

Once users have these MediaStream objects, they can assign them directly to srcObject for preview:

<button onClick={() => previewVideo.current!.srcObject = getMediaStream() || null}>
  Preview
</button>
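The assignment can also be wrapped in a small helper that handles a missing stream and mutes the preview element, so the microphone does not pick up the preview's own playback. This is only a sketch: attachPreview and PreviewTarget are hypothetical names, and the target type is reduced to the two properties used.

```typescript
// Reduced shape of a <video> element; an HTMLVideoElement fits it.
interface PreviewTarget<S> {
  srcObject: S | null;
  muted: boolean;
}

// Shows the stream in the target, or clears the target when there is no stream.
// Returns whether a stream was attached.
function attachPreview<S>(target: PreviewTarget<S>, stream: S | undefined): boolean {
  if (!stream) {
    target.srcObject = null;
    return false;
  }
  target.srcObject = stream;
  // Muting the element only silences playback; it does not affect the recording.
  target.muted = true;
  return true;
}
```

The <video> element still needs the autoPlay attribute or a play() call before the preview starts moving.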

Mute

Finally, let's implement mute. The principle is simple: get the audio tracks from the streams and set enabled = false on each of them.

const toggleMute = (isMute: boolean) => {
  // A disabled track stays attached to the stream but is turned off
  mediaStream.current?.getAudioTracks().forEach(track => track.enabled = !isMute);
  audioStream.current?.getAudioTracks().forEach(track => track.enabled = !isMute)
  setIsMuted(isMute);
}

You can then use it to turn the audio track off and back on:

<button onClick={() => toggleMute(!isMuted)}>{isMuted ? 'Unmute' : 'Mute'}</button>
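In the screen recording case, the microphone tracks were added to mediaStream with addTrack, so the same track objects appear in both streams. The sketch below shows a variant that collects the unique tracks first and reports how many were toggled. setMuted, AudioTrackLike, and AudioSource are hypothetical names.

```typescript
// Reduced shapes of MediaStreamTrack and MediaStream used here.
interface AudioTrackLike {
  enabled: boolean;
}

interface AudioSource {
  getAudioTracks(): AudioTrackLike[];
}

// Enables or disables every distinct audio track found in the given sources.
// Returns the number of distinct tracks that were updated.
function setMuted(muted: boolean, ...sources: Array<AudioSource | undefined>): number {
  const tracks = new Set<AudioTrackLike>();
  for (const source of sources) {
    for (const track of source?.getAudioTracks() ?? []) tracks.add(track);
  }
  tracks.forEach((track) => {
    track.enabled = !muted;
  });
  return tracks.size;
}
```

Setting the same track twice, as toggleMute does, is harmless. The deduplication mainly makes the returned count meaningful.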

Summary

That's a simple Hook for audio recording, video recording, and screen recording, built with the WebRTC-related browser APIs. To summarize:

- getUserMedia gets the streams of the microphone and camera

- getDisplayMedia gets the video and audio streams of the screen

- The essence of recording is stream -> blob list -> blob URL. MediaRecorder listens to the stream to obtain blob data

- MediaRecorder also provides recording controls such as start, stop, pause, and resume

- createObjectURL and revokeObjectURL are opposites: one creates the reference and the other destroys it

- Muting works by setting track.enabled = false to turn off the audio track

That wraps up the implementation of this small library. For more details, see the source code of react-media-recorder. It is simple and easy to understand, which makes it a good fit for anyone who is just starting to read source code!

If you liked this article, feel free to follow me or give it a like ❤️