Background

I've been working on a lightweight deployment recently, and it needs a message queue. Kafka felt rather heavy for this, so I chose a lighter option: Redis Stream. The Java implementations I found online were not very good, so after some exploration I decided to write a complete, runnable Redis message queue in Java for your reference. The code has been uploaded to Gitee; the link is at the end of the article.

I hope you will read it patiently. I believe this article can help you quickly understand how to use Redis as message-oriented middleware in a Java environment.

What you need to know before you start

First of all, you need the basics of Redis Streams. The link below is a translated version of the official documentation; read it first to understand how each command is used.

https://www.cnblogs.com/williamjie/p/11201654.html

The commands used in this article are as follows:

- `XADD mystream * hello world`: create the stream mystream by adding an entry

- `XGROUP CREATE mystream group-1 $`: create consumer group group-1

- `XGROUP CREATE mystream group-2 $`: create consumer group group-2

- `XRANGE mystream - +`: query the messages in the stream

- `XPENDING mystream group-1`: summary of the messages in the group that have not been ACKed

- `XPENDING mystream group-1 0 + 10 consumer-1`: list the un-ACKed messages held by consumer-1

The article also covers XCLAIM (transferring a pending message to another consumer) and XTRIM (trimming stream data regularly), both implemented in Java.

Environment preparation

I use Redis 5.0.2. Here is the Redis source package I installed on Linux:

Link: https://pan.baidu.com/s/1dYrf6vC8mNS-6O_D88j7Lw Extraction code: dwh8

Prepare three Spring Boot projects: one producer and two consumers, consumer1 and consumer2.

Let's get started!

First, we need to create the stream and its consumer groups; this is done manually in Redis. One more note: this article does not re-explain the concepts of streams and groups, so please get familiar with the basic operations first.

Here we create one stream, mystream, and two groups, group-1 and group-2:

```
127.0.0.1:6379> XADD mystream * hello world
"1617952839936-0"
127.0.0.1:6379> XGROUP CREATE mystream group-1 $
OK
127.0.0.1:6379> XGROUP CREATE mystream group-2 $
OK
127.0.0.1:6379>
```

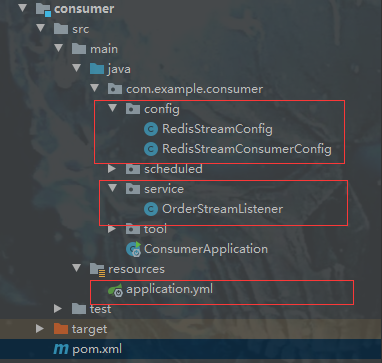

Now let's implement the producer and the consumers in Java. I use Spring Boot 2.4.3.

Producer

The code structure is as follows

The pom needs the following dependencies (commons-pool2 is required for the Lettuce connection pool configured below; the project also uses spring-boot-starter-web for the controller and Lombok for @Data):

```xml
<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-data-redis</artifactId>
</dependency>
<dependency>
    <groupId>org.apache.commons</groupId>
    <artifactId>commons-pool2</artifactId>
</dependency>
```

The configuration file application.yml connects to Redis and sets the stream name:

```yaml
server:
  port: 8080
  servlet:
    context-path: /
spring:
  redis:
    database: 0
    host: 192.168.44.129
    port: 6379
    password:
    timeout: 0
    lettuce:
      pool:
        max-active: 8
        max-wait: -1
        max-idle: 8
        min-idle: 0
redisstream:
  stream: mystream
```

The RedisStreamConfig class only reads the stream name from the configuration:

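```java
import lombok.Data;
import org.springframework.boot.context.properties.ConfigurationProperties;
import org.springframework.stereotype.Component;

@Data
@Component
@ConfigurationProperties(prefix = "redisstream")
public class RedisStreamConfig {
    private String stream;
}
```

The PublishService class does a simple send: calling its test method writes the message to the configured stream.

```java
import java.util.Collections;

import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.data.redis.connection.stream.StreamRecords;
import org.springframework.data.redis.connection.stream.StringRecord;
import org.springframework.data.redis.core.StringRedisTemplate;
import org.springframework.stereotype.Service;

@Service
public class PublishService {

    @Autowired
    private StringRedisTemplate stringRedisTemplate;

    @Autowired
    private RedisStreamConfig redisStreamConfig;

    public void test(String msg) {
        // Create a message record {name: msg} and target the configured stream
        StringRecord stringRecord = StreamRecords.string(Collections.singletonMap("name", msg))
                .withStreamKey(redisStreamConfig.getStream());
        // Add the message to the queue (XADD); Redis generates the message ID
        this.stringRedisTemplate.opsForStream().add(stringRecord);
    }
}
```

The RedisStreamController class makes a simple call to it:

```java
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RequestParam;
import org.springframework.web.bind.annotation.RestController;

@RestController
public class RedisStreamController {

    @Autowired
    private PublishService publishService;

    // e.g. GET localhost:8080/produceMsg?msg=nihao
    @GetMapping("produceMsg")
    public void produceMsg(@RequestParam("msg") String msg) {
        publishService.test(msg);
    }
}
```

That completes a simple Redis producer. Let's test it.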

Open a browser and go to: localhost:8080/produceMsg?msg=nihao

After it runs, the XRANGE mystream - + command shows that "nihao" has been stored in the stream:

```
127.0.0.1:6379> XRANGE mystream - +
1) 1) "1617952839936-0"
   2) 1) "hello"
      2) "world"
2) 1) "1617955133254-0"
   2) 1) "name"
      2) "nihao"
127.0.0.1:6379>
```
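Each entry ID in this output, such as 1617955133254-0, is generated by Redis when XADD is called with `*`: a millisecond Unix timestamp, a dash, and a sequence number for entries added within the same millisecond. The class below is a hypothetical helper written only to illustrate that format (in the Spring code you would keep using Spring Data Redis's RecordId). It parses an ID and orders IDs by time and then by sequence, which is the order XRANGE returns them in.

```java
import java.time.Instant;
import java.util.Objects;

/**
 * Hypothetical helper (not part of Spring Data Redis) that models a Redis stream
 * entry ID of the form "<millisecondsTime>-<sequenceNumber>".
 */
public final class StreamId implements Comparable<StreamId> {

    private final long millis;
    private final long sequence;

    public StreamId(long millis, long sequence) {
        this.millis = millis;
        this.sequence = sequence;
    }

    /** Parses an ID such as "1617955133254-0". */
    public static StreamId parse(String raw) {
        int dash = raw.indexOf('-');
        if (dash <= 0 || dash == raw.length() - 1) {
            throw new IllegalArgumentException("Not a stream ID: " + raw);
        }
        return new StreamId(Long.parseLong(raw.substring(0, dash)),
                Long.parseLong(raw.substring(dash + 1)));
    }

    public long millis() {
        return millis;
    }

    public long sequence() {
        return sequence;
    }

    /** For IDs generated with "*", the millisecond part is (roughly) the server time of the XADD. */
    public Instant timestamp() {
        return Instant.ofEpochMilli(millis);
    }

    /** IDs are ordered by time first, then by sequence number within the same millisecond. */
    @Override
    public int compareTo(StreamId other) {
        int byTime = Long.compare(millis, other.millis);
        return byTime != 0 ? byTime : Long.compare(sequence, other.sequence);
    }

    @Override
    public boolean equals(Object o) {
        if (!(o instanceof StreamId)) {
            return false;
        }
        StreamId other = (StreamId) o;
        return millis == other.millis && sequence == other.sequence;
    }

    @Override
    public int hashCode() {
        return Objects.hash(millis, sequence);
    }

    @Override
    public String toString() {
        return millis + "-" + sequence;
    }

    public static void main(String[] args) {
        StreamId first = parse("1617952839936-0");  // the "hello world" entry
        StreamId second = parse("1617955133254-0"); // the "nihao" entry
        System.out.println(second.timestamp());
        System.out.println(first.compareTo(second) < 0);
    }
}
```

Running main prints the instant at which the nihao entry was added and confirms that it sorts after the hello world entry.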

Consumer

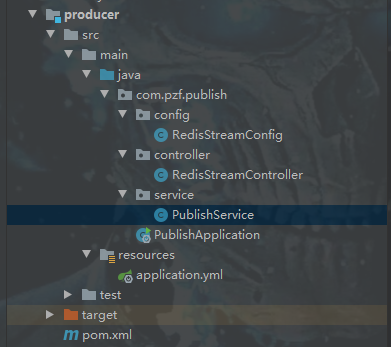

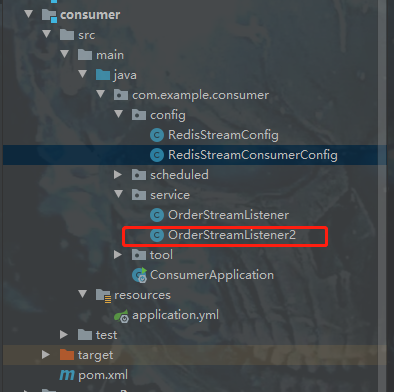

The code structure is shown in the screenshots; just pay attention to the files in the red boxes. The pom file is the same as the producer's.

The application.yml file is as follows. It makes this project consume from group-1 under the consumer name consumer-1:

```yaml
server:
  port: 8081
  servlet:
    context-path: /
spring:
  redis:
    database: 0
    host: 192.168.44.129
    port: 6379
    password:
    timeout: 0
    lettuce:
      pool:
        max-active: 8
        max-wait: -1
        max-idle: 8
        min-idle: 0
redisstream:
  stream: mystream
  group: group-1
  consumer: consumer-1
```

The RedisStreamConfig class simply reads the corresponding configuration:

```java
import lombok.Data;
import org.springframework.boot.context.properties.ConfigurationProperties;
import org.springframework.stereotype.Component;

@Data
@Component
@ConfigurationProperties(prefix = "redisstream")
public class RedisStreamConfig {
    private String stream;
    private String group;
    private String consumer;
}
```

The RedisStreamConsumerConfig class below is the key part, and the comments explain each step. It binds the listener class to the stream and configures how messages are pulled.

Here we turn off automatic ACK; messages are acknowledged manually in the listener class.

```java
import java.time.Duration;

import com.fasterxml.jackson.annotation.JsonAutoDetect;
import com.fasterxml.jackson.annotation.PropertyAccessor;
import com.fasterxml.jackson.databind.ObjectMapper;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.data.redis.connection.RedisConnectionFactory;
import org.springframework.data.redis.connection.stream.Consumer;
import org.springframework.data.redis.connection.stream.ObjectRecord;
import org.springframework.data.redis.connection.stream.ReadOffset;
import org.springframework.data.redis.connection.stream.StreamOffset;
import org.springframework.data.redis.core.RedisTemplate;
import org.springframework.data.redis.serializer.Jackson2JsonRedisSerializer;
import org.springframework.data.redis.serializer.StringRedisSerializer;
import org.springframework.data.redis.stream.StreamListener;
import org.springframework.data.redis.stream.StreamMessageListenerContainer;
import org.springframework.data.redis.stream.StreamMessageListenerContainer.StreamMessageListenerContainerOptions;
import org.springframework.data.redis.stream.StreamMessageListenerContainer.StreamReadRequest;
import org.springframework.scheduling.concurrent.ThreadPoolTaskExecutor;

@Configuration
public class RedisStreamConsumerConfig {

    @Autowired
    ThreadPoolTaskExecutor threadPoolTaskExecutor;

    @Autowired
    RedisStreamConfig redisStreamConfig;

    /**
     * Binds the OrderStreamListener to this consumer so that it receives messages.
     */
    @Bean
    public StreamMessageListenerContainer<String, ObjectRecord<String, String>> consumerListener1(
            RedisConnectionFactory connectionFactory,
            OrderStreamListener streamListener) {
        StreamMessageListenerContainer<String, ObjectRecord<String, String>> container =
                streamContainer(redisStreamConfig.getStream(), connectionFactory, streamListener);
        container.start();
        return container;
    }

    /**
     * @param mystream          the stream to receive data from
     * @param connectionFactory Redis connection factory
     * @param streamListener    the listener bound to the stream
     */
    private StreamMessageListenerContainer<String, ObjectRecord<String, String>> streamContainer(
            String mystream, RedisConnectionFactory connectionFactory,
            StreamListener<String, ObjectRecord<String, String>> streamListener) {
        StreamMessageListenerContainerOptions<String, ObjectRecord<String, String>> options =
                StreamMessageListenerContainerOptions
                        .builder()
                        .pollTimeout(Duration.ofSeconds(5)) // how long one blocking read waits for messages
                        .batchSize(10)                      // maximum number of messages fetched per read
                        .targetType(String.class)           // type of the message value handed to the listener
                        .executor(threadPoolTaskExecutor)
                        .build();

        StreamMessageListenerContainer<String, ObjectRecord<String, String>> container =
                StreamMessageListenerContainer.create(connectionFactory, options);

        // ReadOffset.lastConsumed() is ">": only messages never delivered to any consumer of this group
        StreamOffset<String> offset = StreamOffset.create(mystream, ReadOffset.lastConsumed());

        // The consumer is identified by (group name, consumer name)
        Consumer consumer = Consumer.from(redisStreamConfig.getGroup(), redisStreamConfig.getConsumer());

        StreamReadRequest<String> streamReadRequest = StreamReadRequest.builder(offset)
                .errorHandler(error -> error.printStackTrace()) // log read errors instead of silently dropping them
                .cancelOnError(e -> false)                      // keep polling after an error
                .consumer(consumer)
                .autoAcknowledge(false)                         // turn off automatic ACK
                .build();

        // Register the listener for this read request
        container.register(streamReadRequest, streamListener);
        return container;
    }

    // General-purpose RedisTemplate with JSON values (the stream code uses StringRedisTemplate instead)
    @Bean
    public RedisTemplate<String, Object> redisTemplate(RedisConnectionFactory factory) {
        RedisTemplate<String, Object> template = new RedisTemplate<>();
        template.setConnectionFactory(factory);
        Jackson2JsonRedisSerializer<Object> jackson2JsonRedisSerializer = new Jackson2JsonRedisSerializer<>(Object.class);
        ObjectMapper om = new ObjectMapper();
        om.setVisibility(PropertyAccessor.ALL, JsonAutoDetect.Visibility.ANY);
        om.enableDefaultTyping(ObjectMapper.DefaultTyping.NON_FINAL); // deprecated in newer Jackson versions
        jackson2JsonRedisSerializer.setObjectMapper(om);
        StringRedisSerializer stringRedisSerializer = new StringRedisSerializer();
        // Keys and hash keys are serialized as strings
        template.setKeySerializer(stringRedisSerializer);
        template.setHashKeySerializer(stringRedisSerializer);
        // Values and hash values are serialized as JSON with Jackson
        template.setValueSerializer(jackson2JsonRedisSerializer);
        template.setHashValueSerializer(jackson2JsonRedisSerializer);
        template.afterPropertiesSet();
        return template;
    }
}
```

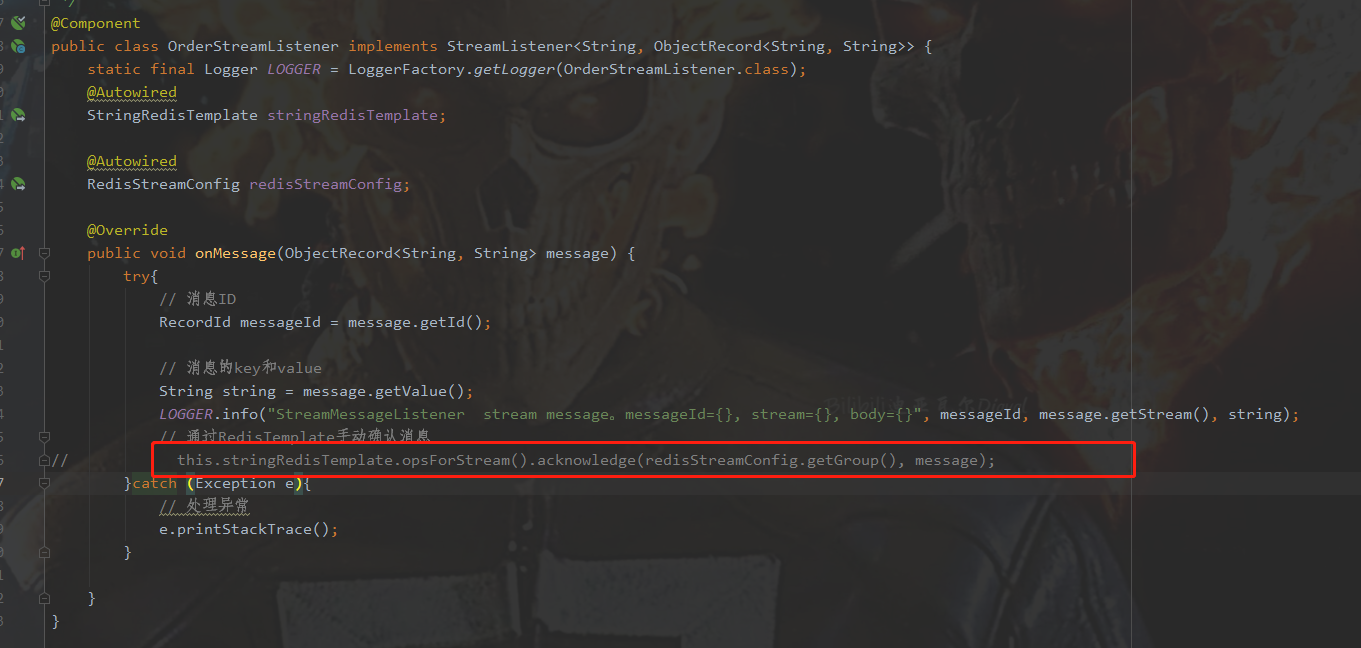

The OrderStreamListener class is the concrete listener: it receives each message and runs the business logic on it.

If processing succeeds, we ACK the message manually.

If an exception occurs in a single-node deployment, business exceptions can be recorded directly to a DB or file and then ACKed manually. Exceptions such as network interruptions or timeouts can be recorded and the message consumed again later.

In a distributed deployment, several consumers join the same consumer group. If one of them fails with a business exception, we ACK directly. If it is a non-business exception (network interruption, timeout and so on), we transfer the message to another consumer in the group (discussed in detail later).
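The policy above can be condensed into a small decision function. This is a minimal sketch under stated assumptions: the `BusinessException` type and the `Outcome` values are hypothetical names for this illustration only. In the real listener you would call `acknowledge(...)` only for `ACK` and `ACK_AND_RECORD`, leaving the message in the pending list otherwise.

```java
import java.util.function.Consumer;

/**
 * Hypothetical sketch of the ACK policy described above (not Spring Data Redis API).
 */
public final class AckPolicy {

    /** What the listener should do with a message once the handler has run. */
    public enum Outcome {
        ACK,            // processed successfully: XACK
        ACK_AND_RECORD, // business error: retrying will not help, so record it (DB/file) and XACK
        LEAVE_PENDING   // network/timeout error: no XACK, the message stays pending for a retry or transfer
    }

    /** Hypothetical marker for failures caused by the message content itself. */
    public static class BusinessException extends RuntimeException {
        public BusinessException(String message) {
            super(message);
        }
    }

    /** Runs the business handler on the message body and maps the result to an ACK decision. */
    public static Outcome process(String body, Consumer<String> handler) {
        try {
            handler.accept(body);
            return Outcome.ACK;
        } catch (BusinessException e) {
            return Outcome.ACK_AND_RECORD;
        } catch (RuntimeException e) {
            return Outcome.LEAVE_PENDING;
        }
    }

    public static void main(String[] args) {
        System.out.println(process("nihao", body -> { }));
        System.out.println(process("bad", body -> {
            throw new BusinessException("cannot parse field");
        }));
        System.out.println(process("nihao", body -> {
            throw new IllegalStateException("downstream timeout");
        }));
    }
}
```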

@Component

public class OrderStreamListener implements StreamListener<String, ObjectRecord<String, String>> {

static final Logger LOGGER = LoggerFactory.getLogger(OrderStreamListener.class);

@Autowired

StringRedisTemplate stringRedisTemplate;

@Autowired

RedisStreamConfig redisStreamConfig;

@Override

public void onMessage(ObjectRecord<String, String> message) {

try{

// Message ID

RecordId messageId = message.getId();

// key and value of the message

String string = message.getValue();

LOGGER.info("StreamMessageListener stream message. messageId={}, stream={}, body={}", messageId, message.getStream(), string);

// Manually confirm the message through RedisTemplate

this.stringRedisTemplate.opsForStream().acknowledge(redisStreamConfig.getGroup(), message);

}catch (Exception e){

// Handling exceptions

e.printStackTrace();

}

}

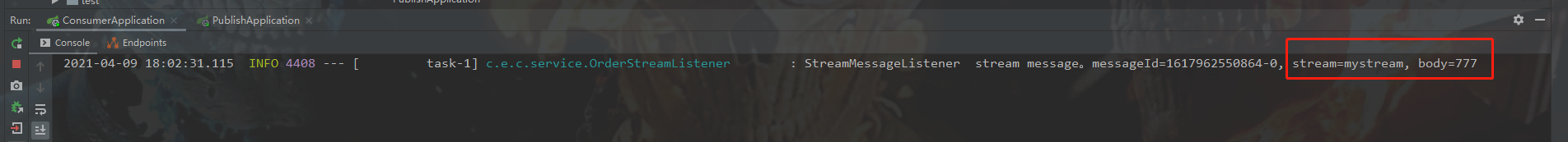

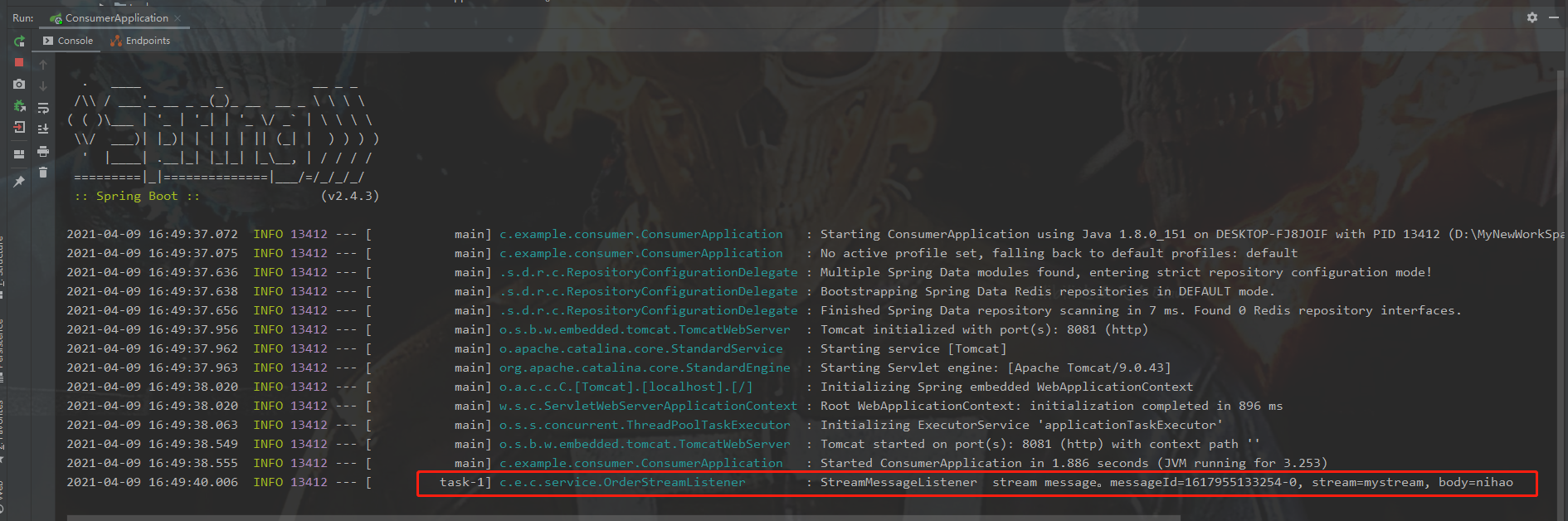

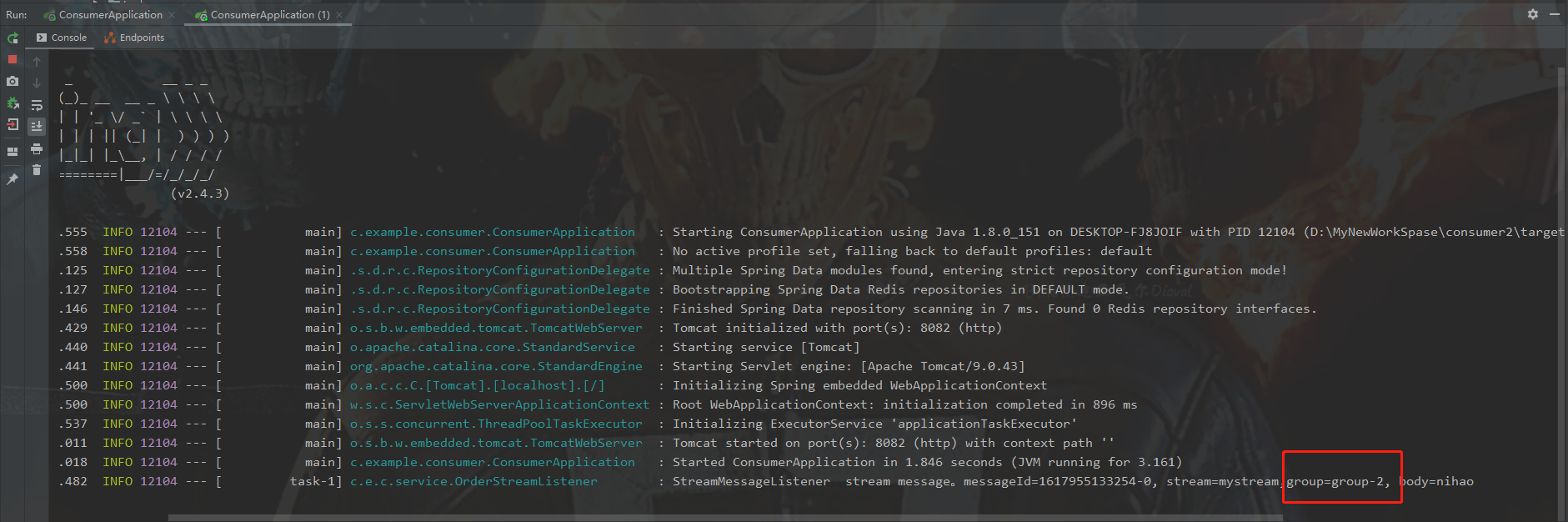

}So far, consumer 1 has been completed. We can run first to see the effect. Just now, we have thrown a piece of name: nihao data into mystream. We start consumers to see what they can consume

After starting, consumer 1 has successfully consumed the data.

Remember that we created two groups (group-1 and group-2) for the stream, and consumer 1 belongs to group-1. Now let's create consumer 2 and bind it to group-2.

We simply copy the consumer project above and change two things: application.yml (a different port and different group settings) and the OrderStreamListener class (one extra log field to tell it apart from consumer 1):

```yaml
server:
  port: 8082
  servlet:
    context-path: /
spring:
  redis:
    database: 0
    host: 192.168.44.129
    port: 6379
    password:
    timeout: 0
    lettuce:
      pool:
        max-active: 8
        max-wait: -1
        max-idle: 8
        min-idle: 0
redisstream:
  stream: mystream
  group: group-2
  consumer: consumer-2
```

@Component

public class OrderStreamListener implements StreamListener<String, ObjectRecord<String, String>> {

static final Logger LOGGER = LoggerFactory.getLogger(OrderStreamListener.class);

@Autowired

StringRedisTemplate stringRedisTemplate;

@Autowired

RedisStreamConfig redisStreamConfig;

@Override

public void onMessage(ObjectRecord<String, String> message) {

try{

// Message ID

RecordId messageId = message.getId();

// key and value of the message

String string = message.getValue();

LOGGER.info("StreamMessageListener stream message. messageId={}, stream={},group={}, body={}", messageId, message.getStream(),redisStreamConfig.getGroup(), string);

// Manually confirm the message through RedisTemplate

this.stringRedisTemplate.opsForStream().acknowledge(redisStreamConfig.getGroup(), message);

}catch (Exception e){

// Handling exceptions

e.printStackTrace();

}

}

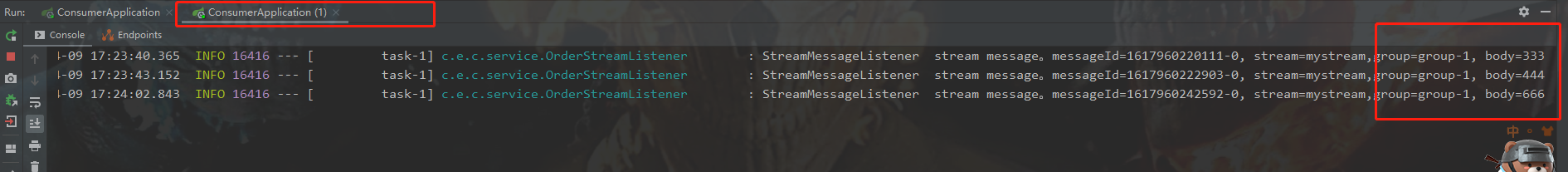

}At this point, we start consumer 2. Let's see if nihao will be consumed

We can see that it is also consumed. Do you know a little about Stream.

Conclusion: if a message is sent to a Stream stream, it exists in each group. This is like the concept of consumption group in kafka. When a message is sent, there is one in each consumption group.
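The fan-out behaviour can be illustrated with a toy in-memory model. It is not Redis code and only assumes what the experiment above showed: each group tracks its own last-delivered position, and a group created with `$` starts after the entries that already exist. That is why both groups receive nihao but neither receives the earlier hello world entry.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/**
 * Toy in-memory model (not Redis code) of stream fan-out: each consumer group keeps its own
 * last-delivered position, so every group independently receives every new message.
 */
public final class GroupFanOut {

    private final List<String> stream = new ArrayList<>();
    private final Map<String, Integer> lastDelivered = new HashMap<>();

    /** Like XADD: append a message to the end of the stream. */
    public void add(String message) {
        stream.add(message);
    }

    /** Like XGROUP CREATE key group $: the group starts after the current last entry. */
    public void createGroup(String group) {
        lastDelivered.put(group, stream.size());
    }

    /** Like XREADGROUP ... STREAMS key >: messages this group has not seen yet. */
    public List<String> readNew(String group) {
        Integer from = lastDelivered.get(group);
        if (from == null) {
            throw new IllegalArgumentException("NOGROUP " + group);
        }
        List<String> batch = new ArrayList<>(stream.subList(from, stream.size()));
        lastDelivered.put(group, stream.size());
        return batch;
    }

    public static void main(String[] args) {
        GroupFanOut mystream = new GroupFanOut();
        mystream.add("hello=world"); // added before the groups exist
        mystream.createGroup("group-1");
        mystream.createGroup("group-2");
        mystream.add("name=nihao");
        System.out.println(mystream.readNew("group-1")); // what consumer-1 in group-1 sees
        System.out.println(mystream.readNew("group-2")); // what consumer-2 in group-2 sees
    }
}
```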

At this point we have a simple message queue, but it is still far from production-ready. Several problems remain:

- Can multiple nodes share the consumption of one stream, the way several consumers in one Kafka consumer group split the messages between them?

- Where can a message be found after a consumer fails to process it?

- How can one consumer bind to multiple streams?

- How should dead letters be detected and handled?

- Stream data stays in memory indefinitely. What happens when memory runs short?

These are all problems I ran into during recent development, and they must be considered whenever a message queue is used. Let's deal with them one by one.

Question 1: multiple nodes consuming the same stream

This requires a small change to the code above: in the consumer2 project, change the group to group-1:

```yaml
server:
  port: 8082
  servlet:
    context-path: /
spring:
  redis:
    database: 0
    host: 192.168.44.129
    port: 6379
    password:
    timeout: 0
    lettuce:
      pool:
        max-active: 8
        max-wait: -1
        max-idle: 8
        min-idle: 0
redisstream:
  stream: mystream
  group: group-1
  consumer: consumer-2
```

Now we have one stream (mystream) → one group (group-1) → two consumers (consumer-1, consumer-2).

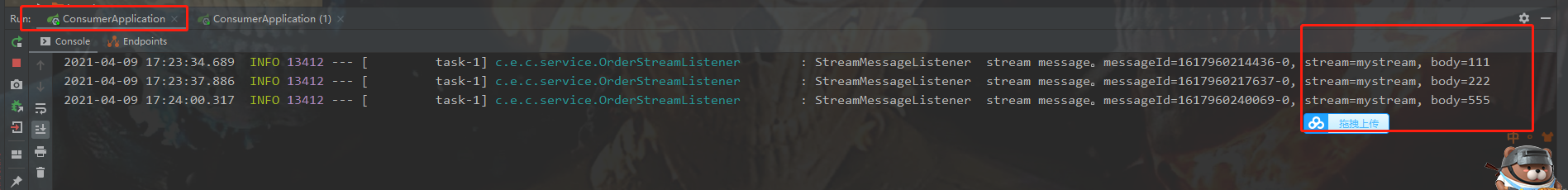

Start consumer2, then send messages through the producer and check what happens.

We call the send interface several times in a row:

localhost:8080/produceMsg?msg=111

localhost:8080/produceMsg?msg=222

localhost:8080/produceMsg?msg=333

localhost:8080/produceMsg?msg=444

localhost:8080/produceMsg?msg=555

localhost:8080/produceMsg?msg=666

Checking the consumption: consumer 1 consumed 111, 222 and 555, while consumer 2 consumed 333, 444 and 666. So in a distributed deployment, multiple nodes in the same group share one stream: each message goes to only one of them, and the load is spread across the nodes automatically.
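Within one group the picture differs from the cross-group fan-out: the group has a single last-delivered position shared by all of its consumers, so each new message goes to exactly one of them. The toy model below (not Redis code; the batch sizes are arbitrary) shows that the consumers' messages never overlap and together cover the whole stream. Which consumer gets a given message depends on whose read reaches Redis first, so you should not rely on a particular distribution pattern.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/**
 * Toy in-memory model (not Redis code) of consumers sharing one group: the group has a single
 * last-delivered position, so each new message is handed to exactly one consumer.
 */
public final class GroupSharing {

    private final List<String> stream = new ArrayList<>();
    private int lastDelivered = 0; // one position shared by every consumer in the group
    private final Map<String, List<String>> received = new LinkedHashMap<>();

    public void add(String message) {
        stream.add(message);
    }

    /** Like XREADGROUP GROUP g consumer COUNT count STREAMS key >. */
    public List<String> read(String consumer, int count) {
        int to = Math.min(stream.size(), lastDelivered + count);
        List<String> batch = new ArrayList<>(stream.subList(lastDelivered, to));
        lastDelivered = to;
        received.computeIfAbsent(consumer, c -> new ArrayList<>()).addAll(batch);
        return batch;
    }

    public List<String> receivedBy(String consumer) {
        return received.getOrDefault(consumer, Collections.emptyList());
    }

    public static void main(String[] args) {
        GroupSharing group1 = new GroupSharing();
        for (String m : new String[] {"111", "222", "333", "444", "555", "666"}) {
            group1.add(m);
        }
        // Whichever consumer polls first gets the next undelivered messages
        group1.read("consumer-1", 2);
        group1.read("consumer-2", 3);
        group1.read("consumer-1", 10);
        System.out.println("consumer-1: " + group1.receivedBy("consumer-1"));
        System.out.println("consumer-2: " + group1.receivedBy("consumer-2"));
    }
}
```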

Question 2: where can a message be found after a consumer fails to process it?

Now stop the consumer2 project and keep the producer and consumer projects running. In the consumer, comment out the ACK call, and let's see where the message goes.

Then send a message: localhost:8080/produceMsg?msg=777

Consumer 1 received this message but did not ACK it.

If you have read about the basic Redis Stream operations, you will know that the message is now in the pending list of group-1.

The command XPENDING mystream group-1 shows how many messages in the group have not been acknowledged, and XPENDING mystream group-1 0 + 10 consumer-1 lists the un-ACKed messages held by a specific consumer of the group.

The output shows that in group-1, consumer-1 holds one message that has not been ACKed:

```
127.0.0.1:6379> XPENDING mystream group-1
1) (integer) 1
2) "1617962550864-0"
3) "1617962550864-0"
4) 1) 1) "consumer-1"
      2) "1"
127.0.0.1:6379> XPENDING mystream group-1 0 + 10 consumer-1
1) 1) "1617962550864-0"
   2) "consumer-1"
   3) (integer) 74328
   4) (integer) 1
127.0.0.1:6379>
```
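Reading the two replies: the summary form returns the number of pending messages, the smallest and largest pending IDs, and a pending count per consumer; the extended form returns, for each message, its ID, its owner, the milliseconds since it was last delivered (74328 here) and how many times it has been delivered. The toy model below (not Redis code; the class is hypothetical) mirrors the summary: a message delivered without an ACK stays in the pending list under its consumer until XACK removes it. IDs are compared numerically because plain string comparison would put 10-0 before 9-0.

```java
import java.util.Comparator;
import java.util.Map;
import java.util.TreeMap;

/**
 * Toy in-memory model (not Redis code) of a consumer group's pending entries list (PEL),
 * i.e. what XPENDING mystream group-1 summarizes.
 */
public final class PendingList {

    // Orders IDs like "1617962550864-0" numerically (time, then sequence), not as plain strings
    private static final Comparator<String> BY_ID = Comparator
            .comparingLong((String id) -> Long.parseLong(id.substring(0, id.indexOf('-'))))
            .thenComparingLong(id -> Long.parseLong(id.substring(id.indexOf('-') + 1)));

    // message ID -> consumer that currently owns it
    private final TreeMap<String, String> owners = new TreeMap<>(BY_ID);

    /** Delivering via XREADGROUP (without NOACK) adds the message to the PEL under that consumer. */
    public void deliver(String id, String consumer) {
        owners.put(id, consumer);
    }

    /** XACK removes the message from the PEL; returns false if it was not pending. */
    public boolean ack(String id) {
        return owners.remove(id) != null;
    }

    public int count() {
        return owners.size();
    }

    public String minId() {
        return owners.isEmpty() ? null : owners.firstKey();
    }

    public String maxId() {
        return owners.isEmpty() ? null : owners.lastKey();
    }

    /** Pending count per consumer, like the fourth part of the XPENDING summary. */
    public Map<String, Integer> perConsumer() {
        Map<String, Integer> counts = new TreeMap<>();
        for (String consumer : owners.values()) {
            counts.merge(consumer, 1, Integer::sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        PendingList group1 = new PendingList();
        group1.deliver("1617962550864-0", "consumer-1"); // "777" was read, but the ACK is commented out
        System.out.println(group1.count() + " " + group1.minId() + " " + group1.maxId() + " " + group1.perConsumer());
        group1.ack("1617962550864-0");
        System.out.println(group1.count());
    }
}
```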

Question 3: how can one consumer bind to multiple streams?

This is how I currently do it.

First, create another stream, mystream2, with one group, group-1:

```
127.0.0.1:6379> XADD mystream2 * hello world
"1617970973509-0"
127.0.0.1:6379> XGROUP CREATE mystream2 group-1 $
OK
127.0.0.1:6379>
```

Configure producer

Modify the corresponding files in the project producer as follows

In application.yml, add stream2:

```yaml
redisstream:
  stream: mystream
  stream2: mystream2
```

In the RedisStreamConfig class, add stream2:

```java
import lombok.Data;
import org.springframework.boot.context.properties.ConfigurationProperties;
import org.springframework.stereotype.Component;

@Data
@Component
@ConfigurationProperties(prefix = "redisstream")
public class RedisStreamConfig {
    private String stream;
    private String stream2;
}
```

In the PublishService class, after sending to mystream, call a private method that also sends the message to mystream2:

```java
import java.util.Collections;

import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.data.redis.connection.stream.StreamRecords;
import org.springframework.data.redis.connection.stream.StringRecord;
import org.springframework.data.redis.core.StringRedisTemplate;
import org.springframework.stereotype.Service;

@Service
public class PublishService {

    @Autowired
    private StringRedisTemplate stringRedisTemplate;

    @Autowired
    private RedisStreamConfig redisStreamConfig;

    public void test(String msg) {
        // Create a message record and target mystream
        StringRecord stringRecord = StreamRecords.string(Collections.singletonMap("name", msg))
                .withStreamKey(redisStreamConfig.getStream());
        // Add the message to the queue
        this.stringRedisTemplate.opsForStream().add(stringRecord);
        // Also send it to mystream2
        sendToStream2(msg);
    }

    private void sendToStream2(String msg) {
        // Same message, targeting mystream2
        StringRecord stringRecord = StreamRecords.string(Collections.singletonMap("name", msg))
                .withStreamKey(redisStreamConfig.getStream2());
        this.stringRedisTemplate.opsForStream().add(stringRecord);
    }
}
```

Configure consumer

Modify the corresponding configuration in the consumer project.

In application.yml, add stream2:

```yaml
redisstream:
  stream: mystream
  stream2: mystream2
  group: group-1
  consumer: consumer-1
```

In the RedisStreamConfig class, add stream2:

```java
import lombok.Data;
import org.springframework.boot.context.properties.ConfigurationProperties;
import org.springframework.stereotype.Component;

@Data
@Component
@ConfigurationProperties(prefix = "redisstream")
public class RedisStreamConfig {
    private String stream;
    private String stream2;
    private String group;
    private String consumer;
}
```

The new listener class OrderStreamListener2 is the same as OrderStreamListener and needs no changes; it receives the messages from mystream2. Remember to un-comment all the ACK calls again (we commented one out in Question 2). It looks like this:

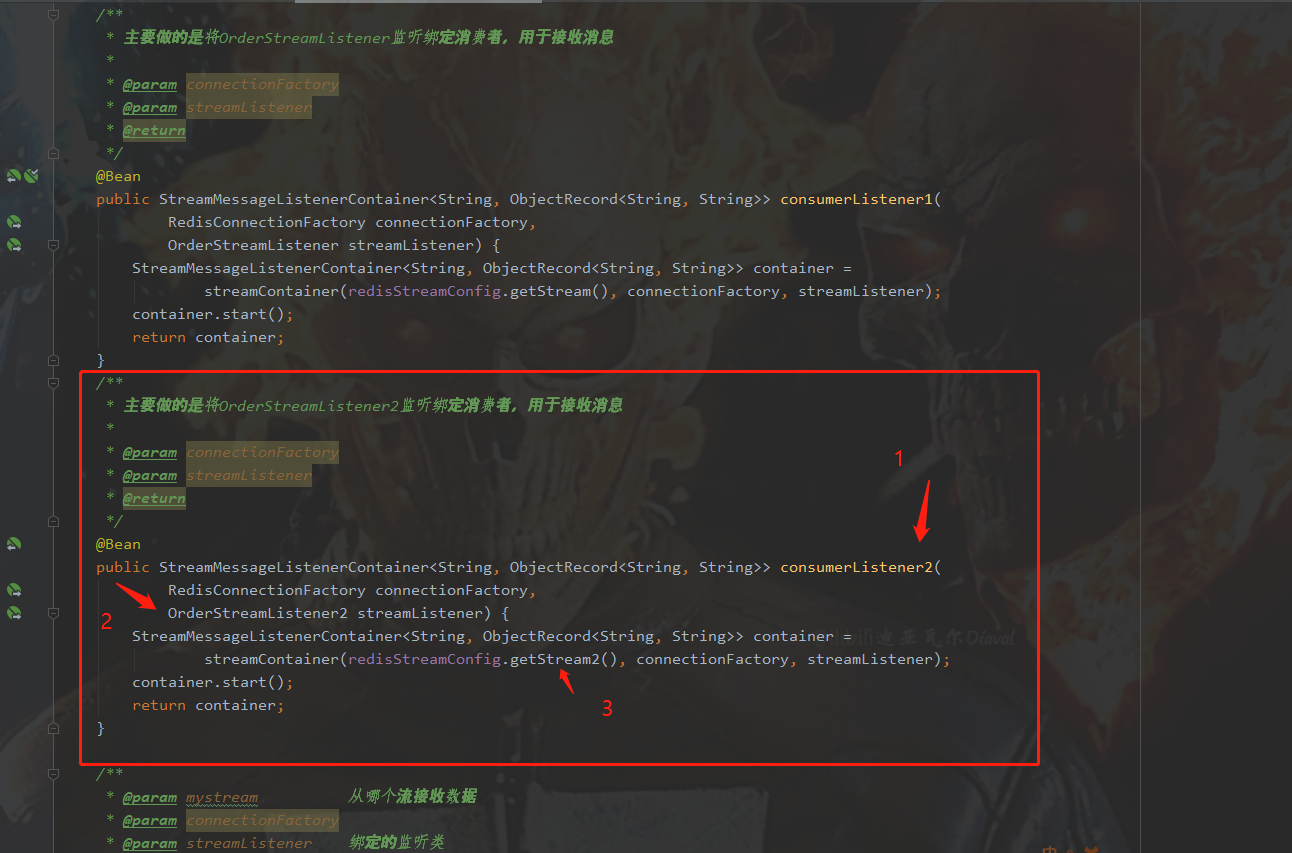

Modify RedisStreamConsumerConfig to bind OrderStreamListener2 in the same way as the first listener; note the three places marked in red:

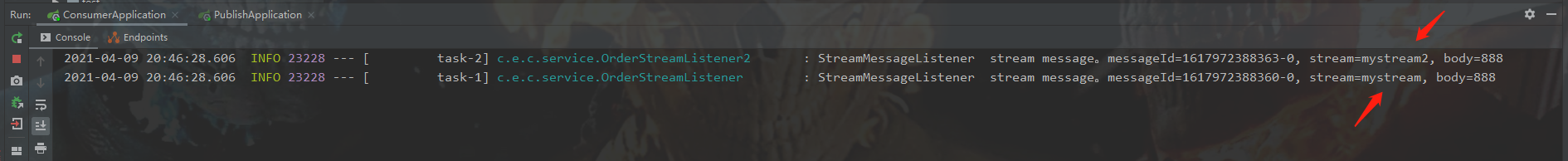

That completes binding one consumer to multiple streams. Let's test it.

Call localhost:8080/produceMsg?msg=888

We expect a single call to make the consumer print two messages, one from mystream and one from mystream2. The results are as follows:

As expected, one consumer is now bound to multiple streams.
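However the binding is wired (another call to streamContainer(...) or a second read request registered on the same container), the effect is that records from each stream are routed to the listener bound to that stream. The toy dispatcher below models only that routing idea. It is not Spring Data Redis's API (the real registration is `container.register(streamReadRequest, streamListener)`, as in RedisStreamConsumerConfig), and the class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

/**
 * Toy model (not the Spring API): one container polls several streams and hands each record
 * to the listener registered for the stream it came from.
 */
public final class MultiStreamDispatcher {

    private final Map<String, Consumer<String>> listeners = new LinkedHashMap<>();

    /** Comparable in spirit to container.register(readRequestForStream, listener). */
    public void register(String streamKey, Consumer<String> listener) {
        listeners.put(streamKey, listener);
    }

    /** Delivers a record read from streamKey; returns false if no listener is bound to it. */
    public boolean dispatch(String streamKey, String body) {
        Consumer<String> listener = listeners.get(streamKey);
        if (listener == null) {
            return false;
        }
        listener.accept(body);
        return true;
    }

    public static void main(String[] args) {
        List<String> log = new ArrayList<>();
        MultiStreamDispatcher container = new MultiStreamDispatcher();
        container.register("mystream", body -> log.add("OrderStreamListener got " + body));
        container.register("mystream2", body -> log.add("OrderStreamListener2 got " + body));
        // produceMsg?msg=888 writes the same message to both streams
        container.dispatch("mystream", "888");
        container.dispatch("mystream2", "888");
        log.forEach(System.out::println);
    }
}
```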

Question 4: how to detect and handle dead letters

What is a dead letter? A message that consumers cannot consume.

Environment: one producer and two consumer nodes. The producer adds messages to mystream, and both consumer nodes, consumer-1 and consumer-2, consume from group-1.

Logic: suppose consumer-1 fails to process a message and never ACKs it. The producer periodically scans group-1 for un-ACKed messages (the same information XPENDING returns above), which gives each message's idle time since it was last delivered and its delivery count. If a message has been idle for more than 20 seconds (adjust this to your system's needs) and its delivery count is 1, we transfer it to consumer-2 with XCLAIM. If the delivery count is 2, the message has already been transferred once and still has not been consumed, so we treat it as a problem message, ACK it manually and record it.
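The rule above boils down to a small decision function. This is a minimal sketch, not the scheduler itself: the names are hypothetical, and it compares full durations, whereas the RedisStreamScheduled class compares whole seconds with `getSeconds() > 20`, which only differs for idle times between 20 and 21 seconds.

```java
import java.time.Duration;

/**
 * Hypothetical sketch of the dead-letter rule described above (not Spring Data Redis API).
 */
public final class DeadLetterRule {

    public enum Action {
        WAIT,                    // not idle long enough yet
        CLAIM_TO_OTHER_CONSUMER, // first failure: XCLAIM it to the other consumer
        ACK_AS_DEAD_LETTER       // already transferred and still not consumed: XACK and record it
    }

    /**
     * @param idle          time since the message was last delivered (XPENDING idle time)
     * @param deliveryCount how many times it has been delivered (XPENDING delivery counter)
     * @param threshold     idle time after which we act (20 seconds in this article)
     */
    public static Action decide(Duration idle, long deliveryCount, Duration threshold) {
        if (idle.compareTo(threshold) <= 0) {
            return Action.WAIT;
        }
        return deliveryCount == 1 ? Action.CLAIM_TO_OTHER_CONSUMER : Action.ACK_AS_DEAD_LETTER;
    }

    public static void main(String[] args) {
        Duration threshold = Duration.ofSeconds(20);
        System.out.println(decide(Duration.ofSeconds(5), 1, threshold));
        System.out.println(decide(Duration.ofMillis(74328), 1, threshold)); // the "777" message from Question 2
        System.out.println(decide(Duration.ofMillis(64710), 2, threshold)); // after one transfer
    }
}
```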

Enough explanation; here is the code:

Configure producer

Modify the configuration.

Modify application.yml as follows:

```yaml
redisstream:
  stream: mystream
  stream2: mystream2
  group: group-1
  consumer1: consumer-1
  consumer2: consumer-2
```

Modify the RedisStreamConfig class as follows:

```java
import lombok.Data;
import org.springframework.boot.context.properties.ConfigurationProperties;
import org.springframework.stereotype.Component;

@Data
@Component
@ConfigurationProperties(prefix = "redisstream")
public class RedisStreamConfig {
    private String stream;
    private String stream2;
    private String group;
    private String consumer1;
    private String consumer2;
}
```

Add a scheduled task that scans for messages that have not been ACKed; the comments explain the logic. Note that @Scheduled methods only run when scheduling is enabled, for example with @EnableScheduling on the Spring Boot application class.

```java
import static org.springframework.data.redis.connection.RedisStreamCommands.XClaimOptions.minIdle;

import java.nio.charset.StandardCharsets;
import java.time.Duration;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.dao.DataAccessException;
import org.springframework.data.domain.Range;
import org.springframework.data.redis.connection.RedisConnection;
import org.springframework.data.redis.connection.stream.ByteRecord;
import org.springframework.data.redis.connection.stream.Consumer;
import org.springframework.data.redis.connection.stream.PendingMessages;
import org.springframework.data.redis.connection.stream.PendingMessagesSummary;
import org.springframework.data.redis.connection.stream.RecordId;
import org.springframework.data.redis.core.RedisCallback;
import org.springframework.data.redis.core.StreamOperations;
import org.springframework.data.redis.core.StringRedisTemplate;
import org.springframework.scheduling.annotation.Scheduled;
import org.springframework.stereotype.Component;

@Component
public class RedisStreamScheduled {

    private static final Logger LOGGER = LoggerFactory.getLogger(RedisStreamScheduled.class);

    @Autowired
    private StringRedisTemplate stringRedisTemplate;

    @Autowired
    private RedisStreamConfig redisStreamConfig;

    /**
     * Runs every 5 seconds and handles dead letters: a message that has not been ACKed for more
     * than 20 seconds is transferred to the other consumer if it has been delivered once; if it
     * has already been delivered twice, it is ACKed manually and the problem is recorded.
     */
    @Scheduled(cron = "0/5 * * * * ?")
    public void scanPendingMsg() {
        StreamOperations<String, String, String> streamOperations = this.stringRedisTemplate.opsForStream();
        // Pending-message summary of the group (equivalent to XPENDING key group)
        PendingMessagesSummary pendingMessagesSummary =
                streamOperations.pending(redisStreamConfig.getStream(), redisStreamConfig.getGroup());
        // Total number of pending messages
        long totalPendingMessages = pendingMessagesSummary.getTotalPendingMessages();
        if (totalPendingMessages == 0) {
            return;
        }
        String groupName = pendingMessagesSummary.getGroupName();
        // Smallest and largest ID in the pending list
        String minMessageId = pendingMessagesSummary.minMessageId();
        String maxMessageId = pendingMessagesSummary.maxMessageId();
        LOGGER.info("Stream: {}, consumer group: {}, {} pending messages in total, max ID={}, min ID={}",
                redisStreamConfig.getStream(), groupName, totalPendingMessages, maxMessageId, minMessageId);

        // Number of pending messages per consumer
        Map<String, Long> pendingMessagesPerConsumer = pendingMessagesSummary.getPendingMessagesPerConsumer();
        // consumer -> IDs of messages to transfer to another consumer
        Map<String, List<RecordId>> consumerRecordIdMap = new HashMap<>();

        pendingMessagesPerConsumer.forEach((consumer, consumerTotalPendingMessages) -> {
            List<RecordId> list = new ArrayList<>();
            LOGGER.info("Consumer: {}, {} pending messages in total", consumer, consumerTotalPendingMessages);
            if (consumerTotalPendingMessages > 0) {
                // Read up to 10 entries of this consumer's pending list, from ID 0 to the largest ID
                PendingMessages pendingMessages = streamOperations.pending(redisStreamConfig.getStream(),
                        Consumer.from(redisStreamConfig.getGroup(), consumer), Range.closed("0", "+"), 10);
                pendingMessages.forEach(message -> {
                    RecordId recordId = message.getId();
                    // Time since the message was last delivered to a consumer
                    Duration elapsedTimeSinceLastDelivery = message.getElapsedTimeSinceLastDelivery();
                    // How many times the message has been delivered
                    long deliveryCount = message.getTotalDeliveryCount();
                    // Only act on messages that have been idle for more than 20 seconds
                    if (elapsedTimeSinceLastDelivery.getSeconds() > 20) {
                        if (1 == deliveryCount) {
                            // Delivered once: transfer it to the other consumer
                            list.add(recordId);
                        } else {
                            // Already transferred and still not consumed: ACK it and record the problem
                            LOGGER.info("Manually ACK the message and record the exception, id={}, elapsedTimeSinceLastDelivery={}, deliveryCount={}",
                                    recordId, elapsedTimeSinceLastDelivery, deliveryCount);
                            streamOperations.acknowledge(redisStreamConfig.getStream(), redisStreamConfig.getGroup(), recordId);
                        }
                    }
                });
                if (!list.isEmpty()) {
                    consumerRecordIdMap.put(consumer, list);
                }
            }
        });

        // Finally, transfer the collected messages
        if (!consumerRecordIdMap.isEmpty()) {
            this.changeConsumer(consumerRecordIdMap);
        }
    }

    /**
     * Transfers messages to another consumer of the group (XCLAIM).
     */
    private void changeConsumer(Map<String, List<RecordId>> consumerRecordIdMap) {
        List<String> consumers = Arrays.asList(redisStreamConfig.getConsumer1(), redisStreamConfig.getConsumer2());
        consumerRecordIdMap.forEach((oldConsumer, recordIds) -> {
            // Pick a consumer other than the current owner
            String newConsumer = consumers.stream()
                    .filter(s -> !s.equals(oldConsumer))
                    .findFirst()
                    .orElseThrow(() -> new IllegalStateException("No other consumer configured"));
            List<ByteRecord> retVal = this.stringRedisTemplate.execute(new RedisCallback<List<ByteRecord>>() {
                @Override
                public List<ByteRecord> doInRedis(RedisConnection redisConnection) throws DataAccessException {
                    // XCLAIM: move the messages to newConsumer if they have been idle for at least 10 seconds
                    return redisConnection.streamCommands().xClaim(
                            redisStreamConfig.getStream().getBytes(StandardCharsets.UTF_8),
                            redisStreamConfig.getGroup(), newConsumer, minIdle(Duration.ofSeconds(10)).ids(recordIds));
                }
            });
            for (ByteRecord byteRecord : retVal) {
                LOGGER.info("Changed the consumer of the message: id={}, value={}, newConsumer={}",
                        byteRecord.getId(), byteRecord.getValue(), newConsumer);
            }
        });
    }
}
```

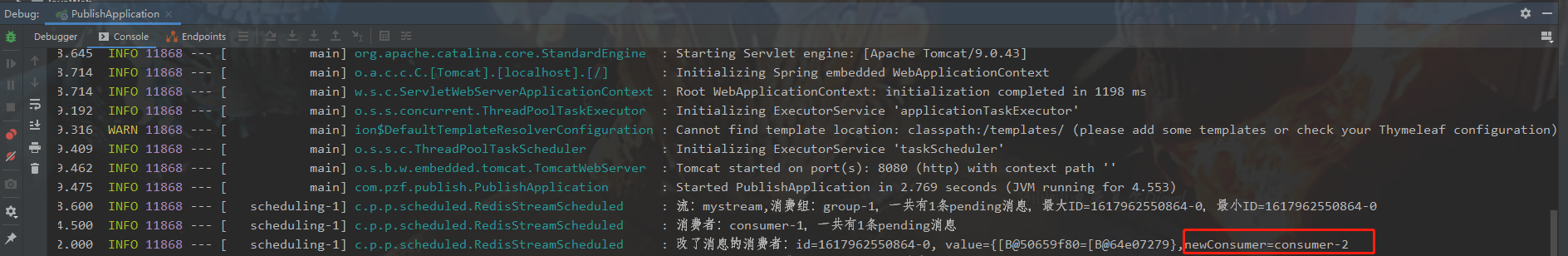

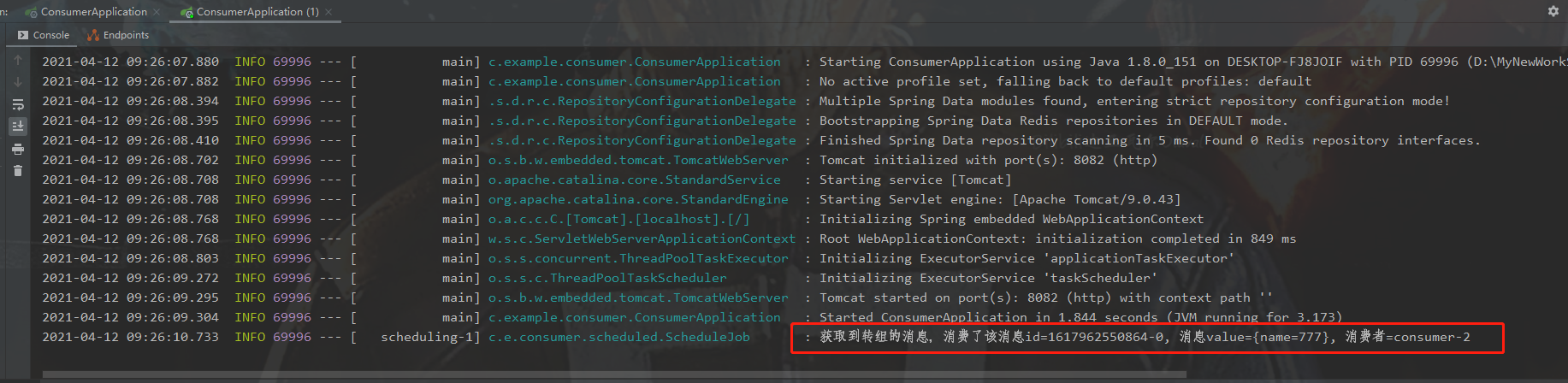

Remember the 777 message that was delivered to consumer 1 but never ACKed? It has now been pending for far more than 20 seconds. Let's run the program and see whether it is transferred to consumer 2.

The results are as follows:

The log shows that the transfer succeeded. Now run XPENDING mystream group-1 0 + 10 consumer-2 in Redis to check whether the message has moved to consumer-2:

```
127.0.0.1:6379> XPENDING mystream group-1 0 + 10 consumer-2
1) 1) "1617962550864-0"
   2) "consumer-2"
   3) (integer) 64710
   4) (integer) 2
127.0.0.1:6379>
```

The message has clearly been transferred to consumer-2. In the output, 3) is the time in milliseconds since the message was last delivered, and 4) is its delivery count, which XCLAIM increased from 1 to 2.
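Why does the count go from 1 to 2? XCLAIM only takes over a message that has been idle for at least the given minimum idle time (the scheduler passes `minIdle(Duration.ofSeconds(10))`); when it succeeds it changes the owner, resets the idle time and increments the delivery counter (unless the JUSTID option is used). The simplified model below (not Redis code; the class is hypothetical) shows these rules, including why a second claim issued right afterwards is refused: the reset idle time is below the minimum, which protects against two scanners claiming the same message twice.

```java
/**
 * Simplified model (not Redis code) of how XCLAIM changes one pending entry.
 */
public final class ClaimModel {

    /** One entry of a group's pending list. */
    public static final class PendingEntry {
        private String owner;
        private long lastDeliveryMillis;
        private long deliveryCount;

        public PendingEntry(String owner, long lastDeliveryMillis, long deliveryCount) {
            this.owner = owner;
            this.lastDeliveryMillis = lastDeliveryMillis;
            this.deliveryCount = deliveryCount;
        }

        public String owner() {
            return owner;
        }

        public long deliveryCount() {
            return deliveryCount;
        }

        public long idleMillis(long nowMillis) {
            return nowMillis - lastDeliveryMillis;
        }
    }

    /**
     * Like XCLAIM key group newOwner minIdleMillis id: succeeds only if the entry has been idle
     * for at least minIdleMillis; then the owner changes, the idle time restarts from zero and
     * the delivery count goes up by one.
     */
    public static boolean claim(PendingEntry entry, String newOwner, long minIdleMillis, long nowMillis) {
        if (entry.idleMillis(nowMillis) < minIdleMillis) {
            return false;
        }
        entry.owner = newOwner;
        entry.lastDeliveryMillis = nowMillis;
        entry.deliveryCount++;
        return true;
    }

    public static void main(String[] args) {
        // "777" was delivered to consumer-1 at t=0 and never ACKed
        PendingEntry entry = new PendingEntry("consumer-1", 0L, 1L);
        System.out.println(claim(entry, "consumer-2", 10_000L, 74_328L) + " " + entry.owner() + " " + entry.deliveryCount());
        // A second scanner trying again immediately is refused
        System.out.println(claim(entry, "consumer-1", 10_000L, 74_330L));
    }
}
```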

However, the consumer2 project does not receive this transferred message yet: XCLAIM only moves the entry from consumer-1's pending list to consumer-2's pending list, and the listener reads only new messages. To consume it, consumer2 must scan its pending list regularly with a scheduled task.

Let's take a look at how to make consumer 2 consume this message

Configure consumer consumer2:

Modify the configuration.

Modify application.yml as follows:

```yaml
redisstream:
  stream: mystream
  group: group-1
  consumer: consumer-2
```

Modify the RedisStreamConfig class as follows:

```java
import lombok.Data;
import org.springframework.boot.context.properties.ConfigurationProperties;
import org.springframework.stereotype.Component;

@Data
@Component
@ConfigurationProperties(prefix = "redisstream")
public class RedisStreamConfig {
    private String stream;
    private String group;
    private String consumer;
}
```

Add a scheduled task that scans the pending list regularly. Note the check if (totalDeliveryCount > 1): we only consume messages that have been delivered more than once, that is, messages transferred here by XCLAIM, so that new messages the listener is still handling are not consumed twice. As in the producer, scheduling must be enabled (for example with @EnableScheduling).

```java
import java.util.List;

import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.data.domain.Range;
import org.springframework.data.redis.connection.stream.Consumer;
import org.springframework.data.redis.connection.stream.MapRecord;
import org.springframework.data.redis.connection.stream.PendingMessages;
import org.springframework.data.redis.connection.stream.RecordId;
import org.springframework.data.redis.core.StreamOperations;
import org.springframework.data.redis.core.StringRedisTemplate;
import org.springframework.scheduling.annotation.Scheduled;
import org.springframework.stereotype.Component;

@Component
public class ScheduleJob {

    static final Logger LOGGER = LoggerFactory.getLogger(ScheduleJob.class);

    @Autowired
    StringRedisTemplate stringRedisTemplate;

    @Autowired
    RedisStreamConfig redisStreamConfig;

    /**
     * Runs every 5 seconds and consumes the messages transferred to this consumer
     * (delivery count greater than 1).
     */
    @Scheduled(cron = "0/5 * * * * ?")
    public void consumeTransferredMessages() {
        StreamOperations<String, String, String> streamOperations = this.stringRedisTemplate.opsForStream();
        // Read this consumer's pending list. Messages end up here because of non-business exceptions
        // (interface timeout, server downtime, etc.); business exceptions such as field parsing
        // failures should be recorded in an exception table or in Redis instead.
        PendingMessages pendingMessages = streamOperations.pending(redisStreamConfig.getStream(),
                Consumer.from(redisStreamConfig.getGroup(), redisStreamConfig.getConsumer()));
        pendingMessages.forEach(pendingMessage -> {
            // Delivery count: greater than 1 means the message was transferred here by XCLAIM
            long totalDeliveryCount = pendingMessage.getTotalDeliveryCount();
            if (totalDeliveryCount > 1) {
                try {
                    RecordId id = pendingMessage.getId();
                    // Fetch exactly this entry with a closed range: XRANGE key id id
                    List<MapRecord<String, String, String>> result = streamOperations.range(
                            redisStreamConfig.getStream(), Range.closed(id.toString(), id.toString()));
                    if (result.isEmpty()) {
                        // The entry is gone from the stream (e.g. trimmed): just clear it from the pending list
                        streamOperations.acknowledge(redisStreamConfig.getStream(), redisStreamConfig.getGroup(), id);
                        return;
                    }
                    MapRecord<String, String, String> entries = result.get(0);
                    // Consume the message
                    LOGGER.info("Got a transferred message, consuming it: id={}, value={}, consumer={}",
                            entries.getId(), entries.getValue(), redisStreamConfig.getConsumer());
                    // Manually ACK the message
                    streamOperations.acknowledge(redisStreamConfig.getGroup(), entries);
                } catch (Exception e) {
                    // Exception handling
                    e.printStackTrace();
                }
            }
        });
    }
}
```

Run the program and check the results: the message is consumed successfully and ACKed manually.

Redis now shows no messages waiting to be consumed:

```
127.0.0.1:6379> XPENDING mystream group-1 0 + 10 consumer-2
(empty list or set)
127.0.0.1:6379> XPENDING mystream group-1 0 + 10 consumer-1
(empty list or set)
127.0.0.1:6379>
```

Question 5: stream data stays in memory indefinitely. What if memory runs short?

Redis Streams anticipate this problem too. As the official documentation describes, there are mainly two ways to cap a stream's length: the XTRIM command and the MAXLEN option of XADD.

Of the two, I find XTRIM the more convenient choice from Java, so I added a scheduled task to the producer that trims the stream regularly:

```java
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.data.redis.core.StringRedisTemplate;
import org.springframework.scheduling.annotation.Scheduled;
import org.springframework.stereotype.Component;

@Component
public class CleanStreamJob {

    @Autowired
    private StringRedisTemplate stringRedisTemplate;

    @Autowired
    private RedisStreamConfig redisStreamConfig;

    @Scheduled(cron = "0/5 * * * * ?")
    public void trimStream() {
        // Trim the stream regularly, keeping exactly the latest 3 entries (XTRIM key MAXLEN 3)
        this.stringRedisTemplate.opsForStream().trim(redisStreamConfig.getStream(), 3L);
        // Approximate trimming keeps about 3 entries, never fewer (XTRIM key MAXLEN ~ 3)
        // this.stringRedisTemplate.opsForStream().trim(redisStreamConfig.getStream(), 3L, true);
    }
}
```

The timer schedule and the number of entries to keep can be adjusted to your business needs. Keep in mind that XTRIM also deletes entries that are still in a group's pending list, so keep enough entries for unacknowledged messages to be retried.

Before the job ran, the stream held many entries; afterwards only the latest three should remain. Check with XINFO STREAM mystream:

```
127.0.0.1:6379> XINFO STREAM mystream
 1) "length"
 2) (integer) 3
 3) "radix-tree-keys"
 4) (integer) 1
 5) "radix-tree-nodes"
 6) (integer) 2
 7) "groups"
 8) (integer) 2
 9) "last-generated-id"
10) "1617972388360-0"
11) "first-entry"
12) 1) "1617960242592-0"
    2) 1) "name"
       2) "666"
13) "last-entry"
14) 1) "1617972388360-0"
    2) 1) "name"
       2) "888"
127.0.0.1:6379>
```
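The commented-out approximate variant (`MAXLEN ~`) behaves differently: Redis then removes only whole internal nodes of the stream, so it keeps at least the requested number of entries and often considerably more. The simplified model below illustrates the difference under explicit assumptions: entries are packed oldest-first into nodes of `stream-node-max-entries` (100 by default), and the `stream-node-max-bytes` limit is ignored. It is not Redis code.

```java
import java.util.ArrayDeque;
import java.util.Deque;

/**
 * Simplified model (not Redis code) of XTRIM MAXLEN n versus XTRIM MAXLEN ~ n.
 * Assumption: entries are packed oldest-first into internal nodes of nodeSize entries;
 * the byte limit per node is ignored.
 */
public final class TrimModel {

    /** Exact trimming: drop the oldest entries until exactly maxLen remain. Returns how many were removed. */
    public static int trimExact(Deque<String> stream, int maxLen) {
        int removed = 0;
        while (stream.size() > maxLen) {
            stream.pollFirst();
            removed++;
        }
        return removed;
    }

    /** Approximate trimming: drop whole head nodes, but only while at least maxLen entries would remain. */
    public static int trimApprox(Deque<String> stream, int maxLen, int nodeSize) {
        int removed = 0;
        while (!stream.isEmpty()) {
            int headNode = Math.min(nodeSize, stream.size());
            if (stream.size() - headNode < maxLen) {
                break; // dropping this node would leave fewer than maxLen entries
            }
            for (int i = 0; i < headNode; i++) {
                stream.pollFirst();
            }
            removed += headNode;
        }
        return removed;
    }

    /** Builds a stream of n entries, oldest first. */
    public static Deque<String> streamOf(int n) {
        Deque<String> stream = new ArrayDeque<>();
        for (int i = 0; i < n; i++) {
            stream.addLast("entry-" + i);
        }
        return stream;
    }

    public static void main(String[] args) {
        Deque<String> exact = streamOf(250);
        Deque<String> approx = streamOf(250);
        System.out.println(trimExact(exact, 3) + " removed, " + exact.size() + " left");
        System.out.println(trimApprox(approx, 3, 100) + " removed, " + approx.size() + " left");
    }
}
```

With only a handful of entries, as in this article, the approximate form may not remove anything at all, so the exact form is the one that produces the 3-entry result shown above.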

That completes the full Java implementation of a distributed message queue on Redis Stream.

Writing this took real effort; if it helped you, a like would be appreciated.

Git repository: https://gitee.com/adobepzf/redis-stream.git