Kerberos authentication and Kafka integration on CentOS 7

Environment: (1) CentOS 7.6 (2) kafka_2.12-0.10.2.2 (3) Kerberos (4) flink-1.11.3-bin-scala_2.11 (5) JDK 1.8. Note: "b.kuxiao" is my hostname.

1, Kafka installation

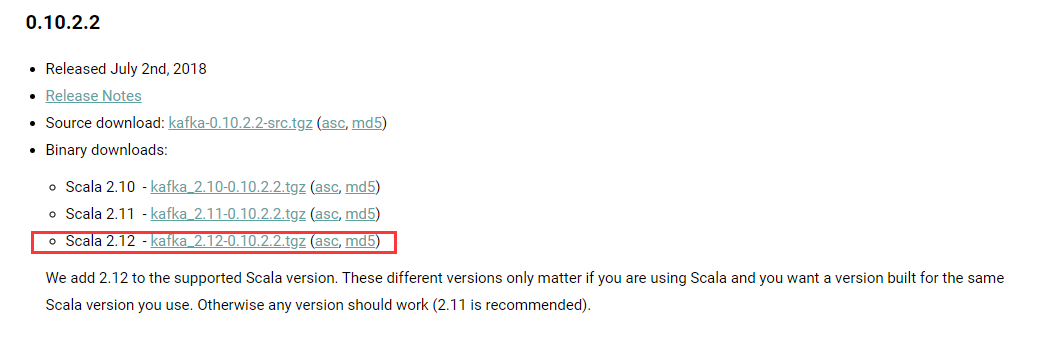

1.1. Download Kafka from the official website

Kafka official website: http://kafka.apache.org/downloads

Choose the version you need (I use version 0.10.2.2 in this article):

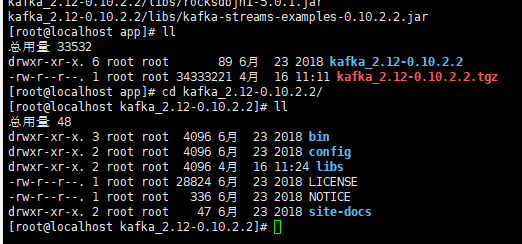

1.2. Extract the tgz archive

tar -zxvf kafka_2.12-0.10.2.2.tgz

1.3. Modify the configuration files

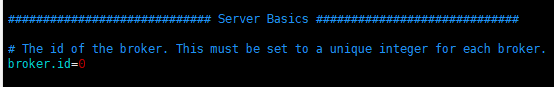

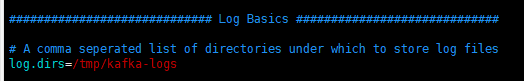

Enter config / server Properties file modification

broker.id: because kafka is generally deployed in a cluster, each stand-alone machine has a broker ID, because I only have one stand-alone test here, so it's OK to specify 0

log.dirs: specify kafka's log directory

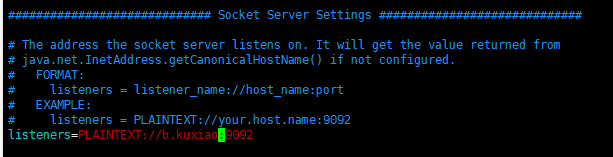

listeners: uncomment and change to "PLAINTEXT://b.kuxiao:9092", where "b.kuxiao" is my hostname (you can use the hostnamectl command to view your own hostname)

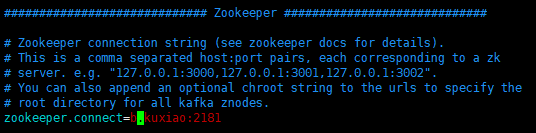

zookeeper.connect: change it to your own hostname
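Before starting anything, it can help to print the values Kafka will actually read, because a line left commented out (for example listeners) silently falls back to the default. A minimal sketch using only java.util.Properties; ServerPropsCheck is a hypothetical helper name, and it assumes it is run from the Kafka directory unless a path is passed.

```java
import java.io.IOException;
import java.io.Reader;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.Properties;

final class ServerPropsCheck {
    // The keys edited in config/server.properties in this step.
    static final String[] KEYS = {"broker.id", "log.dirs", "listeners", "zookeeper.connect"};

    /** Parses properties text; lines starting with # are comments and are ignored. */
    static Properties load(Reader reader) {
        Properties props = new Properties();
        try {
            props.load(reader);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return props;
    }

    public static void main(String[] args) throws IOException {
        // Assumes the working directory is the Kafka installation unless a path is given.
        String path = args.length > 0 ? args[0] : "config/server.properties";
        try (Reader reader = Files.newBufferedReader(Paths.get(path))) {
            Properties props = load(reader);
            for (String key : KEYS) {
                System.out.println(key + " = " + props.getProperty(key, "<not set>"));
            }
        }
    }
}
```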

Enter config / producer Properties file modification

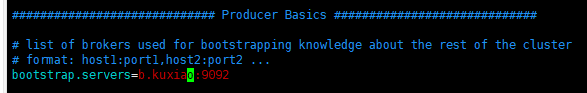

bootstrap.servers: modify to your own hostname

Enter config / consumer Properties file modification

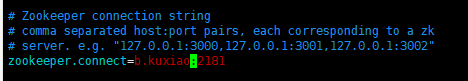

zookeeper.connect: modify to your own hostname

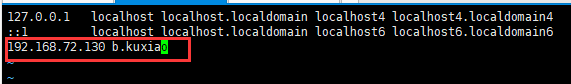

Run vim /etc/hosts and map your hostname to your IP address ("192.168.72.130" is my intranet IP address).
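To confirm the new /etc/hosts entry from the JVM side, a few lines of Java with InetAddress are enough, since Kafka, ZooKeeper and Flink all resolve names through the JDK resolver. This is a minimal sketch. The class name HostCheck is made up for illustration, and the default hostname is the one used in this article.

```java
import java.net.InetAddress;
import java.net.UnknownHostException;

// Resolves a hostname with the JDK resolver (on CentOS this consults /etc/hosts).
final class HostCheck {

    /** Returns the resolved IP address, or null if the name cannot be resolved. */
    static String resolve(String hostname) {
        try {
            return InetAddress.getByName(hostname).getHostAddress();
        } catch (UnknownHostException e) {
            return null;
        }
    }

    public static void main(String[] args) {
        // Defaults to this article's hostname; pass your own as the first argument.
        String host = args.length > 0 ? args[0] : "b.kuxiao";
        String ip = resolve(host);
        System.out.println(ip == null ? host + " does not resolve" : host + " -> " + ip);
    }
}
```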

1.4. Start ZooKeeper (the one bundled with Kafka)

./bin/zookeeper-server-start.sh ./config/zookeeper.properties

or, to keep it running in the background:

nohup ./bin/zookeeper-server-start.sh ./config/zookeeper.properties &

1.5. Start Kafka

./bin/kafka-server-start.sh ./config/server.properties

or, in the background:

nohup ./bin/kafka-server-start.sh ./config/server.properties &

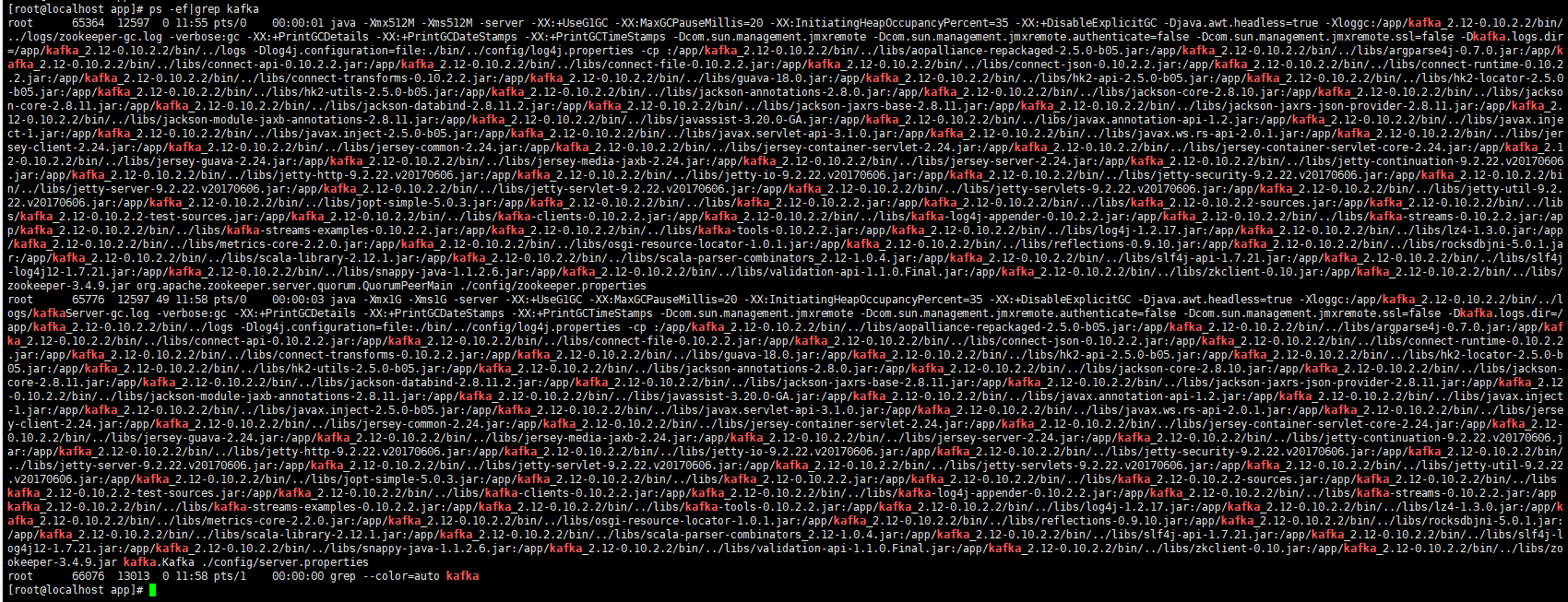

1.6. Run ps -ef | grep kafka to check that Kafka started successfully

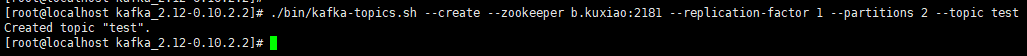

1.7. Create a test topic (test)

./bin/kafka-topics.sh --create --zookeeper b.kuxiao:2181 --replication-factor 1 --partitions 2 --topic test

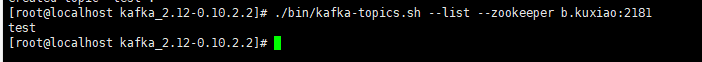

List existing topics:

./bin/kafka-topics.sh --list --zookeeper b.kuxiao:2181

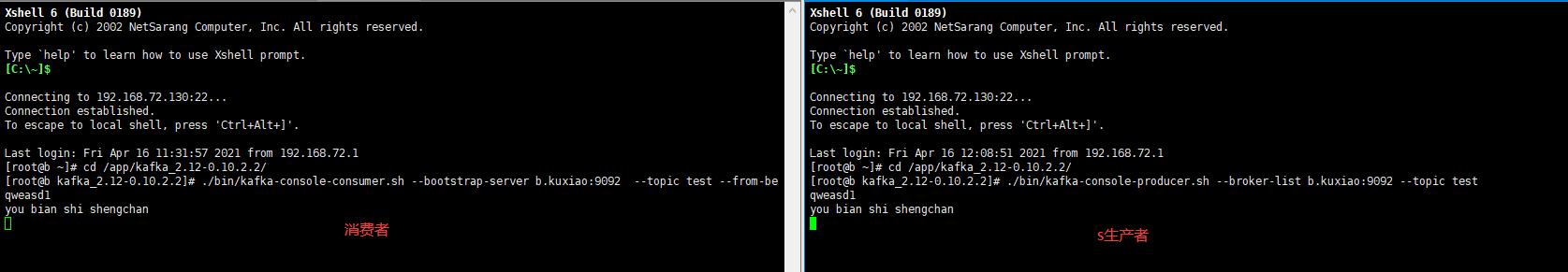

1.8. Start a console consumer

./bin/kafka-console-consumer.sh --bootstrap-server b.kuxiao:9092 --topic test --from-beginning

1.9. Start a console producer

./bin/kafka-console-producer.sh --broker-list b.kuxiao:9092 --topic test

1.10. Test
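Lines typed into the producer should appear in the consumer. If either console client cannot connect, a plain TCP check against the broker (9092) and ZooKeeper (2181) ports tells a networking problem apart from a Kafka problem. This is a minimal sketch. PortCheck is a hypothetical name, and the ports are the defaults used above.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

final class PortCheck {

    /** True if a TCP connection to host:port succeeds within timeoutMs. */
    static boolean isReachable(String host, int port, int timeoutMs) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMs);
            return true;
        } catch (IOException e) {
            // Covers refused connections, timeouts and unresolvable hostnames.
            return false;
        }
    }

    public static void main(String[] args) {
        String host = args.length > 0 ? args[0] : "b.kuxiao";
        System.out.println("broker " + host + ":9092 reachable: " + isReachable(host, 9092, 3000));
        System.out.println("zookeeper " + host + ":2181 reachable: " + isReachable(host, 2181, 3000));
    }
}
```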

2, Kerberos installation

2.1. Kerberos server installation

2.1.1. Install

yum install krb5-server

2.1.2. Modify the configuration files

Edit /var/kerberos/krb5kdc/kdc.conf:

vim /var/kerberos/krb5kdc/kdc.conf

[kdcdefaults]

kdc_ports = 88

kdc_tcp_ports = 88

[realms]

KUXIAO.COM = {

#master_key_type = aes256-cts

acl_file = /var/kerberos/krb5kdc/kadm5.acl

dict_file = /usr/share/dict/words

admin_keytab = /var/kerberos/krb5kdc/kadm5.keytab

supported_enctypes = aes256-cts:normal aes128-cts:normal des3-hmac-sha1:normal arcfour-hmac:normal camellia256-cts:normal camellia128-cts:normal des-hmac-sha1:normal des-cbc-md5:normal des-cbc-crc:normal

}

Edit /var/kerberos/krb5kdc/kadm5.acl (this line grants every */admin principal all privileges):

vim /var/kerberos/krb5kdc/kadm5.acl

*/admin@KUXIAO.COM *

Edit /etc/krb5.conf:

vim /etc/krb5.conf

# Configuration snippets may be placed in this directory as well

includedir /etc/krb5.conf.d/

[logging]

default = FILE:/var/log/krb5libs.log

kdc = FILE:/var/log/krb5kdc.log

admin_server = FILE:/var/log/kadmind.log

[libdefaults]

default_realm = KUXIAO.COM

dns_lookup_kdc = false

dns_lookup_realm = false

ticket_lifetime = 86400

# renew_lifetime = 604800

forwardable = true

default_tgs_enctypes = aes128-cts aes256-cts-hmac-sha1-96 des3-hmac-sha1 arcfour-hmac

default_tkt_enctypes = aes128-cts aes256-cts-hmac-sha1-96 des3-hmac-sha1 arcfour-hmac

permitted_enctypes = aes128-cts aes256-cts-hmac-sha1-96 des3-hmac-sha1 arcfour-hmac

udp_preference_limit = 1

kdc_timeout = 60000

[realms]

KUXIAO.COM = {

# b.kuxiao is my hostname (krb5.conf only treats # as a comment at the start of a line)

kdc = b.kuxiao

admin_server = b.kuxiao

}

# EXAMPLE.COM = {

# kdc = kerberos.example.com

# admin_server = kerberos.example.com

# }

[domain_realm]

# .example.com = EXAMPLE.COM

# example.com = EXAMPLE.COM

2.1.3. Initialize KDC database

You will be prompted for a master password for the KDC database. Don't forget it.

kdb5_util create -r KUXIAO.COM -s

Loading random data

Initializing database '/var/kerberos/krb5kdc/principal' for realm 'KUXIAO.COM',

master key name 'K/M@KUXIAO.COM'

You will be prompted for the database Master Password.

It is important that you NOT FORGET this password.

Enter KDC database master key:

Re-enter KDC database master key to verify:

2.1.4. Start KDC service

systemctl start krb5kdc # start the service

systemctl status krb5kdc.service # view the running status

systemctl enable krb5kdc # start automatically at boot

● krb5kdc.service - Kerberos 5 KDC

Loaded: loaded (/usr/lib/systemd/system/krb5kdc.service; disabled; vendor preset: disabled)

Active: active (running) since Fri 2021-04-16 14:41:38 CST; 7s ago

Process: 77245 ExecStart=/usr/sbin/krb5kdc -P /var/run/krb5kdc.pid $KRB5KDC_ARGS (code=exited, status=0/SUCCESS)

Main PID: 77249 (krb5kdc)

Tasks: 1

CGroup: /system.slice/krb5kdc.service

└─77249 /usr/sbin/krb5kdc -P /var/run/krb5kdc.pid

Apr 16 14:41:38 b.kuxiao systemd[1]: Starting Kerberos 5 KDC...

Apr 16 14:41:38 b.kuxiao systemd[1]: Started Kerberos 5 KDC.

2.1.5. Start the kadmin service

systemctl start kadmin # start the service

systemctl status kadmin # view the running status

systemctl enable kadmin # start automatically at boot

● kadmin.service - Kerberos 5 Password-changing and Administration

Loaded: loaded (/usr/lib/systemd/system/kadmin.service; disabled; vendor preset: disabled)

Active: active (running) since Fri 2021-04-16 14:44:12 CST; 22s ago

Process: 77433 ExecStart=/usr/sbin/_kadmind -P /var/run/kadmind.pid $KADMIND_ARGS (code=exited, status=0/SUCCESS)

Main PID: 77438 (kadmind)

Tasks: 1

CGroup: /system.slice/kadmin.service

└─77438 /usr/sbin/kadmind -P /var/run/kadmind.pid

Apr 16 14:44:12 b.kuxiao systemd[1]: Starting Kerberos 5 Password-changing and Administration...

Apr 16 14:44:12 b.kuxiao systemd[1]: Started Kerberos 5 Password-changing and Administration.

2.1.6. Set up the administrator

/usr/sbin/kadmin.local -q "addprinc admin/admin"

Authenticating as principal root/admin@KUXIAO.COM with password.

WARNING: no policy specified for admin/admin@KUXIAO.COM; defaulting to no policy

Enter password for principal "admin/admin@KUXIAO.COM":

Re-enter password for principal "admin/admin@KUXIAO.COM":

Principal "admin/admin@KUXIAO.COM" created.

2.1.7. Day-to-day Kerberos operations

Log in as the administrator: on the KDC host itself you can use kadmin.local directly; on other machines, authenticate with kinit first and then run kadmin.

kadmin.local

or

[root@localhost app]# kinit admin/admin

Password for admin/admin@KUXIAO.COM:

[root@localhost app]# kadmin

Authenticating as principal admin/admin@KUXIAO.COM with password.

Password for admin/admin@KUXIAO.COM:

kadmin:

Add, list and delete principals:

kadmin.local: addprinc -pw 123456 kafka/b.kuxiao@KUXIAO.COM # create a new principal

WARNING: no policy specified for kafka/b.kuxiao@KUXIAO.COM; defaulting to no policy

Principal "kafka/b.kuxiao@KUXIAO.COM" created.

kadmin.local: listprincs # list all principals

K/M@KUXIAO.COM

admin/admin@KUXIAO.COM

kadmin/admin@KUXIAO.COM

kadmin/b.kuxiao@KUXIAO.COM

kadmin/changepw@KUXIAO.COM

kafka/b.kuxiao@KUXIAO.COM

kiprop/b.kuxiao@KUXIAO.COM

krbtgt/KUXIAO.COM@KUXIAO.COM

test@KUXIAO.COM

kadmin.local: delprinc test # delete the test principal

Are you sure you want to delete the principal "test@KUXIAO.COM"? (yes/no): yes

Principal "test@KUXIAO.COM" deleted.

Make sure that you have removed this principal from all ACLs before reusing.
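The principal kafka/b.kuxiao@KUXIAO.COM has three parts: the primary (kafka), the instance (the broker hostname) and the realm. A Kafka client requests a service ticket for <sasl.kerberos.service.name>/<broker host>@REALM, so the sasl.kerberos.service.name set later must equal the primary. The small parser below illustrates the split. PrincipalParts is a hypothetical name, and the parser ignores escaped / and @ characters, which do not occur in this article.

```java
// Splits a principal such as kafka/b.kuxiao@KUXIAO.COM into primary, instance and realm.
// Escaped '/' or '@' characters are not handled; the principals in this article contain none.
final class PrincipalParts {
    final String primary;
    final String instance; // null when there is no "/instance" part, e.g. test@KUXIAO.COM
    final String realm;    // null when there is no "@REALM" suffix

    private PrincipalParts(String primary, String instance, String realm) {
        this.primary = primary;
        this.instance = instance;
        this.realm = realm;
    }

    static PrincipalParts parse(String principal) {
        String name = principal;
        String realm = null;
        int at = name.lastIndexOf('@');
        if (at >= 0) {
            realm = name.substring(at + 1);
            name = name.substring(0, at);
        }
        int slash = name.indexOf('/');
        if (slash < 0) {
            return new PrincipalParts(name, null, realm);
        }
        return new PrincipalParts(name.substring(0, slash), name.substring(slash + 1), realm);
    }

    public static void main(String[] args) {
        PrincipalParts p = parse("kafka/b.kuxiao@KUXIAO.COM");
        // Prints: primary=kafka instance=b.kuxiao realm=KUXIAO.COM
        System.out.println("primary=" + p.primary + " instance=" + p.instance + " realm=" + p.realm);
    }
}
```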

Generate a keytab with the xst or ktadd command (xst is an alias for ktadd):

kadmin.local: ktadd -k /app/kafka.keytab -norandkey kafka/b.kuxiao

Entry for principal kafka/b.kuxiao with kvno 1, encryption type aes256-cts-hmac-sha1-96 added to keytab WRFILE:/app/kafka.keytab.

Entry for principal kafka/b.kuxiao with kvno 1, encryption type aes128-cts-hmac-sha1-96 added to keytab WRFILE:/app/kafka.keytab.

Entry for principal kafka/b.kuxiao with kvno 1, encryption type des3-cbc-sha1 added to keytab WRFILE:/app/kafka.keytab.

Entry for principal kafka/b.kuxiao with kvno 1, encryption type arcfour-hmac added to keytab WRFILE:/app/kafka.keytab.

Entry for principal kafka/b.kuxiao with kvno 1, encryption type camellia256-cts-cmac added to keytab WRFILE:/app/kafka.keytab.

Entry for principal kafka/b.kuxiao with kvno 1, encryption type camellia128-cts-cmac added to keytab WRFILE:/app/kafka.keytab.

Entry for principal kafka/b.kuxiao with kvno 1, encryption type des-hmac-sha1 added to keytab WRFILE:/app/kafka.keytab.

Entry for principal kafka/b.kuxiao with kvno 1, encryption type des-cbc-md5 added to keytab WRFILE:/app/kafka.keytab.
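To check the keytab from Java before wiring it into Kafka, you can read it directly with the JDK's javax.security.auth.kerberos.KeyTab class (the shell equivalent is klist -kt /app/kafka.keytab). This is a minimal sketch. KeytabCheck is a hypothetical name, and reading /app/kafka.keytab needs the same file permissions as kinit.

```java
import java.io.File;
import javax.security.auth.kerberos.KerberosKey;
import javax.security.auth.kerberos.KerberosPrincipal;
import javax.security.auth.kerberos.KeyTab;

final class KeytabCheck {

    /** True if the keytab file exists; KeyTab.getInstance only binds to the file and does not read it. */
    static boolean exists(String path) {
        return KeyTab.getInstance(new File(path)).exists();
    }

    public static void main(String[] args) {
        String path = args.length > 0 ? args[0] : "/app/kafka.keytab";
        String principal = args.length > 1 ? args[1] : "kafka/b.kuxiao@KUXIAO.COM";
        if (!exists(path)) {
            System.out.println("keytab not found: " + path);
            return;
        }
        KeyTab keyTab = KeyTab.getInstance(new File(path));
        // Keys whose encryption type this JDK does not support are skipped,
        // so this list can be shorter than the ktadd output above.
        KerberosKey[] keys = keyTab.getKeys(new KerberosPrincipal(principal));
        for (KerberosKey key : keys) {
            System.out.println("kvno=" + key.getVersionNumber() + " enctype=" + key.getKeyType());
        }
        System.out.println(keys.length + " usable key(s) for " + principal);
    }
}
```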

2.2. Kerberos client installation

2.2.1. Install

yum install krb5-workstation krb5-libs krb5-auth-dialog

2.2.2. Copy the /etc/krb5.conf file modified on the server to the client (I only have one server, so I skip this step)

2.2.3. User operations

Authenticate as a user with the keytab:

kinit -kt /app/kafka.keytab kafka/b.kuxiao

[root@localhost app]# kinit -kt /app/kafka.keytab kafka/b.kuxiao

[root@localhost app]# klist

Ticket cache: FILE:/tmp/krb5cc_0

Default principal: kafka/b.kuxiao@KUXIAO.COM

Valid starting Expires Service principal

2021-04-16T15:25:28 2021-04-17T15:25:28 krbtgt/KUXIAO.COM@KUXIAO.COM

View the currently authenticated user:

klist

[root@localhost app]# klist

Ticket cache: FILE:/tmp/krb5cc_0

Default principal: kafka/b.kuxiao@KUXIAO.COM

Valid starting Expires Service principal

2021-04-16T15:25:28 2021-04-17T15:25:28 krbtgt/KUXIAO.COM@KUXIAO.COM

Delete the current credential cache:

kdestroy

[root@localhost app]# kdestroy

[root@localhost app]# klist

klist: No credentials cache found (filename: /tmp/krb5cc_0)

3, Kafka security configuration

3.1. Generate the user keytab

I reuse the keytab generated in 2.1.7:

kadmin.local: ktadd -k /app/kafka.keytab -norandkey kafka/b.kuxiao

3.2. Create the configuration files

Create jaas/client.properties in the Kafka folder with the following content:

security.protocol=SASL_PLAINTEXT

sasl.kerberos.service.name=kafka

sasl.mechanism=GSSAPI
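A Kafka client written in Java can take the same three settings as a Properties object; the Flink job in section 4 does this too. This is a minimal sketch that only builds the properties and needs no Kafka library. KerberosClientProps is a hypothetical name.

```java
import java.util.Properties;

final class KerberosClientProps {

    /** Builds the same settings as jaas/client.properties, plus the broker address. */
    static Properties saslProperties(String bootstrapServers) {
        Properties props = new Properties();
        props.setProperty("bootstrap.servers", bootstrapServers);
        props.setProperty("security.protocol", "SASL_PLAINTEXT");
        props.setProperty("sasl.mechanism", "GSSAPI");
        // Must equal the primary of the broker principal kafka/b.kuxiao@KUXIAO.COM.
        props.setProperty("sasl.kerberos.service.name", "kafka");
        return props;
    }

    public static void main(String[] args) {
        // Iteration order of Properties is unspecified.
        saslProperties("b.kuxiao:9092").forEach((key, value) -> System.out.println(key + "=" + value));
    }
}
```

Kafka clients from 0.10.2 onward can also receive the login section inline through the sasl.jaas.config property (KIP-85) instead of a separate JAAS file. This article uses the JAAS file approach.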

Create jaas/kafka_server_jaas.conf in the Kafka folder with the following content:

KafkaServer {

com.sun.security.auth.module.Krb5LoginModule required

useKeyTab=true

storeKey=true

keyTab="/app/kafka.keytab"

principal="kafka/b.kuxiao@KUXIAO.COM";

};

KafkaClient {

com.sun.security.auth.module.Krb5LoginModule required

useKeyTab=true

storeKey=true

keyTab="/app/kafka.keytab"

principal="kafka/b.kuxiao@KUXIAO.COM";

};
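You can also express the JAAS file in code by subclassing javax.security.auth.login.Configuration, which can be handy for a standalone Java client. This sketch assumes the client looks up its KafkaClient section through Configuration.getConfiguration() when sasl.jaas.config is not set. KafkaJaasConfig is a hypothetical name, and the keytab path and principal are the ones from this article.

```java
import java.util.HashMap;
import java.util.Map;
import javax.security.auth.login.AppConfigurationEntry;
import javax.security.auth.login.AppConfigurationEntry.LoginModuleControlFlag;
import javax.security.auth.login.Configuration;

// Programmatic counterpart of kafka_server_jaas.conf: serves the same Krb5LoginModule
// entry for the KafkaServer and KafkaClient sections and nothing else.
final class KafkaJaasConfig extends Configuration {
    private final String keyTab;
    private final String principal;

    KafkaJaasConfig(String keyTab, String principal) {
        this.keyTab = keyTab;
        this.principal = principal;
    }

    @Override
    public AppConfigurationEntry[] getAppConfigurationEntry(String name) {
        if (!"KafkaServer".equals(name) && !"KafkaClient".equals(name)) {
            return null; // unknown section, like a name missing from the file
        }
        Map<String, String> options = new HashMap<>();
        options.put("useKeyTab", "true");
        options.put("storeKey", "true");
        options.put("keyTab", keyTab);
        options.put("principal", principal);
        return new AppConfigurationEntry[] {
            new AppConfigurationEntry("com.sun.security.auth.module.Krb5LoginModule",
                    LoginModuleControlFlag.REQUIRED, options)
        };
    }

    public static void main(String[] args) {
        // Installs this as the JVM-wide JAAS configuration returned by Configuration.getConfiguration().
        Configuration.setConfiguration(
                new KafkaJaasConfig("/app/kafka.keytab", "kafka/b.kuxiao@KUXIAO.COM"));
        AppConfigurationEntry entry =
                Configuration.getConfiguration().getAppConfigurationEntry("KafkaClient")[0];
        System.out.println(entry.getLoginModuleName() + " " + entry.getOptions());
    }
}
```

After setConfiguration, calling new javax.security.auth.login.LoginContext("KafkaClient").login() would attempt a real keytab login against the KDC. This helps separate Kerberos problems from Kafka problems.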

3.3. Modify the configuration files

Edit config/server.properties and add the following configuration (replacing the earlier PLAINTEXT listeners line):

listeners=SASL_PLAINTEXT://b.kuxiao:9092

security.inter.broker.protocol=SASL_PLAINTEXT

sasl.mechanism.inter.broker.protocol=GSSAPI

sasl.enabled.mechanisms=GSSAPI

sasl.kerberos.service.name=kafka

super.users=User:kafka

authorizer.class.name=kafka.security.auth.SimpleAclAuthorizer

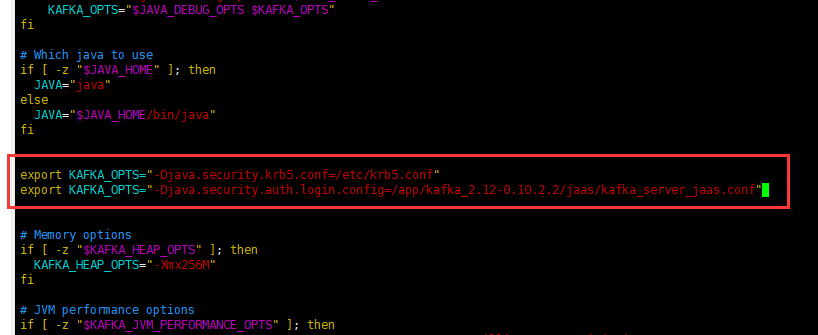
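The broker now has only one listener, so inter-broker traffic must use that listener's protocol too. If security.inter.broker.protocol were left at its default (PLAINTEXT), the broker would have no matching listener to connect through. The check below illustrates that rule. ListenerCheck is a hypothetical name. The sketch assumes listeners are named after their security protocol, as in this article, and it is not Kafka's own validation.

```java
import java.util.Properties;

final class ListenerCheck {

    /** Protocol prefix of each comma-separated listener, e.g. "SASL_PLAINTEXT". */
    static String[] protocols(String listeners) {
        String[] entries = listeners.split(",");
        String[] result = new String[entries.length];
        for (int i = 0; i < entries.length; i++) {
            String entry = entries[i].trim();
            int sep = entry.indexOf("://");
            if (sep < 0) {
                throw new IllegalArgumentException("not a listener: " + entry);
            }
            result[i] = entry.substring(0, sep);
        }
        return result;
    }

    /** True if some listener speaks security.inter.broker.protocol (Kafka's default is PLAINTEXT). */
    static boolean interBrokerProtocolListened(Properties server) {
        String listeners = server.getProperty("listeners");
        if (listeners == null) {
            return false; // this sketch does not model Kafka's default listener
        }
        String inter = server.getProperty("security.inter.broker.protocol", "PLAINTEXT");
        for (String protocol : protocols(listeners)) {
            if (protocol.equals(inter)) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        Properties server = new Properties();
        server.setProperty("listeners", "SASL_PLAINTEXT://b.kuxiao:9092");
        server.setProperty("security.inter.broker.protocol", "SASL_PLAINTEXT");
        System.out.println("inter-broker protocol has a listener: " + interBrokerProtocolListened(server));
    }
}
```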

Add the Kafka JVM parameters to the bin/kafka-run-class.sh script. Put both options in a single assignment, because a second export KAFKA_OPTS=... would overwrite the first:

export KAFKA_OPTS="-Djava.security.krb5.conf=/etc/krb5.conf -Djava.security.auth.login.config=/app/kafka_2.12-0.10.2.2/jaas/kafka_server_jaas.conf"
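A standalone Java client that is not started through kafka-run-class.sh does not see KAFKA_OPTS. It needs the same two JVM properties set another way: either with -D flags on the java command line, or in code before the first Kerberos login. A minimal sketch of the in-code route follows. KerberosSystemProps is a hypothetical name, and sun.security.krb5.debug is an optional JDK tracing switch.

```java
// Sets the JVM properties that KAFKA_OPTS passes to the broker and console tools,
// for a Java client that is not launched through kafka-run-class.sh.
final class KerberosSystemProps {

    static void apply(String krb5Conf, String jaasConf, boolean debug) {
        System.setProperty("java.security.krb5.conf", krb5Conf);
        System.setProperty("java.security.auth.login.config", jaasConf);
        if (debug) {
            // Verbose JDK Kerberos tracing on stdout, useful while troubleshooting.
            System.setProperty("sun.security.krb5.debug", "true");
        }
    }

    public static void main(String[] args) {
        // Call this before the first Kerberos login in the JVM; the JDK generally caches
        // its Kerberos and JAAS configuration once loaded.
        apply("/etc/krb5.conf", "/app/kafka_2.12-0.10.2.2/jaas/kafka_server_jaas.conf", false);
        System.out.println(System.getProperty("java.security.krb5.conf"));
        System.out.println(System.getProperty("java.security.auth.login.config"));
    }
}
```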

3.4. Restart kafka service test

zookeeper startup

nohup ./bin/zookeeper-server-start.sh ./config/zookeeper.properties &

kafka start

nohup ./bin/kafka-server-start.sh ./config/server.properties &

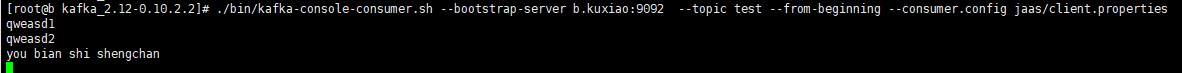

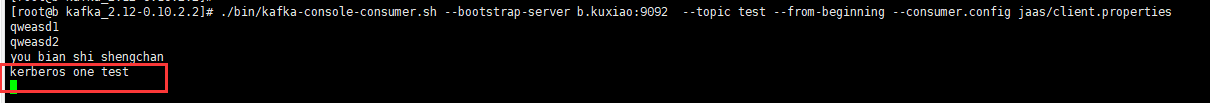

Consumer after Kerberos authentication

./bin/kafka-console-consumer.sh --bootstrap-server b.kuxiao:9092 --topic test --from-beginning --consumer.config jaas/client.properties

The consumer started successfully, as shown in the figure

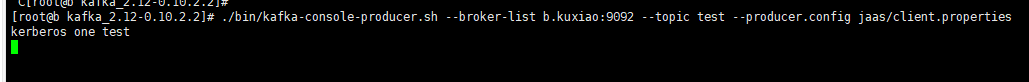

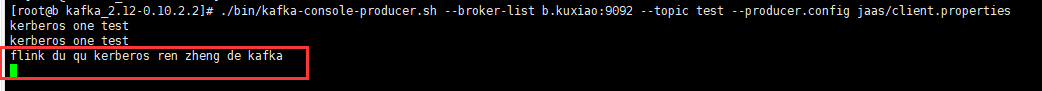

Kerberos certified producer

./bin/kafka-console-producer.sh --broker-list b.kuxiao:9092 --topic test --producer.config jaas/client.properties

Producer input data

Consumers get data

Now kafka adding Kerberos authentication is complete

4, The java code implements flink to subscribe to Kafka messages authenticated by Kerberos

4.1.flink installation

4.1.1. download

Download from the official website: https://flink.apache.org/downloads.html

4.1.2. decompression

tar -zxvf flink-1.11.3-bin-scala_2.11.tgz

4.1.3. Modify profile

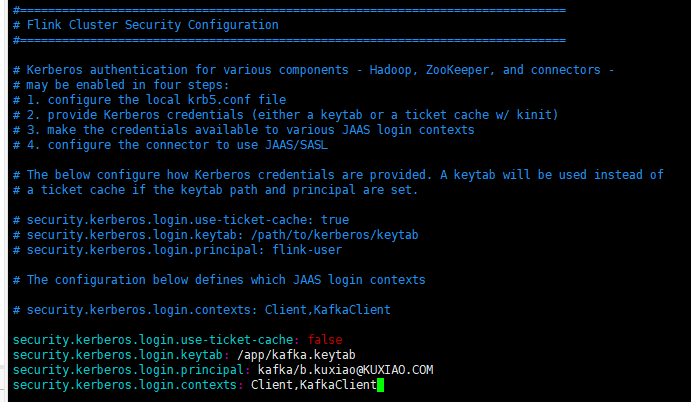

Modify the conf / flex-conf.yaml file and add the following contents: (I only have one server here, so I use a keytab)

security.kerberos.login.use-ticket-cache: false security.kerberos.login.keytab: /app/kafka.keytab security.kerberos.login.principal: kafka/b.kuxiao@KUXIAO.COM security.kerberos.login.contexts: Client,KafkaClient

Refer to figure:

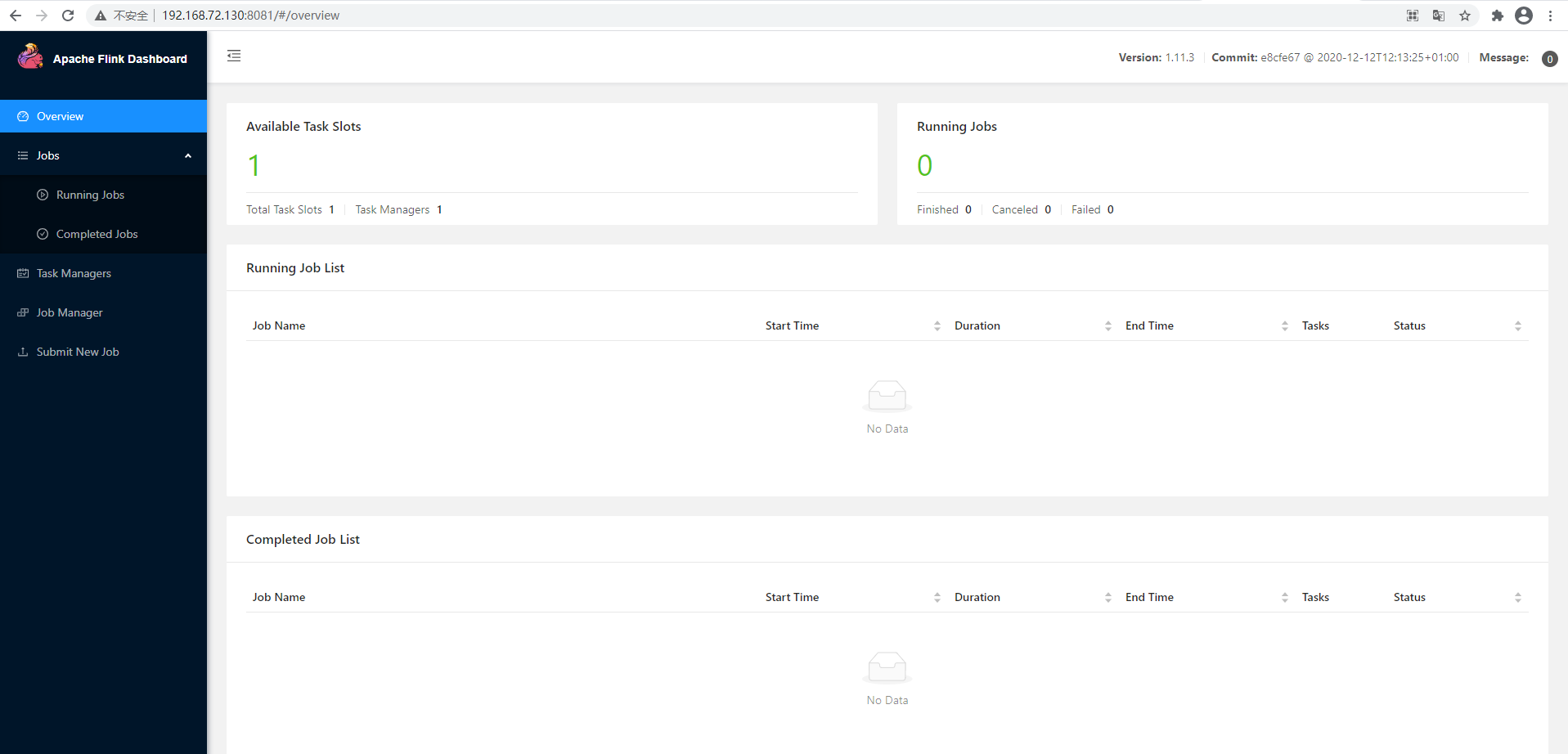

4.2. Start Flink

./bin/start-cluster.sh

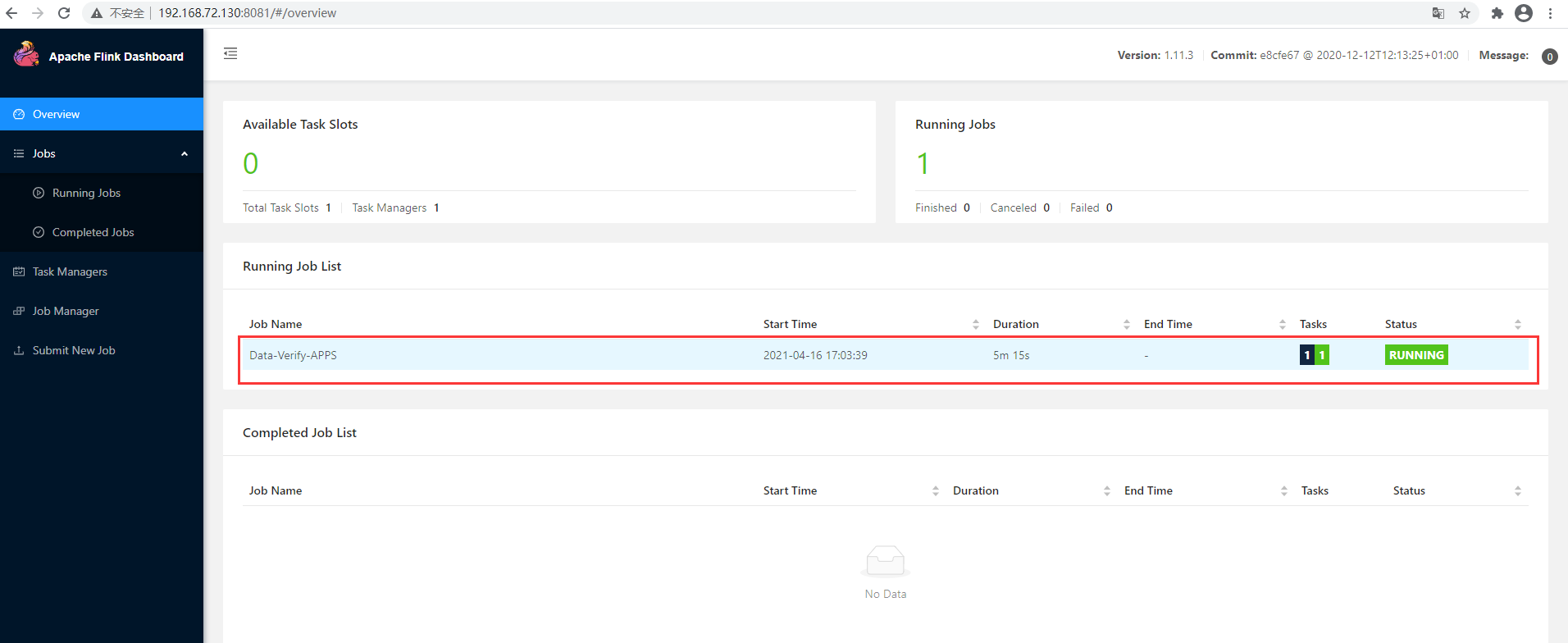

Open the Flink web UI at http://192.168.72.130:8081/
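Flink's REST API exposes the same information as the web UI, which is handy for scripted checks. The minimal sketch below fetches GET /overview, a summary of task managers, slots and jobs, with HttpURLConnection. FlinkOverview is a hypothetical name, and the base URL is the address used above.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.net.HttpURLConnection;
import java.net.URL;
import java.nio.charset.StandardCharsets;

// Fetches the cluster summary from the Flink REST API (GET <base>/overview).
final class FlinkOverview {

    static String fetch(String baseUrl) throws IOException {
        HttpURLConnection conn = (HttpURLConnection) new URL(baseUrl + "/overview").openConnection();
        conn.setConnectTimeout(3000);
        conn.setReadTimeout(3000);
        try (InputStream in = conn.getInputStream()) {
            ByteArrayOutputStream body = new ByteArrayOutputStream();
            byte[] buffer = new byte[4096];
            int n;
            while ((n = in.read(buffer)) != -1) {
                body.write(buffer, 0, n);
            }
            return new String(body.toByteArray(), StandardCharsets.UTF_8);
        } finally {
            conn.disconnect();
        }
    }

    public static void main(String[] args) throws IOException {
        // Defaults to the JobManager address used in this article.
        String base = args.length > 0 ? args[0] : "http://192.168.72.130:8081";
        // The JSON includes fields such as "taskmanagers", "slots-total" and "jobs-running".
        System.out.println(fetch(base));
    }
}
```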

4.3.Java code implementation

Dependencies (pom.xml):

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<groupId>com.cn</groupId>

<artifactId>point</artifactId>

<version>1.0</version>

<parent>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-parent</artifactId>

<version>2.4.4</version>

<relativePath /> <!-- lookup parent from repository -->

</parent>

<properties>

<java.version>1.8</java.version>

<!--<flink.version>1.12.0</flink.version>-->

<flink.version>1.11.3</flink.version>

<!-- <flink.version>1.7.2</flink.version>-->

<hadoop.version>2.7.7</hadoop.version>

<scala.version>2.11.8</scala.version>

</properties>

<dependencies>

<!-- https://mvnrepository.com/artifact/org.springframework.boot/spring-boot-starter-parent -->

<!-- <dependency> <groupId>org.springframework.boot</groupId> <artifactId>spring-boot-starter-parent</artifactId>

<version>2.4.4</version> <type>pom</type> </dependency> -->

<!-- alibaba fastjson -->

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>fastjson</artifactId>

<version>1.2.22</version>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web</artifactId>

</dependency>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<!-- The default version is 3.8.1; change it to 4.x, because 3.x tests are written by extending TestCase, while 4.x uses annotations. -->

</dependency>

<!-- Kafka dependency for the installed Kafka version -->

<!-- https://mvnrepository.com/artifact/org.springframework.kafka/spring-kafka -->

<dependency>

<groupId>org.springframework.kafka</groupId>

<artifactId>spring-kafka</artifactId>

</dependency>

<dependency>

<groupId>org.apache.kafka</groupId>

<artifactId>kafka_2.11</artifactId>

<version>1.1.1</version>

<exclusions>

<exclusion>

<groupId>org.apache.zookeeper</groupId>

<artifactId>zookeeper</artifactId>

</exclusion>

</exclusions>

</dependency>

<!-- flink core API -->

<!-- https://mvnrepository.com/artifact/org.apache.flink/flink-cep -->

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-cep_2.11</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-java</artifactId>

<version>${flink.version}</version>

<exclusions>

<exclusion>

<artifactId>commons-compress</artifactId>

<groupId>org.apache.commons</groupId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-scala_2.11</artifactId>

<version>${flink.version}</version>

<exclusions>

<exclusion>

<artifactId>scala-library</artifactId>

<groupId>org.scala-lang</groupId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-streaming-java_2.11</artifactId>

<version>${flink.version}</version>

<exclusions>

<exclusion>

<artifactId>scala-library</artifactId>

<groupId>org.scala-lang</groupId>

</exclusion>

<exclusion>

<artifactId>scala-parser-combinators_2.11</artifactId>

<groupId>org.scala-lang.modules</groupId>

</exclusion>

<exclusion>

<artifactId>slf4j-api</artifactId>

<groupId>org.slf4j</groupId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-streaming-scala_2.11</artifactId>

<version>${flink.version}</version>

<exclusions>

<exclusion>

<artifactId>scala-library</artifactId>

<groupId>org.scala-lang</groupId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-clients_2.11</artifactId>

<version>${flink.version}</version>

</dependency>

<!-- Flink Kafka connector -->

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-connector-kafka_2.11</artifactId>

<version>${flink.version}</version>

<exclusions>

<exclusion>

<artifactId>slf4j-api</artifactId>

<groupId>org.slf4j</groupId>

</exclusion>

<exclusion>

<artifactId>snappy-java</artifactId>

<groupId>org.xerial.snappy</groupId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-statebackend-rocksdb_2.11</artifactId>

<version>${flink.version}</version>

<scope>provided</scope>

</dependency>

<!-- https://mvnrepository.com/artifact/org.apache.hadoop/hadoop-client -->

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-client</artifactId>

<version>${hadoop.version}</version>

<exclusions>

<exclusion>

<artifactId>jsr305</artifactId>

<groupId>com.google.code.findbugs</groupId>

</exclusion>

<exclusion>

<artifactId>guava</artifactId>

<groupId>com.google.guava</groupId>

</exclusion>

<exclusion>

<artifactId>commons-cli</artifactId>

<groupId>commons-cli</groupId>

</exclusion>

<exclusion>

<artifactId>commons-codec</artifactId>

<groupId>commons-codec</groupId>

</exclusion>

<exclusion>

<artifactId>commons-collections</artifactId>

<groupId>commons-collections</groupId>

</exclusion>

<exclusion>

<artifactId>commons-lang</artifactId>

<groupId>commons-lang</groupId>

</exclusion>

<exclusion>

<artifactId>commons-logging</artifactId>

<groupId>commons-logging</groupId>

</exclusion>

<exclusion>

<artifactId>netty</artifactId>

<groupId>io.netty</groupId>

</exclusion>

<exclusion>

<artifactId>log4j</artifactId>

<groupId>log4j</groupId>

</exclusion>

<exclusion>

<artifactId>commons-math3</artifactId>

<groupId>org.apache.commons</groupId>

</exclusion>

<exclusion>

<artifactId>jackson-core-asl</artifactId>

<groupId>org.codehaus.jackson</groupId>

</exclusion>

<exclusion>

<artifactId>jackson-mapper-asl</artifactId>

<groupId>org.codehaus.jackson</groupId>

</exclusion>

<exclusion>

<artifactId>slf4j-api</artifactId>

<groupId>org.slf4j</groupId>

</exclusion>

<exclusion>

<artifactId>slf4j-log4j12</artifactId>

<groupId>org.slf4j</groupId>

</exclusion>

<exclusion>

<artifactId>snappy-java</artifactId>

<groupId>org.xerial.snappy</groupId>

</exclusion>

</exclusions>

</dependency>

</dependencies>

<build>

<plugins>

<plugin>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-maven-plugin</artifactId>

</plugin>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-compiler-plugin</artifactId>

<configuration>

<source>8</source>

<target>8</target>

</configuration>

</plugin>

</plugins>

</build>

</project>

Flink job code:

package org.track.manager.data.verify.monitor.verify;

import org.apache.flink.api.common.functions.*;

import org.apache.flink.api.common.serialization.SimpleStringSchema;

import org.apache.flink.api.java.utils.ParameterTool;

import org.apache.flink.configuration.Configuration;

import org.apache.flink.streaming.api.CheckpointingMode;

import org.apache.flink.streaming.api.TimeCharacteristic;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.CheckpointConfig;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer010;

import org.apache.flink.util.OutputTag;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import java.util.*;

import java.util.concurrent.Executors;

import java.util.concurrent.ScheduledExecutorService;

import java.util.concurrent.TimeUnit;

public class DataVerifyApp {

final static Logger logger = LoggerFactory.getLogger(DataVerifyApp.class);

private static final Long INITIALDELAY = 5L;

private static final Long PERIOD = 5L;

// Side-output tag (declared but not used in this example)

private static final OutputTag<String> countsTag = new OutputTag<String>("counts") {

};

public static void main(String[] args) throws Exception {

//Get external parameters

ParameterTool pt = ParameterTool.fromArgs(args);

String KAFKABROKER = "b.kuxiao:9092";// Kafka broker address

String TRANSACTIONGROUP = "grouptest";// Consumer group

String TOPICNAME = "test";// topic

String sasl_kerberos_service_name = "kafka";// Kerberos service name: the primary of the broker principal kafka/b.kuxiao

String security_protocol = "SASL_PLAINTEXT";

String sasl_mechanism = "GSSAPI";

StreamExecutionEnvironment streamEnv = StreamExecutionEnvironment.getExecutionEnvironment();

streamEnv.enableCheckpointing(5000); // Let Flink take a checkpoint every 5000 ms

streamEnv.getCheckpointConfig().setCheckpointingMode(CheckpointingMode.EXACTLY_ONCE); // Exactly-once checkpointing semantics

streamEnv.getCheckpointConfig().setMinPauseBetweenCheckpoints(30000); // Leave at least 30000 ms between the end of one checkpoint and the start of the next

streamEnv.getCheckpointConfig().setCheckpointTimeout(60000); // A checkpoint must complete within 60000 ms, otherwise it is discarded

streamEnv.getCheckpointConfig().setMaxConcurrentCheckpoints(1); // Only one checkpoint may be in progress at a time

// Retain the job's externalized checkpoints when the job is cancelled. They must then be cleaned up manually; by default they are not retained

streamEnv.getCheckpointConfig().enableExternalizedCheckpoints(CheckpointConfig.ExternalizedCheckpointCleanup.RETAIN_ON_CANCELLATION);

streamEnv.setStreamTimeCharacteristic(TimeCharacteristic.EventTime);

Properties properties = new Properties();

properties.setProperty("bootstrap.servers", KAFKABROKER); //IP or hostName of kafka node

properties.setProperty("group.id", TRANSACTIONGROUP); //Flink consumer group id

properties.setProperty("security.protocol", security_protocol);

properties.setProperty("sasl.mechanism", sasl_mechanism);

properties.setProperty("sasl.kerberos.service.name",sasl_kerberos_service_name);

//Create a consumer

FlinkKafkaConsumer010<String> kafkaConsumer = new FlinkKafkaConsumer010<>(TOPICNAME, new SimpleStringSchema(), properties);

// Define the Kafka stream

logger.info("Defining Kafka stream");

SingleOutputStreamOperator<String> kafkaDataStream = streamEnv.addSource(kafkaConsumer)

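.filter(new RichFilterFunction<String>() {

// Scheduler for the periodic reload; created in open(), so it is not serialized with the function

private transient ScheduledExecutorService exec;

@Override

public void open(Configuration parameters) throws Exception {

exec = Executors.newSingleThreadScheduledExecutor();

exec.scheduleAtFixedRate(() -> reload(), INITIALDELAY, PERIOD, TimeUnit.MINUTES);

}

@Override

public void close() throws Exception {

// Stop the scheduler so its thread does not outlive the task

if (exec != null) {

exec.shutdownNow();

}

}

// Reload data periodically (left empty in this example)

public void reload() {

}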

@Override

public boolean filter(String s) throws Exception {

logger.error("kafka data fetch : data = {}",s);

return true;

}

});

streamEnv.execute("Data-Verify-APPS");

}

}

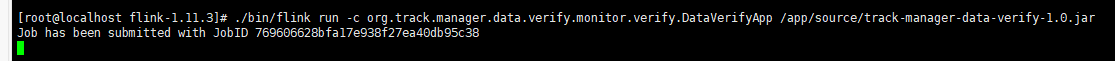
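The filter schedules its periodic reload() from open(). Below is a Flink-free sketch of that scheduling pattern that runs with the JDK alone. It also shuts the executor down in close() so the scheduler thread does not outlive the task, for example across restarts from a checkpoint. PeriodicReloader is a hypothetical name. In the Flink function, the executor would be a transient field set in open() and shut down in an overridden close().

```java
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

// Mirrors the open()/close() lifecycle of a Flink rich function for a periodic reload task.
final class PeriodicReloader implements AutoCloseable {
    private ScheduledExecutorService exec;
    private final AtomicInteger reloads = new AtomicInteger();

    /** Counterpart of open(): start the periodic reload. */
    void open(long initialDelay, long period, TimeUnit unit) {
        exec = Executors.newSingleThreadScheduledExecutor(runnable -> {
            Thread thread = new Thread(runnable, "reload");
            thread.setDaemon(true); // never keeps the JVM alive on its own
            return thread;
        });
        exec.scheduleAtFixedRate(this::reload, initialDelay, period, unit);
    }

    private void reload() {
        reloads.incrementAndGet(); // placeholder for the real reload logic
    }

    int reloadCount() {
        return reloads.get();
    }

    /** Counterpart of close(): stop the scheduler thread. */
    @Override
    public void close() {
        if (exec != null) {
            exec.shutdownNow();
        }
    }

    public static void main(String[] args) throws InterruptedException {
        try (PeriodicReloader reloader = new PeriodicReloader()) {
            reloader.open(0, 50, TimeUnit.MILLISECONDS);
            Thread.sleep(200);
            System.out.println("reloads so far: " + reloader.reloadCount());
        }
    }
}
```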

4.4. Run flink and run jar

./bin/flink run -c org.track.manager.data.verify.monitor.verify.DataVerifyApp /app/source/track-manager-data-verify-1.0.jar

Run successfully

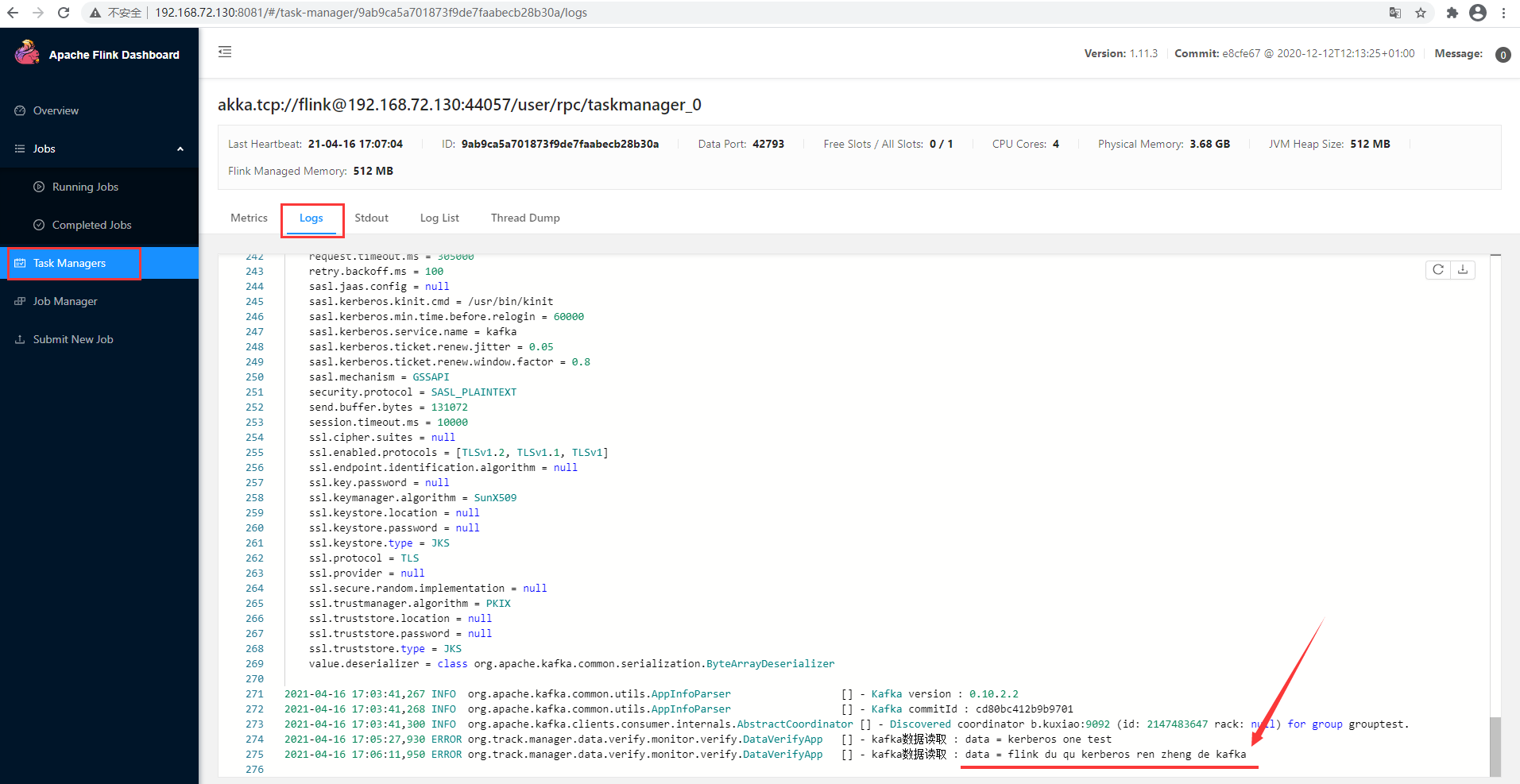

4.5. test

Producer input message

Successfully read data

5, java implementation of flink subscription Kerberos authentication Kafka message demo

In fact, the "4.3.Java code implementation" is already the source code. If you can't build it, you can download it at the following address

https://download.csdn.net/download/weixin_43857712/16713877
