Preface

    import torch
    import torch.nn as nn
    from torch.nn.utils.rnn import pad_sequence
    from torch.nn.utils.rnn import pack_padded_sequence
    from torch.nn.utils.rnn import pad_packed_sequence

Did you notice the `utils.rnn` in the import paths above? These functions are usually used for RNN-related processing, so you first need to understand a few standard RNN concepts. After that, you can understand them in five minutes.

    # Suppose we have the following data.
    a = torch.tensor([1, 2, 3, 4], dtype=torch.float32).unsqueeze(1)  # [4, 1]
    b = torch.tensor([5], dtype=torch.float32).unsqueeze(1)  # [1, 1]
    # Shape: [seq_len, emb_dim=1]

As you can see, the two sentences have different lengths (seq_len). Neural networks today are trained with mini-batches, which require every sample in a batch to have the same length.

pad_sequence

We want to put the two samples above into one batch, but what do we do when their lengths differ?

    batch = [a, b]
    real_batch = pad_sequence(batch, batch_first=True)
    real_batch

This needs little explanation: the function pads the shorter sequences (with zeros by default) so that every sequence in the batch has the same length.
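To make the padding concrete, here is a minimal sketch on the same toy data. The variable names `padded` and `padded_neg` and the fill value of -1 are illustrative choices, not part of the original example.

```python
import torch
from torch.nn.utils.rnn import pad_sequence

# Same toy data as above: two sequences of length 4 and 1, emb_dim = 1.
a = torch.tensor([1, 2, 3, 4], dtype=torch.float32).unsqueeze(1)  # [4, 1]
b = torch.tensor([5], dtype=torch.float32).unsqueeze(1)           # [1, 1]

# batch_first=True gives [batch_size, max_seq_len, emb_dim].
padded = pad_sequence([a, b], batch_first=True)
print(padded.shape)                    # torch.Size([2, 4, 1])
print(padded[1].squeeze(1).tolist())   # [5.0, 0.0, 0.0, 0.0]

# padding_value changes the fill value (-1 is an arbitrary illustrative choice).
padded_neg = pad_sequence([a, b], batch_first=True, padding_value=-1.0)
print(padded_neg[1].squeeze(1).tolist())  # [5.0, -1.0, -1.0, -1.0]
```

Without `batch_first=True`, the result would have shape [max_seq_len, batch_size, emb_dim] instead.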

pack_padded_sequence

The `real_batch` above is what is called a padded sequence. "Pack" here means to compress.

`real_batch` can already be fed into a neural network, so why do we need this function? The motivation is to save computation: we do not want the padded values to be propagated through the RNN.

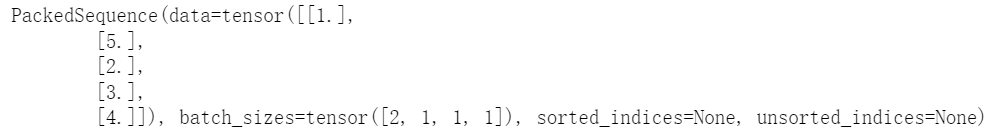

    seq_len = [4, 1]  # The first sentence has length 4 and the second has length 1.
    packed_real_batch = pack_padded_sequence(real_batch, seq_len, batch_first=True)
    packed_real_batch

This is also an officially supported input object in PyTorch! That can be confusing at first, because it is easy to assume that an RNN only accepts padded tensors such as `real_batch` as input.

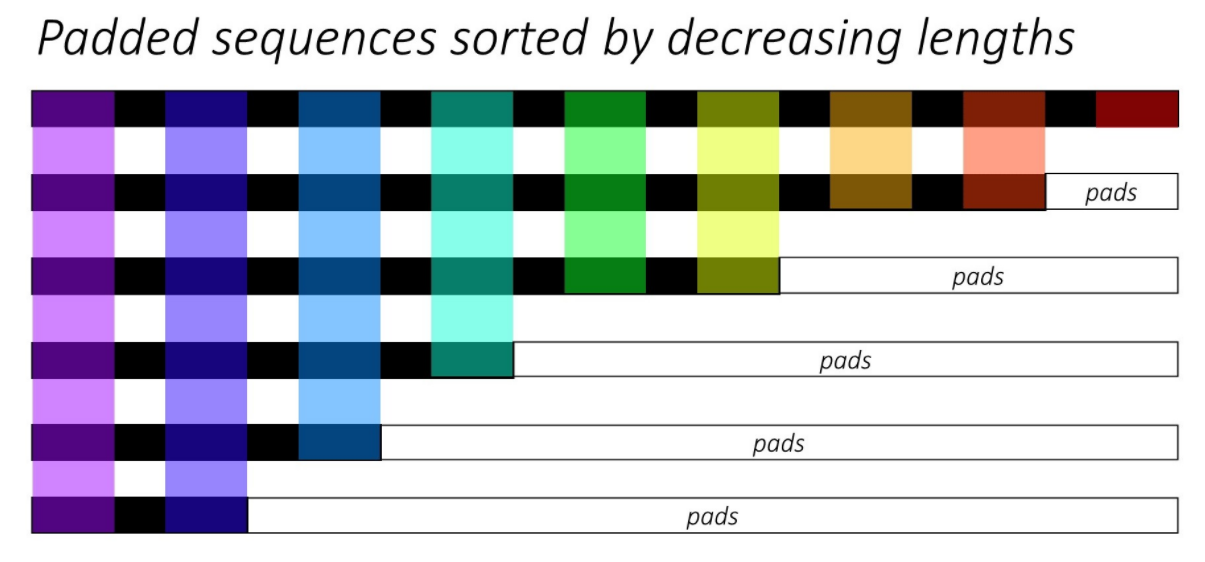

Let's analyze this object first. The first field, data, holds all of our valid values (the padding is gone), arranged time step by time step. batch_sizes indicates that at the first time step of the RNN, the first two entries of data should be fed into the RNN together. After the hidden vectors are obtained, we move to the second time step, where batch_sizes indicates that only one entry of data needs to be fed into the RNN, and so on.
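The layout of data and batch_sizes can be checked directly. The sketch below rebuilds the packed batch from the toy data and then slices data one time step at a time; the loop and the names `packed`, `offset`, and `steps` are only an illustration of how the fields relate, not how PyTorch consumes them internally.

```python
import torch
from torch.nn.utils.rnn import pad_sequence, pack_padded_sequence

a = torch.tensor([1, 2, 3, 4], dtype=torch.float32).unsqueeze(1)  # [4, 1]
b = torch.tensor([5], dtype=torch.float32).unsqueeze(1)           # [1, 1]
real_batch = pad_sequence([a, b], batch_first=True)               # [2, 4, 1]
packed = pack_padded_sequence(real_batch, [4, 1], batch_first=True)

# data is time-major: step 0 of every sequence, then step 1, and so on.
print(packed.data.squeeze(1).tolist())  # [1.0, 5.0, 2.0, 3.0, 4.0]
print(packed.batch_sizes.tolist())      # [2, 1, 1, 1]

# Slice data into the chunk that belongs to each time step.
offset = 0
steps = []
for n in packed.batch_sizes.tolist():
    steps.append(packed.data[offset:offset + n].squeeze(1).tolist())
    offset += n
print(steps)  # [[1.0, 5.0], [2.0], [3.0], [4.0]]
```

The padding zeros of the short sequence never appear in data, which is where the saved computation comes from.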

Next, we feed the object above into an RNN as follows:

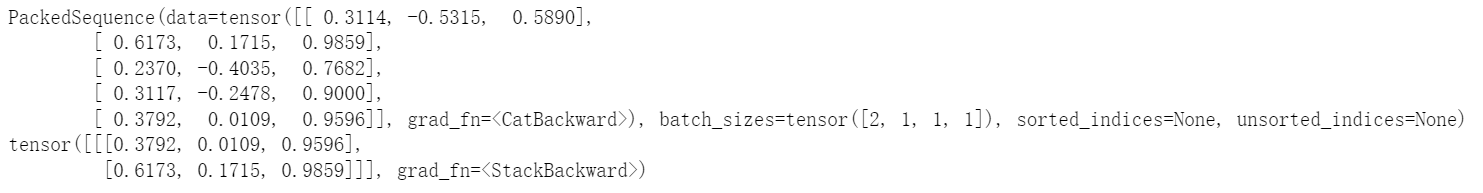

    rnn = nn.RNN(input_size=1, hidden_size=3)
    all_hidden, last_hidden = rnn(packed_real_batch)
    print(all_hidden)
    print(last_hidden)

As you can see, PackedSequence is officially supported as RNN input! And just as with a padded tensor, the RNN returns two results, although they differ somewhat:

- The first is a PackedSequence object. With a padded tensor as input (and batch_first=True), a standard RNN instead returns the hidden vector of every position of every sequence as a tensor of shape [batch_size, seq_len, hidden_size].

- The second has exactly the same form as with a standard RNN: it is the hidden vector at the last position of each sequence, with shape [num_layers * num_directions, batch_size, hidden_size], which is [1, 2, 3] here. With packed input, "last position" means the last valid position of each sequence, not the last padded position.

Obviously, if our downstream task only uses the second result returned by the RNN, there is nothing more to do. However, if we want to use the first result, the PackedSequence object above is not very convenient for subsequent processing, so:
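The difference between the two kinds of input can be seen by running the same RNN on both. This is a sketch under a few assumptions: `batch_first=True` is set on the RNN so that the padded tensor is read as [batch_size, seq_len, emb_dim], `torch.manual_seed(0)` is only there for reproducibility, and all variable names are illustrative.

```python
import torch
import torch.nn as nn
from torch.nn.utils.rnn import pad_sequence, pack_padded_sequence

torch.manual_seed(0)  # reproducible random weights; the seed value is arbitrary

a = torch.tensor([1, 2, 3, 4], dtype=torch.float32).unsqueeze(1)  # [4, 1]
b = torch.tensor([5], dtype=torch.float32).unsqueeze(1)           # [1, 1]
real_batch = pad_sequence([a, b], batch_first=True)               # [2, 4, 1]
packed = pack_padded_sequence(real_batch, [4, 1], batch_first=True)

rnn = nn.RNN(input_size=1, hidden_size=3, batch_first=True)

with torch.no_grad():
    out_padded, h_padded = rnn(real_batch)  # padded tensor in, tensor out
    out_packed, h_packed = rnn(packed)      # PackedSequence in, PackedSequence out

print(out_padded.shape)            # torch.Size([2, 4, 3])
print(type(out_packed).__name__)   # PackedSequence
print(out_packed.data.shape)       # torch.Size([5, 3]), one row per valid step
print(h_padded.shape)              # torch.Size([1, 2, 3])
print(h_packed.shape)              # torch.Size([1, 2, 3])

# Packed input: the last hidden state of the short sequence b is taken
# right after its single real step (step 0).
stops_at_real_step = torch.allclose(h_packed[0, 1], out_padded[1, 0], atol=1e-5)

# Padded input: the last hidden state of b is taken at step 3,
# after the RNN has also consumed three padding zeros.
runs_over_padding = torch.allclose(h_padded[0, 1], out_padded[1, 3], atol=1e-5)

print(stops_at_real_step, runs_over_padding)
```

So the two last-hidden tensors share a shape, but for sequences shorter than the batch maximum only the packed version reflects the true end of the sequence.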

pad_packed_sequence

What it does: pads a packed sequence. The packed sequence is exactly the PackedSequence above, so this is the inverse operation:

    all_hidden_real_batch, seq_len = pad_packed_sequence(all_hidden, batch_first=True)
    print(all_hidden_real_batch)
    print(seq_len)

And we are back to an ordinary padded tensor, together with the original sequence lengths.
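As a sanity check on the round trip, the following sketch unpacks the RNN output and confirms two things: positions past a sequence's length come back as zeros, and the output at each sequence's last valid position matches the second result of the RNN. The names `unpacked`, `lengths`, and `last_from_outputs` are illustrative.

```python
import torch
import torch.nn as nn
from torch.nn.utils.rnn import (
    pad_sequence, pack_padded_sequence, pad_packed_sequence,
)

a = torch.tensor([1, 2, 3, 4], dtype=torch.float32).unsqueeze(1)  # [4, 1]
b = torch.tensor([5], dtype=torch.float32).unsqueeze(1)           # [1, 1]
real_batch = pad_sequence([a, b], batch_first=True)
packed = pack_padded_sequence(real_batch, [4, 1], batch_first=True)

rnn = nn.RNN(input_size=1, hidden_size=3)
with torch.no_grad():
    all_hidden, last_hidden = rnn(packed)

unpacked, lengths = pad_packed_sequence(all_hidden, batch_first=True)
print(unpacked.shape)    # torch.Size([2, 4, 3])
print(lengths.tolist())  # [4, 1]

# Steps 1..3 of the short sequence were never computed; they are filled with zeros.
print(bool((unpacked[1, 1:] == 0).all()))  # True

# Gather the output at the last valid step of each sequence.
rows = torch.arange(unpacked.size(0))
last_from_outputs = unpacked[rows, lengths - 1]            # [2, 3]
print(torch.allclose(last_from_outputs, last_hidden[0]))   # True
```

The gather at `lengths - 1` is a common way to recover per-sequence final outputs from the padded tensor when only the first result is kept.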

Note

The only thing to watch out for is that, within a batch, your data should be arranged in descending order of sequence length, that is, long sequences first and short sequences last. This is what pack_padded_sequence expects by default (enforce_sorted=True).

    a = torch.tensor([1, 2, 3, 4], dtype=torch.float32).unsqueeze(1)
    b = torch.tensor([5], dtype=torch.float32).unsqueeze(1)
    batch = [a, b]  # Correct: the longest sequence comes first.
    # batch = [b, a]  # Wrong: passing this through the functions from the previous sections may cause problems.

As long as you follow this rule, the functions above will work without surprises.
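If sorting the batch by hand is inconvenient, recent PyTorch versions also let pack_padded_sequence handle the ordering itself through its `enforce_sorted` parameter. The sketch below assumes a PyTorch version that has this parameter; the variable names are illustrative.

```python
import torch
from torch.nn.utils.rnn import (
    pad_sequence, pack_padded_sequence, pad_packed_sequence,
)

a = torch.tensor([1, 2, 3, 4], dtype=torch.float32).unsqueeze(1)  # [4, 1]
b = torch.tensor([5], dtype=torch.float32).unsqueeze(1)           # [1, 1]

batch = [b, a]                   # deliberately NOT sorted by length
lengths = torch.tensor([1, 4])   # lengths in the same (unsorted) order
padded = pad_sequence(batch, batch_first=True)  # [2, 4, 1]

# enforce_sorted=False sorts internally and remembers the original order.
packed = pack_padded_sequence(
    padded, lengths, batch_first=True, enforce_sorted=False
)
print(packed.data.squeeze(1).tolist())  # [1.0, 5.0, 2.0, 3.0, 4.0]
print(packed.batch_sizes.tolist())      # [2, 1, 1, 1]

# Unpacking restores the original batch order.
unpacked, out_lengths = pad_packed_sequence(packed, batch_first=True)
print(out_lengths.tolist())             # [1, 4]
print(torch.equal(unpacked, padded))    # True
```

The packed data is identical to the sorted case, and the original order only reappears when the result is unpacked.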