1, Foreword

The pytest configuration file changes how pytest runs. It is a file with a fixed name, pytest.ini; pytest reads the configuration in it and runs in the way it specifies.

2, ini configuration file

Some files in a pytest project are not test files:

- pytest.ini is the main configuration file of pytest; it can change pytest's default behavior

- conftest.py holds fixture configuration for the test cases

- __init__.py marks the folder as a Python package

- tox.ini is similar to pytest.ini, but it is only useful when using the tox tool

- setup.cfg is also an ini-format file; it affects the behavior of setup.py

Basic format of ini file

# Save as pytest.ini
[pytest]
addopts = -rsxX
xfail_strict = true
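To make the section/key layout concrete, here is a minimal sketch that reads the same text with Python's standard configparser module. pytest has its own loader, so this only illustrates the file format; the names INI_TEXT and pytest_section are made up for the example.

```python
import configparser

# The same text as the pytest.ini above, kept in a string for the demo.
INI_TEXT = """\
# Save as pytest.ini
[pytest]
addopts = -rsxX
xfail_strict = true
"""

parser = configparser.ConfigParser()
parser.read_string(INI_TEXT)

# Everything under the [pytest] header belongs to the "pytest" section.
pytest_section = parser["pytest"]
addopts = pytest_section["addopts"]                       # plain string value
xfail_strict = pytest_section.getboolean("xfail_strict")  # parsed as a bool

print(addopts, xfail_strict)
```

Each option is a key = value pair under the [pytest] section header, and lines starting with # are comments.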

Use the pytest --help command to view the options that can be set in pytest.ini

[pytest] ini-options in the first pytest.ini|tox.ini|setup.cfg file found:

  markers (linelist)       markers for test functions
  empty_parameter_set_mark (string) default marker for empty parametersets
  norecursedirs (args)     directory patterns to avoid for recursion
  testpaths (args)         directories to search for tests when no files or dire
  console_output_style (string) console output: classic or with additional progr
  usefixtures (args)       list of default fixtures to be used with this project
  python_files (args)      glob-style file patterns for Python test module disco
  python_classes (args)    prefixes or glob names for Python test class discover
  python_functions (args)  prefixes or glob names for Python test function and m
  xfail_strict (bool)      default for the strict parameter of
  addopts (args)           extra command line options
  minversion (string)      minimally required pytest version

In the example above, -rsxX tells pytest to report, in its summary, the reasons for all test cases that were skipped (s), expected to fail (x), or expected to fail but actually passed (X)
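The option is easier to read once it is split into -r plus one character per outcome. The sketch below is only a lookup table for the three characters used in this article (the -r option accepts others as well); REPORT_CHARS and describe_report_option are hypothetical names, not part of pytest.

```python
# Meaning of the report characters used in this article.
REPORT_CHARS = {
    "s": "skipped",
    "x": "xfailed (expected to fail, and failed)",
    "X": "xpassed (expected to fail, but passed)",
}


def describe_report_option(option):
    """Expand an option such as '-rsxX' into the outcomes it reports."""
    if not option.startswith("-r"):
        raise ValueError("expected an option of the form -r<chars>")
    # Characters outside the table are shown as unknown instead of failing.
    return [REPORT_CHARS.get(char, "unknown: " + char) for char in option[2:]]


for line in describe_report_option("-rsxX"):
    print(line)
```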

3, Marks (mark)

The following example uses two marks: webtest and hello. Marking test cases is very useful for grouping them and running them by category later

# content of test_mark.py
import pytest

@pytest.mark.webtest
def test_send_http():
    print("mark web test")

def test_something_quick():
    pass

def test_another():
    pass

# A mark on a class applies to every test method in it
@pytest.mark.hello
class TestClass:
    def test_01(self):
        print("hello :")

    def test_02(self):
        print("hello world!")

if __name__ == "__main__":
    # Run only the test cases marked hello
    pytest.main(["-v", "test_mark.py", "-m=hello"])

Running results

============================= test session starts =============================
platform win32 -- Python 3.6.0, pytest-3.6.3, py-1.5.4, pluggy-0.6.0 -- D:\soft\python3.6\python.exe
cachedir: .pytest_cache
metadata: {'Python': '3.6.0', 'Platform': 'Windows-7-6.1.7601-SP1', 'Packages': {'pytest': '3.6.3', 'py': '1.5.4', 'pluggy': '0.6.0'}, 'Plugins': {'metadata': '1.7.0', 'html': '1.19.0', 'allure-adaptor': '1.7.10'}, 'JAVA_HOME': 'D:\\soft\\jdk18\\jdk18v'}
rootdir: D:\MOMO, inifile:
plugins: metadata-1.7.0, html-1.19.0, allure-adaptor-1.7.10
collecting ... collected 5 items / 3 deselected

test_mark.py::TestClass::test_01 PASSED [ 50%]
test_mark.py::TestClass::test_02 PASSED [100%]

=================== 2 passed, 3 deselected in 0.11 seconds ====================

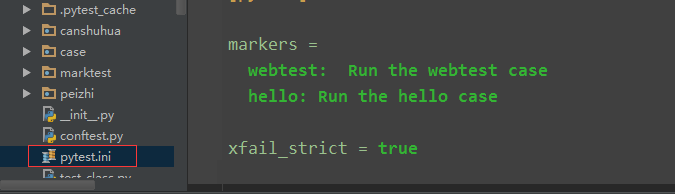
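The "5 items / 3 deselected" line in the result follows from how the marks are attached. Here is a simplified model of the selection done by -m hello; it is not pytest's implementation, and the TESTS table and select_by_mark function are hypothetical names used only for illustration.

```python
# Each test id maps to the set of marks on it.
# The hello mark on TestClass is inherited by both of its methods.
TESTS = {
    "test_mark.py::test_send_http": {"webtest"},
    "test_mark.py::test_something_quick": set(),
    "test_mark.py::test_another": set(),
    "test_mark.py::TestClass::test_01": {"hello"},
    "test_mark.py::TestClass::test_02": {"hello"},
}


def select_by_mark(tests, mark):
    """Split test ids into those carrying the mark and those without it."""
    selected = [name for name, marks in tests.items() if mark in marks]
    deselected = [name for name, marks in tests.items() if mark not in marks]
    return selected, deselected


selected, deselected = select_by_mark(TESTS, "hello")
print(len(selected), "selected,", len(deselected), "deselected")
```

Only the two methods of TestClass carry the hello mark, so the other three test cases are deselected.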

Sometimes there are too many marks to remember easily. To make later commands easier to run, the marks can be registered in the pytest.ini file

# pytest.ini
[pytest]
markers =
    webtest: Run the webtest case
    hello: Run the hello case

Once the marks are registered, you can list them with pytest --markers

$ pytest --markers

D:\MOMO>pytest --markers
@pytest.mark.webtest: Run the webtest case

@pytest.mark.hello: Run the hello case

@pytest.mark.skip(reason=None): skip the given test function with an optional reason. Example: skip(reason="no way of currently testing this") skips the test.

@pytest.mark.skipif(condition): skip the given test function if eval(condition) results in a True value. Evaluation happens within the module global context. Example: skipif('sys.platform == "win32"') skips the test if we are on the win32 platform. see http://pytest.org/latest/skipping.html

@pytest.mark.xfail(condition, reason=None, run=True, raises=None, strict=False): mark the test function as an expected failure if eval(condition) has a True value. Optionally specify a reason for better reporting and run=False if you don't even want to execute the test function. If only specific exception(s) are expected, you can list them in raises, and if the test fails in other ways, it will be reported as a true failure. See http://pytest.org/latest/skipping.html

@pytest.mark.parametrize(argnames, argvalues): call a test function multiple times passing in different arguments in turn. argvalues generally needs to be a list of values if argnames specifies only one name or a list of tuples of values if argnames specifies multiple names. Example: @parametrize('arg1', [1,2]) would lead to two calls of the decorated test function, one with arg1=1 and another with arg1=2.see http://pytest.org/latest/parametrize.html for more info and examples.

@pytest.mark.usefixtures(fixturename1, fixturename2, ...): mark tests as needing all of the specified fixtures. see http://pytest.org/latest/fixture.html#usefixtures

@pytest.mark.tryfirst: mark a hook implementation function such that the plugin machinery will try to call it first/as early as possible.

@pytest.mark.trylast: mark a hook implementation function such that the plugin machinery will try to call it last/as late as possible.

The first two entries are the marks we just wrote into the pytest.ini configuration
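As an alternative to listing marks in pytest.ini, they can also be registered from conftest.py. The sketch below uses pytest's pytest_configure hook and the config.addinivalue_line method; the MARKERS list is a name invented for this example.

```python
# content of conftest.py
# Descriptions use the same "name: description" form as the ini file.
MARKERS = [
    "webtest: Run the webtest case",
    "hello: Run the hello case",
]


def pytest_configure(config):
    """Called by pytest at start-up; append each mark to the markers option."""
    for line in MARKERS:
        config.addinivalue_line("markers", line)
```

Marks registered this way should show up in the pytest --markers listing in the same way as the ones written in the ini file.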

4, Disable xpass

Setting xfail_strict = true makes test cases that are marked @pytest.mark.xfail but actually pass be reported as failures

What does "marked @pytest.mark.xfail but actually passed" mean? It is a little confusing, so let's look at the following example

# content of test_xpass.py
import pytest

def test_hello():
    print("hello world!")
    assert 1

# Expected to fail, and really fails
@pytest.mark.xfail()
def test_momo1():
    a = "hello"
    b = "hello world"
    assert a == b

# Expected to fail, but actually passes
@pytest.mark.xfail()
def test_momo2():
    a = "hello"
    b = "hello world"
    assert a != b

if __name__ == "__main__":
    pytest.main(["-v", "test_xpass.py"])

Test result

collecting ... collected 3 items

test_xpass.py::test_hello PASSED [ 33%]
test_xpass.py::test_momo1 xfail [ 66%]
test_xpass.py::test_momo2 XPASS [100%]

=============== 1 passed, 1 xfailed, 1 xpassed in 0.27 seconds ================

The two test cases test_momo1 and test_momo2 assert a == b and a != b respectively, and both are marked as expected to fail. We expect both to be reported as xfail, but the second one actually passes and shows XPASS. To stop a test case marked xfail from passing unnoticed, add one setting

xfail_strict = true

# pytest.ini
[pytest]
markers =
    webtest: Run the webtest case
    hello: Run the hello case
xfail_strict = true

Run again and the result becomes

collecting ... collected 3 items

test_xpass.py::test_hello PASSED [ 33%]
test_xpass.py::test_momo1 xfail [ 66%]
test_xpass.py::test_momo2 FAILED [100%]

================================== FAILURES ===================================
_________________________________ test_momo2 __________________________________
[XPASS(strict)]
================ 1 failed, 1 passed, 1 xfailed in 0.05 seconds ================

In this way, a test case that would have been reported as XPASS is forced to be reported as FAILED
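The two runs can be summarized as a small decision table. This is a sketch of the reporting rule as described in this section, not pytest's own code; report_outcome is a hypothetical function name.

```python
def report_outcome(marked_xfail, test_passed, xfail_strict=False):
    """Return the outcome reported for one test case."""
    if not marked_xfail:
        return "passed" if test_passed else "failed"
    if not test_passed:
        # Expected to fail and did fail.
        return "xfailed"
    # Expected to fail but passed: strict mode turns this into a failure.
    return "failed" if xfail_strict else "xpassed"


# test_momo1: marked xfail, assertion fails
print(report_outcome(True, False))
# test_momo2: marked xfail, assertion passes, default (non-strict) mode
print(report_outcome(True, True))
# test_momo2 again with xfail_strict = true
print(report_outcome(True, True, xfail_strict=True))
```

The same strictness can also be set on a single test case with @pytest.mark.xfail(strict=True), which matches the strict parameter shown in the pytest --markers listing.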

5, Where to put the configuration file

Generally, one pytest.ini file per project is enough. Put it in the top-level folder of the project
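Placing the file at the top level means it is found no matter which subfolder the tests are started from. Here is a minimal sketch of such an upward search, assuming a simplified rule (the nearest pytest.ini in the start folder or any parent wins); pytest's real lookup also considers other file names, and find_pytest_ini is a hypothetical helper.

```python
from pathlib import Path


def find_pytest_ini(start_dir):
    """Return the nearest pytest.ini at or above start_dir, or None."""
    start = Path(start_dir).resolve()
    # Check the start folder first, then each parent up to the filesystem root.
    for folder in (start, *start.parents):
        candidate = folder / "pytest.ini"
        if candidate.is_file():
            return candidate
    return None


print(find_pytest_ini("."))
```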

6, addopts

The addopts setting changes the default command-line options, which saves typing when we run test cases from cmd. For example, suppose I want to generate a report after testing, and the command is rather long

$ pytest -v --rerun 1 --html=report.html --self-contained-html

Typing this much every time is hard to remember, so you can add it to pytest.ini

# pytest.ini
[pytest]
markers =
    webtest: Run the webtest case
    hello: Run the hello case
xfail_strict = true
addopts = -v --rerun 1 --html=report.html --self-contained-html

In this way, the next time I open cmd and simply type pytest, these options are applied by default
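One way to picture the effect: the addopts string is split like a shell command line and placed in front of whatever is typed after pytest. The sketch below is a simplified model of that behavior, not pytest's source; effective_args is a hypothetical name.

```python
import shlex


def effective_args(addopts, command_line_args):
    """Combine the ini addopts string with the arguments typed by the user."""
    # shlex.split handles quoting the same way a shell would.
    return shlex.split(addopts) + list(command_line_args)


args = effective_args(
    "-v --rerun 1 --html=report.html --self-contained-html",
    ["test_mark.py"],
)
print(args)
```

Options typed on the command line come after the ones from addopts, so anything typed by hand is simply added on top of the defaults.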