Preface

My articles related to multithreading:

- The best learning route of concurrent programming

- [Java multithreading] The foundation of high concurrency: the concepts you must understand

- [Java multithreading] Understanding the thread lock pool and wait pool

- [Java multithreading] Understanding the Java lock mechanism

- [Java multithreading] Thread communication

- [Java basics] Multithreading from getting started to mastering, Section 15: Using the concurrent collections

- [Java multithreading] JUC thread pool (I): A preliminary understanding of thread pools, Section 4: Work queue of the thread pool

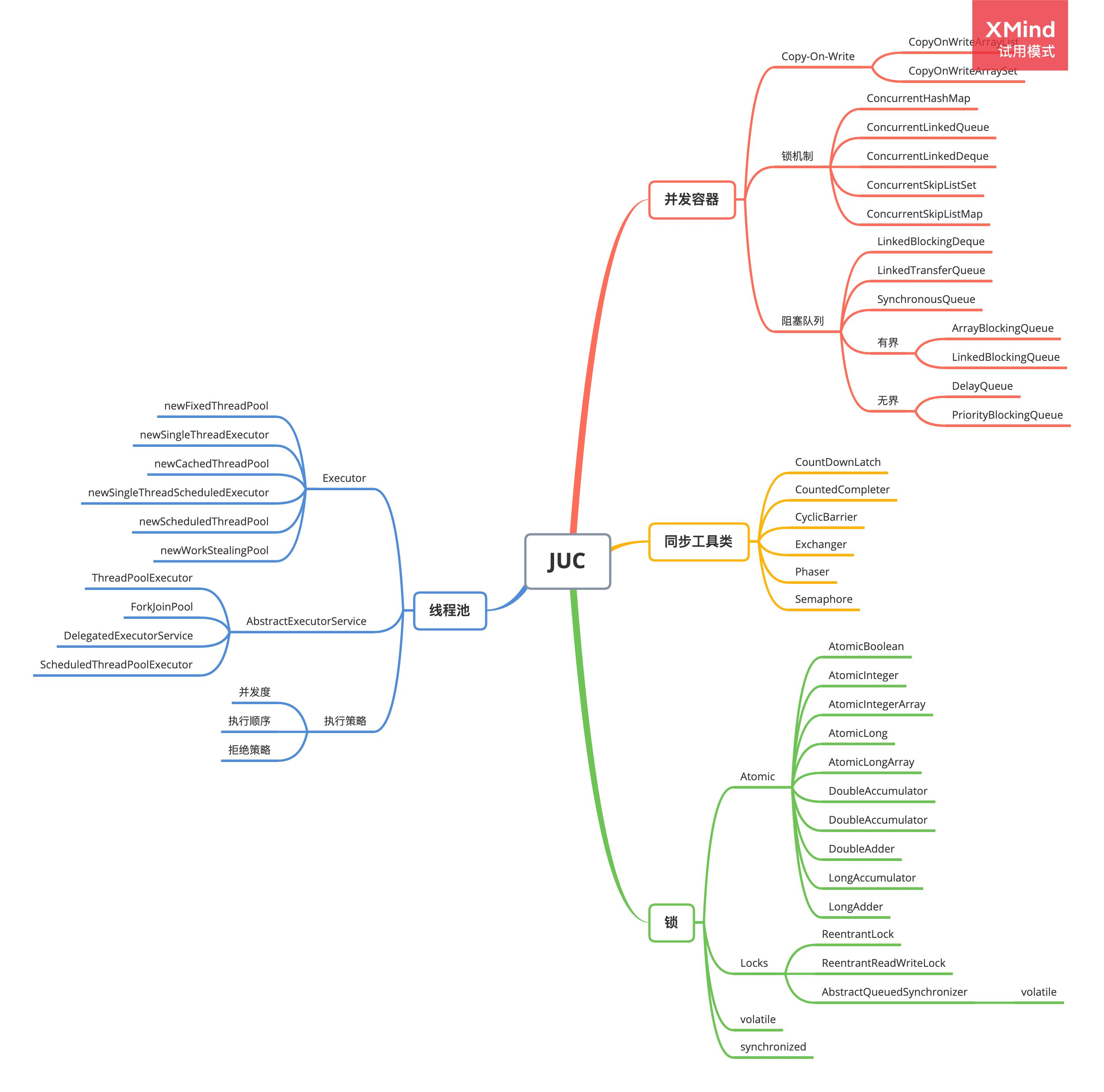

I. JUC

1. Structure diagram of JUC

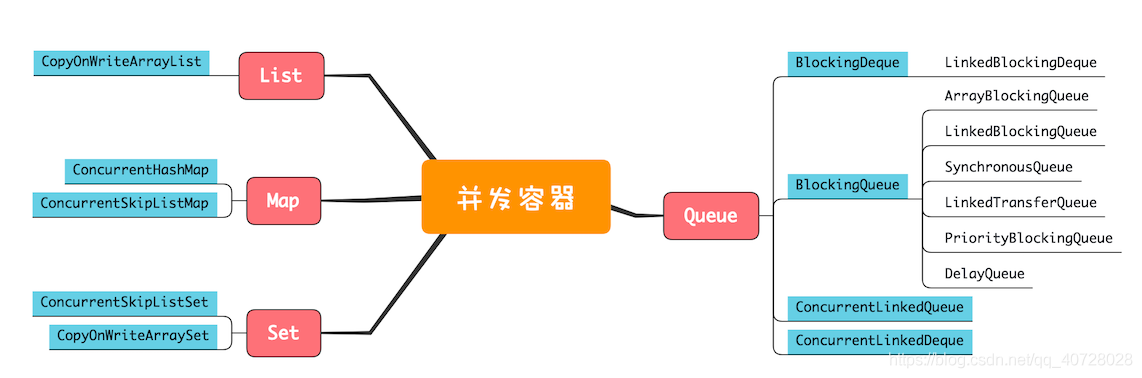

2. JUC concurrent containers

3. JUC thread-safe collections

The java.util.concurrent package of the Java standard library provides thread-safe counterparts for the common collection interfaces (ArrayBlockingQueue is one example):

| Interface | Not thread-safe | Thread-safe |
|---|---|---|
| List | ArrayList | CopyOnWriteArrayList |
| Map | HashMap | ConcurrentHashMap |
| Set | HashSet / TreeSet | CopyOnWriteArraySet |
| Queue | ArrayDeque / LinkedList | ArrayBlockingQueue / LinkedBlockingQueue |
| Deque | ArrayDeque / LinkedList | LinkedBlockingDeque |

Concurrent collections are used exactly like their non-thread-safe counterparts. Take ConcurrentHashMap as an example:

```java
Map<String, String> map = new ConcurrentHashMap<>();
// Read and write from different threads:
map.put("A", "1");
map.put("B", "2");
map.get("A");
```

Because all synchronization and locking logic is implemented inside the collection, callers simply program against the interface, and the rest of the code is identical to the original non-thread-safe code. When multithreaded access is needed, only the construction changes:

```java
Map<String, String> map = new HashMap<>(); // change to: Map<String, String> map = new ConcurrentHashMap<>();
```
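To see why the swap matters, here is a minimal sketch (the class and method names are hypothetical) in which several threads increment shared counters. ConcurrentHashMap.merge() performs each read-modify-write atomically, so no increment is lost. Running the same code on a plain HashMap can lose updates or corrupt the map.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

class ConcurrentCounting {

    // Runs `threads` tasks; each increments the "A" and "B" counters `perThread` times.
    static Map<String, Integer> count(int threads, int perThread) throws InterruptedException {
        Map<String, Integer> map = new ConcurrentHashMap<>();
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        for (int t = 0; t < threads; t++) {
            pool.execute(() -> {
                for (int i = 0; i < perThread; i++) {
                    // merge() is an atomic read-modify-write on ConcurrentHashMap
                    map.merge("A", 1, Integer::sum);
                    map.merge("B", 1, Integer::sum);
                }
            });
        }
        pool.shutdown();
        pool.awaitTermination(1, TimeUnit.MINUTES); // wait for all increments to finish
        return map;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(count(4, 10_000)); // {A=40000, B=40000}
    }
}
```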

The java.util.Collections utility class also provides older thread-safe wrappers for List, Set, and Map.

The syntax is Collections.synchronizedXXX(...), for example:

```java
Map<String, String> unsafeMap = new HashMap<>();
Map<String, String> threadSafeMap = Collections.synchronizedMap(unsafeMap);
```

- The wrapper simply delegates to the non-thread-safe Map and guards every read and write method with synchronized on a single lock. Under contention its performance is therefore usually much lower than that of the java.util.concurrent collections, so it is not recommended.
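The wrapper approach has a further catch, which the Collections.synchronizedMap Javadoc states explicitly. Each individual call is synchronized, but iterating over a view (keySet(), values(), entrySet()) is not. The caller must therefore hold the map's own lock while iterating. A minimal sketch, with hypothetical class and method names:

```java
import java.util.Collections;
import java.util.HashMap;
import java.util.Map;

class SynchronizedMapDemo {

    // Sums all values; the iteration is guarded by the wrapper's own monitor.
    static int sumValues(Map<String, Integer> syncMap) {
        int sum = 0;
        synchronized (syncMap) { // the same lock the wrapper uses for its single-call methods
            for (int v : syncMap.values()) {
                sum += v;
            }
        }
        return sum;
    }

    public static void main(String[] args) {
        Map<String, Integer> threadSafeMap = Collections.synchronizedMap(new HashMap<>());
        threadSafeMap.put("A", 1); // single calls are already synchronized
        threadSafeMap.put("B", 2);
        System.out.println(sumValues(threadSafeMap)); // 3
    }
}
```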

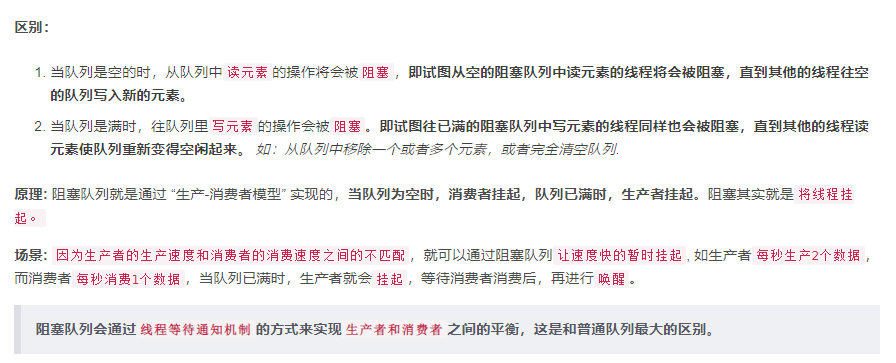

II. What is a blocking queue

- In Java, the BlockingQueue interface is located in the java.util.concurrent package (available since JDK 1.5). All BlockingQueue implementations are thread-safe.

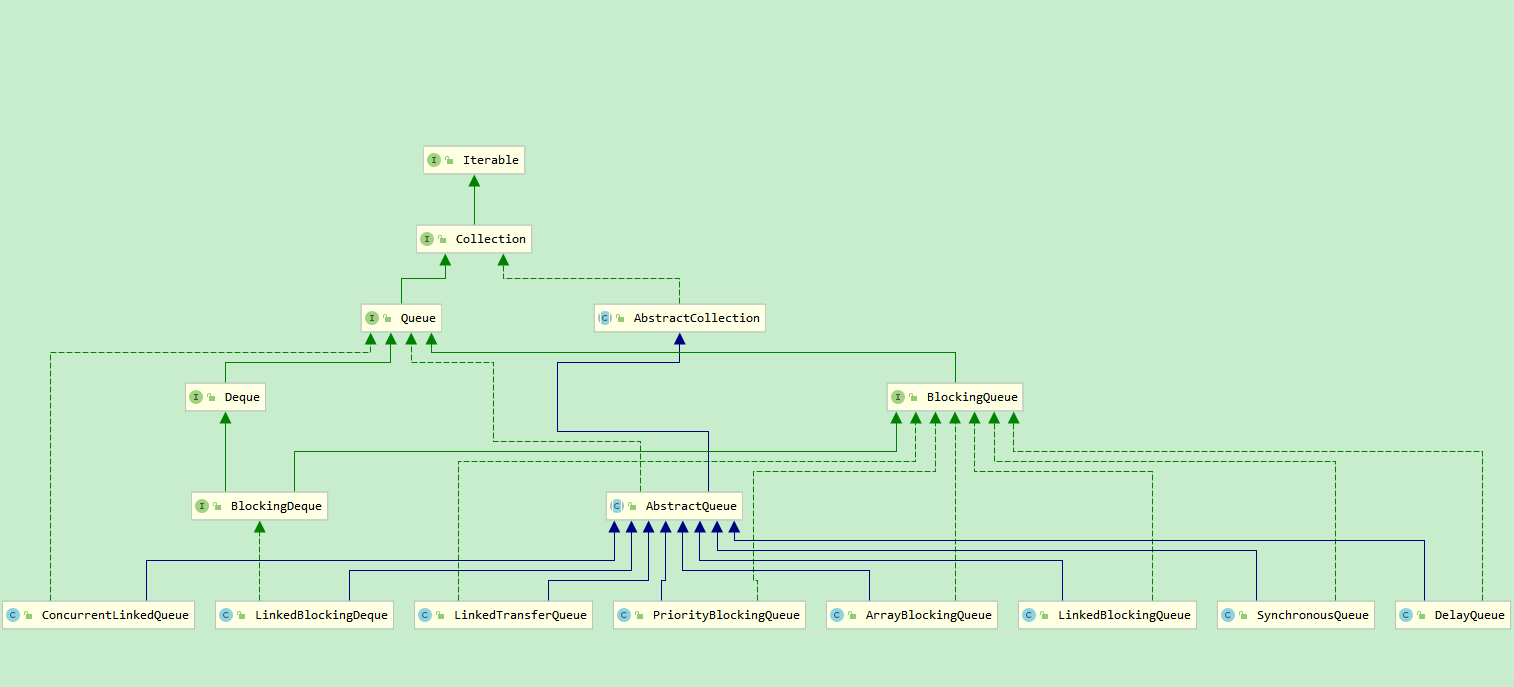

1. The JDK provides two kinds of concurrent queues

- A high-performance non-blocking queue, represented by ConcurrentLinkedQueue

- A blocking queue, represented by the BlockingQueue interface. Both kinds ultimately inherit from the Queue interface.

- BlockingQueue is an interface. Its implementations include ArrayBlockingQueue, LinkedBlockingQueue, LinkedBlockingDeque, PriorityBlockingQueue, DelayQueue, SynchronousQueue, and LinkedTransferQueue. They differ in storage structure or in how elements are handled, but their take and put operations work on similar principles.

Queue summary

- ArrayDeque: array-based double-ended queue; non-blocking and not thread-safe

- PriorityQueue: priority queue; non-blocking and not thread-safe

- ArrayBlockingQueue: commonly used array-based, bounded FIFO blocking queue

- LinkedBlockingQueue: commonly used linked-list-based FIFO blocking queue, optionally bounded

- PriorityBlockingQueue: commonly used unbounded blocking queue ordered by priority (not FIFO)

- SynchronousQueue: commonly used synchronous hand-off blocking queue with no capacity

- DelayQueue: delay blocking queue; implements the BlockingQueue interface

- LinkedBlockingDeque: linked-list-based double-ended blocking queue

- ConcurrentLinkedQueue: lock-free, non-blocking queue based on linked-list nodes

- ConcurrentLinkedDeque: lock-free, non-blocking double-ended queue based on linked-list nodes

2. The difference between a blocking queue and an ordinary queue

In two sentences:

- When the queue is empty, a thread that takes an element waits until the queue becomes non-empty.

- When the queue is full, a thread that stores an element waits until space becomes available.

(From the article [Java multithreading] JUC thread pool (6): Handwriting a blocking queue, Section 1)
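The difference is easy to see with poll(). A non-blocking queue such as ConcurrentLinkedQueue returns null immediately when it is empty. A BlockingQueue's timed poll instead waits until an element arrives or the timeout expires. This is a sketch: the class name and the 200 ms producer delay are arbitrary choices.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;

class BlockingVsNonBlocking {

    // Non-blocking: returns null at once when the queue is empty.
    static String pollNonBlocking() {
        ConcurrentLinkedQueue<String> queue = new ConcurrentLinkedQueue<>();
        return queue.poll();
    }

    // Blocking: the consumer waits until a producer thread writes an element.
    static String pollBlocking() throws InterruptedException {
        BlockingQueue<String> queue = new LinkedBlockingQueue<>();
        Thread producer = new Thread(() -> {
            try {
                TimeUnit.MILLISECONDS.sleep(200); // write a little later
                queue.put("hello");
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();
        // Waits up to 5 s, but returns as soon as "hello" is available
        return queue.poll(5, TimeUnit.SECONDS);
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(pollNonBlocking()); // null
        System.out.println(pollBlocking());    // hello (after about 200 ms)
    }
}
```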

3. The difference between bounded and unbounded

- Bounded: the capacity is limited. The size must be specified at initialization and cannot be changed afterwards.

- Unbounded: no capacity is specified, and Integer.MAX_VALUE is used as the default capacity.
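A quick way to observe the difference is to offer more elements than a bounded queue can hold. The sketch below (hypothetical class name) compares an ArrayBlockingQueue with capacity 2 and a LinkedBlockingQueue created without a capacity. The second one is "unbounded" only in the sense that its limit is Integer.MAX_VALUE.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

class CapacityDemo {

    // Offers `attempts` elements without blocking and returns how many were accepted.
    static int acceptedCount(BlockingQueue<Integer> queue, int attempts) {
        int accepted = 0;
        for (int i = 0; i < attempts; i++) {
            if (queue.offer(i)) { // offer() returns false instead of waiting when the queue is full
                accepted++;
            }
        }
        return accepted;
    }

    public static void main(String[] args) {
        System.out.println(acceptedCount(new ArrayBlockingQueue<>(2), 5)); // 2: bounded, capacity fixed at 2
        System.out.println(acceptedCount(new LinkedBlockingQueue<>(), 5)); // 5: no capacity given
        System.out.println(new LinkedBlockingQueue<Integer>().remainingCapacity()); // 2147483647 (Integer.MAX_VALUE)
    }
}
```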

4. ReentrantLock and Condition

- ReentrantLock is a reentrant exclusive lock. For details, see the article [Java multithreading] Explicit locks of JUC and AQS (queue synchronizer), Section III.

- Condition represents a wait condition. For details, see the article [Java multithreading] An in-depth look at JUC's queue synchronizer (AQS) (II): Source code analysis of ConditionObject, Section II.

Main methods of ReentrantLock:

- lock(): acquires the lock

- lockInterruptibly(): acquires the lock, but responds to interrupts while waiting

- tryLock(): tries to acquire the lock; returns true on success, otherwise returns false immediately without waiting

- tryLock(long time, TimeUnit unit): tries to acquire the lock within the given time; responds to interrupts

- unlock(): releases the lock

- newCondition(): creates a Condition bound to this ReentrantLock instance; its await() and signal() methods correspond to Object's wait() and notify()

Main methods of Condition:

- await(): the current thread releases the lock and enters the waiting state; responds to interrupts

- awaitUninterruptibly(): the current thread releases the lock and enters the waiting state; does not respond to interrupts

- signal(): wakes up one waiting thread

- signalAll(): wakes up all waiting threads
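The two classes are typically combined as one lock with two Conditions. ArrayBlockingQueue uses the same idea internally. Below is a minimal bounded buffer built that way. BoundedBuffer is a hypothetical illustration, not a JDK class, and it omits null checks and timed variants.

```java
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical fixed-size FIFO buffer guarded by one lock and two conditions.
class BoundedBuffer<E> {
    private final Object[] items;
    private int putIndex, takeIndex, count;
    private final ReentrantLock lock = new ReentrantLock();
    private final Condition notEmpty = lock.newCondition(); // takers wait here
    private final Condition notFull = lock.newCondition();  // putters wait here

    BoundedBuffer(int capacity) {
        items = new Object[capacity];
    }

    void put(E e) throws InterruptedException {
        lock.lockInterruptibly();
        try {
            while (count == items.length) {
                notFull.await(); // releases the lock while waiting
            }
            items[putIndex] = e;
            putIndex = (putIndex + 1) % items.length; // circular array
            count++;
            notEmpty.signal(); // wake one waiting taker
        } finally {
            lock.unlock();
        }
    }

    @SuppressWarnings("unchecked")
    E take() throws InterruptedException {
        lock.lockInterruptibly();
        try {
            while (count == 0) {
                notEmpty.await();
            }
            E e = (E) items[takeIndex];
            items[takeIndex] = null;
            takeIndex = (takeIndex + 1) % items.length;
            count--;
            notFull.signal(); // wake one waiting putter
            return e;
        } finally {
            lock.unlock();
        }
    }

    public static void main(String[] args) throws InterruptedException {
        BoundedBuffer<Integer> buffer = new BoundedBuffer<>(2);
        Thread producer = new Thread(() -> {
            try {
                for (int i = 1; i <= 5; i++) {
                    buffer.put(i); // blocks whenever 2 items are pending
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();
        for (int i = 0; i < 5; i++) {
            System.out.println(buffer.take()); // 1, 2, 3, 4, 5 in FIFO order
        }
        producer.join();
    }
}
```

The while loops (rather than if) re-check the state after every wake-up. This guards against spurious wake-ups and against another thread having consumed the slot first.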

5. BlockingQueue interface

```java
public interface BlockingQueue<E> extends Queue<E> {

    //-----------------Write start----------------------

    // Inserts e at the tail and returns true on success; throws IllegalStateException("Queue full") if the queue is full.
    // When writing to a capacity-restricted queue, offer() is generally preferable.
    boolean add(E e);

    // Inserts e at the tail and returns true on success; returns false if the queue is full.
    // e must not be null, otherwise NullPointerException is thrown.
    boolean offer(E e);

    // Inserts e at the tail; if the queue is full, blocks the calling thread until space is available.
    void put(E e) throws InterruptedException;

    // Inserts e at the tail; if the queue is full, blocks the calling thread for at most the given time.
    // Returns false if the timeout elapses before space becomes available.
    boolean offer(E e, long timeout, TimeUnit unit) throws InterruptedException;

    //-----------------Write end----------------------

    //-----------------Read start----------------------

    // Retrieves and removes the head; if the queue is empty, blocks until an element is available.
    E take() throws InterruptedException;

    // Retrieves and removes the head; if the queue is empty, blocks for at most the given time.
    // Returns null if the timeout elapses first.
    E poll(long timeout, TimeUnit unit) throws InterruptedException;

    //-----------------Read end----------------------

    //-----------------Remove element start----------------------

    // Removes a single instance of o if present; returns true if the queue changed, false otherwise.
    boolean remove(Object o);

    // Removes all available elements and adds them to the given collection; returns how many were moved.
    int drainTo(Collection<? super E> c);

    // Same as above, but moves at most maxElements elements.
    int drainTo(Collection<? super E> c, int maxElements);

    //-----------------Remove element end----------------------

    // Returns the remaining capacity (Integer.MAX_VALUE if there is no intrinsic limit).
    int remainingCapacity();

    // Returns true if the queue contains o.
    public boolean contains(Object o);
}
```
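drainTo() is convenient for batch consumption. It moves whatever is currently available in one call instead of taking elements one by one, and it never blocks. A minimal sketch with hypothetical names:

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

class DrainToDemo {

    // Moves at most maxBatch available elements into a new list without blocking.
    static List<String> nextBatch(BlockingQueue<String> queue, int maxBatch) {
        List<String> batch = new ArrayList<>();
        queue.drainTo(batch, maxBatch);
        return batch;
    }

    public static void main(String[] args) {
        BlockingQueue<String> queue = new ArrayBlockingQueue<>(10);
        for (String s : new String[] {"a", "b", "c", "d", "e"}) {
            queue.offer(s);
        }
        System.out.println(nextBatch(queue, 3)); // [a, b, c]
        System.out.println(queue.size());        // 2
    }
}
```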

6. Main operations

| If the queue is full (insert) or empty (remove) | Throws exception | Returns immediately | Blocks (responds to interrupts) | Times out (responds to interrupts) |
|---|---|---|---|---|
| Insert | boolean add(E e) | boolean offer(E e) | void put(E e) | boolean offer(E e, long timeout, TimeUnit unit) |
| Remove | E remove() | E poll() | E take() | E poll(long timeout, TimeUnit unit) |
| Examine | E element() | E peek() | N/A | N/A |

- Throws exception:

  - When the queue is full, writing an element throws IllegalStateException("Queue full").

  - When the queue is empty, reading an element throws NoSuchElementException.

- Returns immediately:

  - A write returns true if the element was inserted, otherwise false.

  - A read returns the element if one is available, otherwise null.

- Blocks:

  - When the queue is full, a write blocks the producer thread until space becomes available, or exits with InterruptedException if the thread is interrupted.

  - When the queue is empty, a read blocks the consumer thread until an element becomes available (or the thread is interrupted).

- Times out:

  - When the queue is full, a write blocks the producer thread for at most the given time; it returns false on timeout, otherwise true.

  - When the queue is empty, a read blocks the consumer thread for at most the given time; it returns null on timeout, otherwise the element.

!!! Note: remove(Object o) can remove a specific object from the queue, but it is not efficient, because the queue must be traversed to find a matching object before it can be removed.
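The non-blocking columns of the table can be checked on an ArrayBlockingQueue of capacity 1: first full, then empty. This is a sketch with a hypothetical class name. The two timed calls each wait about 100 ms before giving up.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.NoSuchElementException;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.TimeUnit;

class FullQueueProbe {

    static List<String> probe() throws InterruptedException {
        BlockingQueue<String> queue = new ArrayBlockingQueue<>(1);
        List<String> results = new ArrayList<>();

        queue.put("first"); // capacity 1: the queue is now full
        try {
            queue.add("second"); // "throws exception" column
        } catch (IllegalStateException e) {
            results.add("add: " + e.getMessage());
        }
        results.add("offer: " + queue.offer("second")); // "returns immediately" column
        results.add("timed offer: " + queue.offer("second", 100, TimeUnit.MILLISECONDS)); // "times out" column

        queue.clear(); // the queue is now empty
        try {
            queue.remove(); // "throws exception" column
        } catch (NoSuchElementException e) {
            results.add("remove: NoSuchElementException");
        }
        results.add("poll: " + queue.poll()); // "returns immediately" column
        results.add("timed poll: " + queue.poll(100, TimeUnit.MILLISECONDS)); // "times out" column
        return results;
    }

    public static void main(String[] args) throws InterruptedException {
        probe().forEach(System.out::println);
    }
}
```

The program prints, in order: `add: Queue full`, `offer: false`, `timed offer: false`, `remove: NoSuchElementException`, `poll: null`, `timed poll: null`.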

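7. How to select an appropriate queue

- Boundedness: whether the queue must be bounded (a fixed capacity that blocks producers when full) or may be unbounded

- Space: the storage structure, for example a preallocated array (ArrayBlockingQueue) versus linked-list nodes allocated per element (LinkedBlockingQueue)

- Throughput: for example, LinkedBlockingQueue's separate put and take locks usually give higher throughput than ArrayBlockingQueue's single lock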

III. Preliminary use of blocking queues

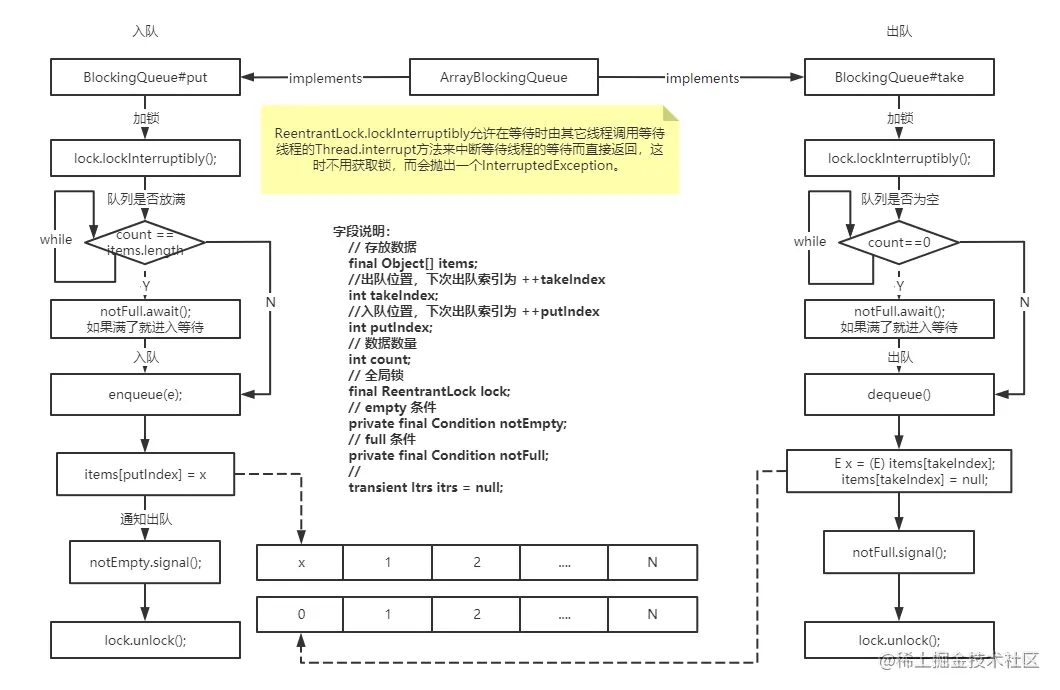

1. ArrayBlockingQueue (array blocking queue)

- ArrayBlockingQueue: a bounded blocking queue backed by an array. The capacity must be specified at initialization, and elements are stored in FIFO order. Internally it uses one ReentrantLock with two Conditions, and it supports both fair and unfair locking (unfair by default).

- Fair means threads acquire the lock in the order in which they requested it; unfair means there is no such ordering guarantee

- Once the capacity is set, it cannot be changed

Partial source code

```java
/** Queue container */
final Object[] items;

// The single global lock: guards every access operation
final ReentrantLock lock;

// Two condition (wait) queues
/** Take threads wait here while the queue is empty */
private final Condition notEmpty;

/** Put threads wait here while the queue is full */
private final Condition notFull;

// Write element method
public void put(E e) throws InterruptedException {
    checkNotNull(e);
    final ReentrantLock lock = this.lock; // the single lock
    lock.lockInterruptibly(); // lock, responding to interrupts
    try {
        while (count == items.length)
            notFull.await(); // queue full: release the lock and wait
        enqueue(e); // insert at the tail and signal notEmpty
    } finally {
        lock.unlock(); // unlock
    }
}

// Remove element method
public E take() throws InterruptedException {
    final ReentrantLock lock = this.lock;
    lock.lockInterruptibly(); // lock, responding to interrupts
    try {
        while (count == 0) // queue empty: release the lock and wait
            notEmpty.await();
        return dequeue(); // remove the head and signal notFull
    } finally {
        lock.unlock(); // release the lock
    }
}
```

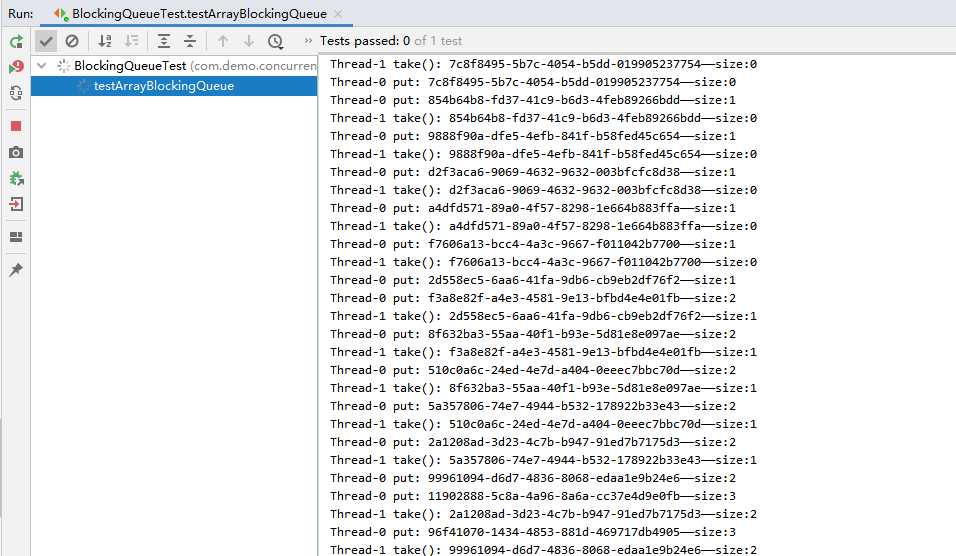

Usage

```java
@Test
public void testArrayBlockingQueue() throws InterruptedException {
    ArrayBlockingQueue<String> queue = new ArrayBlockingQueue<>(5);

    // Producer (adds elements)
    new Thread(() -> {
        while (true) {
            try {
                String data = UUID.randomUUID().toString();
                queue.put(data);
                System.out.println(Thread.currentThread().getName() + " put: " + data + "-size:" + queue.size());
                Thread.sleep(1000);
            } catch (InterruptedException e) {
                e.printStackTrace();
            }
        }
    }).start();

    // Consumer 1 (removes elements)
    new Thread(() -> {
        while (true) {
            try {
                String data = queue.take();
                System.out.println(Thread.currentThread().getName() + " take(): " + data + "-size:" + queue.size());
                Thread.sleep(1200);
            } catch (InterruptedException e) {
                e.printStackTrace();
            }
        }
    }).start();

    // Sleep for 100 s so that the test (main thread) does not end immediately
    Thread.sleep(100000);
}
```

Execution result:

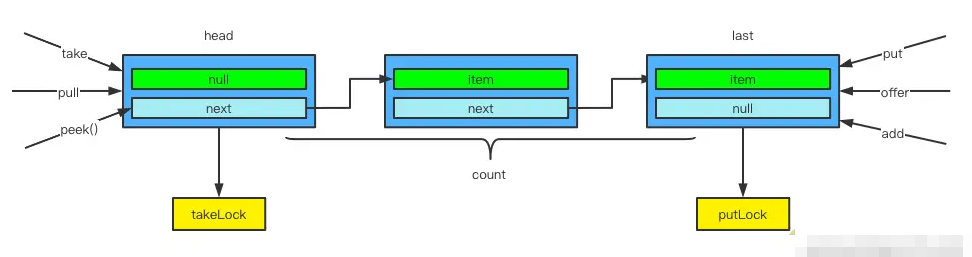

2. LinkedBlockingQueue (singly linked list blocking queue)

- LinkedBlockingQueue: an optionally bounded blocking queue built on a singly linked list. The capacity is optional and defaults to Integer.MAX_VALUE. Elements are ordered FIFO, and throughput is usually higher than that of ArrayBlockingQueue.

- The queue uses the atomic class AtomicInteger for its internal element count

- The newFixedThreadPool thread pool uses this queue

Partial source code

```java
// Node class that stores the data
static class Node<E> {
    E item;
    Node<E> next;
    Node(E x) { item = x; }
}

// Capacity of the blocking queue; defaults to Integer.MAX_VALUE
private final int capacity;

// Current number of elements in the queue
private final AtomicInteger count = new AtomicInteger();

// Head node of the queue
transient Node<E> head;

// Tail node of the queue
private transient Node<E> last;

// Lock held by removal operations such as take and poll
private final ReentrantLock takeLock = new ReentrantLock();

// notEmpty condition: suspends removing threads while the queue is empty
private final Condition notEmpty = takeLock.newCondition();

// Lock held by insertion operations such as put and offer
private final ReentrantLock putLock = new ReentrantLock();

// notFull condition: suspends inserting threads while the queue is full
private final Condition notFull = putLock.newCondition();
```

Each element written to a LinkedBlockingQueue is wrapped in a Node and appended to the linked list, with head and last pointing to the head and tail nodes respectively. Unlike ArrayBlockingQueue, LinkedBlockingQueue controls concurrency with two locks, takeLock and putLock. In other words, insert and remove operations are not mutually exclusive and can proceed at the same time, which can significantly improve throughput.

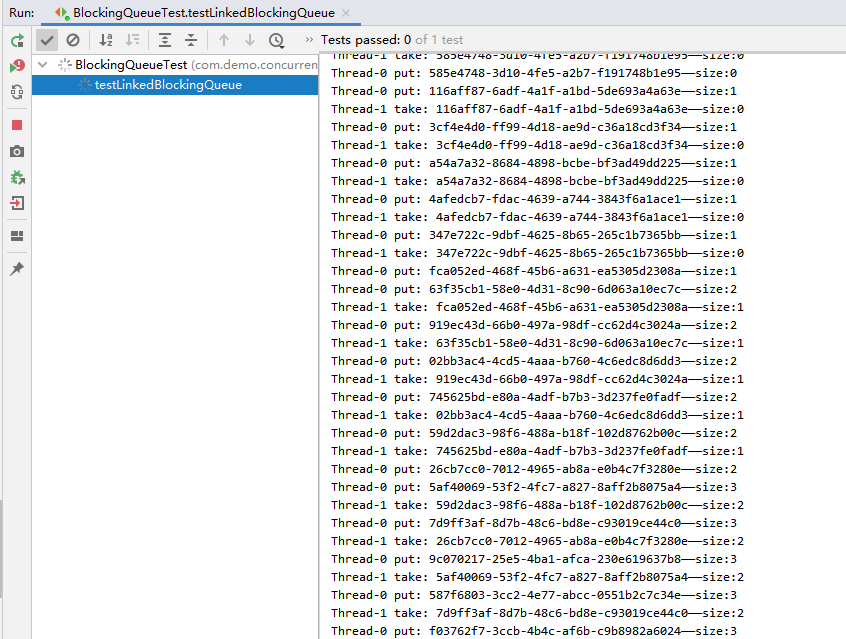

Usage

```java
@Test
public void testLinkedBlockingQueue() throws InterruptedException {
    LinkedBlockingQueue<String> queue = new LinkedBlockingQueue<>(5);

    // Producer (adds elements)
    new Thread(() -> {
        while (true) {
            try {
                String data = UUID.randomUUID().toString();
                queue.put(data);
                System.out.println(Thread.currentThread().getName() + " put: " + data + "-size:" + queue.size());
                Thread.sleep(1000);
            } catch (InterruptedException e) {
                e.printStackTrace();
            }
        }
    }).start();

    // Consumer 1 (removes elements)
    new Thread(() -> {
        while (true) {
            try {
                String data = queue.take();
                System.out.println(Thread.currentThread().getName() + " take: " + data + "-size:" + queue.size());
                Thread.sleep(1200);
            } catch (InterruptedException e) {
                e.printStackTrace();
            }
        }
    }).start();

    // Sleep for 100 s so that the test (main thread) does not end immediately
    Thread.sleep(100000);
}
```

Execution result:
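The demos above loop forever and rely on sleeping the main thread. A common way to stop a consumer cleanly is a "poison pill": the producer writes a sentinel value that tells the consumer to exit. This is a sketch under that assumption. The sentinel string and the class name are hypothetical, and the pattern as written expects a single consumer.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

class PoisonPillDemo {
    private static final String POISON = "__STOP__"; // hypothetical sentinel value

    static List<String> run(int items) throws InterruptedException {
        BlockingQueue<String> queue = new LinkedBlockingQueue<>(5);
        List<String> consumed = new ArrayList<>(); // touched only by the consumer thread

        Thread producer = new Thread(() -> {
            try {
                for (int i = 0; i < items; i++) {
                    queue.put("item-" + i); // blocks while 5 items are pending
                }
                queue.put(POISON); // signal the end of the stream
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });

        Thread consumer = new Thread(() -> {
            try {
                while (true) {
                    String data = queue.take();
                    if (POISON.equals(data)) {
                        break; // stop cleanly instead of looping forever
                    }
                    consumed.add(data);
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });

        producer.start();
        consumer.start();
        producer.join();
        consumer.join(); // join() also makes `consumed` safely visible to this thread
        return consumed;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(run(3)); // [item-0, item-1, item-2]
    }
}
```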

3. PriorityBlockingQueue (priority blocking queue)

- PriorityBlockingQueue: an unbounded blocking queue that orders elements by priority, implemented with an array-based binary heap. Elements must implement the Comparable interface, or a Comparator must be passed to the constructor; by default elements are sorted in ascending natural order. One drawback is that the order of elements with equal priority is not guaranteed. Internally it uses a ReentrantLock and a Condition.

- A CAS-based spin lock controls the dynamic expansion of the array, so that expansion does not block take operations

- null elements are not allowed (writing one throws NullPointerException)

- Binary heap types:

  - Max-heap: the key of a parent node is always greater than or equal to the keys of its children

  - Min-heap: the key of a parent node is always less than or equal to the keys of its children

- In practice, an insertion keeps "floating up" (sifting up) the new element, while a removal keeps "sinking down" (sifting down) the replacement element

Partial source code

- Let's analyze the constructor PriorityBlockingQueue(Collection<? extends E> c), shown below:

```java
/**
 * Constructs a queue from an existing collection.
 * If the collection is a SortedSet or a PriorityBlockingQueue, the original element order is kept.
 */
public PriorityBlockingQueue(Collection<? extends E> c) {
    this.lock = new ReentrantLock();
    this.notEmpty = lock.newCondition();
    boolean heapify = true; // true if not known to be in heap order
    boolean screen = true;  // true if must screen for nulls
    if (c instanceof SortedSet<?>) { // an ordered set
        SortedSet<? extends E> ss = (SortedSet<? extends E>) c;
        this.comparator = (Comparator<? super E>) ss.comparator();
        heapify = false;
    } else if (c instanceof PriorityBlockingQueue<?>) { // a priority queue
        PriorityBlockingQueue<? extends E> pq = (PriorityBlockingQueue<? extends E>) c;
        this.comparator = (Comparator<? super E>) pq.comparator();
        screen = false;
        if (pq.getClass() == PriorityBlockingQueue.class) // exact match
            heapify = false;
    }
    Object[] a = c.toArray();
    int n = a.length;
    if (a.getClass() != Object[].class)
        a = Arrays.copyOf(a, n, Object[].class);
    if (screen && (n == 1 || this.comparator != null)) { // check for null elements
        for (int i = 0; i < n; ++i)
            if (a[i] == null)
                throw new NullPointerException();
    }
    this.queue = a;
    this.size = n;
    if (heapify) // rebuild heap order
        heapify();
}
```

- Analysis of the insertion method offer()

```java
public boolean offer(E e) {
    if (e == null)
        throw new NullPointerException();
    final ReentrantLock lock = this.lock; // lock
    lock.lock();
    int n, cap;
    Object[] array;
    while ((n = size) >= (cap = (array = queue).length)) // if the array is full, expand it
        tryGrow(array, cap);
    try {
        Comparator<? super E> cmp = comparator;
        if (cmp == null) // no comparator: adjust the heap by the elements' natural order
            siftUpComparable(n, e, array);
        else // comparator present: adjust the heap with the comparator
            siftUpUsingComparator(n, e, array, cmp);
        size = n + 1; // total element count + 1
        notEmpty.signal(); // wake up a dequeuing thread that may be waiting
    } finally {
        lock.unlock();
    }
    return true;
}
```

- The key methods above are siftUpComparable() and siftUpUsingComparator(). They are almost identical internally: the former compares elements by their natural order, the latter with the external comparator. Let's focus on siftUpComparable():

```java
/**
 * Inserts element x at position array[k],
 * then restores heap order by "floating up" according to the natural order of elements.
 * The result is a min-heap.
 */
private static <T> void siftUpComparable(int k, T x, Object[] array) {
    Comparable<? super T> key = (Comparable<? super T>) x;
    while (k > 0) {
        int parent = (k - 1) >>> 1; // equivalent to (k - 1) / 2: index of k's parent
        Object e = array[parent];
        if (key.compareTo((T) e) >= 0) // inserted value >= parent: heap order holds, stop
            break;
        // Otherwise move the parent down into slot k and continue from the parent's slot
        array[k] = e;
        k = parent;
    }
    array[k] = key;
}
```

- siftUpComparable() performs the "float-up" adjustment of the heap. Picture the heap as a complete binary tree: each new element is placed at the next free position on the bottom level (the end of the array) and then compared with its parent. While the parent is larger, the parent moves down and the new element moves up, until no parent is larger than it. This guarantees that the top of the heap (the root of the binary tree) is always the smallest element. (Note: this applies to a min-heap.)

- Capacity expansion: the tryGrow() method

```java
private void tryGrow(Object[] array, int oldCap) {
    lock.unlock(); // release the global lock so enqueue/dequeue can continue during allocation
    Object[] newArray = null;
    if (allocationSpinLock == 0 &&
        UNSAFE.compareAndSwapInt(this, allocationSpinLockOffset,
                                 0, 1)) { // allocationSpinLock = 1 means "expansion in progress"
        try {
            // Calculate the new array size
            int newCap = oldCap + ((oldCap < 64) ?
                                   (oldCap + 2) :
                                   (oldCap >> 1));
            if (newCap - MAX_ARRAY_SIZE > 0) { // overflow check
                int minCap = oldCap + 1;
                if (minCap < 0 || minCap > MAX_ARRAY_SIZE)
                    throw new OutOfMemoryError();
                newCap = MAX_ARRAY_SIZE;
            }
            if (newCap > oldCap && queue == array)
                newArray = new Object[newCap]; // allocate the new array
        } finally {
            allocationSpinLock = 0;
        }
    }
    if (newArray == null) // another thread is expanding (this one lost the allocationSpinLock race): back off
        Thread.yield();
    lock.lock(); // reacquire the global lock (needed to replace the internal array)
    if (newArray != null && queue == array) {
        queue = newArray; // point to the new internal array
        System.arraycopy(array, 0, newArray, 0, oldCap);
    }
}
```

- Analysis of the take() method

```java
/**
 * Removes the head element; if the queue is empty, the thread blocks.
 */
public E take() throws InterruptedException {
    final ReentrantLock lock = this.lock;
    lock.lockInterruptibly(); // acquire the global lock
    E result;
    try {
        while ((result = dequeue()) == null) // queue is empty
            notEmpty.await(); // wait in the notEmpty condition queue
    } finally {
        lock.unlock();
    }
    return result;
}

private E dequeue() {
    int n = size - 1; // n = number of elements remaining after this removal
    if (n < 0) // queue is empty: return null
        return null;
    else {
        Object[] array = queue;
        E result = (E) array[0]; // array[0] is the top of the heap; every dequeue removes the top
        E x = (E) array[n]; // array[n] is the last node of the heap (rightmost node of the bottom level)
        array[n] = null;
        Comparator<? super E> cmp = comparator;
        if (cmp == null) // sink x down from the root to restore heap order
            siftDownComparable(0, x, array, n);
        else
            siftDownUsingComparator(0, x, array, n, cmp);
        size = n;
        return result;
    }
}
```
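The sift-up on insertion and the sift-down on removal can be tried on a plain int array. The following is a simplified sketch, not the JDK code: IntMinHeap is a hypothetical class with a fixed capacity, no locking, no growth, and ints instead of Comparable elements. Its loop bounds mirror the ones in the JDK source above.

```java
import java.util.Arrays;

// Hypothetical fixed-capacity min-heap of ints.
class IntMinHeap {
    private final int[] heap;
    private int size;

    IntMinHeap(int capacity) {
        heap = new int[capacity];
    }

    int size() {
        return size;
    }

    // Place x at the first free slot, then float it up while it is smaller than its parent.
    void offer(int x) {
        if (size == heap.length) throw new IllegalStateException("heap full");
        int k = size++;
        while (k > 0) {
            int parent = (k - 1) >>> 1;
            if (x >= heap[parent]) break;
            heap[k] = heap[parent]; // move the parent down
            k = parent;
        }
        heap[k] = x;
    }

    // Remove the root, move the last element to the top, then sink it down.
    int poll() {
        if (size == 0) throw new IllegalStateException("heap empty");
        int result = heap[0];
        int x = heap[--size];
        int k = 0;
        int half = size >>> 1; // nodes with index < half have at least one child
        while (k < half) {
            int child = 2 * k + 1;
            int right = child + 1;
            if (right < size && heap[right] < heap[child]) child = right; // pick the smaller child
            if (x <= heap[child]) break;
            heap[k] = heap[child]; // move the child up
            k = child;
        }
        heap[k] = x;
        return result;
    }

    public static void main(String[] args) {
        IntMinHeap h = new IntMinHeap(8);
        for (int v : new int[] {6, 4, 3, 1, 2, 7}) h.offer(v);
        int[] out = new int[6];
        for (int i = 0; i < out.length; i++) out[i] = h.poll();
        System.out.println(Arrays.toString(out)); // [1, 2, 3, 4, 6, 7]
    }
}
```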

Usage

```java
@Test
public void testPriorityBlockingQueue() throws InterruptedException {
    // Default ordering is natural order (ascending). For custom ordering, let the element
    // implement Comparable or pass a Comparator to the constructor.
    // 5 is only the initial capacity: the queue is unbounded and grows when the 6th element arrives.
    PriorityBlockingQueue<Integer> queue = new PriorityBlockingQueue<>(5);
    queue.put(6);
    queue.put(4);
    queue.put(3);
    queue.put(1);
    queue.put(2);
    queue.put(7);
    System.out.println(queue.poll()); // 1
    System.out.println(queue.poll()); // 2
}
```

Execution result:
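For custom ordering, pass a Comparator to the constructor. In the sketch below, Task is a hypothetical element type and higher priority values are taken first, which reverses the natural int order. PriorityBlockingQueue(int initialCapacity, Comparator) is the real constructor. 11 is simply the JDK's default initial capacity.

```java
import java.util.Comparator;
import java.util.concurrent.PriorityBlockingQueue;

class PriorityTaskDemo {

    // Hypothetical task type used only for this example.
    static final class Task {
        final String name;
        final int priority;

        Task(String name, int priority) {
            this.name = name;
            this.priority = priority;
        }
    }

    // Puts all tasks into the queue, then returns their names in dequeue order.
    static String order(Task... tasks) {
        PriorityBlockingQueue<Task> queue = new PriorityBlockingQueue<>(
                11, Comparator.comparingInt((Task t) -> t.priority).reversed()); // highest priority first
        for (Task t : tasks) {
            queue.put(t); // never blocks: the queue is unbounded
        }
        StringBuilder sb = new StringBuilder();
        Task t;
        while ((t = queue.poll()) != null) {
            sb.append(t.name).append(' ');
        }
        return sb.toString().trim();
    }

    public static void main(String[] args) {
        System.out.println(order(new Task("low", 1), new Task("high", 9), new Task("mid", 5))); // high mid low
    }
}
```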

4. DelayQueue (delay queue)

- DelayQueue: an unbounded blocking queue, backed internally by a PriorityQueue, that supports delayed retrieval. Each element has an expiration time, and only expired elements can be taken from the queue. The head of the queue is the element whose delay expires soonest. Internally it uses a ReentrantLock and a Condition.

- Elements must implement the Delayed interface, which specifies how long it is until the element can be taken from the queue. Stored elements are ordered by expiration time

- The interface requires the implementing class to override compareTo() consistently with getDelay() (a concrete example is given below)

```java
public interface Delayed extends Comparable<Delayed> {
    long getDelay(TimeUnit unit);
}
```

- The thread pool created by newScheduledThreadPool uses a similar internal delay queue (ScheduledThreadPoolExecutor.DelayedWorkQueue) rather than DelayQueue itself.

- Application scenarios:

  1. Cache system design: store the validity period of cache entries in a DelayQueue and have one thread poll the DelayQueue in a loop. As soon as an element can be taken from the DelayQueue, that cache entry has expired.

  2. Scheduled task scheduling: store the tasks to be executed that day, together with their execution times, in a DelayQueue. As soon as a task can be taken from the DelayQueue, execute it. (For example, Swing's TimerQueue is implemented with DelayQueue.)

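The cache scenario boils down to two calls. poll() returns null while nothing has expired, and take() blocks until the earliest entry expires. This is a minimal sketch: ExpiringKey is a hypothetical entry type with a time-to-live in milliseconds, and a real cache would also remove the key from its map when it expires.

```java
import java.util.concurrent.DelayQueue;
import java.util.concurrent.Delayed;
import java.util.concurrent.TimeUnit;

class ExpiringKeyDemo {

    // Hypothetical cache entry that expires `ttlMillis` after creation.
    static final class ExpiringKey implements Delayed {
        final String key;
        private final long deadlineNanos;

        ExpiringKey(String key, long ttlMillis) {
            this.key = key;
            this.deadlineNanos = System.nanoTime() + TimeUnit.MILLISECONDS.toNanos(ttlMillis);
        }

        @Override
        public long getDelay(TimeUnit unit) {
            // Remaining time, converted to whatever unit the queue asks for
            return unit.convert(deadlineNanos - System.nanoTime(), TimeUnit.NANOSECONDS);
        }

        @Override
        public int compareTo(Delayed other) {
            return Long.compare(getDelay(TimeUnit.NANOSECONDS), other.getDelay(TimeUnit.NANOSECONDS));
        }
    }

    public static void main(String[] args) throws InterruptedException {
        DelayQueue<ExpiringKey> expiry = new DelayQueue<>();
        expiry.put(new ExpiringKey("session-42", 200));
        System.out.println(expiry.poll());            // null: not expired yet
        ExpiringKey expired = expiry.take();          // blocks for about 200 ms
        System.out.println(expired.key + " expired"); // a cleaner thread would evict it here
    }
}
```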

Partial source code

- The insertion method offer()

```java
public boolean offer(E e) {
    // Acquire the lock
    final ReentrantLock lock = this.lock;
    lock.lock();
    try {
        // Add the element to the priority queue
        q.offer(e);
        // If the new element became the head of the priority queue,
        // clear the leader and wake one waiting taker so that it re-checks the new head
        if (q.peek() == e) {
            leader = null;
            available.signal();
        }
        return true;
    } finally {
        // Release the lock
        lock.unlock();
    }
}
```

- The dequeue method take(): if the queue is empty or the head has not expired yet, the current thread blocks

```java
public E take() throws InterruptedException {
    // Acquire the lock
    final ReentrantLock lock = this.lock;
    lock.lockInterruptibly();
    try {
        // Spin
        for (;;) {
            // Peek at the head of the priority queue
            E first = q.peek();
            // The priority queue is empty
            if (first == null)
                // Block
                available.await();
            else {
                // Check whether the head's remaining delay is <= 0
                long delay = first.getDelay(NANOSECONDS);
                if (delay <= 0)
                    // Expired: remove it from the priority queue
                    return q.poll();
                // The remaining delay is > 0
                // Drop the reference to the head while waiting
                first = null;
                // If another thread is already the leader, wait
                if (leader != null)
                    available.await();
                else {
                    // Make the current thread the leader
                    Thread thisThread = Thread.currentThread();
                    leader = thisThread;
                    try {
                        // Wait for the remaining delay
                        available.awaitNanos(delay);
                    } finally {
                        // After waking up, if this thread is still the leader,
                        // clear the leader
                        if (leader == thisThread)
                            leader = null;
                    }
                }
            }
        }
    } finally {
        // If there is no leader and the queue is not empty,
        // wake another waiting thread so that it can become the leader
        if (leader == null && q.peek() != null)
            available.signal();
        // Unlock
        lock.unlock();
    }
}
```

Usage

```java
import java.util.concurrent.Delayed;
import java.util.concurrent.TimeUnit;

// Element saved in the DelayQueue
public class Item implements Delayed {

    String name;

    // Trigger time, in epoch milliseconds
    private long time;

    public Item(String name, long time, TimeUnit unit) {
        this.name = name;
        this.time = System.currentTimeMillis() + (time > 0 ? unit.toMillis(time) : 0);
    }

    @Override
    public long getDelay(TimeUnit unit) {
        // Express the remaining time in the unit the caller asks for (DelayQueue asks for NANOSECONDS)
        return unit.convert(time - System.currentTimeMillis(), TimeUnit.MILLISECONDS);
    }

    @Override
    public int compareTo(Delayed o) {
        // Earlier trigger time first; returns 0 for equal times, as the compareTo contract requires
        return Long.compare(this.time, ((Item) o).time);
    }

    @Override
    public String toString() {
        return "Item{" +
                "time=" + time +
                ", name='" + name + '\'' +
                '}';
    }
}
```

Test (in a separate class, since JUnit creates the test class through a public no-argument constructor):

```java
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;
import java.util.concurrent.DelayQueue;
import java.util.concurrent.TimeUnit;
import org.junit.Test; // assuming JUnit 4

public class DelayQueueItemTest {

    @Test
    public void testDelayQueue() throws InterruptedException {
        Item item1 = new Item("item1", 5, TimeUnit.SECONDS);
        Item item2 = new Item("item2", 10, TimeUnit.SECONDS);
        Item item3 = new Item("item3", 15, TimeUnit.SECONDS);
        DelayQueue<Item> queue = new DelayQueue<>();
        queue.put(item1);
        queue.put(item2);
        queue.put(item3);
        System.out.println("begin time:" + LocalDateTime.now().format(DateTimeFormatter.ISO_LOCAL_DATE_TIME));
        for (int i = 0; i < 3; i++) {
            Item take = queue.take(); // blocks until the next item expires
            System.out.format("name:{%s}, time:{%s}\n", take.name, LocalDateTime.now().format(DateTimeFormatter.ISO_DATE_TIME));
        }
        /*
         * begin time:2020-07-07T17:19:53.038
         * name:{item1}, time:{2020-07-07T17:19:57.982}
         * name:{item2}, time:{2020-07-07T17:20:02.982}
         * name:{item3}, time:{2020-07-07T17:20:07.982}
         */
    }
}
```

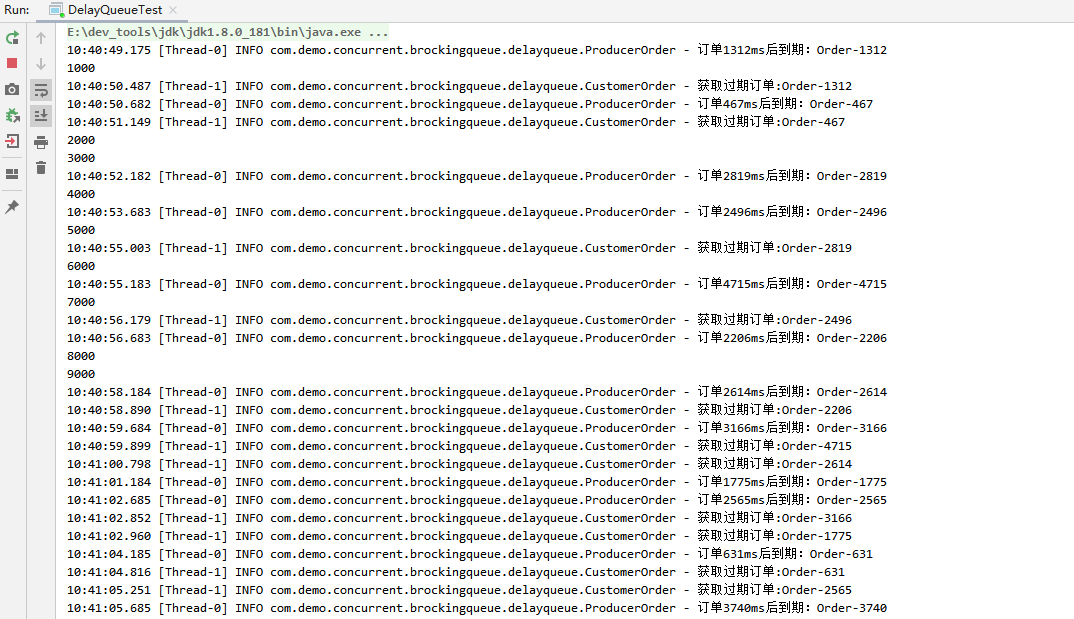

A concrete example: an order system uses the delay feature of a blocking queue (DelayQueue) to consume orders only after a delay. It consists of an Order class, a wrapper class, a producer, a consumer, and a test.

- Order class

```java
public class Order {

    // Order number
    private final String orderNo;

    // Order amount
    private final double orderMoney;

    public Order(String orderNo, double orderMoney) {
        super();
        this.orderNo = orderNo;
        this.orderMoney = orderMoney;
    }

    public String getOrderNo() {
        return orderNo;
    }

    public double getOrderMoney() {
        return orderMoney;
    }
}
```

- Wrapper class

```java
import java.util.concurrent.Delayed;
import java.util.concurrent.TimeUnit;

// Class description: the element stored in the queue
public class ItemVo<T> implements Delayed {

    private long activeTime; // absolute expiration time on the System.nanoTime() clock
    private T object;

    // activeTime: delay until expiration, in milliseconds
    public ItemVo(long activeTime, T object) {
        super();
        // Convert the incoming delay into an absolute expiration time
        this.activeTime = TimeUnit.NANOSECONDS.convert(activeTime, TimeUnit.MILLISECONDS) + System.nanoTime();
        this.object = object;
    }

    public T getObject() {
        return object;
    }

    // Sort by remaining time
    @Override
    public int compareTo(Delayed o) {
        long d = getDelay(TimeUnit.NANOSECONDS) - o.getDelay(TimeUnit.NANOSECONDS);
        return (d == 0) ? 0 : ((d > 0) ? 1 : -1);
    }

    // Returns the remaining time of the element
    @Override
    public long getDelay(TimeUnit unit) {
        long d = unit.convert(this.activeTime - System.nanoTime(), TimeUnit.NANOSECONDS);
        return d;
    }
}
```

- Producer

```java
import java.util.Random;
import java.util.concurrent.DelayQueue;
import java.util.concurrent.TimeUnit;
import lombok.SneakyThrows;
import lombok.extern.slf4j.Slf4j;

@Slf4j
public class ProducerOrder implements Runnable {

    private DelayQueue<ItemVo<Order>> queue;

    public ProducerOrder(DelayQueue<ItemVo<Order>> queue) {
        super();
        this.queue = queue;
    }

    @SneakyThrows
    @Override
    public void run() {
        Random random = new Random();
        while (true) {
            int num = random.nextInt(5000); // random number in [0, 5000)
            Order order = new Order("Order-" + num, num);
            ItemVo<Order> itemVo = new ItemVo<Order>(num, order);
            // Insert
            queue.offer(itemVo);
            log.info("Order due after " + num + " ms: " + order.getOrderNo());
            TimeUnit.MILLISECONDS.sleep(1500);
        }
    }
}
```

- Consumer

```java
import java.util.concurrent.DelayQueue;
import lombok.extern.slf4j.Slf4j;

@Slf4j
public class CustomerOrder implements Runnable {

    private DelayQueue<ItemVo<Order>> queue;

    public CustomerOrder(DelayQueue<ItemVo<Order>> queue) {
        super();
        this.queue = queue;
    }

    @Override
    public void run() {
        while (true) {
            try {
                ItemVo<Order> item = queue.take(); // blocks until an order expires
                Order order = item.getObject();
                log.info("Got expired order: " + order.getOrderNo());
            } catch (InterruptedException e) {
                e.printStackTrace();
            }
        }
    }
}
```

- Test

```java
import java.util.concurrent.DelayQueue;

public class DelayQueueTest {

    public static void main(String[] args) throws InterruptedException {
        // Delay queue
        DelayQueue<ItemVo<Order>> queue = new DelayQueue<>();
        // Producer
        new Thread(new ProducerOrder(queue)).start();
        // Consumer
        new Thread(new CustomerOrder(queue)).start();
        // Print a number every 1 second
        for (int i = 1; i < 1000; i++) {
            Thread.sleep(1000);
            System.out.println(i * 1000);
        }
    }
}
```

Execution result:

5. SynchronousQueue (synchronous queue)

- SynchronousQueue: a blocking queue that stores no elements (it has no capacity). Each put must wait for another thread's take, and vice versa; in other words, an element must be removed before a new one can be written, otherwise the writer stays blocked. It supports a fair mode (internally TransferQueue, FIFO) and a non-fair mode (internally TransferStack, LIFO); the no-argument constructor creates a non-fair queue.

- Application scenario: Executors.newCachedThreadPool() uses a SynchronousQueue as its work queue. When a task is submitted to this pool, an idle thread is reused to run it if one exists; otherwise a new thread is created. Tasks are never buffered in the queue.

It is typically used for hand-offs: a producer and a consumer synchronize to pass information, events, or tasks directly from one to the other.
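A minimal sketch of the two properties described above, assuming a plain Java 8+ runtime. The class and method names (`SynchronousQueueSketch`, `offerWithoutConsumer`, `cachedLikePool`, `handOff`) are hypothetical. It shows that a non-blocking `offer()` fails when no consumer is waiting, that `put()`/`take()` pair up across threads, and a `ThreadPoolExecutor` built with the same arguments that `Executors.newCachedThreadPool()` uses.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.SynchronousQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

public class SynchronousQueueSketch {

    // offer() succeeds only if a consumer is already waiting,
    // because the queue has no capacity to hold the element.
    static boolean offerWithoutConsumer() {
        SynchronousQueue<String> queue = new SynchronousQueue<>(); // non-fair by default
        return queue.offer("task");
    }

    // Same configuration as Executors.newCachedThreadPool(): core size 0, unbounded max size,
    // idle threads kept for 60 s, and a SynchronousQueue so tasks are handed off, never buffered.
    static ExecutorService cachedLikePool() {
        return new ThreadPoolExecutor(0, Integer.MAX_VALUE,
                60L, TimeUnit.SECONDS,
                new SynchronousQueue<Runnable>());
    }

    // Hand one element from a producer thread to the calling (consumer) thread.
    static String handOff() throws InterruptedException {
        SynchronousQueue<String> queue = new SynchronousQueue<>(true); // fair (FIFO) mode
        Thread producer = new Thread(() -> {
            try {
                queue.put("hello"); // blocks until the take() below receives it
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();
        String received = queue.take();
        producer.join();
        return received;
    }

    public static void main(String[] args) throws Exception {
        System.out.println("offer without consumer: " + offerWithoutConsumer()); // false
        System.out.println("hand-off: " + handOff());                           // hello
        ExecutorService pool = cachedLikePool();
        pool.submit(() -> System.out.println("ran on " + Thread.currentThread().getName()));
        pool.shutdown();
        pool.awaitTermination(5, TimeUnit.SECONDS);
    }
}
```

Because the queue never holds a task, the pool must either find an idle thread to take it or grow; that is why the maximum pool size is left unbounded.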

```java
@Test
public void testSynchronousQueue() throws InterruptedException {
    SynchronousQueue<String> queue = new SynchronousQueue<>();

    // Producer (adds elements)
    new Thread(() -> {
        while (true) {
            try {
                String data = UUID.randomUUID().toString();
                // size() of a SynchronousQueue always returns 0
                System.out.println(Thread.currentThread().getName() + " put: " + data + "-size:" + queue.size());
                queue.put(data); // blocks until the consumer takes it
                Thread.sleep(100);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return;
            }
        }
    }).start();

    // Consumer 1 (removes elements)
    new Thread(() -> {
        while (true) {
            try {
                String data = queue.take();
                System.out.println(Thread.currentThread().getName() + " take: " + data + "-size:" + queue.size());
                Thread.sleep(500);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return;
            }
        }
    }).start();

    Thread.sleep(100000);
}
```

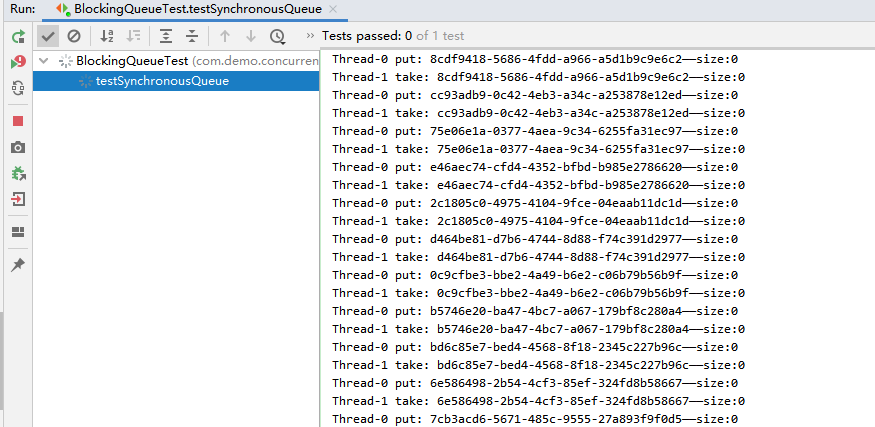

Execution results

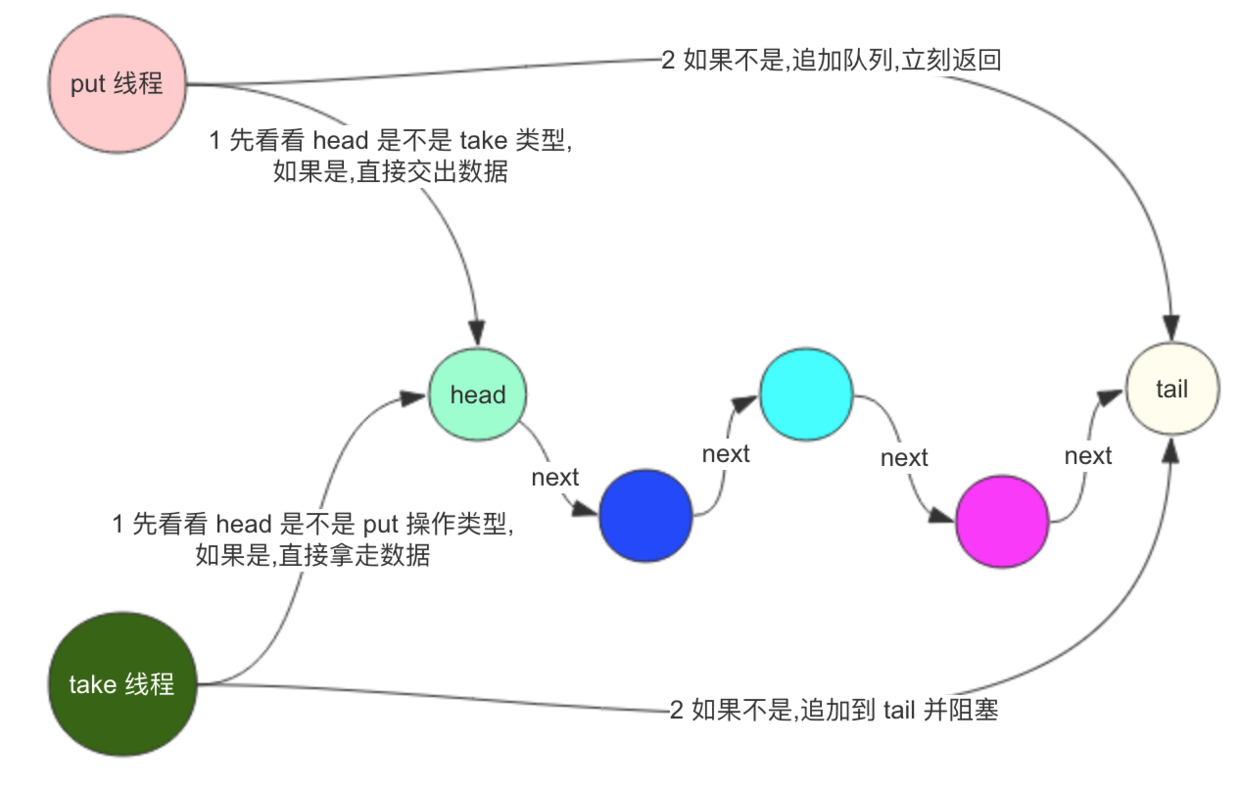

6. LinkedTransferQueue (linked transfer queue)

- LinkedTransferQueue: an unbounded blocking queue backed by a linked list. Unlike the other queues, it implements the TransferQueue interface, which adds the transfer() and tryTransfer() methods.

- When transfer() is called and a consumer thread is already waiting to receive an element, the element is handed to that consumer directly. Otherwise, the element is appended to the tail of the queue and the calling thread blocks until a consumer takes it.

- The difference between put() and transfer(): put() returns immediately (the queue is unbounded, so it never blocks), while transfer() blocks until a consumer has received the element.

Design idea: the queue works in a "preemption" mode. When a consumer asks for an element and the queue holds data, it takes the data directly. If the queue is empty, the consumer enqueues a node whose element is null and waits on that node. When a producer later finds a node with a null element, it does not enqueue a node of its own; instead it fills its element into that node and wakes the thread waiting on it. The awakened consumer takes the element and returns from the method it called. This pairing of nodes is called "matching".

- In short: if an element is available, take it; if not, reserve a slot and wait until an element arrives, the wait times out, or the thread is interrupted.
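The put()/transfer() difference can be seen in a small experiment. This is a minimal sketch assuming Java 8+; the class `TransferVsPutSketch` and its methods are hypothetical names. put() returns at once even with no consumer, while transfer() only returns after a consumer thread has taken the element (the consumer sleeps first so the producer is very likely to be the one waiting).

```java
import java.util.concurrent.LinkedTransferQueue;
import java.util.concurrent.TimeUnit;

public class TransferVsPutSketch {

    // put() never blocks on an unbounded LinkedTransferQueue: elements are simply appended.
    static int putWithoutConsumer() throws InterruptedException {
        LinkedTransferQueue<String> queue = new LinkedTransferQueue<>();
        queue.put("a");
        queue.put("b");
        return queue.size(); // both elements sit in the queue, no consumer needed
    }

    // transfer() returns only after a consumer has received the element.
    static String transferToConsumer() throws InterruptedException {
        LinkedTransferQueue<String> queue = new LinkedTransferQueue<>();
        String[] received = new String[1];
        Thread consumer = new Thread(() -> {
            try {
                TimeUnit.MILLISECONDS.sleep(200); // gives the producer time to reach transfer()
                received[0] = queue.take();       // matches the element the producer is waiting on
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        consumer.start();

        long start = System.nanoTime();
        queue.transfer("order-1"); // blocks until the consumer's take() receives it
        long waitedMs = TimeUnit.NANOSECONDS.toMillis(System.nanoTime() - start);
        consumer.join(); // join() makes the consumer's write to received[0] visible here
        System.out.println("transfer() waited about " + waitedMs + " ms");
        return received[0];
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("size after two put() calls: " + putWithoutConsumer()); // 2
        System.out.println("consumer received: " + transferToConsumer());          // order-1
    }
}
```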

Concurrent programming -- LinkedTransferQueue

```java
public interface TransferQueue<E> extends BlockingQueue<E> {

    // Transfers the element to a waiting consumer immediately, if possible.
    // More precisely: if a consumer is already waiting to receive it (in take() or a timed
    // poll(long, TimeUnit)), the element is transferred immediately; otherwise false is
    // returned and the element is not enqueued.
    // Non-blocking.
    boolean tryTransfer(E e);

    // Transfers the element to a consumer, waiting if necessary.
    // More precisely: if a consumer is already waiting to receive it (in take() or a timed
    // poll(long, TimeUnit)), the element is transferred immediately; otherwise the call
    // waits until a consumer receives it.
    // Responds to interruption.
    void transfer(E e) throws InterruptedException;

    // Like transfer(), but returns false if the timeout elapses first; responds to interruption.
    boolean tryTransfer(E e, long timeout, TimeUnit unit) throws InterruptedException;

    // Returns true if at least one consumer is waiting.
    boolean hasWaitingConsumer();

    // Returns an estimate of the number of waiting consumers.
    int getWaitingConsumerCount();
}
```
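To see the non-blocking and timed tryTransfer() variants and hasWaitingConsumer() in action, here is a minimal sketch assuming Java 8+; `TryTransferSketch` and its method names are hypothetical. With nobody waiting, both tryTransfer() forms return false; once a consumer is parked in take(), hasWaitingConsumer() becomes true and tryTransfer() succeeds immediately.

```java
import java.util.concurrent.LinkedTransferQueue;
import java.util.concurrent.TimeUnit;

public class TryTransferSketch {

    // No consumer waiting: tryTransfer(e) returns false at once and does not enqueue e.
    static boolean tryTransferAlone(LinkedTransferQueue<String> queue) {
        return queue.tryTransfer("x");
    }

    // Timed variant: waits up to 100 ms for a consumer, then gives up and returns false.
    static boolean timedTryTransferAlone(LinkedTransferQueue<String> queue) throws InterruptedException {
        return queue.tryTransfer("y", 100, TimeUnit.MILLISECONDS);
    }

    // With a consumer blocked in take(), tryTransfer(e) hands the element over directly.
    static boolean tryTransferWithConsumer() throws InterruptedException {
        LinkedTransferQueue<String> queue = new LinkedTransferQueue<>();
        String[] received = new String[1];
        Thread consumer = new Thread(() -> {
            try {
                received[0] = queue.take();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        consumer.start();
        while (!queue.hasWaitingConsumer()) {
            Thread.sleep(10); // poll until the consumer is registered as waiting
        }
        boolean transferred = queue.tryTransfer("z");
        consumer.join();
        return transferred && "z".equals(received[0]);
    }

    public static void main(String[] args) throws InterruptedException {
        LinkedTransferQueue<String> queue = new LinkedTransferQueue<>();
        System.out.println(tryTransferAlone(queue) + ", size=" + queue.size()); // false, size=0
        System.out.println(timedTryTransferAlone(queue));                      // false
        System.out.println(tryTransferWithConsumer());                         // true
    }
}
```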

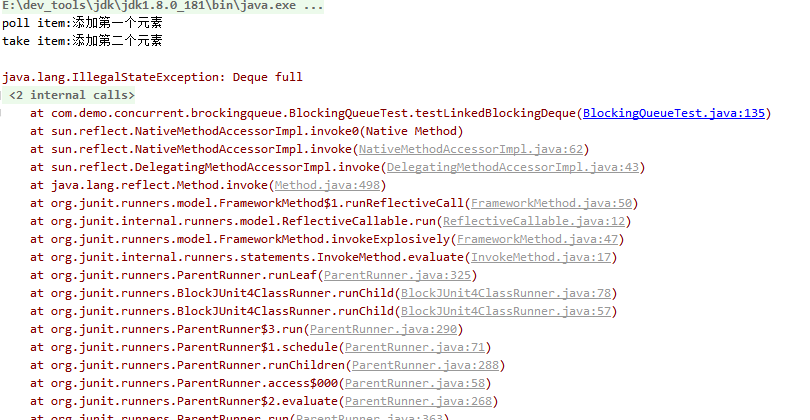

7. LinkedBlockingDeque (doubly-linked blocking deque)

- LinkedBlockingDeque: an optionally bounded blocking deque backed by a doubly linked list. The capacity can be set in the constructor and defaults to Integer.MAX_VALUE. Elements can be inserted and removed at both the head and the tail, so it offers one more entry point than a one-ended queue. Note, however, that the JDK implementation guards both ends with a single ReentrantLock, so this does not by itself halve lock contention when many threads enqueue at the same time.

- Deque property: elements can be inserted and removed at both the head and the tail of the queue.

- Compared with other blocking queues, LinkedBlockingDeque adds methods such as addFirst(), addLast(), peekFirst(), and peekLast(). Methods ending in xxxFirst insert, retrieve, or remove the element at the head of the deque; methods ending in xxxLast do the same at the tail.

- Scenario: commonly used to implement the work-stealing pattern.
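The work-stealing access pattern can be illustrated with a deque. This is a minimal, single-threaded sketch (the class `WorkStealingSketch` and the task names are hypothetical): the owning worker pushes and pops at the head, while an idle "thief" steals from the tail, so the two usually touch opposite ends. In real use owner and thief are different threads; note also that the JDK's own ForkJoinPool uses its own array-based deques rather than LinkedBlockingDeque.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingDeque;
import java.util.concurrent.LinkedBlockingDeque;

public class WorkStealingSketch {

    static List<String> demo() {
        BlockingDeque<String> ownerDeque = new LinkedBlockingDeque<>();
        for (int i = 1; i <= 4; i++) {
            ownerDeque.offerFirst("task-" + i); // owner pushes new work at the head
        }
        // Deque is now (head -> tail): task-4, task-3, task-2, task-1
        List<String> log = new ArrayList<>();
        log.add("owner ran " + ownerDeque.pollFirst());  // newest task (LIFO for the owner)
        log.add("thief stole " + ownerDeque.pollLast()); // oldest task (FIFO for the thief)
        log.add("owner ran " + ownerDeque.pollFirst());
        log.add("thief stole " + ownerDeque.pollLast());
        return log;
    }

    public static void main(String[] args) {
        demo().forEach(System.out::println);
        // owner ran task-4
        // thief stole task-1
        // owner ran task-3
        // thief stole task-2
    }
}
```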

Usage

```java
@Test
public void testLinkedBlockingDeque() {
    // Capacity 1: the deque holds at most one element at a time
    BlockingDeque<String> blockingDeque = new LinkedBlockingDeque<>(1);

    // offer/poll: thread-safe and non-blocking; return false/null on failure
    blockingDeque.offer("Add first element");
    String item = blockingDeque.poll();
    System.out.println("poll item:" + item);

    // put/take: thread-safe and blocking; wait until space/an element is available
    try {
        blockingDeque.put("Add a second element");
        String take = blockingDeque.take();
        System.out.println("take item:" + take);
    } catch (InterruptedException e) {
        Thread.currentThread().interrupt();
    }

    // add/remove: also thread-safe, but throw an exception on failure
    blockingDeque.add("Add a fourth element");
    try {
        blockingDeque.add("Add fifth element"); // deque is full, so this throws
    } catch (IllegalStateException e) {
        System.out.println("add failed: " + e.getMessage());
    }
    item = blockingDeque.remove();
    System.out.println(item);
}
```

Execution results

8. ConcurrentLinkedQueue (non-blocking singly-linked queue)

- ConcurrentLinkedQueue: a queue suited to high-concurrency scenarios. It is an unbounded, non-blocking, thread-safe queue built from linked nodes. Thread safety comes from CAS plus volatile rather than locks, and it provides no blocking operations. Elements are ordered FIFO, and null elements are not allowed.

- Because it never locks or blocks, ConcurrentLinkedQueue generally performs better than lock-based BlockingQueue implementations under high contention; the trade-off is that a consumer cannot wait for an element to arrive.

Usage

```java
// Unbounded queue
ConcurrentLinkedQueue<String> queue = new ConcurrentLinkedQueue<>();
queue.offer("Zhang San");
queue.offer("Li Si");
queue.offer("Wang Wu");
// Retrieve and remove the head element
System.out.println(queue.poll());
// Retrieve the head element without removing it
System.out.println(queue.peek());
// Current number of elements (not a constant-time operation, and only a snapshot under concurrent updates)
System.out.println(queue.size());
```
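The points above (lock-free thread safety, non-blocking reads, no nulls) can be checked with a minimal sketch assuming Java 8+; `ConcurrentLinkedQueueSketch` and its methods are hypothetical names. Several threads offer concurrently with no external locking and no element is lost; poll() on an empty queue returns null instead of waiting; offer(null) is rejected.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ConcurrentLinkedQueue;

public class ConcurrentLinkedQueueSketch {

    // Several threads offer concurrently without any external lock.
    static int concurrentOffers(int threads, int perThread) throws InterruptedException {
        ConcurrentLinkedQueue<Integer> queue = new ConcurrentLinkedQueue<>();
        List<Thread> workers = new ArrayList<>();
        for (int t = 0; t < threads; t++) {
            Thread w = new Thread(() -> {
                for (int i = 0; i < perThread; i++) {
                    queue.offer(i);
                }
            });
            workers.add(w);
            w.start();
        }
        for (Thread w : workers) {
            w.join();
        }
        return queue.size(); // exact here because all writers have finished
    }

    // Non-blocking: poll() on an empty queue returns null immediately.
    static Integer pollEmpty() {
        return new ConcurrentLinkedQueue<Integer>().poll();
    }

    // null elements are rejected with a NullPointerException.
    static boolean rejectsNull() {
        try {
            new ConcurrentLinkedQueue<String>().offer(null);
            return false;
        } catch (NullPointerException e) {
            return true;
        }
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(concurrentOffers(4, 1000)); // 4000
        System.out.println(pollEmpty());               // null
        System.out.println(rejectsNull());             // true
    }
}
```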

9. ConcurrentLinkedDeque (non-blocking doubly-linked deque)

ConcurrentLinkedDeque: an unbounded, non-blocking, thread-safe deque based on a doubly linked list. It works like ConcurrentLinkedQueue but allows insertion and removal at both ends.
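A short usage sketch of the double-ended operations, assuming Java 8+ (the class `ConcurrentLinkedDequeSketch` is a hypothetical name). Elements are added at either end, and the xxxFirst/xxxLast methods retrieve from the head or tail without ever blocking.

```java
import java.util.concurrent.ConcurrentLinkedDeque;

public class ConcurrentLinkedDequeSketch {

    static String demo() {
        ConcurrentLinkedDeque<String> deque = new ConcurrentLinkedDeque<>();
        deque.offerLast("B");            // [B]
        deque.offerFirst("A");           // [A, B]
        deque.offerLast("C");            // [A, B, C]
        String head = deque.pollFirst(); // removes A -> [B, C]
        String tail = deque.pollLast();  // removes C -> [B]
        return head + tail + deque.peekFirst();
    }

    // Like ConcurrentLinkedQueue, an empty deque returns null instead of blocking.
    static String pollEmpty() {
        return new ConcurrentLinkedDeque<String>().pollFirst();
    }

    public static void main(String[] args) {
        System.out.println(demo());      // ACB
        System.out.println(pollEmpty()); // null
    }
}
```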

10. Comparison

| Queue | Data structure | Bounded? | Thread safety | Typical use |
|---|---|---|---|---|
| ArrayBlockingQueue | Array | Bounded; the capacity is fixed once set | One ReentrantLock guards both put and take | Producer-consumer model, balancing processing speeds |
| LinkedBlockingQueue | Singly linked list | Configurable (default Integer.MAX_VALUE) | Two ReentrantLocks (one for put, one for take), so put and take can run concurrently | Producer-consumer model, balancing processing speeds |
| LinkedBlockingDeque | Doubly linked list | Configurable (default Integer.MAX_VALUE) | One ReentrantLock guards both put and take | Producer-consumer model, balancing processing speeds |
| PriorityBlockingQueue | Binary min-heap | Unbounded; grows automatically | One ReentrantLock guards both put and take; when the queue is empty, take waits on a Condition | Priority ordering, e.g. sending SMS verification codes first |
| SynchronousQueue | Singly linked list (fair mode) or stack (non-fair mode) | No capacity (0) | CAS; put and take block until they are paired | Handing data directly between threads |
| DelayQueue | PriorityQueue (binary min-heap) | Unbounded; grows automatically | One ReentrantLock guards both put and take; only one thread waits for the head element at a time, the others wait on a Condition | Closing timed-out connections, handling task timeouts |
| LinkedTransferQueue | Singly linked list | Unbounded | CAS | Preemption mode: take an element if one is available; otherwise reserve a slot until an element arrives, the wait times out, or the thread is interrupted |
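The boundedness column can be checked directly with `BlockingQueue.remainingCapacity()`. This is a minimal sketch assuming Java 8+; `BoundednessSketch` and its methods are hypothetical names. Unbounded queues report Integer.MAX_VALUE, SynchronousQueue always reports 0, and a full bounded queue rejects offer() instead of growing.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.LinkedTransferQueue;
import java.util.concurrent.PriorityBlockingQueue;
import java.util.concurrent.SynchronousQueue;

public class BoundednessSketch {

    // How many more elements can be added without blocking.
    static int remaining(BlockingQueue<String> queue) {
        return queue.remainingCapacity();
    }

    // A bounded queue rejects offer() once it is full.
    static boolean offerWhenFull() {
        BlockingQueue<String> queue = new ArrayBlockingQueue<>(1);
        queue.offer("first");
        return queue.offer("second"); // capacity 1 is already used
    }

    public static void main(String[] args) {
        System.out.println("ArrayBlockingQueue(2):   " + remaining(new ArrayBlockingQueue<>(2)));   // 2
        System.out.println("LinkedBlockingQueue():   " + remaining(new LinkedBlockingQueue<>()));   // 2147483647
        System.out.println("PriorityBlockingQueue(): " + remaining(new PriorityBlockingQueue<>())); // 2147483647
        System.out.println("SynchronousQueue():      " + remaining(new SynchronousQueue<>()));      // 0
        System.out.println("LinkedTransferQueue():   " + remaining(new LinkedTransferQueue<>()));   // 2147483647
        System.out.println("offer() when full:       " + offerWhenFull());                          // false
    }
}
```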