Sogou Labs: the search engine query log dataset (SogouQ) is a collection of web query logs covering part of the query requests and user clicks on the Sogou search engine over about one month (June 2008). It provides a benchmark corpus for researchers who analyze the behavior of Chinese search engine users.

Contents

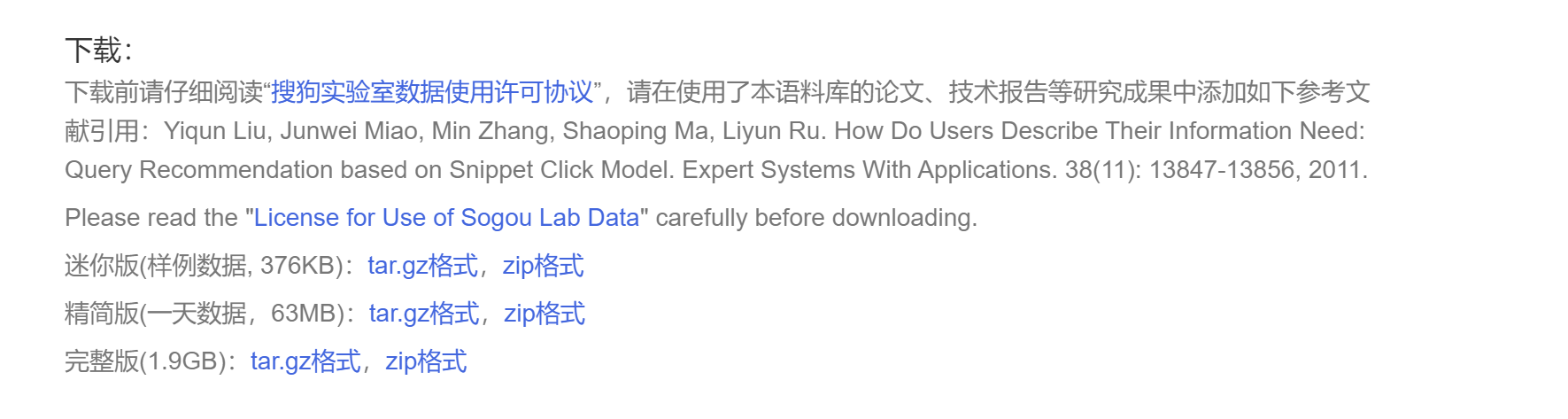

Sogou search log official website: http://www.sogou.com/labs/resource/q.php

Mini log download link: http://download.labs.sogou.com/dl/sogoulabdown/SogouQ/SogouQ.mini.zip

Note: for testing purposes, the mini version of the data is sufficient.

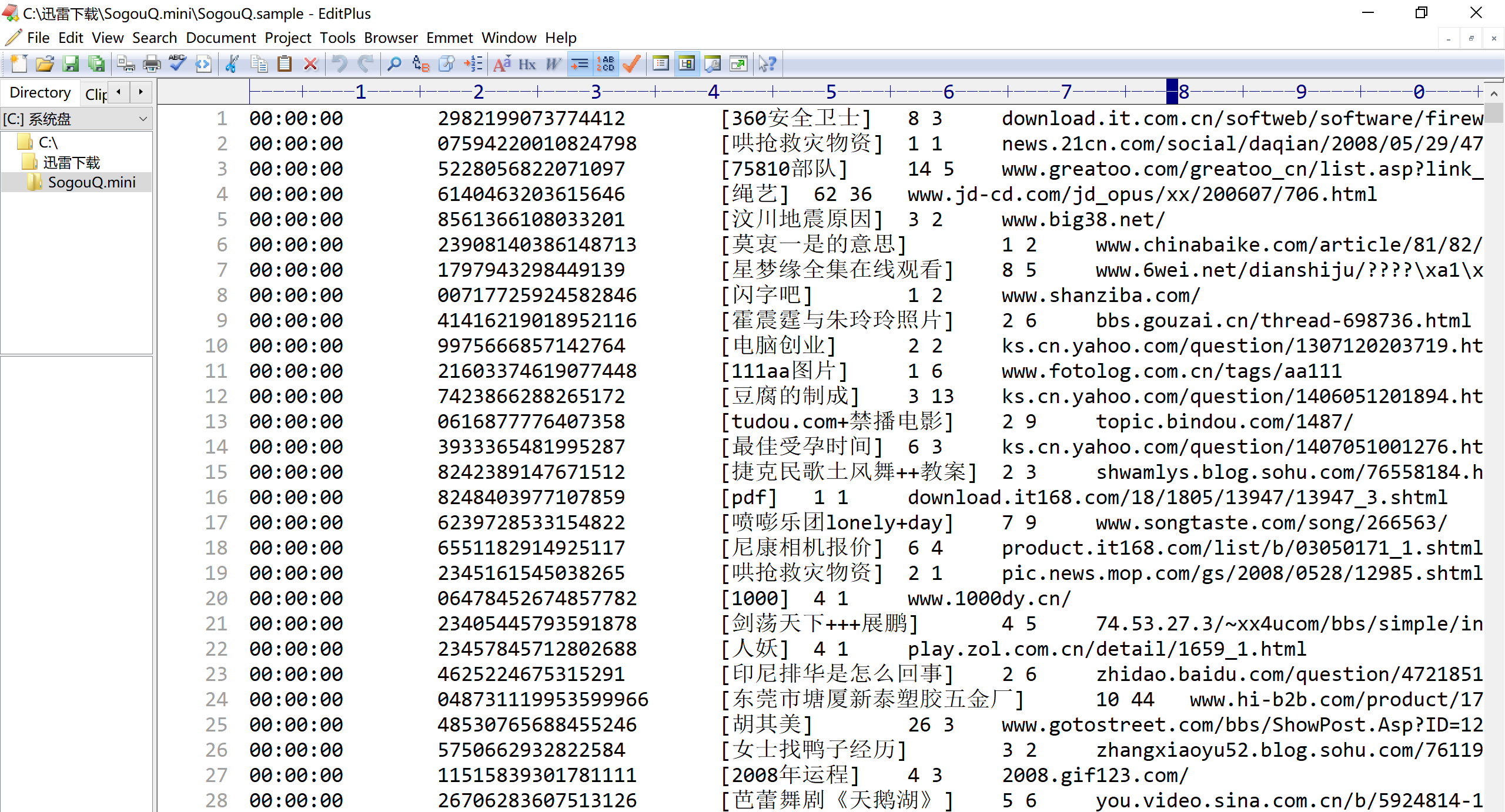

Raw data

Note: there are 10,000 raw records, and the fields are: access time\tuser ID\t[query term]\tranking of the URL in the returned results\tsequence number clicked by the user\tURL clicked by the user
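As a rough illustration of this layout, the sketch below splits one record into its six fields using only the Scala standard library. The names `LogLine` and `LogLineParser.parseLine` are hypothetical, and splitting on runs of whitespace is an assumption that tolerates either tabs or spaces between fields; it would mis-split a query that itself contains spaces.

```scala
import scala.util.Try

// Hypothetical container for the six fields described above.
final case class LogLine(
  time: String,     // access time, HH:mm:ss
  userId: String,   // user ID
  query: String,    // query term with the surrounding [ ] removed
  resultRank: Int,  // ranking of the URL in the returned results
  clickOrder: Int,  // sequence number clicked by the user
  url: String       // URL clicked by the user
)

object LogLineParser {
  // Returns None for lines that do not have exactly six fields
  // or whose two rank fields are not integers.
  def parseLine(line: String): Option[LogLine] =
    line.trim.split("\\s+") match {
      case Array(time, userId, query, rank, order, url) =>
        Try(
          LogLine(time, userId, query.stripPrefix("[").stripSuffix("]"),
            rank.toInt, order.toInt, url)
        ).toOption
      case _ => None
    }
}
```

Returning an `Option` lets a caller drop malformed lines with `flatMap` instead of failing on the first bad record.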

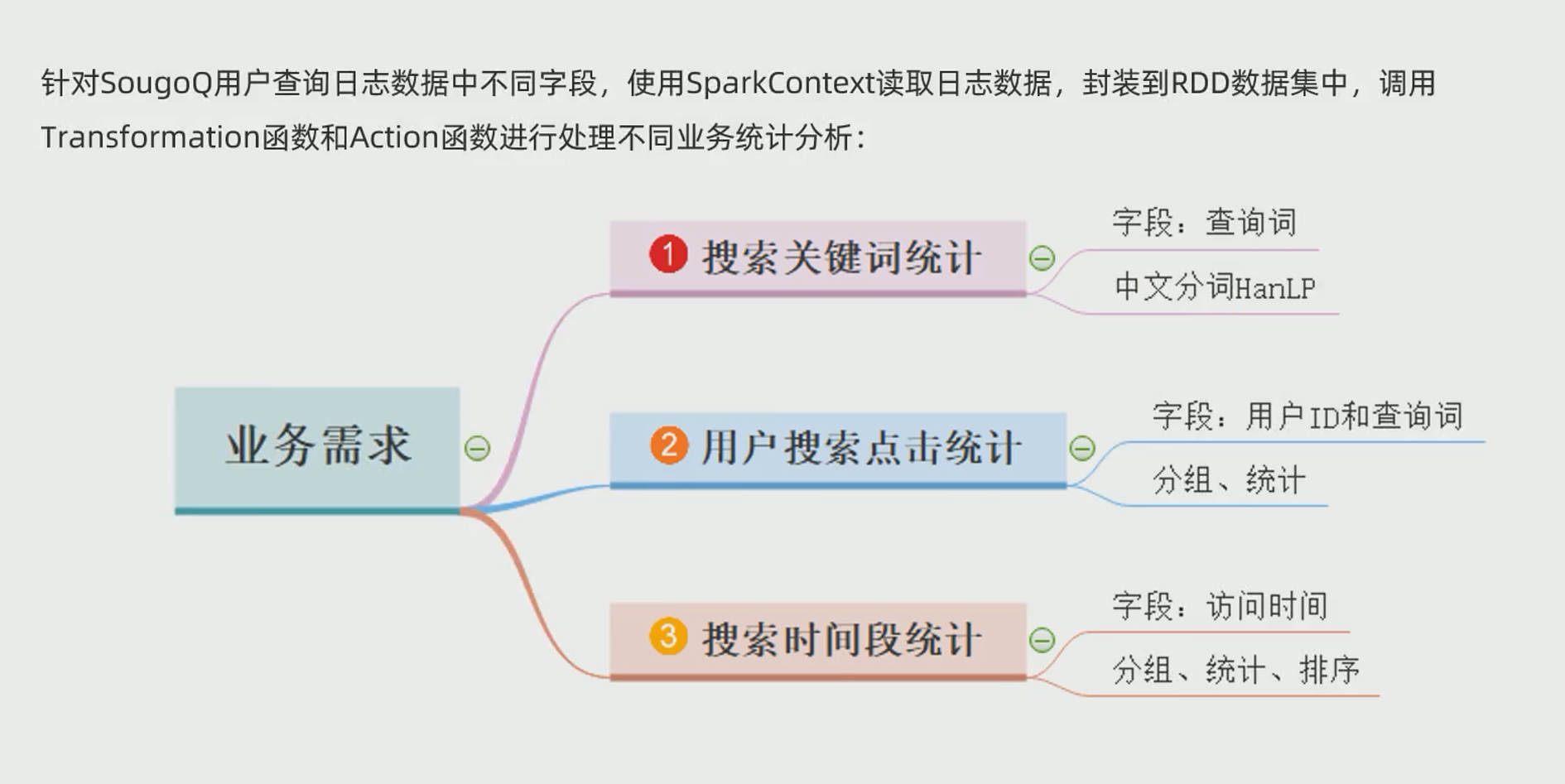

Business requirements

Requirement: segment the query terms in SougouSearchLog and compute the following metrics:

- Popular search terms

- Popular search terms per user (with user ID)

- Search volume in each time period

Business logic

Business logic: to analyze the different fields of the SogouQ user query log, use SparkContext to read the log data and wrap it in an RDD, then call transformation and action functions to compute each statistic.
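To make the transformation and action flow concrete without a Spark cluster, here is a minimal sketch on an in-memory `Seq`. It uses Scala collection methods that mirror the RDD operations: `flatMap`, `map`, a per-key sum standing in for `reduceByKey`, a descending sort, and `take`. The object name `TopTerms`, the helper `topN`, and the pre-segmented sample input are assumptions for illustration; the real job reads the log through `SparkContext` and segments queries with HanLP.

```scala
object TopTerms {
  // Counts terms and returns the n most frequent, highest count first.
  // Ties are broken alphabetically so the result is deterministic.
  def topN(queries: Seq[Seq[String]], n: Int): Seq[(String, Int)] =
    queries
      .flatMap(terms => terms)   // one element per term (like RDD.flatMap)
      .map(term => (term, 1))    // pair each term with a count of 1
      .groupBy(_._1)             // group the pairs by term
      .map { case (term, pairs) => (term, pairs.map(_._2).sum) } // stands in for reduceByKey(_ + _)
      .toSeq
      .sortBy { case (term, count) => (-count, term) }           // descending by count
      .take(n)                   // like RDD.take(n)
}
```

For example, `TopTerms.topN(Seq(Seq("360", "download"), Seq("360", "music"), Seq("music", "360")), 2)` yields `("360", 3)` followed by `("music", 2)`.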

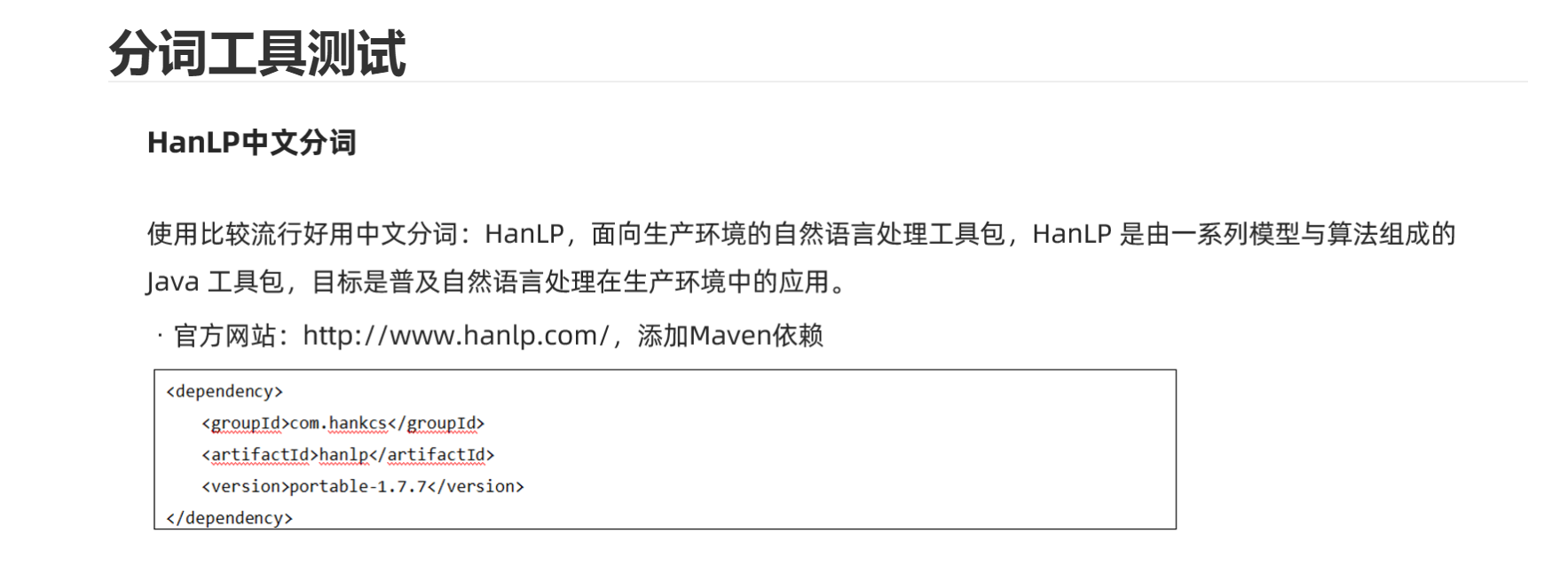

Word segmentation tool

HanLP official website: https://www.hanlp.com/

HanLP in brief: by its own description, HanLP is built on its latest technology, is trained on a general-purpose corpus on the order of 100 million entries, and is used through direct API calls, which keeps it simple and efficient.

Maven dependency

    <dependency>
        <groupId>com.hankcs</groupId>
        <artifactId>hanlp</artifactId>
        <version>portable-1.7.7</version>
    </dependency>
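For a project built with sbt rather than Maven, the same coordinates could be declared roughly as follows. This is a sketch that assumes a standard `build.sbt`; only the group, artifact, and version are taken from the Maven snippet.

```scala
// build.sbt (assumed sbt project layout)
// HanLP is a Java library, so a single % is used (no Scala version suffix).
libraryDependencies += "com.hankcs" % "hanlp" % "portable-1.7.7"
```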

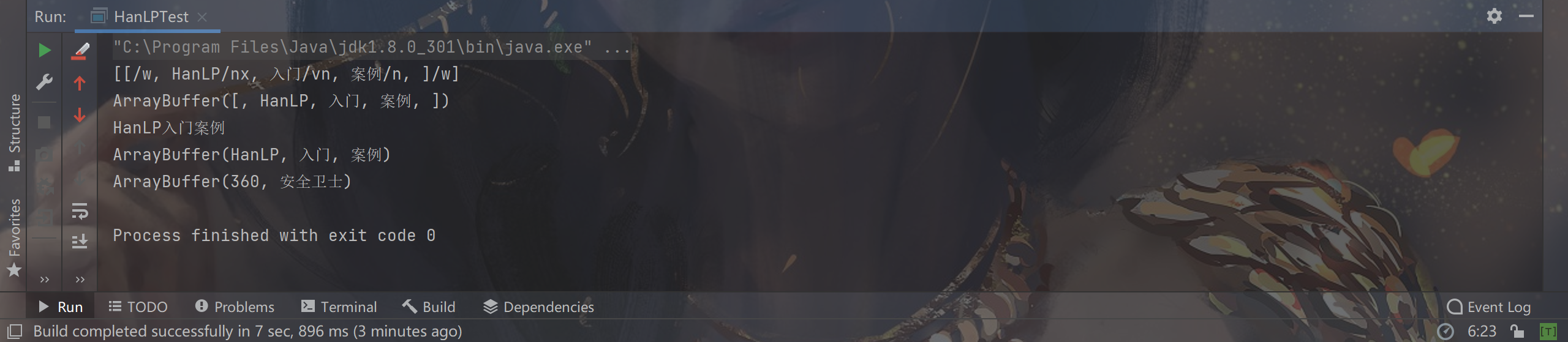

HanLP introduction case

package org.example.spark

import java.util

import com.hankcs.hanlp.HanLP

import com.hankcs.hanlp.seg.common.Term

/**

* Author tuomasi

* Desc HanLP Introductory case

*/

object HanLPTest {

def main(args: Array[String]): Unit = {

val words = "[HanLP Introductory case]"

val terms: util.List[Term] = HanLP.segment(words) //subsection

println(terms) //Directly print java list:[[/w, HanLP/nx, introduction / vn, case / N,] / w]

import scala.collection.JavaConverters._

println(terms.asScala.map(_.word)) //To scala's list:ArrayBuffer([, HanLP, introduction, case,])

val cleanWords1: String = words.replaceAll("\\[|\\]", "") //Replace "[" or "]" with empty "/" HanLP introduction case "

println(cleanWords1) //HanLP introduction case

println(HanLP.segment(cleanWords1).asScala.map(_.word)) //ArrayBuffer(HanLP, introduction, case)

val log = """00:00:00 2982199073774412 [360 Security guard] 8 3 download.it.com.cn/softweb/software/firewall/antivirus/20067/17938.html"""

val cleanWords2 = log.split("\\s+")(2) //[360 security guard]

.replaceAll("\\[|\\]", "") //360 security guard

println(HanLP.segment(cleanWords2).asScala.map(_.word)) //ArrayBuffer(360, security guard)

}

}Console printing effect

Code implementation

    package org.example.spark

    import com.hankcs.hanlp.HanLP
    import org.apache.spark.rdd.RDD
    import org.apache.spark.{SparkConf, SparkContext}

    import scala.collection.JavaConverters._ // convert Java collections to Scala collections
    import scala.collection.mutable

    /**
     * Author tuomasi
     * Desc Requirement: segment SougouSearchLog and compute the following metrics:
     * 1. Popular search terms
     * 2. Popular search terms per user (with user ID)
     * 3. Search volume in each time period
     */
    object SougouSearchLogAnalysis {
      def main(args: Array[String]): Unit = {
        // TODO 0. Prepare the environment
        val conf: SparkConf = new SparkConf().setAppName("spark").setMaster("local[*]")
        val sc: SparkContext = new SparkContext(conf)
        sc.setLogLevel("WARN")

        // TODO 1. Load data
        val lines: RDD[String] = sc.textFile("data/input/SogouQ.sample")

        // TODO 2. Process data
        // Wrap each line in a SogouRecord
        val sogouRecordRDD: RDD[SogouRecord] = lines
          .filter(line => line.trim.split("\\s+").length == 6) // skip lines that do not have six fields
          .map(line => { // map: one element in, one element out
            val arr: Array[String] = line.trim.split("\\s+")
            SogouRecord(arr(0), arr(1), arr(2), arr(3).toInt, arr(4).toInt, arr(5))
          })

        // Segment the query terms
        /* With map, each record would yield one buffer of terms instead:
        val wordsRDD0: RDD[mutable.Buffer[String]] = sogouRecordRDD.map(record => {
          val wordsStr: String = record.queryWords.replaceAll("\\[|\\]", "") // 360安全卫士
          HanLP.segment(wordsStr).asScala.map(_.word) // ArrayBuffer(360, 安全卫士)
        })*/
        val wordsRDD: RDD[String] = sogouRecordRDD.flatMap(record => { // flatMap flattens: 360安全卫士 ==> [360, 安全卫士]
          val wordsStr: String = record.queryWords.replaceAll("\\[|\\]", "") // 360安全卫士
          HanLP.segment(wordsStr).asScala.map(_.word) // ArrayBuffer(360, 安全卫士)
        })

        // TODO 3. Compute the metrics
        // --1. Popular search terms
        val result1: Array[(String, Int)] = wordsRDD
          .filter(word => word != "." && word != "+") // drop punctuation tokens
          .map((_, 1))
          .reduceByKey(_ + _)
          .sortBy(_._2, ascending = false)
          .take(10)

        // --2. Popular search terms per user (with user ID)
        val userIdAndWordRDD: RDD[(String, String)] = sogouRecordRDD.flatMap(record => {
          val wordsStr: String = record.queryWords.replaceAll("\\[|\\]", "") // 360安全卫士
          val words: mutable.Buffer[String] = HanLP.segment(wordsStr).asScala.map(_.word) // ArrayBuffer(360, 安全卫士)
          val userId: String = record.userId
          words.map(word => (userId, word))
        })
        val result2: Array[((String, String), Int)] = userIdAndWordRDD
          .filter(t => t._2 != "." && t._2 != "+")
          .map((_, 1))
          .reduceByKey(_ + _)
          .sortBy(_._2, ascending = false)
          .take(10)

        // --3. Search volume in each time period (per-minute buckets, HH:mm)
        val result3: Array[(String, Int)] = sogouRecordRDD.map(record => {
          val timeStr: String = record.queryTime
          val hourAndMinuteStr: String = timeStr.substring(0, 5)
          (hourAndMinuteStr, 1)
        }).reduceByKey(_ + _)
          .sortBy(_._2, ascending = false)
          .take(10)

        // TODO 4. Output results
        result1.foreach(println)
        result2.foreach(println)
        result3.foreach(println)

        // TODO 5. Release resources
        sc.stop()
      }

      /**
       * Case class wrapping one user search/click record
       *
       * @param queryTime  Access time, format: HH:mm:ss
       * @param userId     User ID
       * @param queryWords Query words
       * @param resultRank The ranking of the URL in the returned results
       * @param clickRank  Sequence number clicked by the user
       * @param clickUrl   URL clicked by the user
       */
      case class SogouRecord(
        queryTime: String,
        userId: String,
        queryWords: String,
        resultRank: Int,
        clickRank: Int,
        clickUrl: String
      )
    }

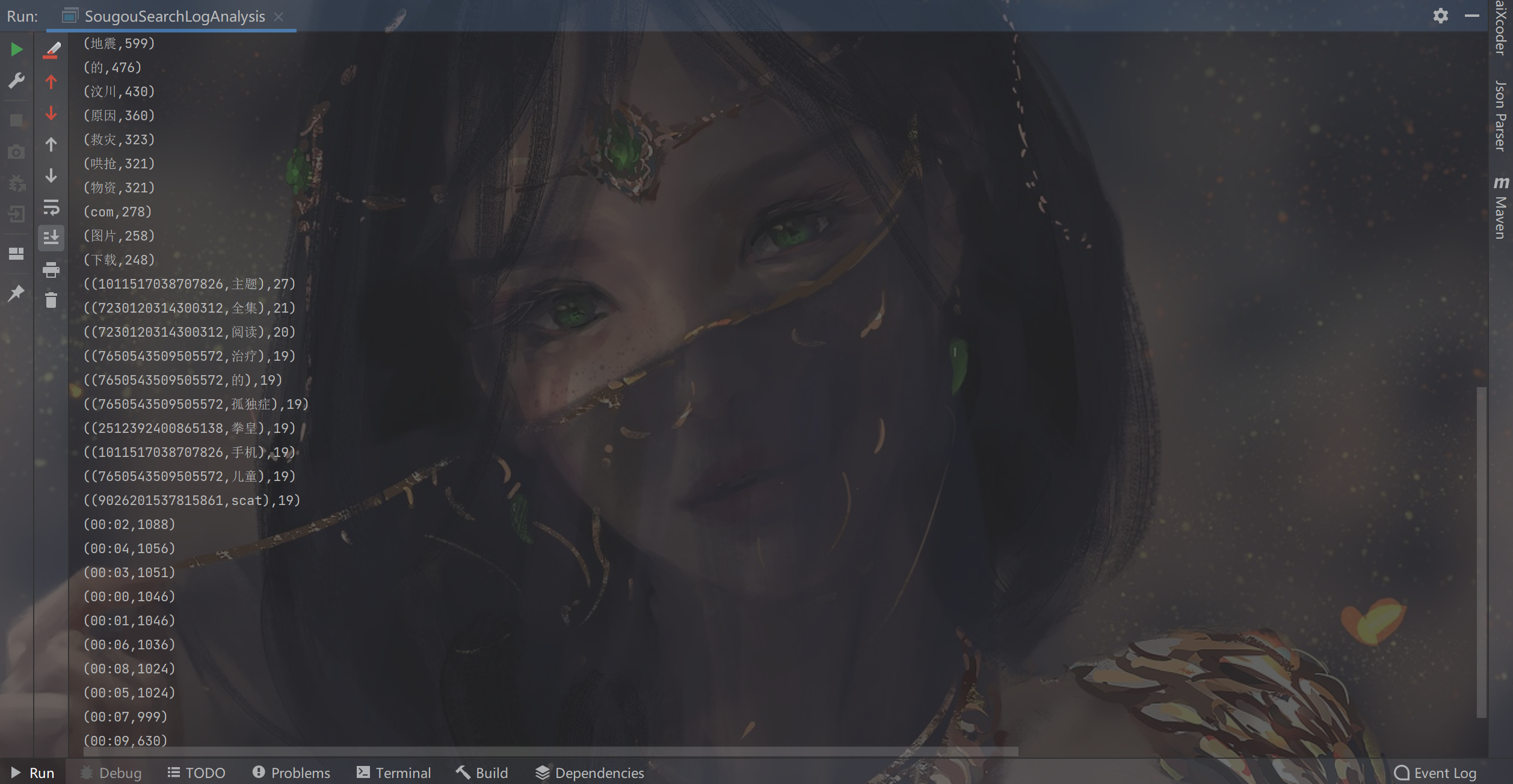
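The third metric groups records by the first five characters of the access time, which yields per-minute buckets (`HH:mm`). As a sketch of how the bucket width could be varied, the snippet below counts searches per hour or per minute on an in-memory list. `TimeBuckets`, `bucketOf`, and `countByBucket` are hypothetical names, and the input is assumed to be well-formed `HH:mm:ss` strings.

```scala
object TimeBuckets {
  // width = 2 keeps "HH" (hourly buckets); width = 5 keeps "HH:mm" (per-minute buckets).
  def bucketOf(time: String, width: Int): String = time.take(width)

  // Counts how many timestamps fall into each bucket, busiest bucket first.
  // Ties are ordered by bucket label so the output is deterministic.
  def countByBucket(times: Seq[String], width: Int): Seq[(String, Int)] =
    times
      .groupBy(bucketOf(_, width))
      .map { case (bucket, ts) => (bucket, ts.size) }
      .toSeq
      .sortBy { case (bucket, count) => (-count, bucket) }
}
```

In the Spark job, the equivalent change would be to take two characters instead of five before `reduceByKey`, which gives hourly rather than per-minute search volume.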

Results

Note: SougouSearchLog has been segmented and the following metrics computed: popular search terms, popular search terms per user (with user ID), and search volume in each time period. The output is broadly consistent with what was expected.
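The job filters out only the tokens `.` and `+` before counting. A slightly more general filter, sketched here under the assumption that any token without a letter or digit is noise, could look like this; `TermFilter` and `isMeaningful` are hypothetical names and not part of HanLP or Spark.

```scala
object TermFilter {
  // Keeps a token only if it contains at least one letter or digit.
  // Char.isLetterOrDigit is Unicode-aware, so Chinese characters count as letters.
  def isMeaningful(token: String): Boolean = token.exists(_.isLetterOrDigit)
}
```

It could be passed to the existing `filter` calls in place of the two explicit comparisons, for example `wordsRDD.filter(TermFilter.isMeaningful)`. Whether dropping every punctuation-only token is desirable depends on the analysis.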