Functions to be used:

1. Mat getStructuringElement(int shape, Size esize, Point anchor = Point(-1, -1));

The first parameter of this function represents the shape of the kernel. There are three shapes to choose from.

Rectangle: MORPH_RECT;

Cross shape: MORPH_CROSS;

Ellipse: MORPH_ELLIPSE;

The second and third parameters are the size of the kernel and the position of the anchor, respectively. Typically, before calling erode or dilate, you define a Mat variable to hold the return value of getStructuringElement. The anchor defaults to Point(-1, -1), which means the anchor is at the center of the kernel. Only the shape of a cross-shaped element depends on the anchor position; for the other shapes, the anchor only affects how far the result of the morphological operation is shifted.
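To make the role of the anchor concrete, here is a minimal plain-C++ sketch that models how a rectangular and a cross-shaped kernel could be filled in. It is not OpenCV's implementation: KernelShape and makeKernel are hypothetical names introduced only for this illustration, and the ellipse case is left out.

```cpp
#include <vector>

// Hypothetical stand-ins for MORPH_RECT and MORPH_CROSS (not the OpenCV constants).
enum class KernelShape { Rect, Cross };

// Builds a rows x cols kernel of 0/1 values.
// An anchor coordinate of -1 means "use the center", mirroring Point(-1, -1).
std::vector<std::vector<int>> makeKernel(KernelShape shape, int rows, int cols,
                                         int anchorRow = -1, int anchorCol = -1) {
    if (anchorRow < 0) anchorRow = rows / 2;
    if (anchorCol < 0) anchorCol = cols / 2;
    std::vector<std::vector<int>> kernel(rows, std::vector<int>(cols, 0));
    for (int r = 0; r < rows; ++r) {
        for (int c = 0; c < cols; ++c) {
            if (shape == KernelShape::Rect) {
                kernel[r][c] = 1;  // every cell is set, wherever the anchor is
            } else {
                // Cross: only the anchor's row and the anchor's column are set.
                kernel[r][c] = (r == anchorRow || c == anchorCol) ? 1 : 0;
            }
        }
    }
    return kernel;
}
```

Calling makeKernel(KernelShape::Cross, 3, 3) gives a plus sign through the middle, while makeKernel(KernelShape::Cross, 3, 3, 0, 0) moves the arms to the first row and first column. The rectangular kernel is identical in both cases.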


2. morphologyEx (morphological operation)

void morphologyEx( InputArray src, OutputArray dst,int op, InputArray kernel,Point anchor = Point(-1,-1), int iterations = 1,int borderType = BORDER_CONSTANT,const Scalar& borderValue = morphologyDefaultBorderValue() )

Parameter interpretation

src: source image Mat object

dst: target image Mat object

op: the type of morphological operation. The source code defines the following values:

enum MorphTypes{

MORPH_ERODE = 0, // erosion

MORPH_DILATE = 1, // dilation

MORPH_OPEN = 2, // opening (internally, erosion followed by dilation)

MORPH_CLOSE = 3, // closing (dilation followed by erosion)

MORPH_GRADIENT = 4, // morphological gradient (dilation minus erosion)

MORPH_TOPHAT = 5, // top hat (source minus opening)

MORPH_BLACKHAT = 6, // black hat (closing minus source)

MORPH_HITMISS = 7 // hit-or-miss transform

};

kernel: the structuring element used for the operation. If Mat() is passed, a 3 x 3 rectangular structuring element is used by default. You can create one with getStructuringElement().

anchor: the reference point within the kernel. The default, (-1, -1), places it at the kernel center.

iterations: the number of times erosion and dilation are applied. The default is 1.

borderType: the border extrapolation type. The default is BORDER_CONSTANT.

borderValue: the border value used with a constant border. The default is usually sufficient.
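The relationship between the operation types is easier to see on a tiny binary image. The sketch below is plain C++ rather than OpenCV: Grid, morph3x3, erode3x3, dilate3x3, openOp and closeOp are hypothetical names, the structuring element is fixed to a 3 x 3 square, and pixels outside the image are simply skipped (an assumption made here so the border never changes the result).

```cpp
#include <vector>

using Grid = std::vector<std::vector<int>>;

// Applies a 3 x 3 square structuring element to a 0/1 image.
// takeMin = true gives erosion (minimum over the neighborhood),
// takeMin = false gives dilation (maximum over the neighborhood).
// Neighbors outside the image are skipped.
Grid morph3x3(const Grid& src, bool takeMin) {
    const int rows = static_cast<int>(src.size());
    const int cols = rows > 0 ? static_cast<int>(src[0].size()) : 0;
    Grid dst(rows, std::vector<int>(cols, 0));
    for (int r = 0; r < rows; ++r) {
        for (int c = 0; c < cols; ++c) {
            int value = src[r][c];
            for (int dr = -1; dr <= 1; ++dr) {
                for (int dc = -1; dc <= 1; ++dc) {
                    const int rr = r + dr, cc = c + dc;
                    if (rr < 0 || rr >= rows || cc < 0 || cc >= cols) continue;
                    if (takeMin) value = src[rr][cc] < value ? src[rr][cc] : value;
                    else         value = src[rr][cc] > value ? src[rr][cc] : value;
                }
            }
            dst[r][c] = value;
        }
    }
    return dst;
}

Grid erode3x3(const Grid& g)  { return morph3x3(g, true); }
Grid dilate3x3(const Grid& g) { return morph3x3(g, false); }
Grid openOp(const Grid& g)    { return dilate3x3(erode3x3(g)); }  // erosion, then dilation
Grid closeOp(const Grid& g)   { return erode3x3(dilate3x3(g)); }  // dilation, then erosion
```

With these definitions, closeOp fills a single-pixel hole in a white region, and openOp removes a single isolated white pixel. That is why closing is a reasonable choice for cleaning small spots out of a binarized document.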

3. Rect boundingRect(InputArray points)

points: the input point set, which can be a vector of points or a Mat.

Returns the smallest upright (axis-aligned) rectangle that contains all the input points.
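As a rough illustration of what "smallest upright rectangle" means, the following plain-C++ sketch computes it from the minimum and maximum coordinates. Pt, Box and boundingBox are hypothetical names for this example only, and the +1 in the width and height assumes the inclusive pixel convention for integer points.

```cpp
#include <algorithm>
#include <vector>

struct Pt  { int x; int y; };
struct Box { int x; int y; int width; int height; };

// Returns the axis-aligned box that contains every point in pts.
// An empty input yields an all-zero box.
Box boundingBox(const std::vector<Pt>& pts) {
    if (pts.empty()) return Box{0, 0, 0, 0};
    int minX = pts[0].x, maxX = pts[0].x;
    int minY = pts[0].y, maxY = pts[0].y;
    for (const Pt& p : pts) {
        minX = std::min(minX, p.x);
        maxX = std::max(maxX, p.x);
        minY = std::min(minY, p.y);
        maxY = std::max(maxY, p.y);
    }
    // Inclusive pixel extents: a single point has width 1 and height 1.
    return Box{minX, minY, maxX - minX + 1, maxY - minY + 1};
}
```

For the points (2, 3), (7, 5) and (4, 9) this gives a box at (2, 3) with width 6 and height 7.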

4. void HoughLinesP(InputArray image, OutputArray lines, double rho, double theta, int threshold, double minLineLength = 0, double maxLineGap = 0)

Purpose: extract line segments from the input image according to the given parameters and store them in lines.

The first parameter, image, of type InputArray, is the input image. It must be an 8-bit, single-channel binary image.

The second parameter, lines, of type OutputArray, receives the detected line segments. Each segment is a four-element vector (x_1, y_1, x_2, y_2), where (x_1, y_1) and (x_2, y_2) are its end points.

The third parameter, rho, is the distance resolution of the accumulator, in pixels.

The fourth parameter, theta, is the angle resolution of the accumulator, in radians. Put another way, it is the angular step used when searching for lines.

The fifth parameter, threshold, is the accumulator threshold: only lines that receive more votes than this value are returned.

The sixth parameter, minLineLength, defaults to 0 and sets the minimum segment length. Segments shorter than this are rejected.

The seventh parameter, maxLineGap, defaults to 0 and sets the maximum allowed gap between points on the same line for them to be linked into one segment.
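To show how rho, theta and threshold interact, here is a small plain-C++ sketch of accumulator voting. Note that this is the standard Hough transform, not the probabilistic variant that HoughLinesP implements, and it does not produce segment end points. PixelPt, HoughPeak and strongestLine are hypothetical names; the rho step is fixed at 1 pixel and the theta step at 1 degree (pi / 180).

```cpp
#include <cmath>
#include <vector>

struct PixelPt   { int x; int y; };
struct HoughPeak { int thetaDeg; int rho; int votes; };

// Votes every point into a (theta, rho) accumulator using
// rho = x * cos(theta) + y * sin(theta), then returns the strongest cell.
// maxRho must be at least the largest distance of any point from the origin.
HoughPeak strongestLine(const std::vector<PixelPt>& pts, int maxRho) {
    const double kPi = std::acos(-1.0);
    const int thetaBins = 180;           // theta resolution: pi / 180
    const int rhoBins = 2 * maxRho + 1;  // rho resolution: 1 pixel, range [-maxRho, maxRho]
    std::vector<int> acc(static_cast<std::size_t>(thetaBins) * rhoBins, 0);
    for (const PixelPt& p : pts) {
        for (int t = 0; t < thetaBins; ++t) {
            const double theta = t * kPi / 180.0;
            const double rho = p.x * std::cos(theta) + p.y * std::sin(theta);
            const int r = static_cast<int>(std::lround(rho)) + maxRho;
            if (r >= 0 && r < rhoBins) ++acc[static_cast<std::size_t>(t) * rhoBins + r];
        }
    }
    HoughPeak best{0, 0, 0};
    for (int t = 0; t < thetaBins; ++t) {
        for (int r = 0; r < rhoBins; ++r) {
            const int votes = acc[static_cast<std::size_t>(t) * rhoBins + r];
            if (votes > best.votes) best = HoughPeak{t, r - maxRho, votes};
        }
    }
    return best;
}
```

A caller would keep the returned line only if its votes exceed the chosen threshold. A coarser rho or theta step merges nearby lines into one cell, while a finer step spreads the votes of a slightly noisy line over several cells.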

The approach is to find the coordinates of the four corner points of the target and use them to compute the perspective transformation.

Two main functions:

getPerspectiveTransform( InputArray src, InputArray dst );// Get perspective transformation matrix

warpPerspective( InputArray src, OutputArray dst, InputArray M, Size dsize,

int flags = INTER_LINEAR, int borderMode = BORDER_CONSTANT,

const Scalar& borderValue = Scalar());// Perspective transformation
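For intuition about what the transformation matrix contains, the sketch below solves for a 3 x 3 matrix from four point pairs and applies it to a point. It is a plain-C++ illustration of the underlying linear system, not OpenCV's implementation. Vec2, Mat3, solveHomography and applyHomography are hypothetical names, and the last matrix element is fixed to 1.

```cpp
#include <array>
#include <cmath>
#include <utility>

struct Vec2 { double x; double y; };
using Mat3 = std::array<double, 9>;  // row-major 3 x 3 matrix

// Solves for H (with H[8] fixed to 1) such that H maps src[i] to dst[i].
// Returns false for degenerate input, e.g. three collinear points.
bool solveHomography(const std::array<Vec2, 4>& src,
                     const std::array<Vec2, 4>& dst, Mat3& H) {
    double a[8][9] = {};
    for (int i = 0; i < 4; ++i) {
        const double x = src[i].x, y = src[i].y, u = dst[i].x, v = dst[i].y;
        // From u = (h0*x + h1*y + h2) / (h6*x + h7*y + 1), and likewise for v.
        const double rowU[9] = {x, y, 1.0, 0.0, 0.0, 0.0, -u * x, -u * y, u};
        const double rowV[9] = {0.0, 0.0, 0.0, x, y, 1.0, -v * x, -v * y, v};
        for (int j = 0; j < 9; ++j) {
            a[2 * i][j] = rowU[j];
            a[2 * i + 1][j] = rowV[j];
        }
    }
    // Gauss-Jordan elimination with partial pivoting on the 8 x 9 augmented matrix.
    for (int col = 0; col < 8; ++col) {
        int pivot = col;
        for (int r = col + 1; r < 8; ++r)
            if (std::fabs(a[r][col]) > std::fabs(a[pivot][col])) pivot = r;
        if (std::fabs(a[pivot][col]) < 1e-12) return false;
        for (int j = 0; j < 9; ++j) std::swap(a[col][j], a[pivot][j]);
        for (int r = 0; r < 8; ++r) {
            if (r == col) continue;
            const double f = a[r][col] / a[col][col];
            for (int j = col; j < 9; ++j) a[r][j] -= f * a[col][j];
        }
    }
    for (int i = 0; i < 8; ++i) H[i] = a[i][8] / a[i][i];
    H[8] = 1.0;
    return true;
}

// Maps a point through H, including the division by the third coordinate.
Vec2 applyHomography(const Mat3& H, Vec2 p) {
    const double w = H[6] * p.x + H[7] * p.y + H[8];
    return Vec2{(H[0] * p.x + H[1] * p.y + H[2]) / w,
                (H[3] * p.x + H[4] * p.y + H[5]) / w};
}
```

The division by w in applyHomography is what distinguishes a perspective transformation from an affine one: when H[6] and H[7] are zero, w is 1 and the mapping reduces to an affine transform.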

General operation process:

(1) Convert to grayscale, binarize, and apply morphological operations to form connected regions

(2) Find the contours and draw the contour of the target

(3) Detect lines in the drawn contour

(4) Identify the four edges and compute the four corners

(5) Apply the perspective transformation to get the result

#include<iostream>

#include <opencv2/opencv.hpp>

using namespace cv;

using namespace std;

int main()

{

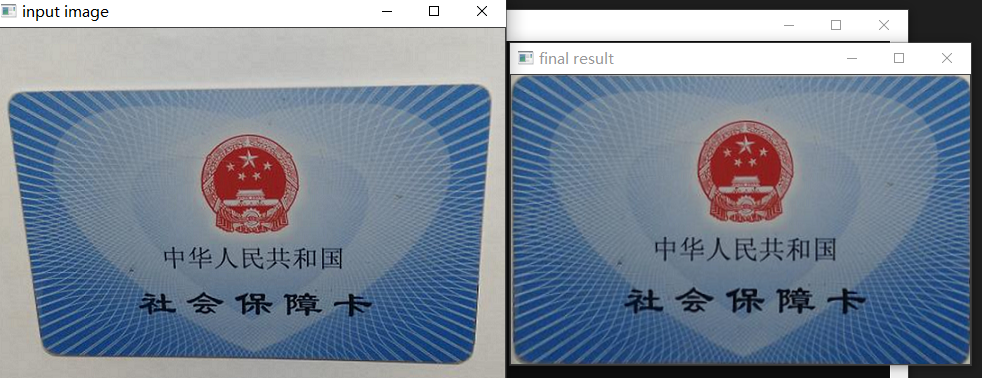

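Mat src = imread("D://RM//OpenCv learning//perspective transformation//11.png");

if (src.empty()) { cout << "could not load image" << endl; return -1; } // stop early if the file is missing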

imshow("input image", src);

// Convert BGR to grayscale

Mat src_gray;

cvtColor(src, src_gray, COLOR_BGR2GRAY);

// Binarize with Otsu's threshold

Mat binary;

threshold(src_gray, binary, 0, 255, THRESH_BINARY_INV | THRESH_OTSU); // THRESH_BINARY_INV inverts the result after thresholding

//imshow("binary", binary); // the binary image still contains small spots

// Morphological closing fills the small spots

Mat morhp_img;

Mat kernel = getStructuringElement(MORPH_RECT, Size(5, 5), Point(-1, -1));

morphologyEx(binary, morhp_img, MORPH_CLOSE, kernel, Point(-1, -1), 3);

//imshow("morphology", morhp_img);

Mat dst;

bitwise_not(morhp_img, dst); // invert the image

imshow("dst", dst);

//Contour discovery

vector<vector<Point>> contous;

vector<Vec4i> hireachy;

findContours(dst, contous, hireachy, RETR_TREE, CHAIN_APPROX_SIMPLE, Point());

cout << "contous.size:" << contous.size() << endl;

//Contour drawing

int width = src.cols;

int height = src.rows;

Mat drawImage = Mat::zeros(src.size(), CV_8UC3);

cout << contous.size() << endl;

for (size_t t = 0; t < contous.size(); t++)

{

Rect rect = boundingRect(contous[t]);

if (rect.width > width / 2 && rect.height > height / 2 && rect.width < width - 5 && rect.height < height - 5)

{

drawContours(drawImage, contous, static_cast<int>(t), Scalar(0, 0, 255), 2, 8, hireachy, 0, Point(0, 0));

}

}

imshow("contours", drawImage);//Show found contours

//Line detection

vector<Vec4i> lines;

Mat contoursImg;

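int accu = static_cast<int>(min(width * 0.5, height * 0.5)); // half the shorter image side; used below as both the vote threshold and the minimum line length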

cvtColor(drawImage, contoursImg, COLOR_BGR2GRAY);

imshow("contours gray", contoursImg); // separate window name so the earlier "contours" window is not overwritten

Mat linesImage = Mat::zeros(src.size(), CV_8UC3);

HoughLinesP(contoursImg, lines, 1, CV_PI / 180.0, accu, accu, 0);

for (size_t t = 0; t < lines.size(); t++)

{

Vec4i ln = lines[t];

line(linesImage, Point(ln[0], ln[1]), Point(ln[2], ln[3]), Scalar(0, 0, 255), 2, 8, 0);//draw a straight line

}

cout << "number of lines:" << lines.size() << endl;

imshow("linesImages", linesImage);

//Find and locate four straight lines up, down, left and right

int deltah = 0; //Height difference

int deltaw = 0; //Width difference

Vec4i topLine, bottomLine; //Line definition

Vec4i rightLine, leftLine;

for (size_t i = 0; i < lines.size(); i++)

{

Vec4i ln = lines[i];

deltah = abs(ln[3] - ln[1]); //Calculated height difference (y2-y1)

//topLine

if (ln[3] < height / 2.0 && ln[1] < height / 2.0 && deltah < accu - 1)

{

topLine = lines[i];

}

//bottomLine

if (ln[3] > height / 2.0 && ln[1] > height / 2.0 && deltah < accu - 1)

{

bottomLine = lines[i];

}

deltaw = abs(ln[2] - ln[0]); //Calculated width difference (x2-x1)

//leftLine

if (ln[0] < width / 2.0 && ln[2] < width / 2.0 && deltaw < accu - 1)

{

leftLine = lines[i];

}

//rightLine

if (ln[0] > width / 2.0 && ln[2] > width / 2.0 && deltaw < accu - 1)

{

rightLine = lines[i];

}

}

// Prints the coordinates of the four lines

cout << "topLine : p1(x,y)= " << topLine[0] << "," << topLine[1] << "; p2(x,y)= " << topLine[2] << "," << topLine[3] << endl;

cout << "bottomLine : p1(x,y)= " << bottomLine[0] << "," << bottomLine[1] << "; p2(x,y)= " << bottomLine[2] << "," << bottomLine[3] << endl;

cout << "leftLine : p1(x,y)= " << leftLine[0] << "," << leftLine[1] << "; p2(x,y)= " << leftLine[2] << "," << leftLine[3] << endl;

cout << "rightLine : p1(x,y)= " << rightLine[0] << "," << rightLine[1] << "; p2(x,y)= " << rightLine[2] << "," << rightLine[3] << endl;

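// Express each line as y = k * x + c. This assumes no line is exactly vertical (x1 != x2); otherwise k is undefined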

float k1, k2, k3, k4, c1, c2, c3, c4;

k1 = float(topLine[3] - topLine[1]) / float(topLine[2] - topLine[0]);

c1 = topLine[1] - k1 * topLine[0];

k2 = float(bottomLine[3] - bottomLine[1]) / float(bottomLine[2] - bottomLine[0]);

c2 = bottomLine[1] - k2 * bottomLine[0];

k3 = float(leftLine[3] - leftLine[1]) / float(leftLine[2] - leftLine[0]);

c3 = leftLine[1] - k3 * leftLine[0];

k4 = float(rightLine[3] - rightLine[1]) / float(rightLine[2] - rightLine[0]);

c4 = rightLine[1] - k4 * rightLine[0];

// Find the four corners: lines y = ka * x + ca and y = kb * x + cb intersect at x = (ca - cb) / (kb - ka)

Point p1; // top-left corner: topLine and leftLine

p1.x = static_cast<int>((c1 - c3) / (k3 - k1));

p1.y = static_cast<int>(k1 * p1.x + c1);

Point p2; // top-right corner: topLine and rightLine

p2.x = static_cast<int>((c1 - c4) / (k4 - k1));

p2.y = static_cast<int>(k1 * p2.x + c1);

Point p3; // bottom-left corner: bottomLine and leftLine

p3.x = static_cast<int>((c2 - c3) / (k3 - k2));

p3.y = static_cast<int>(k2 * p3.x + c2);

Point p4; // bottom-right corner: bottomLine and rightLine

p4.x = static_cast<int>((c2 - c4) / (k4 - k2));

p4.y = static_cast<int>(k2 * p4.x + c2);

cout << "Point p1: (" << p1.x << "," << p1.y << ")" << endl;

cout << "Point p2: (" << p2.x << "," << p2.y << ")" << endl;

cout << "Point p3: (" << p3.x << "," << p3.y << ")" << endl;

cout << "Point p4: (" << p4.x << "," << p4.y << ")" << endl;

//Show four points

circle(linesImage, p1, 2, Scalar(0, 255, 0), 2);

circle(linesImage, p2, 2, Scalar(0, 255, 0), 2);

circle(linesImage, p3, 2, Scalar(0, 255, 0), 2);

circle(linesImage, p4, 2, Scalar(0, 255, 0), 2);

imshow("find four points", linesImage);

//Perspective transformation

vector<Point2f> src_corners(4);

src_corners[0] = p1;

src_corners[1] = p2;

src_corners[2] = p3;

src_corners[3] = p4;

Mat result_images = Mat::zeros(static_cast<int>(height * 0.7), static_cast<int>(width * 0.9), CV_8UC3);

vector<Point2f> dst_corners(4);

dst_corners[0] = Point(0, 0);

dst_corners[1] = Point(result_images.cols, 0);

dst_corners[2] = Point(0, result_images.rows);

dst_corners[3] = Point(result_images.cols, result_images.rows);

Mat warpmatrix = getPerspectiveTransform(src_corners, dst_corners); //Get perspective transformation matrix

warpPerspective(src, result_images, warpmatrix, result_images.size()); //Perspective transformation

imshow("final result", result_images);

imwrite("D:/images/warpPerspective.png", result_images);

waitKey(0);

return 0;

}
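The slope-intercept form used for the corner computation breaks down when an edge is exactly vertical, because the slope becomes infinite. As a possible alternative (a swapped-in technique, not what the listing above does), two lines can be intersected directly from their end points using cross products. Seg, Pt2 and intersectLines are hypothetical names for this plain-C++ sketch.

```cpp
#include <cmath>

struct Seg { double x1, y1, x2, y2; };  // same layout as a Vec4i line: (x1, y1, x2, y2)
struct Pt2 { double x, y; };

// Intersects the infinite lines through segments a and b.
// Returns false when the lines are parallel (or a segment has zero length).
bool intersectLines(const Seg& a, const Seg& b, Pt2& out) {
    const double dax = a.x2 - a.x1, day = a.y2 - a.y1;  // direction of a
    const double dbx = b.x2 - b.x1, dby = b.y2 - b.y1;  // direction of b
    const double denom = dax * dby - day * dbx;         // cross(da, db)
    if (std::fabs(denom) < 1e-9) return false;
    // Solve a1 + t * da = b1 + s * db for t by taking the cross product with db.
    const double t = ((b.x1 - a.x1) * dby - (b.y1 - a.y1) * dbx) / denom;
    out = Pt2{a.x1 + t * dax, a.y1 + t * day};
    return true;
}
```

For example, a horizontal top edge from (0, 10) to (100, 10) and a vertical left edge from (20, 0) to (20, 100) intersect at (20, 10), a case where the slope of the left edge cannot be computed at all.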