Official documentation: Logging facility for Python (Python 3 documentation)

Basic Usage

By default, the logging module defines six log levels, listed below in ascending order of severity (numeric values in parentheses):

- NOTSET(0)

- DEBUG(10)

- INFO(20)

- WARNING(30)

- ERROR(40)

- CRITICAL(50)

Only messages whose level is greater than or equal to the configured level are output. For example, if the level is set to INFO, messages at the INFO, WARNING, ERROR and CRITICAL levels are output, and DEBUG messages are discarded.
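The threshold rule can be checked directly with Logger.isEnabledFor(), which applies the same "level >= configured level" comparison that the logging calls use. This is a minimal sketch; the logger name "level_demo" is only an illustrative choice:

```python
import logging

# Numeric values of the predefined levels
print(logging.DEBUG, logging.INFO, logging.WARNING, logging.ERROR, logging.CRITICAL)
# -> 10 20 30 40 50

logger = logging.getLogger("level_demo")  # illustrative name
logger.setLevel(logging.INFO)

# isEnabledFor() reports whether a message at `level` would be processed
for level in (logging.DEBUG, logging.INFO, logging.WARNING, logging.ERROR, logging.CRITICAL):
    print(logging.getLevelName(level), logger.isEnabledFor(level))
# -> DEBUG False, then True for INFO, WARNING, ERROR and CRITICAL
```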

Let's start with a simple usage example:

```python
import logging

logging.basicConfig()  # Configure the root logger with defaults: a StreamHandler writing to stderr
logging.warning('This is a warning message')  # The root logger's default level is WARNING, so this is printed
```

Common parameters of logging.basicConfig(**kwargs) include:

- filename: creates a FileHandler that writes to the specified file, instead of a StreamHandler

- filemode: if filename is specified, the file is opened in this mode (for example 'w' to overwrite or 'a' to append). The default is 'a'

- format: the format string for log messages; see: LogRecord attributes

- datefmt: the date/time format string, using the directives accepted by time.strftime()

- level: sets the level of the root logger. If omitted, the root logger keeps its default level, WARNING

- handlers: an iterable of already-created handlers to add to the root logger. This parameter is incompatible with filename and filemode (and with stream); combining them raises a ValueError

basicConfig configuration example:

```python
import logging

logging.basicConfig(filename="test.log",
                    filemode="w",
                    format="%(asctime)s %(name)s:%(levelname)s:%(message)s",
                    datefmt="%d-%m-%Y %H:%M:%S",  # %m is the month; %M would be minutes
                    level=logging.DEBUG)
```
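The handlers parameter can be sketched as follows, assuming Python 3.8+ for the force argument (which first removes any handlers already attached to the root logger; without it, basicConfig() silently does nothing once the root has handlers). An in-memory io.StringIO stands in for a real destination, and "app" is an illustrative logger name:

```python
import io
import logging

buffer = io.StringIO()                   # in-memory stand-in for a real destination
handler = logging.StreamHandler(buffer)

# handlers cannot be combined with filename: basicConfig() raises ValueError
rejected = False
try:
    logging.basicConfig(handlers=[handler], filename="app.log", force=True)
except ValueError as exc:
    rejected = True
    print("rejected:", exc)

# Valid use: pass ready-made handlers; format is applied to any handler without a formatter
logging.basicConfig(
    level=logging.INFO,
    format="%(levelname)s:%(name)s:%(message)s",
    handlers=[handler],
    force=True,
)

logging.getLogger("app").info("started")
print(buffer.getvalue(), end="")  # INFO:app:started
```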

Advanced configuration

Let's first look at the main components of logging:

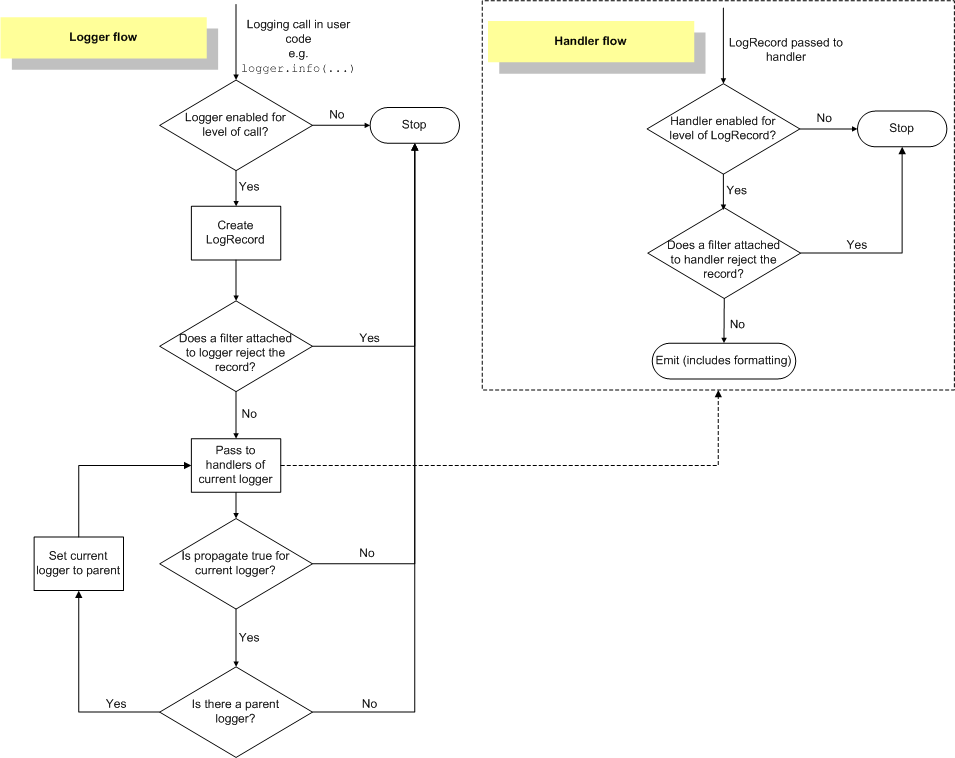

- Loggers: expose the interface that application code directly uses.

- Handlers: send the log records (created by loggers) to the appropriate destination.

- Filters: provide a finer grained facility for determining which log records to output.

- Formatters: specify the layout of log records in the final output.

Each message logged by the program is wrapped in a LogRecord object, which is then passed between these components.
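To see the LogRecord objects that flow between the components, a handler can simply keep what it receives. This is a sketch; ListHandler and the logger name "record_demo" are hypothetical names used only for illustration:

```python
import logging


class ListHandler(logging.Handler):
    """Hypothetical handler that stores every LogRecord it receives."""

    def __init__(self):
        super().__init__()
        self.records = []

    def emit(self, record):
        self.records.append(record)


logger = logging.getLogger("record_demo")
logger.setLevel(logging.DEBUG)
logger.propagate = False  # keep the demo records away from the root logger
handler = ListHandler()
logger.addHandler(handler)

logger.warning("disk usage at %d%%", 91)

record = handler.records[0]
print(type(record).__name__)                          # LogRecord
print(record.name, record.levelname, record.levelno)  # record_demo WARNING 30
print(record.getMessage())                            # disk usage at 91%
```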

Loggers

Logger objects have a threefold job. First, they expose several methods to application code so that applications can log messages at runtime. Second, logger objects determine which log messages to act upon based upon severity (the default filtering facility) or filter objects. Third, logger objects pass along relevant log messages to all interested log handlers.

The relationship between a logger and its handlers is like that between a letter and mailboxes: when needed, one letter can be copied to several mailboxes. Common configuration methods of Logger include:

- Logger.setLevel(): sets the minimum severity level the logger will handle

- Logger.addHandler() and Logger.removeHandler(): add and remove handlers; each logger can have zero or more handlers

- Logger.addFilter() and Logger.removeFilter(): add and remove filter objects
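The methods above can be sketched with one logger and two in-memory "mailboxes". The logger name "mail_demo" is illustrative, and the filter is a plain callable, which the logging module accepts since Python 3.2:

```python
import io
import logging

logger = logging.getLogger("mail_demo")   # illustrative name
logger.setLevel(logging.INFO)             # minimum level this logger handles
logger.propagate = False                  # isolate the demo from the root logger

inbox_a, inbox_b = io.StringIO(), io.StringIO()
handler_a = logging.StreamHandler(inbox_a)
handler_b = logging.StreamHandler(inbox_b)
logger.addHandler(handler_a)
logger.addHandler(handler_b)

logger.info("to both")    # one record, delivered to both handlers
logger.debug("dropped")   # below INFO, never reaches any handler

logger.removeHandler(handler_b)
logger.info("to A only")

# Logger-level filter: returning False rejects the record
logger.addFilter(lambda record: "secret" not in record.getMessage())
logger.info("contains secret")  # rejected by the filter
```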

Each logger has a name, which usually identifies the module of the program it belongs to (similar to a scope). Dots (.) in logger names organize loggers into a hierarchy, for example hook, hook.spark and hook.spark.attachment, where hook is the parent of hook.spark. The relationship resembles "inheritance" between objects, with some precise rules: a child logger whose level is NOTSET uses the nearest ancestor's level as its effective level, and records logged on a child are by default (propagate=True) also passed to the handlers of its ancestors, rather than the handlers being copied to the child. Filters attached to an ancestor logger, however, are not applied to records propagated from a child.
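A short sketch of those rules, using the hook / hook.spark.attachment names from the text. Only the parent gets a handler; the child has no level and no handlers of its own:

```python
import io
import logging

parent = logging.getLogger("hook")
parent.setLevel(logging.WARNING)
parent.propagate = False          # keep the demo away from the root logger
stream = io.StringIO()
parent.addHandler(logging.StreamHandler(stream))

child = logging.getLogger("hook.spark.attachment")
print(child.level, child.getEffectiveLevel())  # 0 30: NOTSET, so WARNING comes from "hook"
print(child.handlers)                          # []: handlers are not copied to the child

child.info("ignored")   # below the effective WARNING level
child.error("boom")     # propagates to the handler attached to "hook"

# A logger-level filter on the parent blocks records logged on the parent itself...
parent.addFilter(lambda record: False)
parent.error("blocked by the parent's filter")
# ...but not records propagated up from the child
child.error("still delivered")

print(stream.getvalue(), end="")  # boom / still delivered
```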

Use logging.getLogger(name) to obtain a logger with a given name. Loggers behave as singletons per name: calling getLogger() several times with the same name returns the same logger object, and calling it without a name returns the root logger.

Handlers

When do we need multiple handlers?

As an example scenario, an application may want to send all log messages to a log file, all messages of ERROR or higher to stdout, and all CRITICAL messages to an email address.
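That scenario can be sketched with three handlers on one logger, each with its own level. Assumptions: the log file goes to a temporary directory, and an in-memory stream stands in for the email destination (a real application might use logging.handlers.SMTPHandler there); "multi_handler_demo" is an illustrative name:

```python
import io
import logging
import os
import sys
import tempfile

logger = logging.getLogger("multi_handler_demo")  # illustrative name
logger.setLevel(logging.DEBUG)  # let every record reach the handlers
logger.propagate = False

# 1) All messages go to a log file (a temporary path for this demo)
log_path = os.path.join(tempfile.mkdtemp(), "app.log")
file_handler = logging.FileHandler(log_path)
file_handler.setLevel(logging.DEBUG)

# 2) ERROR and above go to stdout
console_handler = logging.StreamHandler(sys.stdout)
console_handler.setLevel(logging.ERROR)

# 3) CRITICAL goes to "email": an in-memory stand-in for e.g. SMTPHandler
mail_outbox = io.StringIO()
mail_handler = logging.StreamHandler(mail_outbox)
mail_handler.setLevel(logging.CRITICAL)

for h in (file_handler, console_handler, mail_handler):
    logger.addHandler(h)

logger.debug("cache warmed")
logger.error("request failed")
logger.critical("database unreachable")

# Detach and close the file handler before reading the file back
logger.removeHandler(file_handler)
file_handler.close()
with open(log_path) as f:
    file_contents = f.read()
```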

The standard library provides a range of predefined handlers; see: Useful Handlers. Their common configuration methods include:

- setLevel(): sets the minimum level of messages the handler will process

- setFormatter(): sets the Formatter object the handler uses to format records

- addFilter() and removeFilter(): add and remove filters
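A sketch of these handler methods working together. ExcludeHealthChecks is a hypothetical filter written for this example; the logger lets everything through so that the handler's level and filter make the decisions:

```python
import io
import logging


class ExcludeHealthChecks(logging.Filter):
    """Hypothetical filter: drop records whose message mentions /health."""

    def filter(self, record):
        return "/health" not in record.getMessage()


stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.setLevel(logging.INFO)                                 # handler-level threshold
handler.setFormatter(logging.Formatter("%(levelname)s %(message)s"))
health_filter = ExcludeHealthChecks()
handler.addFilter(health_filter)

logger = logging.getLogger("handler_demo")  # illustrative name
logger.setLevel(logging.DEBUG)              # the logger passes everything on
logger.propagate = False
logger.addHandler(handler)

logger.debug("debug detail")    # dropped by the handler's level
logger.info("GET /health 200")  # dropped by the filter
logger.info("GET /orders 200")  # written

handler.removeFilter(health_filter)
logger.info("GET /health 200")  # written now that the filter is removed
```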

Formatters

A Formatter configures the layout of log messages. Its constructor takes three main parameters:

logging.Formatter.__init__(fmt=None, datefmt=None, style='%')

where:

- fmt specifies the message format of the log, for example: '%(asctime)s - %(levelname)s - %(message)s'

- datefmt specifies the date/time format using time.strftime() directives, for example: '%Y-%m-%d %H:%M:%S'

- style selects the placeholder syntax used in fmt: '%' (printf-style, the default, as in the example above), '{' (str.format() style) or '$' (string.Template style). datefmt always uses strftime() directives, whatever the style. For details, see: LogRecord attributes
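The three styles can be compared on a hand-built record created with logging.makeLogRecord(), so that the output does not depend on timestamps. The record's field values are arbitrary examples:

```python
import logging

# Hand-built record with fixed fields
record = logging.makeLogRecord(
    {"name": "app", "levelname": "INFO", "levelno": logging.INFO, "msg": "ready"}
)

percent = logging.Formatter("%(levelname)s:%(name)s:%(message)s")  # style='%' (default)
braces = logging.Formatter("{levelname}:{name}:{message}", style="{")
dollar = logging.Formatter("$levelname:$name:$message", style="$")

for fmt in (percent, braces, dollar):
    print(fmt.format(record))  # INFO:app:ready (three times)

# datefmt is always a strftime() pattern, regardless of style
stamped = logging.Formatter("{asctime} {message}", datefmt="%Y-%m-%d %H:%M:%S", style="{")
print(stamped.format(record))  # current date and time, then "ready"
```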

Overall configuration

Logging can be configured in three ways:

- Explicitly create loggers, handlers and formatters in Python code and call the relevant methods to configure them

- Write a logging configuration file and load it with logging.config.fileConfig()

- Build a dict containing the configuration and pass it to logging.config.dictConfig()

For details of the latter two methods, see: Configuration functions. Below is a simple demo of the first method:

```python
import logging

# create formatter
formatter = logging.Formatter('%(asctime)s - %(name)s - %(levelname)s - %(message)s')

# create console handler and set level to debug
ch = logging.StreamHandler()
ch.setLevel(logging.DEBUG)

# add formatter to ch
ch.setFormatter(formatter)

# create logger
logger = logging.getLogger('simple_example')
logger.setLevel(logging.DEBUG)

# add ch to logger
logger.addHandler(ch)

# 'application' code
logger.debug('debug message')
```

The code above first creates a formatter and attaches it to a handler, then adds the handler to a logger; from then on, the logger can be used to record messages.
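For comparison, the same setup can be expressed with the third method, logging.config.dictConfig(). This is a sketch of one equivalent dictionary; the keys "simple" and "console" are arbitrary names chosen here, and disable_existing_loggers=False is a choice that keeps previously created loggers working:

```python
import logging
import logging.config

LOGGING = {
    "version": 1,                       # required; currently the only valid value
    "disable_existing_loggers": False,  # keep loggers created before this call enabled
    "formatters": {
        "simple": {"format": "%(asctime)s - %(name)s - %(levelname)s - %(message)s"},
    },
    "handlers": {
        "console": {
            "class": "logging.StreamHandler",  # writes to sys.stderr by default
            "level": "DEBUG",
            "formatter": "simple",
        },
    },
    "loggers": {
        "simple_example": {"level": "DEBUG", "handlers": ["console"]},
    },
}

logging.config.dictConfig(LOGGING)

logger = logging.getLogger("simple_example")
logger.debug("debug message")
```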

Code sample

An example of wrapping the logging module in a helper class:

```python
import os
import logging
from logging.handlers import RotatingFileHandler


class Log:
    _logger = None
    _log_dir = None

    @staticmethod
    def get():
        """Return the shared logger, building it on first use."""
        if Log._logger is not None:
            return Log._logger
        return Log._build_logger()

    @staticmethod
    def set_dir(file_dir):
        """Set the log directory; must be called before the first get()."""
        if Log._log_dir:
            raise Exception('log directory has already been set. (Check if "get()" has been called before)')
        os.makedirs(file_dir, exist_ok=True)
        Log._log_dir = file_dir

    @staticmethod
    def filter(name, level):
        """Set the level of the logger called `name`, e.g. to quieten a noisy library."""
        level = level.upper()
        assert level in {'DEBUG', 'INFO', 'WARNING', 'ERROR', 'CRITICAL'}
        logging.getLogger(name).setLevel(getattr(logging, level))

    @staticmethod
    def _build_logger():
        log_fmt = '[%(asctime)s | "%(filename)s" line %(lineno)s | %(levelname)s] %(message)s'
        formatter = logging.Formatter(log_fmt, datefmt="%Y/%m/%d %H:%M:%S")
        if not Log._log_dir:
            os.makedirs('logs', exist_ok=True)
            Log._log_dir = 'logs'
        log_filepath = os.path.join(Log._log_dir, "rotating.log")
        # Rotate after about 500 bytes and keep 3 backups (tiny values, for demonstration)
        log_file_handler = RotatingFileHandler(filename=log_filepath, maxBytes=500, backupCount=3)
        log_file_handler.setFormatter(formatter)
        stream_handler = logging.StreamHandler()
        stream_handler.setFormatter(formatter)
        # Attach both handlers to the root logger (no effect if the root already has handlers)
        logging.basicConfig(level=logging.INFO, handlers=(log_file_handler, stream_handler))
        Log._logger = logging.getLogger()
        return Log._logger


if __name__ == '__main__':
    import time

    Log.set_dir('logs')  # Configure the log directory before the first get(), if needed.
    log = Log.get()
    for count in range(20):
        log.error(f"logger count: {count}")
        time.sleep(1)
```

Another way is to automatically name the logger according to the file location of the caller:

```python
import os
import logging
import traceback
from logging.handlers import RotatingFileHandler


class Log:
    _root = None
    _logger = None

    @staticmethod
    def get():
        """Return a logger named after the caller's path relative to the project root."""
        if Log._root is None:
            raise Exception('Log.init() must be called before Log.get()')
        # Construct the name of the logger based on the file path
        code_file = os.path.abspath(traceback.extract_stack()[-2].filename)  # Get the file path of the caller
        if os.path.commonpath([Log._root, code_file]) != Log._root:
            raise Exception(f'The file calling the method is outside the home directory: "{code_file}"')
        # e.g. <root>/pkg/mod.py -> "pkg.mod"
        relpath = os.path.splitext(os.path.relpath(code_file, Log._root))[0].replace(os.sep, '.')
        root_name = os.path.basename(Log._root)
        return logging.getLogger(f"{root_name}.{relpath}")

    @staticmethod
    def init(home):
        """Set the project root and attach handlers to the project's top-level logger."""
        assert os.path.isdir(home), f'invalid home directory: "{home}"'
        Log._root = os.path.abspath(home)
        log_dir = os.path.join(Log._root, 'logs')
        os.makedirs(log_dir, exist_ok=True)
        Log._configure_root_logger(log_dir)

    @staticmethod
    def filter(name, level):
        level = level.upper()
        assert level in {'DEBUG', 'INFO', 'WARNING', 'ERROR', 'CRITICAL'}
        logging.getLogger(name).setLevel(getattr(logging, level))

    @staticmethod
    def _configure_root_logger(log_dir):
        log_fmt = '[%(asctime)s | %(name)s | %(levelname)s] %(message)s'
        formatter = logging.Formatter(log_fmt, datefmt="%Y/%m/%d %H:%M:%S")
        stream_handler = logging.StreamHandler()
        stream_handler.setFormatter(formatter)
        log_filepath = os.path.join(log_dir, "rotating.log")
        # maxBytes is an integer byte count: rotate at about 100 kB, keep 3 backups
        log_file_handler = RotatingFileHandler(filename=log_filepath, maxBytes=100_000, backupCount=3)
        log_file_handler.setFormatter(formatter)
        # The project's top-level logger (named after the root directory) owns the handlers;
        # loggers returned by get() are its children and propagate their records up to it.
        root_logger_name = os.path.basename(Log._root)
        root_logger = logging.getLogger(root_logger_name)
        root_logger.addHandler(log_file_handler)
        root_logger.addHandler(stream_handler)
        root_logger.setLevel(logging.DEBUG)  # default level


if __name__ == '__main__':
    import time

    project_root = os.path.dirname(os.path.abspath(__file__))
    Log.init(project_root)  # project root directory
    log = Log.get()
    for count in range(20):
        log.info(f"logger count: {count}")
        time.sleep(1)
```