A garbage sorting project to get you started with PaddlePaddle (2)

This article continues from "A garbage sorting project to get you started with PaddlePaddle (1)", which implemented garbage classification with PaddleClas, exported the inference model, deployed it as a service with PaddleHub Serving, and built a visual interface with PyQt5.

This article takes waste classification as its starting point. It uses PaddleX, the PaddlePaddle full-process deep learning development tool, to implement multi-object garbage detection and classification with the YOLOv3-DarkNet53 model. The model is then deployed on EdgeBoard edge hardware to speed up waste classification and support environmental protection.

Garbage detection based on PaddleX

As waste classification policies are implemented more widely, related products have sprung up. Most of them, however, rely on image classification to identify garbage. Although this approach can identify garbage types accurately, it is limited by the technique itself: to keep recognition accurate, each image may contain only one kind of garbage. In practice, users can place only one kind of garbage in the identification area at a time, which greatly limits classification efficiency. Object detection can effectively solve this problem: it speeds up waste classification, supports the implementation of classification policies, and helps spread waste classification knowledge to the public.

Introduction to PaddleX

🤗 PaddleX integrates PaddlePaddle's computer vision capabilities for image classification, object detection, semantic segmentation, and instance segmentation. It connects the whole deep learning development process, from data preparation through model training and optimization to multi-platform deployment, and provides unified task APIs and a graphical development interface demo. Developers can complete the full PaddlePaddle workflow quickly with little code, without installing separate packages.

🏭 PaddleX has been validated in more than ten industry application scenarios, including quality inspection, security, patrol inspection, remote sensing, retail, and medical care. It distills practical industry experience and provides rich case tutorials to help developers carry the full workflow into industrial practice.

PaddleX has now officially released version 2.0.4rc, a comprehensive upgrade to dynamic graphs. To ensure that the model can be deployed on the EdgeBoard development board, this project still uses version 1.3.11.

Decompress dataset

The dataset mounted in this project is provided in both VOC and COCO formats, so it meets the dataset format requirements for model training with either PaddleX or PaddleDetection.

[For dataset conversion methods, refer to this GitHub repository. Code contributions and stars are welcome 😋]

!unzip -oq /home/aistudio/data/data100472/dataset.zip -d dataset
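Before training, it can help to confirm that the unpacked files match what the file lists describe. The sketch below assumes that each line of a list file holds an image path and an annotation path separated by whitespace, both relative to the dataset directory (the usual PaddleX VOC layout). The helper name `find_missing_entries` is hypothetical and is not part of PaddleX.

```python
import os


def find_missing_entries(data_dir, file_list):
    """Return (line_number, item) pairs for list entries that are malformed or missing on disk."""
    problems = []
    with open(file_list, encoding="utf-8") as f:
        for line_no, line in enumerate(f, start=1):
            parts = line.split()
            if not parts:
                continue  # ignore blank lines
            if len(parts) != 2:
                # Assumption: exactly "image_path annotation_path" per line.
                problems.append((line_no, line.strip()))
                continue
            for rel_path in parts:
                if not os.path.isfile(os.path.join(data_dir, rel_path)):
                    problems.append((line_no, rel_path))
    return problems
```

For this project it could be called as `find_missing_entries('/home/aistudio/dataset/VOC2007', '/home/aistudio/dataset/VOC2007/train_list.txt')`; an empty list means every referenced file was found. Paths that contain spaces would be reported as malformed by this simple split.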

Model training

- First, install paddlex==1.3.11;

- Select the model to train according to the EdgeBoard model support list and the specific deployment scenario;

- Export the model.

1. Because the project will be deployed on the EdgeBoard development board, paddlex==1.3.11 is installed.

!pip install paddlex==1.3.11

2. Model training

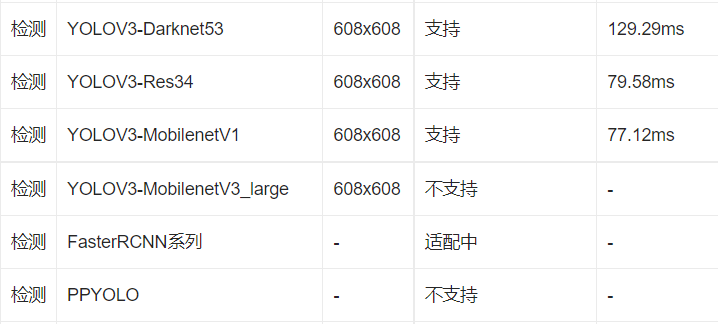

For model selection, EdgeBoard provides a list of models supported for PaddleX-based deployment (only the supported detection models are shown here; see this link for the full list).

In this project, the YOLOv3-DarkNet53 model is selected based on this detection model support list and the actual deployment scenario, with accuracy as the first priority. The complete training code is as follows:

import os

# Train on GPU 0
os.environ['CUDA_VISIBLE_DEVICES'] = '0'

from paddlex.det import transforms
import paddlex as pdx

# Define transforms for training and validation
# API reference: https://paddlex.readthedocs.io/zh_CN/develop/apis/transforms/det_transforms.html
train_transforms = transforms.Compose([
    transforms.MixupImage(mixup_epoch=250),
    transforms.RandomDistort(),
    transforms.RandomExpand(),
    transforms.RandomCrop(),
    transforms.Resize(target_size=608, interp='RANDOM'),
    transforms.RandomHorizontalFlip(),
    transforms.Normalize()
])

eval_transforms = transforms.Compose([
    transforms.Resize(target_size=608, interp='CUBIC'),
    transforms.Normalize()
])

# Define the datasets used for training and validation
# API reference: https://paddlex.readthedocs.io/zh_CN/develop/apis/datasets.html#paddlex-datasets-vocdetection
train_dataset = pdx.datasets.VOCDetection(
    data_dir='/home/aistudio/dataset/VOC2007',
    file_list='/home/aistudio/dataset/VOC2007/train_list.txt',
    label_list='/home/aistudio/dataset/VOC2007/labels.txt',
    transforms=train_transforms,
    shuffle=True)

eval_dataset = pdx.datasets.VOCDetection(
    data_dir='/home/aistudio/dataset/VOC2007',
    file_list='/home/aistudio/dataset/VOC2007/val_list.txt',
    label_list='/home/aistudio/dataset/VOC2007/labels.txt',
    transforms=eval_transforms)

# Initialize the model and train
# Training metrics can be viewed with VisualDL, see https://paddlex.readthedocs.io/zh_CN/develop/train/visualdl.html
num_classes = len(train_dataset.labels)

# API reference: https://paddlex.readthedocs.io/zh_CN/develop/apis/models/detection.html#paddlex-det-yolov3
model = pdx.det.YOLOv3(num_classes=num_classes, backbone='DarkNet53')

# API reference: https://paddlex.readthedocs.io/zh_CN/develop/apis/models/detection.html#id1
# Description of each parameter and how to tune it: https://paddlex.readthedocs.io/zh_CN/develop/appendix/parameters.html
model.train(
    num_epochs=270,
    train_dataset=train_dataset,
    train_batch_size=16,
    eval_dataset=eval_dataset,
    learning_rate=0.000125,
    save_interval_epochs=2,
    lr_decay_epochs=[104, 126, 240],
    save_dir='output/yolov3_darknet53',
    use_vdl=True)
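The `learning_rate` and `lr_decay_epochs` arguments together define a step schedule. As a rough illustration only, the sketch below computes the learning rate at a given epoch. It assumes that each milestone multiplies the rate by 0.1 (believed to be the default `lr_decay_gamma` in PaddleX 1.x, but treated here as an assumption), that epochs are counted from 0, and it ignores the warm-up phase at the start of training. The function name `lr_at_epoch` is hypothetical.

```python
from bisect import bisect_right


def lr_at_epoch(epoch, base_lr=0.000125, decay_epochs=(104, 126, 240), gamma=0.1):
    """Step-decay learning rate for a 0-based epoch index (warm-up ignored)."""
    # Count how many decay milestones have been reached by this epoch.
    num_decays = bisect_right(decay_epochs, epoch)
    return base_lr * gamma ** num_decays


for epoch in (0, 104, 126, 240, 269):
    print(f"epoch {epoch:3d}: lr = {lr_at_epoch(epoch):.3e}")
```

Under these assumptions the rate drops by a factor of ten at each of the three milestones, so the last 30 epochs run at one thousandth of the base rate. These are also roughly the epochs after `mixup_epoch=250`, when mixup augmentation is no longer applied.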

3. Model export

!paddlex --export_inference --model_dir=image_model --save_dir=./inference_model

Implementation of model deployment based on EdgeBoard

Brief introduction to EdgeBoard

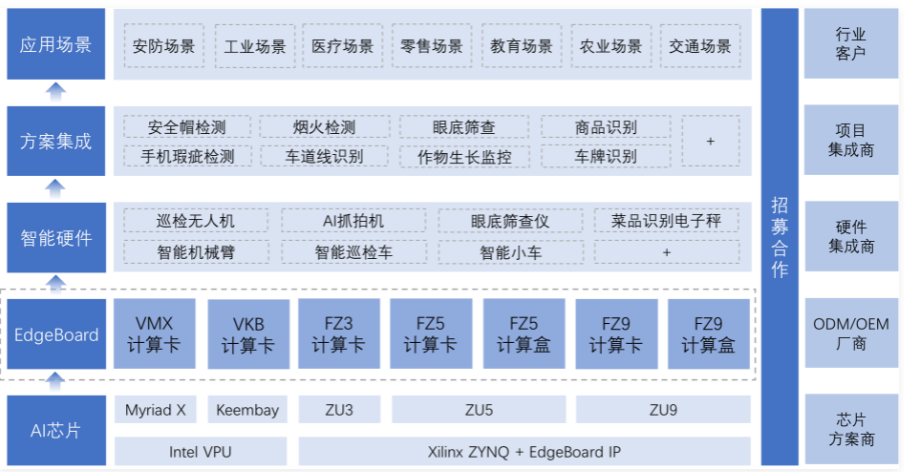

EdgeBoard is an AI solution created by Baidu for embedded and edge computing scenarios. A rich selection of hardware meets varied edge deployment requirements. It is seamlessly compatible with the Baidu Brain tool platform and algorithm models, so developers can choose existing models or customize their own algorithms. The whole process of model training and deployment is visualized, which greatly lowers the barrier to development and integration. EdgeBoard's flexible chip architecture can adapt to the industry's latest and most effective algorithm models. Integrated software and hardware products based on EdgeBoard can be widely used in security, industry, medical care, retail, education, agriculture, transportation, and other scenarios.

Hardware preparation

- EdgeBoard and its power supply; display; miniDP to HDMI converter; network cable; USB camera; computer

EdgeBoard specific operation

- Start-up

Plug the matching 12 V power adapter into EdgeBoard's power interface, a 2-pin green terminal block with 3.81 mm pitch, as shown in the figure below. Pay attention to the positive and negative terminals. No other interface can supply power.

- Shut down

To power off EdgeBoard, it is recommended to first shut down the operating system with the poweroff command and then disconnect the external power supply. This protects the SD card file system from accidental damage.

- Restart

There are two ways to restart EdgeBoard. One is to enter the reboot command in the operating system terminal; the other is to briefly press the reset button on the device.

Windows Environment

Configure computer IP

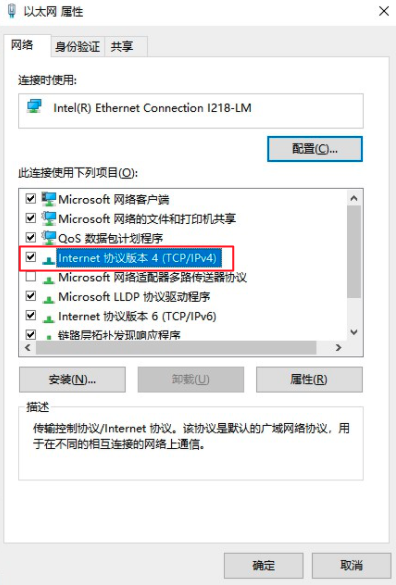

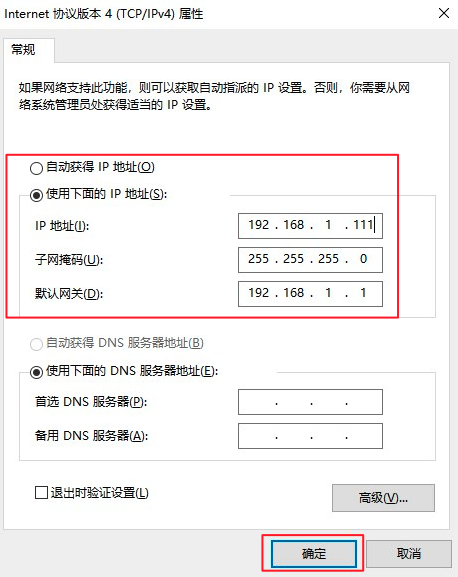

The steps for configuring the computer's IP address are as follows. After connecting the computer directly to the EdgeBoard, open Control Panel -> Network and Internet -> View network status and tasks

Click Ethernet

Click Properties

Click Internet Protocol version 4

Set the computer IP address as a static IP address

Click OK.
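A direct Ethernet connection only works if the computer's static address is on the same subnet as the board. As a quick sanity check, the sketch below uses Python's standard `ipaddress` module. The board address 192.168.1.254 is the one used for SSH in this tutorial, while the /24 prefix (subnet mask 255.255.255.0) and the example computer addresses are assumptions; `same_subnet` is a hypothetical helper name.

```python
import ipaddress


def same_subnet(host_ip, board_ip="192.168.1.254", prefix_len=24):
    """Return True if host_ip lies in the same IPv4 network as board_ip."""
    # strict=False lets us build the network from a host address.
    network = ipaddress.ip_network(f"{board_ip}/{prefix_len}", strict=False)
    return ipaddress.ip_address(host_ip) in network


print(same_subnet("192.168.1.100"))  # candidate address in the board's /24
print(same_subnet("192.168.0.100"))  # candidate address in a different /24
```

The static address chosen for the computer must also differ from the board's own address.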

Log in to the EdgeBoard system

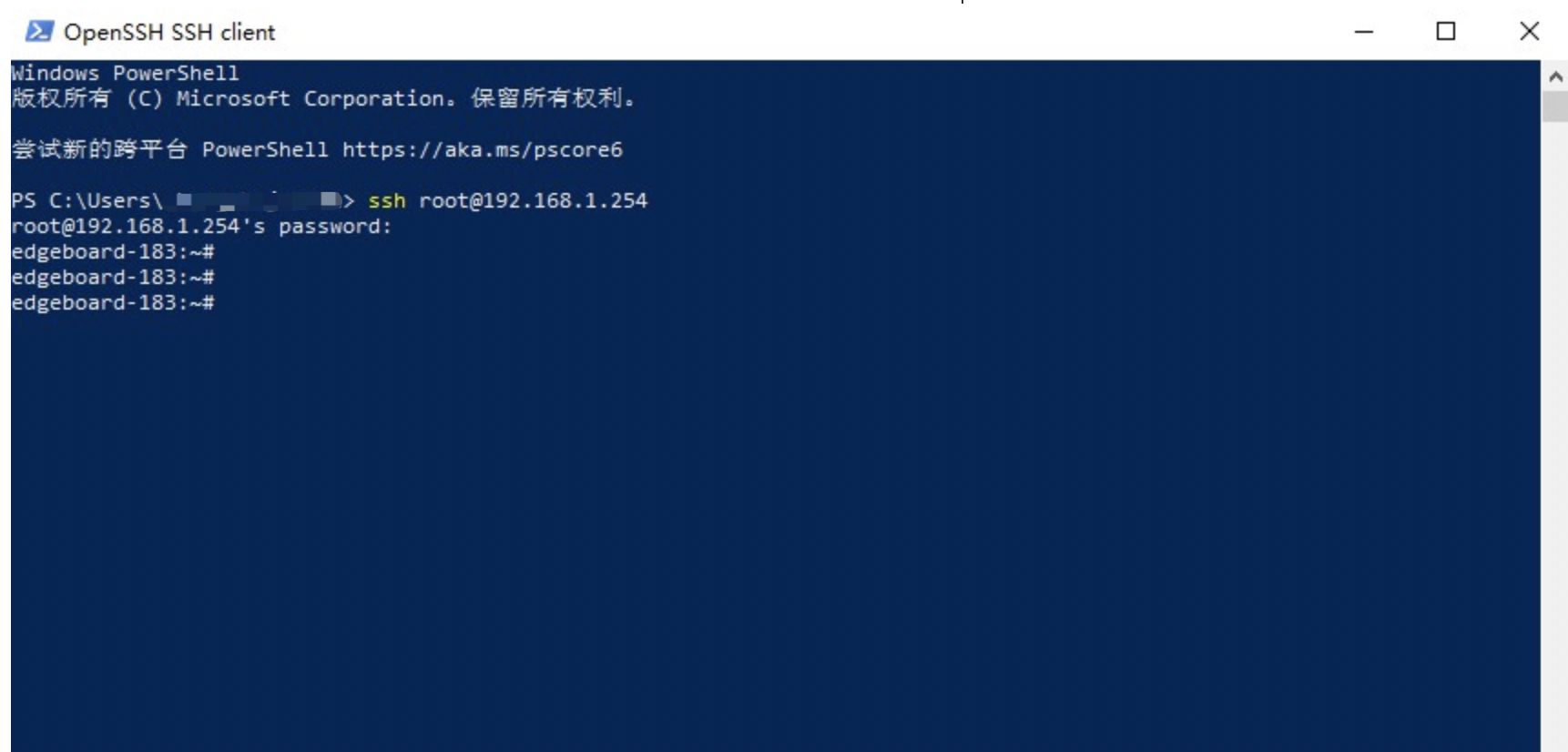

The EdgeBoard system is a simplified Linux system that you can communicate with over the network using the SSH protocol. After the device is powered on, log in to the EdgeBoard system from the computer to operate it. Both the user name and the password of the EdgeBoard system are root. This tutorial uses Windows PowerShell, the terminal that comes with Windows. The specific steps are as follows:

Open Windows PowerShell, type ssh root@192.168.1.254, and press Enter. The first time you connect to this IP address, you will be asked whether to continue; type yes and press Enter. The password is not echoed as you type. Type root and press Enter to log in to the EdgeBoard system.
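If the SSH login hangs or is refused, it may help to first check whether the board's SSH port is reachable at all. The following is a minimal sketch using only the standard `socket` module. It assumes that SSH listens on the default port 22, which this tutorial does not state explicitly; `port_open` is a hypothetical helper name.

```python
import socket


def port_open(host, port=22, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, unreachable hosts, and timeouts.
        return False
```

Calling `port_open("192.168.1.254")` from the computer should return `True` once the static IP configuration is correct and the board has finished booting. If it returns `False`, the serial console is the next thing to try.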

Communicating with EdgeBoard over the serial port

If the SSH connection fails, or you need to look up the IP address (for example, when the device obtains its IP dynamically), use the serial port to access the device console.

Connect the supplied USB debugging cable to the USB UART debug interface of the EdgeBoard, and then access the EdgeBoard system from the computer.

Windows serial port connection method

Install dependencies

1. Install the driver: the first time you use a USB-to-serial device, install the CP210x_Windows_Drivers driver. Download link: https://cn.silabs.com/developers/usb-to-uart-bridge-vcp-drivers

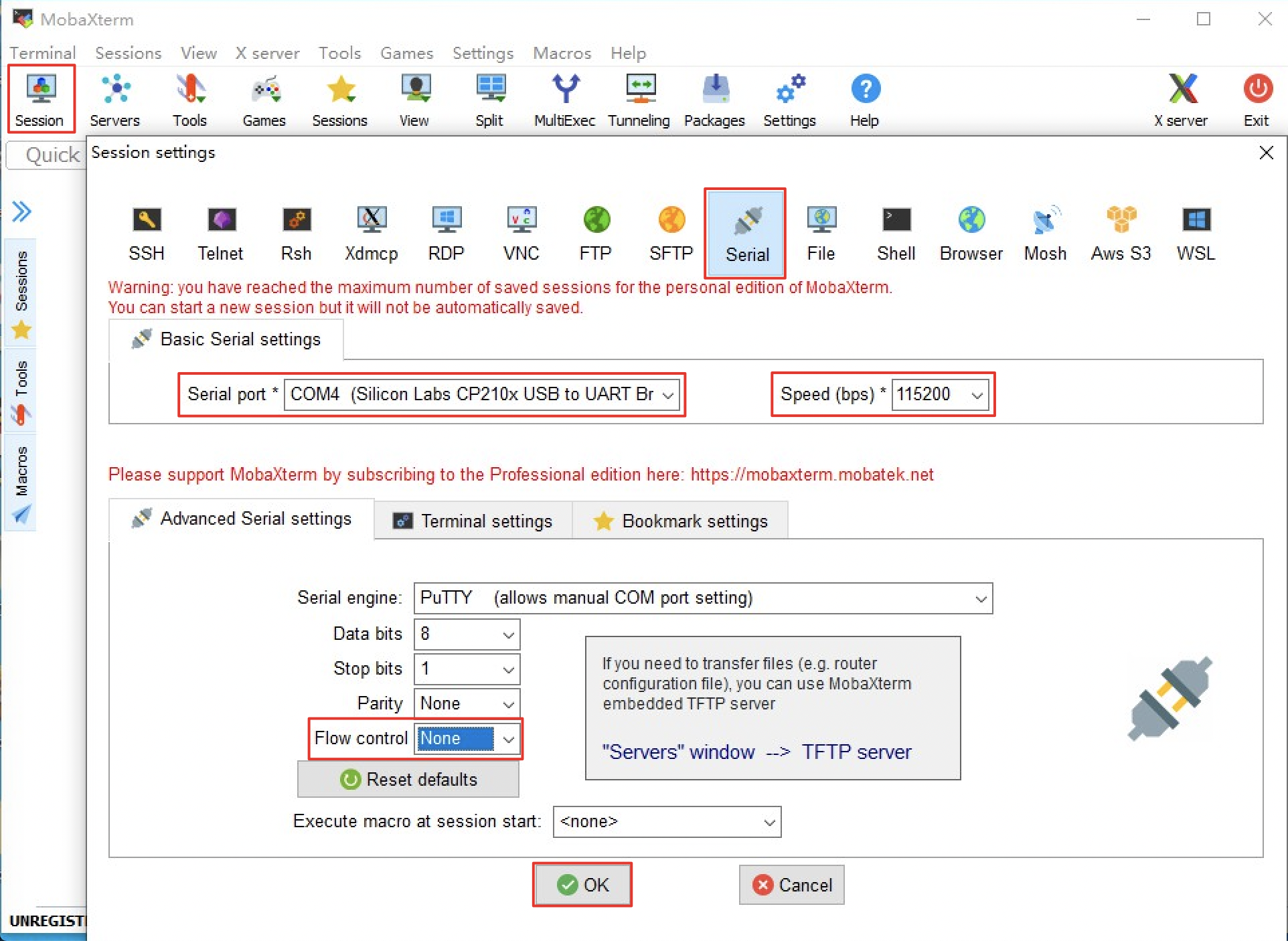

2. Install a debugging tool: MobaXterm is used as the example below. Download link: https://mobaxterm.mobatek.net/download.html

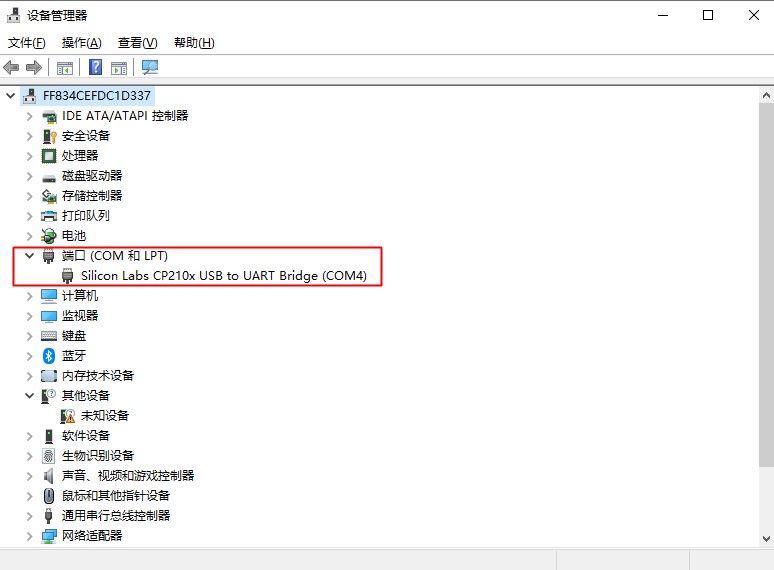

Connecting the device

1. Make sure the computer is connected to the USB UART interface of the EdgeBoard (see the hardware introduction of the corresponding model for the interface location). Open Control Panel -> Hardware and Sound -> Device Manager and check the mapped port number. In the figure, the port number is COM4.

2. Open MobaXterm, click Session to open the session settings, select Serial port, choose the mapped port, set Speed to 115200 and Flow Control to None, and click OK, as shown in the figure below.

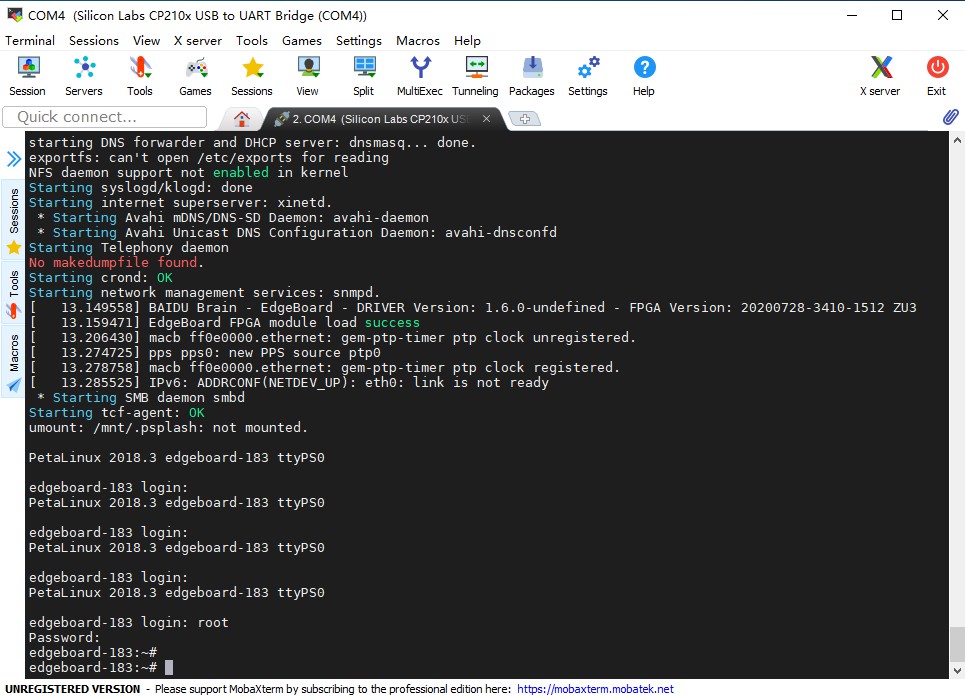

3. After the configuration is complete, open the session page and power on the EdgeBoard; the system startup messages will appear. When startup finishes, enter the user name and password root/root to log in to the device, as shown in the figure below.

File transfer mode

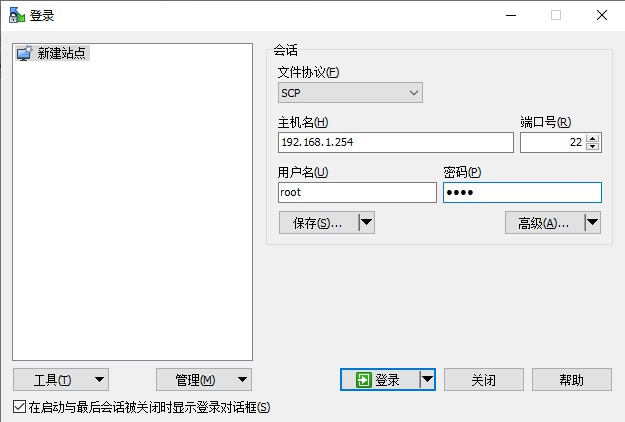

Recommended tool: WinSCP. Download link: https://winscp.net/eng/download.php ("Respect intellectual property rights, follow the tool's usage terms, and use genuine software.")

Configure the connection as follows: file protocol: SCP; host name: the EdgeBoard IP address; user name and password: root and root. Then click Login.

After logging in, you can copy files between the computer and the device directly with copy and paste.

Model deployment based on EdgeBoard 1.8.0

Upload model

After training, upload the model files to the EdgeBoard and store them under /home/root/workspace/PaddleLiteDemo/res/models, in the folder that corresponds to the model type. For example, a YOLOv3 model goes in the detection directory. Following the structure of the provided example, the model folder needs to contain:

model, params: the model files (the names of trained model files may differ between PaddlePaddle versions; this is fine as long as they match the names in config.json)

label_list.txt: the model label file

img: the folder that holds the images to predict

config.json: the configuration file; starting from the structure given in the example, change the parameters to those of your own trained model
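This layout can be checked against a local copy of the model folder before uploading. The sketch below reads `config.json` and reports which of the entries listed above are missing. It assumes the key names used in the `config.json` example of this tutorial (`model_file_name`, `params_file_name`, `labels_file_name`); `missing_model_files` is a hypothetical helper, not part of the EdgeBoard software.

```python
import json
import os


def missing_model_files(model_dir):
    """Return the names of required entries that are absent from model_dir."""
    config_path = os.path.join(model_dir, "config.json")
    if not os.path.isfile(config_path):
        return ["config.json"]
    with open(config_path, encoding="utf-8") as f:
        config = json.load(f)
    # File names are taken from config.json, so renamed model files are still found.
    required = [
        config.get("model_file_name", "model"),
        config.get("params_file_name", "params"),
        config.get("labels_file_name", "label_list.txt"),
    ]
    missing = [name for name in required
               if not os.path.isfile(os.path.join(model_dir, name))]
    if not os.path.isdir(os.path.join(model_dir, "img")):
        missing.append("img")
    return missing
```

An empty list means that the folder contains everything named above. The check says nothing about whether the files themselves are valid.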

Modify the model configuration parameters

The contents of the config.json file are as follows:

{
    "network_type": "YOLOV3",
    "model_file_name": "model",
    "params_file_name": "params",
    "labels_file_name": "label_list.txt",
    "format": "RGB",
    "input_width": 608,
    "input_height": 608,
    "mean": [123.675, 116.28, 103.53],
    "scale": [0.0171248, 0.017507, 0.0174292],
    "threshold": 0.3
}

Note: the mean and scale values in config.json may be defined differently from the normalization used when training the model. There are generally two conventions, where R is the R-channel value of a pixel in the image:

① (R / 255 - mean') / std'

② (R - mean) * scale (the EdgeBoard convention)

If the mean and std of the training model follow the first convention, convert them to the EdgeBoard convention as follows:

EdgeBoard mean = mean' * 255

EdgeBoard scale = 1 / (255 * std')
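The conversion follows from rewriting (R / 255 - mean') / std' as (R - 255 * mean') * 1 / (255 * std'). A short sketch of the arithmetic is shown below; it assumes the training pipeline used the common ImageNet statistics, which is what `transforms.Normalize()` is understood to apply by default. The function name `to_edgeboard_norm` is hypothetical.

```python
def to_edgeboard_norm(mean_01, std_01):
    """Convert mean'/std' defined on [0, 1] pixels to mean/scale on [0, 255] pixels."""
    mean = [m * 255 for m in mean_01]
    scale = [1 / (255 * s) for s in std_01]
    return mean, scale


# Assumed training statistics (ImageNet mean and std per RGB channel).
mean, scale = to_edgeboard_norm([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
print([round(m, 3) for m in mean])
print([round(s, 7) for s in scale])
```

With these inputs the results agree, to the precision shown, with the mean and scale values in the config.json example of this tutorial. A model trained with different normalization statistics would need its own values.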

Modify the system configuration file

The system configuration files are stored in /home/root/workspace/PaddleLiteDemo/configs. Modify the configuration file that corresponds to the model. For a YOLOv3 model, for example, modify the model path and the prediction image path in the image.json file.

{
    "model_config": "../../res/models/detection/yolov3",
    "input": {
        "type": "image",
        "path": "../../res/models/detection/yolov3/img/screw.jpg"
    },
    "debug": {
        "display_enable": true,
        "predict_log_enable": true,
        "predict_time_log_enable": false
    }
}
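Editing the file by hand works, but the same change can also be scripted, for example when switching between several test images. The sketch below uses the standard `json` module. The key names come from the image.json shown above, while the helper name `set_image_input` and the image file name in the usage note are hypothetical.

```python
import json


def set_image_input(config_path, model_dir, image_path):
    """Point an image.json-style config at a model folder and a test image."""
    with open(config_path, encoding="utf-8") as f:
        config = json.load(f)
    config["model_config"] = model_dir
    # Keep any other keys under "input" and "debug" untouched.
    input_section = config.setdefault("input", {})
    input_section["type"] = "image"
    input_section["path"] = image_path
    with open(config_path, "w", encoding="utf-8") as f:
        json.dump(config, f, indent=4)
    return config
```

A call such as `set_image_input("image.json", "../../res/models/detection/yolov3", "../../res/models/detection/yolov3/img/test.jpg")` rewrites only the model and image paths and leaves the debug switches as they were.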

Run the program

After modifying the model configuration file and the system configuration file, run the program:

cd /home/root/workspace/PaddleLiteDemo/C++/build

./detection ../../configs/detection/yolov3/image.json