A glimpse of AQS source code

Because AQS involves a large amount of code and information, the plan is to start from the commonly used ReentrantLock: use its code to walk through the AQS source and internal implementation, then analyze AQS as a whole, and finally analyze the synchronizers built on top of it.

For now, think of the core of AQS as two parts:

- A volatile int field, state, which holds the synchronization state

- A FIFO synchronization queue made up of Node objects
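To see how the two parts fit together, here is a minimal sketch of an exclusive lock built on AQS, modeled on the Mutex usage example in the AbstractQueuedSynchronizer Javadoc. The class name SimpleMutex, the convention that state 0 means unlocked and 1 means locked, and the helper contendedCount are our own choices for illustration. The subclass only decides how state changes; AQS's acquire and release take care of queueing and parking.

```java
import java.util.concurrent.locks.AbstractQueuedSynchronizer;

/** Minimal non-reentrant exclusive lock: state 0 = unlocked, 1 = locked (our own convention). */
final class SimpleMutex {

    private static final class Sync extends AbstractQueuedSynchronizer {
        @Override
        protected boolean tryAcquire(int arg) {
            // Only one thread can win the CAS on the volatile state from 0 to 1
            if (compareAndSetState(0, 1)) {
                setExclusiveOwnerThread(Thread.currentThread());
                return true;
            }
            return false; // acquire() will then enqueue the caller and park it
        }

        @Override
        protected boolean tryRelease(int arg) {
            if (getState() == 0) {
                throw new IllegalMonitorStateException();
            }
            setExclusiveOwnerThread(null);
            setState(0); // volatile write; returning true lets release() wake a successor
            return true;
        }

        @Override
        protected boolean isHeldExclusively() {
            return getState() == 1;
        }

        int stateForDebug() {
            return getState();
        }
    }

    private final Sync sync = new Sync();

    void lock()       { sync.acquire(1); }
    boolean tryLock() { return sync.tryAcquire(1); }
    void unlock()     { sync.release(1); }
    int state()       { return sync.stateForDebug(); }

    /** Two threads increment a shared counter under the mutex; returns the final count. */
    static int contendedCount(int perThread) throws InterruptedException {
        SimpleMutex mutex = new SimpleMutex();
        int[] counter = {0};
        Runnable work = () -> {
            for (int i = 0; i < perThread; i++) {
                mutex.lock();
                try {
                    counter[0]++;
                } finally {
                    mutex.unlock();
                }
            }
        };
        Thread a = new Thread(work);
        Thread b = new Thread(work);
        a.start();
        b.start();
        a.join();
        b.join();
        return counter[0];
    }
}
```

For example, SimpleMutex.contendedCount(10000) should return 20000, because the two threads never increment the counter at the same time. The lock is deliberately non-reentrant: a second tryLock() from the owning thread fails.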

Node mode

As the fields in the Node definition show, every Node carries a mode:

- EXCLUSIVE mode

When one thread has acquired, other threads fail to acquire

- SHARED mode

When multiple threads acquire concurrently, more than one of them may succeed

Node has a field called nextWaiter whose name is misleading. It is actually shared by two queues: in a condition queue it points to the next Node, and in the synchronization queue it records the node's mode, as the SHARED and EXCLUSIVE definitions in the code below show.

Because condition queues exist only in exclusive mode, it is enough to define a single marker node for shared mode to tell the two modes apart:

```java
/** Marker to indicate a node is waiting in shared mode */
static final Node SHARED = new Node();
/** Marker to indicate a node is waiting in exclusive mode */
static final Node EXCLUSIVE = null;

/**
 * Link to next node waiting on condition, or the special
 * value SHARED. Because condition queues are accessed only
 * when holding in exclusive mode, we just need a simple
 * linked queue to hold nodes while they are waiting on
 * conditions. They are then transferred to the queue to
 * re-acquire. And because conditions can only be exclusive,
 * we save a field by using special value to indicate shared
 * mode.
 */
Node nextWaiter;

/**
 * Returns true if node is waiting in shared mode.
 */
final boolean isShared() {
    return nextWaiter == SHARED;
}
```

SHARED is a static final field whose reference never changes, so isShared() can determine the mode with a single reference comparison. Exclusive mode is the kind of lock most people are familiar with, as in ReentrantLock. Shared mode gives synchronizers the extra capabilities other requirements call for, as in Semaphore.
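To make shared mode concrete, the sketch below follows the BooleanLatch usage example from the AbstractQueuedSynchronizer Javadoc; the queueLength and releaseAll helpers are our own additions for illustration. Waiters enqueue as SHARED nodes, and because tryAcquireShared succeeds for every caller once the latch is open, a single signal() lets all of them through, which exclusive mode would not allow.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.locks.AbstractQueuedSynchronizer;

/** One-shot latch in shared mode: state 0 = closed, non-zero = open. */
final class BooleanLatch {

    private static final class Sync extends AbstractQueuedSynchronizer {
        boolean isSignalled() {
            return getState() != 0;
        }

        @Override
        protected int tryAcquireShared(int ignore) {
            // Non-negative means success; every caller succeeds once the latch is open
            return isSignalled() ? 1 : -1;
        }

        @Override
        protected boolean tryReleaseShared(int ignore) {
            setState(1);
            return true; // lets releaseShared() wake the queued SHARED nodes
        }
    }

    private final Sync sync = new Sync();

    boolean isSignalled() { return sync.isSignalled(); }
    void signal() { sync.releaseShared(1); }
    void await() throws InterruptedException { sync.acquireSharedInterruptibly(1); }
    int queueLength() { return sync.getQueueLength(); }

    /** Starts n waiters, opens the latch once, and returns how many waiters got through. */
    static int releaseAll(int n) throws InterruptedException {
        BooleanLatch latch = new BooleanLatch();
        AtomicInteger passed = new AtomicInteger();
        Thread[] waiters = new Thread[n];
        for (int i = 0; i < n; i++) {
            waiters[i] = new Thread(() -> {
                try {
                    latch.await();
                    passed.incrementAndGet();
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
            waiters[i].start();
        }
        while (latch.queueLength() < n) {
            Thread.yield(); // wait until every waiter sits in the queue
        }
        latch.signal(); // a single release wakes all of them
        for (Thread t : waiters) {
            t.join();
        }
        return passed.get();
    }
}
```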

Node status

Node status is stored in a volatile int field, waitStatus.

- CANCELLED (1): the node's acquisition has been cancelled. A node enters this state when it times out or is interrupted (for acquires that respond to interrupts); once cancelled, its status never changes again.

- SIGNAL (-1): the successor is waiting to be woken by this node. Before a successor parks, it sets its predecessor's status to SIGNAL.

- CONDITION (-2): the node is waiting on a condition. When another thread calls signal() on that condition, the node is transferred from the condition queue to the synchronization queue, where it waits to acquire the lock.

- PROPAGATE (-3): used in shared mode; the release should propagate, so that not only the node's immediate successor but also the nodes after it may be woken.

- 0: the initial status of a newly enqueued node.

Only CANCELLED is positive, and a positive value means the node does not need to be woken, so in several places the code only checks whether the value is positive or not.
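The sign convention can be captured in two helper predicates. WaitStatus below is a hypothetical class for illustration only (in the JDK the constants live on the nested AbstractQueuedSynchronizer.Node class); its checks mirror the ws > 0 and t.waitStatus <= 0 tests that appear later in shouldParkAfterFailedAcquire and unparkSuccessor.

```java
/** Hypothetical mirror of the Node.waitStatus values listed above (not a JDK class). */
final class WaitStatus {
    static final int CANCELLED = 1;
    static final int SIGNAL = -1;
    static final int CONDITION = -2;
    static final int PROPAGATE = -3;
    static final int INITIAL = 0;

    private WaitStatus() {}

    /** Positive: the node is cancelled and gets skipped (cf. ws > 0). */
    static boolean isCancelled(int ws) {
        return ws > 0;
    }

    /** Zero or negative: the node may still be woken (cf. t.waitStatus <= 0). */
    static boolean canBeWoken(int ws) {
        return ws <= 0;
    }
}
```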

AQS exclusive mode source code

We start with ReentrantLock to study the AQS source code in exclusive mode.

Test code:

```java
import java.util.concurrent.TimeUnit;
import java.util.concurrent.locks.ReentrantLock;

public class AQSTest implements Runnable {

    static ReentrantLock reentrantLock = new ReentrantLock();

    public static void main(String[] args) throws InterruptedException {
        AQSTest aqsTest = new AQSTest();
        Thread t1 = new Thread(aqsTest);
        t1.start();
        Thread t2 = new Thread(aqsTest);
        t2.start();
        t1.join();
        t2.join();
    }

    /**
     * Holds the lock for 5 seconds
     */
    @Override
    public void run() {
        reentrantLock.lock();
        try {
            TimeUnit.SECONDS.sleep(5);
        } catch (InterruptedException e) {
            e.printStackTrace();
        } finally {
            reentrantLock.unlock();
        }
    }
}
```

The test code simulates two threads competing for a lock. One thread acquires the lock first and the other enters the queue and waits. Five seconds later the first thread releases the lock and the second thread acquires it.

Acquire lock

The acquire method in AQS provides the ability to acquire locks in exclusive mode.

```java
/**
 * Acquires in exclusive mode, ignoring interrupts. Implemented
 * by invoking at least once {@link #tryAcquire},
 * returning on success. Otherwise the thread is queued, possibly
 * repeatedly blocking and unblocking, invoking {@link
 * #tryAcquire} until success. This method can be used
 * to implement method {@link Lock#lock}.
 *
 * @param arg the acquire argument. This value is conveyed to
 *        {@link #tryAcquire} but is otherwise uninterpreted and
 *        can represent anything you like.
 */
@ReservedStackAccess
public final void acquire(int arg) {
    if (!tryAcquire(arg) &&
        acquireQueued(addWaiter(Node.EXCLUSIVE), arg))
        selfInterrupt();
}
```

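First, tryAcquire is executed. If it succeeds, acquire returns immediately. If it fails, addWaiter puts the thread into the queue and acquireQueued makes it wait there. acquireQueued returns true if the thread was interrupted while waiting; in that case selfInterrupt() sets the thread's interrupt flag again, because the flag was cleared while the thread was queued.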
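The selfInterrupt() step is easy to overlook. The sketch below uses ReentrantLock, whose lock() ends up in acquire(1): it interrupts a thread while that thread is queued and checks the thread's interrupt flag right after lock() returns. LockInterruptDemo and its method are hypothetical names; the expectation that the flag is set follows from acquire either never clearing the flag or restoring it through selfInterrupt().

```java
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.concurrent.locks.ReentrantLock;

final class LockInterruptDemo {

    /** Returns the waiter's interrupt flag as observed right after lock() returned. */
    static boolean interruptFlagAfterLock() throws InterruptedException {
        ReentrantLock lock = new ReentrantLock();
        AtomicBoolean flagAfterLock = new AtomicBoolean();

        lock.lock(); // the main thread holds the lock, so the waiter has to queue
        Thread waiter = new Thread(() -> {
            lock.lock(); // ends up in acquire(1), which does not give up on interrupt
            try {
                flagAfterLock.set(Thread.currentThread().isInterrupted());
            } finally {
                lock.unlock();
            }
        });
        waiter.start();
        while (!lock.hasQueuedThread(waiter)) {
            Thread.yield(); // wait until the waiter's node is in the queue
        }
        waiter.interrupt(); // only recorded; the waiter keeps waiting for the lock
        lock.unlock();      // now the waiter can acquire
        waiter.join();
        return flagAfterLock.get();
    }
}
```

The waiter is not kicked out of lock() by the interrupt; it simply learns about it afterwards, which is what "ignoring interrupts" means in the acquire Javadoc.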

Enqueuing

addWaiter

addWaiter creates a Node for the current thread with the given mode and puts it in the queue. Note the premise: reaching the enqueue step means contention has already occurred. Keeping that in mind makes the code below easier to read.

```java
/**
 * Creates and enqueues node for current thread and given mode.
 *
 * @param mode Node.EXCLUSIVE for exclusive, Node.SHARED for shared
 * @return the new node
 */
private Node addWaiter(Node mode) {
    // Create the node for the current thread
    Node node = new Node(Thread.currentThread(), mode);
    // Try the fast path of enq; backup to full enq on failure
    // Fast path: since contention has occurred, assume head and tail are already initialized [1]
    Node pred = tail;
    // The tail is not null
    if (pred != null) {
        node.prev = pred;
        // Try to link in behind the current tail [2]
        if (compareAndSetTail(pred, node)) {
            pred.next = node;
            return node;
        }
    }
    // Full path: append at the tail, initializing the queue if needed
    enq(node);
    return node;
}

/**
 * Inserts node into queue, initializing if necessary. See picture above.
 * @param node the node to insert
 * @return node's predecessor
 */
private Node enq(final Node node) {
    // Spin
    for (;;) {
        Node t = tail;
        // tail is null: head and tail have not been initialized yet [3]
        if (t == null) { // Must initialize
            // CAS head from null to a new dummy node [4]
            if (compareAndSetHead(new Node()))
                // tail points to the same node as head
                tail = head;
        } else { // Same logic as the fast path in addWaiter [5]
            node.prev = t;
            if (compareAndSetTail(t, node)) {
                t.next = node;
                return t;
            }
        }
    }
}

/**
 * Head of the wait queue, lazily initialized. Except for
 * initialization, it is modified only via method setHead. Note:
 * If head exists, its waitStatus is guaranteed not to be
 * CANCELLED.
 */
private transient volatile Node head;

/**
 * Tail of the wait queue, lazily initialized. Modified only via
 * method enq to add new wait node.
 */
private transient volatile Node tail;

/**
 * CAS head field. Used only by enq.
 */
private final boolean compareAndSetHead(Node update) {
    return unsafe.compareAndSwapObject(this, headOffset, null, update);
}

/**
 * CAS tail field. Used only by enq.
 */
private final boolean compareAndSetTail(Node expect, Node update) {
    return unsafe.compareAndSwapObject(this, tailOffset, expect, update);
}
```

- [1] The fast-path idea also appears elsewhere in this class. My understanding is that it predicts the branch taken in most cases and tries it first; if the prediction fails, it falls back to the complete code. Here enq is the complete code, which also handles initializing head and tail. Because head and tail are initialized only once in the queue's lifetime, most calls never need that branch, hence the so-called fast path.

- [2] The CAS on tail must succeed before the last step of tail insertion, pointing the old tail's next at the new node, is performed. Inserting at the tail of a doubly linked list takes two steps: A. the new node's prev points to the current tail; B. the current tail's next points to the new node. The AQS synchronization queue also keeps a tail field that must be moved to the new node, so there are three steps in total. Reading the code again: it first sets the node's prev to the presumed tail, then CASes tail to point to the node, and only if that succeeds sets the old tail's next to the node. Under concurrency the CAS can fail, so prev may be reassigned several times, while the old tail's next is written only once, after the CAS on tail has succeeded.

- [3] head and tail are lazily initialized: they are not created until the first enqueue. Initialization sets head to a new Node whose waitStatus is 0 and then assigns tail (tail = head;).

- [4] [5] Initializing head and tail takes two steps, and tail is set only after the CAS on head succeeds. Therefore, whenever tail is non-null, head is guaranteed to be non-null.

Suppose compareAndSetHead has successfully set head. Could another thread modify tail before this thread executes tail = head?

No. compareAndSetHead is only attempted inside enq when tail is null, that is, when the queue has not been initialized. While one thread has set head but not yet tail, tail is still null, so other threads cannot enter the branch that handles a non-null tail. They can only enter the initialization branch, where their compareAndSetHead fails because head is no longer null, so they keep spinning until tail has been set; only then can they enter the non-null-tail branch. The design is worth thinking about: checking whether tail is null is equivalent to checking whether initialization has finished.
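The enqueue protocol above (lazy dummy-head initialization, then the prev link, the CAS on tail, and finally the next link) can be modeled outside AQS. The following is a simplified, hypothetical sketch that uses AtomicReference in place of Unsafe; LazyQueue, its Node and the helper methods are illustrative names, not JDK API.

```java
import java.util.concurrent.atomic.AtomicReference;

/** Simplified model of enq(): lazy dummy head, then prev link, CAS on tail, next link. */
final class LazyQueue {

    static final class Node {
        volatile Node prev;
        volatile Node next;
    }

    private final AtomicReference<Node> head = new AtomicReference<>();
    private final AtomicReference<Node> tail = new AtomicReference<>();

    /** Appends node and returns its predecessor, like enq(). */
    Node enq(Node node) {
        for (;;) {
            Node t = tail.get();
            if (t == null) {
                // Step 1: only one thread can move head from null to the dummy node
                if (head.compareAndSet(null, new Node())) {
                    // Step 2: publish tail afterwards, so tail != null implies head != null
                    tail.set(head.get());
                }
            } else {
                node.prev = t;                     // A: link back to the presumed tail
                if (tail.compareAndSet(t, node)) { // B: claim the tail slot
                    t.next = node;                 // C: forward link, written once, after B
                    return t;
                }
            }
        }
    }

    /** Counts nodes after the dummy head by following prev links from the tail. */
    int sizeFromTail() {
        Node h = head.get();
        int n = 0;
        for (Node p = tail.get(); p != null && p != h; p = p.prev) {
            n++;
        }
        return n;
    }

    /** Counts nodes after the dummy head by following next links from the head. */
    int sizeFromHead() {
        Node h = head.get();
        int n = 0;
        for (Node p = (h == null) ? null : h.next; p != null; p = p.next) {
            n++;
        }
        return n;
    }

    /** Several threads append concurrently; returns {sizeFromTail, sizeFromHead}. */
    static int[] concurrentAppend(int threads, int perThread) throws InterruptedException {
        LazyQueue queue = new LazyQueue();
        Thread[] workers = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < perThread; j++) {
                    queue.enq(new Node());
                }
            });
            workers[i].start();
        }
        for (Thread w : workers) {
            w.join();
        }
        return new int[] {queue.sizeFromTail(), queue.sizeFromHead()};
    }
}
```

Because step C runs only after the CAS succeeds, sizeFromHead() is only guaranteed to be complete once all appends have finished, whereas the prev link of every node that has become the tail is already in place.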

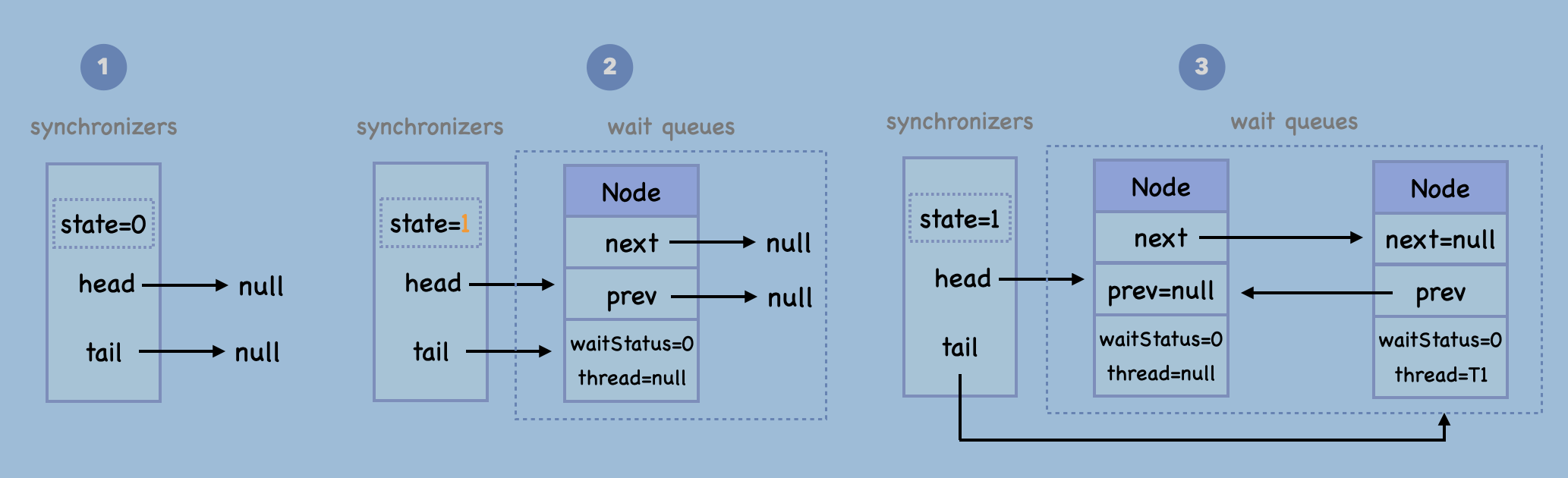

The following figure shows the changes of synchronization queue nodes in this scenario:

1. Initially, head and tail of the synchronization queue are null and state is 0.

2. The first thread acquires the lock without contention, so the queue does not need to be initialized and head and tail stay null. When the second thread tries to acquire the lock, it has to wait in the queue: the first iteration of the spin in enq initializes head and tail. The thread of this node is null, its waitStatus is the initial value 0, and its next and prev are both null.

3. After head and tail are initialized, the newly created Node is appended to the end of the synchronization queue.

acquireQueued

The node is now in the queue, but the thread has not started waiting yet. The natural next step is to make the thread wait: it has been placed in the queue, but it still has to actually go to sleep.

```java
/**
 * Acquires in exclusive uninterruptible mode for thread already in
 * queue. Used by condition wait methods as well as acquire.
 *
 * @param node the node
 * @param arg the acquire argument
 * @return {@code true} if interrupted while waiting
 */
@ReservedStackAccess
final boolean acquireQueued(final Node node, int arg) {
    // Marks whether acquisition failed (checked in finally)
    boolean failed = true;
    try {
        // Whether the thread was interrupted (waiting happens in the spin below;
        // the interrupt status is checked each time the thread wakes up)
        boolean interrupted = false;
        // Spin [1]
        for (;;) {
            // Predecessor of the current node
            final Node p = node.predecessor();
            // If the predecessor is head, it is our turn: try tryAcquire [2]
            if (p == head && tryAcquire(arg)) {
                // This node becomes the new head
                setHead(node);
                // Unlink the old head's next reference
                p.next = null; // help GC
                failed = false;
                return interrupted;
            }
            // Check whether the thread should park, and park it if so [3]
            // shouldParkAfterFailedAcquire: before lying down to rest,
            // make sure someone ahead of me will wake me up
            if (shouldParkAfterFailedAcquire(p, node) &&
                parkAndCheckInterrupt())
                interrupted = true;
        }
    } finally {
        if (failed)
            // Cancel this node's attempt to acquire
            cancelAcquire(node);
    }
}

/**
 * Checks and updates status for a node that failed to acquire.
 * Returns true if thread should block. This is the main signal
 * control in all acquire loops. Requires that pred == node.prev.
 *
 * @param pred node's predecessor holding status
 * @param node the node
 * @return {@code true} if thread should block
 */
private static boolean shouldParkAfterFailedAcquire(Node pred, Node node) {
    // waitStatus of the predecessor
    int ws = pred.waitStatus;
    // Already SIGNAL: return true right away
    if (ws == Node.SIGNAL)
        /*
         * This node has already set status asking a release
         * to signal it, so it can safely park.
         */
        return true;
    // Positive status: the predecessor is cancelled but not yet removed from the queue
    if (ws > 0) {
        /*
         * Predecessor was cancelled. Skip over predecessors and
         * indicate retry.
         */
        do {
            // Skip the cancelled node by moving this node's prev one step toward head
            node.prev = pred = pred.prev;
            // Keep going until a non-cancelled predecessor is found
        } while (pred.waitStatus > 0);
        // Fix up the predecessor's next (ordinary doubly linked list maintenance)
        pred.next = node;
    } else {
        /*
         * waitStatus must be 0 or PROPAGATE. Indicate that we
         * need a signal, but don't park yet. Caller will need to
         * retry to make sure it cannot acquire before parking.
         */
        // CAS the predecessor's status to SIGNAL. We still return false, so the
        // caller tries once more to acquire before actually parking
        compareAndSetWaitStatus(pred, ws, Node.SIGNAL);
    }
    return false;
}

/**
 * CAS waitStatus field of a node.
 */
private static final boolean compareAndSetWaitStatus(Node node,
                                                     int expect,
                                                     int update) {
    return unsafe.compareAndSwapInt(node, waitStatusOffset,
                                    expect, update);
}

/**
 * Sets head of queue to be node, thus dequeuing. Called only by
 * acquire methods. Also nulls out unused fields for sake of GC
 * and to suppress unnecessary signals and traversals.
 *
 * @param node the node
 */
// Makes the given node the head and clears references it no longer needs. Note: no CAS here
private void setHead(Node node) {
    head = node;
    node.thread = null;
    node.prev = null;
}

/**
 * Convenience method to park and then check if interrupted
 *
 * @return {@code true} if interrupted
 */
private final boolean parkAndCheckInterrupt() {
    LockSupport.park(this);
    return Thread.interrupted();
}
```

- [1] The loop spins again whenever setting the predecessor's status does not succeed, or when the predecessor turns out to be cancelled.

- [2] If the predecessor is already head, this node is eligible to compete for the lock. If the attempt fails, it goes back to the waiting logic. If it succeeds, the lock was still held when this node enqueued but has since been released, so this thread now holds the lock, and its own node replaces the current head as the new head.

setHead does no spinning and no CAS; it is just a few plain assignments. Because it runs only after tryAcquire or tryAcquireShared has succeeded, there is no concurrency to worry about. It sets thread and prev to null, since the node holding the lock no longer needs them, and the caller then sets the old head's next to null, so the old head is fully unlinked.

- [3] A node in the synchronization queue decides whether to wake its successor based on its own waitStatus: if it is SIGNAL, it wakes the successor. So before each queued node parks, it must make sure its predecessor's status is SIGNAL, which guarantees that it will be woken later. That is what shouldParkAfterFailedAcquire does.

As its name says, shouldParkAfterFailedAcquire is the precondition check before parking: as soon as it returns true, the thread can wait safely. Looking at the implementation, it returns true only when the predecessor's waitStatus is already SIGNAL. In the other two cases: A. if the predecessor is cancelled, the node's prev is moved toward head past cancelled nodes until a non-cancelled predecessor is found, and the method returns false so the loop spins again; B. if the predecessor's status is 0 or PROPAGATE, it is CASed to SIGNAL and, whether the CAS succeeds or fails, the method returns false so the loop spins again. So unless the predecessor is already SIGNAL, the loop always spins once more, and each spin begins by checking whether the predecessor is head, which gives a node right behind head another quick chance to acquire. As noted in [2], a node that fails to acquire still goes through this waiting logic, which means that by the time the node after head parks, head's waitStatus must already be SIGNAL.
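The three branches of shouldParkAfterFailedAcquire can be exercised in isolation with a single-threaded model. ParkDecision and its Node are hypothetical simplifications: there is no concurrency, and a plain write stands in for the CAS on waitStatus.

```java
/** Single-threaded model of the three branches of shouldParkAfterFailedAcquire. */
final class ParkDecision {
    static final int CANCELLED = 1;
    static final int SIGNAL = -1;

    static final class Node {
        int waitStatus;
        Node prev;
        Node next;

        Node(int waitStatus) {
            this.waitStatus = waitStatus;
        }
    }

    /** Returns true only when it is safe to park; otherwise the caller should retry. */
    static boolean shouldPark(Node pred, Node node) {
        int ws = pred.waitStatus;
        if (ws == SIGNAL) {
            return true; // pred has promised to wake us on release
        }
        if (ws > 0) {
            // Cancelled predecessors: move node.prev toward head until a live node is found
            do {
                node.prev = pred = pred.prev;
            } while (pred.waitStatus > 0);
            pred.next = node;
        } else {
            // 0 or PROPAGATE: ask pred to signal us (AQS does this with a CAS)
            pred.waitStatus = SIGNAL;
        }
        return false; // retry tryAcquire at least once more before parking
    }
}
```

Starting from head(0), two cancelled nodes, and our node, the first call unlinks the cancelled nodes, the second sets head to SIGNAL, and only the third call returns true.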

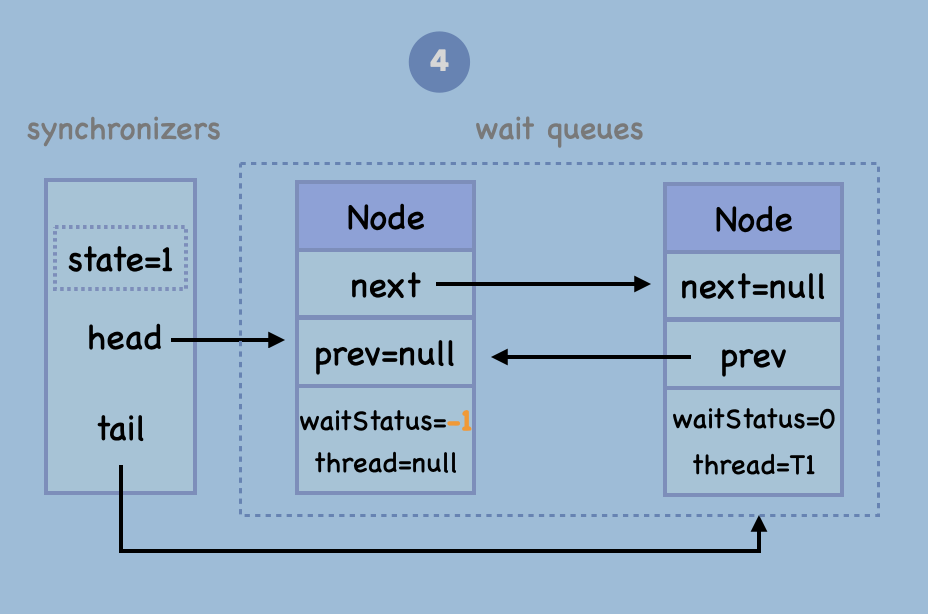

Continue the previous test code and continue to illustrate the changes of node data:

Supplementary notes:

Because the test code has one thread holding the lock and one thread waiting, the queue contains only two nodes: the head and one waiting node. In the waiting node's spin, the check that its predecessor is head is true, so tryAcquire runs on the first iteration. shouldParkAfterFailedAcquire then updates head's waitStatus to SIGNAL and returns false, so tryAcquire runs again on the second iteration. Because the first thread has not released the lock yet, both attempts fail; after the second failure shouldParkAfterFailedAcquire returns true, park is executed, and the thread enters the waiting state.

The finally block at the end of acquireQueued checks whether failed is still true; if it is, cancelAcquire is called to cancel the node. When can failed be true? Others have asked the same question. My own understanding is this: AQS is provided as a framework, and subclasses implement certain methods to build the synchronizer they need. tryAcquire here is implemented by the subclass, so AQS cannot guarantee that it will not throw. If it throws, the spin is exited abnormally with failed still true, and cancelAcquire runs.
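This scenario can be reproduced with a deliberately faulty subclass. FaultySync is hypothetical: its tryAcquire fails once, so acquire() enqueues the caller, and then throws on the next attempt. The exception escapes acquire(), and because the node is cancelled on the way out (cancelAcquire in the JDK 8 code above), the thread no longer appears in getQueuedThreads().

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.locks.AbstractQueuedSynchronizer;

/** Deliberately faulty synchronizer: tryAcquire fails once, then throws. */
final class FaultySync extends AbstractQueuedSynchronizer {
    private final AtomicInteger attempts = new AtomicInteger();

    @Override
    protected boolean tryAcquire(int arg) {
        if (attempts.incrementAndGet() == 1) {
            return false; // the first failure makes acquire() enqueue the caller
        }
        // A later attempt, made from the queued spin, blows up
        throw new IllegalStateException("simulated bug in subclass tryAcquire");
    }

    int attempts() {
        return attempts.get();
    }
}
```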

While we are at it, here is the source of cancelAcquire:

```java
/**
 * Cancels an ongoing attempt to acquire.
 *
 * @param node the node
 */
private void cancelAcquire(Node node) {
    // Ignore if node doesn't exist
    if (node == null)
        return;

    node.thread = null;

    // Skip cancelled predecessors
    // (the same skipping of cancelled nodes as in shouldParkAfterFailedAcquire)
    Node pred = node.prev;
    while (pred.waitStatus > 0)
        node.prev = pred = pred.prev;

    // predNext is the apparent node to unsplice. CASes below will
    // fail if not, in which case, we lost race vs another cancel
    // or signal, so no further action is necessary.
    Node predNext = pred.next;

    // Can use unconditional write instead of CAS here.
    // After this atomic step, other Nodes can skip past us.
    // Before, we are free of interference from other threads.
    node.waitStatus = Node.CANCELLED;

    // If we are the tail, remove ourselves.
    // The tail case is simple: CAS tail back to pred, then CAS pred.next to null
    if (node == tail && compareAndSetTail(node, pred)) {
        compareAndSetNext(pred, predNext, null);
    } else {
        // If successor needs signal, try to set pred's next-link
        // so it will get one. Otherwise wake it up to propagate.
        int ws;
        // Not the tail: if pred is not head, its waitStatus is SIGNAL (or can be CASed
        // to SIGNAL) and it still has a thread, splice pred directly to our successor
        if (pred != head &&
            ((ws = pred.waitStatus) == Node.SIGNAL ||
             (ws <= 0 && compareAndSetWaitStatus(pred, ws, Node.SIGNAL))) &&
            pred.thread != null) {
            Node next = node.next;
            if (next != null && next.waitStatus <= 0)
                // Link pred to our successor, bypassing this node
                compareAndSetNext(pred, predNext, next);
        } else {
            // pred is head (or could not be made SIGNAL): wake the successor directly.
            // This node is already CANCELLED, so unparkSuccessor skips it and
            // wakes the next eligible node
            unparkSuccessor(node);
        }

        node.next = node; // help GC
    }
}
```

Release lock

```java
/**
 * Releases in exclusive mode. Implemented by unblocking one or
 * more threads if {@link #tryRelease} returns true.
 * This method can be used to implement method {@link Lock#unlock}.
 *
 * @param arg the release argument. This value is conveyed to
 *        {@link #tryRelease} but is otherwise uninterpreted and
 *        can represent anything you like.
 * @return the value returned from {@link #tryRelease}
 */
@ReservedStackAccess
public final boolean release(int arg) {
    // Try the release first [1]
    if (tryRelease(arg)) {
        // Once the queue exists, head represents the node holding the lock [2]
        Node h = head;
        if (h != null && h.waitStatus != 0)
            // Wake up the successor's thread
            unparkSuccessor(h);
        return true;
    }
    return false;
}
```

- [1] tryRelease is executed first, and only if it succeeds does release go on to wake the node after head. This precondition matters when reading unparkSuccessor below, so keep it in mind.

- [2] head can be null here if no thread has ever had to queue, because the queue is initialized lazily; that is why the code checks h != null. Otherwise, whether it is the node created during initialization or a node that was later woken and acquired the lock, the node representing the current lock holder has become head. So head can be thought of as the node that holds the lock, even though it no longer stores the thread. The code then checks that head's waitStatus is not 0, because, as seen in the enqueue code, a node sets its predecessor to SIGNAL before parking its own thread.
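Point [1] is easy to observe with ReentrantLock, whose tryRelease returns true only when the hold count drops to 0. In the hypothetical ReleaseDemo below, the owner locks twice, so its first unlock() leaves the lock held and wakes nobody; only the second unlock() lets the queued waiter in.

```java
import java.util.concurrent.locks.ReentrantLock;

final class ReleaseDemo {

    /** Returns {locked after 1st unlock, waiter still queued after 1st unlock, locked at the end}. */
    static boolean[] run() throws InterruptedException {
        ReentrantLock lock = new ReentrantLock();
        lock.lock();
        lock.lock(); // reentrant acquire: state is now 2

        Thread waiter = new Thread(() -> {
            lock.lock();
            lock.unlock();
        });
        waiter.start();
        while (!lock.hasQueuedThread(waiter)) {
            Thread.yield(); // wait until the waiter is queued
        }

        lock.unlock(); // state 2 -> 1: tryRelease returns false, so nobody is woken
        boolean lockedAfterFirst = lock.isLocked();
        boolean waiterStillQueued = lock.hasQueuedThread(waiter);

        lock.unlock(); // state 1 -> 0: tryRelease returns true, the waiter is unparked
        waiter.join();
        return new boolean[] {lockedAfterFirst, waiterStillQueued, lock.isLocked()};
    }
}
```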

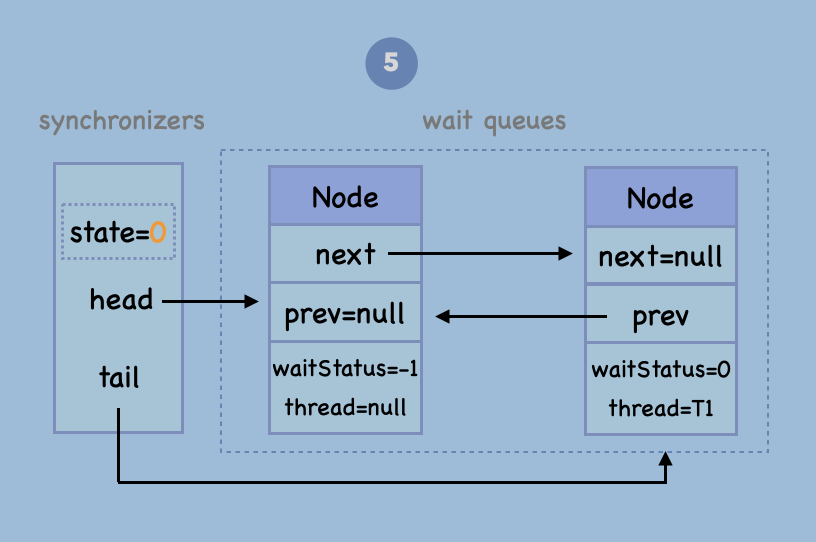

Assuming that the unlock in the test code is executed, the change of node data is as follows:

Waking the successor's thread

unparkSuccessor

```java
/**
 * Wakes up node's successor, if one exists.
 *
 * @param node the node
 */
private void unparkSuccessor(Node node) {
    /*
     * If status is negative (i.e., possibly needing signal) try
     * to clear in anticipation of signalling. It is OK if this
     * fails or if status is changed by waiting thread.
     */
    int ws = node.waitStatus;
    // If the status is negative, try once to CAS it to 0 [1]
    if (ws < 0)
        compareAndSetWaitStatus(node, ws, 0);

    /*
     * Thread to unpark is held in successor, which is normally
     * just the next node. But if cancelled or apparently null,
     * traverse backwards from tail to find the actual
     * non-cancelled successor.
     */
    Node s = node.next;
    // No successor, or the successor is cancelled [2]
    if (s == null || s.waitStatus > 0) {
        s = null;
        for (Node t = tail; t != null && t != node; t = t.prev)
            if (t.waitStatus <= 0)
                s = t;
    }
    if (s != null)
        // Wake up the thread held by that node [3]
        LockSupport.unpark(s.thread);
}
```

- [1] waitStatus is reset to 0 with a single CAS and no spin, so a failed CAS is acceptable.

- [2] The source comment says the thread to wake is normally held by the next node; if next does not qualify (it is null or cancelled), the code searches backward from the tail for a node that has not been cancelled. It is also possible that no node to wake is found at all. The comment does not explain why the search starts from the tail. Recall the analysis of appending a node to the tail (note [2] of the enqueue section): once compareAndSetTail succeeds, the node's prev and the queue's tail are both in place, but pred.next = node is executed only afterwards. So there is a window in which the CAS has succeeded but pred.next has not been set yet. A front-to-back search during that window would hit null and miss the node, while a search from the tail backward along prev has no such problem.

- [3] unpark pairs with the park performed while the thread waited in the queue: the woken thread resumes from the point where it parked, which is inside the spin of acquireQueued. After waking, the spin checks whether its predecessor is head; if so, it tries to acquire the lock; if not, it runs shouldParkAfterFailedAcquire again.
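The window described in [2] can be frozen in a single-threaded sketch. TraversalWindow is a hypothetical model: it performs the prev assignment and the tail update but stops before pred.next = node, then compares a forward lookup from head with an unparkSuccessor-style backward walk from tail.

```java
/** Hypothetical single-threaded snapshot of the enqueue window described in [2]. */
final class TraversalWindow {

    static final class Node {
        Node prev;
        Node next;
        final String name;

        Node(String name) {
            this.name = name;
        }
    }

    final Node head = new Node("head");
    Node tail = head;

    /** Performs the first two enqueue steps but stops before pred.next = node. */
    Node halfEnqueue(String name) {
        Node node = new Node(name);
        Node pred = tail;
        node.prev = pred; // link back to the old tail
        tail = node;      // stands in for the successful compareAndSetTail
        // pred.next = node has not run yet
        return node;
    }

    /** Naive forward lookup of head's successor. */
    Node successorFromHead() {
        return head.next;
    }

    /** unparkSuccessor-style lookup: walk prev links from tail, keeping the earliest node seen. */
    Node successorFromTail() {
        Node s = null;
        for (Node t = tail; t != null && t != head; t = t.prev) {
            s = t;
        }
        return s;
    }
}
```

In this snapshot successorFromHead() returns null while successorFromTail() finds the new node, which is exactly the case the backward traversal guards against.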

Following the unlock in the earlier example: unparkSuccessor first updates head's waitStatus to 0 and then unparks the successor's thread. The woken thread resumes the spin in acquireQueued; since its node's predecessor is head, it calls tryAcquire, which now succeeds, and its node then replaces the head.
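The wake-up relies on LockSupport permits: unpark gives a thread a permit, and park consumes it, returning immediately if one is already available. That is why an unpark that races ahead of the corresponding park is not lost. The hypothetical ParkDemo below shows both orders; the waiter re-checks its condition in a loop, as acquireQueued does, because park may also return spuriously.

```java
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.concurrent.locks.LockSupport;

final class ParkDemo {

    /** unpark() first: the permit is remembered, so the following park() returns at once. */
    static void permitFirst() {
        LockSupport.unpark(Thread.currentThread());
        LockSupport.park(); // consumes the permit instead of blocking
    }

    /** A waiter parks in a loop until a flag is set; unpark() lets it re-check and exit. */
    static boolean wakeParked() throws InterruptedException {
        AtomicBoolean released = new AtomicBoolean();
        Thread waiter = new Thread(() -> {
            while (!released.get()) {
                LockSupport.park(); // may return spuriously, hence the loop
            }
        });
        waiter.start();
        released.set(true);
        LockSupport.unpark(waiter); // works whether or not the waiter has parked yet
        waiter.join(5_000);
        return !waiter.isAlive();
    }
}
```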

The change of queue node data is shown in the figure below:

ReentrantLock source code

ReentrantLock is a reentrant lock built on AQS that supports both fair and non-fair modes.

With the AQS code above analyzed, the ReentrantLock code becomes very simple. Given limited space, only fairness and reentrancy are analyzed below; ReentrantLock will come up again in later articles.

Fair / unfair

Internally, ReentrantLock defines an abstract inner class Sync with two subclasses, FairSync and NonfairSync. As the names suggest, they implement the fair and non-fair behavior respectively.

Threads that have joined the FIFO synchronization queue built into AQS are served in order, which is naturally fair. So where does unfairness come in?

Unfairness here means that a thread that has just arrived to acquire the resource competes with threads already waiting in the queue. Queued threads may be unlucky and keep losing to newcomers; if new threads keep arriving, the queued threads can wait for a very long time, which is the thread starvation problem. That is the unfairness to be solved here. Fairness means resources are granted in the order threads joined the queue, so a newly arriving thread should acquire only after every thread already in the queue. To achieve this, each arriving thread first checks whether other threads are already waiting for the resource; if so, it does not try to acquire and joins the queue directly.
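Once threads are in the queue, handoff follows queue order. The hypothetical FairOrderDemo below holds a fair ReentrantLock, lets worker threads queue one at a time (waiting on hasQueuedThread so the enqueue order is known), then releases the lock and records the order in which the workers acquire it. It only shows the FIFO behavior of threads that are already queued; it does not demonstrate newcomers barging in.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.locks.ReentrantLock;

final class FairOrderDemo {

    /** Queues n workers in a known order behind a held fair lock; returns their acquisition order. */
    static List<Integer> acquisitionOrder(int n) throws InterruptedException {
        ReentrantLock lock = new ReentrantLock(true); // fair mode (FairSync)
        List<Integer> order = new CopyOnWriteArrayList<>();
        List<Thread> workers = new ArrayList<>();

        lock.lock(); // hold the lock so every worker must enqueue
        try {
            for (int i = 1; i <= n; i++) {
                int id = i;
                Thread worker = new Thread(() -> {
                    lock.lock();
                    try {
                        order.add(id);
                    } finally {
                        lock.unlock();
                    }
                });
                worker.start();
                while (!lock.hasQueuedThread(worker)) {
                    Thread.yield(); // worker i is queued before worker i + 1 starts
                }
                workers.add(worker);
            }
        } finally {
            lock.unlock(); // hand off to the first queued worker
        }
        for (Thread worker : workers) {
            worker.join();
        }
        return order;
    }
}
```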

hasQueuedPredecessors

The judgment method is hasQueuedPredecessors:

```java
/**
 * Queries whether any threads have been waiting to acquire longer
 * than the current thread.
 */
public final boolean hasQueuedPredecessors() {
    // The correctness of this depends on head being initialized
    // before tail and on head.next being accurate if the current
    // thread is first in queue.
    Node t = tail; // Read fields in reverse initialization order
    Node h = head;
    Node s;
    return h != t &&
        ((s = h.next) == null || s.thread != Thread.currentThread());
}
```

From the method's comment: it should return true if some thread has been waiting longer than the current thread, and false otherwise.

The method is only a few lines long, but reading it cold can be confusing. With the groundwork laid by the earlier analysis, it becomes easy to follow.

The key is how enq initializes head and tail, as described above. As we already know, that initialization is not atomic; it takes two steps:

```java
if (compareAndSetHead(new Node())) // step 1
    tail = head;                   // step 2
```

So the check can be analyzed by how far that initialization has progressed. There are three scenarios:

1. Not initialized at all, i.e. there has been no contention: head and tail are both null, so h != t is false and the method returns false.

2. Initialization is half done: compareAndSetHead(new Node()) has executed but tail = head; has not. tail is null and head is not, so h != t is true; head's next is still null, so (s = h.next) == null is true and the method returns true. Here some thread has triggered initialization because of contention; although it has not finished enqueuing, it is still treated as being ahead of the current thread.

3. Initialization has finished. For h != t there are several cases:

   - Only the head node is in the queue (whether or not some thread currently holds the lock): head and tail point to the same object, so h != t is false and the method returns false. Two situations fall under this case:

      - No thread is enqueuing. Only the current thread is trying to acquire, so there is no need to queue, and returning false is correct.

      - A thread is enqueuing, but its compareAndSetTail has not succeeded yet. This can also be ignored, because the enqueue order is decided by that CAS; until it succeeds, the code has not placed that thread ahead of anyone.

   - Besides head, there is another node in the queue: the node after head has enqueued successfully, meaning its compareAndSetTail succeeded, so h != t is true. There are two sub-cases:

      - head's next has been set (t.next = node): (s = h.next) == null is false, so the result is s.thread != Thread.currentThread() (true if that second node belongs to another thread, false if it belongs to the current thread).

      - head's next has not been set yet: (s = h.next) == null is true and the method returns true. This is the same as the half-done initialization case: the enqueue is only half complete.

Because the node has interruption, cancellation and timeout, this method cannot guarantee the correctness of the returned results in the case of concurrent changes in the node state.

It is worth understanding the comment "Read fields in reverse initialization order". Some people online have also asked why tail must be read before head.

The essential reason is that head and tail are also initialized in a fixed order: tail is set only after the CAS on head succeeds. The two reads here cannot be reordered, because both tail and head are volatile. Rather than walk through every concurrent interleaving, we only show that reading head first and then tail can go wrong.

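If head were read first and then tail, the following interleaving would cause a problem: the current thread reads head while the queue is still uninitialized and gets null; another thread then runs enq and executes compareAndSetHead(new Node()) followed by tail = head; finally the current thread reads tail and sees the new node.

Now h is null and t is not, so h != t is true, and evaluating (s = h.next) == null throws a NullPointerException. Reading tail first and then head avoids this: tail is written only after head has been set, so a non-null tail guarantees a non-null head, and if both are null, h != t is false and h.next is never evaluated. If you are interested, you can work through the remaining interleavings for the reverse read order yourself.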
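The interleaving above can be replayed deterministically by running the "other thread" step between the two reads through a Runnable hook. ReadOrderDemo, its methods and the hook are hypothetical; initialize() uses plain assignments in the same order enq uses (head first, then tail) instead of a real CAS.

```java
// Hypothetical replay of the read-order problem. The concurrent step is
// injected between the two volatile reads, so no real threads are needed.
public class ReadOrderDemo {
    static final class Node {
        volatile Thread thread;
        volatile Node next;
    }

    volatile Node head;
    volatile Node tail;

    // What enq does on an empty queue: set head first, then tail = head
    void initialize() {
        head = new Node();
        tail = head;
    }

    // Wrong order: read head, then tail
    boolean headThenTail(Runnable between) {
        Node h = head;
        between.run(); // another thread initializes the queue here
        Node t = tail;
        Node s;
        return h != t &&
            ((s = h.next) == null || s.thread != Thread.currentThread());
    }

    // JDK order: read tail, then head
    boolean tailThenHead(Runnable between) {
        Node t = tail;
        between.run(); // same concurrent initialization
        Node h = head;
        Node s;
        return h != t &&
            ((s = h.next) == null || s.thread != Thread.currentThread());
    }

    public static void main(String[] args) {
        ReadOrderDemo a = new ReadOrderDemo();
        try {
            a.headThenTail(a::initialize);
        } catch (NullPointerException e) {
            System.out.println("head-then-tail: NullPointerException");
        }

        ReadOrderDemo b = new ReadOrderDemo();
        System.out.println("tail-then-head: " + b.tailThenHead(b::initialize)); // true
    }
}
```

With tail read first, the same interleaving returns true, which is the conservative answer from case 2 (initialization in progress) rather than a crash.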

Implementation

The FairSync class in ReentrantLock provides the fair-lock behavior. Its core is tryAcquire, the template method that AQS subclasses override:

```java
/**
 * Fair version of tryAcquire.  Don't grant access unless
 * recursive call or no waiters or is first.
 */
@ReservedStackAccess
protected final boolean tryAcquire(int acquires) {
    final Thread current = Thread.currentThread();
    int c = getState();
    // The lock is currently free
    if (c == 0) {
        // Check hasQueuedPredecessors first; only when it returns false
        // (nobody is queued ahead of us) compete for the lock with a CAS
        if (!hasQueuedPredecessors() &&
            compareAndSetState(0, acquires)) {
            // Record the current thread as the lock owner
            setExclusiveOwnerThread(current);
            return true;
        }
    }
    // The lock is held: check whether the current thread already owns it
    else if (current == getExclusiveOwnerThread()) {
        int nextc = c + acquires;
        if (nextc < 0) // hold count overflow
            throw new Error("Maximum lock count exceeded");
        setState(nextc);
        return true;
    }
    return false;
}
```

The only difference between the fair lock's tryAcquire and the non-fair version (Sync#nonfairTryAcquire) is the !hasQueuedPredecessors() check; everything else is identical. Once you understand hasQueuedPredecessors, the fair lock implementation is easy to follow.
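To see that fairness really comes down to that single check, the sketch below builds a small lock on AbstractQueuedSynchronizer whose tryAcquire follows the same shape as FairSync#tryAcquire, with a hypothetical `fair` flag deciding whether hasQueuedPredecessors() is consulted. SketchLock is an illustration only, not ReentrantLock itself; its tryRelease follows ReentrantLock's release logic.

```java
import java.util.concurrent.locks.AbstractQueuedSynchronizer;

// Hypothetical AQS-based lock contrasting the fair and non-fair acquire paths.
public class SketchLock {
    private static final class Sync extends AbstractQueuedSynchronizer {
        private final boolean fair; // hypothetical switch, not a JDK field

        Sync(boolean fair) { this.fair = fair; }

        @Override
        protected boolean tryAcquire(int acquires) {
            final Thread current = Thread.currentThread();
            int c = getState();
            if (c == 0) {
                // The only fairness difference: a fair lock defers to queued threads
                if ((!fair || !hasQueuedPredecessors()) &&
                        compareAndSetState(0, acquires)) {
                    setExclusiveOwnerThread(current);
                    return true;
                }
            } else if (current == getExclusiveOwnerThread()) {
                int nextc = c + acquires; // reentrant acquire
                if (nextc < 0) throw new Error("Maximum lock count exceeded");
                setState(nextc);
                return true;
            }
            return false;
        }

        @Override
        protected boolean tryRelease(int releases) {
            int c = getState() - releases;
            if (Thread.currentThread() != getExclusiveOwnerThread())
                throw new IllegalMonitorStateException();
            boolean free = c == 0;
            if (free) setExclusiveOwnerThread(null);
            setState(c);
            return free;
        }

        boolean isLocked() { return getState() != 0; }
    }

    private final Sync sync;

    public SketchLock(boolean fair) { sync = new Sync(fair); }

    public void lock() { sync.acquire(1); }
    public void unlock() { sync.release(1); }
    public boolean isLocked() { return sync.isLocked(); }

    public static void main(String[] args) throws InterruptedException {
        SketchLock lock = new SketchLock(true);
        int[] counter = {0};
        Thread[] workers = new Thread[4];
        for (int i = 0; i < workers.length; i++) {
            workers[i] = new Thread(() -> {
                for (int j = 0; j < 1000; j++) {
                    lock.lock();
                    try { counter[0]++; } finally { lock.unlock(); }
                }
            });
            workers[i].start();
        }
        for (Thread w : workers) w.join();
        System.out.println(counter[0]); // 4000
    }
}
```

Fair or not, the counter ends at 4000; the flag only changes which thread gets the lock next when it becomes free, and this small demo does not try to observe that ordering.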

Reentrancy

Reentrancy means a thread can acquire the same lock multiple times, and it must then release it the same number of times. This is implemented in both Sync#nonfairTryAcquire and FairSync#tryAcquire: the check if (current == getExclusiveOwnerThread()) detects that the current thread already owns the lock and, if so, adds to state.
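One way to observe the accumulated state from outside is ReentrantLock#getHoldCount(), which reports how many times the current thread holds the lock. In this sketch (the class and method names are made up), each recursive call locks the same lock again; a non-reentrant lock would deadlock on the second lock().

```java
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical demo: a recursive method that re-acquires the same lock.
public class ReentrancyDemo {
    private static final ReentrantLock LOCK = new ReentrantLock();

    // Each nested lock() by the owning thread adds 1 to state
    static int depthCount(int depth) {
        LOCK.lock();
        try {
            if (depth == 1) {
                return LOCK.getHoldCount(); // hold count at the deepest call
            }
            return depthCount(depth - 1);
        } finally {
            LOCK.unlock(); // one unlock per lock
        }
    }

    public static void main(String[] args) {
        System.out.println(depthCount(3));   // 3
        System.out.println(LOCK.isLocked()); // false: every hold was released
    }
}
```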

tryRelease handles the reentrant case as well:

```java
protected final boolean tryRelease(int releases) {
    int c = getState() - releases;
    // Only the owning thread may release the lock
    if (Thread.currentThread() != getExclusiveOwnerThread())
        throw new IllegalMonitorStateException();
    boolean free = false;
    if (c == 0) {
        free = true;
        // Ownership is cleared only when the hold count drops to 0
        setExclusiveOwnerThread(null);
    }
    setState(c);
    return free;
}
```
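The two rules in tryRelease can be observed through the public ReentrantLock API: a thread that does not own the lock gets IllegalMonitorStateException, and the lock stays locked until every nested lock() has a matching unlock(). ReleaseDemo and nonOwnerUnlockThrows are hypothetical names used only for this sketch.

```java
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical demo of the owner check and the "free only at 0" rule.
public class ReleaseDemo {
    // Returns true if unlock() from a thread that does not own `lock` throws
    static boolean nonOwnerUnlockThrows(ReentrantLock lock) throws InterruptedException {
        boolean[] threw = {false};
        Thread stranger = new Thread(() -> {
            try {
                lock.unlock();
            } catch (IllegalMonitorStateException e) {
                threw[0] = true; // the owner check in tryRelease fired
            }
        });
        stranger.start();
        stranger.join(); // join() makes threw[0] visible to this thread
        return threw[0];
    }

    public static void main(String[] args) throws InterruptedException {
        ReentrantLock lock = new ReentrantLock();
        lock.lock();
        lock.lock(); // state == 2
        System.out.println("non-owner unlock threw: " + nonOwnerUnlockThrows(lock)); // true
        lock.unlock(); // state == 1: tryRelease returns false, still held
        System.out.println("after first unlock: " + lock.isLocked()); // true
        lock.unlock(); // state == 0: owner cleared, tryRelease returns true
        System.out.println("after second unlock: " + lock.isLocked()); // false
    }
}
```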

Summary

This article analyzed the core structure and source code of AQS in detail, then used ReentrantLock as a concrete synchronizer to deepen that understanding, laying the groundwork for reading the source of other JUC classes in later articles. The material covered here is the foundation on which the JDK's various synchronizers are built. My time is limited, so please leave a comment to point out any mistakes.

This article has also been published on my WeChat official account. Thanks for reading; I look forward to hearing from you.
