LVS test report

Test plan

- Basic Function Testing

- Traffic stress test

- Response time test

- Configuration Correctness Test

- Disaster Recovery Testing

Test points

- Basic Function Testing
  - Client IP address correctness
  - Real Server Internet access (including iptables rule priorities)
- Traffic stress test
  - Peak traffic test
    - CPU, network card IO, soft interrupts, etc. once traffic reaches a given level
  - Peak connection count test
    - Memory, CPU, etc. once the number of connections reaches a given level
- Response time test
  - Response time before and after adding LVS
- Configuration Correctness Test
  - Expected behavior of the RR algorithm (basic function)
  - Performance under multiple configurations
    - Whether adding tens of thousands of rules affects forwarding performance
- Disaster Recovery Testing
  - Configuration export/import test

Test environment

- CPU: Intel(R) Xeon(R) CPU E5506 @ 2.13GHz x 8

- Memory: 16 GB

- Network card: negotiated 1000baseT-FD

- System: Ubuntu 12.04

- Kernel: 3.5.0-23-generic

Measured results

1. Basic Functional Testing

Client Address Correctness

Access path: web browser (Zhuhai, 113.106.x.x) -> LVS (58.215.138.160) -> RS (10.20.165.174)

The RS Nginx log is as follows:

    113.106.x.x - - [12/Feb/2015:00:18:48 +0800] "GET / HTTP/1.1" 200 612 "

Conclusion:

In NAT mode, the Real Server sees the client's real IP address, so the client address is passed through correctly.
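The check above can be automated roughly as follows. This is a minimal sketch, assuming the default Nginx log format in which the remote address is the first field. The LVS addresses are the ones given in this report; the sample client address 203.0.113.7 is a placeholder, because the real one is masked as 113.106.x.x.

```python
import ipaddress

# LVS public and intranet addresses as given in this report.
LVS_ADDRESSES = {"58.215.138.160", "10.20.165.121"}


def client_ip_from_log(line: str) -> str:
    """Return the first field of an Nginx access-log line (the remote address)."""
    return line.split(" ", 1)[0]


def is_real_client(line: str) -> bool:
    """True if the logged address is well formed and is not an LVS address."""
    ip = client_ip_from_log(line)
    ipaddress.ip_address(ip)  # raises ValueError on a malformed address
    return ip not in LVS_ADDRESSES


# Placeholder client address; the rest of the line follows the log above.
sample = '203.0.113.7 - - [12/Feb/2015:00:18:48 +0800] "GET / HTTP/1.1" 200 612'
print(is_real_client(sample))
```

If the log showed one of the LVS addresses instead, the source address would have been rewritten somewhere on the path and the test would fail.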

Real Server Accessing the Internet

The RS network is configured as follows. The gateway is the intranet IP of LVS.

    auto eth0
    iface eth0 inet static
        address 10.20.165.173
        gateway 10.20.165.121
        netmask 255.255.255.0

Add the following iptables rule on the LVS host:

/sbin/iptables -t nat -I POSTROUTING -o eth0 -j MASQUERADE

Measurements:

    zhangbo3@rise-vm-173:~$ ping 8.8.8.8
    PING 8.8.8.8 (8.8.8.8) 56(84) bytes of data.
    64 bytes from 8.8.8.8: icmp_req=1 ttl=44 time=62.0 ms
    64 bytes from 8.8.8.8: icmp_req=2 ttl=44 time=62.2 ms

2. Traffic and Stress Testing

High Flow Testing

A high-traffic test was run against a single LVS, with a concurrency of 200 and 20,000 requests in total.

Only network card traffic was measured; memory, disk, and CPU user time were not recorded.

Each request returns a 7 MB response.

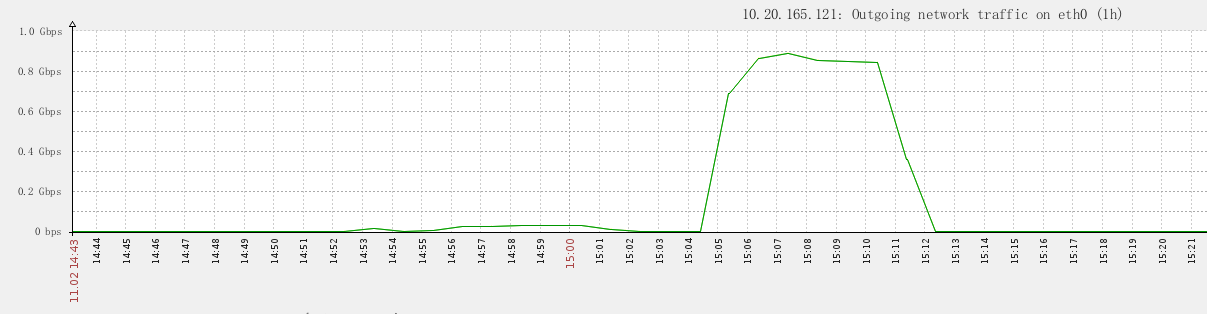

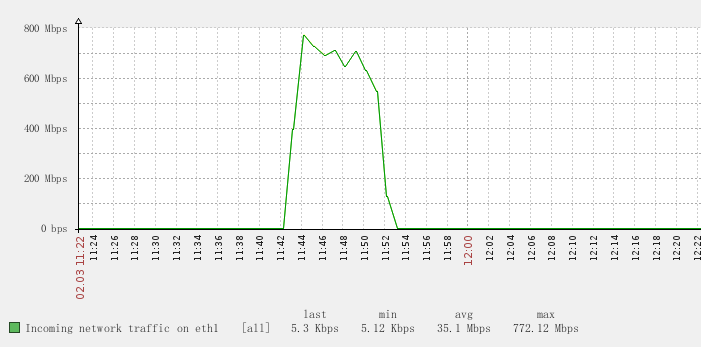

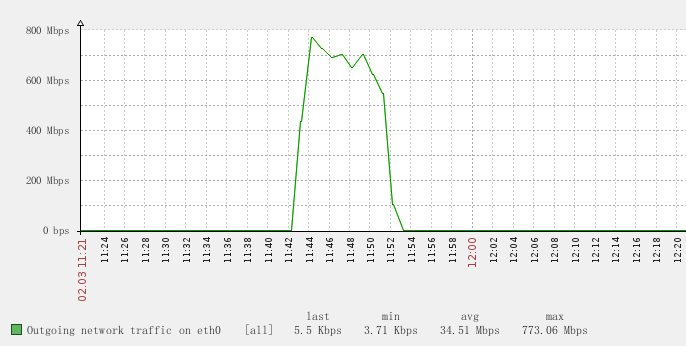

Peak traffic under load: 800 Mbps

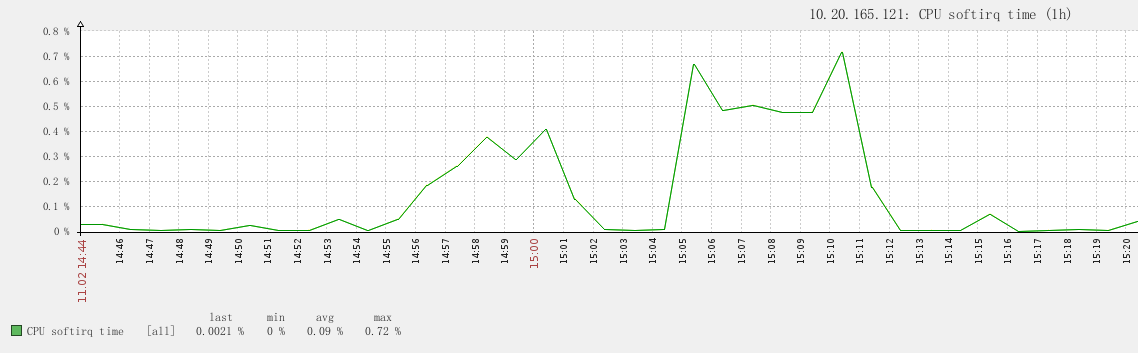

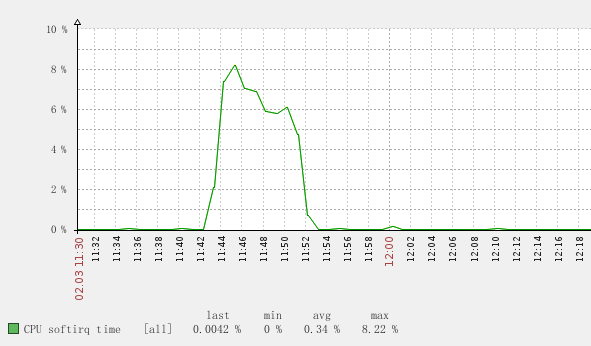

Soft interrupt usage at this time

The measured soft interrupt peak is only 0.7%.

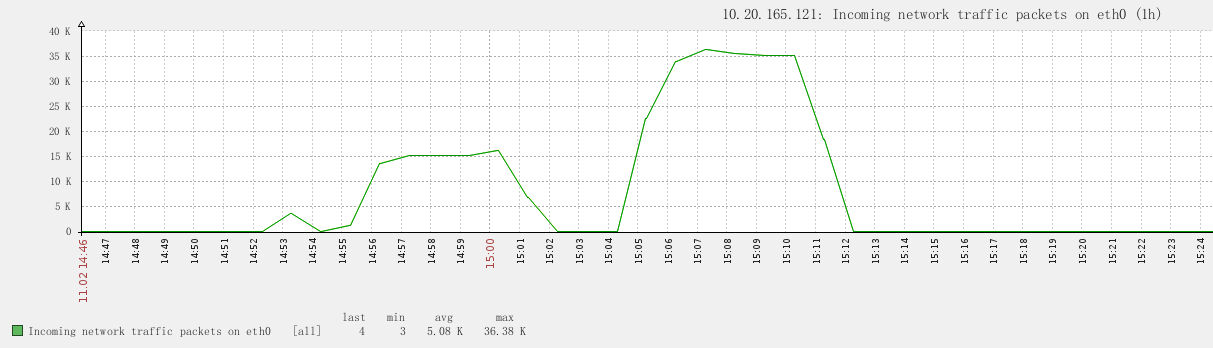

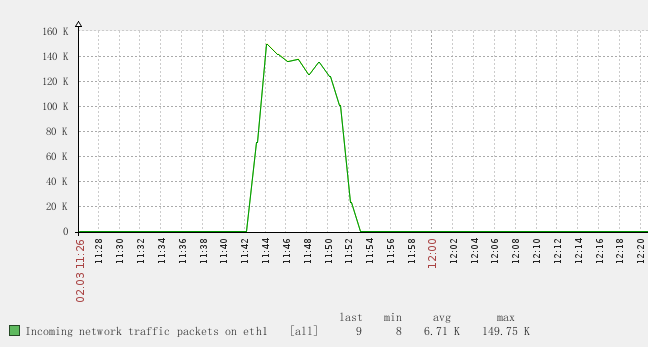

Number of IN packets at this time

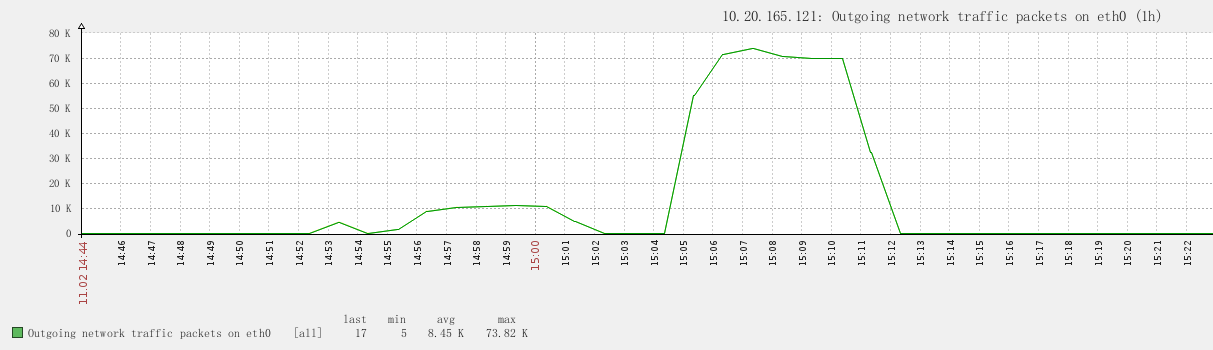

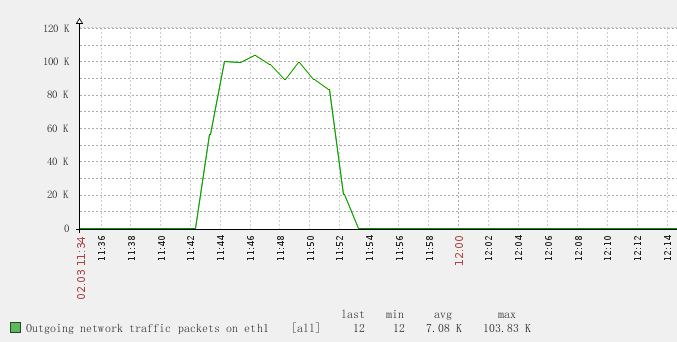

Number of OUT packets at this time

The combined IN + OUT peak is 100 Kpps.

High-concurrency small-packet testing

A high-concurrency small-packet test was run against a single LVS, with a concurrency of 80,000 and 4 KW (40 million) requests in total.

Each request returns a 2 KB response.

- IN traffic: peak 772 Mbps, average about 750 Mbps

- OUT traffic: peak 773 Mbps, average about 750 Mbps

- IN packet rate: peak 149 Kpps, average about 140 Kpps

- OUT packet rate: peak 103 Kpps, average about 90 Kpps

- Soft interrupt usage: peak 8.2%, average about 7%

Test results:

LVS performance was tested in two scenarios: high traffic with large responses, and high concurrency with small packets.

Under high traffic, the network card is fully utilized with no packet loss or errors. Traffic on the 1000 Mbps network card reaches 800 Mbps, and soft interrupt usage stays at about 0.7%.

Under high-concurrency small packets, bandwidth is about 750 Mbps, the combined IN + OUT packet rate peaks at about 250 Kpps (approaching the network card limit), and the average soft interrupt usage is 7%.

The results in both scenarios show that LVS performs normally within the rated capacity of the network card, under both high traffic and high concurrency.

All of the tests above were run with the soft interrupts of the multi-queue network card bound to specific CPU cores.
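The small-packet figures reported above can be cross-checked with a little arithmetic. The sketch below uses only numbers from this report, plus the standard 20 bytes of per-frame Ethernet overhead on the wire (preamble, start delimiter, and inter-frame gap) to estimate the theoretical packet rate of a gigabit link for comparison.

```python
def avg_packet_bytes(mbps: float, kpps: float) -> float:
    """Average bytes per packet for a given bit rate (Mbps) and packet rate (Kpps)."""
    return (mbps * 1_000_000 / 8) / (kpps * 1_000)


def max_pps(line_rate_bps: float, frame_bytes: int) -> float:
    """Theoretical Ethernet packets per second for a given frame size.

    Each frame occupies 20 extra bytes on the wire:
    7-byte preamble, 1-byte start delimiter, 12-byte inter-frame gap.
    """
    return line_rate_bps / ((frame_bytes + 20) * 8)


peak_total_kpps = 149 + 103                  # peak IN + peak OUT
print(peak_total_kpps)                       # 252, matching the "250 Kpps" summary
print(round(avg_packet_bytes(750, 140)))     # IN: average bytes per packet
print(round(avg_packet_bytes(750, 90)))      # OUT: average bytes per packet
print(round(max_pps(1e9, 64)))               # gigabit limit with minimum-size frames
```

With average packets of several hundred bytes, the 750 Mbps of bandwidth is what brings the test close to the capacity of the card. The packet rate is well below what a gigabit link can carry with minimum-size frames.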

3. Response time test

Response time before and after adding LVS

Concurrency of 10,000, with 100,000 requests in total

With one Real Server behind LVS:

    Concurrency Level: 10000
    Time taken for tests: 13.198 seconds
    Time per request: 0.132 [ms]

Testing the Real Server directly, without LVS:

    Concurrency Level: 10000
    Time taken for tests: 14.474 seconds
    Time per request: 0.145 [ms]

Summary:

Adding LVS has little effect on response time.
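The per-request times can be reproduced from the total test durations. This short sketch assumes 100,000 requests per run, which is the count implied by the reported figures (13.198 s at 0.132 ms per request).

```python
def per_request_ms(total_seconds: float, requests: int) -> float:
    """Mean time per request across all concurrent requests, in milliseconds."""
    return total_seconds / requests * 1000


REQUESTS = 100_000  # implied by 13.198 s / 0.132 ms

with_lvs = per_request_ms(13.198, REQUESTS)
without_lvs = per_request_ms(14.474, REQUESTS)
change_pct = (with_lvs - without_lvs) / without_lvs * 100

print(f"{with_lvs:.3f} ms vs {without_lvs:.3f} ms ({change_pct:+.1f}%)")
```

The run through LVS is slightly faster than the direct run in this measurement. A difference of this size between two single runs is most plausibly noise, which is consistent with the summary.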

4. Configuration Correctness Testing

Expected value of RR algorithm (basic function)

Two machines with separate IP addresses open a large number of long-lived connections to LVS. The resulting connection distribution on LVS is as follows.

    zhangbo3@rise-rs-135:/usr/local/nginx/conf$ sudo ipvsadm -ln
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  58.215.x.x:80 rr
      -> 10.20.165.173:80             Masq    1      3332       14797
      -> 10.20.165.174:80             Masq    1      3198       14931

Summary:

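The RR algorithm schedules per connection rather than per source IP. Even with only two source IPs, the two Real Servers receive nearly equal shares (3332 vs. 3198 active connections, about 51% vs. 49%), as expected.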
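To illustrate why round-robin scheduling gives an even split regardless of where the connections come from, here is a minimal simulation. It models only unweighted round-robin with equal weights and is not the kernel's IPVS implementation. The two client addresses are hypothetical documentation addresses.

```python
from collections import Counter
from itertools import cycle

# Real Servers as listed in the ipvsadm output of this report.
REAL_SERVERS = ["10.20.165.173:80", "10.20.165.174:80"]


def schedule_rr(sources, servers):
    """Assign each new connection to the next server in turn, ignoring its source IP."""
    ring = cycle(servers)
    return [(src, next(ring)) for src in sources]


# Two hypothetical clients, each opening connections in bursts of three.
sources = (["198.51.100.1"] * 3 + ["198.51.100.2"] * 3) * 1000
assignments = schedule_rr(sources, REAL_SERVERS)

per_server = Counter(server for _, server in assignments)
per_pair = Counter(assignments)

print(per_server)  # total connections per Real Server
print(per_pair)    # connections per (client, Real Server) pair
```

Each Real Server ends up with the same number of connections, and each client reaches both Real Servers. A source-hashing scheduler would instead pin each client to one Real Server.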

Performance under multiple configurations

Baseline: single-machine stress test data under the normal configuration

    Concurrency Level:      10000
    Time taken for tests:   5.530 seconds
    Complete requests:      50000
    Failed requests:        0
    Write errors:           0
    Keep-Alive requests:    49836
    Total transferred:      42149180 bytes
    HTML transferred:       30600000 bytes
    Requests per second:    9040.98 [#/sec] (mean)
    Time per request:       1106.074 [ms] (mean)
    Time per request:       0.111 [ms] (mean, across all concurrent requests)
    Transfer rate:          7442.78 [Kbytes/sec] received

Stress test data after adding 10,000 port mappings to LVS

    Concurrency Level:      10000
    Time taken for tests:   5.588 seconds
    Complete requests:      50000
    Failed requests:        0
    Write errors:           0
    Keep-Alive requests:    49974
    Total transferred:      42149870 bytes
    HTML transferred:       30600000 bytes
    Requests per second:    8948.49 [#/sec] (mean)
    Time per request:       1117.506 [ms] (mean)
    Time per request:       0.112 [ms] (mean, across all concurrent requests)
    Transfer rate:          7366.76 [Kbytes/sec] received

Summary:

Adding ten thousand port mappings has no noticeable effect on performance: throughput goes from 9040.98 to 8948.49 requests per second, about 1% lower.
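A comparison like this can be scripted by extracting the relevant numbers from the two benchmark reports. The sketch below is one way to do it and assumes the reports use the `label: value` layout shown above. The helper name `ab_metric` is made up for this example, and only the lines needed for the comparison are reproduced.

```python
import re


def ab_metric(report: str, label: str) -> float:
    """Extract the first number that follows `label:` in a benchmark report."""
    match = re.search(rf"{re.escape(label)}:\s+([\d.]+)", report)
    if match is None:
        raise ValueError(f"{label!r} not found")
    return float(match.group(1))


# Excerpts of the two reports above.
baseline = """Time taken for tests:   5.530 seconds
Complete requests:      50000
Requests per second:    9040.98 [#/sec] (mean)"""

with_rules = """Time taken for tests:   5.588 seconds
Complete requests:      50000
Requests per second:    8948.49 [#/sec] (mean)"""

rps_before = ab_metric(baseline, "Requests per second")
rps_after = ab_metric(with_rules, "Requests per second")
drop_pct = (rps_before - rps_after) / rps_before * 100

print(f"{rps_before} -> {rps_after} req/s ({drop_pct:.2f}% lower)")
```

A drop of about 1% between two single runs is small enough that repeated runs would be needed to separate it from normal run-to-run variation.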

5. Disaster recovery testing

Connection state testing

With keepalived running as a master/backup pair and LVS connection state synchronization enabled, the following was observed: connections in the SYN_RECV and TIME_WAIT states were synchronized to the backup, while connections in the ESTABLISHED state were not synchronized at first. State changes during the handshake and on close are synchronized, but an ESTABLISHED connection is synchronized only after it has received a certain number of packets. By default the threshold is 3 packets, and the connection is then synchronized again once every 50 packets (the defaults of the kernel's net.ipv4.vs.sync_threshold setting, "3 50").
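The packet-count rule for established connections can be sketched as follows. This is a simplified model based on the kernel documentation for `sync_threshold` (a connection is synchronized whenever its incoming packet count modulo the period equals the threshold). The real kernel logic handles more cases, such as state changes.

```python
def should_sync(packets_seen: int, threshold: int = 3, period: int = 50) -> bool:
    """Simplified sync rule: packet count modulo the period equals the threshold."""
    return packets_seen % period == threshold


# Packet counts (out of the first 150) at which an established connection is synced.
sync_points = [n for n in range(1, 151) if should_sync(n)]
print(sync_points)  # [3, 53, 103]
```

This explains the observation above: a freshly established connection that has exchanged fewer than three packets has not yet been sent to the backup.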

Configuration export import test

Export the current configuration:

    sudo ipvsadm -Sn
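An export/import round trip can be verified by comparing the dump taken before the export with the dump taken after re-importing it (for example with `ipvsadm -R`, which reads rules from standard input). The sketch below compares two dumps while ignoring line order. The sample lines only illustrate the saved-rule format, and the virtual IP 192.0.2.10 is a placeholder because the real one is masked in this report.

```python
def normalize(dump: str) -> list:
    """Strip whitespace, drop blank lines, and sort so that rule order does not matter."""
    return sorted(line.strip() for line in dump.splitlines() if line.strip())


def same_config(before: str, after: str) -> bool:
    """True if both dumps contain exactly the same set of rule lines."""
    return normalize(before) == normalize(after)


# Illustrative dumps: same rules, Real Servers listed in a different order.
exported = """-A -t 192.0.2.10:80 -s rr
-a -t 192.0.2.10:80 -r 10.20.165.173:80 -m -w 1
-a -t 192.0.2.10:80 -r 10.20.165.174:80 -m -w 1
"""

reimported = """-A -t 192.0.2.10:80 -s rr
-a -t 192.0.2.10:80 -r 10.20.165.174:80 -m -w 1
-a -t 192.0.2.10:80 -r 10.20.165.173:80 -m -w 1
"""

print(same_config(exported, reimported))  # True
```

Sorting is a deliberate simplification: it treats two configurations as equal even if the Real Servers are listed in a different order.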