WeChat official account: big data development and operation framework


Drawing on the official documentation and other online material, this article explains Flink's asynchronous I/O API for accessing external data stores. Readers who are not familiar with asynchronous or event-driven programming may want to review Futures and event-driven programming first.

Official Flink documentation on asynchronous I/O:

https://ci.apache.org/projects/flink/flink-docs-release-1.13/zh/docs/dev/datastream/operators/asyncio/
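For readers new to Futures, here is a small plain-Java sketch (JDK only, no Flink) that contrasts the two styles the async I/O API builds on: blocking on `Future.get()` versus registering a callback on a `CompletableFuture`. `FutureVsCallback` and `slowLookup` are hypothetical names; `slowLookup` merely stands in for a database query.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

class FutureVsCallback {

    // Hypothetical slow lookup standing in for a database query
    static String slowLookup(int key) {
        try {
            Thread.sleep(100); // simulated I/O latency
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return "name-" + key;
    }

    // Blocking style: the calling thread waits inside get() until the lookup ends
    static String blocking(ExecutorService pool, int key) throws Exception {
        Future<String> future = pool.submit(() -> slowLookup(key));
        return future.get();
    }

    // Callback style: register what to do with the result and return at once;
    // thenApply runs only after the lookup has completed
    static CompletableFuture<String> withCallback(ExecutorService pool, int key) {
        return CompletableFuture
                .supplyAsync(() -> slowLookup(key), pool)
                .thenApply(String::toUpperCase);
    }

    public static void main(String[] args) throws Exception {
        ExecutorService pool = Executors.newFixedThreadPool(2);
        try {
            System.out.println(blocking(pool, 1));  // name-1
            CompletableFuture<String> pending = withCallback(pool, 2);
            System.out.println("caller continues while the lookup runs");
            System.out.println(pending.join());      // NAME-2
        } finally {
            pool.shutdown();
        }
    }
}
```

Flink's `asyncInvoke` follows the second style: it starts the request, hands the result to a callback, and returns without waiting.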

The program works as follows: it receives user records (id, userNum, age) from a socket stream, uses each record's userNum field to look up the userName in the MySQL dictionary table userCode, fills the userName it finds back into the user record, and finally saves the enriched record into the MySQL table userInfo.
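The lookup-and-backfill step itself can be sketched synchronously in plain Java, with a `HashMap` standing in for the userCode table. The class name `EnrichSketch` and the sample names are hypothetical; the Flink job performs the same step asynchronously against MySQL.

```java
import java.util.HashMap;
import java.util.Map;

class EnrichSketch {

    // Parses "id,userNum,age", looks userNum up in the dictionary and returns
    // the backfilled row as "id,userNum,userName,age"
    static String enrich(String line, Map<Integer, String> userCode) {
        String[] fields = line.split(",");
        int id = Integer.parseInt(fields[0].trim());
        int userNum = Integer.parseInt(fields[1].trim());
        int age = Integer.parseInt(fields[2].trim());
        // null when the dictionary has no entry, just like a SQL query with no matching row
        String userName = userCode.get(userNum);
        return id + "," + userNum + "," + userName + "," + age;
    }

    public static void main(String[] args) {
        // Hypothetical dictionary rows standing in for the MySQL table userCode (id -> name)
        Map<Integer, String> userCode = new HashMap<>();
        userCode.put(1001, "alice");
        userCode.put(1002, "bob");
        System.out.println(enrich("1,1001,23", userCode)); // 1,1001,alice,23
        System.out.println(enrich("2,1002,24", userCode)); // 2,1002,bob,24
    }
}
```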

1. Table creation statements

DDL for the target table userInfo and the dictionary table userCode:

CREATE TABLE `userInfo` (
  `id` int(11) NOT NULL,
  `userNum` int(11) DEFAULT NULL,
  `userName` varchar(64) COLLATE utf8mb4_unicode_ci DEFAULT NULL,
  `age` int(11) DEFAULT NULL,
  PRIMARY KEY (`id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4 COLLATE=utf8mb4_unicode_ci;

CREATE TABLE `userCode` (
  `id` int(11) NOT NULL,
  `name` varchar(50) COLLATE utf8mb4_unicode_ci DEFAULT NULL,
  PRIMARY KEY (`id`)
) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4 COLLATE=utf8mb4_unicode_ci;

2. pom.xml (Alibaba Druid is added as the JDBC connection pool):

<dependencies>
    <dependency>
        <groupId>org.apache.flink</groupId>
        <artifactId>flink-streaming-java_${scala.binary.version}</artifactId>
        <version>${flink.version}</version>
    </dependency>
    <dependency>
        <groupId>org.apache.flink</groupId>
        <artifactId>flink-java</artifactId>
        <version>${flink.version}</version>
    </dependency>
    <dependency>
        <groupId>org.apache.flink</groupId>
        <artifactId>flink-clients_${scala.binary.version}</artifactId>
        <version>${flink.version}</version>
    </dependency>
    <dependency>
        <groupId>org.apache.flink</groupId>
        <artifactId>flink-connector-kafka_${scala.binary.version}</artifactId>
        <version>${flink.version}</version>
    </dependency>
    <dependency>
        <groupId>mysql</groupId>
        <artifactId>mysql-connector-java</artifactId>
        <version>5.1.44</version>
    </dependency>
    <dependency>
        <groupId>com.alibaba</groupId>
        <artifactId>druid</artifactId>
        <version>1.1.20</version>
    </dependency>
</dependencies>

3. The overall structure of the code is as follows:

Main class: FlinkMysqlAsyncIOMain

Asynchronous I/O function that queries MySQL: MysqlAsyncRichMapFunction

Sink that writes the results to MySQL: MysqlSinkFunction

Database utility class: DBUtils

User entity class: User
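One parameter in FlinkMysqlAsyncIOMain deserves a note first: the last argument of orderedWait (maxIORequestCount = 20) is the operator's capacity. Once that many requests are in flight, the operator accepts no new input until one completes, which shows up as backpressure. As a rough plain-Java analogy (not Flink's implementation), the hypothetical `BoundedAsync` class bounds in-flight requests with a `Semaphore`:

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Semaphore;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Supplier;

// Hypothetical helper, not Flink code: at most `capacity` requests may be in flight
class BoundedAsync {
    private final Semaphore permits;
    private final AtomicInteger inFlight = new AtomicInteger();
    private final AtomicInteger maxObserved = new AtomicInteger();

    BoundedAsync(int capacity) {
        this.permits = new Semaphore(capacity);
    }

    // Blocks the caller while `capacity` requests are already running (backpressure),
    // then starts the request asynchronously on `pool`
    <T> CompletableFuture<T> submit(Supplier<T> request, ExecutorService pool) throws InterruptedException {
        permits.acquire();
        maxObserved.accumulateAndGet(inFlight.incrementAndGet(), Math::max);
        return CompletableFuture.supplyAsync(request, pool)
                .whenComplete((result, error) -> {
                    inFlight.decrementAndGet();
                    permits.release(); // free the slot whether the request succeeded or failed
                });
    }

    // Highest number of simultaneously running requests seen so far
    int maxInFlight() {
        return maxObserved.get();
    }
}
```

A larger capacity allows more concurrent lookups but also more buffered records and more load on MySQL; here it matches the 20 worker threads in DBUtils.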

package com.hadoop.ljs.flink112.study.asyncIO;

import org.apache.flink.streaming.api.datastream.AsyncDataStream;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import java.util.concurrent.TimeUnit;

/**

* @author: Created By lujisen

* @company China JiNan

* @date: 2021-08-18 14:50

* @version: v1.0

* @description: com.hadoop.ljs.flink112.study.asyncIO

*/

public class FlinkMysqlAsyncIOMain {

    public static void main(String[] args) throws Exception {
        int maxIORequestCount = 20; // capacity: maximum number of in-flight asynchronous requests
        StreamExecutionEnvironment senv = StreamExecutionEnvironment.getExecutionEnvironment();
        // Each socket line is expected as "id,userNum,age", e.g. "1,1001,23"
        DataStream<String> socketDataStream = senv.socketTextStream("192.168.0.111", 9999);
        // orderedWait emits results in input order (per parallel subtask);
        // AsyncDataStream.unorderedWait would emit each result as soon as it is ready
        DataStream<User> userDataStream = AsyncDataStream.orderedWait(
                socketDataStream,
                new MysqlAsyncRichMapFunction(), // custom MySQL asynchronous lookup function
                50000,                           // timeout per asynchronous request (50 s)
                TimeUnit.MILLISECONDS,           // time unit of the timeout
                maxIORequestCount                // maximum number of concurrent asynchronous requests
        );
        userDataStream.print();
        userDataStream.addSink(new MysqlSinkFunction<User>());
        senv.execute("FlinkMysqlAsyncIOMain");
    }
}

package com.hadoop.ljs.flink112.study.asyncIO;

import java.sql.PreparedStatement;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.util.Collections;
import java.util.concurrent.*;
import java.util.function.Supplier;

import org.apache.flink.configuration.Configuration;
import org.apache.flink.streaming.api.functions.async.ResultFuture;
import org.apache.flink.streaming.api.functions.async.RichAsyncFunction;

/**

* @author: Created By lujisen

* @company China JiNan

* @date: 2021-08-18 10:25

* @version: v1.0

* @description: com.hadoop.ljs.flink112.study.asyncIO

*/

public class MysqlAsyncRichMapFunction extends RichAsyncFunction<String, User> {

    private static final String QUERY_SQL = "SELECT id, name FROM userCode WHERE id = ?";

    /** The worker thread pool and the MySQL connection pool are static members of DBUtils,
     *  so nothing has to be created here; use open() for per-subtask resources if needed. */
    @Override
    public void open(Configuration parameters) throws Exception {
        super.open(parameters);
    }

    @Override
    public void asyncInvoke(String line, ResultFuture<User> resultFuture) throws Exception {
        // Input format: "id,userNum,age", e.g. "1,1001,23"
        String[] split = line.split(",");
        User user = new User();
        user.setId(Integer.parseInt(split[0].trim()));
        user.setUserNum(Integer.parseInt(split[1].trim()));
        user.setAge(Integer.parseInt(split[2].trim()));

        // Run the blocking JDBC lookup on the DBUtils thread pool, so neither the Flink
        // task thread nor the common ForkJoinPool waits for MySQL
        CompletableFuture
                .supplyAsync(() -> queryUserName(user.getUserNum()), DBUtils.executorService)
                .whenComplete((userName, error) -> {
                    if (error != null) {
                        // Report the failure to Flink instead of silently emitting a null userName
                        resultFuture.completeExceptionally(error);
                    } else {
                        user.setUserName(userName);
                        resultFuture.complete(Collections.singleton(user));
                    }
                });
    }

    /** Looks up the user name for userNum in the dictionary table userCode (null if absent). */
    private static String queryUserName(int userNum) {
        try {
            if (userNum == 1001) {
                // Demo only: delay this record so the asynchronous behavior becomes visible
                System.out.println("current getUserNum: " + userNum + ", start sleeping for 30 seconds!!!");
                Thread.sleep(30000);
            }
            String userName = null;
            try (PreparedStatement statement = DBUtils.getConnection().prepareStatement(QUERY_SQL)) {
                statement.setInt(1, userNum);
                try (ResultSet resultSet = statement.executeQuery()) {
                    while (resultSet.next()) {
                        userName = resultSet.getString("name");
                    }
                }
            }
            return userName;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new CompletionException(e);
        } catch (SQLException e) {
            throw new CompletionException(e);
        }
    }

    @Override
    public void close() throws Exception {
        super.close();
    }
}
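The non-blocking behavior this class relies on can be reproduced in plain Java without Flink or MySQL. In the following sketch, the names are hypothetical, a `HashMap` stands in for userCode, and a 300 ms sleep replaces the 30 s one. Lookups are started on a dedicated pool, and each result is recorded the moment it completes, much like calling `resultFuture.complete(...)`:

```java
import java.util.List;
import java.util.Map;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.ExecutorService;

class NonBlockingLookupDemo {

    // Hypothetical stand-in for the userCode query; key 1001 is slow, like the 30 s sleep
    static String lookup(int userNum, Map<Integer, String> userCode) {
        if (userNum == 1001) {
            try {
                Thread.sleep(300);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return userCode.get(userNum);
    }

    // Starts one lookup per key without waiting; each completed result is appended to `emitted`
    static List<String> runAll(List<Integer> keys, Map<Integer, String> userCode, ExecutorService pool) {
        List<String> emitted = new CopyOnWriteArrayList<>();
        CompletableFuture<?>[] all = new CompletableFuture<?>[keys.size()];
        for (int i = 0; i < keys.size(); i++) {
            int key = keys.get(i);
            all[i] = CompletableFuture
                    .supplyAsync(() -> lookup(key, userCode), pool)
                    .thenAccept(name -> emitted.add(key + "=" + name)); // like resultFuture.complete(...)
        }
        CompletableFuture.allOf(all).join(); // only the demo waits here; Flink's task thread never does
        return emitted;
    }
}
```

With at least two pool threads, the result for 1002 is recorded before the one for 1001, even though 1001 was requested first.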

package com.hadoop.ljs.flink112.study.asyncIO;

import org.apache.flink.configuration.Configuration;

import org.apache.flink.streaming.api.functions.sink.RichSinkFunction;

import java.sql.PreparedStatement;

/**

* @author: Created By lujisen

* @company China JiNan

* @date: 2021-08-18 15:02

* @version: v1.0

* @description: com.hadoop.ljs.flink112.study.asyncIO

*/

public class MysqlSinkFunction<U> extends RichSinkFunction<User> {

    // Upsert on the primary key id, so a replayed record updates its row instead of failing
    private static final String UPSERT_CASE =
            "INSERT INTO userInfo(id, userNum, userName, age) VALUES (?, ?, ?, ?) "
            + "ON DUPLICATE KEY UPDATE userNum = VALUES(userNum), userName = VALUES(userName), age = VALUES(age)";

    private PreparedStatement statement = null;

    @Override
    public void open(Configuration parameters) throws Exception {
        super.open(parameters);
        statement = DBUtils.getConnection().prepareStatement(UPSERT_CASE);
    }

    @Override
    public void invoke(User user, Context context) throws Exception {
        statement.setInt(1, user.getId());
        statement.setInt(2, user.getUserNum());
        statement.setString(3, user.getUserName()); // null if userCode has no matching row
        statement.setInt(4, user.getAge());
        // One row per record, written immediately
        statement.executeUpdate();
    }

    @Override
    public void close() throws Exception {
        super.close();
        if (statement != null) {
            statement.close();
        }
    }
}
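MysqlSinkFunction sends one row to MySQL per record. If real batching is wanted, rows can be buffered and flushed in groups. The hypothetical `RowBatcher` below is a minimal, JDK-only sketch of that buffering logic, under the assumption that the flush action would call `addBatch()` for each row and then `executeBatch()`. In a Flink sink, `flush()` would also have to run from `close()` and on checkpoints (for example in `CheckpointedFunction.snapshotState`), otherwise buffered rows could be lost on failure.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

// Hypothetical helper: collects rows and hands them to `flushAction` in groups of `batchSize`
class RowBatcher<T> {
    private final int batchSize;
    private final Consumer<List<T>> flushAction;
    private final List<T> buffer = new ArrayList<>();

    RowBatcher(int batchSize, Consumer<List<T>> flushAction) {
        if (batchSize <= 0) {
            throw new IllegalArgumentException("batchSize must be positive");
        }
        this.batchSize = batchSize;
        this.flushAction = flushAction;
    }

    // Called once per record, like SinkFunction.invoke
    void add(T row) {
        buffer.add(row);
        if (buffer.size() >= batchSize) {
            flush();
        }
    }

    // Hands over the buffered rows (e.g. addBatch() per row, then executeBatch()) and clears the buffer
    void flush() {
        if (buffer.isEmpty()) {
            return;
        }
        flushAction.accept(new ArrayList<>(buffer));
        buffer.clear();
    }
}
```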

package com.hadoop.ljs.flink112.study.asyncIO;

import com.alibaba.druid.pool.DruidDataSource;

import java.sql.Connection;

import java.sql.SQLException;

import java.util.concurrent.ExecutorService;

import static java.util.concurrent.Executors.newFixedThreadPool;

/**

* @author: Created By lujisen

* @company China JiNan

* @date: 2021-08-18 15:19

* @version: v1.0

* @description: com.hadoop.ljs.flink112.study.asyncIO

*/

public class DBUtils {

    private static String jdbcUrl = "jdbc:mysql://192.168.0.111:3306/lujisen?characterEncoding=utf8";
    private static String username = "root";
    private static String password = "123456a?";
    private static String driverName = "com.mysql.jdbc.Driver";

    /* One connection shared by the lookup threads and the sink, created lazily by getConnection() */
    public static Connection connection = null;
    /* Worker threads for the blocking JDBC lookups (static fields are never serialized, so transient is unnecessary) */
    public static ExecutorService executorService = null;
    public static DruidDataSource dataSource = null;
    /* Size of both the worker thread pool and the Druid connection pool */
    private static int maxPoolConn = 20;

    /* Static initialization */
    static {
        // Create the thread pool
        executorService = newFixedThreadPool(maxPoolConn);
        // Configure the Druid connection pool
        dataSource = new DruidDataSource();
        dataSource.setDriverClassName(driverName);
        dataSource.setUsername(username);
        dataSource.setPassword(password);
        dataSource.setUrl(jdbcUrl);
        dataSource.setMaxActive(maxPoolConn);
    }

    /* synchronized: several lookup threads may call this at the same time, and without the lock each could create its own connection */
    public static synchronized Connection getConnection() throws SQLException {
        if (connection == null) {
            connection = dataSource.getConnection();
        }
        return connection;
    }
}

package com.hadoop.ljs.flink112.study.asyncIO;

/**

* @author: Created By lujisen

* @company China JiNan

* @date: 2021-08-18 10:13

* @version: v1.0

* @description: com.hadoop.ljs.flink112.study.asyncIO

*/

public class User {

int id;

int userNum;

String userName;

int age;

public User() {

}

public User(int id, int userNum, String userName, int age) {

this.id = id;

this.userNum = userNum;

this.userName = userName;

this.age = age;

}

public int getId() {

return id;

}

public void setId(int id) {

this.id = id;

}

public int getUserNum() {

return userNum;

}

public void setUserNum(int userNum) {

this.userNum = userNum;

}

public String getUserName() {

return userName;

}

public void setUserName(String userName) {

this.userName = userName;

}

public int getAge() {

return age;

}

public void setAge(int age) {

this.age = age;

}

@Override

public String toString() {

return "User{" +

"id=" + id +

", userNum=" + userNum +

", userName='" + userName + '\'' +

", age=" + age +

'}';

}

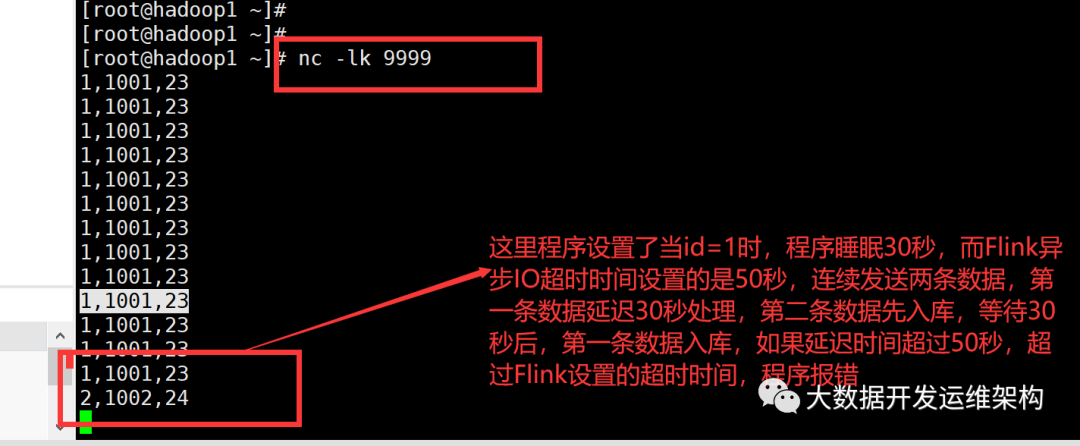

}Note: the above multi table data can be ignored here, and only the next two data can be sent. The Flink asynchronous IO timeout is set to 50s in the Main function, while the asyncInvoke function processing logic of MysqlAsyncRichMapFunction file. When the data id is 1, the process sleeps for 30 seconds, the first data blocks execution, and the second data does not block, Directly process the second data and write it into the MySQL database. After 30 seconds, the program continues to process the first data. You can observe the MySQL data table userInfo. You can see that the second data is stored first and the first data is stored after 30 seconds.

1,1001,23 Article 1 data 2,1002,24 Article 2 data
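Flink offers two result orders: AsyncDataStream.orderedWait (used in the main function) emits results in input order, and AsyncDataStream.unorderedWait emits each result as soon as it is ready. The difference can be modelled in a few lines of plain Java. The hypothetical `EmissionOrder` class numbers requests 0, 1, 2, ... in input order, takes the order in which their lookups finish, and returns the order in which a single subtask would emit the results. It models processing time only; in event time, unorderedWait additionally keeps results from overtaking watermarks.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical model of a single subtask's result queue.
// completionOrder lists request indices (0..n-1, numbered in input order) in the order they finish.
class EmissionOrder {

    // unorderedWait: a result is emitted as soon as its request completes
    static List<Integer> unordered(int[] completionOrder) {
        List<Integer> out = new ArrayList<>();
        for (int idx : completionOrder) {
            out.add(idx);
        }
        return out;
    }

    // orderedWait: a completed result waits until every earlier request has been emitted
    static List<Integer> ordered(int[] completionOrder) {
        boolean[] done = new boolean[completionOrder.length];
        List<Integer> out = new ArrayList<>();
        int nextToEmit = 0;
        for (int idx : completionOrder) {
            done[idx] = true;
            while (nextToEmit < done.length && done[nextToEmit]) {
                out.add(nextToEmit);
                nextToEmit++;
            }
        }
        return out;
    }

    public static void main(String[] args) {
        int[] secondFinishesFirst = {1, 0}; // like record 2 finishing before the sleeping record 1
        System.out.println(unordered(secondFinishesFirst)); // [1, 0]
        System.out.println(ordered(secondFinishesFirst));   // [0, 1]
    }
}
```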