Install the corresponding version of Bazel

The TensorFlow commit checked out at the time of writing is 5a6c3d2139547b19fe8ed5ae856ed8c6ebbd7f7, and the Bazel version it requires is 4.2.2. The TFLITE-SOC Docker image does not ship a matching Bazel, so it has to be installed. The steps below assume you are inside the Docker container with root privileges; otherwise, prefix the relevant commands with sudo.
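Before installing anything, it can help to confirm which Bazel version a checkout expects. The following is a minimal sketch, assuming the TensorFlow tree has a `.bazelversion` file at its root and that `bazel --version` prints a line such as `bazel 4.2.2`. The function names and the example path are hypothetical.

```shell
#!/bin/sh
# Sketch: compare the Bazel version a TensorFlow checkout expects with the
# one on PATH. All function names here are illustrative assumptions.

# Print the version pinned in <dir>/.bazelversion, stripped of whitespace.
required_bazel_version() {
    tr -d '[:space:]' < "$1/.bazelversion"
}

# Extract "4.2.2" from a line such as "bazel 4.2.2".
parse_bazel_version() {
    printf '%s\n' "$1" | awk '{print $2}'
}

# Report whether the installed Bazel matches the checkout in <dir>.
check_bazel_for_checkout() {
    want="$(required_bazel_version "$1")"
    have="$(parse_bazel_version "$(bazel --version)")"
    if [ "$want" = "$have" ]; then
        echo "Bazel $have matches the checkout"
    else
        echo "Checkout expects Bazel $want, found ${have:-none}"
        return 1
    fi
}
```

A call such as `check_bazel_for_checkout ./tensorflow` (the path is an assumption) would then report a mismatch before any build is attempted.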

Install dependencies first

apt-get install build-essential openjdk-11-jdk python zip unzip

If apt-get reports that openjdk-11-jdk cannot be found, add the OpenJDK PPA and try again:

add-apt-repository ppa:openjdk-r/ppa
apt-get update
apt-get install openjdk-11-jdk

If that in turn fails with ModuleNotFoundError: No module named 'apt_pkg', create the missing symlink:

cd /usr/lib/python3/dist-packages
ln -s apt_pkg.cpython-35m-x86_64-linux-gnu.so apt_pkg.so
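The file name used in the symlink encodes the Python version (35m corresponds to CPython 3.5), so it may differ on other images. As a hedged sketch, the helper below links `apt_pkg.so` to whichever `apt_pkg.cpython-*.so` is present. The function name `link_apt_pkg` is hypothetical.

```shell
#!/bin/sh
# Sketch: create apt_pkg.so as a symlink to the first apt_pkg.cpython-*.so
# found in the given directory. Returns 1 when no such file exists.
link_apt_pkg() {
    dir="$1"
    for so in "$dir"/apt_pkg.cpython-*.so; do
        # An unmatched glob stays literal, so check that the file exists.
        [ -e "$so" ] || return 1
        ln -sf "$(basename "$so")" "$dir/apt_pkg.so"
        return 0
    done
}
```

Inside the container this would be invoked as `link_apt_pkg /usr/lib/python3/dist-packages`.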

Then download the matching installer (here bazel-4.2.2-installer-linux-x86_64.sh) from Huawei Cloud.

Installing Bazel

Following the Zhihu article "Installation of Bazel 4.0.0 under Linux", run the commands below.

Warning: these commands overwrite any existing Bazel installation. Use them with care.

chmod +x bazel-<version>-installer-linux-x86_64.sh
sudo ./bazel-<version>-installer-linux-x86_64.sh
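Because the installer replaces an existing Bazel, keeping a copy of the old binary is a cheap precaution. This is a minimal sketch. The helper name `backup_existing` and the `.bak` suffix are assumptions, not part of the Bazel installer.

```shell
#!/bin/sh
# Sketch: copy a file to <file>.bak if it exists; do nothing otherwise.
backup_existing() {
    target="$1"
    if [ -e "$target" ]; then
        cp -p "$target" "$target.bak"
        echo "backed up $target to $target.bak"
    fi
}
```

Running `backup_existing "$(command -v bazel)"` before the installer leaves a `.bak` copy next to the current binary. When no Bazel is on the PATH the call does nothing.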

Modify the TensorFlow Lite source code

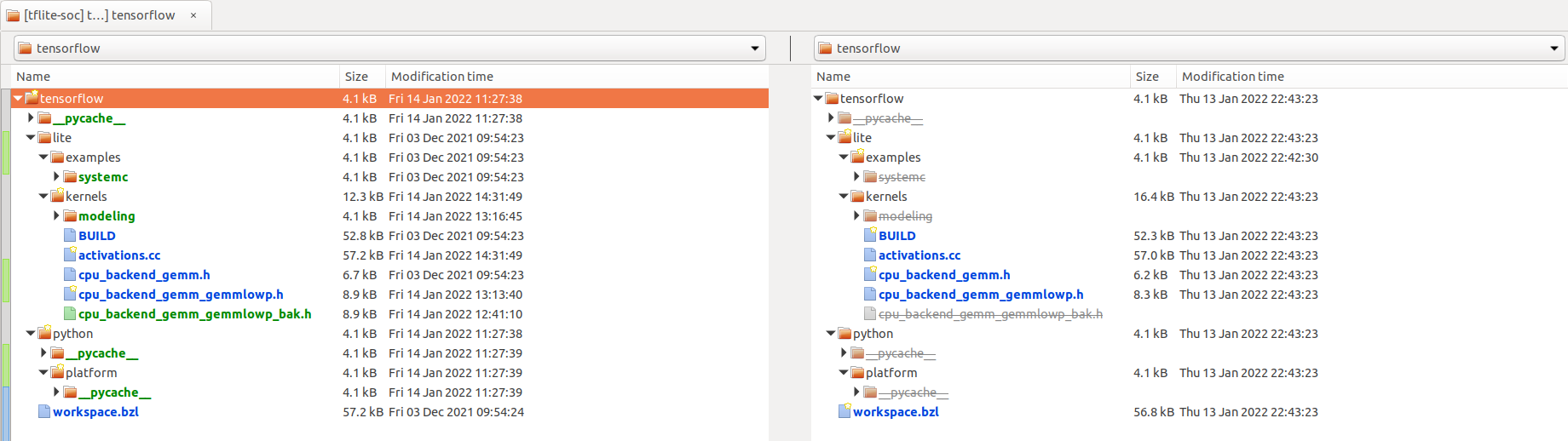

The figure shows the differences between the TensorFlow version used by TFLITE-SOC (which adds SystemC support and several examples) and the official upstream version.

We use these differences as a blueprint for modifying the latest version of TensorFlow.

Add example

Copy tensorflow/tensorflow/lite/examples/systemc and tensorflow/tensorflow/lite/kernels/modeling from TFLITE-SOC to the corresponding locations in the new source tree.
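The copy step can be sketched as a small helper. The two arguments are hypothetical paths to the repository roots of the TFLITE-SOC checkout and the newer TensorFlow checkout, so the subdirectories are given relative to each root.

```shell
#!/bin/sh
# Sketch: copy the SystemC example and the modeling kernels from one
# repository root into another, preserving their relative locations.
copy_systemc_dirs() {
    soc_dir="$1"   # root of the TFLITE-SOC checkout (assumed path)
    tf_dir="$2"    # root of the newer TensorFlow checkout (assumed path)
    for sub in tensorflow/lite/examples/systemc tensorflow/lite/kernels/modeling; do
        parent="$tf_dir/$(dirname "$sub")"
        mkdir -p "$parent"
        cp -r "$soc_dir/$sub" "$parent/"
    done
}
```

For example, `copy_systemc_dirs ../tflite-soc ./tensorflow` would populate both directories, with both paths being placeholders.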

Add SystemC Library

Open the tensorflow/tensorflow/workspace2.bzl file and add the following definition:

tf_http_archive(
    name = "systemc",
    build_file = "//third_party:systemc.BUILD",
    sha256 = "5781b9a351e5afedabc37d145e5f7edec08f3fd5de00ffeb8fa1f3086b1f7b3f",
    urls = tf_mirror_urls("https://www.accellera.org/images/downloads/standards/systemc/systemc-2.3.3.tar.gz"),
)
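Bazel checks the sha256 value itself when it fetches the archive, so a mismatch shows up as a fetch error. If that happens, comparing the digest of a manually downloaded tarball can help narrow down the cause. A minimal sketch, assuming `sha256sum` from coreutils is available; the helper name `verify_sha256` is hypothetical.

```shell
#!/bin/sh
# Sketch: succeed only when the SHA-256 digest of <file> equals <expected>.
verify_sha256() {
    file="$1"
    expected="$2"
    actual="$(sha256sum "$file" | awk '{print $1}')"
    [ "$actual" = "$expected" ]
}
```

With the tarball saved locally, `verify_sha256 systemc-2.3.3.tar.gz 5781b9a351e5afedabc37d145e5f7edec08f3fd5de00ffeb8fa1f3086b1f7b3f` returns success only when the file matches the digest given in the definition.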

Modify the kernels/BUILD file

Open the tensorflow/tensorflow/lite/kernels/BUILD file and replace lines 405-442 with the following code:

cc_library(
    name = "cpu_backend_gemm_lib",
    hdrs = [
        "cpu_backend_gemm.h",
        "cpu_backend_gemm_params.h",
    ],
    copts = tflite_copts(),
    deps = [
        #"//tensorflow/lite/kernels/internal:common",
        "//tensorflow/lite/kernels/internal:compatibility",
        #"//tensorflow/lite/kernels/internal:types",
    ],
)

cc_library(
    name = "cpu_backend_gemm",
    srcs = [
        "cpu_backend_gemm_custom_gemv.h",
        "cpu_backend_gemm_eigen.cc",
        "cpu_backend_gemm_eigen.h",
        "cpu_backend_gemm_gemmlowp.h",
        "cpu_backend_gemm_ruy.h",
        "cpu_backend_gemm_x86.h",
    ],
    # hdrs = [
    #     "cpu_backend_gemm.h",
    #     "cpu_backend_gemm_params.h",
    # ],
    copts = tflite_copts(),
    deps = [
        ":tflite_with_ruy",
        "//tensorflow/lite/kernels/internal:common",
        "//tensorflow/lite/kernels/internal:compatibility",
        "//tensorflow/lite/kernels/internal:types",
        ":cpu_backend_context",
        ":cpu_backend_threadpool",
        # Isolated so it can be used by systemc
        "//tensorflow/lite/kernels:cpu_backend_gemm_lib",
        # Depend on ruy regardless of `tflite_with_ruy`. See the comment in
        # cpu_backend_gemm.h about why ruy is the generic path.
        "@ruy//ruy",
        "@ruy//ruy:matrix",
        "@ruy//ruy:path",
        "@ruy//ruy/profiler:instrumentation",
        # We only need to depend on gemmlowp and Eigen when tflite_with_ruy
        # is false, but putting these dependencies in a select() seems to
        # defeat copybara's rewriting rules.
        "@gemmlowp",
        "//third_party/eigen3",
        "//tensorflow/lite/kernels/modeling:util",
    ],
)

Brief explanation

The SystemC example modifies parts of cpu_backend_gemm.h and cpu_backend_gemm_gemmlowp.h to print matrix information, so the cpu_backend_gemm_lib library is split out here and compiled separately. This does not change the overall structure of the project in any essential way. Note that srcs and hdrs list the source files and header files needed to build the cpu_backend_gemm library, and that cpu_backend_gemm.h must be included among the headers. Compared with TFLITE-SOC, the latest version adds the cpu_backend_gemm_x86.h file, so this header must also be added to srcs.

Modify kernel code

Modify cpu_backend_gemm.h

- Include the header file

#ifdef TOGGLE_TFLITE_SOC
#include "tensorflow/lite/kernels/modeling/util.sc.h"
#endif

- Add the matrix-printing code (the part between #ifdef TOGGLE_TFLITE_SOC and #endif)

/* Public entry point */
template <typename LhsScalar, typename RhsScalar, typename AccumScalar,
          typename DstScalar, QuantizationFlavor quantization_flavor>
void Gemm(const MatrixParams<LhsScalar>& lhs_params, const LhsScalar* lhs_data,
          const MatrixParams<RhsScalar>& rhs_params, const RhsScalar* rhs_data,
          const MatrixParams<DstScalar>& dst_params, DstScalar* dst_data,
          const GemmParams<AccumScalar, DstScalar, quantization_flavor>& params,
          CpuBackendContext* context) {
  /*
   * The intermediate code is omitted here
   */
#ifdef TOGGLE_TFLITE_SOC
  // GemmImpl::Run(...) function executes a GEMM with different libraries
  // depending on the context.
  //
  // For quantized inputs it will use gemmlowp library
  // For floating-point inputs it will use eigen library
  tflite_soc::PrintMatricesInfo<LhsScalar, RhsScalar, DstScalar>(
      lhs_params, rhs_params, dst_params);
#endif
  // Generic case: dispatch to any backend as a general GEMM.
  GemmImpl<LhsScalar, RhsScalar, AccumScalar, DstScalar,
           quantization_flavor>::Run(lhs_params, lhs_data, rhs_params, rhs_data,
                                     dst_params, dst_data, params, context);
}

Modify cpu_backend_gemm_gemmlowp.h

template <typename LhsScalar, typename RhsScalar, typename AccumScalar,
          typename DstScalar>
struct GemmImplUsingGemmlowp<
    LhsScalar, RhsScalar, AccumScalar, DstScalar,
    QuantizationFlavor::kIntegerWithUniformMultiplier> {
  static_assert(std::is_same<LhsScalar, RhsScalar>::value, "");
  static_assert(std::is_same<AccumScalar, std::int32_t>::value, "");
  using SrcScalar = LhsScalar;
  /*
   * The intermediate code is omitted here
   */
    using BitDepthParams = typename GemmlowpBitDepthParams<SrcScalar>::Type;
#ifdef TOGGLE_TFLITE_SOC
    // printf("%d,%d,%d\n", lhs_params.rows, lhs_params.cols, rhs_params.cols);
    tflite_soc::PrintMatrices<LhsScalar, RhsScalar, DstScalar>(
        lhs_params, lhs_data, rhs_params, rhs_data, dst_params, dst_data);
#endif
    if (params.bias) {
      ColVectorMap bias_vector(params.bias, lhs_params.rows);
      gemmlowp::OutputStageBiasAddition<ColVectorMap> bias_addition_stage;
      /*
       * The intermediate code is omitted here
       */
#ifdef TOGGLE_TFLITE_SOC
    printf("\nout after OP execution (%d,%d)\n", dst_params.rows,
           dst_params.cols);
    tflite_soc::PrintMatrix<DstScalar>(dst_params, dst_data);
#endif
  }
};

At this point all the required modifications are complete. Other versions of TensorFlow can be given SystemC support in the same way. Bazel may report errors during compilation, but these are usually dependency problems; modify the corresponding BUILD file according to the error message.

All of the TFLITE-SOC SystemC examples can now be run:

# Hello World example
bazel build --jobs 1 //tensorflow/lite/examples/systemc:hello_systemc
bazel run //tensorflow/lite/examples/systemc:hello_systemc

# 2D Systolic array accelerator in isolation
bazel build --jobs 1 //tensorflow/lite/kernels/modeling:systolic_run
bazel run //tensorflow/lite/kernels/modeling:systolic_run

# 2D Systolic array accelerator with TFLite benchmarking tools
bazel build //tensorflow/lite/tools/benchmark:benchmark_model --cxxopt=-DTOGGLE_TFLITE_SOC=1
bazel-bin/tensorflow/lite/tools/benchmark/benchmark_model \
  --use_gpu=false --num_threads=1 --enable_op_profiling=true \
  --graph=../tensorflow-models/mobilenet-v1/mobilenet_v1_1.0_224_quant.tflite \
  --num_runs=1