1. Join the node to the cluster (run on the new node, k8s-4)

[root@k8s-4 ~]# kubeadm join 192.168.191.30:6443 --token 6zs63l.4qmypshahrd3rt3x \
    --discovery-token-ca-cert-hash sha256:851c0bf733fe1e9bff54af08b84e93635d5b9c6e047a68c694c613391e024185
W0323 15:58:36.010687 1427 join.go:346] [preflight] WARNING: JoinControlPane.controlPlane settings will be ignored when control-plane flag is not set.
[preflight] Running pre-flight checks
[preflight] Reading configuration from the cluster...
[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'
[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.17" ConfigMap in the kube-system namespace
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[kubelet-start] Starting the kubelet
[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:
* Certificate signing request was sent to apiserver and a response was received.
* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.
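A typo in the token or the CA hash only shows up once kubeadm starts its preflight checks. The sketch below checks both before anything runs, assuming the documented formats: a bootstrap token is 6 characters, a dot, and 16 characters from [a-z0-9], and the hash is `sha256:` followed by 64 hex digits. The function names `matches` and `build_join_cmd` are hypothetical, and the function only prints the command. If the original token has expired (the default lifetime is 24 hours), `kubeadm token create --print-join-command` on the master prints a fresh, complete join command.

```shell
# Hypothetical helper: succeed if VALUE ($1) fully matches the ERE in $2.
matches() {
  printf '%s\n' "$1" | grep -Eq "$2"
}

# Hypothetical helper: validate the join parameters, then print (not run)
# the kubeadm join command so it can be reviewed first.
build_join_cmd() {
  endpoint=$1 token=$2 ca_hash=$3

  # API server endpoint as host:port (IPv4 or hostname; IPv6 not handled)
  matches "$endpoint" '^[^:]+:[0-9]+$' ||
    { echo "invalid endpoint: $endpoint" >&2; return 1; }

  # Bootstrap token: 6 chars, a dot, 16 chars, all lowercase a-z or 0-9
  matches "$token" '^[a-z0-9]{6}\.[a-z0-9]{16}$' ||
    { echo "invalid token: $token" >&2; return 1; }

  # CA public-key pin: "sha256:" followed by 64 hex digits
  matches "$ca_hash" '^sha256:[a-fA-F0-9]{64}$' ||
    { echo "invalid discovery hash: $ca_hash" >&2; return 1; }

  printf 'kubeadm join %s --token %s --discovery-token-ca-cert-hash %s\n' \
    "$endpoint" "$token" "$ca_hash"
}
```

Calling `build_join_cmd 192.168.191.30:6443 6zs63l.4qmypshahrd3rt3x sha256:851c...` with the values above prints the same command used in step 1. A malformed value produces an error message on stderr and a non-zero return code instead.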

2. After the join succeeds, kubelet.conf and the pki directory are created under /etc/kubernetes on the node. Before the same node can join again, these files must be removed.

[root@k8s-4 kubernetes]# pwd
/etc/kubernetes
[root@k8s-4 kubernetes]# ll
total 4
-rw-------. 1 root root 1855 Mar 23 16:15 kubelet.conf
drwxr-xr-x. 2 root root    6 Mar 13 07:57 manifests    # component manifests used at cluster startup
drwxr-xr-x. 2 root root   20 Mar 23 16:16 pki
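The supported way to clear this state is `kubeadm reset`, run on the node. It stops the kubelet, removes the containers it started, and deletes the files kubeadm wrote. If you only want the file cleanup described here, a minimal sketch follows. It assumes the directory layout shown above and takes the directory as a parameter so you can test it on a scratch copy first. The function name `clean_kube_dir` is hypothetical, and it does not stop the kubelet.

```shell
# Hypothetical helper: remove what a previous `kubeadm join` left in DIR
# (default /etc/kubernetes) so the node can join again.
# It only touches files: stop the kubelet first (systemctl stop kubelet).
# `kubeadm reset` is the supported, more thorough alternative.
clean_kube_dir() {
  dir=${1:-/etc/kubernetes}

  [ -d "$dir" ] || { echo "no such directory: $dir" >&2; return 1; }

  # kubelet.conf and pki/ are created by the join; bootstrap-kubelet.conf
  # may still be present if the TLS bootstrap did not finish.
  for item in kubelet.conf bootstrap-kubelet.conf pki; do
    if [ -e "$dir/$item" ]; then
      rm -rf -- "$dir/$item"
      echo "removed $dir/$item"
    fi
  done

  # manifests/ is left in place (it is empty on a worker node)
}
```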

3. Check the cluster's nodes from the master node

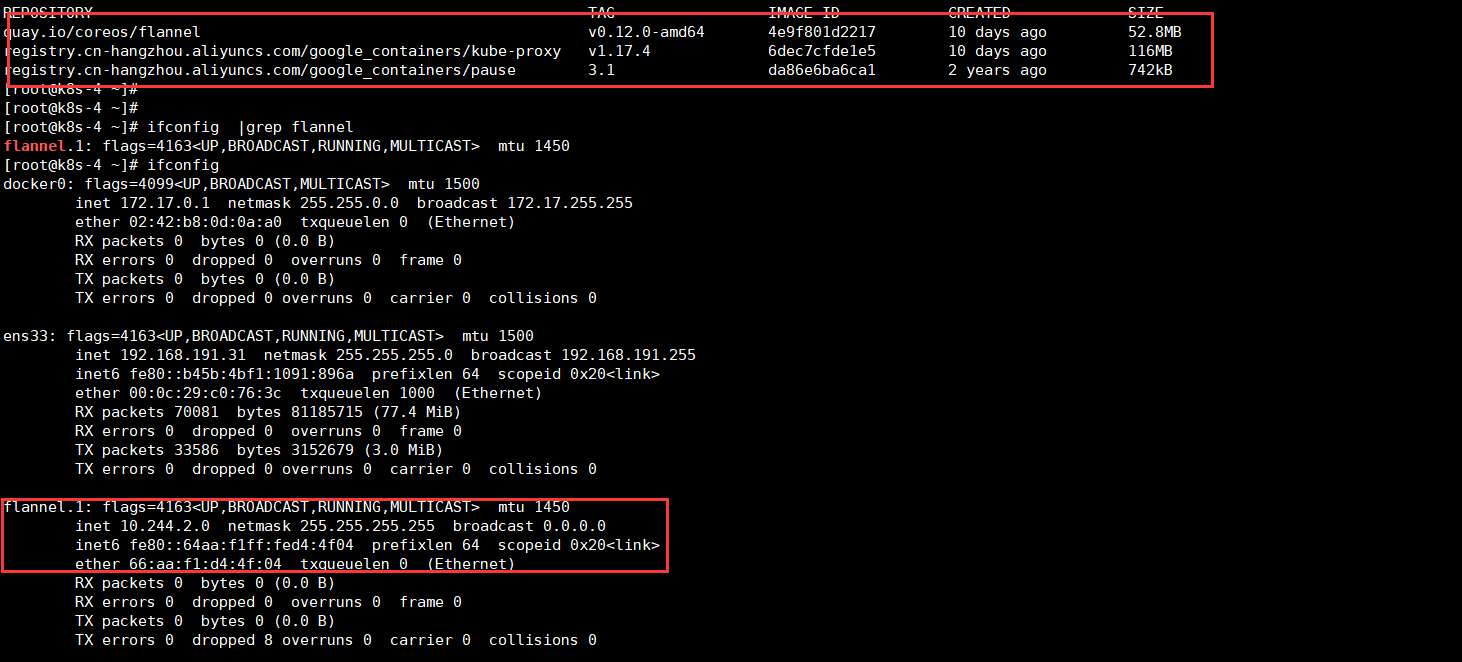

[root@k8s-3 ~]# kubectl get node
NAME    STATUS     ROLES    AGE    VERSION
k8s-3   Ready      master   44h    v1.17.4
k8s-4   NotReady   <none>   111s   v1.17.4
# The new node shows NotReady because the flannel network plugin is not running on it yet.
# Wait a few minutes: the node pulls the flannel image automatically and creates its network interface
# (you can confirm this by listing the Docker images on the node).
[root@k8s-3 ~]# kubectl get nodes
NAME    STATUS   ROLES    AGE     VERSION
k8s-3   Ready    master   44h     v1.17.4
k8s-4   Ready    <none>   5m40s   v1.17.4
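You can script the wait instead of re-running `kubectl get nodes` by hand. `kubectl wait --for=condition=Ready node/k8s-4 --timeout=300s` blocks until the node reports Ready. The sketch below takes a different approach and reads one node's STATUS from the plain `kubectl get nodes` table. It assumes the default column order shown above, with NAME first and STATUS second. The function names `node_status` and `wait_node_ready` are hypothetical, and the 10-second poll interval is an arbitrary choice.

```shell
# Hypothetical helper: read `kubectl get nodes` output on stdin and print
# the STATUS column of the node named in $1 (prints nothing if absent).
node_status() {
  awk -v name="$1" 'NR > 1 && $1 == name { print $2 }'
}

# Hypothetical helper: poll every 10s until the node's STATUS is exactly
# "Ready" (a cordoned node shows Ready,SchedulingDisabled and does not match),
# giving up after TRIES attempts (default 30, about 5 minutes).
wait_node_ready() {
  node=$1 tries=${2:-30}
  while [ "$tries" -gt 0 ]; do
    [ "$(kubectl get nodes | node_status "$node")" = Ready ] && return 0
    tries=$((tries - 1))
    sleep 10
  done
  echo "$node is not Ready yet; check the flannel pods in kube-system" >&2
  return 1
}
```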

4. Set the node's role

[root@k8s-3 ~]# kubectl label nodes k8s-4 node-role.kubernetes.io/node=    # k8s-4 is the node name
node/k8s-4 labeled
[root@k8s-3 ~]# kubectl get nodes
NAME    STATUS   ROLES    AGE   VERSION
k8s-3   Ready    master   44h   v1.17.4
k8s-4   Ready    node     12m   v1.17.4
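ROLES is not a separate field on the node. kubectl builds the column from labels whose key starts with `node-role.kubernetes.io/`, using the part after the slash. It also honours the older `kubernetes.io/role` label, which this sketch ignores. That is why adding `node-role.kubernetes.io/node=` changes `<none>` to `node`. A minimal sketch of that mapping follows. It takes a comma-separated label list like the one printed by `kubectl get node k8s-4 --show-labels`. The function name `roles_from_labels` is hypothetical.

```shell
# Hypothetical helper: mimic how kubectl derives the ROLES column.
# Input ($1): comma-separated labels as printed by --show-labels,
# e.g. "kubernetes.io/hostname=k8s-4,node-role.kubernetes.io/node=".
# Simplified: only the node-role.kubernetes.io/<role> form is handled.
roles_from_labels() {
  roles=$(printf '%s\n' "$1" | tr ',' '\n' |
    sed -n 's|^node-role\.kubernetes\.io/\([^=][^=]*\)=.*$|\1|p' |
    sort -u | paste -sd, -)
  # kubectl shows <none> when no role label is present
  printf '%s\n' "${roles:-<none>}"
}
```

To take the role away again, remove the label with a trailing dash: `kubectl label nodes k8s-4 node-role.kubernetes.io/node-`.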

5. Before removing the node, put it into maintenance mode (k8s-4 is the node name)

[root@k8s-3 ~]# kubectl drain k8s-4 --delete-local-data --force --ignore-daemonsets
node/k8s-4 cordoned
node/k8s-4 drained
[root@k8s-3 ~]# kubectl get nodes
NAME    STATUS                     ROLES    AGE   VERSION
k8s-3   Ready                      master   45h   v1.17.4
k8s-4   Ready,SchedulingDisabled   node     43m   v1.17.4
# Restore the node to normal scheduling
[root@k8s-3 ~]# kubectl uncordon k8s-4
node/k8s-4 uncordoned
[root@k8s-3 ~]# kubectl get nodes
NAME    STATUS   ROLES    AGE   VERSION
k8s-3   Ready    master   45h   v1.17.4
k8s-4   Ready    node     46m   v1.17.4
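Wrapping the maintenance cycle above (drain, do the work, uncordon) in two helpers makes it harder to forget the second half. A minimal sketch follows. It assumes a hypothetical `DRY_RUN=1` switch that prints each command instead of running it. The names `run`, `maintenance_start` and `maintenance_end` are also hypothetical. `--delete-local-data` is the kubectl 1.17 flag used in the transcript; later kubectl releases renamed it `--delete-emptydir-data`.

```shell
# Hypothetical runner: print the command when DRY_RUN=1, otherwise run it.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Put the node ($1) into maintenance mode. drain = cordon (mark it
# unschedulable) + evict its pods. DaemonSet pods such as flannel are
# skipped, emptyDir data is discarded, and --force also deletes pods that
# no controller manages (those are not recreated anywhere).
maintenance_start() {
  run kubectl drain "$1" --delete-local-data --force --ignore-daemonsets
}

# Make the node ($1) schedulable again.
maintenance_end() {
  run kubectl uncordon "$1"
}
```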

6. Delete the k8s-4 node

[root@k8s-3 ~]# kubectl delete node k8s-4
node "k8s-4" deleted
[root@k8s-3 ~]# kubectl get nodes
NAME    STATUS   ROLES    AGE   VERSION
k8s-3   Ready    master   44h   v1.17.4
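`kubectl delete node` only removes the Node object from the API server. On k8s-4 the kubelet keeps running with the files from step 2, and if it is restarted it can register the node again. A sketch of the full removal order follows, using the same hypothetical `DRY_RUN=1` switch. First drain and delete on the master. Then run `kubeadm reset -f` on the removed machine, which stops the kubelet and clears the files kubeadm wrote under /etc/kubernetes. The function names `remove_node` and `reset_removed_node` are hypothetical.

```shell
# Hypothetical runner: print the command when DRY_RUN=1, otherwise run it.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Run on the master: evict the workload from the node ($1), and only if
# that succeeds, remove the Node object from the cluster.
remove_node() {
  node=$1
  run kubectl drain "$node" --delete-local-data --force --ignore-daemonsets &&
    run kubectl delete node "$node"
}

# Run on the removed machine itself: stop the kubelet and wipe the state
# written by `kubeadm join`, without asking for confirmation (-f).
reset_removed_node() {
  run kubeadm reset -f
}
```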

Notes:

1. What joining the cluster changes on the node

I. It adds the kubelet.conf file and the pki directory under /etc/kubernetes.

II. It starts the kubelet process, which listens on port 10250 by default. Before the same node joins again, stop the kubelet process (for example with systemctl stop kubelet).
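kubeadm's preflight checks refuse to join while port 10250 is in use, and that is exactly what happens when the kubelet from the previous join is still running. A quick check before rejoining is sketched below. It reads `ss -ltn` output on stdin and assumes the default layout, where the fourth column is Local Address:Port. The function name `port_listening` is hypothetical.

```shell
# Hypothetical helper: succeed if PORT ($1) appears as a listening local
# port in `ss -ltn` output read from stdin. The 4th column is
# Local Address:Port, e.g. "*:10250", "[::]:10250" or "127.0.0.1:10248".
port_listening() {
  awk -v port="$1" '
    NR > 1 && $4 ~ (":" port "$") { found = 1 }
    END { exit (found ? 0 : 1) }
  '
}
```

If `ss -ltn | port_listening 10250` succeeds, run `ss -ltnp` to see which process holds the port, then stop the kubelet with `systemctl stop kubelet` before running `kubeadm join` again.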

That is all for now; I will add more notes as I find them.