Preface

In a Kafka cluster, broker nodes and topics are added and removed, and partitions are added to existing topics. How does Kafka manage these changes? Today we will analyze one of Kafka's core components, the controller.

What is the controller

The controller (KafkaController) is a Kafka service. It runs on every broker in the Kafka cluster, but only one instance can be active at any point in time; the active one is chosen by election.

Controller election process

Let's first look at the startup process of each broker node.

During broker startup, the KafkaController component is started only after SocketServer (network service), AlterIsrManager, ReplicaManager (replica manager), and the other components it depends on are ready.

def startup() = {
  zkClient.registerStateChangeHandler(new StateChangeHandler {
    override val name: String = StateChangeHandlers.ControllerHandler
    override def afterInitializingSession(): Unit = {
      // After a new ZooKeeper session is established (for example, following a network glitch),
      // re-register the broker and trigger a controller re-election by queuing this event
      eventManager.put(RegisterBrokerAndReelect)
    }
    override def beforeInitializingSession(): Unit = {
      val queuedEvent = eventManager.clearAndPut(Expire)
      // Block initialization of the new session until the expiration event is being handled,
      // which ensures that all pending events have been processed before creating the new session
      queuedEvent.awaitProcessing()
    }
  })
  eventManager.put(Startup) // Put the Startup event into the event queue
  eventManager.start() // Start the event handler thread
}

The above function mainly does the following three things:

- Registers a ZooKeeper session state change handler

- Puts the Startup event into the event manager

- Starts the event handler thread

The principle of the EventManager will be analyzed later. Let's continue with how the Startup event is handled.

private def processStartup(): Unit = {
  // Register the handler that watches the controller znode
  zkClient.registerZNodeChangeHandlerAndCheckExistence(controllerChangeHandler)
  elect() // Run the election
}

- Registers ControllerChangeHandler, the handler that watches the controller znode

- Runs the election

class ControllerChangeHandler(eventManager: ControllerEventManager) extends ZNodeChangeHandler {
  override val path: String = ControllerZNode.path
  // When the controller znode is created, queue a ControllerChange event
  override def handleCreation(): Unit = eventManager.put(ControllerChange)
  // When the controller znode is deleted, queue a Reelect event to elect a new controller
  override def handleDeletion(): Unit = eventManager.put(Reelect)
  // When the data of the controller znode changes, queue a ControllerChange event
  override def handleDataChange(): Unit = eventManager.put(ControllerChange)
}

Now let's look at elect

private def elect(): Unit = {
  // Read the active controller id from the ZooKeeper controller node; use -1 if there is none
  activeControllerId = zkClient.getControllerId.getOrElse(-1)
  // An id other than -1 means another broker is already the controller, so return without competing
  if (activeControllerId != -1) {
    return
  }
  try {
    // Register the ephemeral controller node in ZooKeeper; an exception is thrown if registration fails
    val (epoch, epochZkVersion) = zkClient.registerControllerAndIncrementControllerEpoch(config.brokerId)
    controllerContext.epoch = epoch
    controllerContext.epochZkVersion = epochZkVersion
    activeControllerId = config.brokerId
    onControllerFailover()
  } catch {
    case e: ControllerMovedException =>
      maybeResign()
      if (activeControllerId != -1)
        debug(s"Broker $activeControllerId was elected as controller instead of broker ${config.brokerId}", e)
      else
        warn("A controller has been elected but just resigned, this will result in another round of election", e)
    case t: Throwable =>
      error(s"Error while electing or becoming controller on broker ${config.brokerId}. " +
        s"Trigger controller movement immediately", t)
      triggerControllerMove()
  }
}

The actual election process:

- Read the active controller id from the ZooKeeper controller node. If there is none, use -1

- If the id is not -1, another broker has already created the controller node, so return without going further

- Otherwise, try to register the ephemeral controller node in ZooKeeper. If registration fails, an exception is thrown. If it succeeds, onControllerFailover is executed. The handling of brokers, topics, and partitions all happens there; we will analyze it later
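The election steps above can be sketched without ZooKeeper. This is a minimal illustration, not Kafka's code: `ControllerNode` is a hypothetical in-memory stand-in for the ephemeral controller znode, and `tryRegister` and `release` are assumed names that mimic atomic node creation and session expiry.

```scala
import java.util.concurrent.atomic.AtomicReference

object ElectionSketch {
  // Hypothetical stand-in for the ZooKeeper controller znode:
  // it holds at most one broker id, and registration is atomic.
  final class ControllerNode {
    private val owner = new AtomicReference[Option[Int]](None)

    def currentController: Option[Int] = owner.get()

    // Succeeds only if no broker currently holds the node.
    def tryRegister(brokerId: Int): Boolean =
      owner.compareAndSet(None, Some(brokerId))

    // Simulates the ephemeral node disappearing when the controller's session expires.
    def release(): Unit = owner.set(None)
  }

  // Returns the id of the active controller after this broker's election attempt.
  def elect(node: ControllerNode, brokerId: Int): Int =
    node.currentController match {
      case Some(id) => id // another broker already won, do not compete
      case None =>
        if (node.tryRegister(brokerId)) brokerId // won; Kafka would call onControllerFailover here
        else node.currentController.getOrElse(-1) // lost the race to another broker
    }
}
```

With one shared `ControllerNode`, the first broker to call `elect` gets its own id back, and later callers get the winner's id until `release()` is called.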

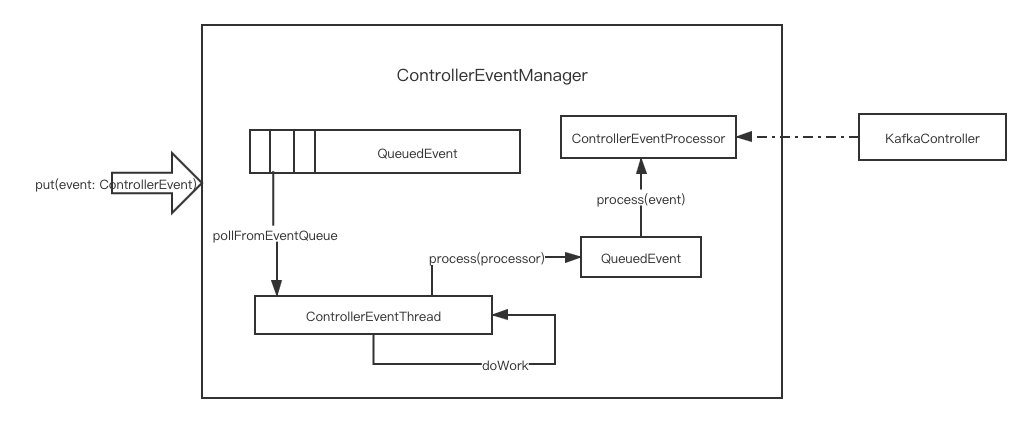

Analysis of ControllerEventManager

ControllerEventManager is mainly composed of ControllerEventProcessor, QueuedEvent and ControllerEventThread

- ControllerEventProcessor: event processor interface. At present, only KafkaController implements this interface.

- QueuedEvent: the event object on the event queue.

- ControllerEventThread: extends the ShutdownableThread class

The ControllerEventManager holds a ControllerEventThread and a LinkedBlockingQueue of QueuedEvent objects. When the ControllerEventManager is started, the ControllerEventThread is started, and its run method calls doWork in a loop

override def run(): Unit = {
  isStarted = true
  info("Starting")
  try {
    while (isRunning)
      doWork()
  } catch {
    case e: FatalExitError =>
      shutdownInitiated.countDown()
      shutdownComplete.countDown()
      info("Stopped")
      Exit.exit(e.statusCode())
    case e: Throwable =>
      if (isRunning)
        error("Error due to", e)
  } finally {
    shutdownComplete.countDown()
  }
  info("Stopped")
}

ControllerEventThread implements doWork as follows

override def doWork(): Unit = {
  val dequeued = pollFromEventQueue()
  dequeued.event match {
    case ShutdownEventThread => // The shutting down of the thread has been initiated at this point. Ignore this event.
    case controllerEvent =>
      _state = controllerEvent.state
      eventQueueTimeHist.update(time.milliseconds() - dequeued.enqueueTimeMs)
      try {
        def process(): Unit = dequeued.process(processor)
        rateAndTimeMetrics.get(state) match {
          case Some(timer) => timer.time { process() }
          case None => process()
        }
      } catch {
        case e: Throwable => error(s"Uncaught error processing event $controllerEvent", e)
      }
      _state = ControllerState.Idle
  }
}

The doWork method repeatedly takes a QueuedEvent from the blocking queue and calls its process method

def process(processor: ControllerEventProcessor): Unit = {
  if (spent.getAndSet(true))
    return
  // Unblocks awaitProcessing(): after a network glitch, the new session is created
  // only once the queued Expire event has started processing
  processingStarted.countDown()
  processor.process(event) // Call KafkaController.process to handle the event
}
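The combination of a "spent" flag and a latch can be tried in isolation. The sketch below is an assumption-laden miniature, not Kafka's QueuedEvent: `QueuedTask` is a hypothetical class that runs its body at most once and lets another thread wait until processing has begun.

```scala
import java.util.concurrent.CountDownLatch
import java.util.concurrent.atomic.AtomicBoolean

object QueuedEventSketch {
  // Hypothetical miniature of a queued event wrapping an arbitrary body.
  final class QueuedTask(body: () => Unit) {
    private val spent = new AtomicBoolean(false)
    private val processingStarted = new CountDownLatch(1)

    // Runs the body at most once, even if process() is called repeatedly.
    def process(): Unit = {
      if (spent.getAndSet(true)) return
      processingStarted.countDown()
      body()
    }

    // Blocks the caller until some thread has started processing this task.
    def awaitProcessing(): Unit = processingStarted.await()
  }
}
```

A second call to `process()` returns immediately, and `awaitProcessing()` returns as soon as the first call has counted the latch down.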

QueuedEvent.process hands the event to the process method of ControllerEventProcessor. ControllerEventProcessor is an interface that, at present, only KafkaController implements. Now let's look at the process method of KafkaController

override def process(event: ControllerEvent): Unit = {
  try {
    event match {
      case event: MockEvent =>
        // Used only in test cases
        event.process()
      case ShutdownEventThread =>
        error("Received a ShutdownEventThread event. This type of event is supposed to be handle by ControllerEventThread")
      case AutoPreferredReplicaLeaderElection =>
        processAutoPreferredReplicaLeaderElection()
      case ReplicaLeaderElection(partitions, electionType, electionTrigger, callback) =>
        processReplicaLeaderElection(partitions, electionType, electionTrigger, callback)
      case UncleanLeaderElectionEnable =>
        processUncleanLeaderElectionEnable()
      case TopicUncleanLeaderElectionEnable(topic) =>
        processTopicUncleanLeaderElectionEnable(topic)
      case ControlledShutdown(id, brokerEpoch, callback) =>
        processControlledShutdown(id, brokerEpoch, callback)
      case LeaderAndIsrResponseReceived(response, brokerId) =>
        processLeaderAndIsrResponseReceived(response, brokerId)
      case UpdateMetadataResponseReceived(response, brokerId) =>
        processUpdateMetadataResponseReceived(response, brokerId)
      case TopicDeletionStopReplicaResponseReceived(replicaId, requestError, partitionErrors) =>
        processTopicDeletionStopReplicaResponseReceived(replicaId, requestError, partitionErrors)
      case BrokerChange =>
        processBrokerChange()
      case BrokerModifications(brokerId) =>
        processBrokerModification(brokerId)
      case ControllerChange =>
        processControllerChange()
      case Reelect =>
        processReelect()
      case RegisterBrokerAndReelect =>
        processRegisterBrokerAndReelect()
      case Expire =>
        processExpire()
      case TopicChange =>
        processTopicChange()
      case LogDirEventNotification =>
        processLogDirEventNotification()
      case PartitionModifications(topic) =>
        processPartitionModifications(topic)
      case TopicDeletion =>
        processTopicDeletion()
      case ApiPartitionReassignment(reassignments, callback) =>
        processApiPartitionReassignment(reassignments, callback)
      case ZkPartitionReassignment =>
        processZkPartitionReassignment()
      case ListPartitionReassignments(partitions, callback) =>
        processListPartitionReassignments(partitions, callback)
      case UpdateFeatures(request, callback) =>
        processFeatureUpdates(request, callback)
      case PartitionReassignmentIsrChange(partition) =>
        processPartitionReassignmentIsrChange(partition)
      case IsrChangeNotification =>
        processIsrChangeNotification()
      case AlterIsrReceived(brokerId, brokerEpoch, isrsToAlter, callback) =>
        processAlterIsr(brokerId, brokerEpoch, isrsToAlter, callback)
      case Startup =>
        processStartup()
    }
  } catch {
    case e: ControllerMovedException =>
      info(s"Controller moved to another broker when processing $event.", e)
      maybeResign()
    case e: Throwable =>
      error(s"Error processing event $event", e)
  } finally {
    updateMetrics()
  }
}

From the above code, we can see that KafkaController defines a handler function for each event type. The Startup event corresponds to the processStartup method. When the controller starts up, the Startup event is added to the ControllerEventManager; after passing through ControllerEventThread, it is finally dispatched back to processStartup in KafkaController
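The overall pattern (producers enqueue events, a single thread dequeues them and dispatches by pattern matching) can be sketched in a few lines. Everything here is hypothetical and simplified: `EventManager`, the three event objects, and the `handle` callback are illustrative names, and `Shutdown` plays the role of ShutdownEventThread.

```scala
import java.util.concurrent.LinkedBlockingQueue

object EventLoopSketch {
  sealed trait Event
  case object Startup extends Event
  case object Reelect extends Event
  case object Shutdown extends Event // marker that stops the loop

  // Hypothetical single-threaded event manager; `handle` stands in for KafkaController.process.
  final class EventManager(handle: Event => Unit) {
    private val queue = new LinkedBlockingQueue[Event]()
    private val thread = new Thread(() => loop(), "sketch-event-thread")

    def put(event: Event): Unit = queue.put(event)

    def start(): Unit = thread.start()

    // Enqueue the shutdown marker and wait until the thread has drained earlier events.
    def close(): Unit = {
      queue.put(Shutdown)
      thread.join()
    }

    private def loop(): Unit = {
      var running = true
      while (running) {
        queue.take() match {
          case Shutdown => running = false
          case event =>
            // A failing handler must not kill the event thread.
            try handle(event)
            catch { case e: Exception => println(s"error processing $event: $e") }
        }
      }
    }
  }
}
```

Because only one thread ever calls `handle`, the handler can mutate controller state without further locking, which is the main design benefit of funnelling every change through one queue.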

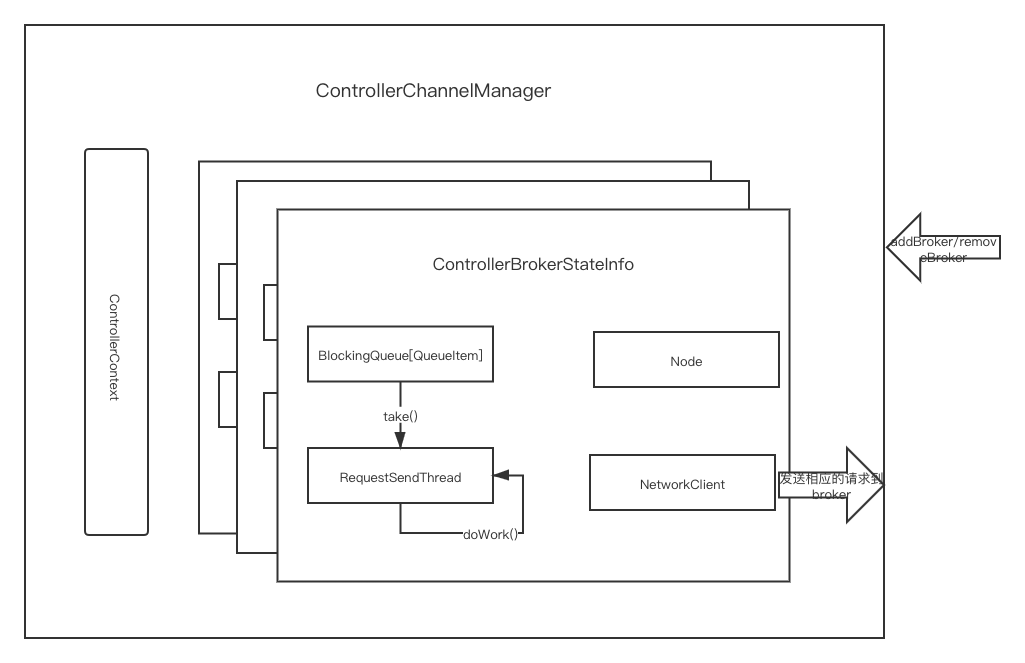

Analysis of ControllerChannelManager

Operations such as creating a topic or adding partitions to a topic are carried out by the controller, which broadcasts the result to the other brokers after processing. ControllerChannelManager manages the network connections to all other broker nodes and the sending of requests to them

class ControllerChannelManager(controllerContext: ControllerContext,
                               config: KafkaConfig,
                               time: Time,
                               metrics: Metrics,
                               stateChangeLogger: StateChangeLogger,
                               threadNamePrefix: Option[String] = None) extends Logging with KafkaMetricsGroup {
  import ControllerChannelManager._
  protected val brokerStateInfo = new HashMap[Int, ControllerBrokerStateInfo]
  def addBroker(broker: Broker): Unit = {
    brokerLock synchronized {
      if (!brokerStateInfo.contains(broker.id)) {
        addNewBroker(broker)
        startRequestSendThread(broker.id)
      }
    }
  }
  def removeBroker(brokerId: Int): Unit = {
    brokerLock synchronized {
      removeExistingBroker(brokerStateInfo(brokerId))
    }
  }
  // ... remaining members omitted in this excerpt
}

The main member variables of ControllerChannelManager are controllerContext and brokerStateInfo:

- controllerContext: the controller's metadata

- brokerStateInfo: a map from broker id to ControllerBrokerStateInfo, covering every broker in the cluster. When the controller needs to send a request to a broker, it looks up that broker's ControllerBrokerStateInfo by id and hands the request to it for sending

When a new broker comes online in the cluster, the controller detects it by watching the broker nodes in ZooKeeper and eventually calls the addBroker(broker: Broker) method of ControllerChannelManager. From the code above, addBroker does two things, addNewBroker(broker) and startRequestSendThread(broker.id):

- addNewBroker builds a ControllerBrokerStateInfo instance and adds it to brokerStateInfo

- startRequestSendThread starts the requestSendThread of the ControllerBrokerStateInfo built by addNewBroker
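The bookkeeping described above, a locked map from broker id to per-broker state with its own queue, can be sketched as follows. This is a simplified illustration rather than Kafka's class: `Registry`, `BrokerState`, `pending`, and the use of plain strings as requests are all assumptions made for the example.

```scala
import java.util.concurrent.{BlockingQueue, LinkedBlockingQueue}
import scala.collection.mutable

object BrokerRegistrySketch {
  // Hypothetical, trimmed-down stand-in for ControllerBrokerStateInfo.
  final case class BrokerState(brokerId: Int, messageQueue: BlockingQueue[String])

  final class Registry {
    private val lock = new Object
    private val states = mutable.HashMap.empty[Int, BrokerState]

    // Registers a broker once; a repeated call for the same id changes nothing.
    def addBroker(brokerId: Int): Boolean = lock.synchronized {
      if (states.contains(brokerId)) false
      else {
        states.put(brokerId, BrokerState(brokerId, new LinkedBlockingQueue[String]()))
        true
      }
    }

    // Removes a broker; returns false if the id was not registered.
    def removeBroker(brokerId: Int): Boolean = lock.synchronized {
      states.remove(brokerId).isDefined
    }

    // Queues a request for a known broker; returns false if the broker is not registered.
    def sendRequest(brokerId: Int, request: String): Boolean = lock.synchronized {
      states.get(brokerId) match {
        case Some(state) =>
          state.messageQueue.put(request)
          true
        case None => false
      }
    }

    // Number of requests waiting for the given broker.
    def pending(brokerId: Int): Int = lock.synchronized {
      states.get(brokerId).map(_.messageQueue.size).getOrElse(0)
    }
  }
}
```

Unlike the excerpt above, this sketch returns a Boolean from `removeBroker` instead of assuming the id is present, which is a choice made only to keep the example safe to call in any order.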

Let's take a look at the information of ControllerBrokerStateInfo

case class ControllerBrokerStateInfo(networkClient: NetworkClient,
                                     brokerNode: Node,
                                     messageQueue: BlockingQueue[QueueItem],
                                     requestSendThread: RequestSendThread,
                                     queueSizeGauge: Gauge[Int],
                                     requestRateAndTimeMetrics: Timer,
                                     reconfigurableChannelBuilder: Option[Reconfigurable])

The main members of ControllerBrokerStateInfo are networkClient, brokerNode, messageQueue and requestSendThread:

- networkClient: the network client used to send requests

- brokerNode: the broker's registration information, such as the host IP and port number

- messageQueue: the queue of requests waiting to be sent to this broker

- requestSendThread: the thread that takes requests from the queue and sends them

When the controller needs to send a request to a broker, it directly calls sendRequest

def sendRequest(brokerId: Int, request: AbstractControlRequest.Builder[_ <: AbstractControlRequest],
                callback: AbstractResponse => Unit = null): Unit = {
  brokerLock synchronized {
    val stateInfoOpt = brokerStateInfo.get(brokerId)
    stateInfoOpt match {
      case Some(stateInfo) =>
        stateInfo.messageQueue.put(QueueItem(request.apiKey, request, callback, time.milliseconds()))
      case None =>
        warn(s"Not sending request $request to broker $brokerId, since it is offline.")
    }
  }
}

sendRequest finds the ControllerBrokerStateInfo for the given brokerId and adds the request to its queue. The RequestSendThread, in turn, keeps taking requests from the queue and sending them

override def doWork(): Unit = {
  def backoff(): Unit = pause(100, TimeUnit.MILLISECONDS)
  val QueueItem(apiKey, requestBuilder, callback, enqueueTimeMs) = queue.take()
  requestRateAndQueueTimeMetrics.update(time.milliseconds() - enqueueTimeMs, TimeUnit.MILLISECONDS)
  var clientResponse: ClientResponse = null
  try {
    var isSendSuccessful = false
    while (isRunning && !isSendSuccessful) {
      // if a broker goes down for a long time, then at some point the controller's zookeeper listener will trigger a
      // removeBroker which will invoke shutdown() on this thread. At that point, we will stop retrying.
      try {
        if (!brokerReady()) {
          isSendSuccessful = false
          backoff()
        }
        else {
          val clientRequest = networkClient.newClientRequest(brokerNode.idString, requestBuilder,
            time.milliseconds(), true)
          clientResponse = NetworkClientUtils.sendAndReceive(networkClient, clientRequest, time)
          isSendSuccessful = true
        }
      } catch {
        case e: Throwable => // if the send was not successful, reconnect to broker and resend the message
          warn(s"Controller $controllerId epoch ${controllerContext.epoch} fails to send request $requestBuilder " +
            s"to broker $brokerNode. Reconnecting to broker.", e)
          networkClient.close(brokerNode.idString)
          isSendSuccessful = false
          backoff()
      }
    }
    if (clientResponse != null) {
      val requestHeader = clientResponse.requestHeader
      val api = requestHeader.apiKey
      if (api != ApiKeys.LEADER_AND_ISR && api != ApiKeys.STOP_REPLICA && api != ApiKeys.UPDATE_METADATA)
        throw new KafkaException(s"Unexpected apiKey received: $apiKey")
      val response = clientResponse.responseBody
      stateChangeLogger.withControllerEpoch(controllerContext.epoch).trace(s"Received response " +
        s"$response for request $api with correlation id " +
        s"${requestHeader.correlationId} sent to broker $brokerNode")
      if (callback != null) {
        callback(response)
      }
    }
  } catch {
    case e: Throwable =>
      error(s"Controller $controllerId fails to send a request to broker $brokerNode", e)
      // If there is any socket error (eg, socket timeout), the connection is no longer usable and needs to be recreated.
      networkClient.close(brokerNode.idString)
  }
}
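The core of doWork is a retry loop: keep trying to send until the send succeeds or the thread is told to stop, pausing between attempts. A minimal sketch of just that loop follows. `sendWithRetry`, its parameters, and the returned attempt count are hypothetical names for illustration; the real thread also closes the connection before retrying.

```scala
object RetrySketch {
  // Retries `send` until it succeeds or `keepRunning` returns false, sleeping between attempts.
  // Returns the response (if any) together with the number of attempts made.
  def sendWithRetry[A](send: () => A,
                       keepRunning: () => Boolean,
                       backoffMs: Long = 100L): (Option[A], Int) = {
    var attempts = 0
    var result: Option[A] = None
    while (keepRunning() && result.isEmpty) {
      attempts += 1
      try result = Some(send())
      catch {
        // On failure, back off and let the loop try again.
        case _: Exception => Thread.sleep(backoffMs)
      }
    }
    (result, attempts)
  }
}
```

Passing a `keepRunning` function mirrors the `isRunning` check in the loop above: once the broker is removed and the thread is shut down, retries stop even though no send ever succeeded.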

Analysis of the onControllerFailover process

When a broker wins the controller election, it calls onControllerFailover, which has the following main responsibilities:

- Register child-change handlers for the broker, topic, topic deletion, log dir event notification, and ISR change notification nodes, and node-change handlers for the reassign_partitions and preferred_replica_election nodes

- Initialize the controller's metadata object, ControllerContext, which contains the current topics, the live brokers, and the leaders of all partitions

- Start the ControllerChannelManager and send an update metadata request to each broker

- Start the replica state machine

- Start the partition state machine

- Schedule the automatic leader rebalance task

private def onControllerFailover(): Unit = {
  maybeSetupFeatureVersioning()
  info("Registering handlers")
  // before reading source of truth from zookeeper, register the listeners to get broker/topic callbacks
  // Register child-change handlers for the broker, topic, topic deletion, log dir event, and ISR change notification nodes
  val childChangeHandlers = Seq(brokerChangeHandler, topicChangeHandler, topicDeletionHandler, logDirEventNotificationHandler,
    isrChangeNotificationHandler)
  childChangeHandlers.foreach(zkClient.registerZNodeChildChangeHandler)
  // Register node-change handlers for the reassign_partitions and preferred_replica_election nodes
  val nodeChangeHandlers = Seq(preferredReplicaElectionHandler, partitionReassignmentHandler)
  nodeChangeHandlers.foreach(zkClient.registerZNodeChangeHandlerAndCheckExistence)
  info("Deleting log dir event notifications")
  zkClient.deleteLogDirEventNotifications(controllerContext.epochZkVersion)
  info("Deleting isr change notifications")
  zkClient.deleteIsrChangeNotifications(controllerContext.epochZkVersion)
  info("Initializing controller context")
  initializeControllerContext() // Initialize the metadata from the data stored in ZooKeeper
  info("Fetching topic deletions in progress")
  val (topicsToBeDeleted, topicsIneligibleForDeletion) = fetchTopicDeletionsInProgress()
  info("Initializing topic deletion manager")
  topicDeletionManager.init(topicsToBeDeleted, topicsIneligibleForDeletion)
  // We need to send UpdateMetadataRequest after the controller context is initialized and before the state machines
  // are started. This is because brokers need to receive the list of live brokers from UpdateMetadataRequest before
  // they can process the LeaderAndIsrRequests that are generated by replicaStateMachine.startup() and
  // partitionStateMachine.startup().
  info("Sending update metadata request")
  // Send an update metadata request to all brokers in the cluster
  sendUpdateMetadataRequest(controllerContext.liveOrShuttingDownBrokerIds.toSeq, Set.empty)
  replicaStateMachine.startup() // Start the replica state machine
  partitionStateMachine.startup() // Start the partition state machine
  info(s"Ready to serve as the new controller with epoch $epoch")
  initializePartitionReassignments()
  topicDeletionManager.tryTopicDeletion()
  val pendingPreferredReplicaElections = fetchPendingPreferredReplicaElections()
  onReplicaElection(pendingPreferredReplicaElections, ElectionType.PREFERRED, ZkTriggered)
  info("Starting the controller scheduler")
  kafkaScheduler.startup()
  if (config.autoLeaderRebalanceEnable) {
    // Schedule the automatic leader rebalance task
    scheduleAutoLeaderRebalanceTask(delay = 5, unit = TimeUnit.SECONDS)
  }
  if (config.tokenAuthEnabled) {
    info("starting the token expiry check scheduler")
    tokenCleanScheduler.startup()
    tokenCleanScheduler.schedule(name = "delete-expired-tokens",
      fun = () => tokenManager.expireTokens(),
      period = config.delegationTokenExpiryCheckIntervalMs,
      unit = TimeUnit.MILLISECONDS)
  }
}

Main responsibilities of initializeControllerContext:

- Read all brokers and their epochs from the ZooKeeper node /brokers/ids and assign them to controllerContext

- Get all topics from ZooKeeper and assign them to controllerContext

- Register handlers for partition changes on these topics

- Get the ReplicaAssignment for all topics

- Update the leader and ISR information of each partition

- Start the ControllerChannelManager (its principle and purpose have been described above)

private def initializeControllerContext(): Unit = {
  // update controller cache with delete topic information
  // Read all brokers and their epochs from the ZooKeeper node /brokers/ids and store them in controllerContext
  val curBrokerAndEpochs = zkClient.getAllBrokerAndEpochsInCluster
  val (compatibleBrokerAndEpochs, incompatibleBrokerAndEpochs) = partitionOnFeatureCompatibility(curBrokerAndEpochs)
  if (!incompatibleBrokerAndEpochs.isEmpty) {
    warn("Ignoring registration of new brokers due to incompatibilities with finalized features: " +
      incompatibleBrokerAndEpochs.map { case (broker, _) => broker.id }.toSeq.sorted.mkString(","))
  }
  controllerContext.setLiveBrokers(compatibleBrokerAndEpochs)
  info(s"Initialized broker epochs cache: ${controllerContext.liveBrokerIdAndEpochs}")
  // Get all topics from ZooKeeper and store them in controllerContext
  controllerContext.setAllTopics(zkClient.getAllTopicsInCluster(true))
  // Register handlers for partition changes on these topics
  registerPartitionModificationsHandlers(controllerContext.allTopics.toSeq)
  val replicaAssignmentAndTopicIds = zkClient.getReplicaAssignmentAndTopicIdForTopics(controllerContext.allTopics.toSet)
  processTopicIds(replicaAssignmentAndTopicIds)
  replicaAssignmentAndTopicIds.foreach { case TopicIdReplicaAssignment(_, _, assignments) =>
    assignments.foreach { case (topicPartition, replicaAssignment) =>
      controllerContext.updatePartitionFullReplicaAssignment(topicPartition, replicaAssignment)
      if (replicaAssignment.isBeingReassigned)
        controllerContext.partitionsBeingReassigned.add(topicPartition)
    }
  }
  controllerContext.clearPartitionLeadershipInfo()
  controllerContext.shuttingDownBrokerIds.clear()
  // register broker modifications handlers
  registerBrokerModificationsHandler(controllerContext.liveOrShuttingDownBrokerIds)
  // update the leader and isr cache for all existing partitions from Zookeeper
  updateLeaderAndIsrCache()
  // start the channel manager
  controllerChannelManager.startup()
}

ReplicaStateMachine, the replica state machine, mainly tracks the states of replicas and defines how each state change is processed