Karto Gu slam can be said to be the first time to use the optimization content of factor map in slam, which is an epoch-making open source laser slam system.

Its main contributions are as follows: (1) a better scanning matching process is proposed;

(2) using the observation information and pose relationship to construct factor graph to solve the best loop estimation.

Reference resources: Karto_slam framework and code analysis

Karto SLAM algorithm learning

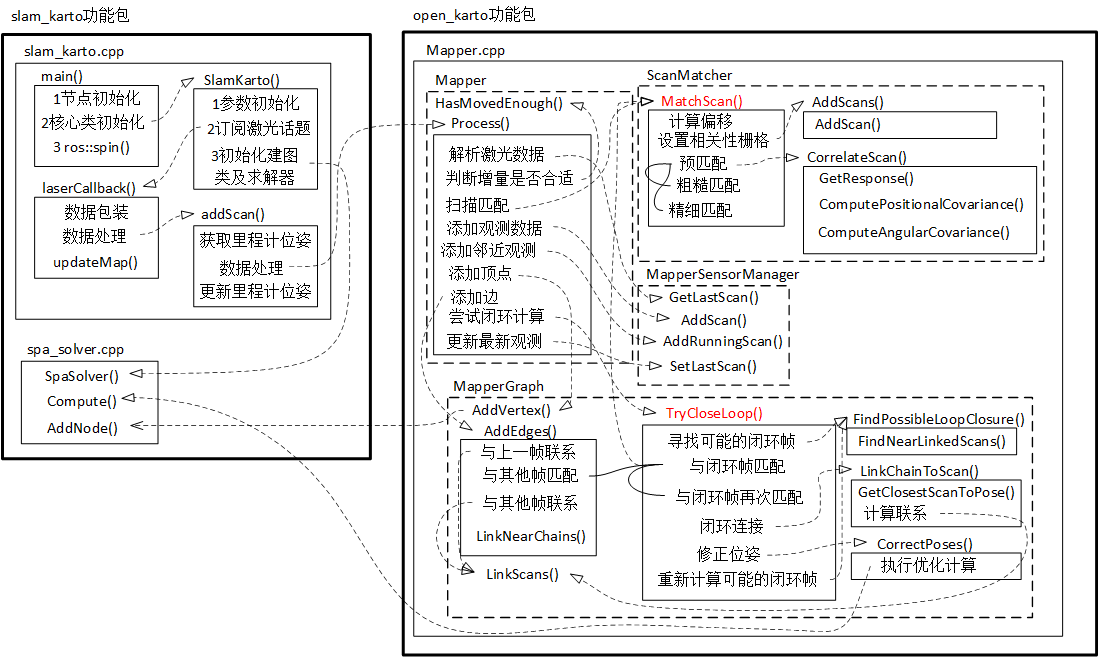

I. overall framework

Generally speaking, looking at the code, the karto code framework is relatively clean. The only bad thing is that multiple classes are put under the same file, resulting in a huge code, especially the Mapper.cpp file, which basically contains all the algorithm cores.

In the karto slam folder, we mainly focus on two aspects: slam slam karto.cpp and Spa solver.cpp.

In the open karto folder, we mainly focus on Mapper.cpp in the src folder. Mapper.h in the include folder has the specific implementation of map search template.

Again, the important functions are those marked in red: MatchScan() and TryCloseLoop().

II. Scan matching

kt_double ScanMatcher::MatchScan(LocalizedRangeScan* pScan, const LocalizedRangeScanVector& rBaseScans, Pose2& rMean,

Matrix3& rCovariance, kt_bool doPenalize, kt_bool doRefineMatch)

{

// set scan pose to be center of grid1.

Pose2 scanPose = pScan->GetSensorPose();

// scan has no readings; cannot do scan matching

// best guess of pose is based off of adjusted odometer reading

//The scan has no reading, so it can't be matched.

//The best guess for posture is based on the adjusted odometer reading

if (pScan->GetNumberOfRangeReadings() == 0)

{

rMean = scanPose;

// maximum covariance

rCovariance(0, 0) = MAX_VARIANCE; // XX

rCovariance(1, 1) = MAX_VARIANCE; // YY

rCovariance(2, 2) = 4 * math::Square(m_pMapper->m_pCoarseAngleResolution->GetValue()); // TH*TH

return 0.0;

}

// 2. Get the grid size

Rectangle2<kt_int32s> roi = m_pCorrelationGrid->GetROI();

// 3. Calculate offset (in meters - lower left corner)

Vector2<kt_double> offset;

offset.SetX(scanPose.GetX() - (0.5 * (roi.GetWidth() - 1) * m_pCorrelationGrid->GetResolution()));

offset.SetY(scanPose.GetY() - (0.5 * (roi.GetHeight() - 1) * m_pCorrelationGrid->GetResolution()));

// 4. Set offset

m_pCorrelationGrid->GetCoordinateConverter()->SetOffset(offset);

// Set correlation grid

AddScans(rBaseScans, scanPose.GetPosition());

// Calculate how far to search in each direction

Vector2<kt_double> searchDimensions(m_pSearchSpaceProbs->GetWidth(), m_pSearchSpaceProbs->GetHeight());

Vector2<kt_double> coarseSearchOffset(0.5 * (searchDimensions.GetX() - 1) * m_pCorrelationGrid->GetResolution(),

0.5 * (searchDimensions.GetY() - 1) * m_pCorrelationGrid->GetResolution());

// a coarse search only checks half the cells in each dimension

Vector2<kt_double> coarseSearchResolution(2 * m_pCorrelationGrid->GetResolution(),

2 * m_pCorrelationGrid->GetResolution());

// Actual scan matching

kt_double bestResponse = CorrelateScan(pScan, scanPose, coarseSearchOffset, coarseSearchResolution,

m_pMapper->m_pCoarseSearchAngleOffset->GetValue(),

m_pMapper->m_pCoarseAngleResolution->GetValue(),

doPenalize, rMean, rCovariance, false);

if (m_pMapper->m_pUseResponseExpansion->GetValue() == true)

{

if (math::DoubleEqual(bestResponse, 0.0))

{

// try and increase search angle offset with 20 degrees and do another match

//Try to increase the search angle offset by 20 degrees and make another match

kt_double newSearchAngleOffset = m_pMapper->m_pCoarseSearchAngleOffset->GetValue();

for (kt_int32u i = 0; i < 3; i++)

{

newSearchAngleOffset += math::DegreesToRadians(20);

bestResponse = CorrelateScan(pScan, scanPose, coarseSearchOffset, coarseSearchResolution,

newSearchAngleOffset, m_pMapper->m_pCoarseAngleResolution->GetValue(),

doPenalize, rMean, rCovariance, false);

if (math::DoubleEqual(bestResponse, 0.0) == false)

{

break;

}

}

}

}

if (doRefineMatch)

{//Make a fine match

Vector2<kt_double> fineSearchOffset(coarseSearchResolution * 0.5);

Vector2<kt_double> fineSearchResolution(m_pCorrelationGrid->GetResolution(), m_pCorrelationGrid->GetResolution());

bestResponse = CorrelateScan(pScan, rMean, fineSearchOffset, fineSearchResolution,

0.5 * m_pMapper->m_pCoarseAngleResolution->GetValue(),

m_pMapper->m_pFineSearchAngleOffset->GetValue(),

doPenalize, rMean, rCovariance, true);

}

assert(math::InRange(rMean.GetHeading(), -KT_PI, KT_PI));

return bestResponse;

}

III. loop estimation

kt_bool MapperGraph::TryCloseLoop(LocalizedRangeScan* pScan, const Name& rSensorName)

{

kt_bool loopClosed = false;

kt_int32u scanIndex = 0;

//Looking for observation frames that may be closed to the current frame

LocalizedRangeScanVector candidateChain = FindPossibleLoopClosure(pScan, rSensorName, scanIndex);

while (!candidateChain.empty())

{

Pose2 bestPose;

Matrix3 covariance;

kt_double coarseResponse = m_pLoopScanMatcher->MatchScan(pScan, candidateChain,

bestPose, covariance, false, false);//Make a scan match

m_pMapper->FireLoopClosureCheck(stream.str());

if ((coarseResponse > m_pMapper->m_pLoopMatchMinimumResponseCoarse->GetValue()) &&

(covariance(0, 0) < m_pMapper->m_pLoopMatchMaximumVarianceCoarse->GetValue()) &&

(covariance(1, 1) < m_pMapper->m_pLoopMatchMaximumVarianceCoarse->GetValue()))

{

LocalizedRangeScan tmpScan(pScan->GetSensorName(), pScan->GetRangeReadingsVector());

tmpScan.SetUniqueId(pScan->GetUniqueId());

tmpScan.SetTime(pScan->GetTime());

tmpScan.SetStateId(pScan->GetStateId());

tmpScan.SetCorrectedPose(pScan->GetCorrectedPose());

tmpScan.SetSensorPose(bestPose); // This also updates OdometricPose.

kt_double fineResponse = m_pMapper->m_pSequentialScanMatcher->MatchScan(&tmpScan, candidateChain,

bestPose, covariance, false);//Scan match again

m_pMapper->FireLoopClosureCheck(stream1.str());

if (fineResponse < m_pMapper->m_pLoopMatchMinimumResponseFine->GetValue())

m_pMapper->FireLoopClosureCheck("REJECTED!");

else

{

m_pMapper->FireBeginLoopClosure("Closing loop...");

pScan->SetSensorPose(bestPose);

LinkChainToScan(candidateChain, pScan, bestPose, covariance);

CorrectPoses();//Perform optimization calculation and correct posture

m_pMapper->FireEndLoopClosure("Loop closed!");

loopClosed = true;

}

}

candidateChain = FindPossibleLoopClosure(pScan, rSensorName, scanIndex);

}

return loopClosed;

}