Analysis of problems encountered when edgemesh hijacks traffic using iptables

Concepts

- Cloud-to-edge communication: a cloud container accesses an edge container through serviceName/clusterIP;

- Edge-to-cloud communication: the reverse of the above; an edge container accesses a cloud container through serviceName/clusterIP;

- Edge-to-edge communication: a container on an edge host accesses a container on the same edge host or on another edge host through serviceName/clusterIP;

edgemesh architecture

Compared with Kubernetes, KubeEdge mainly adds the ability to build a cluster across subnets. Its most powerful feature is that a Kubernetes cluster is no longer confined to one physical data center: the host nodes of the cluster can sit in different private LANs.

Whether hosts in different private networks can communicate with each other depends mainly on the type of NAT their firewalls apply:

- Full cone NAT

- Restricted cone NAT

- Port restricted cone NAT

- Symmetric NAT

The NAT types above are listed in order of increasing strictness. With symmetric NAT, every host sits behind its own firewall, so direct traversal is very difficult. edgemesh therefore provides two traversal schemes: direct traversal and relay traversal.

Deployment

edgemesh-server: Deployment, runs in the cloud

edgemesh-agent: DaemonSet, runs on both cloud and edge nodes

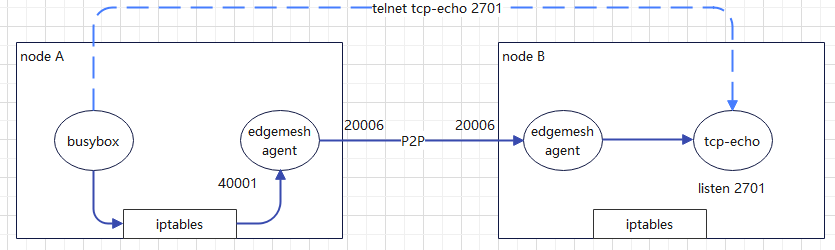

Direct traversal

For full cone NAT, restricted cone NAT and port restricted cone NAT, the direct scheme below can be used;

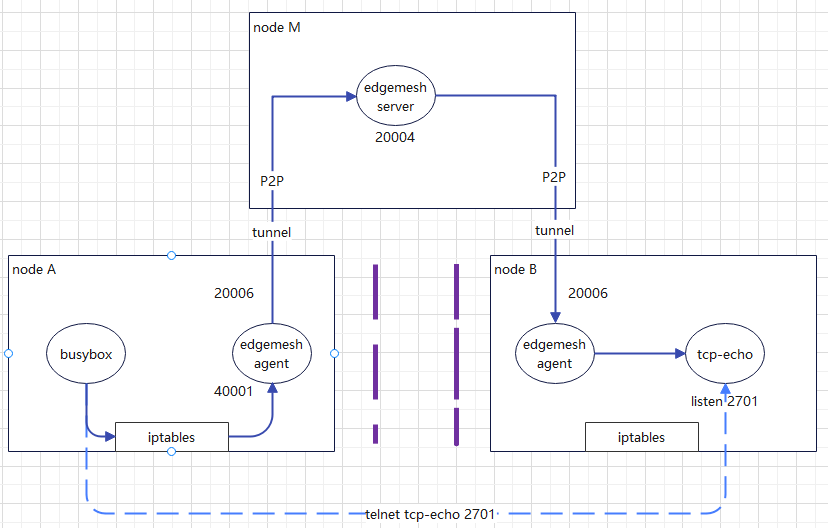

Relay traversal

Requests that cannot get through by direct traversal are forwarded by edgemesh-agent to edgemesh-server for relay. This works because every edgemesh-agent, once started, establishes a tunnel with the edgemesh-server in the cloud;
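The "direct first, relay as fallback" behaviour described above can be sketched in a few lines of Go. This is only an illustration under stated assumptions: the `dialer` type, `dialWithFallback`, `fixedDialer` and `failingDialer` are hypothetical names, and the sketch returns a text label instead of a real connection. It is not edgemesh's actual tunnel code.

```go
package main

import (
	"errors"
	"fmt"
)

// dialer is a hypothetical abstraction over "open a stream to a peer".
// It returns a description of the path used instead of a real connection.
type dialer func(peer string) (string, error)

// dialWithFallback tries the direct path first and uses the relay only
// when the direct attempt fails.
func dialWithFallback(peer string, direct, relay dialer) (string, error) {
	if path, err := direct(peer); err == nil {
		return path, nil
	}
	path, err := relay(peer)
	if err != nil {
		return "", fmt.Errorf("direct and relay both failed for %s: %w", peer, err)
	}
	return path, nil
}

// fixedDialer returns a dialer that always succeeds with the given label.
func fixedDialer(label string) dialer {
	return func(peer string) (string, error) {
		return label + " -> " + peer, nil
	}
}

// failingDialer always fails, standing in for a path blocked by NAT.
func failingDialer(peer string) (string, error) {
	return "", errors.New("unreachable: " + peer)
}

func main() {
	// Direct traversal works: the relay is never consulted.
	fmt.Println(dialWithFallback("mkedge2", fixedDialer("direct"), fixedDialer("relay")))
	// Direct traversal fails: traffic goes through the relay.
	fmt.Println(dialWithFallback("mkworker3", failingDialer, fixedDialer("relay")))
}
```

Passing the two paths in as functions keeps the fallback rule separate from how each path is actually established.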

edgemesh principle

On an edge cluster built from Kubernetes + KubeEdge, edgemesh-agent is deployed on every host, cloud or edge. It has two main functions:

- It listens on port 53 and resolves a serviceName to the corresponding clusterIP by looking up the k8s Service configuration;

- It creates a dummy network device on the host, listens on 169.254.96.16:40001 by default, and uses iptables to hook an EDGE-MESH chain into PREROUTING and OUTPUT to hijack traffic.

In the iptables nat table listing below, the EDGE-MESH chain contains the target addresses of specific services:

- Services that do not need the edgemesh proxy hit a RETURN rule and fall through to the next rule in the parent chain, i.e. the DOCKER chain and then other proxy services (kube-proxy, kube-router, ...). A service is declared this way by adding the label noproxy=edgemesh when the k8s Service is created; edgemesh-agent then writes the rule into the host's iptables. These are services that do not require cloud-edge communication;

- Traffic that edgemesh-agent does need to proxy, i.e. cloud-to-edge, edge-to-cloud and edge-to-edge communication, is redirected by the kernel to 169.254.96.16:40001. The local edgemesh-agent then forwards it through its port 20006 to the remote edgemesh-agent:20006 or to edgemesh-server:20004, and the edgemesh component at the destination finally forwards the traffic to the target service container;

    [root@edge001 ~]# iptables -t nat -nvL --line
    Chain PREROUTING (policy ACCEPT 9734 packets, 833K bytes)
    num   pkts bytes target         prot opt in       out       source            destination
    1    32317 3700K EDGE-MESH      all  --  *        *         0.0.0.0/0         0.0.0.0/0         /* edgemesh root chain */
    2    14436  930K DOCKER         all  --  *        *         0.0.0.0/0         0.0.0.0/0         ADDRTYPE match dst-type LOCAL

    Chain INPUT (policy ACCEPT 9734 packets, 833K bytes)
    num   pkts bytes target         prot opt in       out       source            destination

    Chain OUTPUT (policy ACCEPT 278 packets, 18244 bytes)
    num   pkts bytes target         prot opt in       out       source            destination
    1      893 60171 EDGE-MESH      all  --  *        *         0.0.0.0/0         0.0.0.0/0         /* edgemesh root chain */
    2       47  3390 DOCKER         all  --  *        *         0.0.0.0/0         !127.0.0.0/8      ADDRTYPE match dst-type LOCAL

    Chain POSTROUTING (policy ACCEPT 278 packets, 18244 bytes)
    num   pkts bytes target         prot opt in       out       source            destination
    1        0     0 MASQUERADE     all  --  *        !docker0  172.17.0.0/16     0.0.0.0/0
    2        0     0 RETURN         all  --  *        *         192.168.122.0/24  224.0.0.0/24
    3        0     0 RETURN         all  --  *        *         192.168.122.0/24  255.255.255.255
    4        0     0 MASQUERADE     tcp  --  *        *         192.168.122.0/24  !192.168.122.0/24 masq ports: 1024-65535
    5        0     0 MASQUERADE     udp  --  *        *         192.168.122.0/24  !192.168.122.0/24 masq ports: 1024-65535
    6        0     0 MASQUERADE     all  --  *        *         192.168.122.0/24  !192.168.122.0/24

    Chain DOCKER (2 references)
    num   pkts bytes target         prot opt in       out       source            destination
    1       27  1896 RETURN         all  --  docker0  *         0.0.0.0/0         0.0.0.0/0

    Chain EDGE-MESH (2 references)
    num   pkts bytes target         prot opt in       out       source            destination
    1        0     0 RETURN         all  --  *        *         0.0.0.0/0         10.254.165.225    /* ignore kubeedge/cloudcore service */
    2        0     0 RETURN         all  --  *        *         0.0.0.0/0         10.242.247.106    /* ignore kube-system/traefik-web-ui service */
    3        0     0 RETURN         all  --  *        *         0.0.0.0/0         10.243.116.144    /* ignore kube-system/traefik-ingress-service service */
    4        0     0 RETURN         all  --  *        *         0.0.0.0/0         10.255.163.200    /* ignore kube-system/prometheus-service service */
    5        0     0 RETURN         all  --  *        *         0.0.0.0/0         10.240.83.24      /* ignore kube-system/metrics-server service */
    6        0     0 RETURN         all  --  *        *         0.0.0.0/0         10.254.210.250    /* ignore kube-system/kube-dns service */
    7        0     0 RETURN         all  --  *        *         0.0.0.0/0         10.240.0.1        /* ignore default/kubernetes service */
    8        0     0 EDGE-MESH-TCP  tcp  --  *        *         0.0.0.0/0         10.240.0.0/12     /* tcp service proxy */

    Chain EDGE-MESH-TCP (1 references)
    num   pkts bytes target         prot opt in       out       source            destination
    1        0     0 DNAT           tcp  --  *        *         0.0.0.0/0         0.0.0.0/0         to:169.254.96.16:40001
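To make the rule order in the EDGE-MESH chain easier to follow, here is a small Go model of the decision it takes for a TCP packet. It is a sketch only: `verdict` and `mustCIDR` are hypothetical helpers, and the addresses are copied from the listing above (only three of the ignored services are included for brevity). The real decision is made by netfilter in the kernel, not by Go code.

```go
package main

import (
	"fmt"
	"net"
)

// Address edgemesh-agent listens on, as shown in the EDGE-MESH-TCP chain.
const proxyAddr = "169.254.96.16:40001"

var (
	// Cluster IP range matched by the "tcp service proxy" rule.
	serviceCIDR = mustCIDR("10.240.0.0/12")
	// A subset of the "ignore ... service" rules from the listing.
	ignoredIPs = map[string]string{
		"10.254.165.225": "kubeedge/cloudcore",
		"10.254.210.250": "kube-system/kube-dns",
		"10.240.0.1":     "default/kubernetes",
	}
)

func mustCIDR(s string) *net.IPNet {
	_, n, err := net.ParseCIDR(s)
	if err != nil {
		panic(err)
	}
	return n
}

// verdict models the chain for a TCP packet to dst: ignored services
// RETURN first, other cluster IPs are DNATed to the agent, and anything
// outside the service range falls through (RETURN) untouched.
func verdict(dst string) string {
	ip := net.ParseIP(dst)
	if ip == nil {
		return "invalid"
	}
	if _, ok := ignoredIPs[ip.String()]; ok {
		return "RETURN"
	}
	if serviceCIDR.Contains(ip) {
		return "DNAT to " + proxyAddr
	}
	return "RETURN"
}

func main() {
	for _, dst := range []string{"10.240.0.1", "10.243.1.5", "8.8.8.8"} {
		fmt.Printf("%-12s %s\n", dst, verdict(dst))
	}
}
```

The ignore rules must come before the catch-all rule for 10.240.0.0/12, because the ignored cluster IPs also fall inside that range.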

Normal access analysis

Packet capture of a direct traversal request

Environment:

4.4.2.4.2.4.2.4.2.4.2 el7.elrepo.x86_64

mkedge2 10.201.82.131: edgemesh-agent; role: edgenode; kernel: 4.15.6-1.el7.elrepo.x86_64

Use case:

Start the tcp-echo-cloud container on mkedge2, then from the busybox container on mkedge3 telnet to tcp-echo-cloud on port 2701

Explanation:

The packet capture shows that the host in the Tiantan (Temple of Heaven) data center can be reached directly from the office data center, without a relay;

Packet capture details

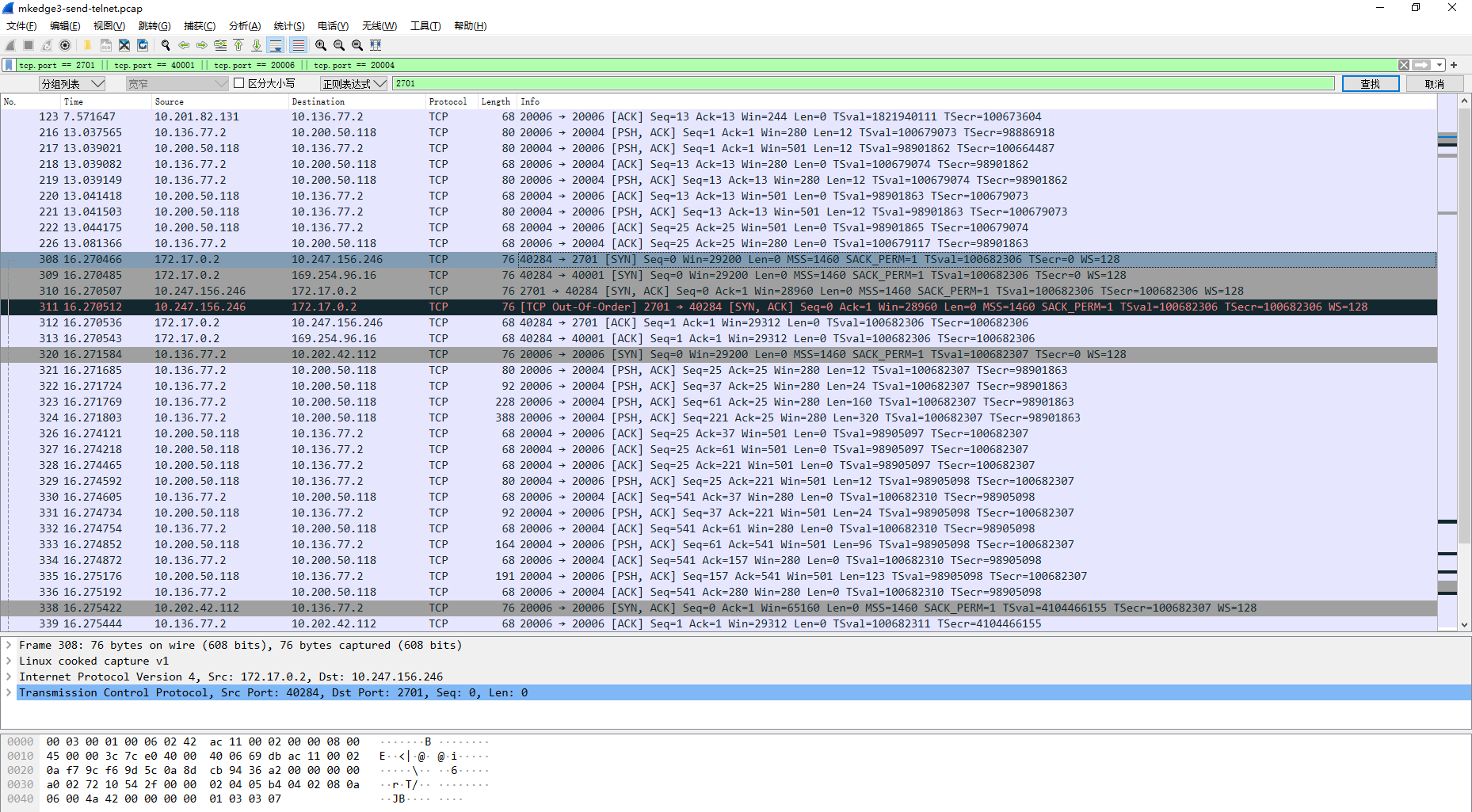

mkedge3 initiates a tcp packet capture request

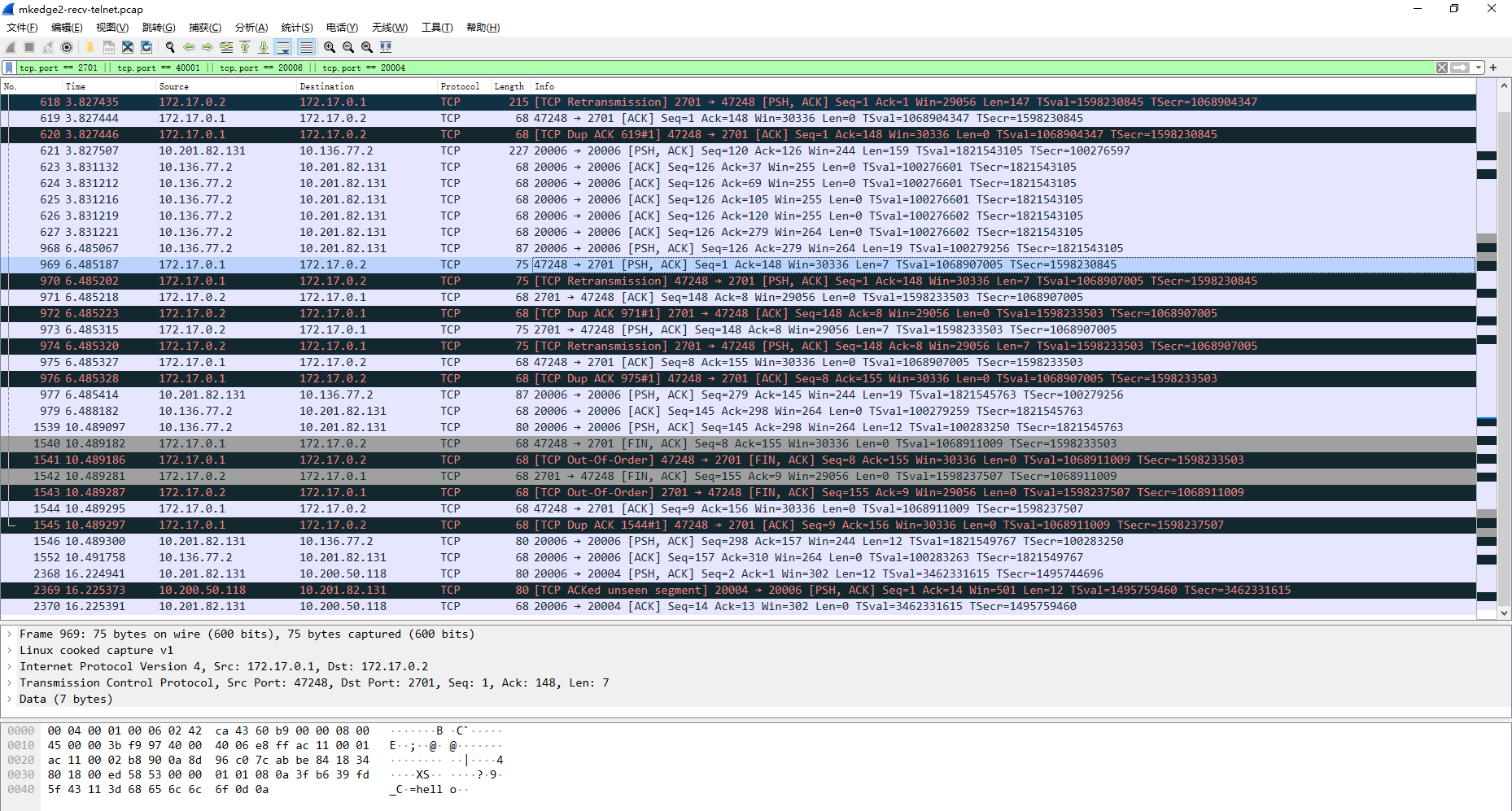

Receive payload tcp packets on mkedge2

Packet capture of a relay traversal request

Environment:

mkmaster1 10.200.50.118 (International Trade Center (ITC) data center): edgemesh-server, edgemesh-agent; role: master; kernel: 5.2.14-1.el7.elrepo.x86_64

mkworker3 10.202.42.112: edgemesh-agent; role: cloudnode; kernel: 5.2.14-1.el7.elrepo.x86_64

mkedge3 10.136.77.2: edgemesh-agent; role: edgenode; kernel: 3.10.0-514.el7.x86_64

Use case:

Start the tcp-echo-cloud container on mkworker3, then from the busybox container on mkedge3 telnet to tcp-echo-cloud on port 2701

Explanation:

The packet capture shows that the host in the Yizhuang data center cannot be reached directly from the office data center; the edgemesh-server in the ITC data center has to relay the traffic;

Packet capture details

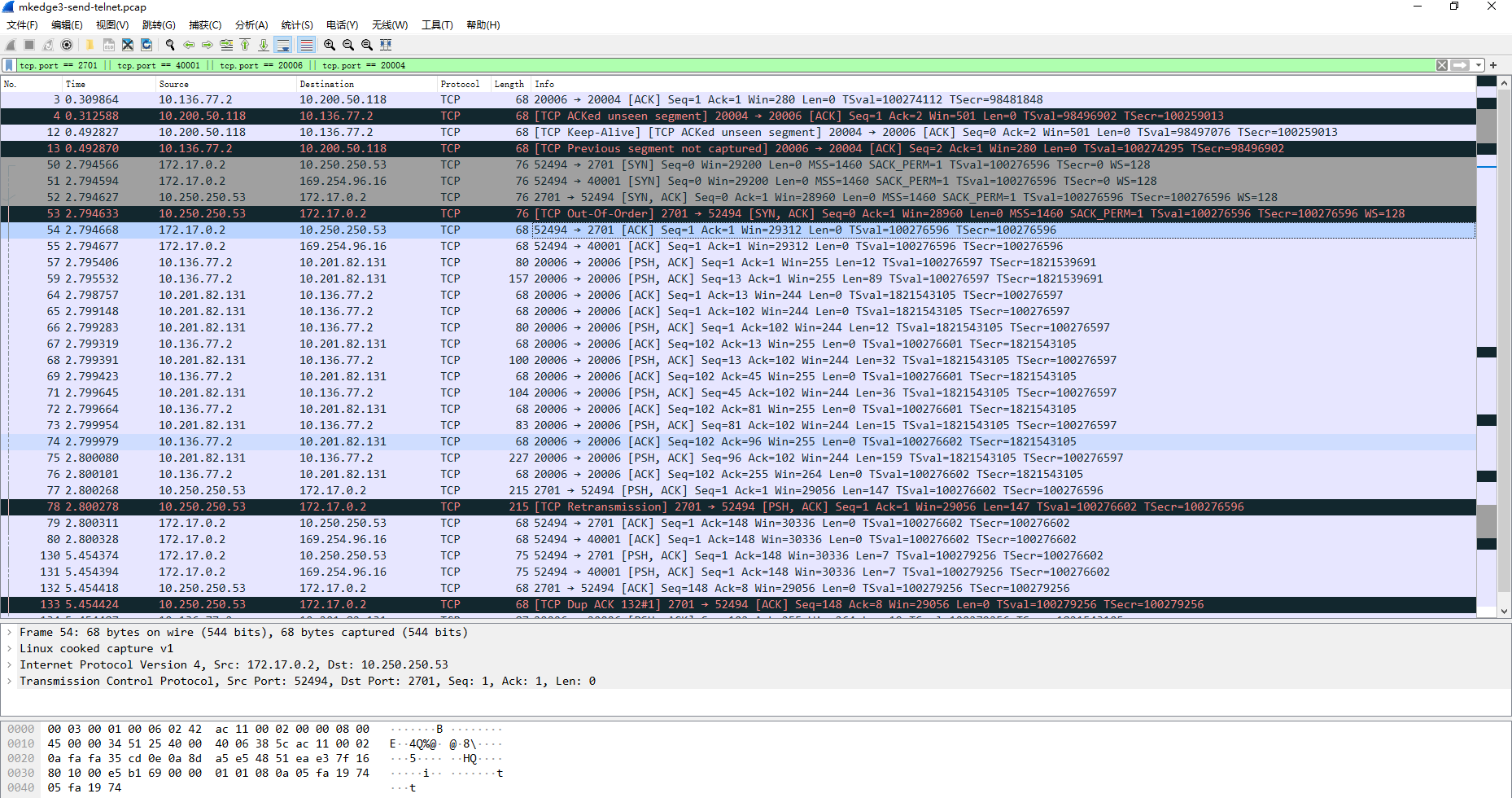

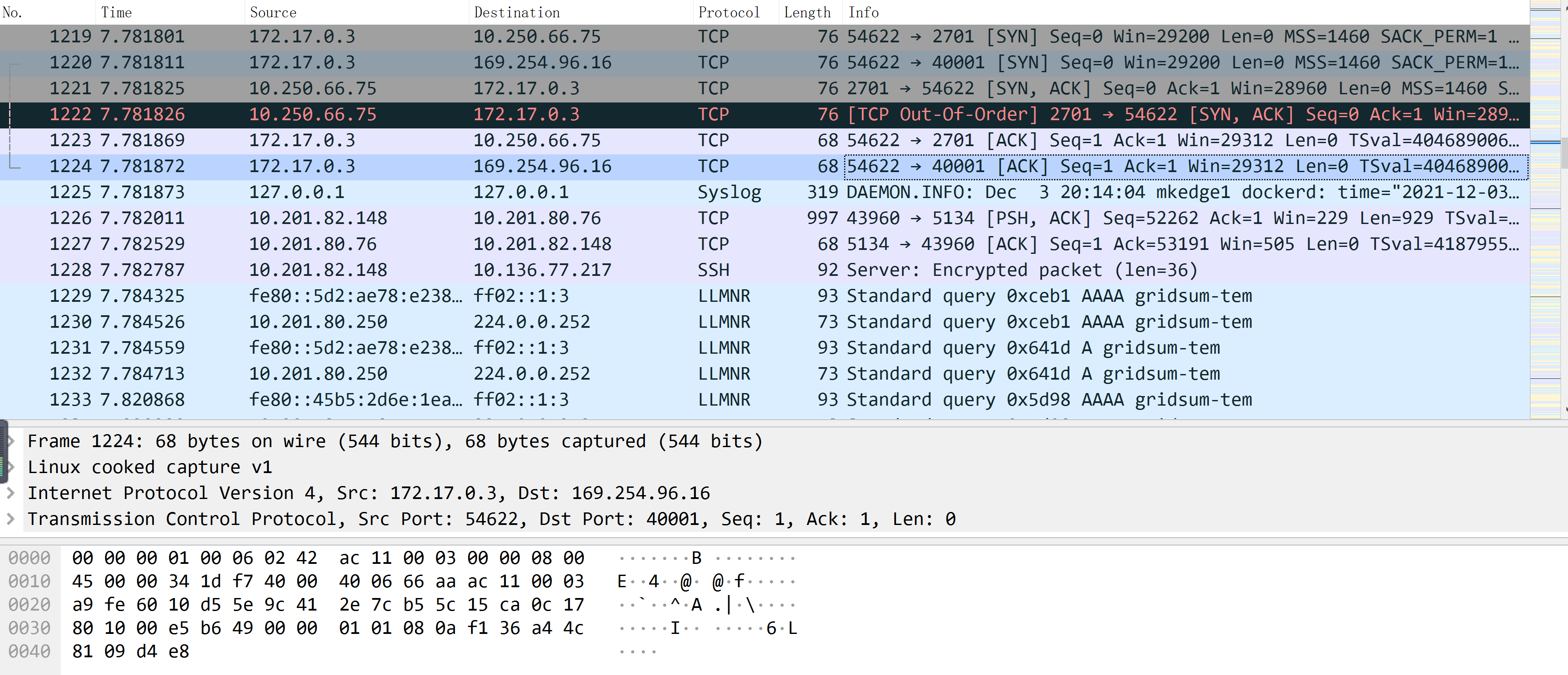

mkedge3 initiates a tcp packet capture request

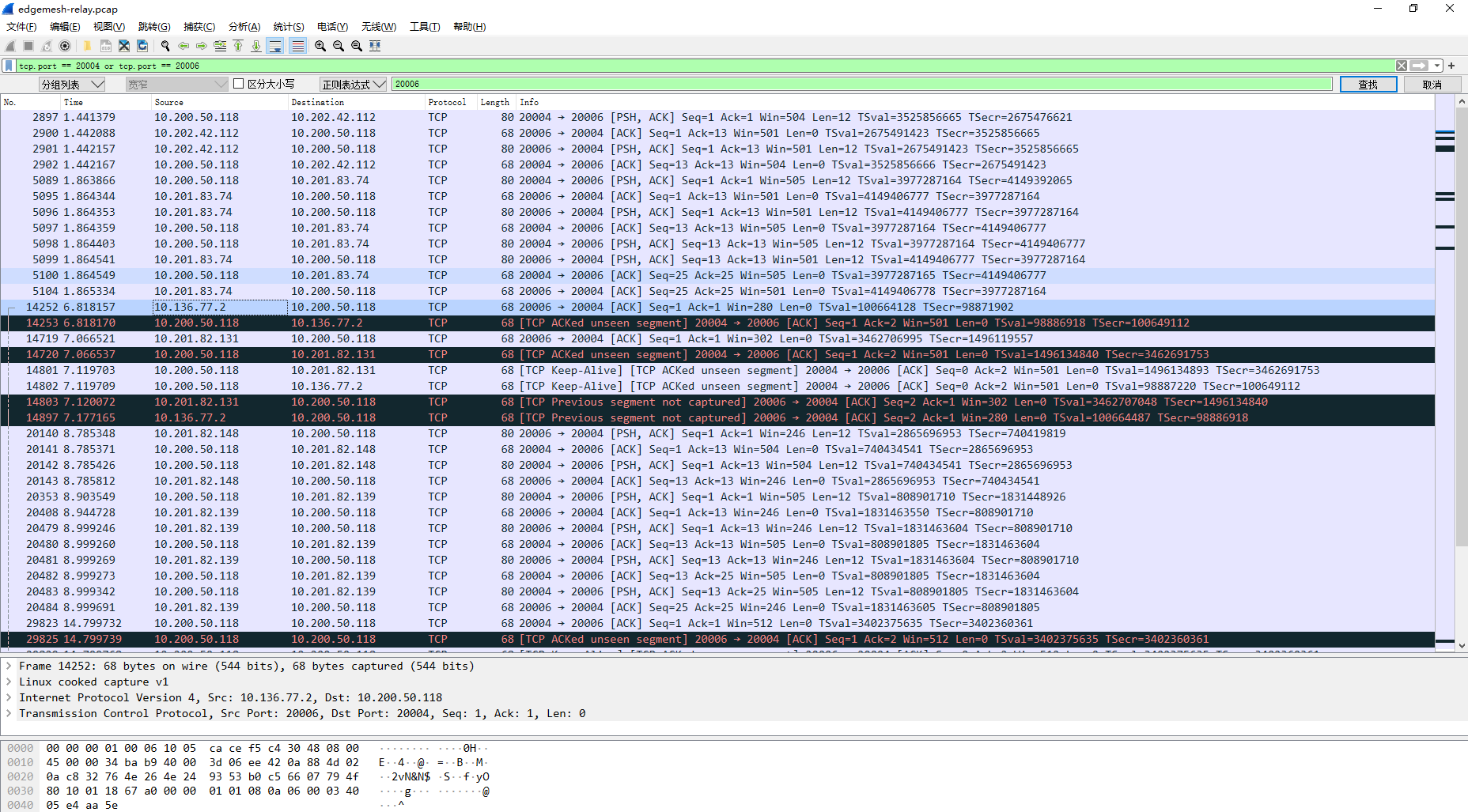

mkmaster1 relay tcp packet capture

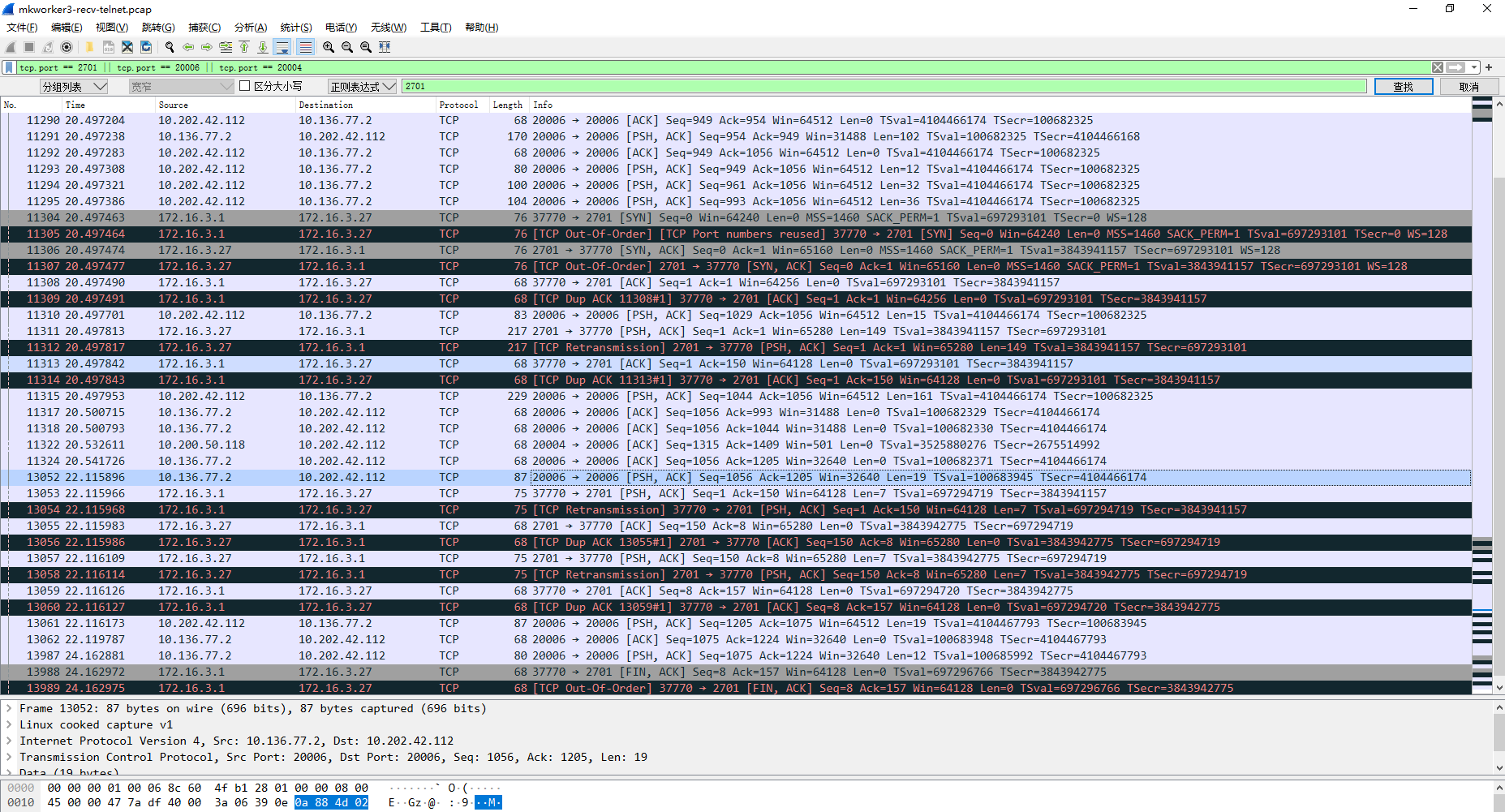

Receive payload tcp packets on mkworker3

Failed access analysis

Conclusion first: the host kernel 4.15.6-1.el7.elrepo.x86_64 (CentOS 7) appears to have a serious bug in the getsockopt system call.

Packet capture of a failed traversal request

Environment:

mkmaster1 10.200.50.118 (International Trade Center (ITC) data center): edgemesh-server, edgemesh-agent; role: master; kernel: 5.2.14-1.el7.elrepo.x86_64

mkworker1 10.201.82.139: edgemesh-agent; role: cloudnode; kernel: 5.2.14-1.el7.elrepo.x86_64

mkworker2 10.201.83.74: edgemesh-agent; role: cloudnode; kernel: 5.2.14-1.el7.elrepo.x86_64

mkedge1 10.201.82.148: edgemesh-agent; role: edgenode; kernel: 4.15.6-1.el7.elrepo.x86_64

mkedge2 10.201.82.131: edgemesh-agent; role: edgenode; kernel: 4.15.6-1.el7.elrepo.x86_64

mkedge3 10.136.77.2: edgemesh-agent; role: edgenode; kernel: 3.10.0-514.el7.x86_64

Use case:

Start the tcp-echo-cloud container on mkworker1, mkworker2, mkedge1 and mkedge2, then access these services from the busybox container on mkedge1. None of them is reachable;

Symptoms:

The packet capture shows that the traffic disappears after the service container sends its request to port 40001 of edgemesh-agent;

- The containers were started and stopped repeatedly; across many tests, normal communication was never possible;

- iptables was suspected at first. After the DOCKER chain was lost, restarting the docker service did not recreate the chain;

- After restarting the edgemesh-agent service, its container could not be removed and stayed in Running status. Forcing it with docker rm -f left edgemesh-agent zombie processes on the host;

Initial troubleshooting stage

Was it the Go version? A container image problem? I checked the possibilities one by one:

In the Run() function of edgemesh-agent's proxy.go, I added a log line before the call to realServerAddress(&conn), and numbered log markers inside realServerAddress and getSockOpt. After rebuilding the container image and testing again, marker 555 is printed but 666 never is, and the process state changes to D:

    func (proxy *EdgeProxy) Run() {
        // ensure iptables
        proxy.Proxier.Start()

        // start tcp proxy
        for {
            conn, err := proxy.TCPProxy.Listener.Accept()
            if err != nil {
                klog.Warningf("get tcp conn error: %v", err)
                continue
            }
            klog.Info("!!! has workload !!!")
            ip, port, err := realServerAddress(&conn)
            ...
        }
    }

    // realServerAddress returns an intercepted connection's original destination.
    // sockAddr and SoOriginalDst are used here but defined outside this excerpt.
    func realServerAddress(conn *net.Conn) (string, int, error) {
        tcpConn, ok := (*conn).(*net.TCPConn)
        if !ok {
            return "", -1, fmt.Errorf("not a TCPConn")
        }
        klog.Info("111")

        // File() returns a duplicate of the socket's file descriptor.
        file, err := tcpConn.File()
        if err != nil {
            return "", -1, err
        }
        defer file.Close()
        klog.Info("222")

        // To avoid potential problems from making the socket non-blocking.
        tcpConn.Close()
        *conn, err = net.FileConn(file)
        if err != nil {
            return "", -1, err
        }
        klog.Info("333")

        fd := file.Fd()
        klog.Info("444")

        // Ask netfilter for the destination the client dialed before DNAT.
        var addr sockAddr
        size := uint32(unsafe.Sizeof(addr))
        err = getSockOpt(int(fd), syscall.SOL_IP, SoOriginalDst, uintptr(unsafe.Pointer(&addr)), &size)
        if err != nil {
            return "", -1, err
        }
        klog.Info("777")

        var ip net.IP
        switch addr.family {
        case syscall.AF_INET:
            ip = addr.data[2:6]
        default:
            return "", -1, fmt.Errorf("unrecognized address family")
        }
        klog.Info("888")

        // The port is stored in network byte order (big endian).
        port := int(addr.data[0])<<8 + int(addr.data[1])
        if err := syscall.SetNonblock(int(fd), true); err != nil {
            // Propagate the error; the excerpt originally returned nil here.
            return "", -1, err
        }
        return ip.String(), port, nil
    }

    func getSockOpt(s int, level int, name int, val uintptr, vallen *uint32) (err error) {
        // 555 is the last marker seen in the log: the syscall below never returns.
        klog.Info("555")
        _, _, e1 := syscall.Syscall6(syscall.SYS_GETSOCKOPT, uintptr(s), uintptr(level), uintptr(name), uintptr(val), uintptr(unsafe.Pointer(vallen)), 0)
        if e1 != 0 {
            err = e1
        }
        klog.Info("666")
        return
    }

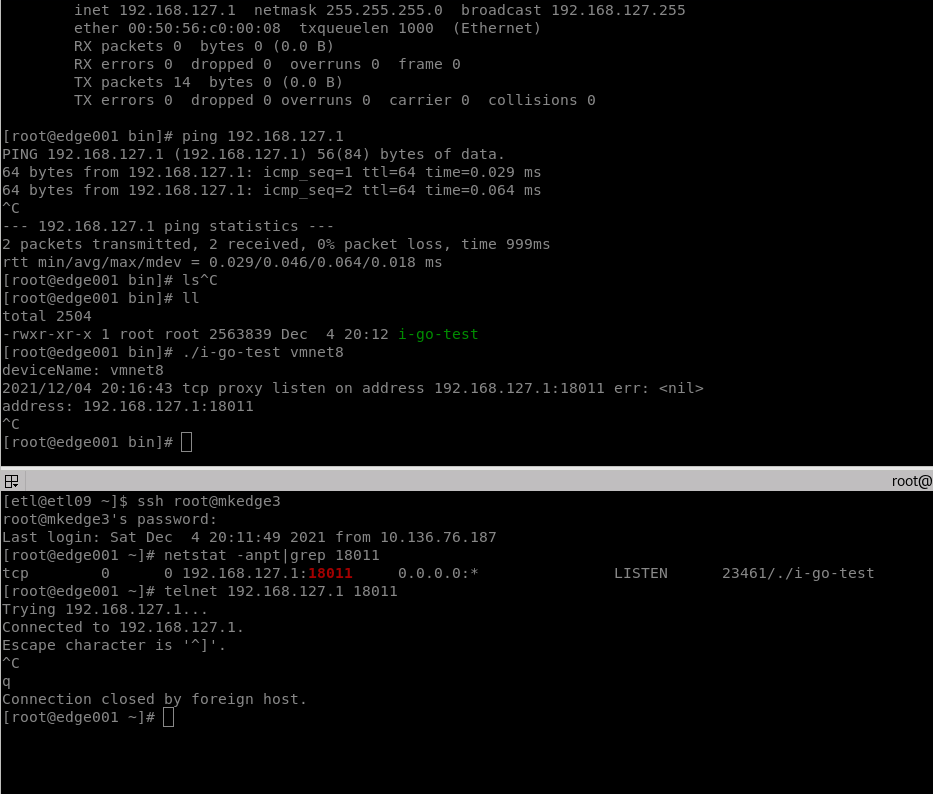
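The last step of realServerAddress, turning the raw bytes filled in by getsockopt into an IP and port, is easy to check in isolation. The sketch below assumes the usual sockaddr_in layout that the excerpt relies on (two bytes of port in network byte order, then four bytes of IPv4 address); `decodeOriginalDst` is a hypothetical helper name and the sample address is made up for illustration.

```go
package main

import (
	"fmt"
	"net"
)

// decodeOriginalDst interprets the 14 data bytes that follow the address
// family in a sockaddr_in: bytes 0-1 hold the port in network byte order
// (big endian), bytes 2-5 hold the IPv4 address.
func decodeOriginalDst(data [14]byte) (string, int) {
	port := int(data[0])<<8 | int(data[1])
	ip := net.IPv4(data[2], data[3], data[4], data[5])
	return ip.String(), port
}

func main() {
	// Port 2701 is 0x0A8D; 10.240.1.2 is an illustrative cluster IP.
	data := [14]byte{0x0A, 0x8D, 10, 240, 1, 2}
	ip, port := decodeOriginalDst(data)
	fmt.Printf("%s:%d\n", ip, port) // prints 10.240.1.2:2701
}
```

None of this decoding is reached on the affected kernel, because the getsockopt call itself never returns.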

Then I began to suspect a problem with the kernel call. First, I wrote a Go program: https://gitee.com/Hu-Lyndon/gogetsockopt.git

The following was run on the mkedge3 host, kernel 3.10.0-514.el7.x86_64. Start the program in one terminal so that it listens on port 18011, then telnet to that port from another terminal on mkedge3. The program communicates correctly and logs the address 192.168.127.1:18011

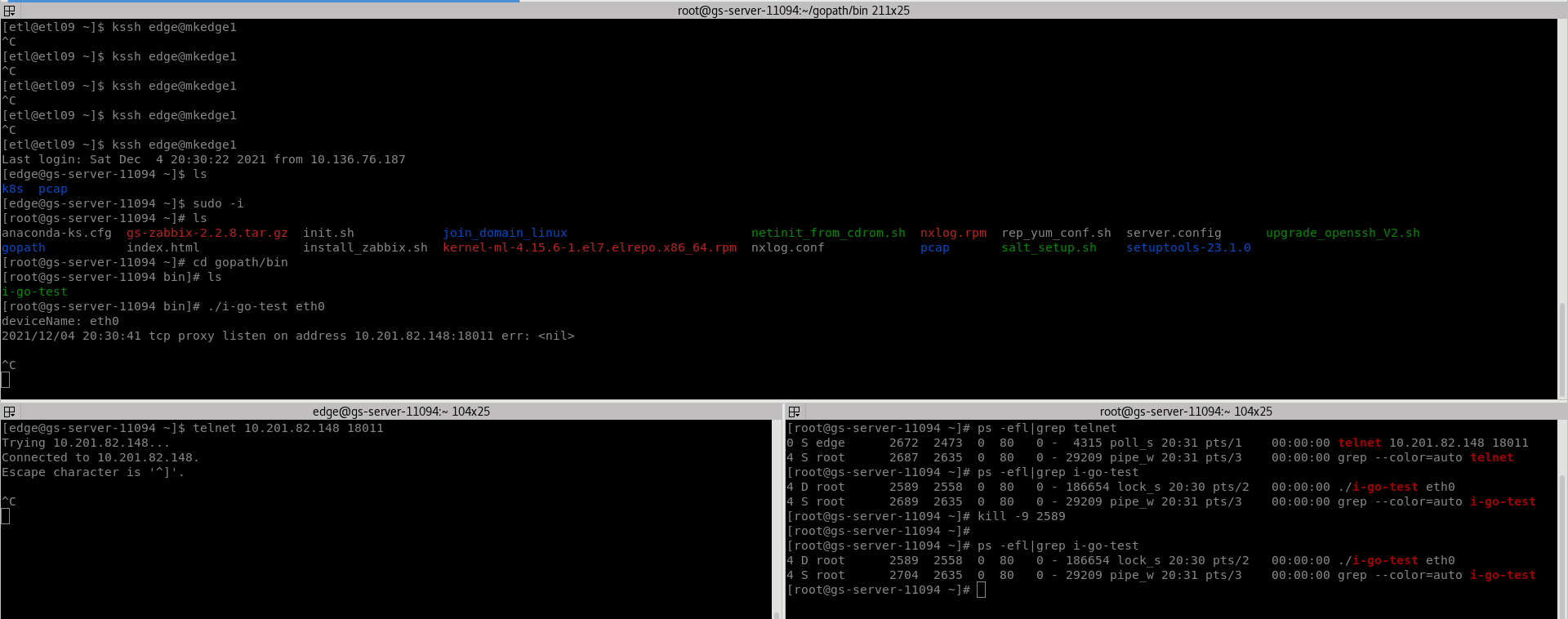

The following is the same test on mkedge1, kernel 4.15.6-1.el7.elrepo.x86_64. After telnet connects, no log line is printed, the program cannot be killed with kill -9 from a third terminal, and the process state changes to D;

Not giving up, I ran the program on mkedge2 as well; the result was the same;

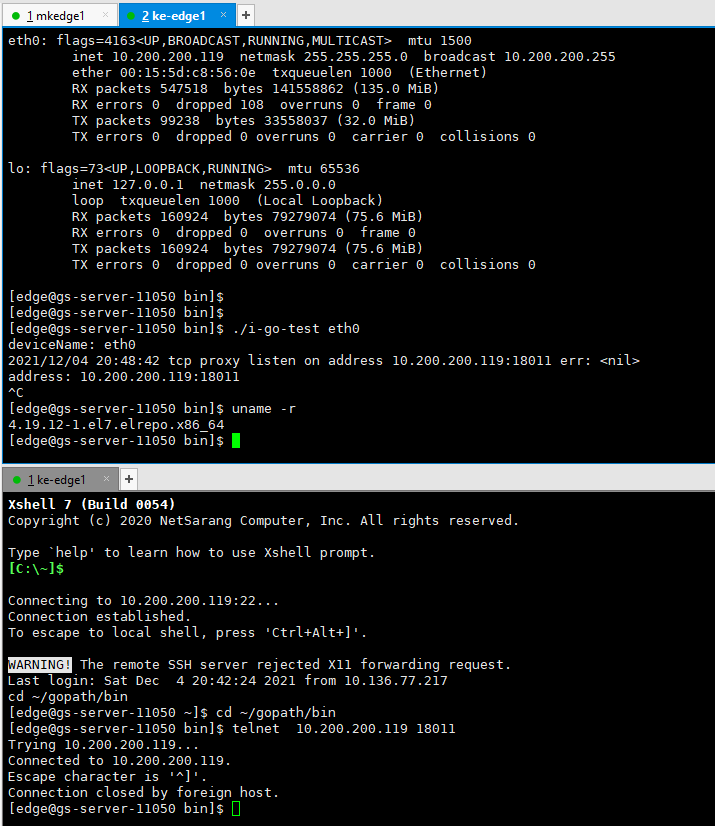
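State D means uninterruptible sleep, which is why kill -9 has no effect. A process stuck this way can be spotted by reading the state field of /proc/<pid>/stat. The Go sketch below shows only the parsing step; `parseState` is a hypothetical helper and the sample line is fabricated for illustration, not taken from the hosts above.

```go
package main

import (
	"errors"
	"fmt"
	"strings"
)

// parseState extracts the state field from the contents of /proc/<pid>/stat.
// The command name is wrapped in parentheses and may itself contain spaces
// or parentheses, so the state is the first field after the LAST ')'.
func parseState(stat string) (byte, error) {
	i := strings.LastIndexByte(stat, ')')
	if i < 0 {
		return 0, errors.New("malformed stat line")
	}
	fields := strings.Fields(stat[i+1:])
	if len(fields) == 0 || len(fields[0]) != 1 {
		return 0, errors.New("missing state field")
	}
	return fields[0][0], nil
}

func main() {
	// Illustrative sample; a real line comes from /proc/<pid>/stat.
	sample := "4242 (gogetsockopt) D 1 4242 4242 0 -1 4194560"
	state, err := parseState(sample)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	if state == 'D' {
		fmt.Println("process is in uninterruptible sleep (D)")
	} else {
		fmt.Printf("process state: %c\n", state)
	}
}
```

On a real host the input would be the contents of /proc/<pid>/stat for the stuck process.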

Still not giving up, I found a host whose kernel had been upgraded to 4.19.12-1.el7.elrepo.x86_64; there the result was correct;

Second troubleshooting stage

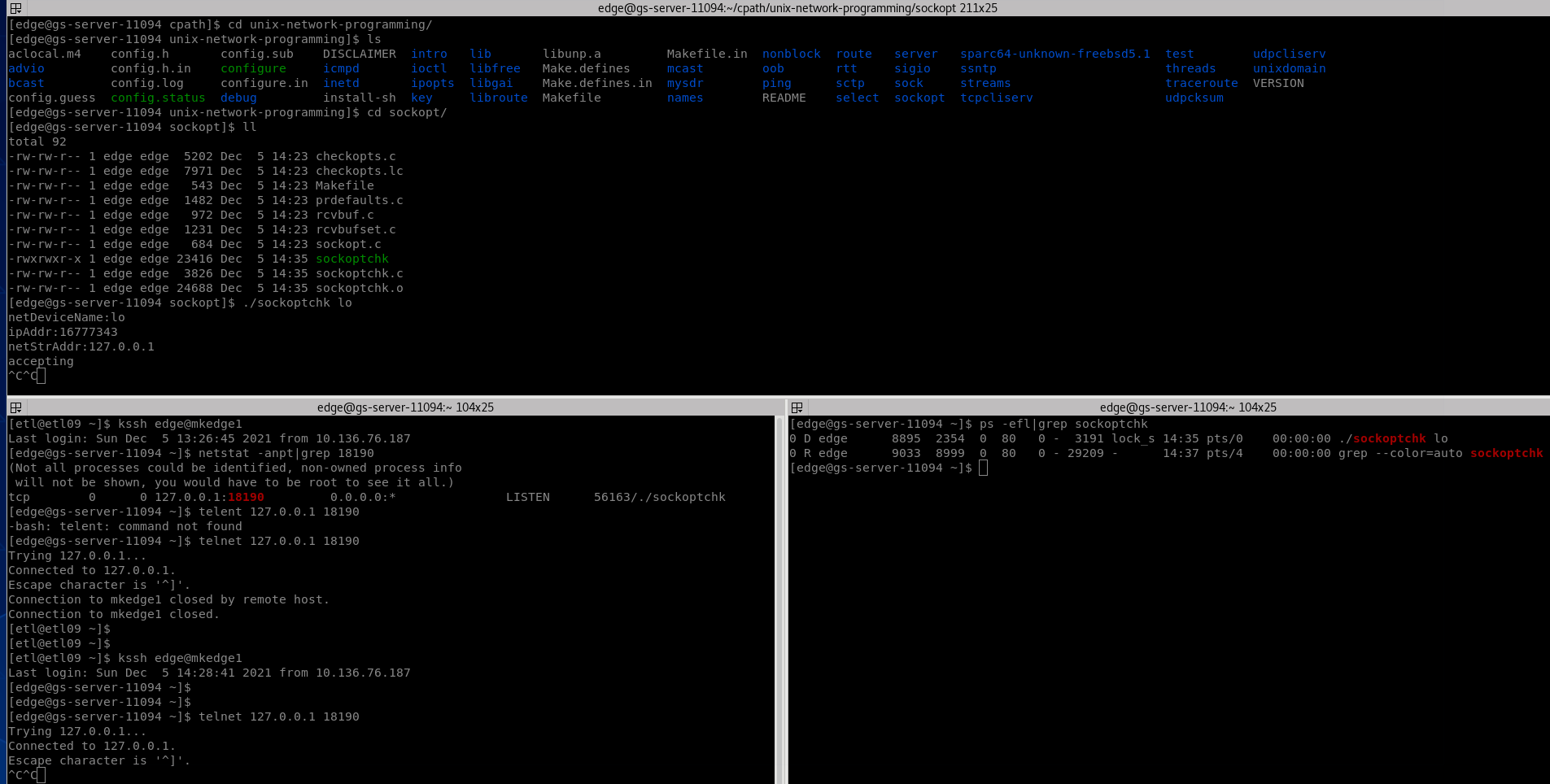

Still not giving up: although I strongly suspected kernel 4.15.6-1.el7.elrepo.x86_64, was it a Go problem or a kernel problem? So I wrote a C program to test it: https://gitee.com/Hu-Lyndon/unix-network-programming , branch compile-on-kernel-4.15.6, file sockopt/sockoptchk.c

The same problem appeared, which means it should be a kernel problem. Operations staff are advised not to install this kernel version in the future; kernels 4.19.12 and above, or simply the stock 3.10 kernel, have already been patched.
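The recommendation can be turned into a simple pre-flight check on the kernel release string (the value printed by `uname -r`). The Go sketch below encodes only what was observed in this article: 4.15.6 hangs, 3.10 and 4.19.12 or later work. Versions in between are reported as untested because the exact affected range was not determined here. `parseRelease` and `classify` are hypothetical helper names.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

var (
	knownBad  = [3]int{4, 15, 6}  // hang observed on mkedge1 and mkedge2
	firstGood = [3]int{4, 19, 12} // first newer kernel tested successfully
)

// parseRelease extracts major, minor and patch from a release string such
// as "4.15.6-1.el7.elrepo.x86_64".
func parseRelease(release string) ([3]int, error) {
	var v [3]int
	base := release
	if i := strings.IndexByte(base, '-'); i >= 0 {
		base = base[:i]
	}
	parts := strings.Split(base, ".")
	if len(parts) < 3 {
		return v, fmt.Errorf("unexpected kernel release %q", release)
	}
	for i := 0; i < 3; i++ {
		n, err := strconv.Atoi(parts[i])
		if err != nil {
			return v, fmt.Errorf("unexpected kernel release %q: %w", release, err)
		}
		v[i] = n
	}
	return v, nil
}

// less reports whether version a is older than version b.
func less(a, b [3]int) bool {
	for i := range a {
		if a[i] != b[i] {
			return a[i] < b[i]
		}
	}
	return false
}

// classify maps a kernel release to the outcome observed in this article.
func classify(release string) string {
	v, err := parseRelease(release)
	switch {
	case err != nil:
		return "unknown"
	case v == knownBad:
		return "bad"
	case v[0] == 3 && v[1] == 10:
		return "ok"
	case !less(v, firstGood):
		return "ok"
	default:
		return "untested"
	}
}

func main() {
	for _, r := range []string{
		"3.10.0-514.el7.x86_64",
		"4.15.6-1.el7.elrepo.x86_64",
		"4.19.12-1.el7.elrepo.x86_64",
		"5.2.14-1.el7.elrepo.x86_64",
	} {
		fmt.Printf("%-30s %s\n", r, classify(r))
	}
}
```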

Relevant information

https://go.dev/play/p/GMAaKucHOr

https://elixir.bootlin.com/linux/latest/C/ident/sys_getsockopt

https://git.kernel.org/pub/scm/linux/kernel/git/torvalds/linux.git/tree/net/socket.c

https://bugzilla.kernel.org/show_bug.cgi?id=198791

http://patchwork.ozlabs.org/project/netfilter-devel/patch/a4752d0887579496c5db267d9db7ff77719436d8.1518088560.git.pabeni@redhat.com/

https://github.com/cybozu-go/transocks/pull/14