Rate limiting is one of the three main tools for protecting high-concurrency systems; the other two are caching and degradation. Rate limiting is used to cap concurrency and request volume in many scenarios, such as flash sales ("seckill") and protecting a service and its downstream systems from being overwhelmed by traffic spikes.

The purpose of rate limiting is to protect the system by limiting the rate of concurrent access or requests, or the number of requests within a time window. Once the limit is reached, the system can deny service or perform traffic shaping.

Common rate limiting approaches and scenarios include limiting total concurrency (e.g., database connection pools and thread pools), limiting instantaneous concurrency (e.g., nginx's limit_conn module, which limits the number of concurrent connections; Java's Semaphore can do the same), and limiting the average rate within a time window (e.g., Guava's RateLimiter and nginx's limit_req module, which limits the average request rate per second). Other uses include limiting the call rate of remote interfaces and the consumption rate of MQ consumers. Limits can also be based on the number of network connections, network traffic, or CPU and memory load.
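As an illustration of limiting total concurrency with a thread pool, the sketch below (the class `BoundedPoolDemo` is a hypothetical name) builds a `ThreadPoolExecutor` with one worker thread, a queue that holds one task, and the `AbortPolicy` rejection handler. Once the worker is busy and the queue is full, further submissions are refused with `RejectedExecutionException`, which is how the pool denies service.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class BoundedPoolDemo {
    /** Submits blocking tasks to a pool with 1 worker and a queue of 1; returns how many were rejected. */
    static int countRejected(int tasks) {
        CountDownLatch release = new CountDownLatch(1);
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                1, 1,                                  // at most one task runs at a time
                0L, TimeUnit.MILLISECONDS,
                new ArrayBlockingQueue<>(1),           // at most one task waits in the queue
                new ThreadPoolExecutor.AbortPolicy()); // everything beyond that is rejected
        int rejected = 0;
        for (int i = 0; i < tasks; i++) {
            try {
                pool.execute(() -> {
                    try {
                        release.await(); // keep the worker busy until we release it
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                });
            } catch (RejectedExecutionException e) {
                rejected++; // worker busy and queue full: the request is denied
            }
        }
        release.countDown(); // let the accepted tasks finish
        pool.shutdown();
        try {
            pool.awaitTermination(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return rejected;
    }

    public static void main(String[] args) {
        System.out.println("rejected: " + countRejected(5));
    }
}
```

With five blocking tasks, one runs, one waits in the queue and the remaining three are rejected.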

For example, to ensure that no more than 100 calls run at the same time, we can use a Semaphore. But to limit the average call rate of a method over a period of time (for example, no more than 100 calls per second), we need RateLimiter.
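The Semaphore side of this comparison takes only a few lines with `java.util.concurrent.Semaphore`. In this sketch (`ConcurrencyLimiter` and `tryRun` are hypothetical names), a call that finds all permits in use is rejected immediately via `tryAcquire` instead of blocking. Note that it caps how many calls are in flight at once, not how many calls happen per second, which is why the average-rate case needs RateLimiter.

```java
import java.util.concurrent.Semaphore;

class ConcurrencyLimiter {
    private final Semaphore permits;

    ConcurrencyLimiter(int maxConcurrent) {
        this.permits = new Semaphore(maxConcurrent);
    }

    /** Runs the task if a slot is free; returns false (request rejected) when all slots are in use. */
    boolean tryRun(Runnable task) {
        if (!permits.tryAcquire()) {
            return false;
        }
        try {
            task.run();
            return true;
        } finally {
            permits.release(); // always free the slot, even if the task throws
        }
    }
}
```

For the scenario above, `new ConcurrencyLimiter(100)` would allow at most 100 concurrent calls.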

Fundamental Rate Limiting Algorithms

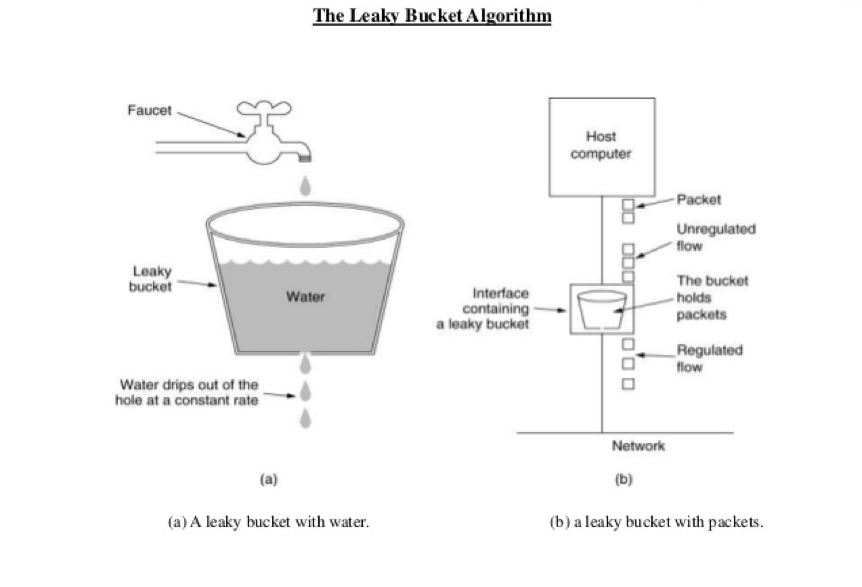

Let's first explain the two basic rate limiting algorithms: the leaky bucket algorithm and the token bucket algorithm.

As the picture above shows, the leaky bucket works like a funnel: the water flowing in represents incoming requests, and the water flowing out represents the requests our system processes. When incoming traffic is too heavy, water accumulates in the funnel, and if too much accumulates, it overflows.

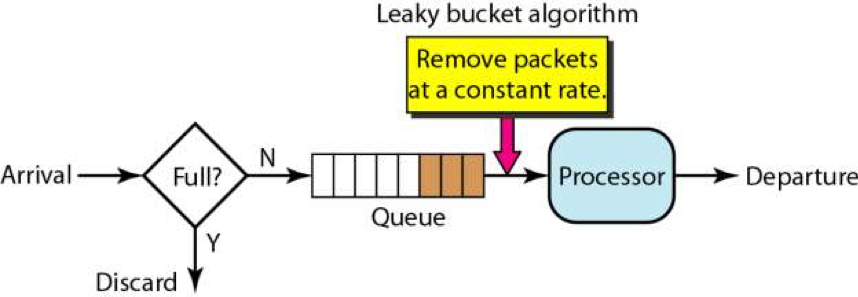

The leaky bucket algorithm is usually implemented with a queue. If the queue is not full, an incoming request is put into the queue directly, and a processor takes requests from the head of the queue at a fixed rate for processing. If there are too many requests, the queue fills up and new requests are discarded.
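The queue-based design just described can be sketched in plain Java as follows. This is a minimal illustration, not a production implementation; `LeakyBucket` and its `offer`, `leak` and `startProcessor` methods are hypothetical names. `offer` enqueues a request or discards it when the queue is full, and the processor started by `startProcessor` removes one request from the head at a fixed interval, which is what keeps the outflow rate constant.

```java
import java.util.ArrayDeque;
import java.util.Queue;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.function.Consumer;

class LeakyBucket<T> {
    private final Queue<T> queue = new ArrayDeque<>();
    private final int capacity; // bucket size: maximum number of waiting requests

    LeakyBucket(int capacity) {
        this.capacity = capacity;
    }

    /** Puts a request into the bucket; returns false (request discarded) when the queue is full. */
    synchronized boolean offer(T request) {
        if (queue.size() >= capacity) {
            return false;
        }
        queue.add(request);
        return true;
    }

    /** Takes one request from the head of the queue, or returns null if the bucket is empty. */
    synchronized T leak() {
        return queue.poll();
    }

    /** Starts a processor that leaks one request every intervalMillis and hands it to the handler. */
    ScheduledExecutorService startProcessor(long intervalMillis, Consumer<T> handler) {
        ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor();
        scheduler.scheduleAtFixedRate(() -> {
            T request = leak();
            if (request != null) {
                handler.accept(request);
            }
        }, intervalMillis, intervalMillis, TimeUnit.MILLISECONDS);
        return scheduler;
    }
}
```

One design caveat: if the handler throws, `scheduleAtFixedRate` suppresses all later runs, so a real handler should catch its own exceptions.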

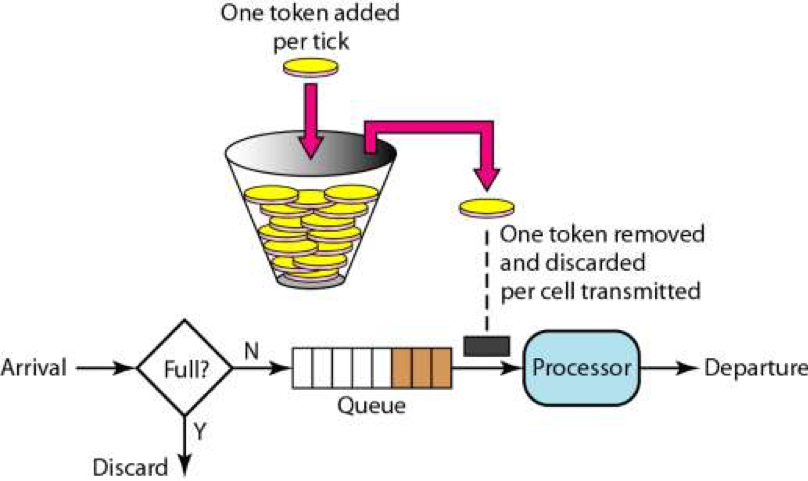

The token bucket algorithm uses a bucket that holds a fixed maximum number of tokens, and tokens are added to it at a fixed rate. Once the bucket holds its maximum number of tokens, newly added tokens are discarded. When a request arrives, it must obtain a token: if one is available, the request is processed immediately and one token is removed from the bucket. If no token is available, the request is rate-limited: it is either discarded directly or waits in a buffer.
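The token bucket needs only two pieces of state: the current token count and the time of the last refill. Below is a minimal plain-Java sketch, not Guava's implementation; `TokenBucket` and `tryAcquire(permits, nowNanos)` are hypothetical names, the bucket is assumed to start full, and the caller passes the current time explicitly (in real code, `System.nanoTime()`) so the behavior is deterministic. Requests that find too few tokens are rejected here; a variant could make them wait instead.

```java
class TokenBucket {
    private final double capacity;        // maximum number of tokens the bucket can hold
    private final double tokensPerSecond; // fixed refill rate
    private double tokens;                // tokens currently in the bucket
    private long lastRefillNanos;         // time at which `tokens` was last brought up to date

    TokenBucket(double capacity, double tokensPerSecond, long nowNanos) {
        this.capacity = capacity;
        this.tokensPerSecond = tokensPerSecond;
        this.tokens = capacity; // assumption: the bucket starts full
        this.lastRefillNanos = nowNanos;
    }

    /** Takes `permits` tokens if enough are available; returns false (request limited) otherwise. */
    synchronized boolean tryAcquire(int permits, long nowNanos) {
        refill(nowNanos);
        if (tokens >= permits) {
            tokens -= permits;
            return true;
        }
        return false;
    }

    // Adds the tokens generated since the last refill; tokens beyond capacity are discarded.
    private void refill(long nowNanos) {
        double elapsedSeconds = (nowNanos - lastRefillNanos) / 1_000_000_000.0;
        tokens = Math.min(capacity, tokens + elapsedSeconds * tokensPerSecond);
        lastRefillNanos = nowNanos;
    }
}
```

Refilling lazily on each call, rather than with a background timer, gives the same result as adding tokens at a fixed rate while avoiding an extra thread.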

Token bucket versus leaky bucket:

The token bucket adds tokens to the bucket at a fixed rate, and whether a request is processed depends on whether there are enough tokens in the bucket; when the tokens run out, new requests are rejected. The leaky bucket lets requests flow out at a constant rate no matter how fast they come in; when the accumulated requests reach the bucket's capacity, new incoming requests are rejected.

The token bucket limits the average inflow rate and allows bursts: as long as tokens remain, requests are processed, and a single request may take several tokens at once (say three or four). The leaky bucket limits the outflow to a constant rate, for example always one request per tick, never one in one tick and two in the next, which smooths out bursts in the inflow.

In short, the token bucket tolerates a certain degree of burst, while the main purpose of the leaky bucket is to smooth the outflow rate.

Guava RateLimiter

Guava is an excellent open-source Java library from Google. It provides many useful utilities for Java projects, such as collections, caching, concurrency, common annotations, string processing and I/O.

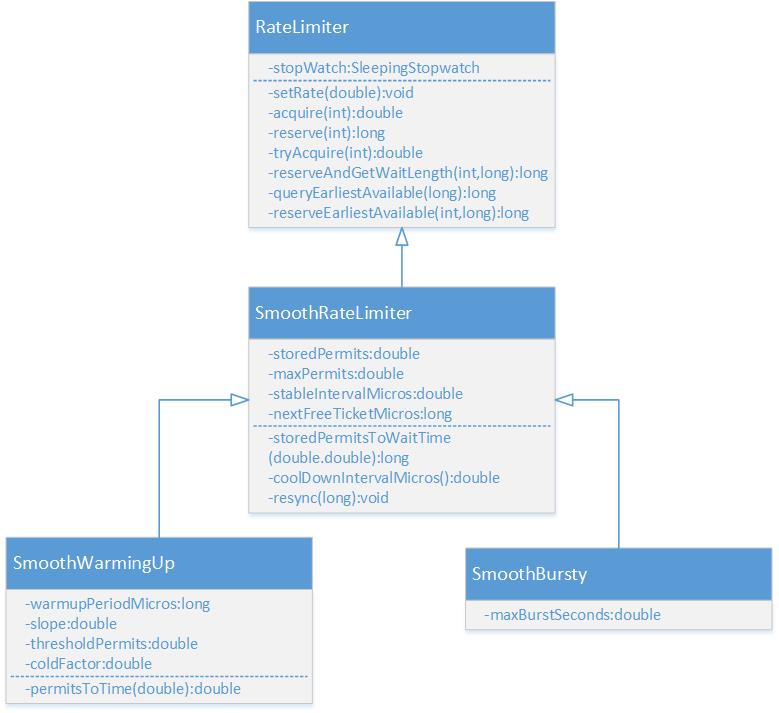

Guava's RateLimiter provides two token bucket implementations: SmoothBursty and SmoothWarmingUp.

RateLimiter's class diagram is shown above. RateLimiter is the entry class; it provides two sets of static factory methods that create its two subclasses. This follows the advice in Effective Java to consider static factory methods instead of constructors. After all, the author of that book was also closely involved with Guava, so the two go well together.

```java
// RateLimiter provides two factory methods that eventually call the following two
// functions to create the two subclasses of RateLimiter.
static RateLimiter create(SleepingStopwatch stopwatch, double permitsPerSecond) {
  RateLimiter rateLimiter = new SmoothBursty(stopwatch, 1.0 /* maxBurstSeconds */);
  rateLimiter.setRate(permitsPerSecond);
  return rateLimiter;
}

static RateLimiter create(
    SleepingStopwatch stopwatch,
    double permitsPerSecond,
    long warmupPeriod,
    TimeUnit unit,
    double coldFactor) {
  RateLimiter rateLimiter = new SmoothWarmingUp(stopwatch, warmupPeriod, unit, coldFactor);
  rateLimiter.setRate(permitsPerSecond);
  return rateLimiter;
}
```

Smooth Bursty Rate Limiting

The following code creates a rate limiter with RateLimiter's static factory method, setting the rate to five tokens per second. The returned RateLimiter guarantees that no more than five tokens are issued per second and that they are issued at a fixed rate, producing smooth output.

```java
import com.google.common.util.concurrent.RateLimiter;

public void testSmoothBursty() {
  RateLimiter r = RateLimiter.create(5);
  while (true) {
    System.out.println("get 1 tokens: " + r.acquire() + "s");
  }
  /*
   * output: roughly one token every 0.2s, matching the setting of five tokens per second.
   * get 1 tokens: 0.0s
   * get 1 tokens: 0.182014s
   * get 1 tokens: 0.188464s
   * get 1 tokens: 0.198072s
   * get 1 tokens: 0.196048s
   * get 1 tokens: 0.197538s
   * get 1 tokens: 0.196049s
   */
}
```

Because RateLimiter uses the token bucket algorithm, unused tokens accumulate. If tokens are requested infrequently, acquire does not wait and obtains tokens immediately.

```java
import com.google.common.util.concurrent.RateLimiter;

public void testSmoothBursty2() {
  RateLimiter r = RateLimiter.create(2);
  while (true) {
    System.out.println("get 1 tokens: " + r.acquire(1) + "s");
    try {
      Thread.sleep(2000); // stay idle for 2 seconds so that tokens accumulate
    } catch (InterruptedException e) {
      Thread.currentThread().interrupt();
      return;
    }
    System.out.println("get 1 tokens: " + r.acquire(1) + "s");
    System.out.println("get 1 tokens: " + r.acquire(1) + "s");
    System.out.println("get 1 tokens: " + r.acquire(1) + "s");
    System.out.println("end");
    /*
     * output:
     * get 1 tokens: 0.0s
     * get 1 tokens: 0.0s
     * get 1 tokens: 0.0s
     * get 1 tokens: 0.0s
     * end
     * get 1 tokens: 0.499796s
     * get 1 tokens: 0.0s
     * get 1 tokens: 0.0s
     * get 1 tokens: 0.0s
     */
  }
}
```

RateLimiter can also cope with burst traffic. In the following code, a single request asks for five tokens at once and is still served immediately: any tokens already accumulated in the bucket are spent first, and the shortfall is paid for in advance.

When there are not enough tokens, RateLimiter uses deferred ("lag") processing: the waiting time caused by the previous request's shortfall is borne by the next request, which waits in its place.

```java
import com.google.common.util.concurrent.RateLimiter;

public void testSmoothBursty3() {
  RateLimiter r = RateLimiter.create(5);
  while (true) {
    System.out.println("get 5 tokens: " + r.acquire(5) + "s");
    System.out.println("get 1 tokens: " + r.acquire(1) + "s");
    System.out.println("get 1 tokens: " + r.acquire(1) + "s");
    System.out.println("get 1 tokens: " + r.acquire(1) + "s");
    System.out.println("end");
    /*
     * output:
     * get 5 tokens: 0.0s
     * get 1 tokens: 0.996766s   lag effect: waits for the tokens prepaid by the previous request
     * get 1 tokens: 0.194007s
     * get 1 tokens: 0.196267s
     * end
     * get 5 tokens: 0.195756s
     * get 1 tokens: 0.995625s   lag effect: waits for the tokens prepaid by the previous request
     * get 1 tokens: 0.194603s
     * get 1 tokens: 0.196866s
     */
  }
}
```

Smooth Warm-up Rate Limiting

RateLimiter's SmoothWarmingUp is a smooth rate limiter with a warm-up period. After startup it goes through the warm-up period, gradually increasing the token issuing rate until it reaches the configured rate.

For example, the following code creates a limiter with an average rate of 2 tokens per second and a warm-up period of 3 seconds. Because of the warm-up, the limiter does not issue one token every 0.5 seconds from the start; instead, the interval between tokens shrinks along a smooth linear slope, so the rate keeps increasing until it reaches the configured rate within 3 seconds, after which tokens are issued at that fixed rate. This feature suits systems that need a little time to "warm up" right after startup.

```java
import com.google.common.util.concurrent.RateLimiter;
import java.util.concurrent.TimeUnit;

public void testSmoothwarmingUp() {
  // Average rate of 2 tokens per second, with a 3-second warm-up period
  RateLimiter r = RateLimiter.create(2, 3, TimeUnit.SECONDS);
  while (true) {
    System.out.println("get 1 tokens: " + r.acquire(1) + "s");
    System.out.println("get 1 tokens: " + r.acquire(1) + "s");
    System.out.println("get 1 tokens: " + r.acquire(1) + "s");
    System.out.println("get 1 tokens: " + r.acquire(1) + "s");
    System.out.println("end");
    /*
     * output:
     * get 1 tokens: 0.0s
     * get 1 tokens: 1.329289s
     * get 1 tokens: 0.994375s
     * get 1 tokens: 0.662888s   the first three waits add up to about 3 seconds
     * end
     * get 1 tokens: 0.49764s    normal rate: one token every 0.5 seconds
     * get 1 tokens: 0.497828s
     * get 1 tokens: 0.49449s
     * get 1 tokens: 0.497522s
     */
  }
}
```

Source code analysis

After looking at the basic examples of RateLimiter, let's learn how it works. Let's first look at the meanings of some of the more important member variables.

```java
// SmoothRateLimiter.java
// Number of tokens currently stored
double storedPermits;
// Maximum number of tokens that can be stored
double maxPermits;
// Interval between adding two tokens, in microseconds
double stableIntervalMicros;
/**
 * The time from which the next request can be granted tokens.
 * Because RateLimiter allows pre-consumption, after a request consumes tokens in advance,
 * the next request has to wait until nextFreeTicketMicros to get its tokens.
 */
private long nextFreeTicketMicros = 0L;
```

Smooth Bursty Rate Limiting

Each time acquire is called, RateLimiter compares the current time with nextFreeTicketMicros and refreshes storedPermits based on the time elapsed between them and stableIntervalMicros, the interval for adding one token. acquire then sleeps until nextFreeTicketMicros.

The acquire function is shown below. It calls reserve to compute how long it must wait to obtain the requested number of tokens, sleeps for that long via SleepingStopwatch, and finally returns the waiting time.

```java
public double acquire(int permits) {
  // Compute how long we must wait to obtain the tokens
  long microsToWait = reserve(permits);
  // Sleep the calling thread for that long
  stopwatch.sleepMicrosUninterruptibly(microsToWait);
  // Return the waiting time in seconds
  return 1.0 * microsToWait / SECONDS.toMicros(1L);
}

final long reserve(int permits) {
  checkPermits(permits);
  // Several threads may call acquire at once, so state updates are serialized with synchronized
  synchronized (mutex()) {
    return reserveAndGetWaitLength(permits, stopwatch.readMicros());
  }
}

final long reserveAndGetWaitLength(int permits, long nowMicros) {
  // The earliest time at which the requested number of tokens can be granted
  long momentAvailable = reserveEarliestAvailable(permits, nowMicros);
  // The difference from the current time is the time to wait (never negative)
  return max(momentAvailable - nowMicros, 0);
}
```

reserveEarliestAvailable is the key function that refreshes the token count and computes nextFreeTicketMicros, the next time a token can be obtained. It works in three steps: first, it calls resync to add tokens; second, it computes the extra waiting time for prepaid tokens; third, it updates nextFreeTicketMicros and storedPermits.

This relies on a feature of RateLimiter: tokens can be consumed in advance, and the resulting waiting time is actually served by the next request that acquires tokens. See the comments for a detailed explanation of the code logic.

```java
final long reserveEarliestAvailable(int requiredPermits, long nowMicros) {
  // Refresh the token count based on the elapsed time (done on every acquisition)
  resync(nowMicros);
  long returnValue = nextFreeTicketMicros;
  // Spend stored tokens first: the smaller of the requested count and the stored count
  double storedPermitsToSpend = min(requiredPermits, this.storedPermits);
  // freshPermits must be paid in advance: requested tokens minus the stored tokens spent
  double freshPermits = requiredPermits - storedPermitsToSpend;
  // When a burst arrives and stored tokens are insufficient, the shortfall is prepaid.
  // waitMicros is the time needed to generate the prepaid tokens; it is added to
  // nextFreeTicketMicros so that the next request bears it.
  long waitMicros =
      storedPermitsToWaitTime(this.storedPermits, storedPermitsToSpend)
          + (long) (freshPermits * stableIntervalMicros);
  // storedPermitsToWaitTime is implemented differently in SmoothWarmingUp and SmoothBursty;
  // this is how the warm-up buffer period is achieved.
  // SmoothBursty's storedPermitsToWaitTime returns 0, so waitMicros is just the time for the prepaid tokens.
  try {
    // Push nextFreeTicketMicros forward: the next request actually waits for this prepayment
    this.nextFreeTicketMicros = LongMath.checkedAdd(nextFreeTicketMicros, waitMicros);
  } catch (ArithmeticException e) {
    this.nextFreeTicketMicros = Long.MAX_VALUE;
  }
  // Deduct the stored tokens spent (the count never goes below 0)
  this.storedPermits -= storedPermitsToSpend;
  // Return the old nextFreeTicketMicros: this request does not wait for its own prepaid tokens
  return returnValue;
}

// SmoothBursty
long storedPermitsToWaitTime(double storedPermits, double permitsToTake) {
  return 0L;
}
```

The resync function adds stored tokens. Its core logic is (nowMicros - nextFreeTicketMicros) / coolDownIntervalMicros(), which for SmoothBursty is the same as dividing by stableIntervalMicros. It refreshes only when the current time is later than nextFreeTicketMicros; otherwise it returns immediately.

```java
void resync(long nowMicros) {
  // The current time is later than nextFreeTicketMicros, so refresh the tokens and nextFreeTicketMicros
  if (nowMicros > nextFreeTicketMicros) {
    // coolDownIntervalMicros() is the interval for adding one token while idle;
    // SmoothWarmingUp and SmoothBursty implement it differently.
    // SmoothBursty's coolDownIntervalMicros simply returns stableIntervalMicros.
    // The time elapsed since nextFreeTicketMicros divided by that interval is the number of
    // tokens generated in the meantime; the total is capped at maxPermits.
    storedPermits = min(maxPermits,
        storedPermits + (nowMicros - nextFreeTicketMicros) / coolDownIntervalMicros());
    nextFreeTicketMicros = nowMicros;
  }
  // If the current time is earlier than nextFreeTicketMicros, the thread acquiring tokens
  // must wait until nextFreeTicketMicros. Any extra prepaid time is borne by the next thread.
}

// SmoothBursty
double coolDownIntervalMicros() {
  return stableIntervalMicros;
}
```
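To see resync and reserveEarliestAvailable working together without Guava on the classpath, here is a simplified re-creation of the SmoothBursty bookkeeping in plain Java. It is a sketch, not Guava's code: the class `MiniSmoothBursty` and its `reserve(permits, nowMicros)` method are hypothetical, time is passed in explicitly instead of being read from a SleepingStopwatch, the method returns the wait instead of sleeping, the overflow check is dropped, and maxPermits assumes maxBurstSeconds = 1.0 as in the factory method shown earlier.

```java
class MiniSmoothBursty {
    private final double stableIntervalMicros; // time to generate one token
    private final double maxPermits;           // assumption: maxBurstSeconds = 1.0
    private double storedPermits = 0.0;
    private long nextFreeTicketMicros;

    MiniSmoothBursty(double permitsPerSecond, long nowMicros) {
        this.stableIntervalMicros = 1_000_000.0 / permitsPerSecond;
        this.maxPermits = 1.0 * permitsPerSecond;
        this.nextFreeTicketMicros = nowMicros;
    }

    /** Returns how long (in microseconds) the caller must wait; a real limiter would sleep that long. */
    synchronized long reserve(int permits, long nowMicros) {
        long momentAvailable = reserveEarliestAvailable(permits, nowMicros);
        return Math.max(momentAvailable - nowMicros, 0);
    }

    private long reserveEarliestAvailable(int requiredPermits, long nowMicros) {
        resync(nowMicros);
        long returnValue = nextFreeTicketMicros;
        double storedPermitsToSpend = Math.min(requiredPermits, storedPermits);
        double freshPermits = requiredPermits - storedPermitsToSpend;
        // Bursty behavior: stored tokens cost no time, only prepaid (fresh) tokens do
        long waitMicros = (long) (freshPermits * stableIntervalMicros);
        nextFreeTicketMicros += waitMicros; // the NEXT caller pays for this prepayment
        storedPermits -= storedPermitsToSpend;
        return returnValue;
    }

    private void resync(long nowMicros) {
        if (nowMicros > nextFreeTicketMicros) {
            double newPermits = (nowMicros - nextFreeTicketMicros) / stableIntervalMicros;
            storedPermits = Math.min(maxPermits, storedPermits + newPermits);
            nextFreeTicketMicros = nowMicros;
        }
    }
}
```

With a rate of 5 tokens per second, reserving 5 tokens at time 0 returns a wait of 0, while the single-token request that follows must wait 1,000,000 microseconds, the same lag effect seen in testSmoothBursty3.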

Here is an example to make the logic of resync and reserveEarliestAvailable more concrete.

Suppose RateLimiter issues two tokens per second, so stableIntervalMicros is 500 (the example uses millisecond-scale numbers for readability), storedPermits is 0, and nextFreeTicketMicros is 1553918495748. Thread 1 calls acquire(2) at time 1553918496248. First, resync computes (1553918496248 - 1553918495748) / 500 = 1, so one token is currently available, and nextFreeTicketMicros is moved up to the current time. The second token can be prepaid, so nextFreeTicketMicros = 1553918496248 + 1 * 500 = 1553918496748. Thread 1 does not need to wait.

Thread 2 then also calls acquire(2). resync finds that the current time is earlier than nextFreeTicketMicros, so no tokens are added and both tokens must be prepaid: nextFreeTicketMicros = 1553918496748 + 2 * 500 = 1553918497748. Thread 2 waits until 1553918496748, the nextFreeTicketMicros computed during thread 1's acquisition. Similarly, thread 3 waits until 1553918497748, the nextFreeTicketMicros computed during thread 2's acquisition.
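The same bookkeeping, written out step by step in the example's units (interval 500), assuming threads 2 and 3 arrive before 1553918496748:

$$
\begin{aligned}
&\textbf{Thread 1: } \text{now} = 1553918496248 \\
&\quad \text{new tokens} = \frac{1553918496248 - 1553918495748}{500} = 1,\quad \text{storedPermits} = 1,\quad \text{nextFree} \leftarrow 1553918496248 \\
&\quad \text{freshPermits} = 2 - 1 = 1,\quad \text{nextFree} = 1553918496248 + 1 \times 500 = 1553918496748 \\
&\quad \text{wait} = 1553918496248 - 1553918496248 = 0 \\
&\textbf{Thread 2: } \text{now} < 1553918496748 \Rightarrow \text{no new tokens},\quad \text{freshPermits} = 2 \\
&\quad \text{nextFree} = 1553918496748 + 2 \times 500 = 1553918497748,\quad \text{waits until } 1553918496748 \\
&\textbf{Thread 3: } \text{waits until } 1553918497748
\end{aligned}
$$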

Smooth Warm-up Rate Limiting

That covers how RateLimiter implements smooth bursty rate limiting. Now let's look at how it adds a warm-up buffer period.

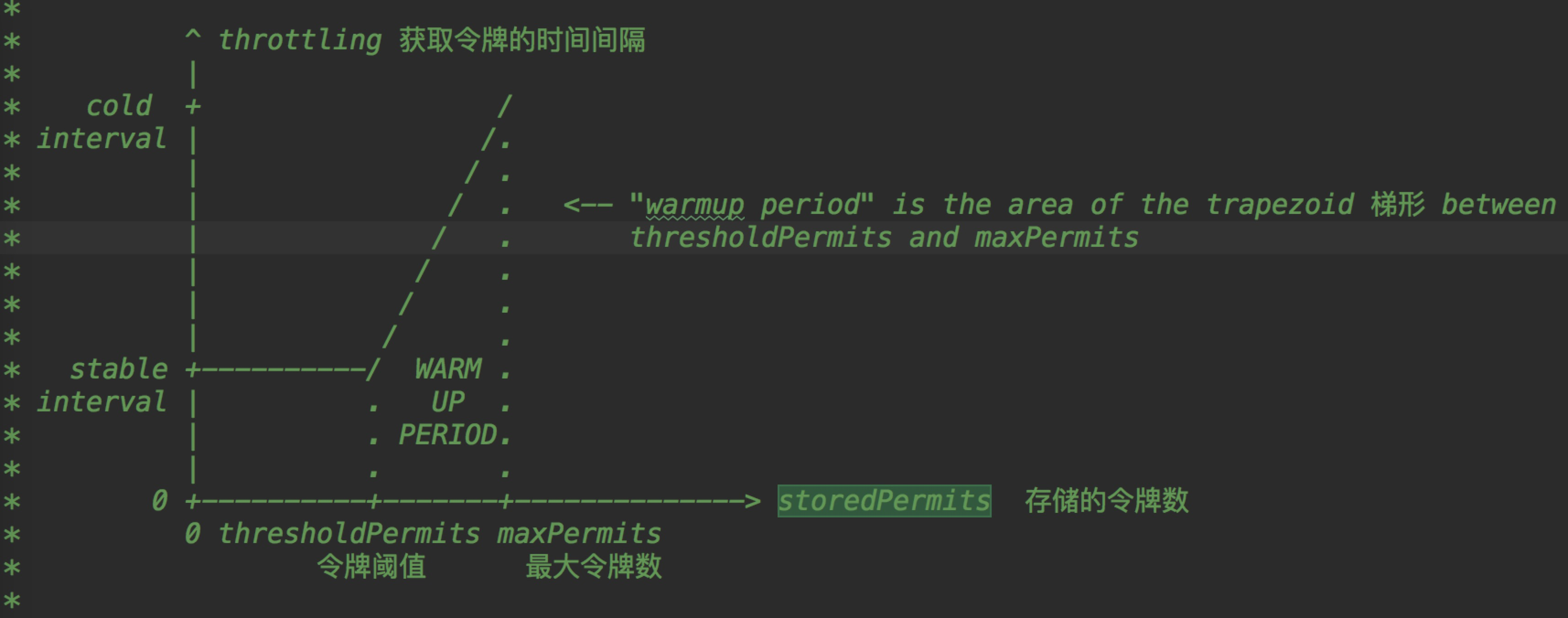

The key to SmoothWarmingUp's warm-up buffer is that the token issuing rate changes with time and with the number of stored tokens: it starts slow and then speeds up. As shown in the figure above, the interval between tokens gradually shrinks. As the number of stored tokens falls from maxPermits to thresholdPermits, the time cost of issuing a token decreases from coldInterval to stableInterval.

The relevant SmoothWarmingUp code is shown below, with the logic explained in the comments.

```java
// SmoothWarmingUp: the waiting time is the area of the trapezoid and/or rectangle in the figure above.
long storedPermitsToWaitTime(double storedPermits, double permitsToTake) {
  // Stored permits above the threshold
  double availablePermitsAboveThreshold = storedPermits - thresholdPermits;
  long micros = 0;
  // If the number of tokens currently stored exceeds thresholdPermits
  if (availablePermitsAboveThreshold > 0.0) {
    // Number of tokens to consume from the right side of the threshold
    double permitsAboveThresholdToTake = min(availablePermitsAboveThreshold, permitsToTake);
    // Trapezoid area = height * (top + bottom) / 2
    // height: permitsAboveThresholdToTake, the tokens consumed on the right side
    // longer base: permitsToTime(availablePermitsAboveThreshold)
    // shorter base: permitsToTime(availablePermitsAboveThreshold - permitsAboveThresholdToTake)
    micros = (long) (permitsAboveThresholdToTake
        * (permitsToTime(availablePermitsAboveThreshold)
            + permitsToTime(availablePermitsAboveThreshold - permitsAboveThresholdToTake))
        / 2.0);
    // Subtract the tokens already taken from the right side of the threshold
    permitsToTake -= permitsAboveThresholdToTake;
  }
  // The stable-period part is a rectangle: length times width
  micros += (stableIntervalMicros * permitsToTake);
  return micros;
}

double coolDownIntervalMicros() {
  // While idle, one token is added every warmupPeriodMicros / maxPermits, so an empty
  // bucket refills to maxPermits in exactly the warm-up period.
  return warmupPeriodMicros / maxPermits;
}
```
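The trapezoid formula can be checked numerically. The plain-Java sketch below (the class `WarmupMath` is hypothetical) derives thresholdPermits, maxPermits and the slope the way we understand Guava's SmoothWarmingUp sets them up when the rate is configured, with coldInterval = 3 × stableInterval; treat those formulas and the cold factor of 3.0 as assumptions rather than a quote of Guava's source. It then evaluates the wait-time function above for a limiter that starts "cold" with maxPermits stored, using the 2 tokens per second and 3-second warm-up from testSmoothwarmingUp.

```java
class WarmupMath {
    final double stableIntervalMicros;
    final double thresholdPermits;
    final double maxPermits;
    final double slope; // extra interval per stored token above the threshold

    WarmupMath(double permitsPerSecond, double warmupPeriodMicros, double coldFactor) {
        stableIntervalMicros = 1_000_000.0 / permitsPerSecond;
        double coldIntervalMicros = stableIntervalMicros * coldFactor;
        // Assumed setup: the stable part (left of the threshold) covers half the warm-up period,
        // and the trapezoid right of the threshold has an area equal to the warm-up period.
        thresholdPermits = 0.5 * warmupPeriodMicros / stableIntervalMicros;
        maxPermits = thresholdPermits
                + 2.0 * warmupPeriodMicros / (stableIntervalMicros + coldIntervalMicros);
        slope = (coldIntervalMicros - stableIntervalMicros) / (maxPermits - thresholdPermits);
    }

    /** Interval for one token when `permits` tokens are stored above the threshold (linear ramp). */
    double permitsToTime(double permits) {
        return stableIntervalMicros + permits * slope;
    }

    /** Same trapezoid-plus-rectangle area as storedPermitsToWaitTime above. */
    long storedPermitsToWaitTime(double storedPermits, double permitsToTake) {
        double above = storedPermits - thresholdPermits;
        long micros = 0;
        if (above > 0.0) {
            double take = Math.min(above, permitsToTake);
            micros = (long) (take * (permitsToTime(above) + permitsToTime(above - take)) / 2.0);
            permitsToTake -= take;
        }
        micros += (long) (stableIntervalMicros * permitsToTake);
        return micros;
    }

    public static void main(String[] args) {
        WarmupMath m = new WarmupMath(2, 3_000_000, 3.0);
        double stored = m.maxPermits; // a fresh limiter starts cold, with the bucket full
        for (int i = 0; i < 4; i++) {
            System.out.println(m.storedPermitsToWaitTime(stored, 1) / 1e6 + "s");
            stored -= 1;
        }
    }
}
```

With these inputs thresholdPermits = 3 and maxPermits = 6, and the cost of the first four single-token acquisitions comes out at roughly 1.333 s, 1.0 s, 0.667 s and 0.5 s. Because of prepayment, each cost shows up as the wait of the following acquire call, which is close to the 1.33 s, 0.99 s, 0.66 s and 0.5 s printed by testSmoothwarmingUp.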

Epilogue

RateLimiter only limits the rate within a single process (single-machine rate limiting). For cluster-wide rate limiting, you need to introduce something like Redis or Alibaba's open-source Sentinel middleware. Stay tuned for a follow-up article on that topic.