Brief introduction

- The previous notes covered the Camera control flow in detail. The other important part of the Camera process is the data flow.

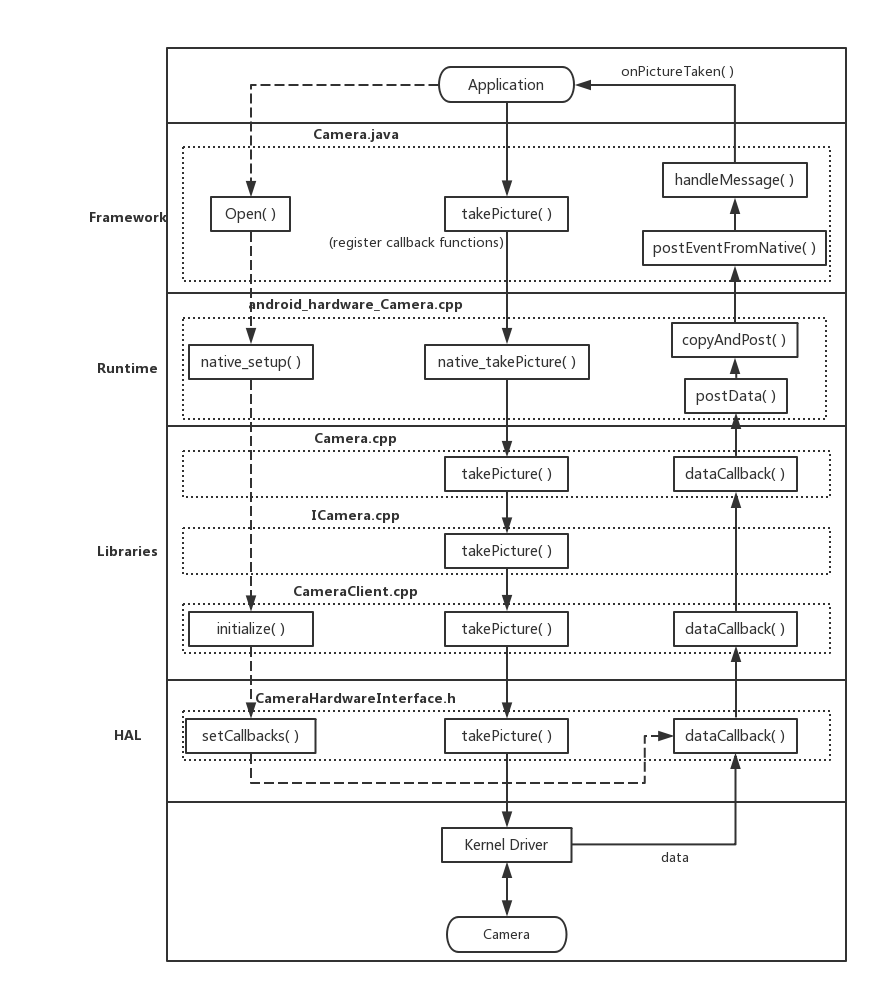

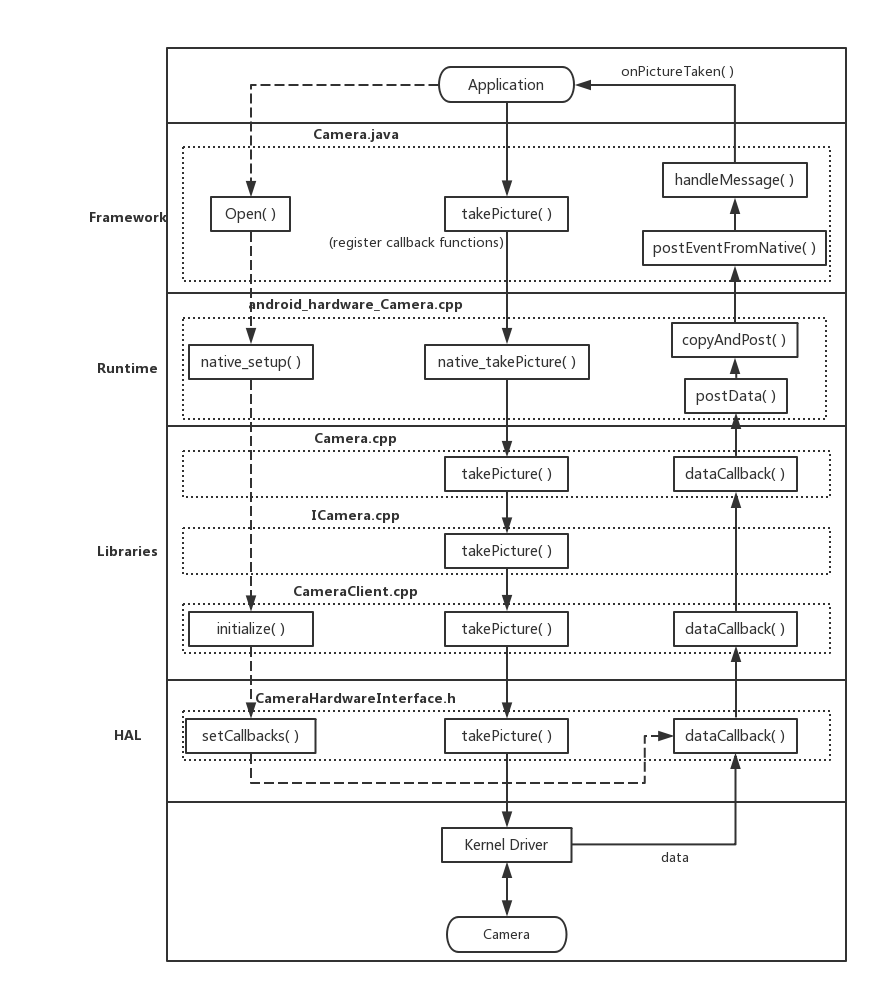

In Camera API 1, data is returned to the application layer by layer, from bottom to top, mainly through function callbacks.

- Because the data flow is relatively simple, I combine it with the control flow and trace a fairly complete Camera process starting from the takePicture() method. This article concludes the series of notes.

takePicture() flow

1. Open process

- The Camera open process was described in detail in a previous note.

- Here, we focus on the HAL-layer part of that process.

1.1 CameraHardwareInterface.h

- Location: frameworks/av/services/camera/libcameraservice/device1/CameraHardwareInterface.h

- setCallbacks():

- Sets the notify callback, which signals that data has been updated.

- Sets the data callback and the data timestamp callback, stored in the function pointers mDataCb and mDataCbTimestamp.

- Note that when the callbacks are registered through mDevice->ops, wrapper functions such as __data_cb are passed in rather than the function pointers just stored. __data_cb is implemented in this same file and wraps the original callback in one extra layer.

void setCallbacks(notify_callback notify_cb,

data_callback data_cb,

data_callback_timestamp data_cb_timestamp,

void* user)

{

mNotifyCb = notify_cb;

mDataCb = data_cb;

mDataCbTimestamp = data_cb_timestamp;

mCbUser = user;

ALOGV("%s(%s)", __FUNCTION__, mName.string());

if (mDevice->ops->set_callbacks) {

mDevice->ops->set_callbacks(mDevice,

__notify_cb,

__data_cb,

__data_cb_timestamp,

__get_memory,

this);

}

}

- __data_cb():

- A thin wrapper around the original callback that adds a bounds check on the buffer index to prevent out-of-range access.

static void __data_cb(int32_t msg_type,

const camera_memory_t *data, unsigned int index,

camera_frame_metadata_t *metadata,

void *user)

{

ALOGV("%s", __FUNCTION__);

CameraHardwareInterface *__this =

static_cast<CameraHardwareInterface *>(user);

sp<CameraHeapMemory> mem(static_cast<CameraHeapMemory *>(data->handle));

if (index >= mem->mNumBufs) {

ALOGE("%s: invalid buffer index %d, max allowed is %d", __FUNCTION__,

index, mem->mNumBufs);

return;

}

__this->mDataCb(msg_type, mem->mBuffers[index], metadata, __this->mCbUser);

}
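To make the wrapping idea concrete, here is a minimal sketch of the same pattern outside AOSP. All names (HardwareInterfaceSketch, dataTrampoline, DataCallback) are hypothetical stand-ins, not the real CameraHardwareInterface types. It assumes the lower layer only accepts a plain function plus an opaque user pointer, so the object passes itself as that pointer and the static function forwards to the stored callback after checking the index.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical callback type of the upper layer (stand-in for data_callback).
using DataCallback = void (*)(int32_t msgType,
                              const std::vector<uint8_t>* buffer,
                              void* user);

class HardwareInterfaceSketch {
public:
    explicit HardwareInterfaceSketch(std::size_t numBufs) : mBuffers(numBufs) {}

    // Store the upper layer's callback and its opaque cookie.
    void setCallbacks(DataCallback cb, void* user) {
        mDataCb = cb;
        mCbUser = user;
    }

    // The function a lower layer would call: `user` is the object itself.
    static void dataTrampoline(int32_t msgType, unsigned int index, void* user) {
        assert(user != nullptr);
        auto* self = static_cast<HardwareInterfaceSketch*>(user);
        if (index >= self->mBuffers.size()) {
            return;  // reject an out-of-range buffer index
        }
        if (self->mDataCb != nullptr) {
            // Forward to the callback that was originally registered.
            self->mDataCb(msgType, &self->mBuffers[index], self->mCbUser);
        }
    }

private:
    std::vector<std::vector<uint8_t>> mBuffers;
    DataCallback mDataCb = nullptr;
    void* mCbUser = nullptr;
};
```

With two buffers, a call with index 1 reaches the registered callback, while a call with index 2 is dropped silently by the bounds check.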

2. Control flow

2.1 Framework

2.1.1 Camera.java

- Location: frameworks/base/core/java/android/hardware/Camera.java

- takePicture():

- Sets the shutter callback.

- Sets the picture data callbacks for each type.

- Calls the JNI takePicture method.

- Note that the msgType argument depends on which callbacks are non-null. Each callback type corresponds to a single bit (e.g. binary 1, 10, 100), so the |= operator is used to set the matching bit.

public final void takePicture(ShutterCallback shutter, PictureCallback raw,

PictureCallback postview, PictureCallback jpeg) {

mShutterCallback = shutter;

mRawImageCallback = raw;

mPostviewCallback = postview;

mJpegCallback = jpeg;

int msgType = 0;

if (mShutterCallback != null) {

msgType |= CAMERA_MSG_SHUTTER;

}

if (mRawImageCallback != null) {

msgType |= CAMERA_MSG_RAW_IMAGE;

}

if (mPostviewCallback != null) {

msgType |= CAMERA_MSG_POSTVIEW_FRAME;

}

if (mJpegCallback != null) {

msgType |= CAMERA_MSG_COMPRESSED_IMAGE;

}

native_takePicture(msgType);

mFaceDetectionRunning = false;

}
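The bit-flag idea can be sketched in C++ as follows. The constant values are written from my memory of AOSP's system/camera.h and should be checked against your own source tree; buildMsgType is a hypothetical helper, not a framework function.

```cpp
#include <cstdint>

// Message bits, as I recall them from system/camera.h (treat as illustrative).
constexpr int32_t CAMERA_MSG_SHUTTER          = 0x0002;
constexpr int32_t CAMERA_MSG_POSTVIEW_FRAME   = 0x0040;
constexpr int32_t CAMERA_MSG_RAW_IMAGE        = 0x0080;
constexpr int32_t CAMERA_MSG_COMPRESSED_IMAGE = 0x0100;

// Hypothetical helper: each flag says whether that callback was supplied.
int32_t buildMsgType(bool hasShutter, bool hasRaw, bool hasPostview, bool hasJpeg) {
    int32_t msgType = 0;
    if (hasShutter)  msgType |= CAMERA_MSG_SHUTTER;
    if (hasRaw)      msgType |= CAMERA_MSG_RAW_IMAGE;
    if (hasPostview) msgType |= CAMERA_MSG_POSTVIEW_FRAME;
    if (hasJpeg)     msgType |= CAMERA_MSG_COMPRESSED_IMAGE;
    return msgType;  // every set bit identifies exactly one requested callback
}
```

Because each constant occupies its own bit, the lower layers can later test for a single callback with `msgType & CAMERA_MSG_...` without affecting the others.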

2.2 Android Runtime

2.2.1 android_hardware_Camera.cpp

- Location: frameworks/base/core/jni/android_hardware_Camera.cpp

- takePicture():

- Gets the opened camera instance and calls its takePicture() interface.

- Note that this function does some extra handling for RAW_IMAGE:

- If the RAW callback is set, it checks whether a corresponding callback buffer is available in the context.

- If no buffer is available, CAMERA_MSG_RAW_IMAGE is cleared from msgType and replaced with CAMERA_MSG_RAW_IMAGE_NOTIFY.

- After the replacement, only a notification message is delivered, without the image data.

static void android_hardware_Camera_takePicture(JNIEnv *env, jobject thiz, jint msgType)

{

ALOGV("takePicture");

JNICameraContext* context;

sp<Camera> camera = get_native_camera(env, thiz, &context);

if (camera == 0) return;

if (msgType & CAMERA_MSG_RAW_IMAGE) {

ALOGV("Enable raw image callback buffer");

if (!context->isRawImageCallbackBufferAvailable()) {

ALOGV("Enable raw image notification, since no callback buffer exists");

msgType &= ~CAMERA_MSG_RAW_IMAGE;

msgType |= CAMERA_MSG_RAW_IMAGE_NOTIFY;

}

}

if (camera->takePicture(msgType) != NO_ERROR) {

jniThrowRuntimeException(env, "takePicture failed");

return;

}

}
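The RAW handling boils down to clearing one bit and setting another. A minimal sketch, assuming the bit values from system/camera.h as I remember them; resolveRawMsgType and its rawBufferAvailable parameter are hypothetical names standing in for the context check:

```cpp
#include <cstdint>

// Values as I recall them from system/camera.h (treat as illustrative).
constexpr int32_t CAMERA_MSG_RAW_IMAGE        = 0x0080;
constexpr int32_t CAMERA_MSG_RAW_IMAGE_NOTIFY = 0x0200;

// Hypothetical helper mirroring the JNI-side decision.
int32_t resolveRawMsgType(int32_t msgType, bool rawBufferAvailable) {
    if ((msgType & CAMERA_MSG_RAW_IMAGE) && !rawBufferAvailable) {
        msgType &= ~CAMERA_MSG_RAW_IMAGE;        // drop the request for RAW data
        msgType |= CAMERA_MSG_RAW_IMAGE_NOTIFY;  // ask for a notification only
    }
    return msgType;  // all other bits pass through unchanged
}
```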

2.3 C/C++ Libraries

2.3.1 Camera.cpp

- Location: frameworks/av/camera/Camera.cpp

- takePicture():

- Gets the ICamera and calls its takePicture() interface.

- The function is simple: it returns the result of that call directly.

status_t Camera::takePicture(int msgType)

{

ALOGV("takePicture: 0x%x", msgType);

sp <::android::hardware::ICamera> c = mCamera;

if (c == 0) return NO_INIT;

return c->takePicture(msgType);

}

2.3.2 ICamera.cpp

- Location: frameworks/av/camera/ICamera.cpp

- takePicture():

- Uses the Binder mechanism to send the corresponding command to the server.

- On the server side, CameraClient::takePicture() is what actually runs.

status_t takePicture(int msgType)

{

ALOGV("takePicture: 0x%x", msgType);

Parcel data, reply;

data.writeInterfaceToken(ICamera::getInterfaceDescriptor());

data.writeInt32(msgType);

remote()->transact(TAKE_PICTURE, data, &reply);

status_t ret = reply.readInt32();

return ret;

}

2.3.3 CameraClient.cpp

- Location: frameworks/av/services/camera/libcameraservice/api1/CameraClient.cpp

- takePicture():

- Note that CAMERA_MSG_RAW_IMAGE and CAMERA_MSG_RAW_IMAGE_NOTIFY must not be enabled at the same time, so this is checked first.

- Filters the incoming msgType so that only the bits related to the takePicture() operation remain.

- Calls the takePicture() interface of CameraHardwareInterface.

status_t CameraClient::takePicture(int msgType) {

LOG1("takePicture (pid %d): 0x%x", getCallingPid(), msgType);

Mutex::Autolock lock(mLock);

status_t result = checkPidAndHardware();

if (result != NO_ERROR) return result;

if ((msgType & CAMERA_MSG_RAW_IMAGE) &&

(msgType & CAMERA_MSG_RAW_IMAGE_NOTIFY)) {

ALOGE("CAMERA_MSG_RAW_IMAGE and CAMERA_MSG_RAW_IMAGE_NOTIFY"

" cannot be both enabled");

return BAD_VALUE;

}

int picMsgType = msgType

& (CAMERA_MSG_SHUTTER |

CAMERA_MSG_POSTVIEW_FRAME |

CAMERA_MSG_RAW_IMAGE |

CAMERA_MSG_RAW_IMAGE_NOTIFY |

CAMERA_MSG_COMPRESSED_IMAGE);

enableMsgType(picMsgType);

return mHardware->takePicture();

}
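The check-then-filter step can be isolated into a small function. This is only a sketch: filterPictureMsgType is a hypothetical name, it reports failure with a bool instead of the status_t used above, and the constant values are my recollection of system/camera.h.

```cpp
#include <cstdint>

// Values as I recall them from system/camera.h (treat as illustrative).
constexpr int32_t CAMERA_MSG_SHUTTER          = 0x0002;
constexpr int32_t CAMERA_MSG_POSTVIEW_FRAME   = 0x0040;
constexpr int32_t CAMERA_MSG_RAW_IMAGE        = 0x0080;
constexpr int32_t CAMERA_MSG_COMPRESSED_IMAGE = 0x0100;
constexpr int32_t CAMERA_MSG_RAW_IMAGE_NOTIFY = 0x0200;

constexpr int32_t kPictureMask = CAMERA_MSG_SHUTTER | CAMERA_MSG_POSTVIEW_FRAME |
                                 CAMERA_MSG_RAW_IMAGE | CAMERA_MSG_RAW_IMAGE_NOTIFY |
                                 CAMERA_MSG_COMPRESSED_IMAGE;

// Returns false when both RAW flags are requested; otherwise stores the
// picture-related bits in *picMsgType and returns true.
bool filterPictureMsgType(int32_t msgType, int32_t* picMsgType) {
    if ((msgType & CAMERA_MSG_RAW_IMAGE) && (msgType & CAMERA_MSG_RAW_IMAGE_NOTIFY)) {
        return false;  // mutually exclusive flags
    }
    *picMsgType = msgType & kPictureMask;  // unrelated bits are dropped
    return true;
}
```

For example, a preview-frame bit (0x0010) mixed into the request is simply masked away, so only shutter and picture messages get enabled for the capture.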

2.4 HAL

2.4.1 CameraHardwareInterface.h

- Location: frameworks/av/services/camera/libcameraservice/device1/CameraHardwareInterface.h

- takePicture():

- Through the function pointer stored in mDevice->ops, invokes the platform-specific takePicture implementation in the HAL layer.

- What follows is platform-specific. It is outside my focus and was explored in more depth in the previous note, so I will not dig further here.

- Once the control flow reaches the HAL layer, the HAL sends control commands to the Linux drivers, which make the camera device execute them and capture data.

status_t takePicture()

{

ALOGV("%s(%s)", __FUNCTION__, mName.string());

if (mDevice->ops->take_picture)

return mDevice->ops->take_picture(mDevice);

return INVALID_OPERATION;

}
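The "table of optional function pointers" pattern used by mDevice->ops can be sketched like this. DeviceSketch, DeviceOpsSketch, and the two status constants are hypothetical; a real HAL module fills in a much larger ops table.

```cpp
#include <cassert>

struct DeviceSketch;

// Hypothetical ops table: an entry may be null if the platform lacks it.
struct DeviceOpsSketch {
    int (*take_picture)(DeviceSketch* dev);
};

struct DeviceSketch {
    const DeviceOpsSketch* ops;
    int shots;  // illustrative state touched by the platform implementation
};

constexpr int kNoError = 0;
constexpr int kInvalidOperation = -1;  // placeholder value, not the AOSP one

// Calls the platform implementation if present, otherwise reports an error.
int takePicture(DeviceSketch* dev) {
    assert(dev != nullptr && dev->ops != nullptr);
    if (dev->ops->take_picture) {
        return dev->ops->take_picture(dev);
    }
    return kInvalidOperation;
}
```

The framework side never needs to know which vendor code sits behind the pointer; it only checks that the entry exists before calling it.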

3. Data flow

- Since the data flow is implemented through callback functions, I analyze it from the bottom layer to the top.

3.1 HAL

3.1.1 CameraHardwareInterface.h

- Location: frameworks/av/services/camera/libcameraservice/device1/CameraHardwareInterface.h

- Here we only analyze the path related to the data callback.

- __data_cb():

- This callback is registered in the setCallbacks() function implemented in the same file.

- When the camera device produces data, the data is passed up and this callback is invoked in the HAL layer.

- The data is passed upward by calling the upper layer's callback through the function pointer mDataCb.

- mDataCb points to dataCallback(), implemented in the CameraClient class.

static void __data_cb(int32_t msg_type,

const camera_memory_t *data, unsigned int index,

camera_frame_metadata_t *metadata,

void *user)

{

ALOGV("%s", __FUNCTION__);

CameraHardwareInterface *__this =

static_cast<CameraHardwareInterface *>(user);

sp<CameraHeapMemory> mem(static_cast<CameraHeapMemory *>(data->handle));

if (index >= mem->mNumBufs) {

ALOGE("%s: invalid buffer index %d, max allowed is %d", __FUNCTION__,

index, mem->mNumBufs);

return;

}

__this->mDataCb(msg_type, mem->mBuffers[index], metadata, __this->mCbUser);

}

3.2 C/C++ Libraries

3.2.1 CameraClient.cpp

- Location: frameworks/av/services/camera/libcameraservice/api1/CameraClient.cpp

- dataCallback():

- This callback is registered with CameraHardwareInterface in the initialize() function implemented in this file.

- When the callback fires, the connected client is retrieved from the cookie (the user pointer).

- Dispatches to the corresponding handler according to msgType.

- Below we follow the handler for one of the branches.

void CameraClient::dataCallback(int32_t msgType,

const sp<IMemory>& dataPtr, camera_frame_metadata_t *metadata, void* user) {

LOG2("dataCallback(%d)", msgType);

sp<CameraClient> client = static_cast<CameraClient*>(getClientFromCookie(user).get());

if (client.get() == nullptr) return;

if (!client->lockIfMessageWanted(msgType)) return;

if (dataPtr == 0 && metadata == NULL) {

ALOGE("Null data returned in data callback");

client->handleGenericNotify(CAMERA_MSG_ERROR, UNKNOWN_ERROR, 0);

return;

}

switch (msgType & ~CAMERA_MSG_PREVIEW_METADATA) {

case CAMERA_MSG_PREVIEW_FRAME:

client->handlePreviewData(msgType, dataPtr, metadata);

break;

case CAMERA_MSG_POSTVIEW_FRAME:

client->handlePostview(dataPtr);

break;

case CAMERA_MSG_RAW_IMAGE:

client->handleRawPicture(dataPtr);

break;

case CAMERA_MSG_COMPRESSED_IMAGE:

client->handleCompressedPicture(dataPtr);

break;

default:

client->handleGenericData(msgType, dataPtr, metadata);

break;

}

}
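The dispatch logic is easy to try in isolation. The sketch below only decides which handler would be chosen; DataHandler and selectHandler are hypothetical names, and the constants are my recollection of system/camera.h. The point it shows is that the metadata bit is masked off before the switch, so a preview frame that also carries metadata still lands in the preview branch.

```cpp
#include <cstdint>

// Values as I recall them from system/camera.h (treat as illustrative).
constexpr int32_t CAMERA_MSG_PREVIEW_FRAME    = 0x0010;
constexpr int32_t CAMERA_MSG_POSTVIEW_FRAME   = 0x0040;
constexpr int32_t CAMERA_MSG_RAW_IMAGE        = 0x0080;
constexpr int32_t CAMERA_MSG_COMPRESSED_IMAGE = 0x0100;
constexpr int32_t CAMERA_MSG_PREVIEW_METADATA = 0x0400;

// Hypothetical labels for the handle*() functions of the client.
enum class DataHandler { Preview, Postview, RawPicture, CompressedPicture, Generic };

DataHandler selectHandler(int32_t msgType) {
    // Ignore the metadata bit when choosing the branch.
    switch (msgType & ~CAMERA_MSG_PREVIEW_METADATA) {
        case CAMERA_MSG_PREVIEW_FRAME:    return DataHandler::Preview;
        case CAMERA_MSG_POSTVIEW_FRAME:   return DataHandler::Postview;
        case CAMERA_MSG_RAW_IMAGE:        return DataHandler::RawPicture;
        case CAMERA_MSG_COMPRESSED_IMAGE: return DataHandler::CompressedPicture;
        default:                          return DataHandler::Generic;
    }
}
```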

- handleRawPicture():

- During the open process, when connect() was called, mRemoteCallback was set to the client instance, held as a strong pointer to ICameraClient.

- Through this instance, the client's dataCallback() is invoked via the Binder mechanism.

- The client's dataCallback() is implemented in the Camera class.

void CameraClient::handleRawPicture(const sp<IMemory>& mem) {

disableMsgType(CAMERA_MSG_RAW_IMAGE);

ssize_t offset;

size_t size;

sp<IMemoryHeap> heap = mem->getMemory(&offset, &size);

sp<hardware::ICameraClient> c = mRemoteCallback;

mLock.unlock();

if (c != 0) {

c->dataCallback(CAMERA_MSG_RAW_IMAGE, mem, NULL);

}

}

3.2.2 Camera.cpp

- Location: frameworks/av/camera/Camera.cpp

- dataCallback():

- Calls the CameraListener's postData() interface to keep passing the data upward.

- The postData() interface is implemented in android_hardware_Camera.cpp.

void Camera::dataCallback(int32_t msgType, const sp<IMemory>& dataPtr,

camera_frame_metadata_t *metadata)

{

sp<CameraListener> listener;

{

Mutex::Autolock _l(mLock);

listener = mListener;

}

if (listener != NULL) {

listener->postData(msgType, dataPtr, metadata);

}

}

3.3 Android Runtime

3.3.1 android_hardware_Camera.cpp

- Location: frameworks/base/core/jni/android_hardware_Camera.cpp

- postData():

- A member function of the JNICameraContext class, which inherits from CameraListener.

- First, gets the JNIEnv pointer from the Android runtime.

- Then masks out the CAMERA_MSG_PREVIEW_METADATA bit.

- Branches on the remaining message type.

- For the data path, the key function is copyAndPost().

void JNICameraContext::postData(int32_t msgType, const sp<IMemory>& dataPtr,

camera_frame_metadata_t *metadata)

{

Mutex::Autolock _l(mLock);

JNIEnv *env = AndroidRuntime::getJNIEnv();

if (mCameraJObjectWeak == NULL) {

ALOGW("callback on dead camera object");

return;

}

int32_t dataMsgType = msgType & ~CAMERA_MSG_PREVIEW_METADATA;

switch (dataMsgType) {

case CAMERA_MSG_VIDEO_FRAME:

break;

case CAMERA_MSG_RAW_IMAGE:

ALOGV("rawCallback");

if (mRawImageCallbackBuffers.isEmpty()) {

env->CallStaticVoidMethod(mCameraJClass, fields.post_event,

mCameraJObjectWeak, dataMsgType, 0, 0, NULL);

} else {

copyAndPost(env, dataPtr, dataMsgType);

}

break;

case 0:

break;

default:

ALOGV("dataCallback(%d, %p)", dataMsgType, dataPtr.get());

copyAndPost(env, dataPtr, dataMsgType);

break;

}

if (metadata && (msgType & CAMERA_MSG_PREVIEW_METADATA)) {

postMetadata(env, CAMERA_MSG_PREVIEW_METADATA, metadata);

}

}

- copyAndPost():

- First, checks whether the memory actually holds data.

- Allocates a Java byte array (jbyteArray) and copies the memory contents (jbyte*) into it.

- The key call is:

- env->CallStaticVoidMethod(mCameraJClass, fields.post_event, mCameraJObjectWeak, msgType, 0, 0, obj);

- Its job is to pass the image to the Java side.

- Through the post_event field, a Java method is called from C++ with the corresponding arguments.

- This ends up invoking the postEventFromNative() method on the Java side.

void JNICameraContext::copyAndPost(JNIEnv* env, const sp<IMemory>& dataPtr, int msgType)

{

jbyteArray obj = NULL;

if (dataPtr != NULL) {

ssize_t offset;

size_t size;

sp<IMemoryHeap> heap = dataPtr->getMemory(&offset, &size);

ALOGV("copyAndPost: off=%zd, size=%zu", offset, size);

uint8_t *heapBase = (uint8_t*)heap->base();

if (heapBase != NULL) {

const jbyte* data = reinterpret_cast<const jbyte*>(heapBase + offset);

if (msgType == CAMERA_MSG_RAW_IMAGE) {

obj = getCallbackBuffer(env, &mRawImageCallbackBuffers, size);

} else if (msgType == CAMERA_MSG_PREVIEW_FRAME && mManualBufferMode) {

obj = getCallbackBuffer(env, &mCallbackBuffers, size);

if (mCallbackBuffers.isEmpty()) {

ALOGV("Out of buffers, clearing callback!");

mCamera->setPreviewCallbackFlags(CAMERA_FRAME_CALLBACK_FLAG_NOOP);

mManualCameraCallbackSet = false;

if (obj == NULL) {

return;

}

}

} else {

ALOGV("Allocating callback buffer");

obj = env->NewByteArray(size);

}

if (obj == NULL) {

ALOGE("Couldn't allocate byte array for JPEG data");

env->ExceptionClear();

} else {

env->SetByteArrayRegion(obj, 0, size, data);

}

} else {

ALOGE("image heap is NULL");

}

}

env->CallStaticVoidMethod(mCameraJClass, fields.post_event,

mCameraJObjectWeak, msgType, 0, 0, obj);

if (obj) {

env->DeleteLocalRef(obj);

}

}
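Stripped of JNI, the copy step is "take size bytes starting at heap base plus offset". A minimal sketch under that assumption, with copyFrame as a hypothetical helper and std::vector<int8_t> standing in for the Java byte array:

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Copies `size` bytes starting at heapBase + offset into a new array.
// Returns an empty array when the heap is not mapped (heapBase is null).
std::vector<int8_t> copyFrame(const uint8_t* heapBase, std::ptrdiff_t offset,
                              std::size_t size) {
    std::vector<int8_t> out;
    if (heapBase == nullptr) {
        return out;  // corresponds to the "image heap is NULL" branch
    }
    assert(offset >= 0);
    const int8_t* data = reinterpret_cast<const int8_t*>(heapBase + offset);
    out.assign(data, data + size);  // the copy that SetByteArrayRegion performs
    return out;
}
```

The image is therefore copied once more on its way into the Java heap; the callback buffers used for RAW and manual preview mode avoid a fresh allocation per frame, but not the copy itself.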

3.4 Framework

3.4.1 Camera.java

- Location: frameworks/base/core/java/android/hardware/Camera.java

- handleMessage() below is a member of EventHandler, which extends the Handler class; postEventFromNative() is a static method of Camera that feeds messages to it.

- postEventFromNative():

- First, checks that the Camera object behind the weak reference still exists.

- If it does, the obtainMessage() method of mEventHandler (a member of Camera) wraps the data received from the native side in a Message instance, and sendMessage() then posts it.

private static void postEventFromNative(Object camera_ref,

int what, int arg1, int arg2, Object obj)

{

Camera c = (Camera)((WeakReference)camera_ref).get();

if (c == null)

return;

if (c.mEventHandler != null) {

Message m = c.mEventHandler.obtainMessage(what, arg1, arg2, obj);

c.mEventHandler.sendMessage(m);

}

}

- handleMessage():

- Messages sent by sendMessage() are handled here and forwarded to the corresponding callback object.

- Note that several callbacks (mRawImageCallback, mJpegCallback, etc.) implement onPictureTaken(). By invoking this method, the data passed up from the lower layers finally reaches the Java application, which receives the captured image as a byte array and can carry out further processing.

- This is where the data flow I have traced ends.

@Override

public void handleMessage(Message msg) {

switch(msg.what) {

case CAMERA_MSG_SHUTTER:

if (mShutterCallback != null) {

mShutterCallback.onShutter();

}

return;

case CAMERA_MSG_RAW_IMAGE:

if (mRawImageCallback != null) {

mRawImageCallback.onPictureTaken((byte[])msg.obj, mCamera);

}

return;

case CAMERA_MSG_COMPRESSED_IMAGE:

if (mJpegCallback != null) {

mJpegCallback.onPictureTaken((byte[])msg.obj, mCamera);

}

return;

case CAMERA_MSG_PREVIEW_FRAME:

PreviewCallback pCb = mPreviewCallback;

if (pCb != null) {

if (mOneShot) {

mPreviewCallback = null;

} else if (!mWithBuffer) {

setHasPreviewCallback(true, false);

}

pCb.onPreviewFrame((byte[])msg.obj, mCamera);

}

return;

case CAMERA_MSG_POSTVIEW_FRAME:

if (mPostviewCallback != null) {

mPostviewCallback.onPictureTaken((byte[])msg.obj, mCamera);

}

return;

case CAMERA_MSG_FOCUS:

AutoFocusCallback cb = null;

synchronized (mAutoFocusCallbackLock) {

cb = mAutoFocusCallback;

}

if (cb != null) {

boolean success = msg.arg1 == 0 ? false : true;

cb.onAutoFocus(success, mCamera);

}

return;

case CAMERA_MSG_ZOOM:

if (mZoomListener != null) {

mZoomListener.onZoomChange(msg.arg1, msg.arg2 != 0, mCamera);

}

return;

case CAMERA_MSG_PREVIEW_METADATA:

if (mFaceListener != null) {

mFaceListener.onFaceDetection((Face[])msg.obj, mCamera);

}

return;

case CAMERA_MSG_ERROR :

Log.e(TAG, "Error " + msg.arg1);

if (mErrorCallback != null) {

mErrorCallback.onError(msg.arg1, mCamera);

}

return;

case CAMERA_MSG_FOCUS_MOVE:

if (mAutoFocusMoveCallback != null) {

mAutoFocusMoveCallback.onAutoFocusMoving(msg.arg1 == 0 ? false : true, mCamera);

}

return;

default:

Log.e(TAG, "Unknown message type " + msg.what);

return;

}

}
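The post-then-dispatch idea behind postEventFromNative() and handleMessage() can be imitated in a few lines of C++. This is only an analogy: EventHandlerSketch and Message are hypothetical types, the queue is drained manually instead of by a looper thread, and only two message types are handled.

```cpp
#include <cstddef>
#include <cstdint>
#include <functional>
#include <queue>
#include <utility>
#include <vector>

constexpr int32_t CAMERA_MSG_RAW_IMAGE        = 0x0080;  // illustrative values
constexpr int32_t CAMERA_MSG_COMPRESSED_IMAGE = 0x0100;

struct Message {
    int32_t what;              // message type, like msg.what
    std::vector<uint8_t> obj;  // payload, like msg.obj
};

class EventHandlerSketch {
public:
    using PictureCallback = std::function<void(const std::vector<uint8_t>&)>;
    PictureCallback onRaw;   // may be empty, like a null callback
    PictureCallback onJpeg;

    // Producer side: queue the message for later handling.
    void sendMessage(Message m) { mQueue.push(std::move(m)); }

    // Consumer side: route each message to its callback, if one is set.
    // Returns how many callbacks were actually invoked.
    int dispatchAll() {
        int delivered = 0;
        while (!mQueue.empty()) {
            Message m = std::move(mQueue.front());
            mQueue.pop();
            switch (m.what) {
                case CAMERA_MSG_RAW_IMAGE:
                    if (onRaw) { onRaw(m.obj); ++delivered; }
                    break;
                case CAMERA_MSG_COMPRESSED_IMAGE:
                    if (onJpeg) { onJpeg(m.obj); ++delivered; }
                    break;
                default:
                    break;  // unknown types are ignored in this sketch
            }
        }
        return delivered;
    }

private:
    std::queue<Message> mQueue;
};
```

A message whose callback was never registered is dropped without error, which matches the null checks in each case branch above.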

Flowchart

Summary

- In this note, we started from the Camera.takePicture() method and, together with the open process covered earlier, briefly traced the whole Camera process.

- Both the control flow and the data flow pass through the five layers in turn. The control flow carries commands from top to bottom, while the data flow carries data from bottom to top.

- To add a custom C++ library for data processing to the camera, we could modify the HAL layer: route the RAW image into our processing code, then return the processed RAW image to the HAL layer (some HAL-side handling is needed to pass the image up), and finally deliver it through the normal callback path. The custom functionality is then available to the top-level application.

- At this point, we have a clear picture of the whole Camera framework and how it operates.

- Starting with Android 5.0, Camera API 2 was introduced, with a new process (although the overall architecture does not change much). When I find time, I will study it and start a new series of notes.
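As a rough illustration of the customization idea in the summary, the sketch below places a processing step in front of the upward callback. Everything here is hypothetical (RawHookSketch, FrameProcessor, UpstreamCallback); a real HAL works with its own buffer types and vendor code, so this only shows the shape of the hook.

```cpp
#include <cstdint>
#include <functional>
#include <utility>
#include <vector>

constexpr int32_t CAMERA_MSG_RAW_IMAGE = 0x0080;  // illustrative value

using FrameProcessor   = std::function<void(std::vector<uint8_t>&)>;
using UpstreamCallback = std::function<void(int32_t, const std::vector<uint8_t>&)>;

// Sits between the capture source and the normal upward callback.
class RawHookSketch {
public:
    RawHookSketch(FrameProcessor process, UpstreamCallback upstream)
        : mProcess(std::move(process)), mUpstream(std::move(upstream)) {}

    void onData(int32_t msgType, std::vector<uint8_t> frame) {
        if (msgType == CAMERA_MSG_RAW_IMAGE && mProcess) {
            mProcess(frame);  // custom processing applies to RAW frames only
        }
        if (mUpstream) {
            mUpstream(msgType, frame);  // continue along the usual callback path
        }
    }

private:
    FrameProcessor mProcess;
    UpstreamCallback mUpstream;
};
```

Frames of other types pass through untouched, so the layers above would not need to change.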