1. Integrating the iFLYTEK SDK

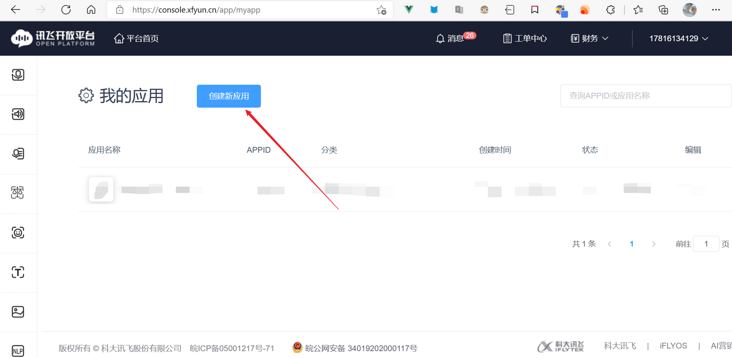

Register on the iFLYTEK Open Platform, complete identity verification, then open the console and create an application.

Fill in the form as required and click Create. Open the application you just created to see the details of all its features.

The credentials (APPID, APISecret and APIKey) are shown on the right.

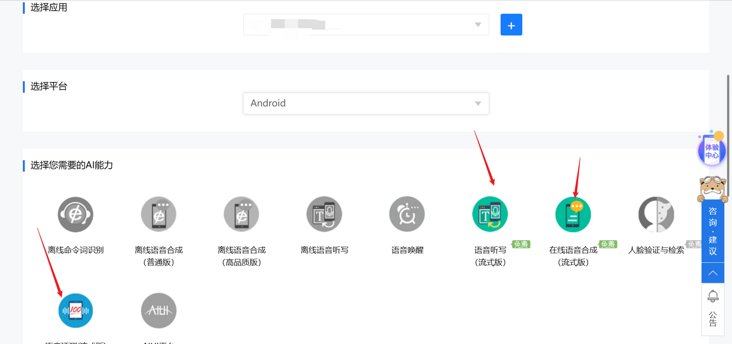

I plan to use three features: voice dictation, speech synthesis and voice evaluation. So the next step is to go to the combined SDK download page and download an SDK that bundles all three.

After choosing the application and the AI capabilities, select the required platform and download.

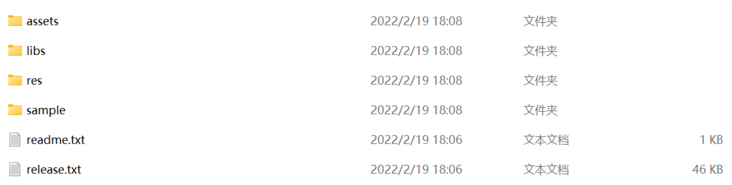

After downloading, unzip the archive. The directory structure is as follows:

The sample directory contains the official demo. You can open it on its own in Android Studio (build errors there don't matter; the point is to be able to read the code). The wrappers later in this article follow the official demo. See also the official documentation.

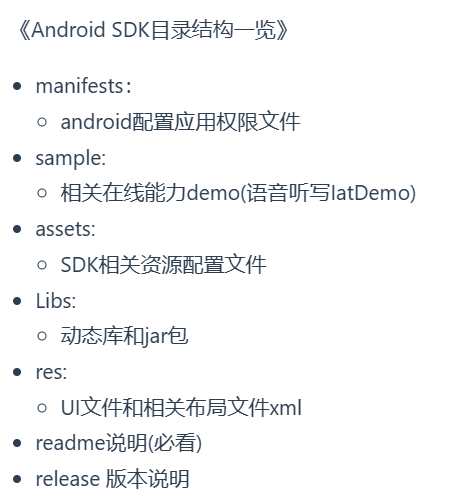

SDK package description:

Next, import the SDK into our project. First, copy everything in the libs directory of the downloaded Android SDK package into the libs directory of the Android project:

Open the project in Android Studio, right-click Msc.jar and choose Add As Library.

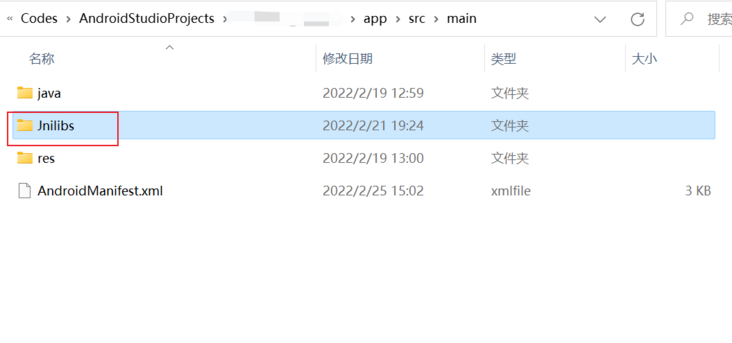

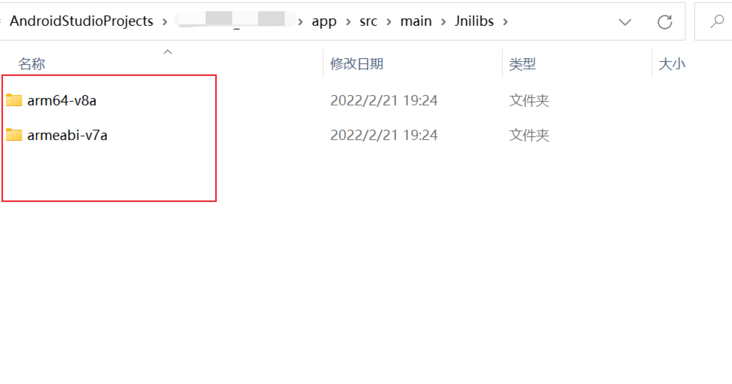

Then create a jniLibs directory under the project's main directory and copy the two folders from the libs directory into it:

If you hit an error at run time, add the following to the module-level build.gradle:

....buildTypes {...}

// Add:

// Specify libs folder location

sourceSets {

main{

jniLibs.srcDirs = ['libs']

}

}

Finally, initialize the iFLYTEK SDK. Doing this in the Application class is recommended:

@Override

public void onCreate() {

super.onCreate();

mContext = getApplicationContext();

// Initialize the SDK here.

// Do not put any whitespace or escape characters between "=" and the appid value

SpeechUtility.createUtility(mContext, SpeechConstant.APPID + "=" + mContext.getString(R.string.APPID));

}

It is recommended to keep constant strings in res/values/strings.xml.
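The code comment above warns against stray characters between "=" and the appid. That rule can be checked in code before the string reaches the SDK. Below is a minimal plain-Java sketch, assuming the init string has the form key=value as built in the listing. AppIdParam and build are hypothetical names, not part of the iFLYTEK SDK.

```java
/** Hypothetical helper (not part of the iFLYTEK SDK): builds the "key=appId" init string. */
final class AppIdParam {
    private AppIdParam() {}

    /**
     * Returns key + "=" + appId after rejecting values that are empty or that
     * contain whitespace or control characters.
     */
    static String build(String key, String appId) {
        if (appId == null || appId.isEmpty()) {
            throw new IllegalArgumentException("appId must not be empty");
        }
        for (int i = 0; i < appId.length(); i++) {
            char c = appId.charAt(i);
            if (Character.isWhitespace(c) || Character.isISOControl(c)) {
                throw new IllegalArgumentException(
                        "appId contains whitespace or a control character at index " + i);
            }
        }
        return key + "=" + appId;
    }
}
```

In the Application class, the result of AppIdParam.build(SpeechConstant.APPID, getString(R.string.APPID)) would be passed to SpeechUtility.createUtility in place of the manual concatenation.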

That completes the SDK integration. Next comes wrapping the features:

2. Wrapping the features

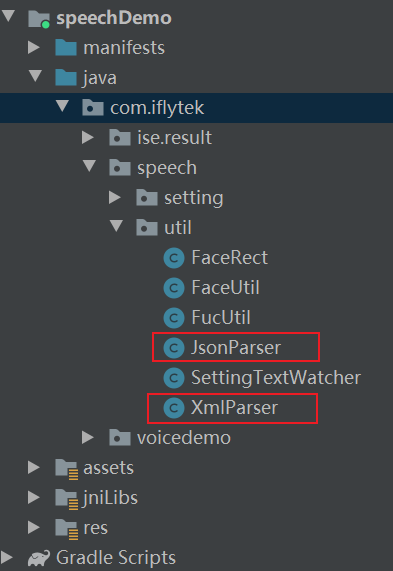

Create a utils package in our package directory. The official demo includes two useful utility classes; add them to the utils package:
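One of these helpers is used later in this article as JsonParser.parseIatResult(...), which turns the dictation result JSON into plain text. To show the idea without Android or a JSON library, here is a rough regex-based sketch. It assumes each recognized word arrives in a "w" string field of the result JSON, which is an assumption about the payload and should be checked against a real result. IatTextSketch is a made-up name, and the app itself should keep using the demo's helper.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/**
 * Hypothetical, dependency-free stand-in for the demo's JSON helper.
 * Assumes each recognized word appears as a "w" string field in the result JSON.
 */
final class IatTextSketch {
    // Matches "w":"<text>" and captures <text>, allowing backslash escapes inside it.
    private static final Pattern WORD =
            Pattern.compile("\"w\"\\s*:\\s*\"((?:[^\"\\\\]|\\\\.)*)\"");

    private IatTextSketch() {}

    /** Concatenates every "w" value in document order; escape sequences are left as-is. */
    static String extractText(String json) {
        StringBuilder text = new StringBuilder();
        Matcher m = WORD.matcher(json);
        while (m.find()) {
            text.append(m.group(1));
        }
        return text.toString();
    }
}
```

Unlike a real JSON parser, this sketch does not decode escape sequences and would also pick up alternative candidates if a result carried more than one per word.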

Create an iflytek package under our package directory to hold the iFLYTEK-related classes. First, wrap voice dictation:

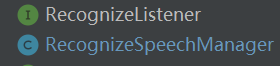
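The manager class in the listing that follows keeps its Context in a WeakReference. One detail is worth knowing before reading it: after clear() is called, the WeakReference object itself is still non-null while get() returns null, so checking only whether the field is null does not tell you whether a usable Context is bound. Below is a minimal plain-Java sketch of a holder that checks both. WeakHolder is a hypothetical name, and a plain Object stands in for android.content.Context so the snippet runs without Android.

```java
import java.lang.ref.WeakReference;

/** Hypothetical stand-in for the Context-holding part of a manager class. */
final class WeakHolder<T> {
    private WeakReference<T> ref;

    /** Binds a target; replaces a reference that is missing or already cleared. */
    void bind(T target) {
        if (ref == null || ref.get() == null) {
            ref = new WeakReference<>(target);
        }
    }

    /** Returns the target, or null if nothing is bound or the reference was cleared or collected. */
    T get() {
        return ref == null ? null : ref.get();
    }

    /** Clears the reference and drops the field so a later bind() starts fresh. */
    void release() {
        if (ref != null) {
            ref.clear();
            ref = null;
        }
    }
}
```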

New interface: RecognizeListener; new class: RecognizeSpeechManager

/**

* Dictation callback

*/

public interface RecognizeListener {

void onNewResult(String result);

void onTotalResult(String result,boolean isLast);

void onError(SpeechError speechError);

}

/**

* Audio read-write conversion

*/

public class RecognizeSpeechManager implements RecognizerListener, InitListener {

private static final String TAG = "RecognizeSpeechManager";

// Result callback object

private RecognizeListener recognizeListener;

// Voice dictation object

private SpeechRecognizer iat;

private StringBuffer charBufffer = new StringBuffer();

// Weak reference to the Context so it can be reclaimed when no longer used, avoiding a memory leak (an object reachable only through weak references is collected on the next GC run)

private WeakReference<Context> bindContext;

// Singleton instance

private static RecognizeSpeechManager instance;

private RecognizeSpeechManager() {

}

/**

* Returns the singleton instance

*/

public static RecognizeSpeechManager instance() {

if (instance == null) {

instance = new RecognizeSpeechManager();

}

return instance;

}

/**

* Set result callback object

*/

public void setRecognizeListener(RecognizeListener recognizeListener) {

this.recognizeListener = recognizeListener;

}

/**

* initialization

*/

public void init(Context context) {

if (bindContext == null) {

bindContext = new WeakReference<Context>(context);

}

if (iat == null) {

iat = SpeechRecognizer.createRecognizer(bindContext.get(), this);

}

}

@Override

public void onInit(int code) {

if (code != ErrorCode.SUCCESS) {

Log.d(TAG, "init error code " + code);

}

}

/**

* Start listening.

* Returns ErrorCode.SUCCESS when listening starts successfully

*/

public int startRecognize() {

setParam();

return iat.startListening(this);

}

/**

* Cancel dictation

*/

public void cancelRecognize() {

iat.cancel();

}

/**

* Stop dictation

*/

public void stopRecognize() {

iat.stopListening();

}

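// Release the recognizer and drop the references so that init() can create them again

public void release() {

if (iat != null) {

iat.cancel();

iat.destroy();

iat = null;

}

if (bindContext != null) {

bindContext.clear();

bindContext = null;

}

charBufffer.delete(0, charBufffer.length());

}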

@Override

public void onVolumeChanged(int i, byte[] bytes) {

}

@Override

public void onBeginOfSpeech() {

Log.d(TAG, "onBeginOfSpeech");

}

@Override

public void onEndOfSpeech() {

Log.d(TAG, "onEndOfSpeech isListening " + iat.isListening());

}

@Override

public void onResult(RecognizerResult results, boolean b) {

if (recognizeListener != null) {

recognizeListener.onNewResult(printResult(results));

recognizeListener.onTotalResult(charBufffer.toString(), iat.isListening());

}

}

@Override

public void onError(SpeechError speechError) {

if (recognizeListener != null) {

recognizeListener.onError(speechError);

}

}

@Override

public void onEvent(int i, int i1, int i2, Bundle bundle) {

Log.d(TAG, "onEvent type " + i);

}

private String printResult(RecognizerResult results) {

String text = JsonParser.parseIatResult(results.getResultString());

Log.d(TAG, "printResult " + text + " isListening " + iat.isListening());

String sn = null;

// Read sn field in json result

try {

JSONObject resultJson = new JSONObject(results.getResultString());

sn = resultJson.optString("sn");

} catch (JSONException e) {

e.printStackTrace();

}

if (!TextUtils.isEmpty(text)) {

charBufffer.append(text);

}

return text;

}

/**

* Set the dictation parameters

*/

private void setParam() {

// Clear parameters

iat.setParameter(SpeechConstant.PARAMS, null);

// Set dictation engine

iat.setParameter(SpeechConstant.ENGINE_TYPE, SpeechConstant.TYPE_CLOUD);

// Format the returned results

iat.setParameter(SpeechConstant.RESULT_TYPE, "json");

iat.setParameter(SpeechConstant.LANGUAGE, "zh_cn");

iat.setParameter(SpeechConstant.ACCENT, "mandarin");

// Hide error-code details in the dialog

//iat.setParameter("view_tips_plain","false");

// Leading-silence timeout (ms): how long the user may stay silent before the session times out

iat.setParameter(SpeechConstant.VAD_BOS, "10000");

// Trailing-silence timeout (ms): how long after the user stops speaking before recording stops automatically

iat.setParameter(SpeechConstant.VAD_EOS, "10000");

// Punctuation: "0" returns results without punctuation, "1" returns results with punctuation

iat.setParameter(SpeechConstant.ASR_PTT, "1");

// Audio save path. Supported formats are pcm and wav. Saving to the SD card requires the WRITE_EXTERNAL_STORAGE permission

/* iat.setParameter(SpeechConstant.AUDIO_FORMAT, "wav");

iat.setParameter(SpeechConstant.ASR_AUDIO_PATH, Environment.getExternalStorageDirectory() + "/msc/iat.wav");*/

}

}

Usage (ViewModel and ButterKnife are used here):

public class HomeFragment extends Fragment implements RecognizeListener {

//The presentation and events of the UI view are contained in the Fragment or Activity

private Unbinder unbinder;

private HomeViewModel homeViewModel;

@BindView(R.id.tvContent)

TextView tvContent;

public Context mContext;

public View onCreateView(@NonNull LayoutInflater inflater,

ViewGroup container, Bundle savedInstanceState) {

mContext = this.getContext();

//Build ViewModel instance

homeViewModel =

ViewModelProviders.of(this).get(HomeViewModel.class);

//Create view object

View root = inflater.inflate(R.layout.fragment_home, container, false);

// Bind ButterKnife to the fragment

unbinder = ButterKnife.bind(this,root);

// Let the UI observe the changes of data in the ViewModel and update the UI in real time

homeViewModel.getRecognizeText().observe(getViewLifecycleOwner(), new Observer<String>() {

@Override

public void onChanged(@Nullable String s) {

tvContent.setText(s);

}

});

// Initialize the iFLYTEK dictation manager

RecognizeSpeechManager.instance().init(mContext);

RecognizeSpeechManager.instance().setRecognizeListener(this);

// Return the view object

return root;

}

@OnClick({R.id.btStart, R.id.btCancel, R.id.btStop})

public void onClick(View v) {

switch (v.getId()){

case R.id.btStart:

RecognizeSpeechManager.instance().startRecognize();

break;

case R.id.btCancel:

RecognizeSpeechManager.instance().cancelRecognize();

break;

case R.id.btStop:

RecognizeSpeechManager.instance().stopRecognize();

break;

}

}

@Override

public void onDestroy() {

super.onDestroy();

if(unbinder != null) {

unbinder.unbind();//View must be unbound when destroyed

}

RecognizeSpeechManager.instance().release();

}

@Override

public void onNewResult(String result) {

homeViewModel.setRecognizeText(homeViewModel.getRecognizeText().getValue() + "Latest translation:" + result + "\n");

}

@Override

public void onTotalResult(String result, boolean isLast) {

homeViewModel.setRecognizeText(homeViewModel.getRecognizeText().getValue() + "All translations:" + result + "\n");

}

@Override

public void onError(SpeechError speechError) {

Toast.makeText(mContext, "Error " + speechError, Toast.LENGTH_SHORT).show();

}

}

public class HomeViewModel extends ViewModel {

// Data acquisition and processing are included in the ViewModel

// Identification result data

private MutableLiveData<String> recognizeText;

public HomeViewModel() {

recognizeText = new MutableLiveData<>();

recognizeText.setValue("");

}

// get method

public LiveData<String> getRecognizeText () {

return recognizeText;

}

// set method

public void setRecognizeText (String recognizeText) {

this.recognizeText.setValue(recognizeText);

}

}

Next, wrap speech synthesis:

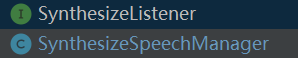

New interface: SynthesizeListener; new class: SynthesizeSpeechManager

/**

* Synthesis callback

*/

public interface SynthesizeListener {

void onError(SpeechError speechError);

}

/**

* speech synthesis

*/

public class SynthesizeSpeechManager implements SynthesizerListener, InitListener {

private static final String TAG = "SynthesizeSpeechManager";

// Default speaker

private String voicer = "xiaoyan";

// Result callback object

private SynthesizeListener synthesizeListener;

// Speech synthesis object

private SpeechSynthesizer tts;

// Weak reference to the Context so it can be reclaimed when no longer used, avoiding a memory leak

private WeakReference<Context> bindContext;

// Singleton instance

private static SynthesizeSpeechManager instance;

private SynthesizeSpeechManager() {

}

/**

* Returns the singleton instance

*/

public static SynthesizeSpeechManager instance() {

if (instance == null) {

instance = new SynthesizeSpeechManager();

}

return instance;

}

/**

* Set result callback object

*/

public void setSynthesizeListener(SynthesizeListener synthesizeListener) {

this.synthesizeListener = synthesizeListener;

}

/**

* initialization

*/

public void init(Context context) {

if (bindContext == null) {

bindContext = new WeakReference<Context>(context);

}

if (tts == null) {

tts = SpeechSynthesizer.createSynthesizer(bindContext.get(), this);

}

}

@Override

public void onInit(int code) {

if (code != ErrorCode.SUCCESS) {

Log.d(TAG, "init error code " + code);

}

}

// Playback control methods: start, stop, pause and resume

/**

* Start synthesis

*/

public int startSpeak(String texts) {

setParam();

return tts.startSpeaking(texts, this);

}

/**

* Stop synthesis

*/

public void stopSpeak() {

tts.stopSpeaking();

}

/**

* Pause playback

*/

public void pauseSpeak() {

tts.pauseSpeaking();

}

/**

* Continue playing

*/

public void resumeSpeak() {

tts.resumeSpeaking();

}

/**

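* Release resources and drop the references so that init() can create them again

*/

public void release() {

if (tts != null) {

tts.stopSpeaking();

tts.destroy();

tts = null;

}

if (bindContext != null) {

bindContext.clear();

bindContext = null;

}

}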

@Override

public void onSpeakBegin() {

Log.d(TAG, "Start playing");

}

@Override

public void onBufferProgress(int percent, int beginPos, int endPos, String info) {

Log.d(TAG, "Synthesis progress: percent =" + percent);

}

@Override

public void onSpeakPaused() {

Log.d(TAG, "Pause playback");

}

@Override

public void onSpeakResumed() {

Log.d(TAG, "Continue playing");

}

@Override

public void onSpeakProgress(int percent, int beginPos, int endPos) {

Log.e(TAG, "Playback progress: percent =" + percent);

}

@Override

public void onCompleted(SpeechError speechError) {

Log.d(TAG, "Playback complete");

if (speechError != null) {

Log.d(TAG, speechError.getPlainDescription(true));

if (synthesizeListener != null) {

synthesizeListener.onError(speechError);

}

}

}

@Override

public void onEvent(int eventType, int arg1, int arg2, Bundle bundle) {

// Log the session id with the cloud. When a business error occurs, give this id to technical support so they can look up the session log and locate the cause

if (bundle != null) {

Log.d(TAG, "session id =" + bundle.getString(SpeechEvent.KEY_EVENT_SESSION_ID));

// The audio buffer is not present in every event, so check for null before reading its length

byte[] buffer = bundle.getByteArray(SpeechEvent.KEY_EVENT_TTS_BUFFER);

if (buffer != null) {

Log.d(TAG, "EVENT_TTS_BUFFER = " + buffer.length);

}

}

}

/**

* Parameter setting

*/

private void setParam() {

// Clear parameters

tts.setParameter(SpeechConstant.PARAMS, null);

// Set composition engine

tts.setParameter(SpeechConstant.ENGINE_TYPE, SpeechConstant.TYPE_CLOUD);

// Support real-time audio return, which is only supported under the condition of synthesizeToUri

tts.setParameter(SpeechConstant.TTS_DATA_NOTIFY, "1");

// mTts.setParameter(SpeechConstant.TTS_BUFFER_TIME,"1");

// Voice used for online synthesis

tts.setParameter(SpeechConstant.VOICE_NAME, voicer);

// Speech rate

tts.setParameter(SpeechConstant.SPEED, "50");

// Pitch

tts.setParameter(SpeechConstant.PITCH, "50");

// Volume

tts.setParameter(SpeechConstant.VOLUME, "50");

// Audio stream type used by the player

tts.setParameter(SpeechConstant.STREAM_TYPE, "3");

// Whether playing synthesized audio interrupts music playback. The default is true

tts.setParameter(SpeechConstant.KEY_REQUEST_FOCUS, "false");

// Audio save path. Supported formats are pcm and wav. Saving to the SD card requires the WRITE_EXTERNAL_STORAGE permission

/* mTts.setParameter(SpeechConstant.AUDIO_FORMAT, "pcm");

mTts.setParameter(SpeechConstant.TTS_AUDIO_PATH,

getExternalFilesDir("msc").getAbsolutePath() + "/tts.pcm"); */

}

}

Usage:

public class DashboardFragment extends Fragment implements SynthesizeListener {

private Unbinder unbinder;

private DashboardViewModel dashboardViewModel;

@BindView(R.id.etEva)

EditText editText;

public Context mContext;

public View onCreateView(@NonNull LayoutInflater inflater,

ViewGroup container, Bundle savedInstanceState) {

mContext = this.getContext();

dashboardViewModel =

ViewModelProviders.of(this).get(DashboardViewModel.class);

View root = inflater.inflate(R.layout.fragment_dashboard, container, false);

unbinder = ButterKnife.bind(this,root);

dashboardViewModel.getText().observe(getViewLifecycleOwner(), new Observer<String>() {

@Override

public void onChanged(@Nullable String s) {

editText.setText(s);

}

});

// Initialize the iFLYTEK speech synthesis manager

SynthesizeSpeechManager.instance().init(mContext);

SynthesizeSpeechManager.instance().setSynthesizeListener(this);

return root;

}

@OnClick({R.id.btParse, R.id.btPaused, R.id.btResumed})

public void onClick(View v) {

switch (v.getId()){

case R.id.btParse:

SynthesizeSpeechManager.instance().startSpeak(dashboardViewModel.getText().getValue());

break;

case R.id.btPaused:

SynthesizeSpeechManager.instance().pauseSpeak();

break;

case R.id.btResumed:

SynthesizeSpeechManager.instance().resumeSpeak();

break;

}

}

@Override

public void onDestroy() {

super.onDestroy();

if(unbinder != null) {

unbinder.unbind();//View must be unbound when destroyed

}

SynthesizeSpeechManager.instance().release();

}

@Override

public void onError(SpeechError speechError) {

Toast.makeText(mContext, "Error " + speechError, Toast.LENGTH_SHORT).show();

}

}

public class DashboardViewModel extends ViewModel {

private MutableLiveData<String> mText;

public DashboardViewModel() {

mText = new MutableLiveData<>();

mText.setValue("This is dashboard fragment");

}

public LiveData<String> getText() {

return mText;

}

}

Next, wrap voice evaluation:

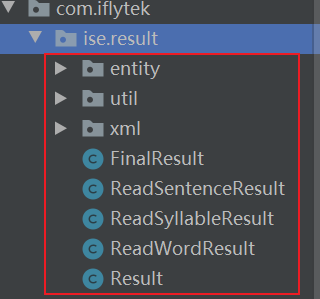

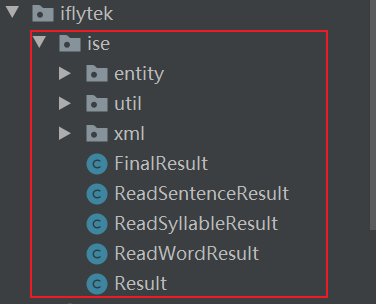

Voice evaluation is more involved. Everything under the demo's ise.result package has to be copied into our project (if you copy the files directly, update their package declarations; if you are worried about mistakes, recreate the classes by hand one by one).

Create an ise package under the project's iflytek package and add the content shown in the figure above:
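The evaluation wrapper that follows takes the evaluation category as a raw string (read_syllable, read_word, read_sentence or read_chapter), so a typo only shows up at run time. Below is a small sketch that wraps those four values in an enum. IseCategory is a hypothetical name that is not part of the SDK, and the four strings are taken from the wrapper's own comments.

```java
/** Hypothetical enum for the four category strings used by the evaluation wrapper. */
enum IseCategory {
    READ_SYLLABLE("read_syllable"),
    READ_WORD("read_word"),
    READ_SENTENCE("read_sentence"),
    READ_CHAPTER("read_chapter");

    private final String value;

    IseCategory(String value) {
        this.value = value;
    }

    /** The raw string to pass as the category parameter. */
    String value() {
        return value;
    }

    /** Looks up a category by its raw string; throws on unknown input. */
    static IseCategory fromValue(String raw) {
        for (IseCategory c : values()) {
            if (c.value.equals(raw)) {
                return c;
            }
        }
        throw new IllegalArgumentException("Unknown evaluation category: " + raw);
    }
}
```

A call site would then pass IseCategory.READ_WORD.value() instead of the literal "read_word".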

Under the iflytek package, create a new interface, EvaluateListener, and a new class, EvaluateSpeechManager:

public interface EvaluateListener {

void onNewResult(String result);

void onTotalResult(String result,boolean isLast);

void onError(SpeechError speechError);

}

/**

* Speech evaluation

*/

public class EvaluateSpeechManager implements EvaluatorListener {

private static final String TAG = "EvaluateSpeechManager";

private final static String PREFER_NAME = "ise_settings";

private final static int REQUEST_CODE_SETTINGS = 1;

// Weak reference to the Context so it can be reclaimed when no longer used, avoiding a memory leak

private WeakReference<Context> bindContext;

// Result callback object

private EvaluateListener evaluateListener;

// Speech evaluation object

private SpeechEvaluator ise;

// Analytical results

private String lastResult;

// Singleton instance

private static EvaluateSpeechManager instance;

private EvaluateSpeechManager() {

}

/**

* Returns the singleton instance

*/

public static EvaluateSpeechManager instance() {

if (instance == null) {

instance = new EvaluateSpeechManager();

}

return instance;

}

/**

* Set result callback object

*/

public void setEvaluateListener(EvaluateListener evaluateListener) {

this.evaluateListener = evaluateListener;

}

/**

* initialization

*/

public void init(Context context) {

if (bindContext == null) {

bindContext = new WeakReference<Context>(context);

}

if (ise == null) {

ise = SpeechEvaluator.createEvaluator(bindContext.get(), null);

}

}

/**

* Start evaluation

* @param category evaluation type

* - read_syllable : single character

* - read_word : word

* - read_sentence : sentence

* - read_chapter : passage

* @param evaText text to be evaluated

*/

public int startEvaluate(String category, String evaText) {

lastResult = null;

assert ise!=null;

setParams(category);

return ise.startEvaluating(evaText, null, this);

}

/**

* Stop evaluation

*/

public void stopEvaluate() {

ise.stopEvaluating();

}

/**

* Cancel evaluation

*/

public void cancelEvaluate() {

ise.cancel();

lastResult = null;

}

/**

* Result analysis

*/

public Result parseResult() {

if(lastResult == null) {

return new FinalResult();

}

XmlResultParser resultParser = new XmlResultParser();

return resultParser.parse(lastResult);

}

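// Release the evaluator and drop the references so that init() can create them again

public void release() {

if (ise != null) {

ise.cancel();

ise.destroy();

ise = null;

}

if (bindContext != null) {

bindContext.clear();

bindContext = null;

}

}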

@Override

public void onVolumeChanged(int volume, byte[] data) {

Log.d(TAG, "Currently speaking, volume = " + volume + " Return audio data = " + data.length);

}

@Override

public void onBeginOfSpeech() {

Log.d(TAG, "evaluator begin");

}

@Override

public void onEndOfSpeech() {

Log.d(TAG, "onEndOfSpeech isListening " + ise.isEvaluating());

}

@Override

public void onResult(EvaluatorResult evaluatorResult, boolean isLast) {

Log.d(TAG, "evaluator result :" + isLast);

StringBuilder builder = new StringBuilder();

builder.append(evaluatorResult.getResultString()); // getResultString() returns the raw XML result; call parseResult() to get the final result

lastResult = builder.toString();

if(evaluateListener != null) {

evaluateListener.onNewResult(builder.toString());

evaluateListener.onTotalResult(builder.toString(), isLast);

}

}

@Override

public void onError(SpeechError speechError) {

if(evaluateListener != null) {

evaluateListener.onError(speechError);

}

}

@Override

public void onEvent(int eventType, int arg1, int arg2, Bundle obj) {

Log.d(TAG, "onEvent type " + eventType);

}

private void setParams(String category) {

// Set evaluation language

String language = "zh_cn";

// Set the result level (Chinese only supports complete)

String result_level = "complete";

// Leading-silence timeout (ms): how long the user may stay silent before the session times out

String vad_bos = "5000";

// Trailing-silence timeout (ms): how long after the user stops speaking before recording stops automatically

String vad_eos = "1800";

// Speech input timeout: the longest the user may speak continuously

String speech_timeout = "-1";

// Parameters required by the streaming version: ent, sub, plev

ise.setParameter("ent", "cn_vip");

ise.setParameter(SpeechConstant.SUBJECT, "ise");

ise.setParameter("plev", "0");

// Use a 100-point scoring scale via the ise_unite, rst and extra_ability parameters

ise.setParameter("ise_unite", "1");

ise.setParameter("rst", "entirety");

ise.setParameter("extra_ability", "syll_phone_err_msg;pitch;multi_dimension");

ise.setParameter(SpeechConstant.LANGUAGE, language);

// Set the evaluation category

ise.setParameter(SpeechConstant.ISE_CATEGORY, category);

ise.setParameter(SpeechConstant.TEXT_ENCODING, "utf-8");

ise.setParameter(SpeechConstant.VAD_BOS, vad_bos);

ise.setParameter(SpeechConstant.VAD_EOS, vad_eos);

ise.setParameter(SpeechConstant.KEY_SPEECH_TIMEOUT, speech_timeout);

ise.setParameter(SpeechConstant.RESULT_LEVEL, result_level);

ise.setParameter(SpeechConstant.AUDIO_FORMAT_AUE, "opus");

// Set the audio saving path, save the audio format, support pcm and wav,

/* ise.setParameter(SpeechConstant.AUDIO_FORMAT, "wav");

ise.setParameter(SpeechConstant.ISE_AUDIO_PATH,

getExternalFilesDir("msc").getAbsolutePath() + "/ise.wav"); */

//This setting is only required when writing audio directly through writeaudio

//mIse.setParameter(SpeechConstant.AUDIO_SOURCE,"-1");

}

}

Usage:

public class NotificationsFragment extends Fragment implements EvaluateListener {

private Unbinder unbinder;

private NotificationsViewModel notificationsViewModel;

@BindView(R.id.etEva)

EditText etEva;

public Context mContext;

public View onCreateView(@NonNull LayoutInflater inflater,

ViewGroup container, Bundle savedInstanceState) {

mContext = this.getContext();

notificationsViewModel =

ViewModelProviders.of(this).get(NotificationsViewModel.class);

View root = inflater.inflate(R.layout.fragment_notifications, container, false);

// Bind ButterKnife to the fragment

unbinder = ButterKnife.bind(this,root);

final TextView textView = root.findViewById(R.id.text_notifications);

notificationsViewModel.getText().observe(getViewLifecycleOwner(), new Observer<String>() {

@Override

public void onChanged(@Nullable String s) {

textView.setText(s);

}

});

notificationsViewModel.getEvaluateText().observe(getViewLifecycleOwner(), new Observer<String>() {

@Override

public void onChanged(String s) {

etEva.setText(s);

}

});

// Initialize the iFLYTEK speech evaluation manager

EvaluateSpeechManager.instance().init(mContext);

EvaluateSpeechManager.instance().setEvaluateListener(this);

return root;

}

@OnClick({R.id.btStart, R.id.btCancel, R.id.btStop, R.id.btParse})

public void onClick(View v) {

switch (v.getId()){

case R.id.btStart:

EvaluateSpeechManager.instance().startEvaluate("read_word",notificationsViewModel.getEvaluateText().getValue());

break;

case R.id.btCancel:

EvaluateSpeechManager.instance().cancelEvaluate();

break;

case R.id.btStop:

EvaluateSpeechManager.instance().stopEvaluate();

break;

case R.id.btParse:

notificationsViewModel.setText(EvaluateSpeechManager.instance().parseResult().toString());

break;

}

}

@Override

public void onDestroy() {

super.onDestroy();

if(unbinder != null) {

unbinder.unbind();//View must be unbound when destroyed

}

EvaluateSpeechManager.instance().release();

}

@Override

public void onNewResult(String result) {

notificationsViewModel.setText(result);

}

@Override

public void onTotalResult(String result, boolean isLast) {

// Toast.makeText(mContext, result, Toast.LENGTH_SHORT).show();

}

@Override

public void onError(SpeechError speechError) {

Toast.makeText(mContext, "Error " + speechError, Toast.LENGTH_SHORT).show();

}

}

public class NotificationsViewModel extends ViewModel {

private MutableLiveData<String> mText;

private MutableLiveData<String> evaluateText;

public NotificationsViewModel() {

mText = new MutableLiveData<>();

evaluateText = new MutableLiveData<>();

mText.setValue("This is notifications fragment");

evaluateText.setValue("watermelon");

}

public LiveData<String> getText() {

return mText;

}

public LiveData<String> getEvaluateText() {

return evaluateText;

}

public void setText(String mText) {

this.mText.setValue(mText);

}

public void setEvaluateText(String evaluateText) {

this.evaluateText.setValue(evaluateText);

}

}

Layout files under res/layout (Tencent's QMUI is used here; if you don't use QMUI, simply replace QMUIRoundButton with an ordinary Button):

fragment_home.xml

<?xml version="1.0" encoding="utf-8"?>

<androidx.constraintlayout.widget.ConstraintLayout xmlns:android="http://schemas.android.com/apk/res/android"

xmlns:app="http://schemas.android.com/apk/res-auto"

xmlns:tools="http://schemas.android.com/tools"

android:layout_width="match_parent"

android:layout_height="match_parent"

tools:context=".ui.home.HomeFragment">

<TextView

android:id="@+id/tvContent"

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:layout_gravity="top"

android:layout_marginTop="84dp"

android:padding="20dp"

app:layout_constraintBottom_toTopOf="@+id/text_home"

app:layout_constraintStart_toStartOf="parent"

app:layout_constraintTop_toTopOf="parent" />

<Button

android:id="@+id/btStart"

android:layout_width="wrap_content"

android:layout_height="wrap_content"

android:layout_marginStart="32dp"

android:text="Start identification"

app:layout_constraintStart_toStartOf="@+id/tvContent"

app:layout_constraintTop_toBottomOf="@+id/tvContent" />

<Button

android:id="@+id/btCancel"

android:layout_width="wrap_content"

android:layout_height="wrap_content"

android:layout_marginEnd="32dp"

android:text="Cancel"

app:layout_constraintEnd_toEndOf="parent"

app:layout_constraintTop_toBottomOf="@+id/tvContent" />

<Button

android:id="@+id/btStop"

android:layout_width="wrap_content"

android:layout_height="wrap_content"

android:text="Stop"

app:layout_constraintEnd_toStartOf="@+id/btCancel"

app:layout_constraintStart_toEndOf="@+id/btStart"

app:layout_constraintTop_toBottomOf="@+id/tvContent" />

<TextView

android:id="@+id/text_home"

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:layout_marginStart="8dp"

android:layout_marginTop="8dp"

android:layout_marginEnd="8dp"

android:textAlignment="center"

android:textSize="20sp"

app:layout_constraintBottom_toBottomOf="parent"

app:layout_constraintEnd_toEndOf="parent"

app:layout_constraintStart_toStartOf="parent"

app:layout_constraintTop_toTopOf="parent" />

<com.qmuiteam.qmui.widget.roundwidget.QMUIRoundButton

android:layout_width="wrap_content"

android:layout_height="wrap_content"

android:layout_centerInParent="true"

android:paddingLeft="16dp"

android:paddingTop="10dp"

android:paddingRight="16dp"

android:paddingBottom="10dp"

android:text="Corner radius is half the short side"

app:layout_constraintBottom_toBottomOf="parent"

app:layout_constraintEnd_toEndOf="@+id/text_home"

app:layout_constraintStart_toStartOf="@+id/text_home"

app:layout_constraintTop_toBottomOf="@+id/text_home"

app:layout_constraintVertical_bias="0.26"

app:qmui_isRadiusAdjustBounds="true" />

</androidx.constraintlayout.widget.ConstraintLayout>

fragment_dashboard.xml

<?xml version="1.0" encoding="utf-8"?>

<androidx.constraintlayout.widget.ConstraintLayout xmlns:android="http://schemas.android.com/apk/res/android"

xmlns:app="http://schemas.android.com/apk/res-auto"

xmlns:tools="http://schemas.android.com/tools"

android:layout_width="match_parent"

android:layout_height="match_parent"

tools:context=".ui.dashboard.DashboardFragment">

<EditText

android:id="@+id/etEva"

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:layout_marginStart="8dp"

android:layout_marginTop="8dp"

android:layout_marginEnd="8dp"

android:textAlignment="center"

android:textSize="20sp"

app:layout_constraintBottom_toBottomOf="parent"

app:layout_constraintEnd_toEndOf="parent"

app:layout_constraintStart_toStartOf="parent"

app:layout_constraintTop_toTopOf="parent" />

<com.qmuiteam.qmui.widget.roundwidget.QMUIRoundButton

android:id="@+id/btParse"

android:layout_width="93dp"

android:layout_height="40dp"

android:layout_centerInParent="true"

android:paddingLeft="16dp"

android:paddingTop="10dp"

android:paddingRight="16dp"

android:paddingBottom="10dp"

android:text="Start playing"

app:layout_constraintStart_toStartOf="@+id/etEva"

app:layout_constraintTop_toBottomOf="@+id/etEva"

app:qmui_isRadiusAdjustBounds="true" />

<com.qmuiteam.qmui.widget.roundwidget.QMUIRoundButton

android:id="@+id/btResumed"

android:layout_width="93dp"

android:layout_height="40dp"

android:layout_centerInParent="true"

android:paddingLeft="16dp"

android:paddingTop="10dp"

android:paddingRight="16dp"

android:paddingBottom="10dp"

android:text="Continue playing"

app:layout_constraintEnd_toEndOf="@+id/etEva"

app:layout_constraintTop_toBottomOf="@+id/etEva"

app:qmui_isRadiusAdjustBounds="true" />

<com.qmuiteam.qmui.widget.roundwidget.QMUIRoundButton

android:id="@+id/btPaused"

android:layout_width="93dp"

android:layout_height="40dp"

android:layout_centerInParent="true"

android:paddingLeft="16dp"

android:paddingTop="10dp"

android:paddingRight="16dp"

android:paddingBottom="10dp"

android:text="Pause playback"

app:layout_constraintEnd_toStartOf="@+id/btResumed"

app:layout_constraintHorizontal_bias="0.52"

app:layout_constraintStart_toEndOf="@+id/btParse"

app:layout_constraintTop_toBottomOf="@+id/etEva"

app:qmui_isRadiusAdjustBounds="true" />

</androidx.constraintlayout.widget.ConstraintLayout>

fragment_notifications.xml

<?xml version="1.0" encoding="utf-8"?>

<androidx.constraintlayout.widget.ConstraintLayout xmlns:android="http://schemas.android.com/apk/res/android"

xmlns:app="http://schemas.android.com/apk/res-auto"

xmlns:tools="http://schemas.android.com/tools"

android:layout_width="match_parent"

android:layout_height="match_parent"

tools:context=".ui.notifications.NotificationsFragment">

<EditText

android:id="@+id/etEva"

android:layout_width="match_parent"

android:layout_height="100dp"

android:layout_marginStart="8dp"

android:layout_marginTop="96dp"

android:layout_marginEnd="8dp"

android:textAlignment="center"

android:textSize="20sp"

app:layout_constraintEnd_toEndOf="parent"

app:layout_constraintHorizontal_bias="0.0"

app:layout_constraintStart_toStartOf="parent"

app:layout_constraintTop_toTopOf="parent" />

<com.qmuiteam.qmui.widget.roundwidget.QMUIRoundButton

android:id="@+id/btStart"

android:layout_width="93dp"

android:layout_height="40dp"

android:layout_centerInParent="true"

android:layout_marginTop="20dp"

android:text="Start evaluation"

app:layout_constraintEnd_toEndOf="@+id/btParse"

app:layout_constraintHorizontal_bias="0.0"

app:layout_constraintStart_toStartOf="@+id/btParse"

app:layout_constraintTop_toBottomOf="@+id/btParse"

app:qmui_isRadiusAdjustBounds="true" />

<com.qmuiteam.qmui.widget.roundwidget.QMUIRoundButton

android:id="@+id/btStop"

android:layout_width="93dp"

android:layout_height="40dp"

android:layout_centerInParent="true"

android:layout_marginTop="20dp"

android:text="Stop evaluation"

app:layout_constraintEnd_toEndOf="@+id/btCancel"

app:layout_constraintHorizontal_bias="0.0"

app:layout_constraintStart_toStartOf="@+id/btCancel"

app:layout_constraintTop_toBottomOf="@+id/btCancel"

app:qmui_isRadiusAdjustBounds="true" />

<com.qmuiteam.qmui.widget.roundwidget.QMUIRoundButton

android:id="@+id/btCancel"

android:layout_width="93dp"

android:layout_height="40dp"

android:layout_centerInParent="true"

android:layout_marginEnd="52dp"

android:text="Cancel evaluation"

app:layout_constraintEnd_toEndOf="@+id/etEva"

app:layout_constraintTop_toBottomOf="@+id/etEva"

app:qmui_isRadiusAdjustBounds="true" />

<com.qmuiteam.qmui.widget.roundwidget.QMUIRoundButton

android:id="@+id/btParse"

android:layout_width="93dp"

android:layout_height="40dp"

android:layout_centerInParent="true"

android:layout_marginStart="56dp"

android:text="Result analysis"

app:layout_constraintStart_toStartOf="@+id/etEva"

app:layout_constraintTop_toBottomOf="@+id/etEva"

app:qmui_isRadiusAdjustBounds="true" />

<TextView

android:id="@+id/text_notifications"

android:layout_width="364dp"

android:layout_height="285dp"

android:layout_marginStart="8dp"

android:layout_marginEnd="8dp"

android:textAlignment="center"

android:textSize="20sp"

app:layout_constraintBottom_toBottomOf="parent"

app:layout_constraintEnd_toEndOf="parent"

app:layout_constraintStart_toStartOf="parent"

app:layout_constraintTop_toBottomOf="@+id/btStart"

app:layout_constraintVertical_bias="0.060000002" />

</androidx.constraintlayout.widget.ConstraintLayout>
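
A closing note on the three manager classes above (RecognizeSpeechManager, SynthesizeSpeechManager and EvaluateSpeechManager): each creates its singleton with an unsynchronized if (instance == null) check. That is fine as long as instance() is only ever called from the main thread, but two threads racing through the check could each create an instance. If that matters in your app, one common alternative is the initialization-on-demand holder idiom. Below is a minimal sketch; SafeManager is a placeholder name, not a class from this project.

```java
/** Placeholder manager showing the initialization-on-demand holder idiom. */
final class SafeManager {
    private SafeManager() {}

    // The JVM initializes Holder only on first access and guarantees that
    // class initialization is thread-safe, so no explicit locking is needed.
    private static final class Holder {
        static final SafeManager INSTANCE = new SafeManager();
    }

    /** Returns the single shared instance, creating it lazily on first call. */
    static SafeManager instance() {
        return Holder.INSTANCE;
    }
}
```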