Visualization of real-time audio with OpenGL ES

Visualizing real-time audio

1. Collecting audio data

The idea behind visualizing real-time audio with OpenGL ES is straightforward. Raw, unencoded audio data (PCM data) can be captured either with the AudioRecord API in the Java layer or through the OpenSL ES interface in the Native layer.

The captured audio data is then treated as a sequence of audio intensity values, a mesh is generated from those values, and the mesh is rendered in real time.

For ease of demonstration, this article uses Android's AudioRecord API to capture the raw audio data, passes it to the Native layer through JNI, and then generates a mesh for rendering.

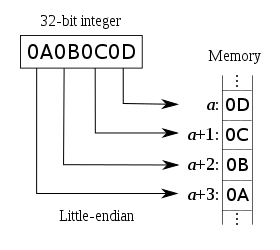

When AudioRecord captures audio in the ENCODING_PCM_16BIT format, one detail matters: the captured samples are stored in memory in little-endian byte order, that is, the low address holds the low-order byte and the high address holds the high-order byte. Take care with this when combining 2 bytes into a short value.

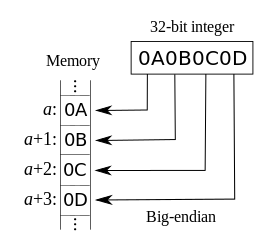

Big-endian byte order is the opposite: the low address holds the high-order byte and the high address holds the low-order byte.

Little-endian byte order

Big-endian byte order

In Java, bytes stored in little-endian order can be converted into a short value as follows:

byte firstByte = 0x10, secondByte = 0x01; //0x0110

ByteBuffer bb = ByteBuffer.allocate(2);

bb.order(ByteOrder.LITTLE_ENDIAN);

bb.put(firstByte);

bb.put(secondByte);

short shortVal = bb.getShort(0);
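If the raw bytes were handed to the Native layer instead, the same conversion could be written in C++. The following is a minimal sketch, not code from the original project: the names `littleEndianToShort` and `decodePcm16Le` are hypothetical. It assembles each sample with shifts, so the result does not depend on the byte order of the host CPU.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Combine two bytes stored in little-endian order (low-order byte first)
// into one signed 16-bit sample, independent of the host's byte order.
inline int16_t littleEndianToShort(uint8_t low, uint8_t high) {
    const uint16_t bits = static_cast<uint16_t>(low | (high << 8));
    return static_cast<int16_t>(bits);
}

// Hypothetical helper: decode a whole little-endian PCM16 byte buffer.
inline std::vector<int16_t> decodePcm16Le(const uint8_t* bytes, std::size_t byteCount) {
    assert(bytes != nullptr && byteCount % 2 == 0);
    std::vector<int16_t> samples(byteCount / 2);
    for (std::size_t i = 0; i < samples.size(); ++i) {
        samples[i] = littleEndianToShort(bytes[2 * i], bytes[2 * i + 1]);
    }
    return samples;
}
```

With the bytes from the Java example, `littleEndianToShort(0x10, 0x01)` yields `0x0110`.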

To avoid this conversion altogether, Android's AudioRecord class also provides a read method that outputs the audio data directly as a short array. I only discovered it after running into the pitfall above.

public int read(short[] audioData, int offsetInShorts, int sizeInShorts, int readMode)

The AudioRecord-based audio capture is wrapped in a simple class in the Java layer:

public class AudioCollector implements AudioRecord.OnRecordPositionUpdateListener{

private static final String TAG = "AudioRecorderWrapper";

private static final int RECORDER_SAMPLE_RATE = 44100; //sampling rate

private static final int RECORDER_CHANNELS = AudioFormat.CHANNEL_IN_MONO; //Channel configuration (mono), not a channel count

private static final int RECORDER_AUDIO_ENCODING = AudioFormat.ENCODING_PCM_16BIT; //Audio format

private static final int RECORDER_ENCODING_BIT = 16;

private AudioRecord mAudioRecord;

private Thread mThread;

private short[] mAudioBuffer;

private Handler mHandler;

private int mBufferSize;

private Callback mCallback;

public AudioCollector() {

//Calculate buffer size

mBufferSize = 2 * AudioRecord.getMinBufferSize(RECORDER_SAMPLE_RATE,

RECORDER_CHANNELS, RECORDER_AUDIO_ENCODING);

}

public void init() {

mAudioRecord = new AudioRecord(MediaRecorder.AudioSource.MIC, RECORDER_SAMPLE_RATE,

RECORDER_CHANNELS, RECORDER_AUDIO_ENCODING, mBufferSize);

mAudioRecord.startRecording();

//Continuously collect audio data in a new working thread

mThread = new Thread("Audio-Recorder") {

@Override

public void run() {

super.run();

mAudioBuffer = new short[mBufferSize];

Looper.prepare();

mHandler = new Handler(Looper.myLooper());

//Keep collecting audio data through AudioRecord.OnRecordPositionUpdateListener

mAudioRecord.setRecordPositionUpdateListener(AudioCollector.this, mHandler);

int bytePerSample = RECORDER_ENCODING_BIT / 8;

float samplesToDraw = mBufferSize / bytePerSample;

mAudioRecord.setPositionNotificationPeriod((int) samplesToDraw);

mAudioRecord.read(mAudioBuffer, 0, mBufferSize);

Looper.loop();

}

};

mThread.start();

}

public void unInit() {

if(mAudioRecord != null) {

mAudioRecord.stop();

mAudioRecord.release();

//mHandler is created on the worker thread and may not exist yet

if (mHandler != null) {

mHandler.getLooper().quitSafely();

mHandler = null;

}

mAudioRecord = null;

}

}

public void addCallback(Callback callback) {

mCallback = callback;

}

@Override

public void onMarkerReached(AudioRecord recorder) {

}

@Override

public void onPeriodicNotification(AudioRecord recorder) {

if (mAudioRecord.getRecordingState() == AudioRecord.RECORDSTATE_RECORDING

&& mAudioRecord.read(mAudioBuffer, 0, mAudioBuffer.length) > 0) //read() returns a negative error code on failure

{

if(mCallback != null)

//The audio data is transferred to the Native layer through the interface callback

mCallback.onAudioBufferCallback(mAudioBuffer);

}

}

public interface Callback {

void onAudioBufferCallback(short[] buffer);

}

}

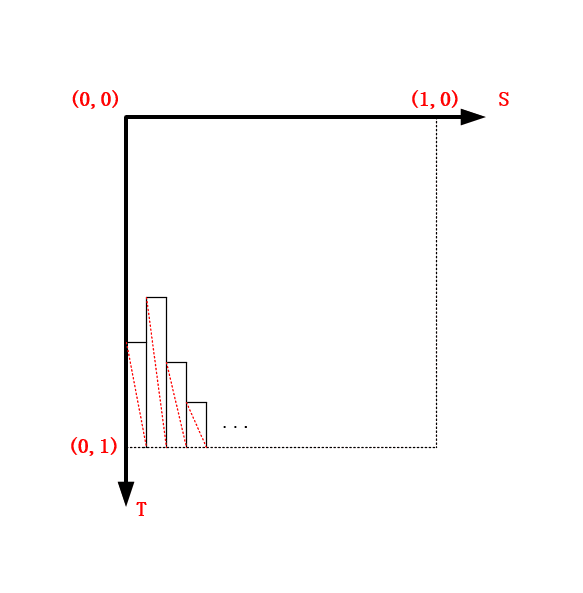
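To get a feel for how much audio one "frame" (one filled buffer) covers, the arithmetic can be sketched in C++. This is only an illustration: `frameDurationMs` is a hypothetical helper, and the actual buffer size depends on what `AudioRecord.getMinBufferSize` returns on a given device.

```cpp
#include <cassert>

// Duration in milliseconds of a buffer that holds `sampleCount` 16-bit samples
// (all channels interleaved) recorded at `sampleRateHz`.
inline double frameDurationMs(int sampleCount, int sampleRateHz, int channelCount) {
    assert(sampleCount >= 0 && sampleRateHz > 0 && channelCount > 0);
    const double samplesPerChannel = static_cast<double>(sampleCount) / channelCount;
    return 1000.0 * samplesPerChannel / sampleRateHz;
}
```

For example, assuming a buffer of 7168 mono samples at 44100 Hz (a made-up size), one callback covers roughly 163 ms of audio, which is far more samples than there are pixels available for individual bars.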

2. Audio visualization

The Native layer receives the PCM audio data (a short array) captured by AudioRecord. The S axis of the texture coordinate system is divided into equal intervals according to the length of the array, and a bar graph is built using each value in the array (which approximates the sound intensity) as the bar height. This yields the corresponding texture coordinates and vertex coordinates.

Build bar graph

Because the array holding one "frame" of audio data is fairly large, drawing a bar for every sample makes the bars merge into a solid mass. To show the audio in the time domain clearly, the data must be downsampled before drawing.

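float dy = 0.5f / MAX_AUDIO_LEVEL; //Vertical scale factor per unit of audio level

float dx = 1.0f / m_RenderDataSize; //Width of one bar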

for (int i = 0; i < m_RenderDataSize; ++i) {

int index = i * RESAMPLE_LEVEL; //RESAMPLE_LEVEL indicates the sampling interval

float y = m_pAudioData[index] * dy * -1;

y = y < 0 ? y : -y; //Take y = -|y| so that positive and negative samples produce bars in the same direction

//Construct 4 points of the strip rectangle

vec2 p1(i * dx, 0 + 1.0f);

vec2 p2(i * dx, y + 1.0f);

vec2 p3((i + 1) * dx, y + 1.0f);

vec2 p4((i + 1) * dx, 0 + 1.0f);

//Construct texture coordinates: two triangles (p1, p2, p4) and (p4, p2, p3)

m_pTextureCoords[i * 6 + 0] = p1;

m_pTextureCoords[i * 6 + 1] = p2;

m_pTextureCoords[i * 6 + 2] = p4;

m_pTextureCoords[i * 6 + 3] = p4;

m_pTextureCoords[i * 6 + 4] = p2;

m_pTextureCoords[i * 6 + 5] = p3;

//Construct vertex coordinates by converting the texture coordinates

m_pVerticesCoords[i * 6 + 0] = GLUtils::texCoordToVertexCoord(p1);

m_pVerticesCoords[i * 6 + 1] = GLUtils::texCoordToVertexCoord(p2);

m_pVerticesCoords[i * 6 + 2] = GLUtils::texCoordToVertexCoord(p4);

m_pVerticesCoords[i * 6 + 3] = GLUtils::texCoordToVertexCoord(p4);

m_pVerticesCoords[i * 6 + 4] = GLUtils::texCoordToVertexCoord(p2);

m_pVerticesCoords[i * 6 + 5] = GLUtils::texCoordToVertexCoord(p3);

}
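The loop above can be tried outside of OpenGL with a small self-contained sketch. Everything named here is an assumption for illustration: `Vec2` stands in for the `vec2` type, `buildBarTexCoords` is a hypothetical function, and the body of `texCoordToVertexCoord` is a guess at a typical mapping (texture origin at the top-left, mapped onto normalized device coordinates), since the original `GLUtils` implementation is not shown.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>
#include <vector>

struct Vec2 { float x; float y; };  // stand-in for the vec2 type used above

// ASSUMPTION: texture space is [0, 1] with the origin at the top-left and t
// pointing down; it is mapped onto normalized device coordinates in [-1, 1].
inline Vec2 texCoordToVertexCoord(Vec2 t) {
    return Vec2{2.0f * t.x - 1.0f, 1.0f - 2.0f * t.y};
}

// Build two triangles (6 texture-space points) per bar, taking every
// `level`-th sample. `maxLevel` plays the role of MAX_AUDIO_LEVEL.
inline std::vector<Vec2> buildBarTexCoords(const int16_t* samples, int sampleCount,
                                           int level, float maxLevel) {
    assert(samples != nullptr && sampleCount >= 0 && level > 0 && maxLevel > 0.0f);
    std::vector<Vec2> coords;
    const int barCount = sampleCount / level;
    if (barCount == 0) return coords;
    coords.reserve(static_cast<std::size_t>(barCount) * 6);
    const float dy = 0.5f / maxLevel;  // a full-scale sample spans half the height
    const float dx = 1.0f / barCount;  // width of one bar
    for (int i = 0; i < barCount; ++i) {
        const float height = std::fabs(samples[i * level] * dy);
        const Vec2 p1{i * dx, 1.0f};
        const Vec2 p2{i * dx, 1.0f - height};
        const Vec2 p3{(i + 1) * dx, 1.0f - height};
        const Vec2 p4{(i + 1) * dx, 1.0f};
        const Vec2 quad[6] = {p1, p2, p4, p4, p2, p3};
        coords.insert(coords.end(), quad, quad + 6);
    }
    return coords;
}
```

Taking only every `level`-th sample is the simplest form of downsampling. Keeping the peak of each group of samples instead would preserve short spikes, at the cost of a little more work per bar.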

Each time the Java layer passes in one "frame" of audio data, the Native layer draws one frame:

void VisualizeAudioSample::Draw(int screenW, int screenH) {

LOGCATE("VisualizeAudioSample::Draw()");

if (m_ProgramObj == GL_NONE) return;

//Add mutex lock to ensure the synchronization of audio data drawing and update

std::unique_lock<std::mutex> lock(m_Mutex);

//Update texture coordinates and vertex coordinates based on audio data

UpdateMesh();

UpdateMVPMatrix(m_MVPMatrix, m_AngleX, m_AngleY, (float) screenW / screenH);

// Generate VBO Ids and load the VBOs with data

if(m_VboIds[0] == 0)

{

glGenBuffers(2, m_VboIds);

glBindBuffer(GL_ARRAY_BUFFER, m_VboIds[0]);

glBufferData(GL_ARRAY_BUFFER, sizeof(GLfloat) * m_RenderDataSize * 6 * 3, m_pVerticesCoords, GL_DYNAMIC_DRAW);

glBindBuffer(GL_ARRAY_BUFFER, m_VboIds[1]);

glBufferData(GL_ARRAY_BUFFER, sizeof(GLfloat) * m_RenderDataSize * 6 * 2, m_pTextureCoords, GL_DYNAMIC_DRAW);

}

else

{

glBindBuffer(GL_ARRAY_BUFFER, m_VboIds[0]);

glBufferSubData(GL_ARRAY_BUFFER, 0, sizeof(GLfloat) * m_RenderDataSize * 6 * 3, m_pVerticesCoords);

glBindBuffer(GL_ARRAY_BUFFER, m_VboIds[1]);

glBufferSubData(GL_ARRAY_BUFFER, 0, sizeof(GLfloat) * m_RenderDataSize * 6 * 2, m_pTextureCoords);

}

if(m_VaoId == GL_NONE)

{

glGenVertexArrays(1, &m_VaoId);

glBindVertexArray(m_VaoId);

glBindBuffer(GL_ARRAY_BUFFER, m_VboIds[0]);

glEnableVertexAttribArray(0);

glVertexAttribPointer(0, 3, GL_FLOAT, GL_FALSE, 3 * sizeof(GLfloat), (const void *) 0);

glBindBuffer(GL_ARRAY_BUFFER, GL_NONE);

glBindBuffer(GL_ARRAY_BUFFER, m_VboIds[1]);

glEnableVertexAttribArray(1);

glVertexAttribPointer(1, 2, GL_FLOAT, GL_FALSE, 2 * sizeof(GLfloat), (const void *) 0);

glBindBuffer(GL_ARRAY_BUFFER, GL_NONE);

glBindVertexArray(GL_NONE);

}

// Use the program object

glUseProgram(m_ProgramObj);

glBindVertexArray(m_VaoId);

glUniformMatrix4fv(m_MVPMatLoc, 1, GL_FALSE, &m_MVPMatrix[0][0]);

GLUtils::setFloat(m_ProgramObj, "drawType", 1.0f);

glDrawArrays(GL_TRIANGLES, 0, m_RenderDataSize * 6);

GLUtils::setFloat(m_ProgramObj, "drawType", 0.0f);

glDrawArrays(GL_LINES, 0, m_RenderDataSize * 6);

}

The real-time audio is rendered as follows:

Rendering results of real-time audio

However, this rendering gives no sense of time passing: one group of data is drawn, then the next, with no transition between them.

We are drawing audio data in the time domain (it could also be transformed into the frequency domain with a Fourier transform). To convey the passage of time, the Buffer has to be rendered with a moving offset.

That is, old data is gradually discarded while new data is gradually added, so the drawing appears to scroll through time.

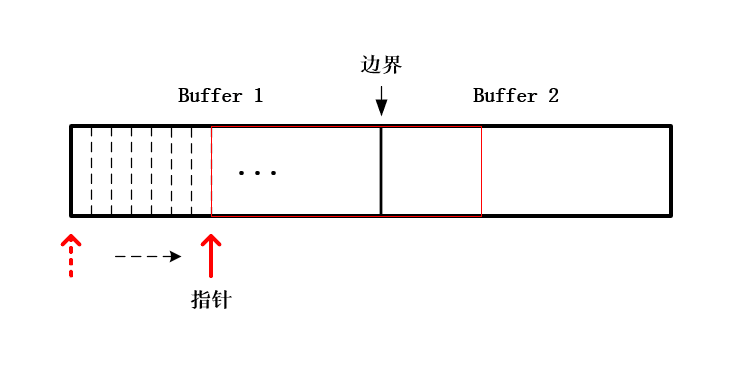

The pointer advances by a fixed step

First, the Buffer is doubled in size (or made several times larger). Two frames of audio data are collected to fill it. The audio capture thread is then blocked and the rendering thread is notified that the data is ready to draw. On each draw, the pointer into the Buffer advances by a fixed step, so every draw shows a window shifted by one step.

When the pointer reaches the boundary shown in the figure above, all the data in the Buffer has been drawn and the rendering thread pauses drawing.

The audio capture thread is then unblocked. It copies the data in Buffer2 into Buffer1 and receives the new data into Buffer2. After that, the capture thread blocks again and the rendering thread is notified that the update is complete and drawing can resume.

void VisualizeAudioSample::UpdateMesh() {

//Set an offset step

int step = m_AudioDataSize / 64;

//Check whether the pointer can still advance by one step before reaching the boundary

if(m_pAudioBuffer + m_AudioDataSize - m_pCurAudioData >= step)

{

float dy = 0.5f / MAX_AUDIO_LEVEL;

float dx = 1.0f / m_RenderDataSize;

for (int i = 0; i < m_RenderDataSize; ++i) {

int index = i * RESAMPLE_LEVEL;

float y = m_pCurAudioData[index] * dy * -1;

y = y < 0 ? y : -y;

vec2 p1(i * dx, 0 + 1.0f);

vec2 p2(i * dx, y + 1.0f);

vec2 p3((i + 1) * dx, y + 1.0f);

vec2 p4((i + 1) * dx, 0 + 1.0f);

m_pTextureCoords[i * 6 + 0] = p1;

m_pTextureCoords[i * 6 + 1] = p2;

m_pTextureCoords[i * 6 + 2] = p4;

m_pTextureCoords[i * 6 + 3] = p4;

m_pTextureCoords[i * 6 + 4] = p2;

m_pTextureCoords[i * 6 + 5] = p3;

m_pVerticesCoords[i * 6 + 0] = GLUtils::texCoordToVertexCoord(p1);

m_pVerticesCoords[i * 6 + 1] = GLUtils::texCoordToVertexCoord(p2);

m_pVerticesCoords[i * 6 + 2] = GLUtils::texCoordToVertexCoord(p4);

m_pVerticesCoords[i * 6 + 3] = GLUtils::texCoordToVertexCoord(p4);

m_pVerticesCoords[i * 6 + 4] = GLUtils::texCoordToVertexCoord(p2);

m_pVerticesCoords[i * 6 + 5] = GLUtils::texCoordToVertexCoord(p3);

}

m_pCurAudioData += step;

}

else

{

//Notify the audio collection thread to update the data when the offset reaches the boundary

m_bAudioDataReady = false;

m_Cond.notify_all();

return;

}

}
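The pointer arithmetic in UpdateMesh can be isolated from the rendering code. The sketch below is a simplified, single-threaded illustration under stated assumptions: the class `SlidingWindow` and its methods are hypothetical names, not part of the original sample. It keeps a buffer of two frames and hands out one window per draw until the boundary is reached.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical helper: a buffer of two frames plus a cursor that advances
// by a fixed step on every draw.
class SlidingWindow {
public:
    SlidingWindow(std::size_t frameSize, std::size_t step)
        : frameSize_(frameSize), step_(step), buffer_(frameSize * 2, 0), cursor_(0) {
        assert(frameSize > 0 && step > 0 && step <= frameSize);
    }

    // Move the newer frame into the first half, append `frame` (frameSize
    // samples) to the second half, and rewind the cursor.
    void push(const int16_t* frame) {
        assert(frame != nullptr);
        std::memcpy(buffer_.data(), buffer_.data() + frameSize_, frameSize_ * sizeof(int16_t));
        std::memcpy(buffer_.data() + frameSize_, frame, frameSize_ * sizeof(int16_t));
        cursor_ = 0;
    }

    // Return the start of the next window of frameSize samples, or nullptr
    // once the cursor has reached the boundary and a new frame is needed.
    const int16_t* next() {
        if (frameSize_ - cursor_ < step_) return nullptr;
        const int16_t* window = buffer_.data() + cursor_;
        cursor_ += step_;
        return window;
    }

private:
    std::size_t frameSize_;
    std::size_t step_;
    std::vector<int16_t> buffer_;
    std::size_t cursor_;
};
```

With a step of one sixty-fourth of a frame, as in UpdateMesh, each incoming frame is spread over 64 draws, which is what produces the scrolling effect.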

void VisualizeAudioSample::LoadShortArrData(short *const pShortArr, int arrSize) {

if (pShortArr == nullptr || arrSize == 0)

return;

m_FrameIndex++;

std::unique_lock<std::mutex> lock(m_Mutex);

//First frame: allocate a Buffer that holds two frames and fill its first half

if(m_FrameIndex == 1)

{

m_pAudioBuffer = new short[arrSize * 2];

memcpy(m_pAudioBuffer, pShortArr, sizeof(short) * arrSize);

m_AudioDataSize = arrSize;

return;

}

//Second frame: fill the second half of the Buffer and allocate the mesh arrays

if(m_FrameIndex == 2)

{

memcpy(m_pAudioBuffer + arrSize, pShortArr, sizeof(short) * arrSize);

m_RenderDataSize = m_AudioDataSize / RESAMPLE_LEVEL;

m_pVerticesCoords = new vec3[m_RenderDataSize * 6]; //(x,y,z) * 6 points

m_pTextureCoords = new vec2[m_RenderDataSize * 6]; //(x,y) * 6 points

}

//Copy the data in Buffer2 to Buffer1, then receive the new data into Buffer2

if(m_FrameIndex > 2)

{

memcpy(m_pAudioBuffer, m_pAudioBuffer + arrSize, sizeof(short) * arrSize);

memcpy(m_pAudioBuffer + arrSize, pShortArr, sizeof(short) * arrSize);

}

//At this time, block the audio acquisition thread and notify the rendering thread that the data update is complete

m_bAudioDataReady = true;

m_pCurAudioData = m_pAudioBuffer;

m_Cond.wait(lock);

}
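The hand-off between the capture thread and the rendering thread can also be studied on its own. The following is a minimal sketch rather than the original design: `FrameExchange` and `runExchange` are hypothetical, a single `int` stands in for a frame of audio, and unlike the code above the consumer blocks while waiting. It passes a predicate to `std::condition_variable::wait`, which protects against spurious wake-ups that a bare `wait(lock)` does not handle.

```cpp
#include <cassert>
#include <condition_variable>
#include <mutex>
#include <thread>
#include <vector>

// Hypothetical one-slot hand-off between a capture thread and a render thread.
class FrameExchange {
public:
    // Capture side: publish a frame, then block until it has been consumed.
    void publish(int frame) {
        std::unique_lock<std::mutex> lock(mutex_);
        frame_ = frame;
        ready_ = true;
        cond_.notify_all();
        // The predicate is re-checked after every wake-up, including spurious ones.
        cond_.wait(lock, [this] { return !ready_; });
    }

    // Render side: wait for a frame, take it, and release the capture thread.
    int consume() {
        std::unique_lock<std::mutex> lock(mutex_);
        cond_.wait(lock, [this] { return ready_; });
        const int frame = frame_;
        ready_ = false;
        cond_.notify_all();
        return frame;
    }

private:
    std::mutex mutex_;
    std::condition_variable cond_;
    int frame_ = 0;
    bool ready_ = false;
};

// Publish `count` frames from a producer thread and collect them on the caller.
inline std::vector<int> runExchange(int count) {
    assert(count >= 0);
    FrameExchange exchange;
    std::thread producer([&exchange, count] {
        for (int i = 1; i <= count; ++i) exchange.publish(i);
    });
    std::vector<int> received;
    for (int i = 0; i < count; ++i) received.push_back(exchange.consume());
    producer.join();
    return received;
}
```

Because the producer cannot publish a second frame until the first has been consumed, frames arrive in order and none is overwritten. A real implementation would also need an exit flag in both predicates so that neither thread stays blocked during teardown.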

The result is shown in the first figure of this article.