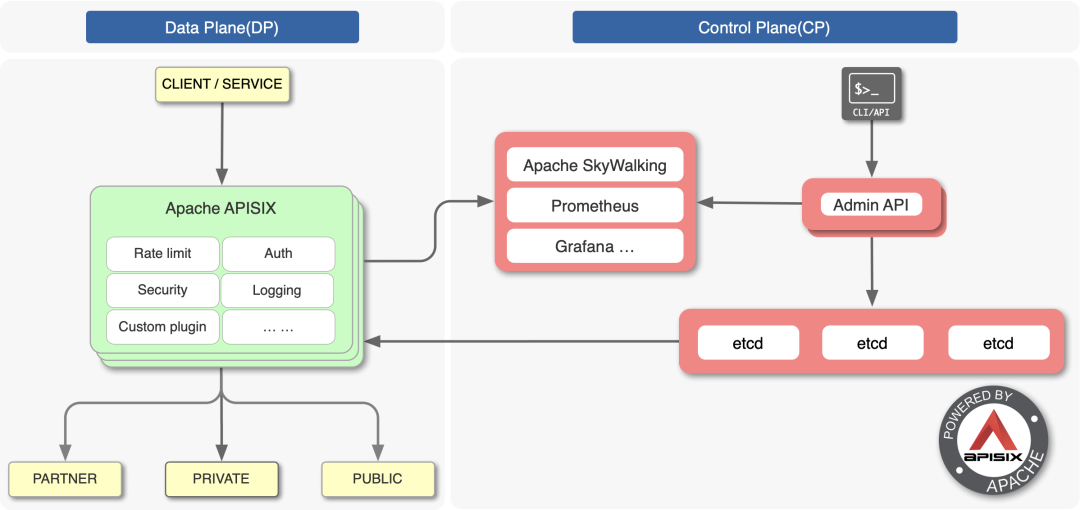

The most criticized shortcoming of open source Nginx is that it lacks dynamic configuration, a remote API and cluster management. APISIX, an open source layer-7 gateway that graduated from the Apache Software Foundation, implements dynamic management of Nginx clusters on top of etcd and Lua.

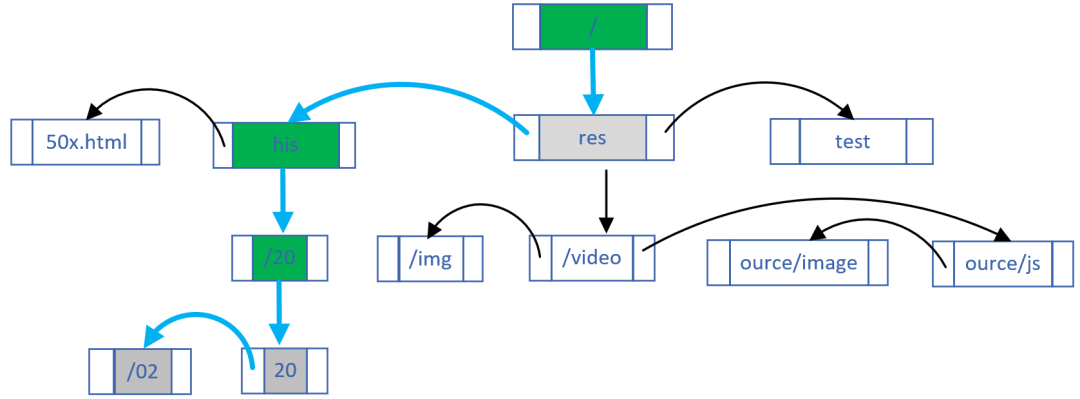

APISIX architecture diagram

Giving Nginx dynamic and cluster management capabilities is not easy, because of the following problems:

- In a microservice architecture there are many kinds of upstream services, in large numbers, so routing rules and upstream servers change frequently. Nginx's route matching is based on a static Trie prefix tree, hash tables and arrays of regular expressions, so if a server_name or location changes, the configuration cannot be changed dynamically without a reload;

- Nginx positions itself as an ADC (edge load balancer), so it does not support HTTP/2 toward upstreams. This makes it harder for the OpenResty ecosystem to implement etcd's gRPC interface, so receiving configuration changes through the watch mechanism is inevitably less efficient;

- The multi-process architecture makes data synchronization between Worker processes harder. A low-cost mechanism must be chosen to ensure that every Nginx node and every Worker process has the latest configuration;

and so on.

APISIX implements dynamic configuration management with Lua timers and the lua-resty-etcd module. Based on APISIX 2.8, OpenResty 1.19.3.2 and Nginx 1.19.3, this article analyzes how APISIX achieves REST API remote control of an Nginx cluster.

Next, let's analyze APISIX's solution.

Configuration synchronization based on the etcd watch mechanism

Cluster management must rely on a centralized configuration store, and etcd is such a database. APISIX did not choose a relational database as its configuration center because etcd has the following two advantages:

- etcd uses Raft, a Paxos-like consensus protocol, to keep data consistent. It is a replicated, distributed database and is more reliable than a relational database;

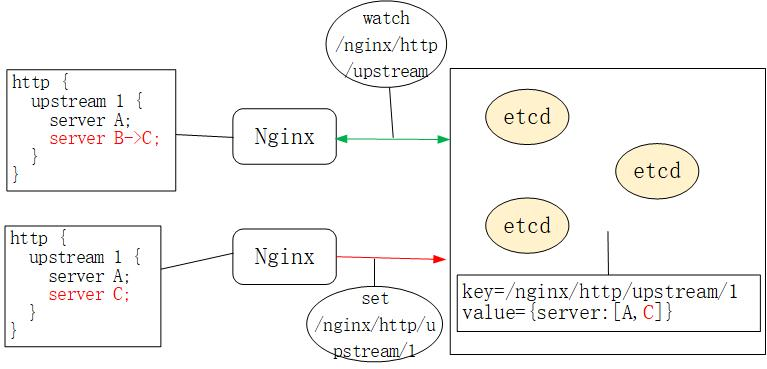

- etcd's watch mechanism lets a client monitor changes to a key: if the value of a key such as /nginx/http/upstream changes, the watching client is notified immediately, as illustrated in the figure:

Synchronization of nginx configuration based on etcd

Orange [1] uses MySQL and Kong [2] uses PostgreSQL as their configuration centers (both are also API gateways built on OpenResty). APISIX, by contrast, uses etcd as its centralized configuration component.

As a result, in a production APISIX deployment you can see configuration like the following with etcdctl:

```shell
$ etcdctl get "/apisix/upstreams/1"
/apisix/upstreams/1
{"hash_on":"vars","nodes":{"httpbin.org:80":1},"create_time":1627982128,"update_time":1627982128,"scheme":"http","type":"roundrobin","pass_host":"pass","id":"1"}
```

The /apisix prefix can be changed in conf/config.yaml, for example:

```yaml
etcd:
  host:
    - "http://127.0.0.1:2379"
  prefix: /apisix    # apisix configurations prefix
```

/apisix/upstreams/1 is equivalent to an http { upstream 1 { } } block in nginx.conf. Similar keys include /apisix/services/ and /apisix/routes/.

So how does Nginx learn about configuration changes in etcd through the watch mechanism? Does it start a separate agent process? Does it talk to etcd over HTTP/1.1 or gRPC?

The ngx.timer.at timer

APISIX does not start any process other than Nginx to communicate with etcd. It implements the watch mechanism with the ngx.timer.at timer. For readers less familiar with OpenResty, let's first look at how timers are implemented in Nginx, since they are the foundation of the watch mechanism.

Nginx's red-black tree timer

Nginx implements its event-processing model with epoll multiplexing over non-blocking sockets. Each Worker process loops over network I/O and timer events:

```c
// See src/os/unix/ngx_process_cycle.c in Nginx (simplified excerpt)
static void
ngx_worker_process_cycle(ngx_cycle_t *cycle, void *data)
{
    for ( ;; ) {
        ngx_process_events_and_timers(cycle);
    }
}

// See src/event/ngx_event.c in Nginx (simplified excerpt)
void
ngx_process_events_and_timers(ngx_cycle_t *cycle)
{
    ngx_uint_t  flags;
    ngx_msec_t  timer;

    // Block in epoll for at most the time left until the nearest timer
    timer = ngx_event_find_timer();
    flags = NGX_UPDATE_TIME;
    (void) ngx_process_events(cycle, timer, flags);

    ngx_event_process_posted(cycle, &ngx_posted_accept_events);

    // Run the handlers of all expired timers
    ngx_event_expire_timers();

    ngx_event_process_posted(cycle, &ngx_posted_events);
}
```

ngx_event_expire_timers calls the handler of every expired event. The timers themselves are stored in a red-black tree [3] (a balanced, ordered binary tree) whose key is each event's absolute expiration time. Finding expired events is therefore fast: it is enough to compare the smallest node with the current time.
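Here is a minimal C sketch of the same loop shape. It asks for the earliest deadline to bound the wait, then fires everything that has expired, earliest first. It makes one deliberate substitution: instead of a red-black tree it uses a binary min-heap, which also finds the earliest deadline cheaply. The names timer_add, timer_find, timer_expire and log_handler are hypothetical; they only echo ngx_add_timer, ngx_event_find_timer and ngx_event_expire_timers. Times are plain millisecond counters.

```c
#include <assert.h>
#include <stddef.h>

#define MAX_TIMERS 64

typedef void (*timer_handler)(void *data);

typedef struct {
    long          expire;   /* absolute expiry time in ms */
    timer_handler handler;
    void         *data;
} timer_ev;

typedef struct {
    timer_ev ev[MAX_TIMERS];  /* min-heap ordered by expire */
    size_t   n;
} timer_heap;

static void swap_ev(timer_ev *a, timer_ev *b)
{
    timer_ev t = *a;
    *a = *b;
    *b = t;
}

/* Register handler to run at now + delay (cf. ngx_add_timer). */
static int timer_add(timer_heap *h, long now, long delay,
                     timer_handler handler, void *data)
{
    assert(h != NULL && handler != NULL);
    if (h->n == MAX_TIMERS) return -1;
    size_t i = h->n++;
    h->ev[i] = (timer_ev){ now + delay, handler, data };
    while (i > 0 && h->ev[(i - 1) / 2].expire > h->ev[i].expire) {  /* sift up */
        swap_ev(&h->ev[(i - 1) / 2], &h->ev[i]);
        i = (i - 1) / 2;
    }
    return 0;
}

/* How long the event loop may block; -1 means "no timers" (cf. ngx_event_find_timer). */
static long timer_find(const timer_heap *h, long now)
{
    if (h->n == 0) return -1;
    long left = h->ev[0].expire - now;
    return left > 0 ? left : 0;
}

static timer_ev timer_pop(timer_heap *h)
{
    timer_ev top = h->ev[0];
    h->ev[0] = h->ev[--h->n];
    for (size_t i = 0;;) {                                         /* sift down */
        size_t l = 2 * i + 1, r = l + 1, m = i;
        if (l < h->n && h->ev[l].expire < h->ev[m].expire) m = l;
        if (r < h->n && h->ev[r].expire < h->ev[m].expire) m = r;
        if (m == i) break;
        swap_ev(&h->ev[i], &h->ev[m]);
        i = m;
    }
    return top;
}

/* Fire every expired timer, earliest first (cf. ngx_event_expire_timers). */
static int timer_expire(timer_heap *h, long now)
{
    int fired = 0;
    while (h->n > 0 && h->ev[0].expire <= now) {
        timer_ev ev = timer_pop(h);   /* remove first, so a handler may re-add itself */
        ev.handler(ev.data);
        fired++;
    }
    return fired;
}

/* Example handler for the demo: records the int it was given. */
static int    fired_log[MAX_TIMERS];
static size_t fired_n;
static void log_handler(void *data) { fired_log[fired_n++] = *(int *)data; }
```

A red-black tree can also remove an arbitrary timer in O(log n), which matters when a pending timer is cancelled (as ngx_del_timer does). A plain heap needs extra index bookkeeping to do the same.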

OpenResty's Lua timer

Writing against these C functions directly is slow going, so OpenResty wraps them in a Lua interface: ngx.timer.at [4] exposes the C function ngx_add_timer to Lua:

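```c
/* See ngx_lua-0.10.19/src/ngx_http_lua_timer.c in OpenResty (simplified excerpt) */
void
ngx_http_lua_inject_timer_api(lua_State *L)
{
    lua_createtable(L, 0 /* narr */, 4 /* nrec */);    /* ngx.timer. */

    lua_pushcfunction(L, ngx_http_lua_ngx_timer_at);
    lua_setfield(L, -2, "at");

    /* ... "every", "running_count" and "pending_count" elided ... */

    lua_setfield(L, -2, "timer");
}

static int
ngx_http_lua_ngx_timer_at(lua_State *L)
{
    return ngx_http_lua_ngx_timer_helper(L, 0);
}

static int
ngx_http_lua_ngx_timer_helper(lua_State *L, int every)
{
    ngx_event_t  *ev;
    ngx_msec_t    delay;

    /* ... read delay and the callback from the Lua stack, then allocate
       ev together with the timer context (elided) ... */

    ev->handler = ngx_http_lua_timer_handler;
    ngx_add_timer(ev, delay);

    /* ... push the Lua return value (elided) ... */
    return 1;
}
```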

So when we call the Lua timer ngx.timer.at, the ngx_http_lua_timer_handler callback is added to Nginx's red-black tree of timers. Registering it does not block Nginx.

Let's now look at how APISIX uses ngx.timer.at.

APISIX's timer-based watch mechanism

The Nginx framework provides many hooks for C module development, and OpenResty exposes some of them to Lua, as shown in the following figure:

OpenResty hooks

APISIX uses only 8 of these hooks. Note that set_by_lua and rewrite_by_lua are not used. The rewrite phase of APISIX plugins is APISIX's own concept and has nothing to do with Nginx's rewrite phase. The 8 hooks are:

- init_by_lua: initialization when the Master process starts;

- init_worker_by_lua: initialization when each Worker process starts, including the privileged agent process. The privileged agent process is key to remote RPC calls to plugins written in other languages such as Java;

- ssl_certificate_by_lua: a hook that OpenSSL provides during the TLS handshake, which OpenResty exposes to Lua by patching the Nginx source code;

- access_by_lua: runs after the downstream HTTP request headers are received. Routing rules such as the Host domain name, URI and Method are matched here, and the Plugins and Upstream Server are selected from the matching Service and Upstream;

- balancer_by_lua: every reverse proxy module running in the content phase calls back the init_upstream hook function when selecting an upstream server. OpenResty names this hook balancer_by_lua;

- header_filter_by_lua: runs before the HTTP response headers are sent downstream;

- body_filter_by_lua: runs before the HTTP response body is sent downstream;

- log_by_lua: runs when the access log is written.

With this background, we can now answer how APISIX receives etcd data updates.

How nginx.conf is generated

Each Nginx Worker process starts the timer in the init_worker_by_lua phase through the http_init_worker function:

```nginx
init_worker_by_lua_block {
    apisix.http_init_worker()
}
```

For nginx.conf configuration syntax, see my article Learning the customization instructions of nginx modules from general rules [5]. You may wonder why you find no nginx.conf after downloading the APISIX source code. Where does this configuration come from?

The nginx.conf is actually generated on the fly by APISIX's start command. When you run make run, it renders nginx.conf from the Lua template apisix/cli/ngx_tpl.lua. Note that the template syntax is not Nginx's own but that of the lua-resty-template library; see lua-resty-template [6] for syntax details. The code that generates nginx.conf is in apisix/cli/ops.lua:

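```lua
local template = require("resty.template")
local ngx_tpl = require("apisix.cli.ngx_tpl")
local file = require("apisix.cli.file")
local util = require("apisix.cli.util")

local function init(env)
    local yaml_conf, err = file.read_yaml_conf(env.apisix_home)

    -- ... build sys_conf (the template variables) from yaml_conf (elided) ...

    local conf_render = template.compile(ngx_tpl)
    local ngxconf = conf_render(sys_conf)

    local ok, err = util.write_file(env.apisix_home .. "/conf/nginx.conf",
                                    ngxconf)
    -- ...
end
```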
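At its core, rendering a template means substituting placeholders. The minimal C sketch below supports only lua-resty-template's raw-output tag {* expr *}, and only when expr is a plain variable name looked up in a fixed table. lua-resty-template itself evaluates arbitrary Lua expressions and also has {{ }} (escaped output) and {% %} (Lua code) tags, which are not modelled here. render, tpl_lookup and the node_listen variable in the test are hypothetical.

```c
#include <assert.h>
#include <string.h>

typedef struct { const char *name; const char *value; } tpl_var;

/* Find the value of the variable whose name is name[0..len). */
static const char *tpl_lookup(const tpl_var *vars, size_t n,
                              const char *name, size_t len)
{
    for (size_t i = 0; i < n; i++)
        if (strlen(vars[i].name) == len && strncmp(vars[i].name, name, len) == 0)
            return vars[i].value;
    return NULL;
}

/* Copy tpl into out, replacing each {* name *} with its value.
   Returns 0, or -1 on an unknown variable, an unclosed tag or overflow. */
static int render(const char *tpl, const tpl_var *vars, size_t n,
                  char *out, size_t cap)
{
    assert(tpl != NULL && out != NULL && cap > 0);
    size_t o = 0;
    const char *p = tpl;
    while (*p) {
        if (p[0] == '{' && p[1] == '*') {
            const char *end = strstr(p + 2, "*}");
            if (end == NULL) return -1;
            const char *s = p + 2, *e = end;
            while (s < e && *s == ' ') s++;        /* trim spaces inside the tag */
            while (e > s && e[-1] == ' ') e--;
            const char *val = tpl_lookup(vars, n, s, (size_t)(e - s));
            if (val == NULL) return -1;
            size_t vlen = strlen(val);
            if (o + vlen >= cap) return -1;
            memcpy(out + o, val, vlen);
            o += vlen;
            p = end + 2;                           /* continue after "*}" */
        } else {
            if (o + 1 >= cap) return -1;
            out[o++] = *p++;
        }
    }
    out[o] = '\0';
    return 0;
}
```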

APISIX also lets users change some of the data in the nginx.conf template. To do so, you edit conf/config.yaml, following the syntax of conf/config-default.yaml. See the read_yaml_conf function for how this works:

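```lua
function _M.read_yaml_conf(apisix_home)
    local local_conf_path = profile:yaml_path("config-default")
    local default_conf_yaml, err = util.read_file(local_conf_path)

    local_conf_path = profile:yaml_path("config")
    local user_conf_yaml, err = util.read_file(local_conf_path)

    -- ... parse both YAML strings into the tables default_conf and
    -- user_conf (elided) ...

    -- values from config.yaml override those from config-default.yaml
    local ok, err = merge_conf(default_conf, user_conf)
    -- ...
end
```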

As you can see, YAML configuration can replace only part of the data in the ngx_tpl.lua template. conf/config-default.yaml holds the official defaults, while conf/config.yaml holds the user's overrides. If replacing template data is not enough, you can modify the ngx_tpl template directly.
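The override rule itself is easy to express in code. The following C sketch assumes, purely to stay short, that the nested YAML has been flattened into dotted keys such as etcd.prefix. APISIX's merge_conf works on nested Lua tables instead. conf_get, conf_set and the flat layout are hypothetical, and merge_conf only borrows the Lua function's name.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define MAX_ENTRIES 32

typedef struct { const char *key; const char *value; } conf_entry;
typedef struct { conf_entry e[MAX_ENTRIES]; size_t n; } conf_table;

/* Look up a key; returns NULL when it is absent. */
static const char *conf_get(const conf_table *t, const char *key)
{
    for (size_t i = 0; i < t->n; i++)
        if (strcmp(t->e[i].key, key) == 0) return t->e[i].value;
    return NULL;
}

/* Insert or overwrite a key. Returns -1 when the table is full. */
static int conf_set(conf_table *t, const char *key, const char *value)
{
    assert(t != NULL && key != NULL);
    for (size_t i = 0; i < t->n; i++) {
        if (strcmp(t->e[i].key, key) == 0) {
            t->e[i].value = value;
            return 0;
        }
    }
    if (t->n == MAX_ENTRIES) return -1;
    t->e[t->n++] = (conf_entry){ key, value };
    return 0;
}

/* Overlay user settings on the defaults: user values win, keys that only
   exist in the defaults are kept, and keys new to the user file are added. */
static int merge_conf(conf_table *defaults, const conf_table *user)
{
    for (size_t i = 0; i < user->n; i++)
        if (conf_set(defaults, user->e[i].key, user->e[i].value) != 0) return -1;
    return 0;
}
```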

How APISIX gets etcd notifications

APISIX stores each type of watched configuration in etcd under its own prefix. There are currently 11 types:

- /apisix/consumers/: APISIX abstracts downstream API callers as consumers;

- /apisix/global_rules/: global rules that apply to all requests;

- /apisix/plugin_configs/: plugin configurations that can be reused across different routes;

- /apisix/plugin_metadata/: metadata of some plugins;

- /apisix/plugins/: list of all plugins;

- /apisix/proto/: when proxying gRPC, some plugins need to transcode the protocol content, and this configuration stores the protobuf message definitions;

- /apisix/routes/: route information, the entry point for matching HTTP requests. A route can specify upstream servers directly or mount a service or an upstream;

- /apisix/services/: the common parts of similar routes can be abstracted into a service, to which plugins can then be attached;

- /apisix/ssl/: SSL certificate public and private keys and related matching rules;

- /apisix/stream_routes/: route matching rules for the OSI layer-4 gateway;

- /apisix/upstreams/: abstraction of a group of upstream server hosts.
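Because every type lives under its own prefix, a watcher can tell from the key alone what kind of object changed. The C sketch below assumes keys have the form prefix/type/id, as in /apisix/upstreams/1, and checks the type against the 11 names listed above. parse_conf_key is a hypothetical helper, not part of APISIX.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

static const char *const conf_types[] = {
    "consumers", "global_rules", "plugin_configs", "plugin_metadata",
    "plugins", "proto", "routes", "services", "ssl", "stream_routes",
    "upstreams",
};

/* Split "<prefix>/<type>/<id>" into type and id.
   Returns 0 on success, -1 if the key is outside prefix or the type is unknown. */
static int parse_conf_key(const char *key, const char *prefix,
                          char *type, size_t type_len,
                          char *id, size_t id_len)
{
    assert(key != NULL && prefix != NULL && type != NULL && id != NULL);
    size_t plen = strlen(prefix);
    if (strncmp(key, prefix, plen) != 0 || key[plen] != '/') return -1;

    const char *t = key + plen + 1;
    const char *slash = strchr(t, '/');
    size_t tlen = slash ? (size_t)(slash - t) : strlen(t);
    if (tlen == 0 || tlen >= type_len) return -1;

    int known = 0;
    for (size_t i = 0; i < sizeof conf_types / sizeof conf_types[0]; i++) {
        if (strlen(conf_types[i]) == tlen && strncmp(conf_types[i], t, tlen) == 0) {
            known = 1;
            break;
        }
    }
    if (!known) return -1;

    memcpy(type, t, tlen);
    type[tlen] = '\0';
    /* The id is whatever follows "<type>/"; it is empty for a directory key. */
    snprintf(id, id_len, "%s", slash ? slash + 1 : "");
    return 0;
}
```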

Each type of configuration is processed differently. APISIX therefore abstracts the apisix/core/config_etcd.lua file, which focuses on updating and maintaining the various configurations stored in etcd. During http_init_worker, each type of configuration creates its own config_etcd object:

```lua
function _M.init_worker()
    local err
    plugin_configs, err = core.config.new("/plugin_configs", {
        automatic = true,
        item_schema = core.schema.plugin_config,
        checker = plugin_checker,
    })
end
```

In config_etcd's new function, the _automatic_fetch timer is then registered:

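```lua
function _M.new(key, opts)
    -- ... create obj for this key (elided) ...
    -- ngx_timer_at is a local alias of ngx.timer.at
    ngx_timer_at(0, _automatic_fetch, obj)
    -- ...
end
```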

_automatic_fetch runs the sync_data function over and over, wrapped in xpcall to catch exceptions:

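```lua
local function _automatic_fetch(premature, self)
    -- ... return early if premature, i.e. the worker is exiting (elided) ...
    local ok, err = xpcall(function()
        local ok, err = sync_data(self)
        -- ... error handling (elided) ...
    end, debug.traceback)

    -- re-arm: run again as soon as the event loop gets to it
    ngx_timer_at(0, _automatic_fetch, self)
end
```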

sync_data receives updates through etcd's watch mechanism. Its implementation is analyzed in detail below.

To sum up:

When each Nginx Worker process starts, APISIX uses ngx.timer.at to insert _automatic_fetch into the timer tree. _automatic_fetch calls sync_data, which receives configuration change notifications from etcd through the watch mechanism. This way, every Nginx node and every Worker process keeps the latest configuration. The design has another clear advantage: configuration from etcd is loaded directly into each Nginx Worker process. Requests can use the new configuration immediately, with no configuration synchronized between processes, which is simpler than running a separate agent process!
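The re-arming pattern can be modelled outside Nginx. In the C sketch below, timer_at only queues a callback, much like ngx.timer.at(0, ...). run_loop serves one simulated request per iteration, then fires the timers that were due at the start of that iteration. fetch_task imitates _automatic_fetch: it does one unit of work and then schedules itself again. All names are hypothetical, and the max_fetches cap exists only so the demo terminates.

```c
#include <assert.h>
#include <stddef.h>

#define QUEUE_CAP 16

typedef void (*timer_cb)(void *arg);
typedef struct { timer_cb cb; void *arg; } pending_timer;

static pending_timer queue[QUEUE_CAP];
static size_t q_head, q_tail;   /* ring buffer of due (zero-delay) timers */

/* Like ngx.timer.at(0, cb, arg): only enqueues, never runs cb immediately. */
static int timer_at(timer_cb cb, void *arg)
{
    if (q_tail - q_head == QUEUE_CAP) return -1;
    queue[q_tail++ % QUEUE_CAP] = (pending_timer){ cb, arg };
    return 0;
}

/* Each iteration serves one request, then fires only the timers queued
   before this pass; timers re-armed during the pass wait for the next one. */
static void run_loop(int iterations, int *requests_served)
{
    for (int i = 0; i < iterations; i++) {
        (*requests_served)++;
        size_t due = q_tail;
        while (q_head < due) {
            pending_timer t = queue[q_head++ % QUEUE_CAP];
            t.cb(t.arg);
        }
    }
}

typedef struct { int fetches; int max_fetches; } fetch_state;

/* Stand-in for _automatic_fetch: "sync" once, then re-arm itself. */
static void fetch_task(void *arg)
{
    fetch_state *st = arg;
    assert(st != NULL);
    st->fetches++;                 /* here APISIX would call sync_data(self) */
    if (st->fetches < st->max_fetches)
        timer_at(fetch_task, st);
}
```

A timer re-armed during a pass only runs on the next pass. The self-rescheduling callback therefore takes turns with request handling instead of monopolizing the loop.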

The lua-resty-etcd library and HTTP/1.1

How does sync_data receive configuration change messages from etcd? Let's first look at the source of sync_data:

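```lua
local etcd = require("resty.etcd")

-- the client is created from the etcd settings in config.yaml
local etcd_cli, err = etcd.new(etcd_conf)

-- waitdir is defined before sync_data so that the local is in scope there
local function waitdir(etcd_cli, key, modified_index, timeout)
    local opts = {
        start_revision = modified_index,
        timeout = timeout,
        need_cancel = true,
    }
    local res_func, func_err, http_cli = etcd_cli:watchdir(key, opts)
    -- ... read the watch events with res_func() (elided) ...

    if http_cli then
        local res_cancel, err_cancel = etcd_cli:watchcancel(http_cli)
    end
    -- ...
end

local function sync_data(self)
    local dir_res, err = waitdir(self.etcd_cli, self.key,
                                 self.prev_index + 1, self.timeout)
    -- ...
end
```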

The lua-resty-etcd library [7] is what actually communicates with etcd. Its watchdir function receives the notification that etcd sends when the value of any key under the watched directory changes.
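Passing self.prev_index + 1 to waitdir keeps the watch gap-free. Each change in etcd carries a monotonically increasing revision, so asking for everything after the last applied revision neither skips nor repeats a change. The C sketch below models that bookkeeping against an in-memory event list sorted by revision. watch_from and sync_once are hypothetical stand-ins for etcd and sync_data.

```c
#include <assert.h>
#include <stddef.h>

typedef struct { long revision; const char *key; } watch_event;
typedef struct { long prev_index; int applied; } watcher;

/* Stand-in for etcd answering a watch with start_revision = start:
   returns up to max events (sorted by revision) with revision >= start. */
static size_t watch_from(const watch_event *events, size_t n, long start,
                         const watch_event **out, size_t max)
{
    size_t k = 0;
    for (size_t i = 0; i < n && k < max; i++)
        if (events[i].revision >= start) out[k++] = &events[i];
    return k;
}

/* Stand-in for sync_data: watch from prev_index + 1, apply each change and
   advance prev_index so the next watch resumes right after it. */
static size_t sync_once(watcher *w, const watch_event *events, size_t n)
{
    const watch_event *batch[16];
    size_t got = watch_from(events, n, w->prev_index + 1, batch, 16);
    for (size_t i = 0; i < got; i++) {
        assert(batch[i]->revision > w->prev_index);  /* revisions only grow */
        w->applied++;         /* a real watcher would update its cached config here */
        w->prev_index = batch[i]->revision;
    }
    return got;
}
```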

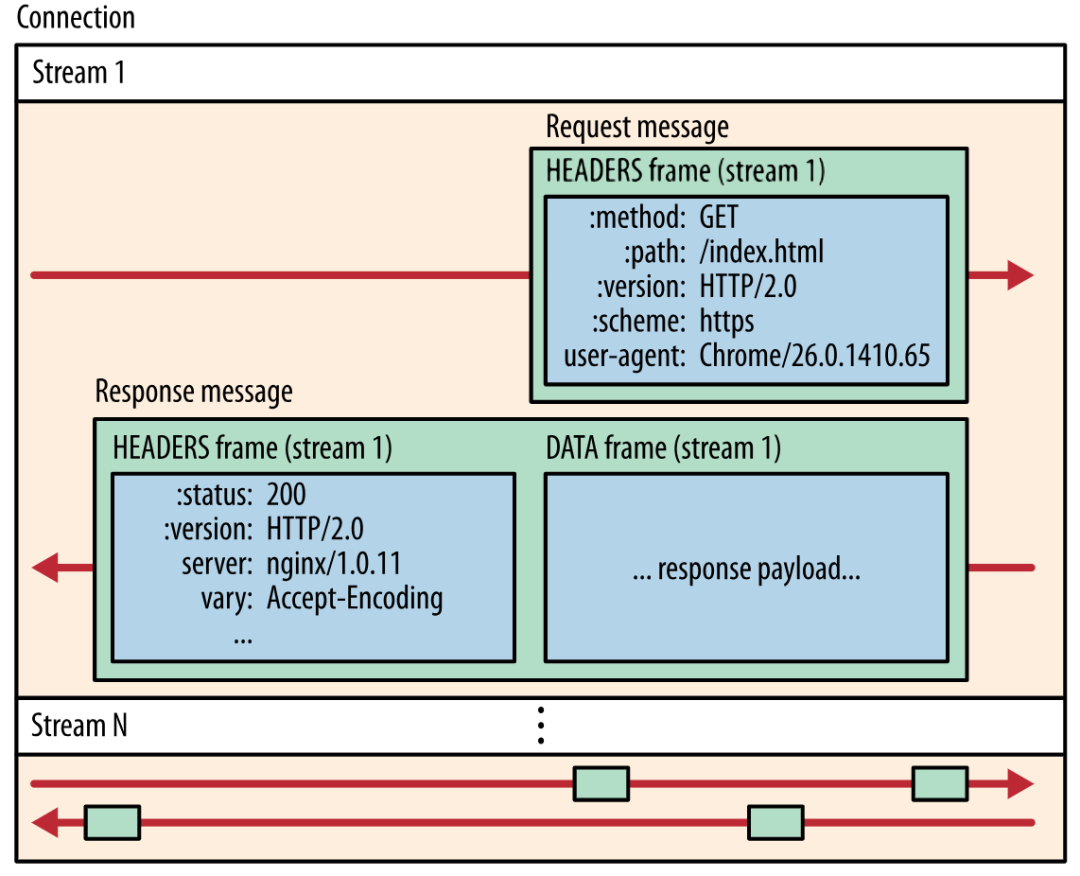

Why is the watchcancel function needed? It stems from a gap in the OpenResty ecosystem. etcd v3 already supports the efficient gRPC protocol, which runs over HTTP/2. As you may know, HTTP/2 supports not only multiplexing but also server push. For details of HTTP/2, see my article In-depth analysis of the HTTP/3 protocol [8], which explains HTTP/2 by comparing it with HTTP/3:

HTTP/2 multiplexing and server push

However, **the Lua ecosystem does not currently support HTTP/2**. The lua-resty-etcd library therefore talks to etcd over the less efficient HTTP/1.1. Watch notifications are received through /v3/watch requests that carry a timeout, and watchcancel cancels such a pending watch. There are two reasons for this situation:

- Nginx positions itself as an edge load balancer and assumes that upstreams sit on a low-latency, high-bandwidth corporate intranet, so it sees no need to support HTTP/2 toward upstreams;

- Since Nginx's upstream mechanism cannot provide HTTP/2 to Lua, Lua would have to implement it on top of cosockets. HTTP/2 is very complex, and there is currently no production-ready HTTP/2 cosocket library.

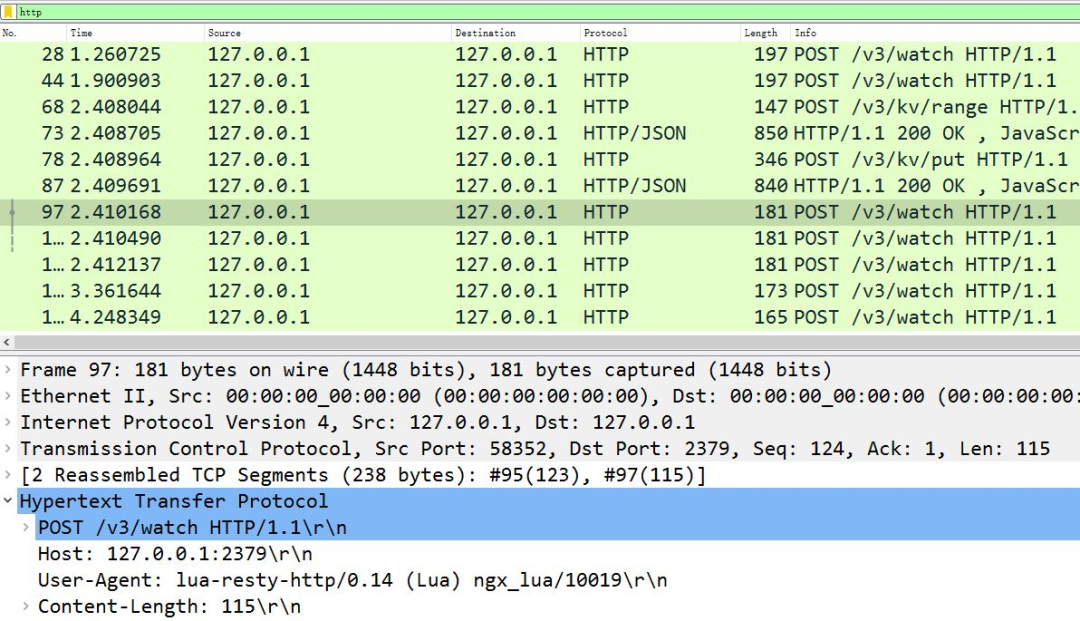

Using lua-resty-etcd over HTTP/1.1 is indeed inefficient. If you capture packets on an APISIX node, you will see frequent POST requests whose URI is /v3/watch and whose body contains the watched directory encoded in Base64:

APISIX communicates with etcd via HTTP/1.1
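etcd's HTTP gateway expects key bytes to be Base64-encoded inside the JSON body. To watch a whole directory, etcd v3 uses a key range. By etcd's convention, the range end for a prefix is the prefix with its last byte incremented; this is what etcdctl --prefix does. We assume watchdir builds its range end the same way. The C sketch below implements both steps; base64_encode and prefix_range_end are hypothetical helper names.

```c
#include <assert.h>
#include <string.h>

static const char b64_alphabet[] =
    "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/";

/* Standard Base64 with '=' padding. out must hold 4 * ((len + 2) / 3) + 1 bytes. */
static void base64_encode(const unsigned char *in, size_t len, char *out)
{
    assert(in != NULL || len == 0);
    size_t o = 0;
    for (size_t i = 0; i < len; i += 3) {
        unsigned v = (unsigned)in[i] << 16;
        if (i + 1 < len) v |= (unsigned)in[i + 1] << 8;
        if (i + 2 < len) v |= (unsigned)in[i + 2];
        out[o++] = b64_alphabet[(v >> 18) & 63];
        out[o++] = b64_alphabet[(v >> 12) & 63];
        out[o++] = i + 1 < len ? b64_alphabet[(v >> 6) & 63] : '=';
        out[o++] = i + 2 < len ? b64_alphabet[v & 63] : '=';
    }
    out[o] = '\0';
}

/* Range end for "every key starting with key": copy key, drop trailing 0xff
   bytes and increment the last remaining byte. Returns the new length, or 0
   if every byte is 0xff (etcd then uses "\0", meaning "to the end of the keyspace"). */
static size_t prefix_range_end(const unsigned char *key, size_t len,
                               unsigned char *out)
{
    memcpy(out, key, len);
    while (len > 0) {
        if (out[len - 1] < 0xff) {
            out[len - 1]++;
            return len;
        }
        len--;
    }
    return 0;
}
```

With these two helpers, a watch on /apisix in the JSON format documented for etcd's HTTP gateway would carry a body such as {"create_request":{"key":"L2FwaXNpeA==","range_end":"L2FwaXNpeQ=="}}. The exact body lua-resty-etcd sends may include further fields.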

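We can confirm this in the implementation of watchdir:

```lua
-- lib/resty/etcd/v3.lua (simplified excerpt; local functions are listed
-- callee-first so that each one is in scope where it is used)

local function http_request_chunk(self, http_cli)
    local endpoint, err = choose_endpoint(self)
    -- launch the TCP (or TLS) connection
    local ok, err = http_cli:connect({
        scheme = endpoint.scheme,
        host = endpoint.host,
        port = endpoint.port,
        ssl_verify = self.ssl_verify,
        ssl_cert_path = self.ssl_cert_path,
        ssl_key_path = self.ssl_key_path,
    })
    return endpoint, err
end

local function request_chunk(self, method, path, opts, timeout)
    local http_cli, err = utils.http.new()
    local endpoint, err = http_request_chunk(self, http_cli)

    -- body, query and headers are built from opts (elided)
    -- send out the HTTP/1.1 request
    local res, err = http_cli:request({
        method = method,
        path = endpoint.api_prefix .. path,
        body = body,
        query = query,
        headers = headers,
    })
    -- ... return a function that reads the streamed response body,
    -- plus http_cli so the caller can cancel the watch (elided) ...
end

local function watch(self, key, attr)
    -- ... build opts (the JSON watch request) from key and attr (elided) ...
    local callback_fun, err, http_cli = request_chunk(self, 'POST', '/watch',
                                                      opts, attr.timeout or self.timeout)
    return callback_fun, err, http_cli
end

function _M.watchdir(self, key, opts)
    -- ... build attr (range end, start revision, timeout, ...) from key and opts (elided) ...
    return watch(self, key, attr)
end
```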

So in every Worker process, APISIX uses ngx.timer.at and the lua-resty-etcd library to query etcd repeatedly, ensuring that each Worker process holds the latest configuration.
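Each watch is an HTTP/1.1 request whose response stays open and streams events, so the body arrives without a Content-Length. The usual HTTP/1.1 framing for such a response is chunked transfer encoding, and the name request_chunk hints at it. The C sketch below decodes a complete chunked body held in memory and ignores chunk extensions and trailers. Real clients read incrementally from the socket; lua-resty-etcd appears to rely on lua-resty-http (via utils.http) for that. decode_chunked, collector and collect are hypothetical names.

```c
#include <assert.h>
#include <string.h>

static int hex_value(char c)
{
    if (c >= '0' && c <= '9') return c - '0';
    if (c >= 'a' && c <= 'f') return c - 'a' + 10;
    if (c >= 'A' && c <= 'F') return c - 'A' + 10;
    return -1;
}

/* Calls on_chunk for each data chunk of an HTTP/1.1 chunked body.
   Returns the number of data chunks, or -1 on malformed input. */
static int decode_chunked(const char *body, size_t len,
                          void (*on_chunk)(const char *data, size_t n, void *ctx),
                          void *ctx)
{
    size_t pos = 0;
    int chunks = 0;
    for (;;) {
        /* chunk-size line: hex digits followed by CRLF */
        size_t size = 0, digits = 0;
        int h;
        while (pos < len && (h = hex_value(body[pos])) >= 0) {
            size = size * 16 + (size_t)h;
            pos++;
            digits++;
        }
        if (digits == 0 || pos + 2 > len || body[pos] != '\r' || body[pos + 1] != '\n')
            return -1;
        pos += 2;
        if (size == 0)                       /* last-chunk: end of the body */
            return chunks;
        /* chunk data must be followed by CRLF */
        if (size > len - pos || len - pos - size < 2 ||
            body[pos + size] != '\r' || body[pos + size + 1] != '\n')
            return -1;
        on_chunk(body + pos, size, ctx);
        chunks++;
        pos += size + 2;
    }
}

/* Example callback: appends every chunk to a fixed buffer. */
typedef struct { char buf[256]; size_t n; } collector;

static void collect(const char *data, size_t n, void *ctx)
{
    collector *c = ctx;
    assert(c->n + n < sizeof c->buf);
    memcpy(c->buf + c->n, data, n);
    c->n += n;
    c->buf[c->n] = '\0';
}
```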

Remote changes to APISIX configuration and plugins

Next, let's look at how to modify the configuration in etcd remotely.

We could, of course, modify the corresponding keys directly through etcd's gRPC interface and let the Nginx cluster update itself through the watch mechanism described above. However, that is very risky: the configuration would not be validated, so data that does not fit the Nginx cluster could end up in etcd!

Modifying the configuration through Nginx's /apisix/admin/ interface

APISIX provides the following mechanism. You send the request to any Nginx node, Lua code in its Worker process validates it, and the configuration is then written to etcd through the /v3/kv/put interface. Let's see how APISIX implements this.

First, the nginx.conf generated by make run listens on port 9080, which can be changed through apisix.node_listen in config.yaml. When apisix.enable_admin is set to true, nginx.conf contains the following configuration:

```nginx
server {
    listen 9080 default_server reuseport;

    location /apisix/admin {
        content_by_lua_block {
            apisix.http_admin()
        }
    }
}
```

This way, /apisix/admin requests received by Nginx are handled by the http_admin function:

```lua
-- apisix/init.lua
function _M.http_admin()
    local ok = router:dispatch(get_var("uri"), {method = get_method()})
end
```

For the APIs that the admin interface supports, see the GitHub documentation [9]. Depending on the method and URI, dispatch runs a different handler, according to the following table:

```lua
-- apisix/admin/init.lua
local uri_route = {
    {
        paths = [[/apisix/admin/*]],
        methods = {"GET", "PUT", "POST", "DELETE", "PATCH"},
        handler = run,
    },
    {
        paths = [[/apisix/admin/stream_routes/*]],
        methods = {"GET", "PUT", "POST", "DELETE", "PATCH"},
        handler = run_stream,
    },
    {
        paths = [[/apisix/admin/plugins/list]],
        methods = {"GET"},
        handler = get_plugins_list,
    },
    {
        paths = reload_event,
        methods = {"PUT"},
        handler = post_reload_plugins,
    },
}
```
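The uri_route table only declares paths, methods and handlers; lua-resty-radixtree does the actual matching. As a rough model, the C sketch below assumes that the most specific (longest) matching path wins. That rule is what lets /apisix/admin/stream_routes/* take precedence over /apisix/admin/*. The handlers are stubs named after the Lua ones, and method_allowed, dispatch and the stub bodies are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

typedef const char *(*admin_handler)(void);

/* Stubs standing in for the Lua handlers of the same names. */
static const char *run(void)              { return "run"; }
static const char *run_stream(void)       { return "run_stream"; }
static const char *get_plugins_list(void) { return "get_plugins_list"; }

typedef struct {
    const char   *path;      /* a trailing '*' means prefix match */
    const char   *methods;   /* space-separated list */
    admin_handler handler;
} uri_route;

static const uri_route routes[] = {
    { "/apisix/admin/*",               "GET PUT POST DELETE PATCH", run },
    { "/apisix/admin/stream_routes/*", "GET PUT POST DELETE PATCH", run_stream },
    { "/apisix/admin/plugins/list",    "GET",                       get_plugins_list },
};

static int method_allowed(const char *methods, const char *method)
{
    size_t m = strlen(method);
    for (const char *p = methods; *p; ) {
        const char *sp = strchr(p, ' ');
        size_t n = sp ? (size_t)(sp - p) : strlen(p);
        if (n == m && strncmp(p, method, m) == 0) return 1;
        p += n;
        if (*p == ' ') p++;
    }
    return 0;
}

/* Returns the handler of the most specific matching route, or NULL. */
static admin_handler dispatch(const char *uri, const char *method)
{
    assert(uri != NULL && method != NULL);
    admin_handler best = NULL;
    size_t best_score = 0;
    for (size_t i = 0; i < sizeof routes / sizeof routes[0]; i++) {
        const char *path = routes[i].path;
        size_t plen = strlen(path);
        int matched;
        size_t score;
        if (plen > 0 && path[plen - 1] == '*') {
            matched = strncmp(uri, path, plen - 1) == 0;
            score = plen - 1;
        } else {
            matched = strcmp(uri, path) == 0;
            score = plen + 1;   /* an exact match beats a prefix of equal length */
        }
        if (matched && score > best_score && method_allowed(routes[i].methods, method)) {
            best = routes[i].handler;
            best_score = score;
        }
    }
    return best;
}
```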

For example, create an upstream by sending a PUT request to /apisix/admin/upstreams/1:

```shell
$ curl "http://127.0.0.1:9080/apisix/admin/upstreams/1" -H "X-API-KEY: edd1c9f034335f136f87ad84b625c8f1" -X PUT -d '
> {
>     "type": "roundrobin",
>     "nodes": {
>         "httpbin.org:80": 1
>     }
> }'
{"action":"set","node":{"key":"\/apisix\/upstreams\/1","value":{"hash_on":"vars","nodes":{"httpbin.org:80":1},"create_time":1627982128,"update_time":1627982128,"scheme":"http","type":"roundrobin","pass_host":"pass","id":"1"}}}
```

You will then see the following line in error.log. To see it, nginx_config.error_log_level in config.yaml must be set to info:

```
2021/08/03 17:15:28 [info] 16437#16437: *23572 [lua] init.lua:130: handler(): uri: ["","apisix","admin","upstreams","1"], client: 127.0.0.1, server: _, request: "PUT /apisix/admin/upstreams/1 HTTP/1.1", host: "127.0.0.1:9080"
```

This line is printed by the run function in apisix/admin/init.lua, which is selected through the uri_route table above. Let's look at the run function:

```lua
-- apisix/admin/init.lua
local function run()
    local uri_segs = core.utils.split_uri(ngx.var.uri)
    core.log.info("uri: ", core.json.delay_encode(uri_segs))

    -- "/apisix/admin/upstreams/1" -> {"", "apisix", "admin", "upstreams", "1"}
    local seg_res, seg_id = uri_segs[4], uri_segs[5]
    local seg_sub_path = core.table.concat(uri_segs, "/", 6)

    -- ... method, req_body and uri_args are read from the request (elided) ...
    local resource = resources[seg_res]
    local code, data = resource[method](seg_id, req_body, seg_sub_path,
                                        uri_args)
    -- ...
end
```
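The indexes 4 and 5 follow from the log line above. Splitting /apisix/admin/upstreams/1 on / keeps a leading empty segment, and Lua tables are 1-based. The C sketch below reproduces that split; it is 0-based, so uri_segs[4] becomes segs[3]. split_uri here is a hypothetical helper, not core.utils.split_uri itself.

```c
#include <assert.h>
#include <string.h>

/* Split buf on '/' in place, keeping empty segments, so that
   "/apisix/admin/upstreams/1" yields "", "apisix", "admin", "upstreams", "1".
   Returns the number of segments, or -1 if there are more than max. */
static int split_uri(char *buf, char **segs, int max)
{
    assert(buf != NULL && segs != NULL && max > 0);
    int n = 0;
    char *p = buf;
    for (;;) {
        if (n == max) return -1;
        segs[n++] = p;
        char *slash = strchr(p, '/');
        if (slash == NULL) return n;
        *slash = '\0';          /* terminate this segment */
        p = slash + 1;
    }
}
```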

The resource[method] call adds another level of indirection, resolved through the resources table:

```lua
-- apisix/admin/init.lua
local resources = {
    routes = require("apisix.admin.routes"),
    services = require("apisix.admin.services"),
    upstreams = require("apisix.admin.upstreams"),
    consumers = require("apisix.admin.consumers"),
    schema = require("apisix.admin.schema"),
    ssl = require("apisix.admin.ssl"),
    plugins = require("apisix.admin.plugins"),
    proto = require("apisix.admin.proto"),
    global_rules = require("apisix.admin.global_rules"),
    stream_routes = require("apisix.admin.stream_routes"),
    plugin_metadata = require("apisix.admin.plugin_metadata"),
    plugin_configs = require("apisix.admin.plugin_config"),
}
```

So the curl request above is handled by the put function in apisix/admin/upstreams.lua:

```lua
-- apisix/admin/upstreams.lua
function _M.put(id, conf)
    -- validate the request data
    local id, err = check_conf(id, conf, true)
    if not id then
        return 400, err
    end

    local key = "/upstreams/" .. id
    core.log.info("key: ", key)

    -- build the configuration data to store in etcd
    local ok, err = utils.inject_conf_with_prev_conf("upstream", key, conf)

    -- write to etcd
    local res, err = core.etcd.set(key, conf)
end

-- apisix/core/etcd.lua
local function set(key, value, ttl)
    -- ... etcd_cli and prefix come from the configured client; when ttl is
    -- given, data is the response of the lease grant for it (elided) ...
    local res, err = etcd_cli:set(prefix .. key, value, {prev_kv = true, lease = data.body.ID})
end
```

Finally, the new configuration is written to etcd. Because Nginx validates the data before writing it, other Worker processes and Nginx nodes receive only correct configuration through the watch mechanism. You can verify this flow in error.log:

```
2021/08/03 17:15:28 [info] 16437#16437: *23572 [lua] upstreams.lua:72: key: /upstreams/1, client: 127.0.0.1, server: _, request: "PUT /apisix/admin/upstreams/1 HTTP/1.1", host: "127.0.0.1:9080"
```
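The essential property of the admin path is ordering: validation happens before anything reaches etcd, so invalid data never becomes visible to watchers. The C sketch below shows that shape. It uses a deliberately tiny, hypothetical schema: the type must be roundrobin or chash, and node weights must be non-negative. A one-slot store stands in for etcd. APISIX itself validates against its full JSON schema in check_conf, and check_upstream and put_upstream are hypothetical names.

```c
#include <assert.h>
#include <stddef.h>
#include <stdio.h>
#include <string.h>

typedef struct { const char *host; int weight; } upstream_node;
typedef struct {
    const char          *type;
    const upstream_node *nodes;
    size_t               n_nodes;
} upstream_conf;

/* One-slot stand-in for etcd. */
typedef struct { char key[64]; upstream_conf value; int has_value; } kv_store;

/* Returns NULL when valid, otherwise an error message. */
static const char *check_upstream(const upstream_conf *c)
{
    if (c->type == NULL ||
        (strcmp(c->type, "roundrobin") != 0 && strcmp(c->type, "chash") != 0))
        return "invalid upstream type";
    for (size_t i = 0; i < c->n_nodes; i++)
        if (c->nodes[i].weight < 0) return "node weight must be >= 0";
    return NULL;
}

/* Validate first, then write <prefix>/upstreams/<id>.
   Returns an HTTP-like status: 200 when stored, 400 when rejected. */
static int put_upstream(kv_store *kv, const char *prefix, const char *id,
                        const upstream_conf *c, const char **err)
{
    assert(kv != NULL && prefix != NULL && id != NULL && c != NULL && err != NULL);
    *err = check_upstream(c);
    if (*err != NULL) return 400;      /* invalid data never reaches the store */
    snprintf(kv->key, sizeof kv->key, "%s/upstreams/%s", prefix, id);
    kv->value = *c;
    kv->has_value = 1;
    return 200;
}
```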

Why can a new configuration take effect without a reload?

Let's look at why Nginx Worker processes can use the new configuration immediately after the admin request is executed.

Request matching in open source Nginx relies on three different containers:

- First, the request's Host domain name is matched against the server_name configuration stored in a static hash table. For details, see How are HTTP requests associated with Nginx server {} blocks [10];

- Next, the request URI is matched against the location configuration stored in a static Trie prefix tree. For details, see How do URLs relate to Nginx location configuration blocks [11];

- In both steps, any regular expressions are matched in array order, that is, their order in nginx.conf.

Although this process is very efficient, it is hard-coded into the find_config phase of the Nginx HTTP framework, so **any change takes effect only after nginx -s reload**. APISIX therefore abandons this process entirely!

As nginx.conf shows, requests for any domain name or URI are all handed to the http_access_phase Lua function:

```nginx
server {
    server_name _;

    location / {
        access_by_lua_block {
            apisix.http_access_phase()
        }

        proxy_pass $upstream_scheme://apisix_backend$upstream_uri;
    }
}
```

Inside http_access_phase, the Method, domain name and URI are matched against a radix prefix tree implemented in C. It supports wildcards but not regular expressions, and is provided by the lua-resty-radixtree library [12]. Whenever the routing rules change, the Lua code rebuilds this radix tree:

```lua
function _M.match(api_ctx)
    -- rebuild the router only when the route configuration version changed
    if not cached_version or cached_version ~= user_routes.conf_version then
        uri_router = base_router.create_radixtree_uri_router(user_routes.values,
                                                             uri_routes, false)
        cached_version = user_routes.conf_version
    end
    -- ...
end
```

This way, route changes take effect without a reload. Enabling plugins, changing their parameters and adjusting their order work the same way.
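The conf_version check is a small caching pattern: the compiled router is stored together with the configuration version it was built from, and is rebuilt only when that version changes. The C sketch below isolates the pattern. route_table, router, build_router and router_match are hypothetical, and the "compiled" router is just a copy of the route list standing in for a radix tree.

```c
#include <assert.h>
#include <string.h>

#define MAX_ROUTES 8

typedef struct {
    long        conf_version;        /* bumped on every etcd change */
    const char *uris[MAX_ROUTES];
    int         n;
} route_table;

typedef struct {
    long        cached_version;      /* -1 means "never built" */
    const char *uris[MAX_ROUTES];    /* stand-in for the compiled radix tree */
    int         n;
    int         builds;              /* how many times we rebuilt */
} router;

static void build_router(router *r, const route_table *t)
{
    assert(t->n >= 0 && t->n <= MAX_ROUTES);
    memcpy(r->uris, t->uris, sizeof t->uris);
    r->n = t->n;
    r->builds++;
}

/* Rebuild only when the configuration version moved, then match.
   Returns the index of the route whose URI equals uri, or -1. */
static int router_match(router *r, const route_table *t, const char *uri)
{
    if (r->cached_version != t->conf_version) {
        build_router(r, t);
        r->cached_version = t->conf_version;
    }
    for (int i = 0; i < r->n; i++)
        if (strcmp(r->uris[i], uri) == 0) return i;
    return -1;
}
```

Matching stays cheap on the hot path because the only per-request overhead is one integer comparison; the expensive rebuild happens once per configuration change.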

Finally, there is Script, which is mutually exclusive with Plugin. The dynamic adjustments above only change configuration, but LuaJIT offers another powerful tool: loadstring, which turns a string into Lua code. So once Lua code is stored in etcd as a Script, it can be delivered to Nginx and used to process requests.

Summary

Managing an Nginx cluster requires a centralized configuration component. etcd, highly reliable and equipped with a watch push mechanism, is undoubtedly the most suitable choice! Although the OpenResty ecosystem has no gRPC client yet, having each Worker process synchronize etcd configuration directly over HTTP/1.1 is still a good solution.

Dynamically modifying Nginx configuration hinges on two points: Lua is far more flexible than nginx.conf syntax, and Lua code can be loaded from external data through loadstring! To keep route matching fast, APISIX implements the radix prefix tree in C and matches requests by Host, Method and URI. This gains performance while preserving dynamic configuration.

APISIX has many other excellent designs, but this article covers only dynamic management of Nginx clusters. The next article will analyze the design of Lua plugins.

References

[1] Orange: https://github.com/orlabs/orange

[2] Kong: https://konghq.com/

[3] Red-black tree: https://zh.wikipedia.org/zh-hans/红黑树

[4] ngx.timer.at: https://github.com/openresty/lua-nginx-module#ngxtimerat

[5] Learning the customization instructions of nginx modules from general rules: https://www.taohui.pub/2020/12/23/nginx/ Learn the custom instructions of nginx module from the general rules/

[6] lua-resty-template: https://github.com/bungle/lua-resty-template

[7] lua-resty-etcd: https://github.com/api7/lua-resty-etcd

[8] In-depth analysis of the HTTP/3 protocol: https://www.taohui.pub/2021/02/04/ Network protocol / in-depth analysis of HTTP3 protocol/

[9] GitHub documentation: https://github.com/apache/apisix/blob/release/2.8/docs/zh/latest/admin-api.md

[10] How are HTTP requests associated with Nginx server {} blocks: https://www.taohui.pub/2021/08/09/nginx/HTTP How are requests associated with Nginx server blocks/

[11] How do URLs relate to Nginx location configuration blocks: https://www.taohui.pub/2021/08/09/nginx/URL How is the location configuration block associated/

[12] lua-resty-radixtree: https://github.com/api7/lua-resty-radixtree