1. Custom thread pool

A thread is a system resource, and creating one consumes memory (each thread needs its own stack, among other things). If a new thread is created for every task under high concurrency, the threads can occupy a great deal of memory and may even cause an out-of-memory error. Nor can the number of threads grow without limit: with too many threads, frequent context switching becomes a heavy burden in its own right. For these two reasons, it is better to reuse existing threads than to create a new one for every task.

First, the blocking queue:

@Slf4j

class BlockingQueue<T> {

/**

* Default blocking task queue size

*/

private static final Integer DEFAULT_QUEUE_CAPACITY = 5;

/**

* Blocking queue

*/

private Queue<T> queue;

/**

* The blocking queue may have multiple thread operations, so the thread safety needs to be guaranteed, so the lock needs to be used

*/

private final ReentrantLock lock = new ReentrantLock();

/**

* If the queue is full, no more tasks can be added to it, and the thread adding the task needs to be blocked

*/

private final Condition putWaitSet = lock.newCondition();

/**

* If the queue is empty, the task cannot be retrieved, and the thread that fetches the task needs to be blocked

*/

private final Condition getWaitSet = lock.newCondition();

/**

* Blocking task queue capacity

*/

private final int capacity;

/**

* No-argument constructor

*/

public BlockingQueue() {

this.capacity = DEFAULT_QUEUE_CAPACITY;

initQueue();

}

/**

* Constructor with an initial capacity

*

* @param capacity Custom capacity size

*/

public BlockingQueue(int capacity) {

this.capacity = capacity;

initQueue();

}

/**

* Create task queue

*/

private void initQueue() {

queue = new ArrayDeque<>(this.capacity);

}

/**

* Block get task without timeout

*

* @return T Task object

*/

public T get() {

lock.lock();

try {

while (queue.size() == 0) {

try {

getWaitSet.await();

} catch (InterruptedException e) {

e.printStackTrace();

}

}

T t = queue.poll();

putWaitSet.signalAll();

return t;

} finally {

lock.unlock();

}

}

/**

* Block get task with timeout

*

* @param timeout Timeout

* @param timeUnit Timeout unit

* @return T Task object

*/

public T get(long timeout, TimeUnit timeUnit) {

lock.lock();

try {

long nanosTime = timeUnit.toNanos(timeout);

while (queue.size() == 0) {

try {

if (nanosTime <= 0) {

return null;

}

nanosTime = getWaitSet.awaitNanos(nanosTime);

} catch (InterruptedException e) {

e.printStackTrace();

}

}

T t = queue.poll();

putWaitSet.signalAll();

return t;

} finally {

lock.unlock();

}

}

/**

* Block add task

*

* @param t Task object

*/

public void put(T t) {

lock.lock();

try {

while (queue.size() == capacity) {

try {

log.info("task:{} Waiting to join the blocking task queue", t);

putWaitSet.await();

} catch (InterruptedException e) {

e.printStackTrace();

}

}

queue.add(t);

getWaitSet.signalAll();

log.info("task:{} Join blocking task queue", t);

} finally {

lock.unlock();

}

}

/**

* Get task queue size

*

* @return int Task queue size

*/

public int size() {

lock.lock();

try {

return queue.size();

} finally {

lock.unlock();

}

}

}
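For comparison, the JDK's own java.util.concurrent.ArrayBlockingQueue offers the same kind of blocking and timed operations as the hand-written queue above. The sketch below is only an illustration (the class BoundedQueueDemo and its helper methods are made up for this example): a timed offer gives up when the queue stays full, and a timed poll returns null when the queue stays empty, which is the behaviour get(timeout, timeUnit) aims for.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.TimeUnit;

final class BoundedQueueDemo {

    /** Offers `attempts` items to a queue of the given capacity and returns how many were accepted. */
    static int acceptedOffers(int capacity, int attempts) {
        ArrayBlockingQueue<Integer> queue = new ArrayBlockingQueue<>(capacity);
        int accepted = 0;
        try {
            for (int i = 0; i < attempts; i++) {
                // Waits up to 50 ms for free space, then gives up and returns false.
                if (queue.offer(i, 50, TimeUnit.MILLISECONDS)) {
                    accepted++;
                }
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // keep the interrupt visible to the caller
        }
        return accepted;
    }

    /** Polls an empty queue with a timeout; nobody produces, so the result is null. */
    static Integer pollEmpty() {
        ArrayBlockingQueue<Integer> queue = new ArrayBlockingQueue<>(1);
        try {
            return queue.poll(50, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return null;
        }
    }

    public static void main(String[] args) {
        System.out.println(acceptedOffers(2, 5)); // 2: only two items fit
        System.out.println(pollEmpty());          // null: timed out on an empty queue
    }
}
```

Note that this sketch restores the interrupt flag instead of printing the stack trace, so that a caller can still notice the interruption.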

Then there is the custom thread pool

@Slf4j

class ThreadPool {

/**

* Blocked task queue

*/

private final BlockingQueue<Runnable> blockingQueue;

/**

* Worker thread

*/

private final Set<Worker> workers;

/**

* Default number of worker threads

*/

private static final int DEFAULT_POOL_SIZE = 5;

/**

* Default number of blocked task queue tasks

*/

private static final int DEFAULT_QUEUE_SIZE = 5;

/**

* Default waiting time unit

*/

private static final TimeUnit DEFAULT_TIME_UNIT = TimeUnit.SECONDS;

/**

* Default blocking wait time

*/

private static final int DEFAULT_TIMEOUT = 5;

/**

* Number of worker threads

*/

private final int poolSize;

/**

* Maximum number of task queue tasks

*/

private final int queueSize;

/**

* Waiting time unit

*/

private final TimeUnit timeUnit;

/**

* Blocking waiting time

*/

private final int timeout;

/**

* No-argument constructor

*/

public ThreadPool() {

poolSize = DEFAULT_POOL_SIZE;

queueSize = DEFAULT_QUEUE_SIZE;

timeUnit = DEFAULT_TIME_UNIT;

timeout = DEFAULT_TIMEOUT;

blockingQueue = new BlockingQueue<>(queueSize);

workers = new HashSet<>(poolSize);

}

/**

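* Constructor with parameters

*

* @param queueSize Maximum number of tasks in the blocking task queue

* @param poolSize Number of worker threads

* @param timeUnit Unit of the wait timeout

* @param timeout How long an idle worker waits for a task before exiting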

*/

public ThreadPool(int queueSize, int poolSize, TimeUnit timeUnit, int timeout) {

this.poolSize = poolSize;

this.queueSize = queueSize;

this.timeUnit = timeUnit;

this.timeout = timeout;

this.blockingQueue = new BlockingQueue<>(queueSize);

this.workers = new HashSet<>(poolSize);

}

/**

* Perform a task

*

* @param task Task object

*/

public void execute(Runnable task) {

synchronized (workers) {

if (workers.size() < poolSize) {

Worker worker = new Worker(task);

log.info("Create a new task worker thread: {} ,Perform tasks:{}", worker, task);

workers.add(worker);

worker.start();

} else {

blockingQueue.put(task);

}

}

}

/**

* Wrapper class for worker thread

*/

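// @ToString rather than @Data: @Data would derive equals/hashCode from the mutable task field,

// so workers.remove(this) could miss the entry once task has been set to null

@ToString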

@NoArgsConstructor

@AllArgsConstructor

class Worker extends Thread {

private Runnable task;

@Override

public void run() {

while (task != null || (task = blockingQueue.get(timeout, timeUnit)) != null) {

try {

log.info("Executing:{}", task);

task.run();

} catch (Exception e) {

e.printStackTrace();

} finally {

task = null;

}

}

synchronized (workers) {

log.info("Worker thread removed:{}", this);

workers.remove(this);

}

}

}

}
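One detail of the workers set deserves care: a HashSet locates elements by hashCode, so an element whose hashCode changes after insertion can no longer be removed. That would happen if a worker's equals/hashCode were derived from its mutable task field, which is what Lombok's @Data generates by default. The following sketch reproduces the effect without Lombok; the classes FieldHashedWorker and IdentityWorker are hypothetical stand-ins, not part of the pool above.

```java
import java.util.HashSet;
import java.util.Objects;
import java.util.Set;

final class WorkerSetDemo {

    /** Equality based on a mutable field, as a field-based generator would produce. */
    static final class FieldHashedWorker {
        Object task;

        FieldHashedWorker(Object task) { this.task = task; }

        @Override
        public boolean equals(Object o) {
            return o instanceof FieldHashedWorker
                    && Objects.equals(task, ((FieldHashedWorker) o).task);
        }

        @Override
        public int hashCode() { return Objects.hashCode(task); }
    }

    /** Keeps the identity-based equals/hashCode inherited from Object. */
    static final class IdentityWorker {
        Object task;

        IdentityWorker(Object task) { this.task = task; }
    }

    /** Adds a worker, clears its task (as the run loop does), then tries to remove it. */
    static boolean removeAfterClearingFieldHashed() {
        Set<FieldHashedWorker> workers = new HashSet<>();
        FieldHashedWorker w = new FieldHashedWorker("job");
        workers.add(w);
        w.task = null;            // hashCode changes from "job".hashCode() to 0
        return workers.remove(w); // looks up the new hash and misses the stored entry
    }

    static boolean removeAfterClearingIdentity() {
        Set<IdentityWorker> workers = new HashSet<>();
        IdentityWorker w = new IdentityWorker("job");
        workers.add(w);
        w.task = null;            // the identity hash is unaffected
        return workers.remove(w);
    }

    public static void main(String[] args) {
        System.out.println(removeAfterClearingFieldHashed()); // false
        System.out.println(removeAfterClearingIdentity());    // true
    }
}
```

If a pool counted its workers through such a set, a finished worker would still be counted. Leaving equals/hashCode at their identity defaults avoids this.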

Test

@Slf4j

public class TestPool {

public static void main(String[] args) {

ThreadPool pool = new ThreadPool(10, 2, TimeUnit.SECONDS, 2);

for (int i = 0; i < 15; i++) {

int j = i;

pool.execute(() -> {

try {

TimeUnit.SECONDS.sleep(10);

} catch (InterruptedException e) {

e.printStackTrace();

}

log.info(String.valueOf(j));

});

}

}

}

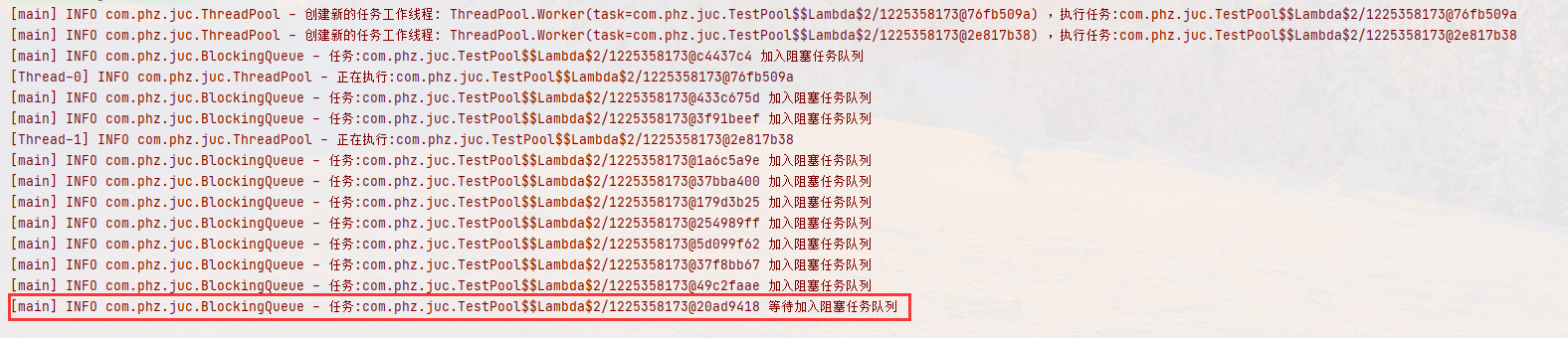

[main] INFO com.phz.juc.ThreadPool - Create a new task worker thread: ThreadPool.Worker(task=com.phz.juc.TestPool$$Lambda$2/1225358173@76fb509a) ,Perform tasks:com.phz.juc.TestPool$$Lambda$2/1225358173@76fb509a
[main] INFO com.phz.juc.ThreadPool - Create a new task worker thread: ThreadPool.Worker(task=com.phz.juc.TestPool$$Lambda$2/1225358173@2e817b38) ,Perform tasks:com.phz.juc.TestPool$$Lambda$2/1225358173@2e817b38
[main] INFO com.phz.juc.BlockingQueue - task:com.phz.juc.TestPool$$Lambda$2/1225358173@c4437c4 Join blocking task queue
[Thread-0] INFO com.phz.juc.ThreadPool - Executing:com.phz.juc.TestPool$$Lambda$2/1225358173@76fb509a
[main] INFO com.phz.juc.BlockingQueue - task:com.phz.juc.TestPool$$Lambda$2/1225358173@433c675d Join blocking task queue
[Thread-1] INFO com.phz.juc.ThreadPool - Executing:com.phz.juc.TestPool$$Lambda$2/1225358173@2e817b38
[main] INFO com.phz.juc.BlockingQueue - task:com.phz.juc.TestPool$$Lambda$2/1225358173@3f91beef Join blocking task queue
[main] INFO com.phz.juc.BlockingQueue - task:com.phz.juc.TestPool$$Lambda$2/1225358173@1a6c5a9e Join blocking task queue
[main] INFO com.phz.juc.BlockingQueue - task:com.phz.juc.TestPool$$Lambda$2/1225358173@37bba400 Join blocking task queue
[main] INFO com.phz.juc.BlockingQueue - task:com.phz.juc.TestPool$$Lambda$2/1225358173@179d3b25 Join blocking task queue
[main] INFO com.phz.juc.BlockingQueue - task:com.phz.juc.TestPool$$Lambda$2/1225358173@254989ff Join blocking task queue
[main] INFO com.phz.juc.BlockingQueue - task:com.phz.juc.TestPool$$Lambda$2/1225358173@5d099f62 Join blocking task queue
[main] INFO com.phz.juc.BlockingQueue - task:com.phz.juc.TestPool$$Lambda$2/1225358173@37f8bb67 Join blocking task queue
[main] INFO com.phz.juc.BlockingQueue - task:com.phz.juc.TestPool$$Lambda$2/1225358173@49c2faae Join blocking task queue
[main] INFO com.phz.juc.BlockingQueue - task:com.phz.juc.TestPool$$Lambda$2/1225358173@20ad9418 Waiting to join the blocking task queue
[Thread-0] INFO com.phz.juc.TestPool - 0
[Thread-1] INFO com.phz.juc.TestPool - 1
[Thread-0] INFO com.phz.juc.ThreadPool - Executing:com.phz.juc.TestPool$$Lambda$2/1225358173@c4437c4
[main] INFO com.phz.juc.BlockingQueue - task:com.phz.juc.TestPool$$Lambda$2/1225358173@20ad9418 Join blocking task queue
[main] INFO com.phz.juc.BlockingQueue - task:com.phz.juc.TestPool$$Lambda$2/1225358173@439f5b3d Waiting to join the blocking task queue
[Thread-1] INFO com.phz.juc.ThreadPool - Executing:com.phz.juc.TestPool$$Lambda$2/1225358173@433c675d
[main] INFO com.phz.juc.BlockingQueue - task:com.phz.juc.TestPool$$Lambda$2/1225358173@439f5b3d Join blocking task queue
[main] INFO com.phz.juc.BlockingQueue - task:com.phz.juc.TestPool$$Lambda$2/1225358173@1d56ce6a Waiting to join the blocking task queue
[Thread-1] INFO com.phz.juc.TestPool - 3
[Thread-0] INFO com.phz.juc.TestPool - 2
[Thread-1] INFO com.phz.juc.ThreadPool - Executing:com.phz.juc.TestPool$$Lambda$2/1225358173@3f91beef
[Thread-0] INFO com.phz.juc.ThreadPool - Executing:com.phz.juc.TestPool$$Lambda$2/1225358173@1a6c5a9e
[main] INFO com.phz.juc.BlockingQueue - task:com.phz.juc.TestPool$$Lambda$2/1225358173@1d56ce6a Join blocking task queue
[Thread-1] INFO com.phz.juc.TestPool - 4
[Thread-0] INFO com.phz.juc.TestPool - 5
[Thread-1] INFO com.phz.juc.ThreadPool - Executing:com.phz.juc.TestPool$$Lambda$2/1225358173@37bba400
[Thread-0] INFO com.phz.juc.ThreadPool - Executing:com.phz.juc.TestPool$$Lambda$2/1225358173@179d3b25
[Thread-1] INFO com.phz.juc.TestPool - 6
[Thread-0] INFO com.phz.juc.TestPool - 7
[Thread-1] INFO com.phz.juc.ThreadPool - Executing:com.phz.juc.TestPool$$Lambda$2/1225358173@254989ff
[Thread-0] INFO com.phz.juc.ThreadPool - Executing:com.phz.juc.TestPool$$Lambda$2/1225358173@5d099f62
[Thread-0] INFO com.phz.juc.TestPool - 9
[Thread-1] INFO com.phz.juc.TestPool - 8
[Thread-0] INFO com.phz.juc.ThreadPool - Executing:com.phz.juc.TestPool$$Lambda$2/1225358173@37f8bb67
[Thread-1] INFO com.phz.juc.ThreadPool - Executing:com.phz.juc.TestPool$$Lambda$2/1225358173@49c2faae
[Thread-0] INFO com.phz.juc.TestPool - 10
[Thread-1] INFO com.phz.juc.TestPool - 11
[Thread-0] INFO com.phz.juc.ThreadPool - Executing:com.phz.juc.TestPool$$Lambda$2/1225358173@20ad9418
[Thread-1] INFO com.phz.juc.ThreadPool - Executing:com.phz.juc.TestPool$$Lambda$2/1225358173@439f5b3d
[Thread-0] INFO com.phz.juc.TestPool - 12
[Thread-1] INFO com.phz.juc.TestPool - 13
[Thread-0] INFO com.phz.juc.ThreadPool - Executing:com.phz.juc.TestPool$$Lambda$2/1225358173@1d56ce6a
[Thread-1] INFO com.phz.juc.ThreadPool - Worker thread removed:ThreadPool.Worker(task=null)
[Thread-0] INFO com.phz.juc.TestPool - 14
[Thread-0] INFO com.phz.juc.ThreadPool - Worker thread removed:ThreadPool.Worker(task=null)

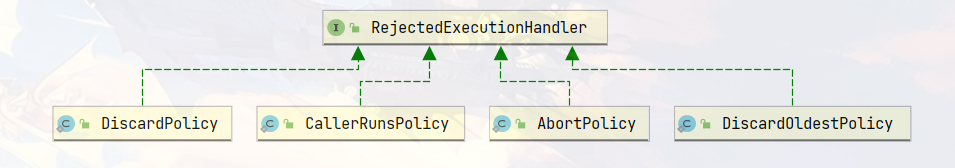

2. Rejection policy

What happens if the task to be performed takes a long time?

@Slf4j

public class TestPool {

public static void main(String[] args) {

ThreadPool pool = new ThreadPool(10, 2, TimeUnit.SECONDS, 2);

for (int i = 0; i < 20; i++) {

int j = i;

pool.execute(() -> {

try {

TimeUnit.SECONDS.sleep(1000);

} catch (InterruptedException e) {

e.printStackTrace();

}

log.info(String.valueOf(j));

});

}

}

}

We can see that the main thread is stuck waiting here and cannot go on to do anything else. What should happen in this situation: throw an exception, refuse the task, keep waiting indefinitely, or have the caller run the task itself? There are many options, and coding them directly would leave a pile of if branches. Instead, we can use the strategy pattern to abstract over all the rejection policies:

@FunctionalInterface

interface RejectPolicy<T> {

/**

* Handle a task that cannot be added because the queue is full

*

* @param queue The task queue

* @param task Task object

*/

void reject(ArrayDeque<T> queue, T task);

}

Modify ThreadPool accordingly

@Slf4j

class ThreadPool {

/**

* Blocked task queue

*/

private final BlockingQueue<Runnable> blockingQueue;

/**

* Worker thread

*/

private final Set<Worker> workers;

/**

* Default number of working threads

*/

private static final int DEFAULT_POOL_SIZE = 5;

/**

* Default number of blocked task queue tasks

*/

private static final int DEFAULT_QUEUE_SIZE = 5;

/**

* Default waiting time unit

*/

private static final TimeUnit DEFAULT_TIME_UNIT = TimeUnit.SECONDS;

/**

* Default blocking wait time

*/

private static final int DEFAULT_TIMEOUT = 5;

/**

* Number of worker threads

*/

private final int poolSize;

/**

* Maximum number of task queue tasks

*/

private final int queueSize;

/**

* Waiting time unit

*/

private final TimeUnit timeUnit;

/**

* Blocking waiting time

*/

private final int timeout;

/**

* Reject strategy

*/

private final RejectPolicy<Runnable> rejectPolicy;

/**

* No-argument constructor

*/

public ThreadPool() {

poolSize = DEFAULT_POOL_SIZE;

queueSize = DEFAULT_QUEUE_SIZE;

timeUnit = DEFAULT_TIME_UNIT;

timeout = DEFAULT_TIMEOUT;

blockingQueue = new BlockingQueue<>(queueSize);

workers = new HashSet<>(poolSize);

// By default, the calling thread runs the task itself

rejectPolicy = (queue, task) -> task.run();

}

/**

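* Constructor with parameters

*

* @param queueSize Maximum number of tasks in the blocking task queue

* @param poolSize Number of worker threads

* @param timeUnit Unit of the wait timeout

* @param timeout How long an idle worker waits for a task before exiting

* @param rejectPolicy Policy applied when the task queue is full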

*/

public ThreadPool(int queueSize, int poolSize, TimeUnit timeUnit, int timeout, RejectPolicy<Runnable> rejectPolicy) {

this.poolSize = poolSize;

this.queueSize = queueSize;

this.timeUnit = timeUnit;

this.timeout = timeout;

this.blockingQueue = new BlockingQueue<>(queueSize);

this.workers = new HashSet<>(poolSize);

this.rejectPolicy = rejectPolicy;

}

/**

* Perform a task

*

* @param task Task object

*/

public void execute(Runnable task) {

synchronized (workers) {

if (workers.size() < poolSize) {

Worker worker = new Worker(task);

log.info("Create a new task worker thread: {} ,Perform tasks:{}", worker, task);

workers.add(worker);

worker.start();

} else {

blockingQueue.tryPut(rejectPolicy, task);

}

}

}

/**

* Wrapper class for worker thread

*/

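// @ToString rather than @Data: @Data would derive equals/hashCode from the mutable task field,

// so workers.remove(this) could miss the entry once task has been set to null

@ToString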

@NoArgsConstructor

@AllArgsConstructor

class Worker extends Thread {

private Runnable task;

@Override

public void run() {

while (task != null || (task = blockingQueue.get(timeout, timeUnit)) != null) {

try {

log.info("Executing:{}", task);

task.run();

} catch (Exception e) {

e.printStackTrace();

} finally {

task = null;

}

}

synchronized (workers) {

log.info("Worker thread removed:{}", this);

workers.remove(this);

}

}

}

}

The blocking queue also needs to be modified

@Slf4j

class BlockingQueue<T> {

/**

* Default blocking task queue size

*/

private static final Integer DEFAULT_QUEUE_CAPACITY = 5;

/**

* Blocking queue

*/

private ArrayDeque<T> queue;

/**

* The blocking queue may have multiple thread operations, so the thread safety needs to be guaranteed, so the lock needs to be used

*/

private final ReentrantLock lock = new ReentrantLock();

/**

* If the queue is full, no more tasks can be added to it, and the thread adding the task needs to be blocked

*/

private final Condition putWaitSet = lock.newCondition();

/**

* If the queue is empty, the task cannot be retrieved, and the thread that fetches the task needs to be blocked

*/

private final Condition getWaitSet = lock.newCondition();

/**

* Blocking task queue capacity

*/

private final int capacity;

/**

* No-argument constructor

*/

public BlockingQueue() {

this.capacity = DEFAULT_QUEUE_CAPACITY;

initQueue();

}

/**

* Constructor with an initial capacity

*

* @param capacity Custom capacity size

*/

public BlockingQueue(int capacity) {

this.capacity = capacity;

initQueue();

}

/**

* Create task queue

*/

private void initQueue() {

queue = new ArrayDeque<>(this.capacity);

}

/**

* Block get task without timeout

*

* @return T Task object

*/

public T get() {

lock.lock();

try {

while (queue.size() == 0) {

try {

getWaitSet.await();

} catch (InterruptedException e) {

e.printStackTrace();

}

}

T t = queue.poll();

putWaitSet.signalAll();

return t;

} finally {

lock.unlock();

}

}

/**

* Block get task with timeout

*

* @param timeout Timeout

* @param timeUnit Timeout unit

* @return T Task object

*/

public T get(long timeout, TimeUnit timeUnit) {

lock.lock();

try {

long nanosTime = timeUnit.toNanos(timeout);

while (queue.size() == 0) {

try {

if (nanosTime <= 0) {

return null;

}

nanosTime = getWaitSet.awaitNanos(nanosTime);

} catch (InterruptedException e) {

e.printStackTrace();

}

}

T t = queue.poll();

putWaitSet.signalAll();

return t;

} finally {

lock.unlock();

}

}

/**

* Block add task

*

* @param t Task object

*/

public void put(T t) {

lock.lock();

try {

while (queue.size() == capacity) {

try {

log.info("task:{} Waiting to join the blocking task queue", t);

putWaitSet.await();

} catch (InterruptedException e) {

e.printStackTrace();

}

}

queue.add(t);

getWaitSet.signalAll();

log.info("task:{} Join blocking task queue", t);

} finally {

lock.unlock();

}

}

/**

* Add task with timeout blocking

*

* @param t Task object

* @param timeout Timeout duration

* @param timeUnit Timeout duration unit

*/

public boolean put(T t, long timeout, TimeUnit timeUnit) {

lock.lock();

try {

long nanos = timeUnit.toNanos(timeout);

while (queue.size() == capacity) {

try {

if (nanos <= 0) {

log.info("Adding task timed out, cancel adding");

return false;

}

log.info("task:{} Waiting to join the blocking task queue", t);

nanos = putWaitSet.awaitNanos(nanos);

} catch (InterruptedException e) {

e.printStackTrace();

}

}

queue.add(t);

getWaitSet.signalAll();

log.info("task:{} Join blocking task queue", t);

return true;

} finally {

lock.unlock();

}

}

/**

* Get task queue size

*

* @return int Task queue size

*/

public int size() {

lock.lock();

try {

return queue.size();

} finally {

lock.unlock();

}

}

/**

* Add task with reject policy

*

* @param rejectPolicy Reject strategy

* @param task Task object

*/

public void tryPut(RejectPolicy<T> rejectPolicy, T task) {

lock.lock();

try {

if (queue.size() == capacity) {

rejectPolicy.reject(queue, task);

} else {

log.info("Join task queue: {}", task);

queue.add(task);

getWaitSet.signalAll();

}

} finally {

lock.unlock();

}

}

}

Test

ThreadPool pool = new ThreadPool(10, 2, TimeUnit.SECONDS, 2,

// Discard the task

(blockingQueue, task) -> {

log.info("give up {}", task);

}

);

for (int i = 0; i < 20; i++) {

int j = i;

pool.execute(() -> {

try {

TimeUnit.SECONDS.sleep(10);

} catch (InterruptedException e) {

e.printStackTrace();

}

log.info(String.valueOf(j));

});

}

[main] INFO com.phz.juc.ThreadPool - Create a new task worker thread: ThreadPool.Worker(task=com.phz.juc.TestPool$$Lambda$3/2121055098@4d405ef7) ,Perform tasks:com.phz.juc.TestPool$$Lambda$3/2121055098@4d405ef7
[main] INFO com.phz.juc.ThreadPool - Create a new task worker thread: ThreadPool.Worker(task=com.phz.juc.TestPool$$Lambda$3/2121055098@3f91beef) ,Perform tasks:com.phz.juc.TestPool$$Lambda$3/2121055098@3f91beef
[main] INFO com.phz.juc.BlockingQueue - Join task queue: com.phz.juc.TestPool$$Lambda$3/2121055098@1a6c5a9e
[Thread-0] INFO com.phz.juc.ThreadPool - Executing:com.phz.juc.TestPool$$Lambda$3/2121055098@4d405ef7
[Thread-1] INFO com.phz.juc.ThreadPool - Executing:com.phz.juc.TestPool$$Lambda$3/2121055098@3f91beef
[main] INFO com.phz.juc.BlockingQueue - Join task queue: com.phz.juc.TestPool$$Lambda$3/2121055098@37bba400
[main] INFO com.phz.juc.BlockingQueue - Join task queue: com.phz.juc.TestPool$$Lambda$3/2121055098@179d3b25
[main] INFO com.phz.juc.BlockingQueue - Join task queue: com.phz.juc.TestPool$$Lambda$3/2121055098@254989ff
[main] INFO com.phz.juc.BlockingQueue - Join task queue: com.phz.juc.TestPool$$Lambda$3/2121055098@5d099f62
[main] INFO com.phz.juc.BlockingQueue - Join task queue: com.phz.juc.TestPool$$Lambda$3/2121055098@37f8bb67
[main] INFO com.phz.juc.BlockingQueue - Join task queue: com.phz.juc.TestPool$$Lambda$3/2121055098@49c2faae
[main] INFO com.phz.juc.BlockingQueue - Join task queue: com.phz.juc.TestPool$$Lambda$3/2121055098@20ad9418
[main] INFO com.phz.juc.BlockingQueue - Join task queue: com.phz.juc.TestPool$$Lambda$3/2121055098@31cefde0
[main] INFO com.phz.juc.BlockingQueue - Join task queue: com.phz.juc.TestPool$$Lambda$3/2121055098@439f5b3d
[main] INFO com.phz.juc.TestPool - give up com.phz.juc.TestPool$$Lambda$3/2121055098@1d56ce6a
[main] INFO com.phz.juc.TestPool - give up com.phz.juc.TestPool$$Lambda$3/2121055098@5197848c
[main] INFO com.phz.juc.TestPool - give up com.phz.juc.TestPool$$Lambda$3/2121055098@17f052a3
[main] INFO com.phz.juc.TestPool - give up com.phz.juc.TestPool$$Lambda$3/2121055098@2e0fa5d3
[main] INFO com.phz.juc.TestPool - give up com.phz.juc.TestPool$$Lambda$3/2121055098@5010be6
[main] INFO com.phz.juc.TestPool - give up com.phz.juc.TestPool$$Lambda$3/2121055098@685f4c2e
[main] INFO com.phz.juc.TestPool - give up com.phz.juc.TestPool$$Lambda$3/2121055098@7daf6ecc
[main] INFO com.phz.juc.TestPool - give up com.phz.juc.TestPool$$Lambda$3/2121055098@2e5d6d97
[Thread-0] INFO com.phz.juc.TestPool - 0
[Thread-1] INFO com.phz.juc.TestPool - 1
[Thread-0] INFO com.phz.juc.ThreadPool - Executing:com.phz.juc.TestPool$$Lambda$3/2121055098@1a6c5a9e
[Thread-1] INFO com.phz.juc.ThreadPool - Executing:com.phz.juc.TestPool$$Lambda$3/2121055098@37bba400
[Thread-1] INFO com.phz.juc.TestPool - 3
[Thread-0] INFO com.phz.juc.TestPool - 2
[Thread-1] INFO com.phz.juc.ThreadPool - Executing:com.phz.juc.TestPool$$Lambda$3/2121055098@179d3b25
[Thread-0] INFO com.phz.juc.ThreadPool - Executing:com.phz.juc.TestPool$$Lambda$3/2121055098@254989ff
[Thread-0] INFO com.phz.juc.TestPool - 5
[Thread-1] INFO com.phz.juc.TestPool - 4
[Thread-0] INFO com.phz.juc.ThreadPool - Executing:com.phz.juc.TestPool$$Lambda$3/2121055098@5d099f62
[Thread-1] INFO com.phz.juc.ThreadPool - Executing:com.phz.juc.TestPool$$Lambda$3/2121055098@37f8bb67
[Thread-1] INFO com.phz.juc.TestPool - 7
[Thread-0] INFO com.phz.juc.TestPool - 6
[Thread-1] INFO com.phz.juc.ThreadPool - Executing:com.phz.juc.TestPool$$Lambda$3/2121055098@49c2faae
[Thread-0] INFO com.phz.juc.ThreadPool - Executing:com.phz.juc.TestPool$$Lambda$3/2121055098@20ad9418
[Thread-1] INFO com.phz.juc.TestPool - 8
[Thread-0] INFO com.phz.juc.TestPool - 9
[Thread-1] INFO com.phz.juc.ThreadPool - Executing:com.phz.juc.TestPool$$Lambda$3/2121055098@31cefde0
[Thread-0] INFO com.phz.juc.ThreadPool - Executing:com.phz.juc.TestPool$$Lambda$3/2121055098@439f5b3d
[Thread-1] INFO com.phz.juc.TestPool - 10
[Thread-0] INFO com.phz.juc.TestPool - 11
[Thread-1] INFO com.phz.juc.ThreadPool - Worker thread removed:ThreadPool.Worker(task=null)
[Thread-0] INFO com.phz.juc.ThreadPool - Worker thread removed:ThreadPool.Worker(task=null)

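Other rejection policies. The ones that call put assume the policy is handed the BlockingQueue itself (that is, reject(BlockingQueue<T> queue, T task), invoked from tryPut as rejectPolicy.reject(this, task)) rather than the underlying ArrayDeque:

- The caller runs the task itself

(blockingQueue, task) -> {

task.run();

}

- Wait indefinitely until there is room in the queue

BlockingQueue::put

- Throw an exception

(blockingQueue, task) -> {

throw new RuntimeException("Task execution failed " + task);

}

- Wait with a timeout

(blockingQueue, task) -> {

blockingQueue.put(task, 1500, TimeUnit.MILLISECONDS);

}

- Discard the task

(blockingQueue, task) -> {

log.info("give up {}", task);

}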
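To see how such policies behave side by side, here is a small self-contained sketch. It is not the pool from this article: TinyQueue is a deliberately simplified, hypothetical stand-in with no locking or blocking, and its Policy interface receives the queue wrapper itself so that a policy can call back into it.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.List;

final class RejectPolicyDemo {

    /** Strategy interface: decides what to do with a task the queue cannot hold. */
    @FunctionalInterface
    interface Policy<T> {
        void reject(TinyQueue<T> queue, T task);
    }

    /** Single-threaded bounded queue; just enough to show where the policy hooks in. */
    static final class TinyQueue<T> {
        private final ArrayDeque<T> items = new ArrayDeque<>();
        private final int capacity;

        TinyQueue(int capacity) { this.capacity = capacity; }

        void tryPut(Policy<T> policy, T task) {
            if (items.size() == capacity) {
                policy.reject(this, task); // full: delegate the decision to the policy
            } else {
                items.add(task);
            }
        }

        int size() { return items.size(); }
    }

    /** Caller-runs policy: returns the ids of the tasks that the caller had to run itself. */
    static List<Integer> callerRuns(int capacity, int total) {
        List<Integer> ranByCaller = new ArrayList<>();
        TinyQueue<Runnable> queue = new TinyQueue<>(capacity);
        for (int i = 0; i < total; i++) {
            int id = i;
            queue.tryPut((q, task) -> task.run(), () -> ranByCaller.add(id));
        }
        return ranByCaller;
    }

    /** Throwing policy: returns how many submissions were rejected with an exception. */
    static int countRejected(int capacity, int total) {
        TinyQueue<Runnable> queue = new TinyQueue<>(capacity);
        Policy<Runnable> abort = (q, task) -> {
            throw new IllegalStateException("queue full, size=" + q.size());
        };
        int rejected = 0;
        for (int i = 0; i < total; i++) {
            try {
                queue.tryPut(abort, () -> { });
            } catch (IllegalStateException e) {
                rejected++;
            }
        }
        return rejected;
    }

    public static void main(String[] args) {
        System.out.println(callerRuns(2, 5));    // [2, 3, 4]
        System.out.println(countRejected(2, 5)); // 3
    }
}
```

Because the policy is just a lambda, swapping behaviour needs no change to the queue, which is the point of the strategy pattern here.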

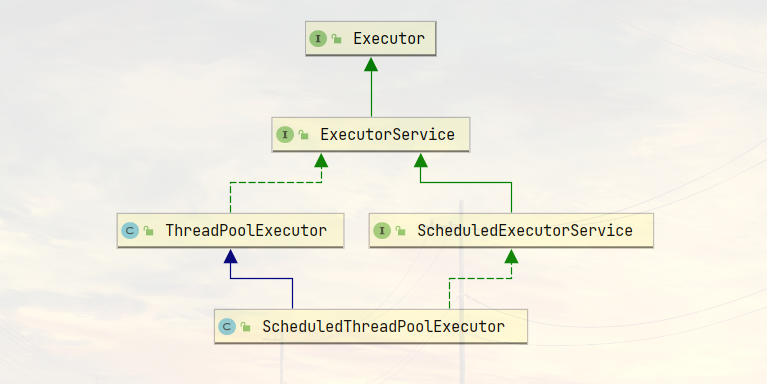

3. ThreadPoolExecutor

3.1 Thread pool status

ThreadPoolExecutor uses the upper 3 bits of an int to hold the thread pool status and the lower 29 bits to hold the number of threads

| State | High 3 bits | Accepts new tasks | Processes queued tasks | Description |
|---|---|---|---|---|
| RUNNING | 111 | Y | Y | |
| SHUTDOWN | 000 | N | Y | Accepts no new tasks, but still processes the tasks remaining in the blocking queue |
| STOP | 001 | N | N | Interrupts tasks in progress and discards the tasks in the blocking queue |
| TIDYING | 010 | - | - | All tasks have finished and the number of active threads is 0; the pool is about to terminate |
| TERMINATED | 011 | - | - | Final state |

private static final int RUNNING = -1 << COUNT_BITS;

private static final int SHUTDOWN = 0 << COUNT_BITS;

private static final int STOP = 1 << COUNT_BITS;

private static final int TIDYING = 2 << COUNT_BITS;

private static final int TERMINATED = 3 << COUNT_BITS;

Compared as signed ints, TERMINATED > TIDYING > STOP > SHUTDOWN > RUNNING (RUNNING's high bits are 111, so its sign bit is 1 and the value is negative). Both pieces of information are stored in a single atomic variable, ctl, which combines the pool state with the thread count so that the two can be updated together with one CAS operation

// rs is the run state (high 3 bits) and wc is the worker count (low 29 bits); merge them into one int

private static int ctlOf(int rs, int wc) { return rs | wc; }

// c is the expected current value; the result of ctlOf is the new value to set

ctl.compareAndSet(c, ctlOf(targetState, workerCountOf(c)));
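The packing can be tried out in isolation. The sketch below rebuilds the bit layout described above in a standalone class; CtlDemo, COUNT_MASK and the shutDown helper are names chosen for this illustration, not a copy of the JDK source.

```java
import java.util.concurrent.atomic.AtomicInteger;

final class CtlDemo {
    // 3 state bits on top, 29 count bits below.
    static final int COUNT_BITS = Integer.SIZE - 3;
    static final int COUNT_MASK = (1 << COUNT_BITS) - 1;

    static final int RUNNING    = -1 << COUNT_BITS;
    static final int SHUTDOWN   =  0 << COUNT_BITS;
    static final int STOP       =  1 << COUNT_BITS;
    static final int TIDYING    =  2 << COUNT_BITS;
    static final int TERMINATED =  3 << COUNT_BITS;

    static int ctlOf(int rs, int wc) { return rs | wc; }
    static int runStateOf(int c)     { return c & ~COUNT_MASK; } // keep the high 3 bits
    static int workerCountOf(int c)  { return c & COUNT_MASK; }  // keep the low 29 bits

    /** Moves the packed value to SHUTDOWN while keeping the worker count, using one CAS. */
    static boolean shutDown(AtomicInteger ctl) {
        int c = ctl.get();
        return ctl.compareAndSet(c, ctlOf(SHUTDOWN, workerCountOf(c)));
    }

    public static void main(String[] args) {
        AtomicInteger ctl = new AtomicInteger(ctlOf(RUNNING, 5));
        System.out.println(workerCountOf(ctl.get()));           // 5
        System.out.println(runStateOf(ctl.get()) == RUNNING);   // true
        System.out.println(shutDown(ctl));                      // true
        System.out.println(runStateOf(ctl.get()) == SHUTDOWN);  // true
        System.out.println(workerCountOf(ctl.get()));           // 5
    }
}
```

Keeping both values in one int is what lets a state change and the worker count stay consistent without taking a lock.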

3.2 Constructor

public ThreadPoolExecutor(int corePoolSize,

int maximumPoolSize,

long keepAliveTime,

TimeUnit unit,

BlockingQueue<Runnable> workQueue,

ThreadFactory threadFactory,

RejectedExecutionHandler handler) {

if (corePoolSize < 0 ||

maximumPoolSize <= 0 ||

maximumPoolSize < corePoolSize ||

keepAliveTime < 0)

throw new IllegalArgumentException();

if (workQueue == null || threadFactory == null || handler == null)

throw new NullPointerException();

this.acc = System.getSecurityManager() == null ?

null :

AccessController.getContext();

this.corePoolSize = corePoolSize;

this.maximumPoolSize = maximumPoolSize;

this.workQueue = workQueue;

this.keepAliveTime = unit.toNanos(keepAliveTime);

this.threadFactory = threadFactory;

this.handler = handler;

}

- corePoolSize: number of core threads (the most threads that are kept alive when idle)

- maximumPoolSize: maximum number of threads (maximumPoolSize minus corePoolSize is the number of emergency threads)

- keepAliveTime: how long an idle emergency thread is kept alive

- unit: time unit of keepAliveTime

- workQueue: blocking queue

- threadFactory: thread factory (lets you give threads meaningful names, which helps debugging)

- handler: rejection policy
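As an illustration of these parameters, the sketch below builds a pool with every constructor argument spelled out and a thread factory that names its threads. The prefix "order-pool", the sizes and the class NamedPoolDemo are arbitrary choices for this example.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ThreadFactory;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

final class NamedPoolDemo {

    /** Thread factory that names threads prefix-1, prefix-2, ... */
    static ThreadFactory named(String prefix) {
        AtomicInteger counter = new AtomicInteger(1);
        return runnable -> new Thread(runnable, prefix + "-" + counter.getAndIncrement());
    }

    /** Runs one task on the pool and returns the name of the worker thread that ran it. */
    static String workerName() {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                1,                                    // corePoolSize
                2,                                    // maximumPoolSize (at most 1 emergency thread)
                30, TimeUnit.SECONDS,                 // keepAliveTime and unit
                new ArrayBlockingQueue<>(10),         // workQueue (bounded)
                named("order-pool"),                  // threadFactory
                new ThreadPoolExecutor.AbortPolicy()  // handler
        );
        try {
            Callable<String> task = () -> Thread.currentThread().getName();
            return pool.submit(task).get();
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(workerName()); // order-pool-1
    }
}
```

With names like these, a thread dump or log line shows at a glance which pool a thread belongs to.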

Emergency threads work as follows. If the core threads are all busy and the blocking queue is full, the pool checks whether it may still create emergency threads; if so, an emergency thread is created to run the new task. Once an emergency thread has been idle for the keep-alive time, it is destroyed. Core threads, by contrast, are not destroyed after finishing their tasks and stay alive in the background. Only when the core threads are busy, the blocking queue is full, and the emergency threads are all in use is the rejection policy applied

The working mode is as follows:

- There are no threads in the pool at first. When a task is submitted, the pool creates a new thread to run it.

- Once the number of threads reaches corePoolSize, newly submitted tasks are placed in workQueue, where they wait until a thread is free to take them.

- If the queue is bounded, then once it is full the pool creates up to maximumPoolSize - corePoolSize emergency threads to handle further tasks.

- If the number of threads has reached maximumPoolSize and new tasks still arrive, the rejection policy is applied. The JDK provides four implementations, and other well-known frameworks provide their own:

  - AbortPolicy: throws a RejectedExecutionException to the caller; this is the default policy

  - CallerRunsPolicy: the caller runs the task itself

  - DiscardPolicy: discards the task

  - DiscardOldestPolicy: discards the oldest task in the queue and puts this task in its place

  - Dubbo: logs the rejection and dumps the thread stacks before throwing RejectedExecutionException, which makes the problem easier to locate

  - Netty: creates a new thread to run the task

  - ActiveMQ: tries to put the task into the queue with a timeout (60s), similar to the custom timeout policy shown earlier

  - PinPoint (distributed tracing): uses a chain of rejection policies and tries each policy in the chain in turn

- After the peak has passed, an emergency thread (one beyond corePoolSize) that has had no task for a period of time is terminated to save resources. This period is controlled by keepAliveTime and unit.
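The steps above can be observed with a small experiment. This is a sketch under chosen assumptions (1 core thread, 2 threads at most, a queue of capacity 1, and tasks that block on a latch so that no thread becomes idle while tasks are being submitted); WorkingModeDemo is a name invented for this example.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

final class WorkingModeDemo {

    /** Returns {threads created, tasks queued, tasks rejected} after submitting blocking tasks. */
    static int[] submitBlockingTasks(int core, int max, int queueCapacity, int tasks) {
        CountDownLatch release = new CountDownLatch(1);
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                core, max, 1, TimeUnit.SECONDS,
                new ArrayBlockingQueue<>(queueCapacity)); // default handler: AbortPolicy
        int rejected = 0;
        for (int i = 0; i < tasks; i++) {
            try {
                // Every task parks on the latch, so no worker frees up during submission.
                pool.execute(() -> {
                    try {
                        release.await();
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                });
            } catch (RejectedExecutionException e) {
                rejected++; // core busy, queue full, maximum reached
            }
        }
        int[] snapshot = {pool.getPoolSize(), pool.getQueue().size(), rejected};
        release.countDown(); // let the tasks finish
        pool.shutdown();
        return snapshot;
    }

    public static void main(String[] args) {
        int[] r = submitBlockingTasks(1, 2, 1, 4);
        // Task 1 starts the core thread, task 2 is queued,
        // task 3 starts an emergency thread, task 4 is rejected.
        System.out.println(r[0] + " threads, " + r[1] + " queued, " + r[2] + " rejected");
    }
}
```

Note the order: the queue fills up before any emergency thread is created, so a queued task can start later than a task submitted after it.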

Based on this constructor, the JDK Executors class provides a number of factory methods that create thread pools for various purposes

3.3 Factory methods

The relevant methods can be found in the Executors class

3.3.1 newFixedThreadPool

public static ExecutorService newFixedThreadPool(int nThreads) {

return new ThreadPoolExecutor(nThreads, nThreads,

0L, TimeUnit.MILLISECONDS,

new LinkedBlockingQueue<Runnable>());

}

- Number of core threads == maximum number of threads (no emergency threads are created), so no keep-alive timeout is needed

- The blocking queue is unbounded and can hold any number of tasks

It suits workloads where the number of tasks is known and each task is relatively time-consuming
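A quick way to check that a fixed pool never uses more than nThreads threads is to record which threads actually run the tasks. The sketch below does that; FixedPoolDemo and threadsUsed are names invented for this illustration.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

final class FixedPoolDemo {

    /** Runs `tasks` short tasks on a fixed pool and returns the names of the threads that ran them. */
    static Set<String> threadsUsed(int nThreads, int tasks) {
        Set<String> names = ConcurrentHashMap.newKeySet(); // thread-safe set
        ExecutorService pool = Executors.newFixedThreadPool(nThreads);
        for (int i = 0; i < tasks; i++) {
            pool.execute(() -> names.add(Thread.currentThread().getName()));
        }
        pool.shutdown(); // tasks already queued still run after shutdown()
        try {
            pool.awaitTermination(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return names;
    }

    public static void main(String[] args) {
        Set<String> names = threadsUsed(2, 10);
        System.out.println(names.size() <= 2); // true: ten tasks, at most two worker threads
    }
}
```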

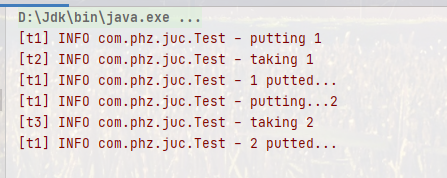

3.3.2 newCachedThreadPool

public static ExecutorService newCachedThreadPool() {

return new ThreadPoolExecutor(0, Integer.MAX_VALUE,

60L, TimeUnit.SECONDS,

new SynchronousQueue<Runnable>());

}

- The number of core threads is 0, the maximum number of threads is Integer.MAX_VALUE, and the idle lifetime of an emergency thread is 60s, which means:

  - All threads are emergency threads (they can be reclaimed after 60s of idleness)

  - Emergency threads can be created without limit

- The queue is a SynchronousQueue, which has no capacity: an element cannot be put in unless a thread is there to take it (like paying on delivery, where money and goods change hands at the same moment)

SynchronousQueue<Integer> integers = new SynchronousQueue<>();

new Thread(() -> {

try {

log.info("putting {} ", 1);

integers.put(1);

log.info("{} putted...", 1);

log.info("putting...{} ", 2);

integers.put(2);

log.info("{} putted...", 2);

} catch (InterruptedException e) {

e.printStackTrace();

}

}, "t1").start();

TimeUnit.SECONDS.sleep(1);

new Thread(() -> {

try {

log.info("taking {}", 1);

integers.take();

} catch (InterruptedException e) {

e.printStackTrace();

}

}, "t2").start();

TimeUnit.SECONDS.sleep(1);

new Thread(() -> {

try {

log.info("taking {}", 2);

integers.take();

} catch (InterruptedException e) {

e.printStackTrace();

}

}, "t3").start();

Overall, the number of threads in this pool grows with the number of tasks, with no upper limit, and a thread is released once it has been idle for 1 minute after finishing its tasks. It suits workloads where many tasks arrive in bursts but each task runs only briefly
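The growth is easy to observe when all tasks are busy at the same time. In the sketch below (CachedPoolDemo is a made-up name, and the cast relies on newCachedThreadPool returning a plain ThreadPoolExecutor, as shown in the factory method above), every task blocks on a latch, so each submission finds no idle thread and a new one is created.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executors;
import java.util.concurrent.ThreadPoolExecutor;

final class CachedPoolDemo {

    /** Submits `tasks` tasks that all block at once and returns how many threads the pool created. */
    static int threadsFor(int tasks) {
        ThreadPoolExecutor pool = (ThreadPoolExecutor) Executors.newCachedThreadPool();
        CountDownLatch release = new CountDownLatch(1);
        for (int i = 0; i < tasks; i++) {
            // No worker is waiting on the SynchronousQueue, so the hand-off fails
            // and the pool starts a new thread for this task.
            pool.execute(() -> {
                try {
                    release.await();
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
        }
        int size = pool.getPoolSize();
        release.countDown(); // let the tasks finish
        pool.shutdown();
        return size;
    }

    public static void main(String[] args) {
        System.out.println(threadsFor(5)); // 5: one thread per concurrently running task
    }
}
```

The same property is the risk of this pool: a burst of long-running tasks creates a matching number of threads.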

3.3.3 newSingleThreadExecutor

public static ExecutorService newSingleThreadExecutor() {

return new FinalizableDelegatedExecutorService

(new ThreadPoolExecutor(1, 1,

0L, TimeUnit.MILLISECONDS,

new LinkedBlockingQueue<Runnable>()));

}

It suits cases where several tasks should run one after another. The number of threads is fixed at 1; when there is more than one task, the extra tasks wait in an unbounded queue. When the tasks are finished, the single thread is not released.

With only one thread, can it still be called a thread pool? Compare it with the alternatives:

- If you create a single thread yourself to run tasks serially, a task that fails terminates the thread and there is no remedy, whereas the thread pool creates a new thread to keep the pool working

- With Executors.newSingleThreadExecutor(), the number of threads is always 1 and cannot be modified

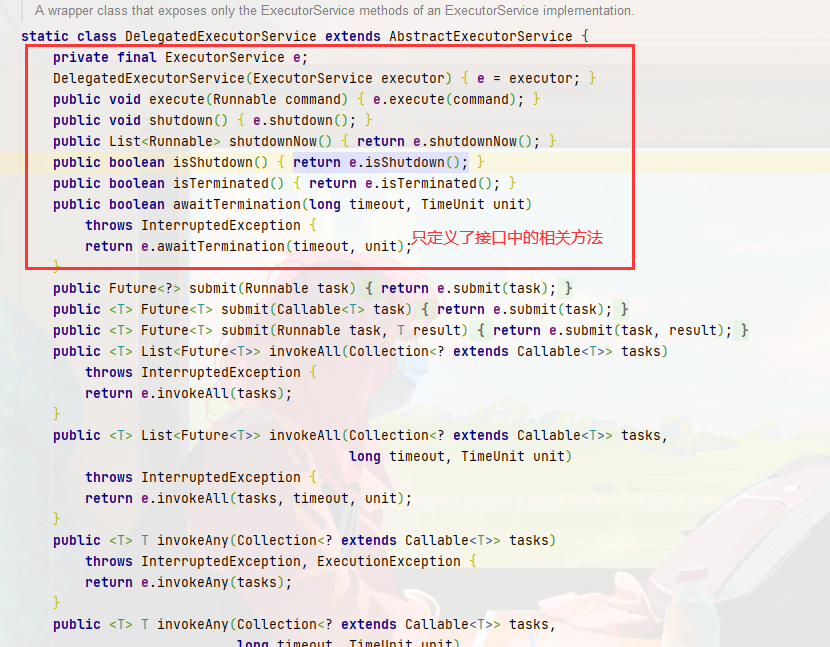

- The return value is a FinalizableDelegatedExecutorService that wraps the ThreadPoolExecutor passed to its constructor. This applies the decorator pattern: only the ExecutorService interface is exposed, so callers can use the interface methods but not the methods specific to ThreadPoolExecutor

static class FinalizableDelegatedExecutorService extends DelegatedExecutorService {

FinalizableDelegatedExecutorService(ExecutorService executor) {

super(executor);

}

protected void finalize() {

super.shutdown();

}

}

- With Executors.newFixedThreadPool(1) the number of threads is initially 1 and can be modified later

- The object exposed to the caller is a ThreadPoolExecutor, so after a cast its size can be changed by calling setCorePoolSize and similar methods.
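The difference between the two factory methods can be checked with a small experiment. This is a sketch under stated assumptions: SingleVsFixedDemo, isResizable and survivesFailingTask are hypothetical names introduced for illustration. It tests whether the returned object is a ThreadPoolExecutor, and whether a single-thread pool keeps working after a task kills its worker thread.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class SingleVsFixedDemo {

    /** True if the executor exposes the ThreadPoolExecutor API and can therefore be resized. */
    static boolean isResizable(ExecutorService pool) {
        return pool instanceof ThreadPoolExecutor;
    }

    /** Runs a failing task, then checks that the single-thread pool still accepts work. */
    static boolean survivesFailingTask() throws Exception {
        ExecutorService single = Executors.newSingleThreadExecutor();
        try {
            // The uncaught exception terminates the worker thread (stack trace goes to stderr)
            single.execute(() -> { throw new IllegalStateException("boom"); });
            // The pool replaces the dead worker, so this task still runs
            return single.submit(() -> true).get(5, TimeUnit.SECONDS);
        } finally {
            single.shutdown();
        }
    }

    public static void main(String[] args) throws Exception {
        ExecutorService single = Executors.newSingleThreadExecutor();
        ExecutorService fixed = Executors.newFixedThreadPool(1);
        try {
            System.out.println("single resizable: " + isResizable(single));
            System.out.println("fixed resizable:  " + isResizable(fixed));
            if (fixed instanceof ThreadPoolExecutor) {
                ThreadPoolExecutor tpe = (ThreadPoolExecutor) fixed;
                tpe.setMaximumPoolSize(4); // raise the maximum first, then the core size
                tpe.setCorePoolSize(4);
            }
            System.out.println("survives failure: " + survivesFailingTask());
        } finally {
            single.shutdown();
            fixed.shutdown();
        }
    }
}
```

The maximum is raised before the core size because newer JDK versions reject a core size larger than the current maximum.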

3.4 Task submission

// Execute a task

void execute(Runnable command);

// Submit a task and obtain its result through the returned Future

<T> Future<T> submit(Callable<T> task);

// Submit all tasks in tasks

<T> List<Future<T>> invokeAll(Collection<? extends Callable<T>> tasks)

throws InterruptedException;

// Submit all tasks in tasks with timeout

<T> List<Future<T>> invokeAll(Collection<? extends Callable<T>> tasks,

long timeout, TimeUnit unit)

throws InterruptedException;

// Submit all tasks in tasks. The result of whichever task completes successfully first is returned, and the other tasks are cancelled

<T> T invokeAny(Collection<? extends Callable<T>> tasks)

throws InterruptedException, ExecutionException;

// Submit all tasks in tasks with a timeout. The result of whichever task completes successfully first is returned, and the other tasks are cancelled

<T> T invokeAny(Collection<? extends Callable<T>> tasks,

long timeout, TimeUnit unit)

throws InterruptedException, ExecutionException, TimeoutException;
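To make the difference between invokeAll and invokeAny concrete, here is a minimal sketch. The class SubmitDemo and its methods are hypothetical and only illustrate the interface methods listed above: invokeAll waits for every task and returns one Future per task, while invokeAny returns the result of the first task that completes successfully.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;

class SubmitDemo {

    /** Squares every input in parallel with invokeAll and sums the results. */
    static int sumOfSquares(List<Integer> inputs) throws Exception {
        ExecutorService pool = Executors.newFixedThreadPool(2);
        try {
            List<Callable<Integer>> tasks = new ArrayList<>();
            for (int n : inputs) {
                tasks.add(() -> n * n);
            }
            int sum = 0;
            // invokeAll blocks until all tasks are done; futures come back in input order
            for (Future<Integer> f : pool.invokeAll(tasks)) {
                sum += f.get();
            }
            return sum;
        } finally {
            pool.shutdown();
        }
    }

    /** Races a slow task against a fast one with invokeAny. */
    static String firstFinished() throws Exception {
        ExecutorService pool = Executors.newFixedThreadPool(2);
        try {
            List<Callable<String>> tasks = new ArrayList<>();
            tasks.add(() -> { TimeUnit.SECONDS.sleep(2); return "slow"; });
            tasks.add(() -> "fast");
            // Returns as soon as one task succeeds; the remaining task is cancelled
            return pool.invokeAny(tasks);
        } finally {
            pool.shutdownNow();
        }
    }

    public static void main(String[] args) throws Exception {
        List<Integer> inputs = new ArrayList<>();
        inputs.add(1);
        inputs.add(2);
        inputs.add(3);
        System.out.println(sumOfSquares(inputs));
        System.out.println(firstFinished());
    }
}
```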

3.5 Closing the thread pool

- shutdown

// The pool state changes to SHUTDOWN: no new tasks are accepted, but tasks already submitted (both running and queued) are completed. This method does not block the calling thread

public void shutdown() {

final ReentrantLock mainLock = this.mainLock;

mainLock.lock();

try {

checkShutdownAccess();

//Modify thread pool status

advanceRunState(SHUTDOWN);

//Only idle threads are interrupted

interruptIdleWorkers();

onShutdown(); //Extension point for subclass ScheduledThreadPoolExecutor

} finally {

mainLock.unlock();

}

//Try to end

tryTerminate();

}

- shutdownNow

// The pool state changes to STOP: no new tasks are accepted, the tasks still in the queue are returned, and running tasks are interrupted via interrupt()

public List<Runnable> shutdownNow() {

List<Runnable> tasks;

final ReentrantLock mainLock = this.mainLock;

mainLock.lock();

try {

checkShutdownAccess();

// Modify thread pool status

advanceRunState(STOP);

// Interrupt all threads

interruptWorkers();

// Get the remaining tasks in the queue

tasks = drainQueue();

} finally {

mainLock.unlock();

}

// Try to end

tryTerminate();

return tasks;

}

- Other methods

// Returns true if the thread pool is not in the RUNNING state

boolean isShutdown();

// Whether the thread pool state is TERMINATED

boolean isTerminated();

// shutdown() does not make the calling thread wait for all tasks to finish. To do something after the pool is TERMINATED, wait with this method

boolean awaitTermination(long timeout, TimeUnit unit) throws InterruptedException;
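The two shutdown styles can be compared side by side. The following sketch uses hypothetical names (ShutdownDemo, gracefulShutdown, abruptShutdown, sleepQuietly) and a single-thread pool so that the outcome is easy to reason about: shutdown() lets queued tasks finish, while shutdownNow() hands the unstarted tasks back to the caller.

```java
import java.util.List;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

class ShutdownDemo {

    private static void sleepQuietly(long millis) {
        try {
            TimeUnit.MILLISECONDS.sleep(millis);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // preserve the interrupt and return early
        }
    }

    /** Graceful shutdown: returns how many of the 3 submitted tasks completed. */
    static int gracefulShutdown() throws InterruptedException {
        ExecutorService pool = Executors.newFixedThreadPool(1);
        AtomicInteger done = new AtomicInteger();
        for (int i = 0; i < 3; i++) {
            pool.execute(() -> { sleepQuietly(100); done.incrementAndGet(); });
        }
        pool.shutdown();                            // returns immediately
        pool.awaitTermination(5, TimeUnit.SECONDS); // wait for the queued tasks to drain
        return done.get();
    }

    /** Abrupt shutdown: returns how many of the 3 submitted tasks never started. */
    static int abruptShutdown() throws InterruptedException {
        ExecutorService pool = Executors.newFixedThreadPool(1);
        CountDownLatch started = new CountDownLatch(1);
        for (int i = 0; i < 3; i++) {
            pool.execute(() -> { started.countDown(); sleepQuietly(10_000); });
        }
        started.await();                            // the first task is now running
        List<Runnable> pending = pool.shutdownNow(); // interrupts it and drains the queue
        pool.awaitTermination(5, TimeUnit.SECONDS);
        return pending.size();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("completed after shutdown(): " + gracefulShutdown());
        System.out.println("returned by shutdownNow():  " + abruptShutdown());
    }
}
```

Note that shutdownNow() only delivers an interrupt; a running task stops early only if it reacts to interruption, as sleepQuietly does here.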

4. Task scheduling thread pool

4.1 Delayed execution

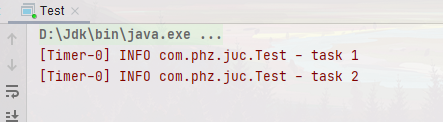

Before the task scheduling thread pool was added, java.util.Timer could be used for timed execution. The advantage of Timer is that it is simple and easy to use. However, all tasks are scheduled by the same thread, so they run serially: only one task can run at a time, and a delay or exception in an earlier task affects the tasks after it.

Timer timer = new Timer();

TimerTask task1 = new TimerTask() {

@SneakyThrows

@Override

public void run() {

log.info("task 1");

TimeUnit.SECONDS.sleep(2);

}

};

TimerTask task2 = new TimerTask() {

@Override

public void run() {

log.info("task 2");

}

};

// Add two tasks to the timer, hoping that both run after 1s

// However, the timer has only one thread that executes queued tasks in order, so the delay of "task 1" holds up "task 2"

timer.schedule(task1, 1000);

timer.schedule(task2, 1000);

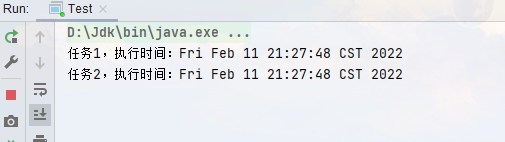

Rewritten with the task scheduling thread pool:

ScheduledExecutorService executor = Executors.newScheduledThreadPool(2);

// Add two tasks and expect them to be executed in 1s

executor.schedule(() -> {

System.out.println("Task 1, execution time:" + new Date());

try {

Thread.sleep(2000);

} catch (InterruptedException e) {

e.printStackTrace();

}

}, 1000, TimeUnit.MILLISECONDS);

executor.schedule(() -> {

System.out.println("Task 2, execution time:" + new Date());

}, 1000, TimeUnit.MILLISECONDS);

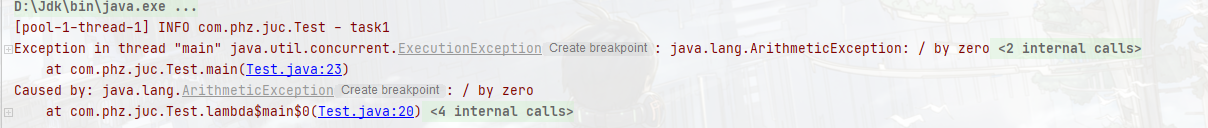

If one task throws an exception, it does not affect the execution of the other tasks

ScheduledExecutorService executor = Executors.newScheduledThreadPool(1);

// Add two tasks and expect them to be executed in 1s

executor.schedule(() -> {

System.out.println("Task 1, execution time:" + new Date());

int i = 1 / 0;

}, 1000, TimeUnit.MILLISECONDS);

executor.schedule(() -> {

System.out.println("Task 2, execution time:" + new Date());

}, 1000, TimeUnit.MILLISECONDS);

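But the expected exception did not appear. What is going on? schedule() wraps the task in a Future, and an exception thrown by the task is stored in that Future instead of being printed. There are two ways to handle such exceptions

- Catch it yourself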

ScheduledExecutorService executor = Executors.newScheduledThreadPool(1);

// Add two tasks and expect them to be executed in 1s

executor.schedule(() -> {

try {

System.out.println("Task 1, execution time:" + new Date());

int i = 1 / 0;

} catch (Exception e) {

e.printStackTrace();

}

}, 1000, TimeUnit.MILLISECONDS);

executor.schedule(() -> {

System.out.println("Task 2, execution time:" + new Date());

}, 1000, TimeUnit.MILLISECONDS);

- Use Future: calling get() rethrows the task's exception wrapped in an ExecutionException

ExecutorService pool = Executors.newFixedThreadPool(1);

Future<Boolean> f = pool.submit(() -> {

log.info("task1");

int i = 1 / 0;

return true;

});

log.info("result:{}", f.get());
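The same Future-based approach also works for scheduled tasks, because schedule() returns a ScheduledFuture. The sketch below uses hypothetical names (ScheduledFailureDemo, causeOfFailure). It shows that the exception is not lost: it is stored in the future and surfaces as the cause of an ExecutionException when get() is called.

```java
import java.util.concurrent.ExecutionException;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.ScheduledFuture;
import java.util.concurrent.TimeUnit;

class ScheduledFailureDemo {

    /** Schedules a task that fails and returns the exception captured by its future. */
    static Throwable causeOfFailure() throws InterruptedException {
        ScheduledExecutorService executor = Executors.newScheduledThreadPool(1);
        try {
            ScheduledFuture<Integer> future = executor.schedule(() -> {
                int zero = 0;
                return 1 / zero; // throws ArithmeticException inside the pool thread
            }, 100, TimeUnit.MILLISECONDS);
            try {
                future.get();    // blocks until the task has run
                return null;     // not reached: the task always fails
            } catch (ExecutionException e) {
                return e.getCause(); // the original exception thrown by the task
            }
        } finally {
            executor.shutdown();
        }
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("captured: " + causeOfFailure());
    }
}
```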

4.2 Regular execution

ScheduledExecutorService pool = Executors.newScheduledThreadPool(1);

log.debug("start...");

pool.scheduleAtFixedRate(() -> {

log.info("running...");

}, 1, 1, TimeUnit.SECONDS);

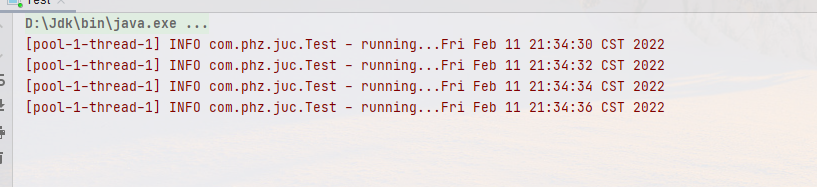

What if the task itself takes longer than the interval?

ScheduledExecutorService pool = Executors.newScheduledThreadPool(1);

log.debug("start...");

pool.scheduleAtFixedRate(() -> {

log.info("running..." + new Date());

try {

TimeUnit.SECONDS.sleep(2);

} catch (InterruptedException e) {

e.printStackTrace();

}

}, 1, 1, TimeUnit.SECONDS);

- The initial delay is 1s. After that, because the task execution time is longer than the period, the interval is stretched to 2s. In other words, the effective interval is the larger of the period and the actual execution time

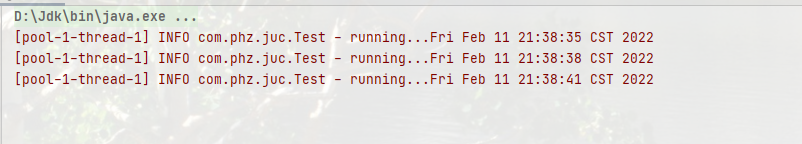

Use the scheduleWithFixedDelay method if you want a fixed gap between the end of one run and the start of the next

ScheduledExecutorService pool = Executors.newScheduledThreadPool(1);

log.debug("start...");

pool.scheduleWithFixedDelay(() -> {

log.info("running..." + new Date());

try {

TimeUnit.SECONDS.sleep(2);

} catch (InterruptedException e) {

e.printStackTrace();

}

}, 1, 1, TimeUnit.SECONDS);

- The initial delay is 1s. With scheduleWithFixedDelay, the delay is counted from the end of the previous run to the start of the next one, so consecutive starts are 3s apart (2s of execution plus 1s of delay)
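The two scheduling modes can be compared by measuring the gap between the first two starts. This is a rough sketch with hypothetical names (RateVsDelayDemo, gapBetweenStartsMillis); it scales the timings above down to a 100 ms period and a 200 ms task so that it runs quickly. The measured values depend on the machine, so treat the numbers as approximate.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

class RateVsDelayDemo {

    /** Returns the time in ms between the first and second start of a 200 ms task. */
    static long gapBetweenStartsMillis(boolean fixedRate) throws InterruptedException {
        ScheduledExecutorService pool = Executors.newScheduledThreadPool(1);
        List<Long> starts = new CopyOnWriteArrayList<>();
        CountDownLatch twoRuns = new CountDownLatch(2);
        Runnable task = () -> {
            starts.add(System.nanoTime());
            twoRuns.countDown();
            try {
                TimeUnit.MILLISECONDS.sleep(200); // the task takes longer than the period
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        };
        if (fixedRate) {
            pool.scheduleAtFixedRate(task, 100, 100, TimeUnit.MILLISECONDS);
        } else {
            pool.scheduleWithFixedDelay(task, 100, 100, TimeUnit.MILLISECONDS);
        }
        twoRuns.await();     // both start times have been recorded
        pool.shutdownNow();  // stop the periodic task
        return TimeUnit.NANOSECONDS.toMillis(starts.get(1) - starts.get(0));
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("fixed rate gap:  " + gapBetweenStartsMillis(true) + " ms");
        System.out.println("fixed delay gap: " + gapBetweenStartsMillis(false) + " ms");
    }
}
```

The fixed-rate gap should come out close to the 200 ms execution time, and the fixed-delay gap close to 300 ms (execution time plus delay).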

5. Tomcat thread pool

- LimitLatch is used for flow limiting: it caps the maximum number of connections, similar to Semaphore in JUC

- Acceptor is only responsible for accepting new Socket connections

- Poller is only responsible for monitoring whether the Socket Channel has readable IO events. Once readable, it encapsulates a socketProcessor task object and submits it to the Executor thread pool for processing

- The worker thread in the Executor is ultimately responsible for processing the request
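The comparison between LimitLatch and Semaphore can be illustrated with a few lines of plain JDK code. This is only an analogy, not Tomcat's implementation: the class ConnectionLimiter and its methods are hypothetical, and unlike this non-blocking sketch a real limiter may make the acceptor wait until a slot is free.

```java
import java.util.concurrent.Semaphore;

class ConnectionLimiter {

    private final Semaphore permits;

    ConnectionLimiter(int maxConnections) {
        this.permits = new Semaphore(maxConnections);
    }

    /** Tries to admit one connection without blocking; false means the limit is reached. */
    boolean tryAccept() {
        return permits.tryAcquire();
    }

    /** Called when a connection is closed, freeing a slot for the next one. */
    void release() {
        permits.release();
    }

    public static void main(String[] args) {
        ConnectionLimiter limiter = new ConnectionLimiter(2);
        System.out.println(limiter.tryAccept()); // first connection
        System.out.println(limiter.tryAccept()); // second connection
        System.out.println(limiter.tryAccept()); // limit reached
        limiter.release();                       // one connection closes
        System.out.println(limiter.tryAccept()); // a slot is free again
    }
}
```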

Tomcat's thread pool is also called ThreadPoolExecutor. It is adapted from the JDK class, so it behaves slightly differently from the JDK version

- If the total number of threads reaches maximumPoolSize, a RejectedExecutionException is not thrown immediately. Instead, Tomcat tries once more to put the task into the queue, and throws the exception only if that also fails

Source code tomcat-catalina-9.0.58

public void execute(Runnable command, long timeout, TimeUnit unit) {

submittedCount.incrementAndGet();

try {

// If the pool is full, a RejectedExecutionException is thrown

executeInternal(command);

} catch (RejectedExecutionException rx) {

if (getQueue() instanceof TaskQueue) {

// Because Tomcat uses its own TaskQueue, concurrent calls to execute() near the maximum pool size may cause some tasks to be rejected rather than queued. If this happens, add them to the queue.

// The blocking queue has also been extended by Tomcat

final TaskQueue queue = (TaskQueue) getQueue();

try {

// Try to join the queue

if (!queue.force(command, timeout, unit)) {

// If the join fails, an exception will be thrown

submittedCount.decrementAndGet();

throw new RejectedExecutionException(sm.getString("threadPoolExecutor.queueFull"));

}

} catch (InterruptedException x) {

submittedCount.decrementAndGet();

throw new RejectedExecutionException(x);

}

} else {

submittedCount.decrementAndGet();

throw rx;

}

}

}

Connector configuration items, corresponding to the Connector tag in Tomcat's server.xml file

| Configuration item | Default value | Description |
|---|---|---|
| acceptorThreadCount | 1 | Number of acceptor threads |
| pollerThreadCount | 1 | Number of poller threads |
| minSpareThreads | 10 | Number of core threads, i.e. corePoolSize |
| maxThreads | 200 | Maximum number of threads, i.e. maximumPoolSize |
| executor | - | Executor name, used to reference the Executor below |

- acceptor: one thread is enough. It simply blocks when there are no incoming requests, so more threads are unnecessary

- poller: it uses I/O multiplexing and can monitor read and write events on many Channels, so one thread is enough

- executor: refers to an Executor configured as shown below. If configured, it overrides minSpareThreads and maxThreads

Executor thread configuration, corresponding to the Executor tag in Tomcat's server.xml file (commented out by default)

| Configuration item | Default value | Description |
|---|---|---|
| threadPriority | 5 | Thread priority |
| daemon | true | Whether the threads are daemon threads |
| minSpareThreads | 25 | Number of core threads, i.e. corePoolSize |
| maxThreads | 200 | Maximum number of threads, i.e. maximumPoolSize |
| maxIdleTime | 60000 | Thread keep-alive time in milliseconds. The default is 1 minute |
| maxQueueSize | Integer.MAX_VALUE | Queue length |
| prestartminSpareThreads | false | Whether the core threads are started when the server starts |

- daemon: all threads in Tomcat are daemon threads. Once the main service shuts down, the other threads shut down as well

- maxIdleTime: the keep-alive time of the emergency (non-core) threads

- maxQueueSize: the length of the blocking queue. The default is an unbounded queue, so tasks may pile up if the server is under heavy load

- prestartminSpareThreads: whether the core threads are started at server startup instead of being created lazily

Since the blocking queue is unbounded, is the emergency thread useless? In the JDK, an emergency thread is created only when a bounded blocking queue is full. Here the blocking queue is unbounded, so it would seem that the emergency thread can never be used

In fact, as the Tomcat source code above hints, the TaskQueue has been extended. Tomcat keeps a count of the tasks in flight: the count is incremented when a task is submitted and decremented when a task completes. If the number of submitted tasks is still less than the number of core threads, the task is added directly to the blocking queue for execution. If it already exceeds the number of core threads, the task is not added to the blocking queue immediately. Tomcat first checks whether the number of submitted tasks exceeds the maximum number of threads: if it does not, an emergency thread is created to run the task; if it does, the task joins the blocking queue
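The core trick can be reproduced with the JDK classes alone. The following is a simplified sketch, not Tomcat's actual TaskQueue: the names EagerQueue, EagerPoolDemo, force and submitBlocking are hypothetical, and the submitted-task counter described above is replaced by a simpler check on the current pool size. The queue reports itself as full while the pool can still grow, which makes ThreadPoolExecutor create emergency threads even though the queue is unbounded.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class EagerQueue extends LinkedBlockingQueue<Runnable> {

    private volatile ThreadPoolExecutor parent;

    void setParent(ThreadPoolExecutor parent) {
        this.parent = parent;
    }

    @Override
    public boolean offer(Runnable task) {
        ThreadPoolExecutor p = parent;
        // Pretend to be full while the pool can still grow, so that the
        // executor falls through to creating a non-core thread.
        if (p != null && p.getPoolSize() < p.getMaximumPoolSize()) {
            return false;
        }
        return super.offer(task);
    }

    /** Bypasses the check above; used when the executor rejects a task. */
    boolean force(Runnable task) {
        return super.offer(task);
    }
}

class EagerPoolDemo {

    /** Returns {pool size, queued tasks} after submitting `tasks` blocking jobs. */
    static int[] submitBlocking(int core, int max, int tasks) throws InterruptedException {
        EagerQueue queue = new EagerQueue();
        ThreadPoolExecutor pool = new ThreadPoolExecutor(core, max, 60, TimeUnit.SECONDS, queue,
                (task, executor) -> {
                    // Second chance: queue the task instead of failing right away
                    if (executor.isShutdown() || !queue.force(task)) {
                        throw new RejectedExecutionException("queue full");
                    }
                });
        queue.setParent(pool);
        CountDownLatch release = new CountDownLatch(1);
        for (int i = 0; i < tasks; i++) {
            pool.execute(() -> {
                try {
                    release.await();
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
        }
        int[] result = { pool.getPoolSize(), queue.size() };
        release.countDown();
        pool.shutdown();
        pool.awaitTermination(5, TimeUnit.SECONDS);
        return result;
    }

    public static void main(String[] args) throws InterruptedException {
        int[] r = submitBlocking(1, 3, 5);
        System.out.println("threads=" + r[0] + ", queued=" + r[1]);
    }
}
```

With 1 core thread, a maximum of 3 and 5 blocking tasks, the pool grows to 3 threads and only the remaining 2 tasks are queued. A plain LinkedBlockingQueue would keep the pool at 1 thread and queue the other 4.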