1. What is a k8s workload controller

Workload controllers are a K8s abstraction: higher-level objects used to deploy and manage Pods.


Common workload controllers:

- Deployment: Stateless application deployment

- StatefulSet: Stateful application deployment

- DaemonSet: Ensures that every Node runs a copy of the same Pod

- Job: One-time task

- CronJob: Scheduled tasks


Controller's role:

- Manage Pod objects

- Use labels to associate with Pods

- Carry out operational tasks for Pods, such as rolling updates, scaling, replica management, and maintaining Pod status
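The label association can be pictured with a minimal Python sketch. It is not Kubernetes code: the `matches` helper and the sample Pod names and labels are hypothetical, and it only models equality-based `matchLabels` selection, where a Pod is selected when every key/value pair of the selector appears among its labels.

```python
def matches(selector, pod_labels):
    """Return True when every key/value pair in the selector is on the Pod."""
    return all(pod_labels.get(key) == value for key, value in selector.items())


# Hypothetical Pods and their labels, for illustration only.
pods = {
    "web-1": {"app": "web", "tier": "frontend"},
    "web-2": {"app": "web"},
    "db-1": {"app": "db"},
}

# A controller whose selector is {"app": "web"} would pick up web-1 and web-2.
selected = sorted(name for name, labels in pods.items()
                  if matches({"app": "web"}, labels))
print(selected)
```

Extra labels on a Pod (such as `tier` above) do not prevent a match; only the selector's own keys are compared.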


Example:

2. Introduction to Deployment

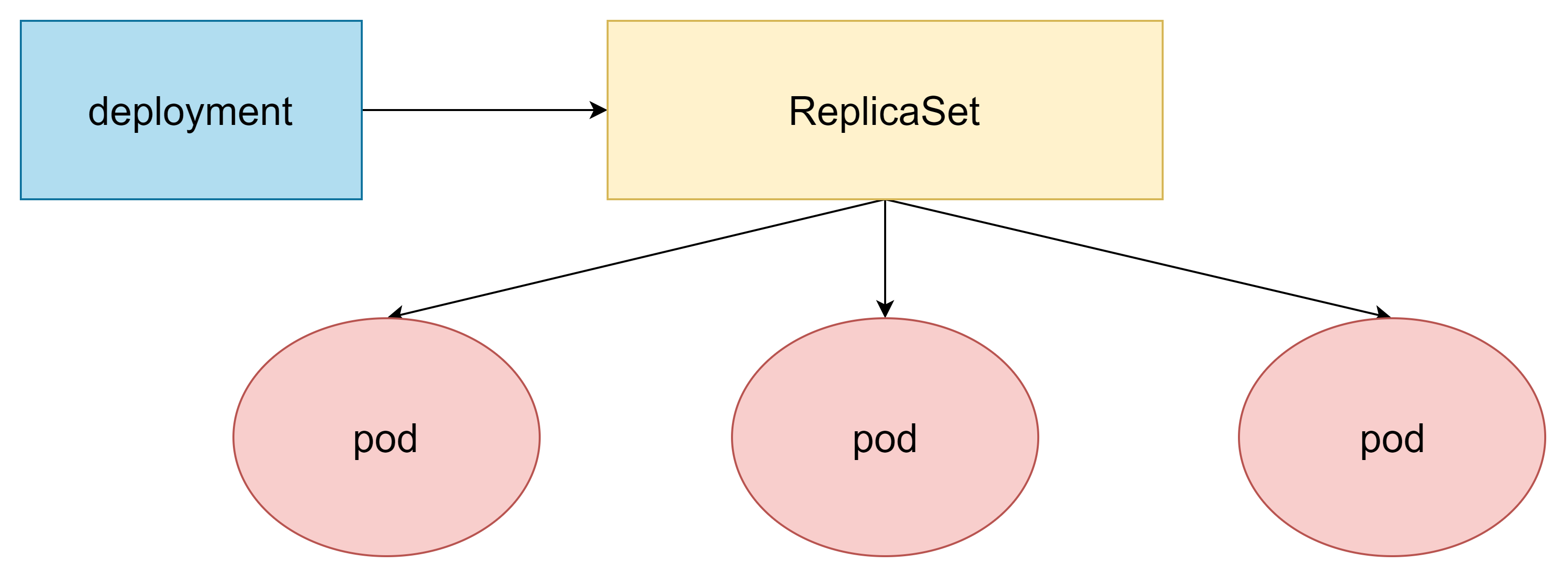

To better solve the problem of service orchestration, k8s introduced the Deployment controller starting with version v1.2. It is worth noting that a Deployment does not manage Pods directly.

Instead, it manages Pods indirectly through a ReplicaSet: the Deployment manages the ReplicaSet, and the ReplicaSet manages the Pods. A Deployment is therefore more powerful than a ReplicaSet.

The main functions of deployment are as follows:

- Supports all functions of ReplicaSet

- Supports pausing and resuming a rollout

- Supports rolling updates and version rollback

2.1 Deployment resource manifest file

//Example:

apiVersion: apps/v1 # API version

kind: Deployment # Resource type

metadata: # Metadata

  name: # Deployment name

  namespace: # Owning namespace

  labels: # Labels

    controller: deploy

spec: # Detailed description

  replicas: # Number of replicas

  revisionHistoryLimit: # Number of old revisions to keep, default is 10

  paused: # Pause the deployment, default is false

  progressDeadlineSeconds: # Deployment timeout (s), default is 600

  strategy: # Update strategy

    type: RollingUpdate # Rolling update strategy

    rollingUpdate: # Rolling update settings

      maxSurge: # Maximum number of extra replicas that may exist, either as a percentage or as an integer

      maxUnavailable: # Maximum number of Pods that may be unavailable, either as a percentage or as an integer

  selector: # Selector, which specifies which Pods are managed by the controller

    matchLabels: # Label matching rule

      app: nginx-pod

    matchExpressions: # Expression matching rule

      - {key: app, operator: In, values: [nginx-pod]}

  template: # Template; when there are too few replicas, a Pod replica is created from the following template

    metadata:

      labels:

        app: nginx-pod

    spec:

      containers:

      - name: nginx

        image: nginx:1.17.1

        ports:

        - containerPort: 80

[root@master ~]# cat test.yaml

---

apiVersion: apps/v1 # API version

kind: Deployment # Resource type

metadata:

  name: test # Deployment name

  namespace: default # Use the default namespace

spec:

  replicas: 3 # Three Pod replicas

  selector:

    matchLabels:

      app: busybox # Pod label

  template:

    metadata:

      labels:

        app: busybox

    spec:

      containers:

      - name: b1

        image: busybox # Image used

        command: ["/bin/sh","-c","sleep 9000"]

[root@master ~]# kubectl apply -f test.yaml

deployment.apps/test created

[root@master ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

test-864fd4fb54-4hz2h 1/1 Running 0 1m

test-864fd4fb54-j9ht4 1/1 Running 0 1m

test-864fd4fb54-w485p 1/1 Running 0 1m

2.2 Deployment: Rolling Upgrade

- kubectl apply -f xxx.yaml

- kubectl set image deployment/web nginx=nginx:1.16

- kubectl edit deployment/web

Rolling upgrade: the default K8s strategy for upgrading Pods. It gradually replaces Pods of the old version with Pods of the new version, which allows zero-downtime releases that users do not notice.

// Rolling Update Policy

spec:

  replicas: 3

  revisionHistoryLimit: 10

  selector:

    matchLabels:

      app: web

  strategy:

    rollingUpdate:

      maxSurge: 25%

      maxUnavailable: 25%

    type: RollingUpdate

- maxSurge: The maximum number of Pods that may exist above the expected replica count during a rolling update. With 25%, the number of Pods started during the update is at most 25% more than the expected (replicas) number of Pods.

- maxUnavailable: The maximum number of Pods that may be unavailable during a rolling update. With 25%, at most 25% of the Pods are unavailable during the update, that is, at least 75% of the Pods stay available.
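To make the two percentages concrete, here is a small Python sketch of the arithmetic. The function names `resolve` and `rolling_update_bounds` are hypothetical; the rounding directions follow the Kubernetes documentation, which states that a `maxSurge` percentage is rounded up and a `maxUnavailable` percentage is rounded down. Other details of the real controller are not modelled.

```python
def resolve(value, replicas, round_up):
    """Convert an integer or a percentage string such as "25%" to a Pod count."""
    if isinstance(value, int):
        return value
    scaled = int(value.rstrip("%")) * replicas
    # Integer arithmetic avoids floating-point rounding surprises.
    return -(-scaled // 100) if round_up else scaled // 100


def rolling_update_bounds(replicas, max_surge="25%", max_unavailable="25%"):
    """Return (maximum total Pods, minimum available Pods) during the update."""
    surge = resolve(max_surge, replicas, round_up=True)
    unavailable = resolve(max_unavailable, replicas, round_up=False)
    return replicas + surge, replicas - unavailable


print(rolling_update_bounds(4))                 # (5, 3)
print(rolling_update_bounds(10, "55%", "50%"))  # (16, 5)
```

With 4 replicas and both values at 25%, at most 5 Pods exist at once and at least 3 stay available while the update proceeds.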

Example:

// Create four httpd containers

[root@master ~]# cat test1.yaml

---

apiVersion: apps/v1

kind: Deployment

metadata:

  name: web

  namespace: default

spec:

  replicas: 4

  selector:

    matchLabels:

      app: httpd

  template:

    metadata:

      labels:

        app: httpd # Must match spec.selector.matchLabels

    spec:

      containers:

      - name: httpd

        image: gaofan1225/httpd:v0.2

        imagePullPolicy: IfNotPresent

[root@master ~]# kubectl apply -f test1.yaml

deployment.apps/web created

[root@master ~]# kubectl get pods

web-5c688b9779-7gi6t 1/1 Running 0 50s

web-5c688b9779-9unfy 1/1 Running 0 50s

web-5c688b9779-ft69k 1/1 Running 0 50s

web-5c688b9779-vmlkg 1/1 Running 0 50s

[root@master ~]# cat test1.yaml

---

apiVersion: apps/v1

kind: Deployment

metadata:

  name: web

  namespace: default

spec:

  replicas: 4

  strategy:

    rollingUpdate: # Rolling update settings

      maxSurge: 25% # At most 25% extra Pods

      maxUnavailable: 25% # At most 25% of Pods unavailable

    type: RollingUpdate

  selector:

    matchLabels:

      app: httpd

  template:

    metadata:

      labels:

        app: httpd

    spec:

      containers:

      - name: httpd

        image: dockerimages123/httpd:v0.2

        imagePullPolicy: IfNotPresent

[root@master ~]# kubectl apply -f test1.yaml

deployment.apps/web configured

// No change at this time

[root@master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

web-5c688b9779-7gi6t 1/1 Running 0 8m

web-5c688b9779-9unfy 1/1 Running 0 8m

web-5c688b9779-ft69k 1/1 Running 0 8m

web-5c688b9779-vmlkg 1/1 Running 0 8m

// Change the image to httpd (image: httpd)

// Apply again

[root@master ~]# kubectl apply -f test1.yaml

deployment.apps/web configured

// Three old Pods are terminating and one old Pod is still running while the new Pods start

[root@master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

web-5c688b9779-7gi6t 1/1 Terminating 0 18m

web-5c688b9779-9unfy 0/1 Running 0 20m

web-5c688b9779-ft69k 0/1 Terminating 0 20m

web-5c688b9779-vmlkg 0/1 Terminating 0 20m

web-f8bcfc88-vddfk 0/1 Running 0 80s

web-f8bcfc88-yur8y 0/1 Running 0 80s

web-f8bcfc88-t9ryx 0/1 ContainerCreating 0 55s

web-f8bcfc88-k07k 0/1 ContainerCreating 0 56s

// Finally, all four Pods are new

[root@master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

web-f8bcfc88-vddfk 1/1 Running 0 80s

web-f8bcfc88-yur8y 1/1 Running 0 80s

web-f8bcfc88-t9ryx 1/1 Running 0 55s

web-f8bcfc88-k07k 1/1 Running 0 56s

2.3 Deployment: Horizontal scaling

- Modify the replicas value in the YAML file and apply it

- kubectl scale deployment web --replicas=10

[root@master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

web-f8bcfc88-vddfk 1/1 Running 0 80s

web-f8bcfc88-yur8y 1/1 Running 0 80s

web-f8bcfc88-t9ryx 1/1 Running 0 55s

web-f8bcfc88-k07k 1/1 Running 0 56s

// Create 10 containers

[root@master ~]# cat test1.yaml

---

apiVersion: apps/v1

kind: Deployment

metadata:

  name: web

  namespace: default

spec:

  replicas: 10

  strategy:

    rollingUpdate:

      maxSurge: 55%

      maxUnavailable: 50%

    type: RollingUpdate

  selector:

    matchLabels:

      app: httpd

  template:

    metadata:

      labels:

        app: httpd

    spec:

      containers:

      - name: httpd

        image: dockerimages123/httpd:v0.2

        imagePullPolicy: IfNotPresent

[root@master ~]# kubectl apply -f test1.yaml

deployment.apps/web created

[root@master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

web-5c688b9779-pb4x8 1/1 Running 0 50s

web-5c688b9779-kf8vq 1/1 Running 0 50s

web-5c688b9779-ki8s3 1/1 Running 0 50s

web-5c688b9779-o9gx6 1/1 Running 0 50s

web-5c688b9779-i8g4w 1/1 Running 0 50s

web-5c688b9779-olgxt 1/1 Running 0 50s

web-5c688b9779-khctw 1/1 Running 0 50s

web-5c688b9779-ki8d6 1/1 Running 0 50s

web-5c688b9779-i9g5s 1/1 Running 0 50s

web-5c688b9779-jsj8k 1/1 Running 0 50s

// Modify replicas value to achieve horizontal scaling

[root@master ~]# vim test1.yaml

replicas: 3

[root@master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

web-5c688b9779-pb4x8 1/1 Running 0 50s

web-5c688b9779-kf8vq 1/1 Running 0 50s

web-5c688b9779-ki8s3 1/1 Running 0 50s

web-5c688b9779-o9gx6 1/1 Running 0 50s

web-5c688b9779-i8g4w 1/1 Running 0 50s

web-5c688b9779-olgxt 1/1 Running 0 50s

web-5c688b9779-khctw 1/1 Running 0 50s

web-5c688b9779-ki8d6 1/1 Running 0 50s

web-5c688b9779-i9g5s 1/1 Running 0 50s

web-5c688b9779-jsj8k 1/1 Running 0 50s

web-i9olh676-jdkrg 0/1 ContainerCreating 0 8s

web-i9olh676-opy5b 0/1 ContainerCreating 0 8s

web-i9olh676-k8st4 0/1 ContainerCreating 0 8s

[root@master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

web-i9olh676-jdkrg 1/1 Running 0 2m19s

web-i9olh676-opy5b 1/1 Running 0 2m19s

web-i9olh676-k8st4 1/1 Running 0 2m19s

2.4 Deployment: Rollback

kubectl rollout history deployment/web // view the release history

kubectl rollout undo deployment/web // roll back to the previous revision

kubectl rollout undo deployment/web --to-revision=2 // roll back to a specified revision

[root@master ~]# kubectl rollout history deploy/web

deployment.apps/web

REVISION CHANGE-CAUSE

1 <none>

2 <none>

// Rolling back to the current revision is skipped

[root@master ~]# kubectl rollout undo deploy/web --to-revision 2

deployment.apps/web skipped rollback (current template already matches revision 2)

// Roll back to the first revision

[root@master ~]# kubectl rollout undo deploy/web --to-revision 1

deployment.apps/web rolled back

// Rollback succeeded

[root@master ~]# kubectl rollout history deploy/web

deployment.apps/web

REVISION CHANGE-CAUSE

2 <none>

3 <none>
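The renumbering seen in the history output (revision 1 disappears and reappears as revision 3) can be modelled with a short Python sketch. This is only an illustration of the behaviour shown in the transcript, not the actual implementation: `rollout_undo` and the template strings are hypothetical stand-ins.

```python
def rollout_undo(history, to_revision):
    """Model a rollback on a dict that maps revision number to Pod template."""
    current = max(history)
    if history[to_revision] == history[current]:
        return f"skipped rollback (current template already matches revision {to_revision})"
    # The old revision is re-recorded under a new, higher revision number.
    history[current + 1] = history.pop(to_revision)
    return "rolled back"


# Hypothetical templates standing in for two released versions.
history = {1: "template-v1", 2: "template-v2"}

first = rollout_undo(history, 2)   # revision 2 is already current
second = rollout_undo(history, 1)  # revision 1 becomes revision 3
print(first)
print(second)
print(sorted(history))
```

After the second call the history holds revisions 2 and 3, and revision 3 carries the template that revision 1 used to hold.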

2.5 Deployment: Delete

kubectl delete deploy/web

kubectl delete svc/web

kubectl delete pods/web

// Create

[root@master ~]# kubectl create deployment nginx --image=nginx

deployment.apps/nginx created

[root@master ~]# kubectl get deploy

NAME READY UP-TO-DATE AVAILABLE AGE

nginx 1/1 1 1 35s

web 3/3 3 3 20m

// Delete one Deployment

[root@master ~]# kubectl delete deploy/nginx

deployment.apps "nginx" deleted

[root@master ~]# kubectl get deploy

NAME READY UP-TO-DATE AVAILABLE AGE

web 3/3 3 3 20m

// Delete all

[root@master ~]# kubectl delete deployment --all

deployment.apps "web" deleted

[root@master ~]# kubectl get deployment

No resources found in default namespace.

2.6 Deployment: ReplicaSet

ReplicaSet Controller Purpose:

- Manages the number of Pod replicas by continuously comparing the current number of Pods with the expected number

- Each time a Deployment publishes a new version, it creates an RS that serves as a record for rollback
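The "compare current with expected" idea can be sketched in a few lines of Python. This is a simplified model rather than the controller's real code; the `reconcile` function and its return values are hypothetical.

```python
def reconcile(desired, running_pods):
    """Decide what one reconcile pass should do to reach the desired count."""
    diff = desired - len(running_pods)
    if diff > 0:
        return ("create", diff)   # too few Pods: create the missing ones
    if diff < 0:
        return ("delete", -diff)  # too many Pods: remove the surplus
    return ("noop", 0)            # already at the expected count


print(reconcile(3, ["web-a", "web-b"]))                    # ('create', 1)
print(reconcile(3, ["web-a", "web-b", "web-c"]))           # ('noop', 0)
print(reconcile(3, ["web-a", "web-b", "web-c", "web-d"]))  # ('delete', 1)
```

Running such a comparison repeatedly is what keeps the replica count at the expected value when a Pod disappears.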

[root@master ~]# kubectl get rs

NAME DESIRED CURRENT READY AGE

web-5d7hy50s8a 3 3 3 6m4s

web-f8bki8h5 0 0 0 6m25s

kubectl rollout history deployment web // each revision corresponds to an RS record

[root@master ~]# kubectl rollout history deployment web

deployment.apps/web

REVISION CHANGE-CAUSE

2 <none>

3 <none>

[root@master ~]# kubectl get rs

NAME DESIRED CURRENT READY AGE

web-5d7hy50s8a 3 3 3 6m4s

web-f8bki8h5 0 0 0 6m25s

// Change the image to httpd and leave everything else unchanged

[root@master ~]# kubectl apply -f test2.yaml // reapply

deployment.apps/web configured

[root@master ~]# kubectl get pods

web-5d688b9745-dpmsd 1/1 Terminating 0 9m

web-5d688b9745-q6dls 1/1 Terminating 0 9m

web-i80gjk6t-ku6f4 0/1 ContainerCreating 0 5s

web-i80gjk6t-9j5tu 1/1 Running 0 5s

web-i80gjk6t-9ir4h 1/1 Running 0 5s

[root@master ~]# kubectl get rs

NAME DESIRED CURRENT READY AGE

web-5d7hy50s8a 0 0 0 12m

web-f8bki8h5 3 3 3 12m

ReplicaSet: Controlled by the replicas parameter in the Deployment

[root@node2 ~]# docker ps | grep web

4c938ouhc8j0 dabutse0c4fy "httpd-foreground" 13 seconds ago Up 12 seconds k8s_httpd_web-f8bcfc88-4rkfx_default_562616cd-1552-4610-bf98-e470225e4c31_1

452713eeccad registry.aliyuncs.com/google_containers/pause:3.6 "/pause" 5 minutes ago Up 5 minutes k8s_POD_web-f8bcfc88-4rkfx_default_562616cd-1552-4610-bf98-e470225e4c31_0

// Kill one container

[root@node2 ~]# docker kill 4c938ad0c01d 8htw4ad0cu8s

[root@master ~]# kubectl get rs

NAME DESIRED CURRENT READY AGE

web-5d7hy50s8a 0 0 0 15m

web-f8bki8h5 3 3 3 15m

[root@master ~]# kubectl get rs

NAME DESIRED CURRENT READY AGE

web-5d7hy50s8a 0 0 0 16m

web-f8bki8h5 3 3 3 18m

[root@master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

web-o96gb3sm-9ht4c 1/1 Running 2 (80s ago) 6m32s

web-o96gb3sm-ki85s 1/1 Running 0 6m32s

web-o96gb3sm-ku5sg 1/1 Running 0 6m32s

3. DaemonSet

DaemonSet functionality:

- Run a Pod on each Node

- New Nodes also automatically run a Pod
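The two points above can be illustrated with a small Python sketch that works out which Nodes still need a Pod. It is a simplified model: the `nodes_missing_pod` function and the `node3` name are hypothetical, and scheduling constraints such as taints are not considered.

```python
def nodes_missing_pod(nodes, pod_by_node):
    """Return the Nodes that do not yet run a Pod of the DaemonSet."""
    return [node for node in nodes if node not in pod_by_node]


# One Pod already runs on node1; node2 has none yet.
pod_by_node = {"node1.example.com": "filebeat-d2psf"}
nodes = ["node1.example.com", "node2.example.com"]
before = nodes_missing_pod(nodes, pod_by_node)

# A new Node joining the cluster (hypothetical name) also needs a Pod.
nodes.append("node3.example.com")
after = nodes_missing_pod(nodes, pod_by_node)

print(before)
print(after)
```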

// Deleting the resource also deletes its containers

[root@master ~]# kubectl delete -f test1.yaml

deployment.apps "web" deleted

[root@master ~]# cat daemon.yaml

---

apiVersion: apps/v1

kind: DaemonSet # Type is DaemonSet

metadata:

  name: filebeat

  namespace: kube-system

spec:

  selector:

    matchLabels:

      name: filebeat

  template:

    metadata:

      labels:

        name: filebeat

    spec:

      containers: # Log collection image

      - name: log

        image: elastic/filebeat:7.16.2

        imagePullPolicy: IfNotPresent

[root@master ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-6d8c4cb4d-9m5jg 1/1 Running 14 (4h36m ago) 6d4h

coredns-6d8c4cb4d-mp662 1/1 Running 14 (4h36m ago) 6d4h

etcd-master 1/1 Running 13 (3h30m ago) 6d4h

kube-apiserver-master 1/1 Running 13 (3h30m ago) 6d4h

kube-controller-manager-master 1/1 Running 14 (3h30m ago) 6d4h

kube-flannel-ds-g9jsh 1/1 Running 9 (3h30m ago) 6d1h

kube-flannel-ds-qztxc 1/1 Running 9 (3h30m ago) 6d1h

kube-flannel-ds-t8lts 1/1 Running 13 (3h30m ago) 6d1h

kube-proxy-q2jmh 1/1 Running 12 (3h30m ago) 6d4h

kube-proxy-r28dn 1/1 Running 13 (3h30m ago) 6d4h

kube-proxy-x4cns 1/1 Running 13 (3h30m ago) 6d4h

kube-scheduler-master 1/1 Running 15 (3h30m ago) 6d4h

[root@master ~]# kubectl apply -f daemon.yaml

daemonset.apps/filebeat created

[root@master ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-6d8c4cb4d-9m5jg 1/1 Running 14 (4h36m ago) 6d4h

coredns-6d8c4cb4d-mp662 1/1 Running 14 (4h36m ago) 6d4h

etcd-master 1/1 Running 13 (3h30m ago) 6d4h

kube-apiserver-master 1/1 Running 13 (3h30m ago) 6d4h

kube-controller-manager-master 1/1 Running 14 (3h30m ago) 6d4h

kube-flannel-ds-g9jsh 1/1 Running 9 (3h30m ago) 6d1h

kube-flannel-ds-qztxc 1/1 Running 9 (3h30m ago) 6d1h

kube-flannel-ds-t8lts 1/1 Running 13 (3h30m ago) 6d1h

kube-proxy-q2jmh 1/1 Running 12 (3h30m ago) 6d4h

kube-proxy-r28dn 1/1 Running 13 (3h30m ago) 6d4h

kube-proxy-x4cns 1/1 Running 13 (3h30m ago) 6d4h

kube-scheduler-master 1/1 Running 15 (3h30m ago) 6d4h

filebeat-oiugt 1/1 Running 0 50s

filebeat-9jhgt 1/1 Running 0 50s

[root@master ~]# kubectl get pods -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

filebeat-9ck6z 1/1 Running 0 3m9s 10.242.2.215 node2.example.com <none> <none>

filebeat-d2psf 1/1 Running 0 3m9s 10.242.1.161 node1.example.com <none> <none>

4. Job and CronJob

Jobs are divided into one-off tasks (Job) and scheduled tasks (CronJob)

- One-time execution (Job)

- Scheduled tasks (CronJob)

Scenario: Offline data processing, video decoding, etc. (Job)

Scenario: Notification, backup (CronJob)

[root@master ~]# cat job.yaml

apiVersion: batch/v1

kind: Job

metadata:

  name: test

spec:

  template:

    spec:

      containers:

      - name: test

        image: perl

        command: ["perl","-Mbignum=bpi","-wle","print bpi(2000)"]

      restartPolicy: Never

  backoffLimit: 4

[root@master ~]# kubectl apply -f job.yaml

job.batch/test created

[root@master ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

test-27xrt 0/1 ContainerCreating 0 10m28s <none> node2.example.com <none> <none>

[root@node2 ~]# docker ps | grep test

e55ac8842c89 registry.aliyuncs.com/google_containers/pause:3.6 "/pause" 6 minutes ago Up 6 minutes k8s_POD_test-27xrt_default_698b4c91-ef54-4fe9-b62b-e0abcujhts5o90_9

[root@master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

test-27xrt 0/1 Completed 0 15m

[root@node2 ~]# docker images | grep perl

perl latest f9596eddf06f 5 days ago 568MB

[root@master ~]# kubectl describe job/test

Pods Statuses: 0 Active / 1 Succeeded / 0 Failed

[root@master ~]# kubelet --version

Kubernetes v1.23.1

[root@master ~]# cat cronjob.yaml

---

apiVersion: batch/v1

kind: CronJob

metadata:

  name: hello

spec:

  schedule: "*/1 * * * *"

  jobTemplate:

    spec:

      template:

        spec:

          containers:

          - name: hello

            image: busybox

            args:

            - /bin/sh

            - -c

            - date;echo Hello world

          restartPolicy: OnFailure
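The `schedule` field uses the standard cron format of five space-separated fields. As a rough aid for reading such an expression, the following Python sketch splits it into named fields; the `split_schedule` helper is hypothetical and only checks the field count, not the validity of each value.

```python
FIELDS = ("minute", "hour", "day_of_month", "month", "day_of_week")


def split_schedule(schedule):
    """Split a cron expression into its five named fields."""
    parts = schedule.split()
    if len(parts) != len(FIELDS):
        raise ValueError(f"expected {len(FIELDS)} fields, got {len(parts)}")
    return dict(zip(FIELDS, parts))


fields = split_schedule("*/1 * * * *")
print(fields["minute"])  # */1, that is, every minute

# Without spaces the expression collapses into a single field and is rejected.
try:
    split_schedule("*/1****")
    rejected = False
except ValueError:
    rejected = True
print(rejected)
```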

[root@master ~]# kubectl apply -f cronjob.yaml

Warning: batch/v1beta1 CronJob is deprecated in v1.21+, unavailable in v1.25+; use batch/v1 CronJob

[root@master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

hello-kihtwoab-kox6w 0/1 Completed 0 5m42s

hello-kihtwoab-o96vw 0/1 Completed 0 90s

hello-kihtwoab-kus6n 0/1 Completed 0 76s

[root@master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

hello-kihtwoab-kox6w 0/1 Completed 0 6m26s

hello-kihtwoab-o96vw 0/1 Completed 0 2m11s

hello-kihtwoab-kus6n 0/1 Completed 0 2m10s

hello-kuhdoehs-ki8gr 0/1 ContainerCreating 0 36s

[root@master ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

hello-kihtwoab-o96vw 0/1 Completed 0 2m11s

hello-kihtwoab-kus6n 0/1 Completed 0 2m10s

hello-kuhdoehs-ki8gr 0/1 Completed 0 45s

[root@master ~]# kubectl describe cronjob/hello

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal SuccessfulCreate 5m36s (x133 over 135m) cronjob-controller (combined from similar events): Created job hello-kisgejxbw

// batch/v1beta1 CronJob is deprecated in v1.21+ and unavailable in v1.25+; use batch/v1 CronJob.