What is a workload controller

Workload Controller Concepts

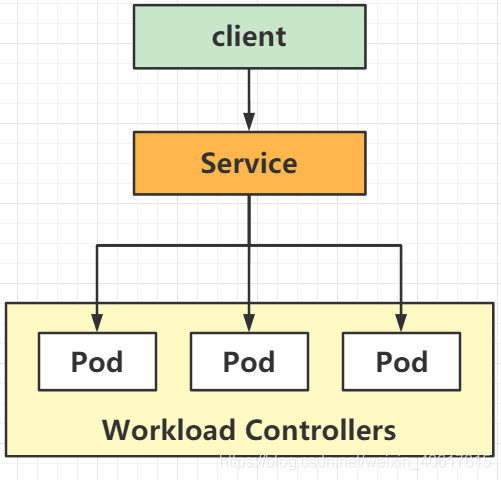

Workload controllers are a K8s abstraction: higher-level objects used to deploy and manage Pods.

Common workload controllers

Deployment: Stateless application deployment

StatefulSet: Stateful application deployment

DaemonSet: Ensures that every Node runs a copy of the same Pod

Job: One-time task

CronJob: Scheduled task

Controller Role

Manage Pod Objects

Associate with Pods through labels

Implement Pod operations such as rolling updates, scaling, replica management, and maintenance of Pod status
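The label association can be pictured with a small sketch. It assumes a simple equality-based selector (the `matchLabels` form used in the manifests in this article); the helper name `matches` and the sample Pods are hypothetical and only illustrate the idea.

```python
def matches(selector: dict, labels: dict) -> bool:
    """Return True if every key/value pair in the selector appears in the Pod labels."""
    return all(labels.get(key) == value for key, value in selector.items())


# Hypothetical Pods, described only by name and labels.
pods = [
    {"name": "web-1", "labels": {"app": "httpd"}},
    {"name": "web-2", "labels": {"app": "httpd", "tier": "frontend"}},
    {"name": "db-1", "labels": {"app": "mysql"}},
]

# A controller with selector app=httpd only sees the Pods carrying that label.
selected = [pod["name"] for pod in pods if matches({"app": "httpd"}, pod["labels"])]
print(selected)
```

Extra labels on a Pod do not prevent a match; only the keys named in the selector are compared.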

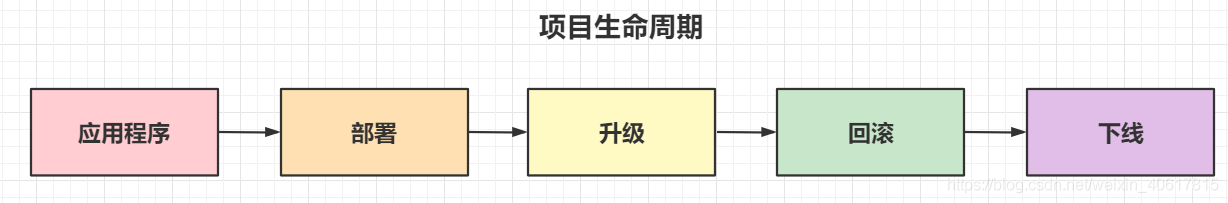

Project life cycle introduction

The actual project deployment process is as follows. The following sections describe the entire life cycle of a project in a K8s cluster, in the order of application deployment, rolling upgrade, rollback, and taking the project offline. This article focuses on deploying stateless applications and managing their life cycle, so in practice it describes how the Deployment controller is used in project lifecycle management.

Deployments (Stateless Application Deployment)

Introduction to Deployments

A Deployment provides declarative updates for Pods and ReplicaSets.

You describe the desired state in a Deployment, and the Deployment controller changes the actual state to the desired state at a controlled rate. You can define a Deployment to create new ReplicaSets, or remove an existing Deployment and adopt all of its resources with a new Deployment.

Note: Do not manage ReplicaSets owned by a Deployment. If your use case is not covered below, consider opening an issue in the Kubernetes repository.

Basic syntax

[root@master manifest]# cat busybox.yaml
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: xx
  namespace: default
spec:
  replicas: 3
  selector:
    matchLabels:
      app: busybox
  template:
    metadata:
      labels:
        app: busybox
    spec:
      containers:
      - name: b4
        image: busybox
        command: ["/bin/sh","-c","sleep 9000"]

[root@master manifest]# kubectl apply -f busybox.yaml
deployment.apps/xx created

[root@master manifest]# kubectl get pods
NAME                  READY   STATUS    RESTARTS   AGE
xx-758696cd47-2k2wv   1/1     Running   0          13s
xx-758696cd47-q2tqk   1/1     Running   0          13s
xx-758696cd47-wjtjr   1/1     Running   0          13s

Rolling upgrade with a Deployment

[root@master manifest]# vim apache.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web
spec:
  replicas: 4
  strategy:
    rollingUpdate:
      maxSurge: 20%
      maxUnavailable: 20%
    type: RollingUpdate
  selector:
    matchLabels:
      app: httpd
  template:
    metadata:
      labels:
        app: httpd
    spec:
      containers:
      - name: httpd
        image: hyhxy0206/apache:v1
        imagePullPolicy: IfNotPresent

# maxSurge: 20%: during an update, up to 20% (1/5) of the replica count may be started as extra Pods
# maxUnavailable: 20%: during an update, up to 20% (1/5) of the replica count may be unavailable
# replicas: 4: run 4 Pod replicas

[root@master manifest]# kubectl apply -f apache.yaml
deployment.apps/web created

[root@master manifest]# kubectl get pods
NAME                   READY   STATUS    RESTARTS   AGE
web-64bbf59bf8-8c8q2   1/1     Running   0          11s
web-64bbf59bf8-ll6tg   1/1     Running   0          11s
web-64bbf59bf8-qk5j7   1/1     Running   0          11s
web-64bbf59bf8-vkrr6   1/1     Running   0          11s

//Edit apache.yaml with vim and change the image tag to v2, then apply it to upgrade directly

[root@master manifest]# kubectl apply -f apache.yaml
deployment.apps/web configured

[root@master manifest]# kubectl get pods
NAME                   READY   STATUS              RESTARTS   AGE
web-64bbf59bf8-8c8q2   1/1     Running             0          77s
web-64bbf59bf8-ll6tg   1/1     Terminating         0          77s
web-64bbf59bf8-qk5j7   1/1     Running             0          77s
web-64bbf59bf8-vkrr6   1/1     Terminating         0          77s
web-6d5489d59f-j42tq   1/1     Running             0          6s
web-6d5489d59f-ppgn9   1/1     Running             0          3s
web-6d5489d59f-r4kjm   0/1     ContainerCreating   0          1s
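To see how the percentages in the manifest translate into Pod counts, the following sketch applies the rounding Kubernetes documents for these two fields: `maxSurge` is rounded up and `maxUnavailable` is rounded down. The function name `rolling_update_bounds` is hypothetical, and the sketch deliberately ignores the special case in which both values resolve to zero.

```python
def rolling_update_bounds(replicas: int, max_surge_pct: int, max_unavailable_pct: int):
    """Return (max total Pods, min available Pods) allowed during a rolling update.

    Integer arithmetic is used to avoid floating-point rounding surprises.
    """
    surge = -(-replicas * max_surge_pct // 100)          # ceiling division: rounds up
    unavailable = replicas * max_unavailable_pct // 100  # floor division: rounds down
    return replicas + surge, replicas - unavailable


max_total, min_available = rolling_update_bounds(replicas=4, max_surge_pct=20, max_unavailable_pct=20)
print(max_total, min_available)
```

Under these assumptions, 4 replicas at 20% allow one extra Pod and no unavailable Pods, which is consistent with the listing above: leaving out the Terminating Pods, five Pods exist and four of them are Running.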

Deployment revision history and rollback

[root@master manifest]# vim apache.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web
spec:
  replicas: 4
  revisionHistoryLimit: 4   # number of old revisions to keep
  strategy:
    rollingUpdate:
      maxSurge: 20%
      maxUnavailable: 20%
    type: RollingUpdate
  selector:
    matchLabels:
      app: httpd
  template:
    metadata:
      labels:
        app: httpd
    spec:
      containers:
      - name: httpd
        image: hyhxy0206/apache:v1
        imagePullPolicy: IfNotPresent

[root@master manifest]# kubectl apply -f apache.yaml
deployment.apps/web created

[root@master manifest]# vim apache.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web
spec:
  replicas: 4
  revisionHistoryLimit: 4
  strategy:
    rollingUpdate:
      maxSurge: 20%
      maxUnavailable: 20%
    type: RollingUpdate
  selector:
    matchLabels:
      app: httpd
  template:
    metadata:
      labels:
        app: httpd
    spec:
      containers:
      - name: httpd
        image: hyhxy0206/apache:v2   # image tag changed from v1 to v2
        imagePullPolicy: IfNotPresent

[root@master manifest]# kubectl apply -f apache.yaml
deployment.apps/web configured

//View the revision history
[root@master manifest]# kubectl rollout history deployment/web
deployment.apps/web
REVISION  CHANGE-CAUSE
1         <none>
2         <none>

# revisionHistoryLimit is 4, but only 2 revisions exist so far

//Roll back to the previous revision: revision 1 disappears from the history because it is recorded again as revision 3
[root@master manifest]# kubectl rollout undo deployment/web
deployment.apps/web rolled back

[root@master manifest]# kubectl rollout history deployment/web
deployment.apps/web
REVISION  CHANGE-CAUSE
2         <none>
3         <none>

//Roll back to a specified revision: revision 2 disappears because it is recorded again as revision 4
[root@master manifest]# kubectl rollout undo deploy/web --to-revision 2
deployment.apps/web rolled back

[root@master manifest]# kubectl rollout history deployment/web
deployment.apps/web
REVISION  CHANGE-CAUSE
3         <none>
4         <none>
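The renumbering seen in the history output above can be modelled in a few lines. This is only a sketch of the observed behaviour, not the controller's implementation: the `rollback` helper is hypothetical, and it does not model `revisionHistoryLimit`.

```python
from typing import List, Optional


def rollback(history: List[int], to_revision: Optional[int] = None) -> List[int]:
    """Model `kubectl rollout undo` on a list of revision numbers in ascending order.

    The revision being restored is removed from the history and recorded
    again under the next free revision number.
    """
    if to_revision is None:
        to_revision = history[-2]  # default: the revision before the current one
    if to_revision not in history:
        raise ValueError(f"revision {to_revision} not found")
    next_revision = history[-1] + 1
    return [rev for rev in history if rev != to_revision] + [next_revision]


history = [1, 2]
after_undo = rollback(history)                      # kubectl rollout undo deployment/web
after_to_revision = rollback(after_undo, 2)         # kubectl rollout undo ... --to-revision 2
print(after_undo, after_to_revision)
```

The two results correspond to the REVISION columns printed after each rollback in the transcript.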

ReplicaSet (Replica Controller)

The purpose of a ReplicaSet is to maintain a stable set of replica Pods running at any given time. It is therefore often used to guarantee the availability of a specified number of identical Pods.

How ReplicaSet works

A ReplicaSet is defined by a set of fields: a selector that identifies the Pods it can acquire, a replica count that states how many Pods it should maintain, and a Pod template that describes the new Pods it should create to reach that count. A ReplicaSet fulfills its purpose by creating and deleting Pods as needed until the number of replicas matches the desired value. When a ReplicaSet needs to create a new Pod, it uses the provided Pod template.

A ReplicaSet is linked to its Pods through the Pods' metadata.ownerReferences field, which specifies the resource that owns the current object. Every Pod acquired by a ReplicaSet carries the identity of its owning ReplicaSet in its ownerReferences field. Through this link the ReplicaSet knows the state of the Pods it maintains and plans its actions accordingly.

A ReplicaSet uses its selector to identify the Pods to acquire. If a Pod has no OwnerReference, or its OwnerReference is not a controller, and it matches the selector of a ReplicaSet, the Pod is immediately acquired by that ReplicaSet.
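The acquisition rule just described can be sketched as a small predicate. It assumes Pods are plain dictionaries and the selector is equality-based; the helper names and the sample Pods are hypothetical, and only the `ownerReferences` entry with `controller: true` mirrors the real field layout.

```python
def is_controlled(pod: dict) -> bool:
    """True if any ownerReference on the Pod is marked as its controller."""
    return any(ref.get("controller", False) for ref in pod.get("ownerReferences", []))


def can_acquire(pod: dict, selector: dict) -> bool:
    """A ReplicaSet may acquire a Pod that matches its selector and has no controller."""
    labels = pod.get("labels", {})
    matches = all(labels.get(key) == value for key, value in selector.items())
    return matches and not is_controlled(pod)


selector = {"app": "httpd"}
orphan = {"name": "manual-httpd", "labels": {"app": "httpd"}}
owned = {
    "name": "web-abc",
    "labels": {"app": "httpd"},
    "ownerReferences": [{"kind": "ReplicaSet", "name": "other-rs", "controller": True}],
}
unrelated = {"name": "db", "labels": {"app": "mysql"}}

acquired = [p["name"] for p in (orphan, owned, unrelated) if can_acquire(p, selector)]
print(acquired)
```

One practical consequence is that a bare Pod whose labels happen to match a ReplicaSet selector can be taken over by that ReplicaSet, so labels on manually created Pods deserve some care.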

When to use ReplicaSet

A ReplicaSet ensures that a specified number of Pod replicas are running at any given time. However, a Deployment is a higher-level concept that manages ReplicaSets and provides declarative updates to Pods along with many other useful features. We therefore recommend using a Deployment instead of using a ReplicaSet directly, unless you need custom update orchestration or do not need updates at all.

In practice, this means you may never need to manipulate ReplicaSet objects: use a Deployment instead and define your application in its spec section.

//Each time a Deployment rolls out a new version, it creates a ReplicaSet (RS) that is kept as a record for rollback

[root@master manifest]# kubectl get rs
NAME             DESIRED   CURRENT   READY   AGE
web-64bbf59bf8   4         4         4       9m57s
web-6d5489d59f   0         0         0       9m37s

[root@master manifest]# kubectl rollout history deployment web
deployment.apps/web
REVISION  CHANGE-CAUSE
4         <none>
5         <none>

//Change Version Number

[root@master manifest]# vim apache.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web
spec:
  replicas: 4
  revisionHistoryLimit: 4
  strategy:
    rollingUpdate:
      maxSurge: 20%
      maxUnavailable: 20%
    type: RollingUpdate
  selector:
    matchLabels:
      app: httpd
  template:
    metadata:
      labels:
        app: httpd
    spec:
      containers:
      - name: httpd
        image: hyhxy0206/apache:v1
        imagePullPolicy: IfNotPresent

[root@master manifest]# kubectl apply -f apache.yaml
deployment.apps/web configured

[root@master manifest]# kubectl get rs
NAME             DESIRED   CURRENT   READY   AGE
web-64bbf59bf8   4         4         4       10m
web-6d5489d59f   0         0         0       10m

DaemonSet (ensure every Node runs the same Pod)

A DaemonSet ensures that all (or some) nodes run a copy of a Pod. When a node joins the cluster, a Pod is added to it. When a node is removed from the cluster, its Pod is garbage collected. Deleting a DaemonSet deletes all the Pods it created.

Some typical uses of DaemonSet:

- Run the cluster daemon on each node

- Run the log collection daemon on each node

- Run the monitoring daemon on each node

In the simplest case, one DaemonSet covering all nodes is used for each type of daemon. A slightly more complex setup deploys multiple DaemonSets for the same type of daemon, each with different flags and different memory and CPU requirements for different hardware types.
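The per-node behaviour described above can be sketched as a reconciliation step that compares the current node list with the nodes that already have a daemon Pod. This is a simplified model under stated assumptions: node and Pod names are hypothetical, node selection rules are ignored, and `reconcile` is not a real API.

```python
def reconcile(nodes, pods_by_node):
    """Return (nodes that need a new daemon Pod, Pods to delete).

    nodes: iterable of node names currently in the cluster.
    pods_by_node: dict mapping node name -> name of the daemon Pod running there.
    """
    node_set = set(nodes)
    covered = set(pods_by_node)
    to_create = sorted(node_set - covered)                           # new nodes without a Pod
    to_delete = sorted(pods_by_node[n] for n in covered - node_set)  # Pods on removed nodes
    return to_create, to_delete


running = {"node1": "daemon-a", "node2": "daemon-b"}

# A third node joins the cluster: it should receive a Pod.
joined = reconcile(["node1", "node2", "node3"], running)

# node2 leaves the cluster: its Pod should be cleaned up.
left = reconcile(["node1"], running)

print(joined, left)
```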

[root@master manifest]# vim filebeat.yaml
---
apiVersion: apps/v1
kind: DaemonSet            # resource type
metadata:
  name: filebeat
  namespace: kube-system   # namespace
spec:
  selector:
    matchLabels:
      name: filebeat
  template:
    metadata:
      labels:
        name: filebeat
    spec:
      containers:
      - name: log
        image: elastic/filebeat:7.16.2
        imagePullPolicy: IfNotPresent

[root@master manifest]# kubectl apply -f filebeat.yaml
daemonset.apps/filebeat created

[root@master manifest]# kubectl get pods -n kube-system -o wide | grep filebeat
filebeat-bcxq4   1/1   Running   0   7m58s   10.244.1.23   node2.example.com   <none>   <none>
filebeat-zzlfl   1/1   Running   0   7m58s   10.244.1.70   node1.example.com   <none>   <none>

Job (one-time task)

A Job creates one or more Pods and ensures that a specified number of them terminate successfully. As Pods complete successfully, the Job tracks the number of successful completions. When that number reaches the specified threshold, the task (that is, the Job) is complete. Deleting a Job cleans up all the Pods it created.

In a simple case, you create one Job object to reliably run one Pod to completion. The Job object starts a new Pod if the first Pod fails or is deleted, for example because of a node hardware failure or a node reboot.

You can also use a Job to run multiple Pods in parallel.
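The success counting described above can be sketched as a small simulation. It is a simplified model, not the Job controller: Pod outcomes are supplied as a list of booleans, `run_job` is a hypothetical helper, and the parameters borrow the names of the real `completions` and `backoffLimit` fields with the assumption that the Job fails once failures exceed the limit.

```python
def run_job(outcomes, completions=1, backoff_limit=4):
    """Replay Pod outcomes (True = succeeded, False = failed) against a Job.

    Returns (status, succeeded, failed). A failed Pod is replaced by the next
    outcome in the list until the success threshold or the failure limit is hit.
    """
    succeeded = failed = 0
    for ok in outcomes:
        if ok:
            succeeded += 1
            if succeeded >= completions:
                return "Complete", succeeded, failed
        else:
            failed += 1
            if failed > backoff_limit:
                return "Failed", succeeded, failed
    return "Running", succeeded, failed


# Two Pods fail (for example after a node reboot), the third one succeeds.
recovered = run_job([False, False, True])

# Every Pod fails: the Job gives up after the failure limit is exceeded.
gave_up = run_job([False] * 6)

print(recovered, gave_up)
```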

[root@master ~]# vi job.yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: pi
spec:
  template:
    spec:
      containers:
      - name: pi
        image: perl
        command: ["perl","-Mbignum=bpi","-wle","print bpi(2000)"]
      restartPolicy: Never
  backoffLimit: 4   # number of retries before the Job is marked as failed

[root@master ~]# kubectl apply -f job.yaml
job.batch/pi created

[root@master ~]# kubectl get pods -o wide
NAME       READY   STATUS              RESTARTS   AGE   IP       NODE     NOMINATED NODE   READINESS GATES
pi-dtgj6   0/1     ContainerCreating   0          8s    <none>   node01   <none>           <none>

//The status changes to Completed once the process has finished running
[root@master ~]# kubectl get pods -o wide
NAME       READY   STATUS      RESTARTS   AGE    IP            NODE     NOMINATED NODE   READINESS GATES
pi-dtgj6   0/1     Completed   0          5m7s   10.244.1.23   node01   <none>           <none>

CronJob (scheduled task)

A CronJob creates Jobs on a repeating, time-based schedule.

A CronJob object is like one line of a crontab (cron table) file. It is written in Cron format and periodically runs a Job on the given schedule.

Caution:

All CronJob schedule: times are based on the time zone of kube-controller-manager.

If your control plane runs kube-controller-manager in a Pod or a bare container, the time zone set for that container determines the time zone used by the CronJob controller.

When creating the manifest for a CronJob resource, make sure the name you provide is a valid DNS subdomain name. The name must not exceed 52 characters, because the CronJob controller automatically appends 11 characters to the name provided, and a Job name cannot be longer than 63 characters.
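The 52-character limit follows directly from the two numbers in the paragraph above: 63 minus the 11 appended characters. A minimal check might look like the sketch below; the helper name `cronjob_name_fits` is hypothetical, and it only checks length, not DNS subdomain validity.

```python
MAX_JOB_NAME_LENGTH = 63   # maximum length of a Job name
APPENDED_CHARACTERS = 11   # characters the CronJob controller appends to the name
MAX_CRONJOB_NAME_LENGTH = MAX_JOB_NAME_LENGTH - APPENDED_CHARACTERS


def cronjob_name_fits(name: str) -> bool:
    """True if Jobs generated from this CronJob name stay within the Job name limit."""
    return len(name) <= MAX_CRONJOB_NAME_LENGTH


print(MAX_CRONJOB_NAME_LENGTH)
print(cronjob_name_fits("hello"))
print(cronjob_name_fits("x" * 53))
```

A generated name such as `hello-1640363760` shows the shape of the suffix: one hyphen plus ten digits, which adds up to the 11 appended characters.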

[root@master manifest]# vim cronjob.yaml
# batch/v1beta1 was removed in Kubernetes 1.25; clusters on 1.21 or later can use batch/v1
apiVersion: batch/v1beta1
kind: CronJob
metadata:
  name: hello
spec:
  schedule: "*/1 * * * *"   # minute, hour, day of month, month, day of week
  jobTemplate:
    spec:
      template:
        spec:
          containers:
          - name: hello
            image: busybox
            args:
            - /bin/sh
            - -c
            - date;echo Hello aliang
          restartPolicy: OnFailure

[root@master manifest]# kubectl apply -f cronjob.yaml
cronjob.batch/hello created

[root@master manifest]# kubectl get pods
NAME       READY   STATUS              RESTARTS   AGE
pi-lrgcs   0/1     ContainerCreating   0          10m

//After waiting one minute
[root@master manifest]# kubectl get pods
NAME                     READY   STATUS              RESTARTS   AGE
hello-1640363760-gvktn   0/1     ContainerCreating   0          25s
pi-lrgcs                 0/1     ContainerCreating   0          11m
