Do you know how timers work? They are one of the core facilities of Linux. For example, when a thread calls sleep(5000) (5000 ms) and wakes up 5 s later, how does the CPU know that 5 s have passed? Likewise, an nginx reverse proxy needs to check at regular intervals whether each client is still connected; if the connection has dropped, there is no point in spending resources to maintain it. So how is this kind of fixed-interval heartbeat check implemented?

1. (1) jiffies is the core time-related variable in Linux. In include/linux/raid/pq.h it is defined as follows:

```c
#define jiffies raid6_jiffies()
#define HZ 1000

/* Returns the current time in milliseconds, i.e. a timestamp */
static inline uint32_t raid6_jiffies(void)
{
	struct timeval tv;

	gettimeofday(&tv, NULL);
	return tv.tv_sec * 1000        /* tv_sec is seconds; multiply by 1000 to get ms */
	       + tv.tv_usec / 1000;    /* tv_usec is microseconds; divide by 1000 to get ms */
}
```

This short snippet carries two key points:

- The macro HZ is defined as 1000. Since HZ is related to time, you might guess it means milliseconds, because 1 s = 1000 ms. Strictly, though, HZ is the number of clock interrupts (ticks) generated per second: with HZ = 1000 there are 1000 clock interrupts per second, i.e. one every 1 ms. In a real kernel HZ is a build-time option (CONFIG_HZ, typically 100, 250, 300 or 1000).

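- gettimeofday stores the current timestamp in a struct timeval, whose two fields hold seconds and microseconds respectively; raid6_jiffies converts them to milliseconds and returns that. So isn't this just a timestamp? Here it is: this definition belongs to the user-space branch of pq.h, used when the RAID6 code is compiled outside the kernel for testing. Inside the kernel, jiffies is a global counter that the timer interrupt increments on every tick.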

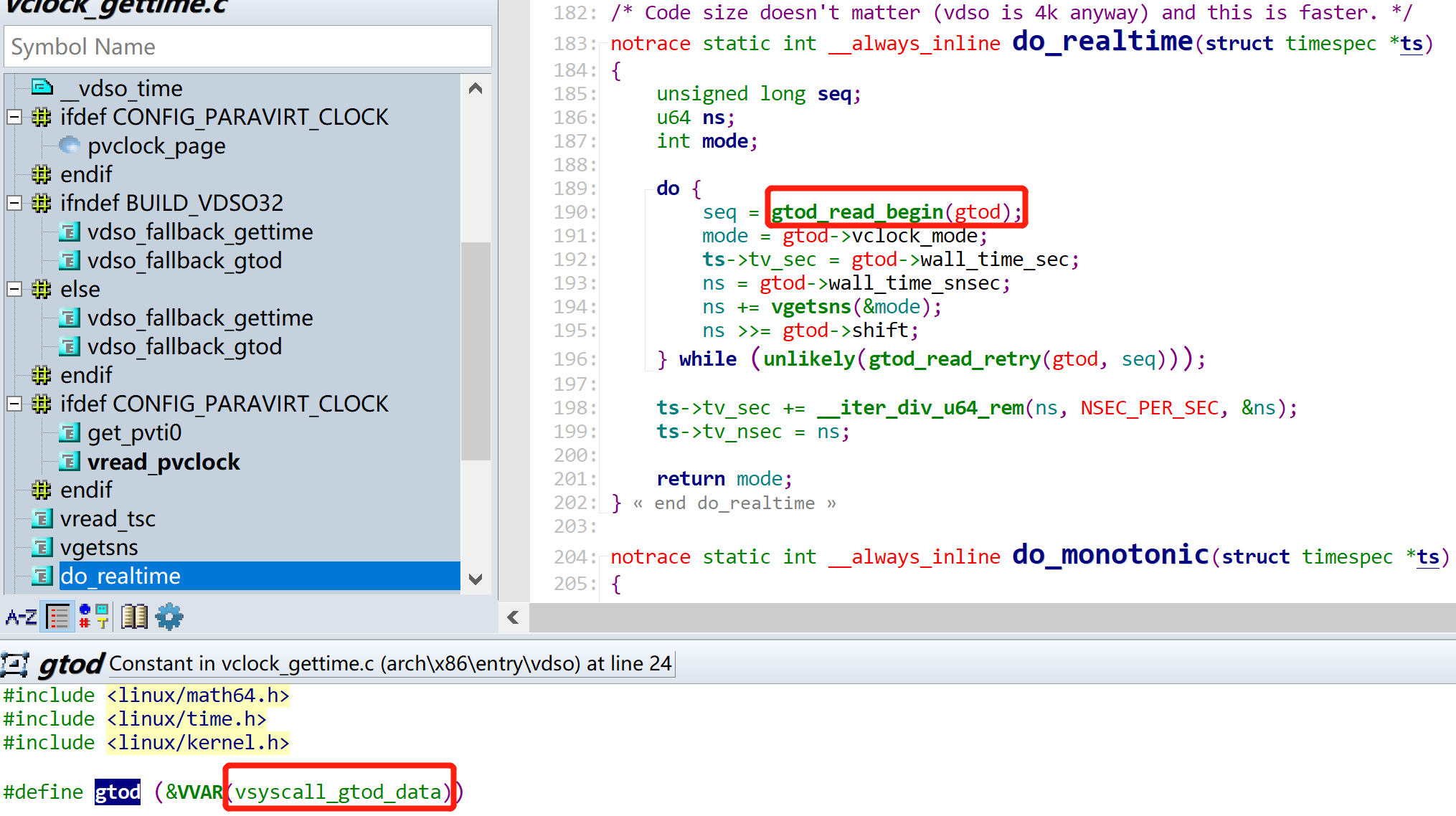

Digging further into gettimeofday shows that the current time ultimately comes from the kernel through the gettimeofday system call path (on x86 usually served by the vDSO without a full kernel entry), and the core data structure behind it is vsyscall_gtod_data.
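To make the relationship between HZ and tick length concrete, here is a small user-space sketch. ms_to_ticks and tick_us are hypothetical helpers, not kernel APIs; ms_to_ticks rounds up, as the kernel's msecs_to_jiffies() does, so a timer never fires earlier than requested.

```c
#include <stdint.h>

/* Hypothetical helper: convert a millisecond interval into ticks for a
 * given HZ, rounding up so the timer never fires early. */
static uint64_t ms_to_ticks(uint64_t ms, unsigned int hz)
{
	return (ms * hz + 999) / 1000;
}

/* Hypothetical helper: length of one tick in microseconds for a given HZ
 * (integer division, so HZ = 300 gives 3333). */
static unsigned int tick_us(unsigned int hz)
{
	return 1000000u / hz;
}
```

With HZ = 250 a tick lasts 4 ms, so a 10 ms request becomes 3 ticks, i.e. 12 ms of actual delay. This rounding is the 1/HZ precision limit of tick-based timers.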

On a 32-bit system jiffies is 32 bits wide, so its maximum value is 2^32 - 1; one more tick overflows it and it wraps around to 0. With HZ = 1000 that takes 2^32 ms, about 49.7 days. To cope with this wraparound, the Linux kernel provides dedicated comparison macros:

```c
#define time_after(a,b)		\
	(typecheck(unsigned long, a) && \
	 typecheck(unsigned long, b) && \
	 ((long)(b) - (long)(a) < 0))
#define time_before(a,b)	time_after(b,a)

#define time_after_eq(a,b)	\
	(typecheck(unsigned long, a) && \
	 typecheck(unsigned long, b) && \
	 ((long)(a) - (long)(b) >= 0))
#define time_before_eq(a,b)	time_after_eq(b,a)
```
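The trick behind time_after can be tried in user space. The sketch below is a hypothetical 32-bit re-creation (time_after32 and time_before32 are illustrative names, not kernel macros): it subtracts in unsigned arithmetic, which wraps modulo 2^32, and then reinterprets the difference as signed. It gives the right answer as long as the two timestamps are less than 2^31 ticks apart. It subtracts before casting, as newer kernels do, which avoids signed overflow.

```c
#include <stdint.h>

/* Hypothetical 32-bit version of time_after(a, b): true if a is later than b. */
static int time_after32(uint32_t a, uint32_t b)
{
	/* b - a wraps modulo 2^32; viewed as signed it is negative exactly
	 * when a is later than b (for values less than 2^31 ticks apart). */
	return (int32_t)(b - a) < 0;
}

/* Hypothetical 32-bit version of time_before(a, b). */
static int time_before32(uint32_t a, uint32_t b)
{
	return time_after32(b, a);
}
```

For example, with before = 0xFFFFFFF0 and after = before + 32 (which wraps to 0x10), the naive comparison after > before is false, while time_after32(after, before) is true.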

(2) A timer, in essence, does a specific job at a specific time. For example, an office drone gets up at 7:20 in the morning, catches the bus to work at 7:50, and switches on the computer at 9:00 to start the daily grind. How does the Linux kernel associate a specific time with a specific action? In C++ you could write a class whose member variable is the time and whose member function is the action; the kernel is written in C, where a structure holding a time and a function pointer does the same job. This is the timer_list structure:

```c
struct timer_list {
	/*
	 * All fields that change during normal runtime grouped to the
	 * same cacheline
	 */
	struct hlist_node	entry;              /* links the timer into a list */
	unsigned long		expires;            /* expiry in jiffies, e.g. jiffies + 5*HZ fires 5 s from now */
	void			(*function)(unsigned long); /* callback run when the timer expires */
	unsigned long		data;               /* argument passed to the callback */
	u32			flags;

#ifdef CONFIG_TIMER_STATS
	int			start_pid;
	void			*start_site;
	char			start_comm[16];
#endif
#ifdef CONFIG_LOCKDEP
	struct lockdep_map	lockdep_map;
#endif
};
```

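With the structure defined, how is it used? The demo below shows typical usage. Note that the timer_list above comes from an older kernel, where the callback takes an unsigned long data argument; since Linux 4.15 the callback receives the struct timer_list pointer itself and the data field is gone, which is the form timer_setup() in the demo expects.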

```c
#include <linux/module.h>
#include <linux/timer.h>
#include <linux/jiffies.h>

static void time_pre(struct timer_list *timer);

static struct timer_list mytimer;
/* Alternatively: static DEFINE_TIMER(mytimer, time_pre); */

static void time_pre(struct timer_list *timer)
{
	printk("%s\n", __func__);
	/* 2.2 For a periodic task, re-arm the timer from its own callback */
	mytimer.expires = jiffies + 500 * HZ / 1000;   /* run again in 500 ms */
	mod_timer(&mytimer, mytimer.expires);
}

/* Driver entry points */
static int __init chr_init(void)
{
	timer_setup(&mytimer, time_pre, 0);            /* 1. initialise */
	mytimer.expires = jiffies + 500 * HZ / 1000;   /* first expiry in 0.5 s */
	add_timer(&mytimer);                           /* 2.1 register the timer with the kernel */
	printk("init success\n");
	return 0;
}

static void __exit chr_exit(void)
{
	/* 3. Release the timer. del_timer_sync() waits for a callback running
	 * on another CPU to finish and then removes the timer even if that
	 * callback re-armed it; a plain timer_pending()/del_timer() check can
	 * miss that case. Newer kernels name it timer_delete_sync(). */
	del_timer_sync(&mytimer);
	printk("exit success\n");
}

module_init(chr_init);
module_exit(chr_exit);
MODULE_LICENSE("GPL");
MODULE_AUTHOR("XXX");
MODULE_DESCRIPTION("a simple char device example");
```

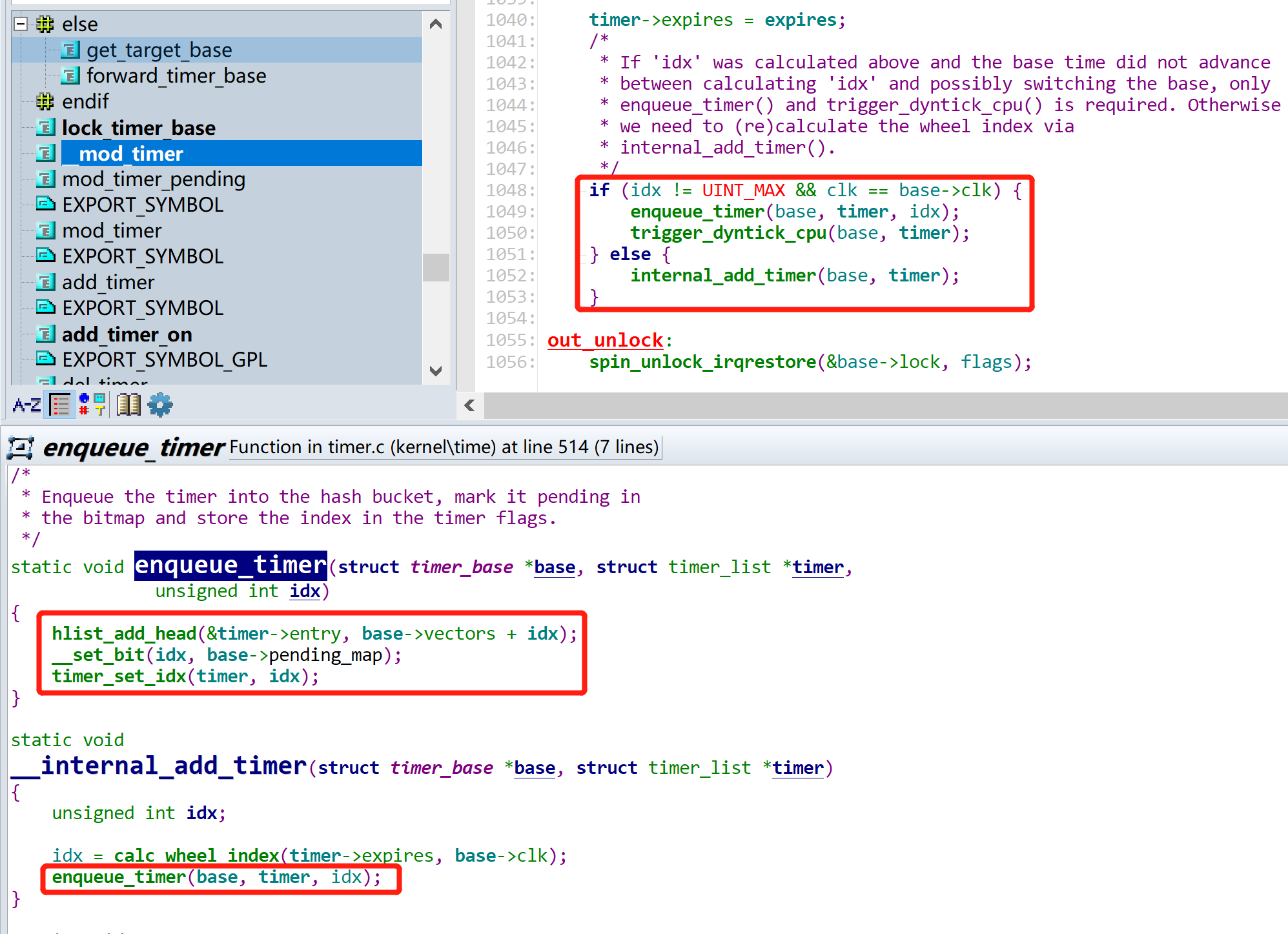
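In newer kernels (roughly 4.15 onward) the timer_list callback receives only a struct timer_list pointer, as time_pre() in the demo does, and the old data field is gone. A driver therefore typically embeds the timer in its own structure and recovers that structure with container_of() (the kernel wraps this as from_timer()). The sketch below re-creates the pattern in user space; sim_timer, my_device, heartbeat_cb and sim_timer_check are hypothetical names, and container_of is a local copy of the well-known macro, not the kernel header.

```c
#include <stddef.h>

/* Local user-space copy of the classic container_of() macro. */
#define container_of(ptr, type, member) \
	((type *)((char *)(ptr) - offsetof(type, member)))

/* Hypothetical stand-in for struct timer_list: an expiry plus a callback. */
struct sim_timer {
	unsigned long expires;
	void (*function)(struct sim_timer *t);
};

/* Hypothetical driver state that embeds its timer. */
struct my_device {
	int heartbeats;
	struct sim_timer timer;
};

/* The callback only gets the timer pointer and walks back to its owner. */
static void heartbeat_cb(struct sim_timer *t)
{
	struct my_device *dev = container_of(t, struct my_device, timer);

	dev->heartbeats++;
}

/* Run the callback if the timer has expired; returns 1 if it ran. */
static int sim_timer_check(struct sim_timer *t, unsigned long now)
{
	if (now < t->expires)
		return 0;
	t->function(t);
	return 1;
}
```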

The general idea: create a timer_list structure and initialise it, register it with add_timer, and the kernel calls the callback when the expiry time arrives. The idea is simple. The core functions here are add_timer and mod_timer, and both end up calling __mod_timer. The source of that function shows that timers are organised in queues (linked lists). So where is the legendary red-black tree?

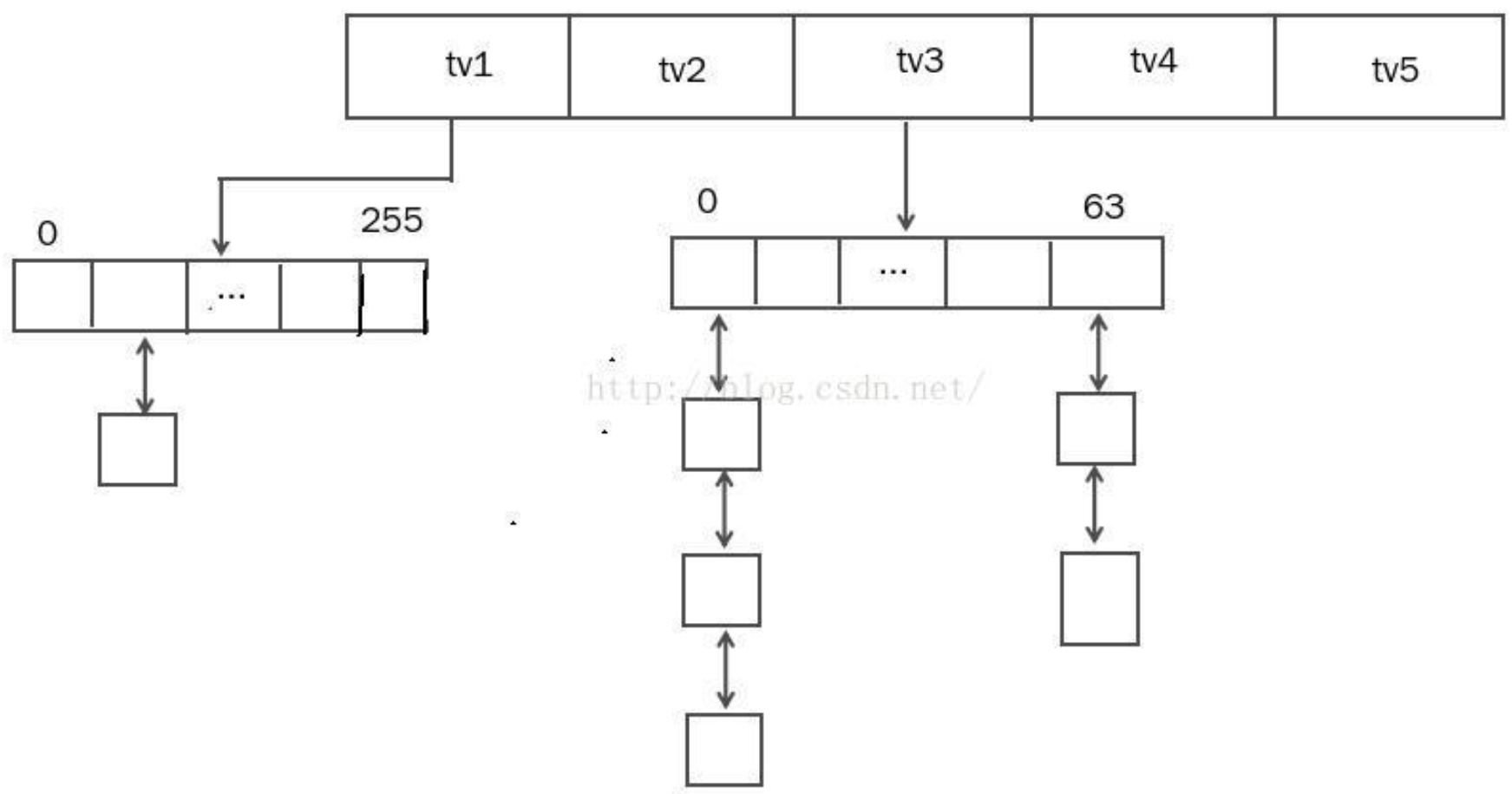
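The list-based idea can be sketched in user space. This is a deliberate simplification, not the kernel's code: lr_timer, sim_mod_timer and sim_run_timers are hypothetical, timers sit on one unsorted list, and every tick scans the whole list, which is exactly the O(N)-per-tick cost a timer wheel is designed to avoid. sim_mod_timer mimics the documented return value of mod_timer(): 0 if the timer was inactive, 1 if it was already pending.

```c
#include <stddef.h>

/* Hypothetical, simplified timer: expiry in ticks plus a callback. */
struct lr_timer {
	unsigned long expires;
	void (*function)(struct lr_timer *t);
	int pending;                  /* 1 while the timer is on the list */
	struct lr_timer *next;
};

static struct lr_timer *timer_list_head;   /* unsorted singly linked list */

/* Like mod_timer(): (re)arm a timer; returns 1 if it was already pending. */
static int sim_mod_timer(struct lr_timer *t, unsigned long expires)
{
	int was_pending = t->pending;

	t->expires = expires;
	if (!was_pending) {
		t->next = timer_list_head;
		timer_list_head = t;
		t->pending = 1;
	}
	return was_pending;
}

/* Called on every tick: scan the whole list (O(N)) and run expired timers.
 * A callback may re-arm itself, but only with an expiry later than now. */
static int sim_run_timers(unsigned long now)
{
	struct lr_timer **pp = &timer_list_head;
	int ran = 0;

	while (*pp) {
		struct lr_timer *t = *pp;

		if (t->expires <= now) {
			*pp = t->next;        /* unlink before running the callback */
			t->pending = 0;
			t->function(t);
			ran++;
		} else {
			pp = &t->next;
		}
	}
	return ran;
}
```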

All of the timers above rely on HZ, so they are called low-resolution timers; as the name suggests, their precision is limited. Low-resolution timers are managed with a timer wheel. Under the timer wheel, timers are not kept on a single linked list. To make access efficient while using as little CPU as possible, the kernel manages them with five arrays of linked lists (similar in spirit to the O(1) process scheduler, which splits one queue into several by priority), and the five arrays carry into each other like the hands of a watch. tv1 holds timers that expire between timer_jiffies and timer_jiffies + 255, i.e. a relative range of 0-255 ticks. Several timers can expire on the same tick; they are chained together in the same slot's linked list and processed together when that tick arrives. tv2 has 64 slots, each covering 256 ticks, so its range is 256 to 256 × 64 - 1 (2^14 - 1). tv3, tv4 and tv5 follow the same pattern. The range of each array is:

| Array | Range of (expires - timer_jiffies), in ticks |
|---|---|
| tv1 | 0 to 2^8 - 1 |
| tv2 | 2^8 to 2^14 - 1 |
| tv3 | 2^14 to 2^20 - 1 |
| tv4 | 2^20 to 2^26 - 1 |
| tv5 | 2^26 to 2^32 - 1 |
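The placement rule in the table can be written as a small function. This is a user-space sketch modeled on the classic (pre-4.8) cascading wheel with 32-bit counters; wheel_place and its constants are illustrative. Note that the slot index comes from the absolute expiry value, not from the distance, so a slot keeps its meaning as timer_jiffies advances. A distance of 2^31 ticks or more reads as negative and is treated as already expired, mirroring the kernel's signed check, so in this sketch tv5 effectively ends at 2^31 - 1.

```c
#include <stdint.h>

#define TVR_BITS 8                        /* tv1: 256 slots */
#define TVN_BITS 6                        /* tv2..tv5: 64 slots each */
#define TVR_MASK ((1u << TVR_BITS) - 1)
#define TVN_MASK ((1u << TVN_BITS) - 1)

/* Return the wheel level (1..5) for a timer and store its slot in *slot.
 * 'base' plays the role of base->timer_jiffies. */
static int wheel_place(uint32_t expires, uint32_t base, unsigned int *slot)
{
	uint32_t idx = expires - base;        /* ticks until expiry, wraps safely */

	if ((int32_t)idx < 0) {               /* already expired: next tick's slot */
		*slot = base & TVR_MASK;
		return 1;
	}
	if (idx < (1u << TVR_BITS)) {         /* tv1: one slot per tick */
		*slot = expires & TVR_MASK;
		return 1;
	}
	for (int level = 2; level <= 5; level++) {
		unsigned int shift = TVR_BITS + (level - 2) * TVN_BITS;

		/* tv5 takes whatever is left; the short-circuit avoids 1u << 32 */
		if (level == 5 || idx < (1u << (shift + TVN_BITS))) {
			*slot = (expires >> shift) & TVN_MASK;
			return level;
		}
	}
	return -1;                            /* not reached */
}
```

For instance, with timer_jiffies = 0 an expiry of 300 lands in tv2, slot 1 (300 >> 8), and an expiry of 2^14 lands in tv3, slot 1.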

The overall structure of the timer wheel is shown below:

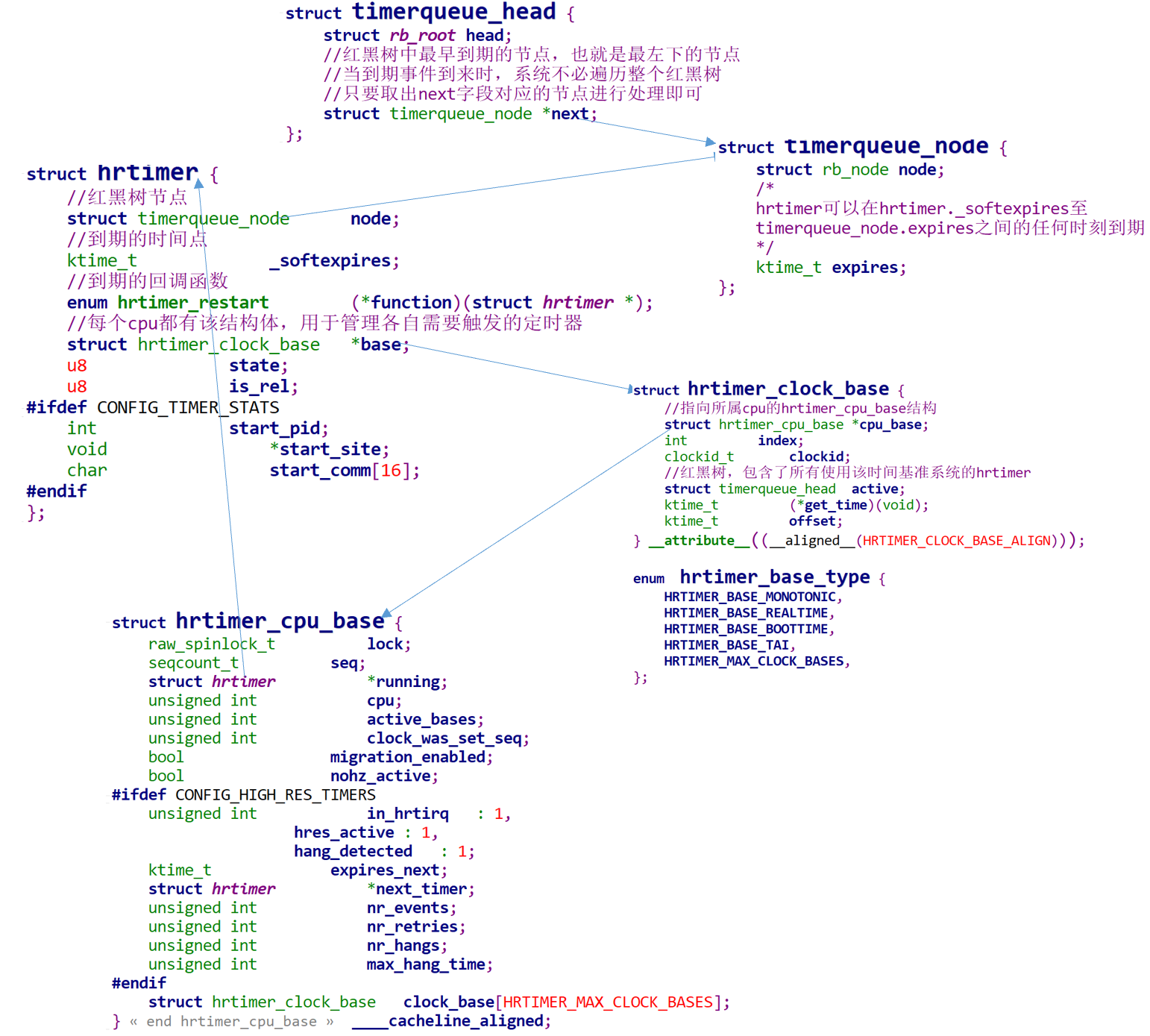

2. (1) Low-resolution timers are no longer adequate for some demanding scenarios (such as the watchdog, USB, Ethernet, block device and KVM subsystems). To improve precision while staying compatible with older kernels, a new timer called hrtimer was designed. Several structures work together with hrtimer; to give an intuitive picture of their relationships, they are shown in the following figure:

(2) The hrtimer structure is similar to timer_list: both have an expiry field and a callback field. The relationships among these structures are fairly complex, and some are even nested inside one another. How does the Linux kernel actually use them?

Whatever the timer type, the first step is to create a timer instance that mainly records the expiry time and the callback, so __hrtimer_init is called first to initialise the timer. The code is as follows:

```c
static void __hrtimer_init(struct hrtimer *timer, clockid_t clock_id,
			   enum hrtimer_mode mode)
{
	struct hrtimer_cpu_base *cpu_base;
	int base;

	memset(timer, 0, sizeof(struct hrtimer));

	/* Initialise the hrtimer's base field */
	cpu_base = raw_cpu_ptr(&hrtimer_bases);

	if (clock_id == CLOCK_REALTIME && mode != HRTIMER_MODE_ABS)
		clock_id = CLOCK_MONOTONIC;

	base = hrtimer_clockid_to_base(clock_id);
	timer->base = &cpu_base->clock_base[base];
	/* Initialise the red-black tree node */
	timerqueue_init(&timer->node);

#ifdef CONFIG_TIMER_STATS
	timer->start_site = NULL;
	timer->start_pid = -1;
	memset(timer->start_comm, 0, TASK_COMM_LEN);
#endif
}
```

Its core job is to set the base field and initialise the red-black tree node. The node is later inserted into a red-black tree so that timers can then be added, removed, modified and looked up quickly. Insertion into the tree happens in hrtimer_start_range_ns, whose code is as follows:

```c
/**
 * hrtimer_start_range_ns - (re)start an hrtimer on the current CPU
 * @timer:	the timer to be added
 * @tim:	expiry time
 * @delta_ns:	"slack" range for the timer
 * @mode:	expiry mode: absolute (HRTIMER_MODE_ABS) or
 *		relative (HRTIMER_MODE_REL)
 */
void hrtimer_start_range_ns(struct hrtimer *timer, ktime_t tim,
			    u64 delta_ns, const enum hrtimer_mode mode)
{
	struct hrtimer_clock_base *base, *new_base;
	unsigned long flags;
	int leftmost;

	base = lock_hrtimer_base(timer, &flags);

	/* Remove an active timer from the queue; this ends up calling
	 * timerqueue_del() to delete it from the red-black tree */
	remove_hrtimer(timer, base, true);

	/* A relative time must have the current time added,
	 * because absolute times are used internally */
	if (mode & HRTIMER_MODE_REL)
		tim = ktime_add_safe(tim, base->get_time());

	tim = hrtimer_update_lowres(timer, tim, mode);

	/* Set the expiry range [tim, tim + delta_ns] */
	hrtimer_set_expires_range_ns(timer, tim, delta_ns);

	/* Switch the timer base, if necessary: */
	new_base = switch_hrtimer_base(timer, base, mode & HRTIMER_MODE_PINNED);

	timer_stats_hrtimer_set_start_info(timer);

	/* Insert the hrtimer, ordered by expiry time, into the red-black tree
	 * of its clock base. Returns true if this timer is now the earliest
	 * (leftmost) one; ends up calling timerqueue_add() */
	leftmost = enqueue_hrtimer(timer, new_base);
	if (!leftmost)
		goto unlock;

	if (!hrtimer_is_hres_active(timer)) {
		/*
		 * Kick to reschedule the next tick to handle the new timer
		 * on dynticks target.
		 */
		if (new_base->cpu_base->nohz_active)
			wake_up_nohz_cpu(new_base->cpu_base->cpu);
	} else {
		hrtimer_reprogram(timer, new_base);
	}
unlock:
	unlock_hrtimer_base(timer, &flags);
}
```

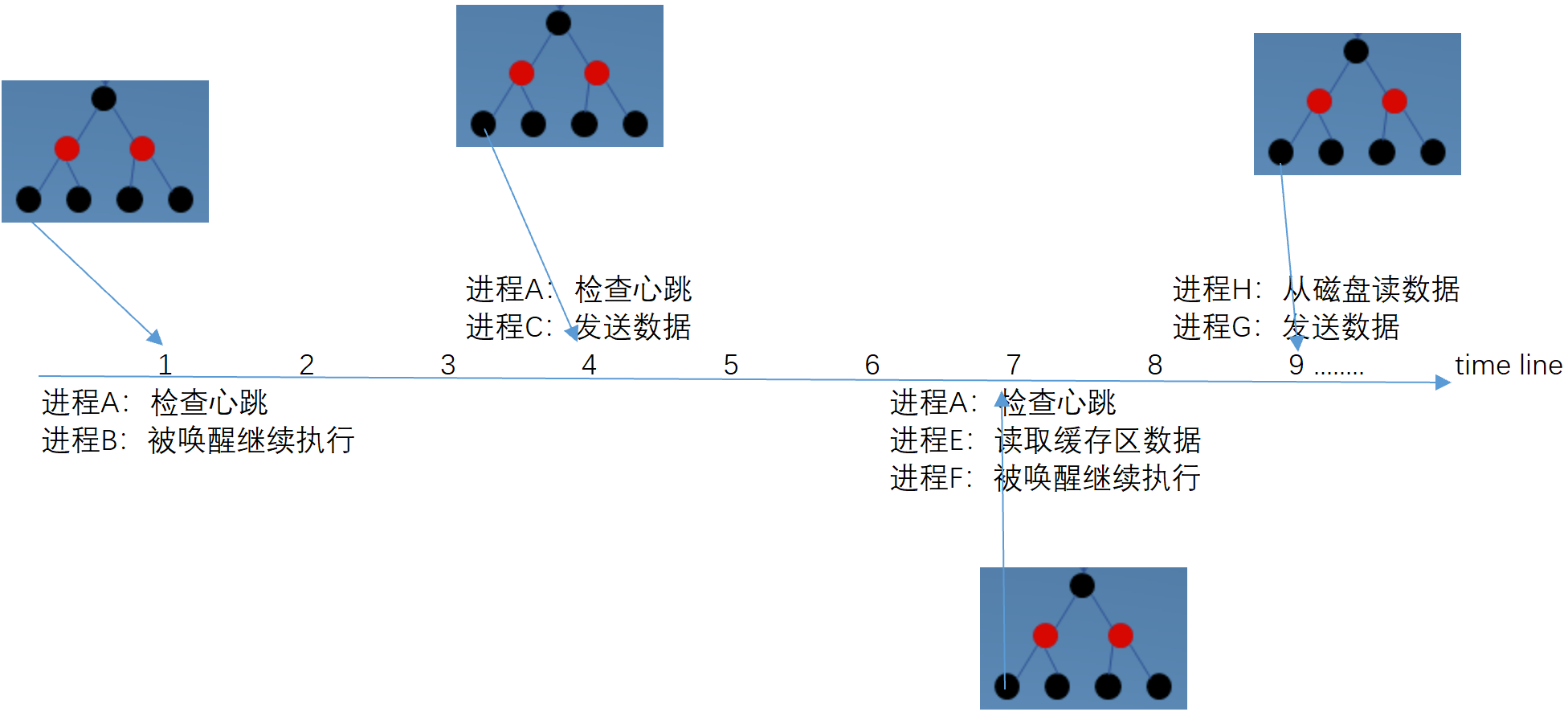
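The expiry bookkeeping in hrtimer_start_range_ns() can be sketched in user space: a relative time is first turned into an absolute one, then the timer gets a window that starts at the soft expiry and ends at soft expiry + delta_ns. __hrtimer_run_queues() runs a timer once the base time reaches its soft expiry. The names here are hypothetical, times are plain int64_t nanoseconds, and add_safe() simply clamps to INT64_MAX, a simplification of ktime_add_safe(), which clamps to a slightly different maximum.

```c
#include <stdint.h>

enum sim_mode { SIM_MODE_ABS, SIM_MODE_REL };

struct sim_hrtimer {
	int64_t softexpires;   /* earliest time the timer may run (ns) */
	int64_t expires;       /* latest time: softexpires + slack (ns) */
};

/* Saturating add in the spirit of ktime_add_safe(): clamp instead of overflow. */
static int64_t add_safe(int64_t a, int64_t b)
{
	if (b > 0 && a > INT64_MAX - b)
		return INT64_MAX;
	return a + b;
}

/* Expiry bookkeeping of hrtimer_start_range_ns(): relative -> absolute,
 * then set the [soft, hard] window. */
static void sim_start_range_ns(struct sim_hrtimer *t, int64_t now, int64_t tim,
			       int64_t delta_ns, enum sim_mode mode)
{
	if (mode == SIM_MODE_REL)
		tim = add_safe(tim, now);
	t->softexpires = tim;
	t->expires = add_safe(tim, delta_ns);
}

/* The expiry test used when running queues: due once soft expiry is reached. */
static int sim_may_run(const struct sim_hrtimer *t, int64_t basenow)
{
	return basenow >= t->softexpires;
}
```

The slack window lets the kernel fire several nearby timers from one interrupt instead of waking up separately for each.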

(3) Once the timers are in the red-black tree, how is the tree used? Anything related to time depends on one mechanism: the clock interrupt. The motherboard has dedicated hardware that sends pulses to the CPU at fixed intervals, the computer's "heartbeat"; on each pulse the CPU can carry out some actions. This mechanism is the clock interrupt. Its best-known job is driving process switching, but it has another very important role: triggering and managing timers. Looking back at the structures and usage above, several processes may need timers to fire at the same moment, as shown in the figure: in second 1, for example, processes A and B both have timers due, and in second 7 processes A, E and F do. How does the operating system know which timers to fire, and when?

This is where the red-black tree shines. On each clock interrupt, besides any necessary process/thread switching, the kernel checks whether the leftmost node of the tree (the timer with the earliest expiry) is due. If not, nothing is done until the next interrupt; if so, the node's callback is executed and the node is removed. This happens in hrtimer_interrupt. In high-resolution mode the interrupt is not a fixed periodic tick: at the end of the handler, tick_program_event() programs the clock event device to fire at the next expiry. The code is as follows:

```c
/*
 * High resolution timer interrupt
 * Called with interrupts disabled
 */
void hrtimer_interrupt(struct clock_event_device *dev)
{
	struct hrtimer_cpu_base *cpu_base = this_cpu_ptr(&hrtimer_bases);
	ktime_t expires_next, now, entry_time, delta;
	int retries = 0;

	BUG_ON(!cpu_base->hres_active);
	cpu_base->nr_events++;
	dev->next_event.tv64 = KTIME_MAX;

	raw_spin_lock(&cpu_base->lock);
	entry_time = now = hrtimer_update_base(cpu_base);
retry:
	cpu_base->in_hrtirq = 1;
	/*
	 * We set expires_next to KTIME_MAX here with cpu_base->lock
	 * held to prevent that a timer is enqueued in our queue via
	 * the migration code. This does not affect enqueueing of
	 * timers which run their callback and need to be requeued on
	 * this CPU.
	 */
	cpu_base->expires_next.tv64 = KTIME_MAX;

	/* Look at the leftmost node of each red-black tree; if it has
	 * expired, run its callback and remove the node */
	__hrtimer_run_queues(cpu_base, now);

	/* Reevaluate the clock bases for the next expiry
	 * (find the next timer to expire) */
	expires_next = __hrtimer_get_next_event(cpu_base);
	/*
	 * Store the new expiry value so the migration code can verify
	 * against it.
	 */
	cpu_base->expires_next = expires_next;
	cpu_base->in_hrtirq = 0;
	raw_spin_unlock(&cpu_base->lock);

	/* Reprogramming necessary ? */
	if (!tick_program_event(expires_next, 0)) {
		cpu_base->hang_detected = 0;
		return;
	}

	/*
	 * The next timer was already expired due to:
	 * - tracing
	 * - long lasting callbacks
	 * - being scheduled away when running in a VM
	 *
	 * We need to prevent that we loop forever in the hrtimer
	 * interrupt routine. We give it 3 attempts to avoid
	 * overreacting on some spurious event.
	 *
	 * Acquire base lock for updating the offsets and retrieving
	 * the current time.
	 */
	raw_spin_lock(&cpu_base->lock);
	now = hrtimer_update_base(cpu_base);
	cpu_base->nr_retries++;
	if (++retries < 3)
		goto retry;
	/*
	 * Give the system a chance to do something else than looping
	 * here. We stored the entry time, so we know exactly how long
	 * we spent here. We schedule the next event this amount of
	 * time away.
	 */
	cpu_base->nr_hangs++;
	cpu_base->hang_detected = 1;
	raw_spin_unlock(&cpu_base->lock);
	delta = ktime_sub(now, entry_time);
	if ((unsigned int)delta.tv64 > cpu_base->max_hang_time)
		cpu_base->max_hang_time = (unsigned int) delta.tv64;
	/*
	 * Limit it to a sensible value as we enforce a longer
	 * delay. Give the CPU at least 100ms to catch up.
	 */
	if (delta.tv64 > 100 * NSEC_PER_MSEC)
		expires_next = ktime_add_ns(now, 100 * NSEC_PER_MSEC);
	else
		expires_next = ktime_add(now, delta);
	tick_program_event(expires_next, 1);
	printk_once(KERN_WARNING "hrtimer: interrupt took %llu ns\n",
		    ktime_to_ns(delta));
}
```
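The tail of hrtimer_interrupt() decides how far to push the next event after three failed retries. That arithmetic is isolated below as a hypothetical user-space helper (hang_next_event is not a kernel function), with times as int64_t nanoseconds:

```c
#include <stdint.h>

#define SIM_NSEC_PER_MSEC 1000000LL

/* After the retries are exhausted, schedule the next event as far in the
 * future as the time already spent in the handler, capped at 100 ms, so
 * the CPU gets a chance to do other work. */
static int64_t hang_next_event(int64_t entry_time, int64_t now)
{
	int64_t delta = now - entry_time;     /* time spent in the interrupt */

	if (delta > 100 * SIM_NSEC_PER_MSEC)
		return now + 100 * SIM_NSEC_PER_MSEC;
	return now + delta;
}
```

For example, if the handler has been running for 300 ms, the next event is scheduled 100 ms after now; if it ran for 2 µs, the next event is 2 µs after now.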

The most important part is __hrtimer_run_queues, which in turn calls __run_hrtimer. Their code follows, with the important parts annotated:

```c
/*
 * The write_seqcount_barrier()s in __run_hrtimer() split the thing into 3
 * distinct sections:
 *
 *  - queued:	the timer is queued
 *  - callback:	the timer is being ran
 *  - post:	the timer is inactive or (re)queued
 *
 * On the read side we ensure we observe timer->state and cpu_base->running
 * from the same section, if anything changed while we looked at it, we retry.
 * This includes timer->base changing because sequence numbers alone are
 * insufficient for that.
 *
 * The sequence numbers are required because otherwise we could still observe
 * a false negative if the read side got smeared over multiple consequtive
 * __run_hrtimer() invocations.
 */
static void __run_hrtimer(struct hrtimer_cpu_base *cpu_base,
			  struct hrtimer_clock_base *base,
			  struct hrtimer *timer, ktime_t *now)
{
	enum hrtimer_restart (*fn)(struct hrtimer *);
	int restart;

	lockdep_assert_held(&cpu_base->lock);

	debug_deactivate(timer);
	cpu_base->running = timer;

	/*
	 * Separate the ->running assignment from the ->state assignment.
	 *
	 * As with a regular write barrier, this ensures the read side in
	 * hrtimer_active() cannot observe cpu_base->running == NULL &&
	 * timer->state == INACTIVE.
	 */
	raw_write_seqcount_barrier(&cpu_base->seq);

	/* Calls timerqueue_del() to delete the node from the red-black tree */
	__remove_hrtimer(timer, base, HRTIMER_STATE_INACTIVE, 0);
	timer_stats_account_hrtimer(timer);
	/* The all-important callback function */
	fn = timer->function;

	/*
	 * Clear the 'is relative' flag for the TIME_LOW_RES case. If the
	 * timer is restarted with a period then it becomes an absolute
	 * timer. If its not restarted it does not matter.
	 */
	if (IS_ENABLED(CONFIG_TIME_LOW_RES))
		timer->is_rel = false;

	/*
	 * Because we run timers from hardirq context, there is no chance
	 * they get migrated to another cpu, therefore its safe to unlock
	 * the timer base.
	 */
	/* The timer is triggered by the hardware clock interrupt, so the
	 * callback is always executed on the current CPU */
	raw_spin_unlock(&cpu_base->lock);
	trace_hrtimer_expire_entry(timer, now);
	/* Finally, run the callback */
	restart = fn(timer);
	trace_hrtimer_expire_exit(timer);
	raw_spin_lock(&cpu_base->lock);

	/*
	 * Note: We clear the running state after enqueue_hrtimer and
	 * we do not reprogram the event hardware. Happens either in
	 * hrtimer_start_range_ns() or in hrtimer_interrupt()
	 *
	 * Note: Because we dropped the cpu_base->lock above,
	 * hrtimer_start_range_ns() can have popped in and enqueued the timer
	 * for us already.
	 */
	if (restart != HRTIMER_NORESTART &&
	    !(timer->state & HRTIMER_STATE_ENQUEUED))
		enqueue_hrtimer(timer, base);  /* calls timerqueue_add() to put the timer back into the tree */

	/*
	 * Separate the ->running assignment from the ->state assignment.
	 *
	 * As with a regular write barrier, this ensures the read side in
	 * hrtimer_active() cannot observe cpu_base->running == NULL &&
	 * timer->state == INACTIVE.
	 */
	raw_write_seqcount_barrier(&cpu_base->seq);

	WARN_ON_ONCE(cpu_base->running != timer);
	cpu_base->running = NULL;
}

static void __hrtimer_run_queues(struct hrtimer_cpu_base *cpu_base, ktime_t now)
{
	struct hrtimer_clock_base *base = cpu_base->clock_base;
	unsigned int active = cpu_base->active_bases;

	/*
	 * Walk every clock base and check whether the leftmost (earliest)
	 * node of its red-black tree has expired. If it has, __run_hrtimer()
	 * handles it: it calls the timer's callback, removes the timer from
	 * the tree, and uses the callback's return value to decide whether
	 * to restart the timer.
	 */
	for (; active; base++, active >>= 1) {
		struct timerqueue_node *node;
		ktime_t basenow;

		if (!(active & 0x01))
			continue;

		basenow = ktime_add(now, base->offset);

		/*
		 * timerqueue_getnext() returns the leftmost node. It works in a
		 * while loop because when __run_hrtimer() removes the old
		 * leftmost node, the new leftmost node is stored in
		 * base->active.next, so the loop continues until no expired
		 * timer is left.
		 */
		while ((node = timerqueue_getnext(&base->active))) {
			struct hrtimer *timer;

			timer = container_of(node, struct hrtimer, node);

			/*
			 * The immediate goal for using the softexpires is
			 * minimizing wakeups, not running timers at the
			 * earliest interrupt after their soft expiration.
			 * This allows us to avoid using a Priority Search
			 * Tree, which can answer a stabbing querry for
			 * overlapping intervals and instead use the simple
			 * BST we already have.
			 * We don't add extra wakeups by delaying timers that
			 * are right-of a not yet expired timer, because that
			 * timer will have to trigger a wakeup anyway.
			 */
			if (basenow.tv64 < hrtimer_get_softexpires_tv64(timer))
				break;

			__run_hrtimer(cpu_base, base, timer, &basenow);
		}
	}
}
```
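Stripped of locking, clock bases and sequence counts, the expiry loop in __hrtimer_run_queues() and __run_hrtimer() boils down to: while the earliest timer is due, remove it, run its callback, and re-insert it if the callback asks for a restart. The user-space sketch below keeps timers in a sorted singly linked list as a simple stand-in for the red-black tree; qtimer, q_enqueue and q_interrupt are hypothetical names, and SIM_RESTART/SIM_NORESTART play the role of HRTIMER_RESTART/HRTIMER_NORESTART.

```c
#include <stddef.h>
#include <stdint.h>

enum sim_restart { SIM_NORESTART, SIM_RESTART };

struct qtimer {
	int64_t expires;                               /* absolute expiry (ns) */
	enum sim_restart (*function)(struct qtimer *t);
	int64_t period;                                /* used by periodic callbacks */
	struct qtimer *next;                           /* list sorted by expires */
};

/* Insert in expiry order (equal expiries go after existing ones, as in
 * timerqueue_add()); returns 1 if the timer became the earliest one. */
static int q_enqueue(struct qtimer **head, struct qtimer *t)
{
	struct qtimer **pp = head;

	while (*pp && (*pp)->expires <= t->expires)
		pp = &(*pp)->next;
	t->next = *pp;
	*pp = t;
	return pp == head;
}

/* One "clock interrupt": keep running the earliest timer while it is due.
 * A restarting callback must move t->expires past now. */
static int q_interrupt(struct qtimer **head, int64_t now)
{
	int ran = 0;

	while (*head && (*head)->expires <= now) {
		struct qtimer *t = *head;

		*head = t->next;                       /* remove before the callback */
		if (t->function(t) == SIM_RESTART)
			q_enqueue(head, t);            /* re-insert at its new expiry */
		ran++;
	}
	return ran;
}
```

A sorted list makes finding the earliest timer O(1) but insertion O(N); the red-black tree keeps O(1) access to the earliest timer through its cached leftmost node while bringing insertion down to O(log N).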

lib/timerqueue.c contains three important helper functions; all of them are routine red-black tree operations:

```c
/**
 * timerqueue_add - Adds timer to timerqueue.
 *
 * @head: head of timerqueue
 * @node: timer node to be added
 *
 * Adds the timer node to the timerqueue, sorted by the
 * node's expires value.
 */
bool timerqueue_add(struct timerqueue_head *head, struct timerqueue_node *node)
{
	struct rb_node **p = &head->head.rb_node;
	struct rb_node *parent = NULL;
	struct timerqueue_node *ptr;

	/* Make sure we don't add nodes that are already added */
	WARN_ON_ONCE(!RB_EMPTY_NODE(&node->node));

	while (*p) {
		parent = *p;
		ptr = rb_entry(parent, struct timerqueue_node, node);
		if (node->expires.tv64 < ptr->expires.tv64)
			p = &(*p)->rb_left;
		else
			p = &(*p)->rb_right;
	}
	rb_link_node(&node->node, parent, p);
	rb_insert_color(&node->node, &head->head);

	if (!head->next || node->expires.tv64 < head->next->expires.tv64) {
		head->next = node;
		return true;
	}
	return false;
}
EXPORT_SYMBOL_GPL(timerqueue_add);

/**
 * timerqueue_del - Removes a timer from the timerqueue.
 *
 * @head: head of timerqueue
 * @node: timer node to be removed
 *
 * Removes the timer node from the timerqueue.
 */
bool timerqueue_del(struct timerqueue_head *head, struct timerqueue_node *node)
{
	WARN_ON_ONCE(RB_EMPTY_NODE(&node->node));

	/* update next pointer */
	if (head->next == node) {
		struct rb_node *rbn = rb_next(&node->node);

		head->next = rbn ?
			rb_entry(rbn, struct timerqueue_node, node) : NULL;
	}
	rb_erase(&node->node, &head->head);
	RB_CLEAR_NODE(&node->node);
	return head->next != NULL;
}
EXPORT_SYMBOL_GPL(timerqueue_del);

/**
 * timerqueue_iterate_next - Returns the timer after the provided timer
 *
 * @node: Pointer to a timer.
 *
 * Provides the timer that is after the given node. This is used, when
 * necessary, to iterate through the list of timers in a timer list
 * without modifying the list.
 */
struct timerqueue_node *timerqueue_iterate_next(struct timerqueue_node *node)
{
	struct rb_node *next;

	if (!node)
		return NULL;
	next = rb_next(&node->node);
	if (!next)
		return NULL;
	return container_of(next, struct timerqueue_node, node);
}
EXPORT_SYMBOL_GPL(timerqueue_iterate_next);
```
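The contract of timerqueue_add() and timerqueue_del(), a cached pointer to the earliest node that is kept up to date on every insert and delete, can be reproduced with a plain binary search tree. This sketch is intentionally simplified: the tree is NOT balanced (the rebalancing done by rb_insert_color() and rb_erase() is omitted), so its worst case is O(N) rather than O(log N). tq_node, tq_head, tq_add and tq_del are hypothetical names.

```c
#include <stddef.h>
#include <stdint.h>

/* Unbalanced BST node standing in for the kernel's rb_node-based timerqueue_node. */
struct tq_node {
	int64_t expires;
	struct tq_node *left, *right, *parent;
};

struct tq_head {
	struct tq_node *root;
	struct tq_node *next;         /* cached leftmost (earliest) node */
};

/* Same contract as timerqueue_add(): returns 1 if n became the earliest node. */
static int tq_add(struct tq_head *h, struct tq_node *n)
{
	struct tq_node **p = &h->root, *parent = NULL;

	while (*p) {
		parent = *p;
		p = n->expires < parent->expires ? &parent->left : &parent->right;
	}
	n->left = n->right = NULL;
	n->parent = parent;
	*p = n;
	if (!h->next || n->expires < h->next->expires) {
		h->next = n;
		return 1;
	}
	return 0;
}

/* In-order successor: the role rb_next() plays in timerqueue_del(). */
static struct tq_node *tq_successor(struct tq_node *n)
{
	if (n->right) {
		n = n->right;
		while (n->left)
			n = n->left;
		return n;
	}
	while (n->parent && n == n->parent->right)
		n = n->parent;
	return n->parent;
}

/* Replace subtree u with subtree v (v may be NULL). */
static void tq_transplant(struct tq_head *h, struct tq_node *u, struct tq_node *v)
{
	if (!u->parent)
		h->root = v;
	else if (u == u->parent->left)
		u->parent->left = v;
	else
		u->parent->right = v;
	if (v)
		v->parent = u->parent;
}

/* Same contract as timerqueue_del(): returns 1 if the queue is still non-empty. */
static int tq_del(struct tq_head *h, struct tq_node *n)
{
	if (h->next == n)
		h->next = tq_successor(n);    /* update the cached earliest node first */

	if (!n->left) {
		tq_transplant(h, n, n->right);
	} else if (!n->right) {
		tq_transplant(h, n, n->left);
	} else {
		struct tq_node *s = n->right; /* successor: leftmost of right subtree */

		while (s->left)
			s = s->left;
		if (s->parent != n) {
			tq_transplant(h, s, s->right);
			s->right = n->right;
			s->right->parent = s;
		}
		tq_transplant(h, n, s);
		s->left = n->left;
		s->left->parent = s;
	}
	return h->next != NULL;
}
```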

Call chain of the whole process: tick_program_event (programs the clock_event_device for the next expiry) -> hrtimer_interrupt -> __hrtimer_run_queues -> __run_hrtimer

(4) The most important thing a timer does is execute its callback. All the complex structures and steps the kernel developers designed serve one goal: running the right callback at the right time. Because the callback runs asynchronously, much like a software interrupt, and outside process context, it must observe the following three rules:

- There is no meaningful current process, and user space must not be accessed: without a process context, the code has no connection to whichever process happened to be interrupted.

- The callback must not sleep (or call any function that may sleep), and must not trigger scheduling.

- Any accessed data structure should be protected against concurrent access to prevent race conditions.

3. Why is hrtimer more precise than timer_list?

(1) The timing unit of a low-resolution timer is the jiffies count, so its precision is only 1/HZ: if the kernel is configured with HZ = 1000, low-resolution timers are precise to 1 ms. Why not simply raise HZ to 10000 or 1000000 for better precision? Because a higher clock interrupt frequency has side effects: the processor spends more and more time running the interrupt handler, leaving less time for other work, and frequent interrupts also disturb the processor cache. The clock interrupt frequency is therefore a compromise chosen after weighing all of these factors.
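The trade-off can be put into numbers. The sketch below computes the tick period and the share of one CPU spent in the tick handler for a given HZ; the per-tick cost is an input you supply (the 2 µs used in the example is an assumed figure, not a measurement), and both function names are hypothetical.

```c
/* Tick period in nanoseconds for a given HZ. */
static double tick_period_ns(unsigned long hz)
{
	return 1e9 / (double)hz;
}

/* Percentage of one CPU spent handling ticks, assuming each tick costs
 * handler_ns nanoseconds (an input assumption, not a kernel constant). */
static double tick_overhead_percent(unsigned long hz, double handler_ns)
{
	return (double)hz * handler_ns / 1e9 * 100.0;
}
```

With an assumed 2 µs per tick, HZ = 1000 costs about 0.2% of a CPU, whereas HZ = 1000000 would need about 200%, i.e. more than a whole CPU doing nothing but handling ticks, while the period shrinks only from 1 ms to 1 µs.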

(2) Most operations on the time wheel take O(1) time. When a carry (cascade) happens, however, every timer in the higher-level slot has to be migrated down, an O(N) operation of unpredictable length that hurts timer precision. Red-black tree insertion, deletion and lookup are O(log N), the earliest timer is found in O(1) through the cached leftmost node, and together with more precise hardware counters this lets precision be controlled at the nanosecond level. (The cascading described here is that of the classic wheel; the timer wheel was reworked in Linux 4.8 to avoid cascading.)
