Autonomous pod application

Most of the pods we deal with are managed by controllers, so today we are talking about autonomous pods: pods created directly from a yaml file that are not managed by any controller. Nothing watches over such a pod, so if it is deleted, no controller recreates it.

1. First, let's create a pod resource object for nginx:

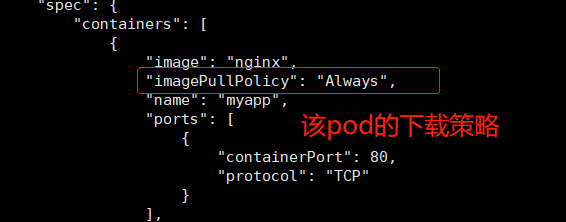

Before creating the pod, let's look at the image pull policy:

[root@master yaml]# kubectl explain pod.spec.containers

//View the description of the imagePullPolicy field:

```
imagePullPolicy <string>
  Image pull policy. One of Always, Never, IfNotPresent. Defaults to Always
  if :latest tag is specified, or IfNotPresent otherwise. Cannot be updated.
  More info:
  https://kubernetes.io/docs/concepts/containers/images#updating-images
```

The policies mean the following:

- Always: always pull the image from the specified registry (Docker Hub by default), even if a copy already exists locally. Docker officially maintains a public registry, Docker Hub, which already hosts more than 2,650,000 images, so most images can be pulled directly from Docker Hub.

- Never: pulling from the registry is prohibited, that is, only local images are used. If the image is not present locally, the pod will not run.

- IfNotPresent: pull from the registry only if the image is not already present locally.

If no pull policy is set, the defaults are as follows:

If the image tag is "latest" (or no tag is given), the default pull policy is Always.

If the image tag is a custom one (anything other than latest), the default pull policy is IfNotPresent.
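The defaulting rule above can be modeled in a few lines of Python. This is a simplified sketch for illustration, not the image-reference parser Kubernetes itself uses: `default_pull_policy` is a hypothetical helper that looks only at the tag and an optional `@sha256:` digest. One detail worth getting right is that the colon in a registry address such as `172.16.1.30:5000` is not a tag separator, so only the last path component is checked for a tag. (Per the Kubernetes image documentation, an image referenced by digest alone also defaults to IfNotPresent.)

```python
def default_pull_policy(image: str) -> str:
    """Hypothetical helper: the pull policy Kubernetes defaults to when
    imagePullPolicy is omitted (simplified model, not the real parser)."""
    # Split off an optional digest, e.g. "nginx@sha256:...".
    name, _, digest = image.partition("@")
    # Only the last path component can carry a tag; this keeps the port in
    # "172.16.1.30:5000/nginx" from being read as a tag.
    last_component = name.rsplit("/", 1)[-1]
    tag = last_component.partition(":")[2]
    # No tag and no digest means the implicit tag "latest".
    if not tag and not digest:
        tag = "latest"
    return "Always" if tag == "latest" else "IfNotPresent"


for ref in ["nginx", "nginx:latest", "172.16.1.30:5000/nginx:v1", "172.16.1.30:5000/nginx"]:
    print(ref, "->", default_pull_policy(ref))
```

With these inputs, `nginx`, `nginx:latest` and the untagged `172.16.1.30:5000/nginx` resolve to Always, while the private image `172.16.1.30:5000/nginx:v1` used later in this article resolves to IfNotPresent.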

//Create a pod (defining an image pull policy):

[root@master yaml]# vim test-pod.yaml

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: test-pod
spec:
  containers:
  - name: myapp
    image: nginx
    imagePullPolicy: Always   #Define the image pull policy (always pull the image from the registry)
    ports:
    - containerPort: 80       #Expose the container's port
```

//Execute the yaml file:

[root@master yaml]# kubectl apply -f test-pod.yaml

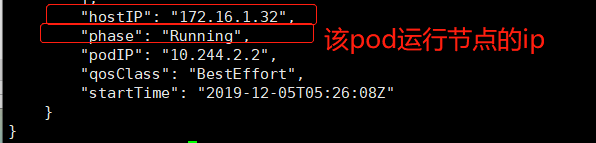

//View the status of the pod:

```
[root@master yaml]# kubectl get pod test-pod -o wide
NAME       READY   STATUS    RESTARTS   AGE     IP           NODE     NOMINATED NODE   READINESS GATES
test-pod   1/1     Running   0          7m55s   10.244.2.2   node02   <none>           <none>
```

//View the details of the pod in JSON format:

[root@master yaml]# kubectl get pod test-pod -o json

Pod phases (status):

- Running: the pod has been scheduled to a node and all of its containers have been created; at least one container is running.

- Pending: the API server has created the pod object and stored it in etcd, but the pod has not been scheduled yet, or its images are still being pulled from the registry.

- Succeeded: all containers in the pod have terminated successfully and will not be restarted.

- Failed: all containers in the pod have terminated, and at least one of them terminated in failure, that is, it either exited with a non-zero status or was terminated by the system.

- Unknown: the API server cannot obtain the state of the pod, usually because it cannot communicate with the kubelet on the pod's node.

Get familiar with these phases and remember them: they help you locate problems accurately and troubleshoot quickly when something in the cluster goes wrong.
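The phase lives in the `status.phase` field of the JSON printed by `kubectl get pod test-pod -o json`. As a minimal sketch (the helper name `summarize_pod` and the trimmed sample document are made up for illustration), the phase, the node from `spec.nodeName`, and each container's `restartCount` can be pulled out with Python's standard `json` module:

```python
import json


def summarize_pod(pod_json: str) -> dict:
    """Hypothetical helper: extract the phase, node and per-container
    restart counts from `kubectl get pod <name> -o json` output."""
    pod = json.loads(pod_json)
    status = pod.get("status", {})
    return {
        "name": pod["metadata"]["name"],
        # One of Pending, Running, Succeeded, Failed, Unknown.
        "phase": status.get("phase"),
        # spec.nodeName stays empty until the scheduler has placed the pod.
        "node": pod.get("spec", {}).get("nodeName"),
        "restarts": {
            cs["name"]: cs.get("restartCount", 0)
            for cs in status.get("containerStatuses", [])
        },
    }


# A trimmed-down, made-up sample of the kubectl JSON output.
sample = """{
  "metadata": {"name": "test-pod"},
  "spec": {"nodeName": "node02"},
  "status": {"phase": "Running",
             "containerStatuses": [{"name": "myapp", "restartCount": 0}]}
}"""
print(summarize_pod(sample))
```

On a live cluster, save the output first, for example `kubectl get pod test-pod -o json > pod.json` (the file name is arbitrary), and pass the file's contents to `summarize_pod`.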

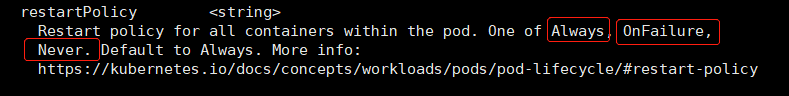

2. Pod restart policy:

//First, let's look at the pod's restart policy (the restartPolicy field under spec):

[root@master yaml]# kubectl explain pod.spec

Field explanation:

- Always: restart the container whenever it terminates, regardless of the reason. This is the default policy.

- OnFailure: restart the container only if it exits with an error (a non-zero exit code). A container that completes normally (exit code 0) is not restarted.

- Never: never restart the container.
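The decision each policy makes when a container exits can be written as a small function. This is a simplified model with hypothetical names (`RestartPolicy`, `should_restart`); it ignores the back-off delay the kubelet applies between restarts and only answers whether a restart happens for a given exit code.

```python
from enum import Enum


class RestartPolicy(Enum):
    ALWAYS = "Always"
    ON_FAILURE = "OnFailure"
    NEVER = "Never"


def should_restart(policy: RestartPolicy, exit_code: int) -> bool:
    """Simplified model: is a container that just exited with `exit_code`
    restarted under the pod's restartPolicy?"""
    if policy is RestartPolicy.ALWAYS:
        return True               # restart regardless of how it exited
    if policy is RestartPolicy.ON_FAILURE:
        return exit_code != 0     # restart only after an error exit
    return False                  # Never: leave the container terminated


for policy in RestartPolicy:
    print(policy.value, [should_restart(policy, code) for code in (0, 1)])
```

Exit code 0 leads to a restart only under Always; exit code 1 leads to a restart under Always and OnFailure; Never restarts in neither case.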

3. Let's simulate creating a pod that uses an image from the company's private registry, with an image pull policy of Never (use local images only) and a restart policy of Never (never restart).

[root@master yaml]# vim nginx-pod1.yaml

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: mynginx
  labels:                  #Define a label used to associate the pod with the service
    test: myweb
spec:
  restartPolicy: Never     #Define the pod restart policy as Never
  containers:
  - name: myweb
    image: 172.16.1.30:5000/nginx:v1
    imagePullPolicy: Never #Define the image pull policy as Never
---
apiVersion: v1
kind: Service
metadata:
  name: mynginx
spec:
  type: NodePort
  selector:
    test: myweb
  ports:
  - name: nginx
    port: 8080             #Port on the service's cluster IP
    targetPort: 80         #Container port that traffic is forwarded to
    nodePort: 30000        #Port exposed to the external network on every node
```

```
[root@master yaml]# kubectl apply -f nginx-pod1.yaml
pod/mynginx created
service/mynginx created
```

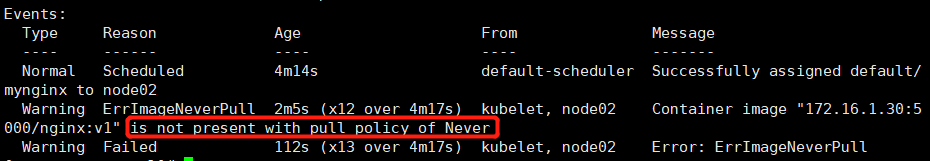

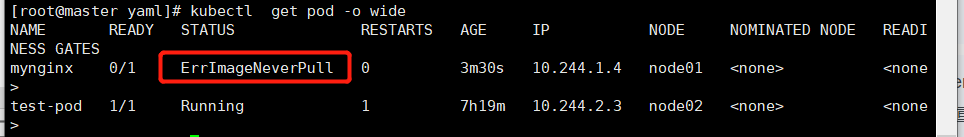

//View the current status of the pod:

The pod information shows that the image pull failed, so we look at the pod's details to troubleshoot:

[root@master yaml]# kubectl describe pod mynginx

The output shows the cause: with the Never policy, only an image that already exists locally on the node can be used, so we need to pull the image from the private registry onto that node (node02).

```
[root@node02 ~]# docker pull 172.16.1.30:5000/nginx:v1
v1: Pulling from nginx
Digest: sha256:189cce606b29fb2a33ebc2fcecfa8e33b0b99740da4737133cdbcee92f3aba0a
Status: Downloaded newer image for 172.16.1.30:5000/nginx:v1
```
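Because imagePullPolicy: Never only ever uses the node's local images, the image has to be pulled on the exact node the scheduler chose, and that node can differ between runs (as happens later in this walkthrough, when the pod lands on node01). A small Python sketch of finding it: the hypothetical helper `pull_plan` reads the JSON from `kubectl get pod mynginx -o json` and returns the node name from `spec.nodeName` together with a `docker pull` command for each container image. Running the command on that node is still up to you.

```python
import json


def pull_plan(pod_json: str) -> list:
    """Hypothetical helper: (node, docker pull command) pairs for every
    container image of a scheduled pod, from `kubectl get pod -o json`."""
    pod = json.loads(pod_json)
    spec = pod.get("spec", {})
    node = spec.get("nodeName")
    if not node:
        # No nodeName means the pod is not scheduled yet, so there is
        # no node to pull the image onto.
        raise ValueError("pod is not scheduled to a node yet")
    return [(node, f"docker pull {c['image']}") for c in spec.get("containers", [])]


sample = ('{"spec": {"nodeName": "node02", "containers": '
          '[{"name": "myweb", "image": "172.16.1.30:5000/nginx:v1"}]}}')
for node, command in pull_plan(sample):
    print(f"on {node}: {command}")
```

A pod stuck because its image is missing has already been scheduled, so `spec.nodeName` is set at that point and the helper can report where to pull.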

//Go back to master and check again whether the pod is running:

```
[root@master yaml]# kubectl get pod -o wide
NAME       READY   STATUS    RESTARTS   AGE     IP           NODE     NOMINATED NODE   READINESS GATES
mynginx    1/1     Running   0          7m32s   10.244.2.4   node02   <none>           <none>
test-pod   1/1     Running   1          7h10m   10.244.2.3   node02   <none>           <none>
```

2) Test the pod restart policy:

We simulate an abnormal exit of the pod:

[root@master yaml]# vim nginx-pod1.yaml

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: mynginx
  labels:
    test: myweb
spec:
  restartPolicy: Never
  containers:
  - name: myweb
    image: 172.16.1.30:5000/nginx:v1
    imagePullPolicy: Never
    args: ["/bin/sh", "-c", "sleep 10; exit 1"]   #Add an args field: sleep for 10 seconds, then exit with code 1
```

//Recreate the pod:

```
[root@master yaml]# kubectl delete -f nginx-pod1.yaml
pod "mynginx" deleted
service "mynginx" deleted
[root@master yaml]# kubectl apply -f nginx-pod1.yaml
pod/mynginx created
service/mynginx created
```

PS: The restart policy (like most other fields of a pod's spec, including args) cannot be changed on an existing pod, so we delete the pod and re-apply the yaml file to create a new one.
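Kubernetes passes `command` and `args` to the container runtime as an argument list, and the program is executed directly, without a shell splitting a string into words. That is why `/bin/sh`, `-c` and the script `sleep 10; exit 1` must be separate list items. The sketch below reproduces the difference locally with Python's `subprocess` module rather than in a pod; `run_argv` is a hypothetical helper, and the sleep is shortened to 1 second.

```python
import subprocess
from typing import Optional


def run_argv(argv: list, timeout: float = 30) -> Optional[int]:
    """Hypothetical helper: execute argv directly (no shell in between,
    as a container runtime does) and return its exit code, or None if the
    program cannot be started at all."""
    try:
        return subprocess.run(argv, timeout=timeout).returncode
    except OSError:
        # e.g. FileNotFoundError when argv[0] names no existing program
        return None


# Correct: three separate items; sh runs the script, which exits with 1.
print(run_argv(["/bin/sh", "-c", "sleep 1; exit 1"]))
# Wrong: a single item, so the whole string is looked up as a program path.
print(run_argv(["/bin/sh -c sleep 1; exit 1"]))
```

The first call prints 1 and the second prints None, because no program named `/bin/sh -c sleep 1; exit 1` exists.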

//View the pod status for the first time:

The new pod was scheduled to node01, so we also need to pull the image locally on node01:

```
[root@node01 ~]# docker pull 172.16.1.30:5000/nginx:v1
v1: Pulling from nginx
Digest: sha256:189cce606b29fb2a33ebc2fcecfa8e33b0b99740da4737133cdbcee92f3aba0a
Status: Downloaded newer image for 172.16.1.30:5000/nginx:v1
```

For a clearer view, we delete the pod and re-apply the yaml file to create a new one:

[root@master yaml]# kubectl delete -f nginx-pod1.yaml

[root@master yaml]# kubectl apply -f nginx-pod1.yaml

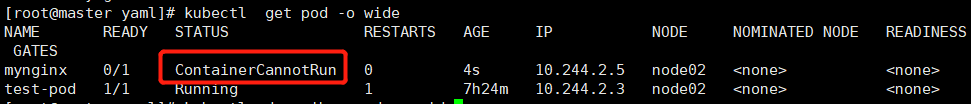

//View the final status of the pod:

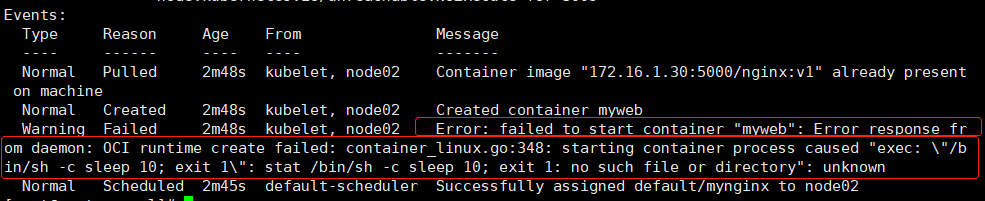

Let's look at the pod's details to see why it failed:

[root@master yaml]# kubectl describe pod mynginx

The information above shows that the container exited abnormally after it was created, and because the restart policy is Never, it was not restarted, so the pod failed.

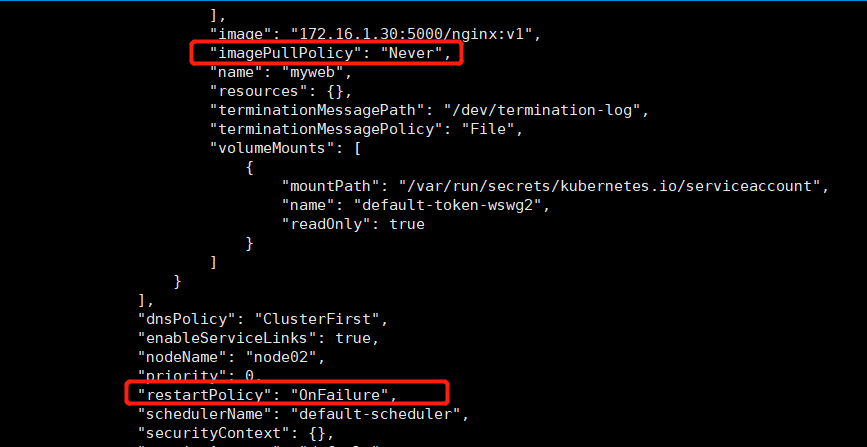

3) We change the restart policy in nginx-pod1.yaml to OnFailure (restartPolicy: OnFailure):

//Recreate the pod:

```
[root@master yaml]# kubectl delete -f nginx-pod1.yaml
pod "mynginx" deleted
service "mynginx" deleted
[root@master yaml]# kubectl apply -f nginx-pod1.yaml
pod/mynginx created
service/mynginx created
```

Check the pod status (failing):

```
[root@master yaml]# kubectl get pod -o wide
NAME      READY   STATUS              RESTARTS   AGE   IP           NODE     NOMINATED NODE   READINESS GATES
mynginx   0/1     RunContainerError   3          55s   10.244.2.8   node02   <none>           <none>
```

[root@master yaml]# kubectl get pod -o json

We can see that the pod's container failed to run.

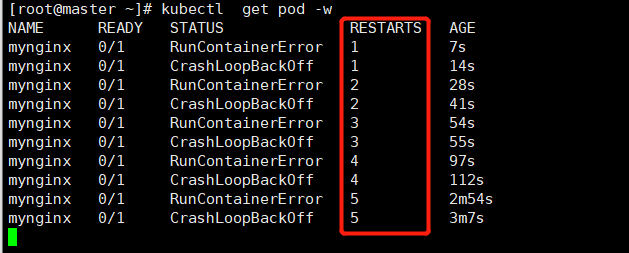

//We will now monitor the current status of the pod in real time:

You can see that the pod keeps restarting: the restart policy is OnFailure, and the container exits with a non-zero code after sleeping for 10 seconds, so it is restarted again and again.
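The restarts are not evenly spaced. According to the Kubernetes pod lifecycle documentation, the kubelet restarts exited containers with an exponential back-off delay (10s, 20s, 40s, ...) capped at five minutes, and resets the delay once a container has run for 10 minutes without problems; during these waits `kubectl get pod` typically reports CrashLoopBackOff. A hypothetical `restart_delays` function approximating that schedule (actual timing also depends on the kubelet's sync loop):

```python
def restart_delays(restarts: int, initial: int = 10, cap: int = 300) -> list:
    """Approximate wait in seconds before each of the first `restarts`
    restarts under exponential back-off: doubling from `initial`, capped."""
    delays = []
    delay = initial
    for _ in range(restarts):
        delays.append(delay)
        delay = min(delay * 2, cap)   # double, but never exceed the cap
    return delays


print(restart_delays(7))  # [10, 20, 40, 80, 160, 300, 300]
```

Added to the 10 seconds each run of the container spends sleeping, this is why the RESTARTS counter climbs quickly at first and then only about once every five minutes.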

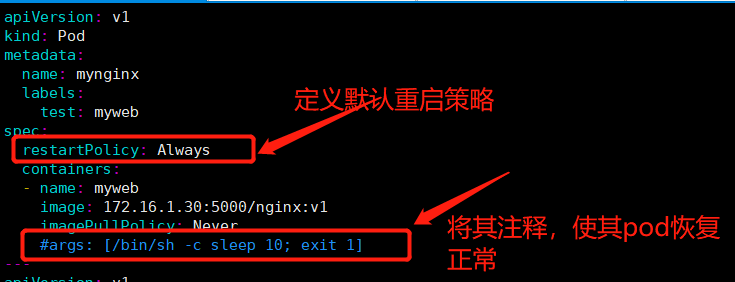

4) We modify the configuration again so that the pod returns to normal operation:

//Re-apply the yaml file to create a new pod:

```
[root@master yaml]# kubectl delete -f nginx-pod1.yaml
pod "mynginx" deleted
service "mynginx" deleted
[root@master yaml]# kubectl apply -f nginx-pod1.yaml
pod/mynginx created
service/mynginx created
```

//Check whether the pod is back to normal operation (it is):

```
[root@master yaml]# kubectl get pod -o wide
NAME      READY   STATUS    RESTARTS   AGE   IP           NODE     NOMINATED NODE   READINESS GATES
mynginx   1/1     Running   0          71s   10.244.1.8   node01   <none>           <none>
```

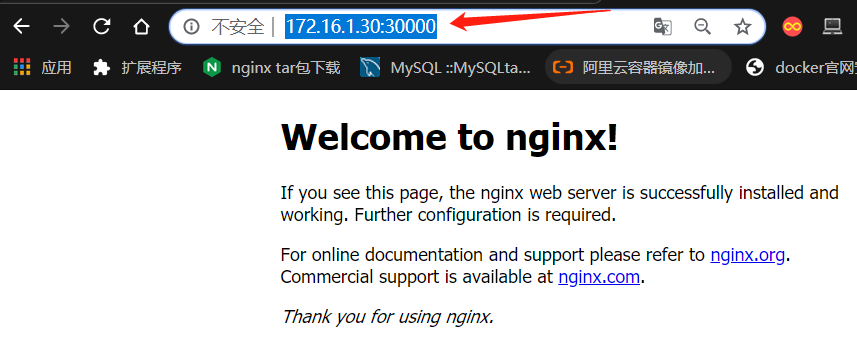

5) Check the service information to verify that the nginx web page can be accessed:

```
[root@master yaml]# kubectl get svc mynginx
NAME      TYPE       CLUSTER-IP      EXTERNAL-IP   PORT(S)          AGE
mynginx   NodePort   10.101.131.36   <none>        8080:30000/TCP   4m23s
```
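`8080:30000/TCP` means the service listens on port 8080 of its cluster IP and on port 30000 of every node, forwarding to port 80 in the pod. To check from outside the cluster that the page is served, request port 30000 on any node's IP. A minimal Python sketch, where `nodeport_url` and `nginx_reachable` are hypothetical helpers and the node IP in the usage comment is a placeholder; it only checks for an HTTP 200 response whose body mentions nginx (the default nginx welcome page contains "Welcome to nginx!").

```python
import urllib.request


def nodeport_url(node_ip: str, node_port: int = 30000) -> str:
    """URL of the NodePort service on one node (30000 is the nodePort above)."""
    return f"http://{node_ip}:{node_port}/"


def nginx_reachable(url: str, timeout: float = 3.0) -> bool:
    """True if `url` answers HTTP 200 with a body that mentions nginx."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            body = resp.read().decode("utf-8", errors="replace")
            return resp.status == 200 and "nginx" in body.lower()
    except OSError:
        # URLError/HTTPError, refused connections and timeouts all land here
        return False


# Usage (192.0.2.10 is a placeholder; use one of your node IPs):
# print(nginx_reachable(nodeport_url("192.0.2.10")))
```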
