AQS

AbstractQueuedSynchronizer:

Implementation description

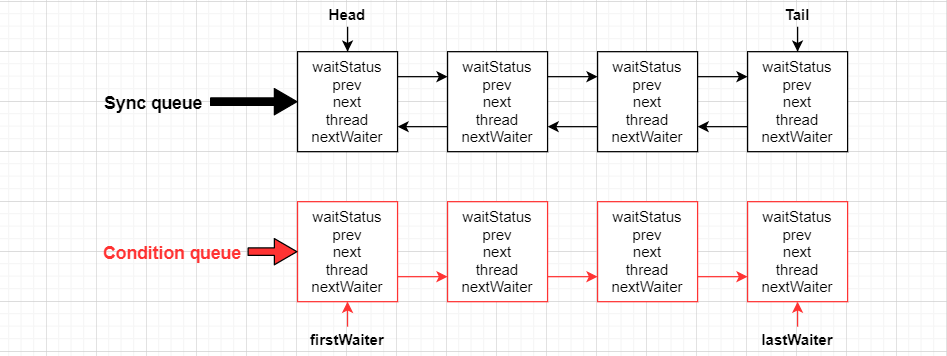

| It uses a FIFO queue of Node objects and serves as the basic framework for building locks and other synchronizers. |

| A single int field represents the synchronization state. |

| It is used through inheritance. |

| Subclasses manage the state by overriding the acquire and release hooks (tryAcquire/tryRelease, tryAcquireShared/tryReleaseShared) and reading or updating the state with getState(), setState() and compareAndSetState(). |

| Both exclusive and shared modes are supported. |

General idea of AQS implementation

AQS internally maintains a variant of the CLH queue (the sync queue) to manage threads waiting for the lock. A thread first tries to acquire the lock. If that fails, the thread and its wait status are wrapped in a Node, which is appended to the sync queue. The thread then loops: whenever the immediate predecessor of its node is the head node, it tries to acquire again. If the attempt fails, it blocks (parks) itself until it is woken up. When the thread holding the lock releases it, it wakes up the successor thread in the queue.
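As a minimal sketch of this idea, using only the public AQS API, the hypothetical SimpleMutex below is a non-reentrant exclusive lock modeled on the Mutex example in the AbstractQueuedSynchronizer Javadoc. The subclass only defines how the int state changes (0 = free, 1 = held); the inherited acquire() and release() do the queuing, parking and waking of successors described above.

```java
import java.util.concurrent.locks.AbstractQueuedSynchronizer;

// Hypothetical class: a non-reentrant exclusive lock built on AQS.
class SimpleMutex {
    private static final class Sync extends AbstractQueuedSynchronizer {
        @Override
        protected boolean tryAcquire(int arg) {
            // CAS the state from 0 (free) to 1 (held); on failure AQS enqueues and parks the thread
            if (compareAndSetState(0, 1)) {
                setExclusiveOwnerThread(Thread.currentThread());
                return true;
            }
            return false;
        }

        @Override
        protected boolean tryRelease(int arg) {
            if (getExclusiveOwnerThread() != Thread.currentThread()) {
                throw new IllegalMonitorStateException(); // only the owner may unlock
            }
            setExclusiveOwnerThread(null);
            setState(0); // volatile write publishes the critical section to the next owner
            return true; // true tells AQS to unpark the successor in the queue
        }

        @Override
        protected boolean isHeldExclusively() {
            return getState() == 1;
        }
    }

    private final Sync sync = new Sync();

    void lock() { sync.acquire(1); }              // tryAcquire, else queue and block
    boolean tryLock() { return sync.tryAcquire(1); } // single attempt, never blocks
    void unlock() { sync.release(1); }            // tryRelease, then wake the successor
    boolean isLocked() { return sync.isHeldExclusively(); }
}
```

Because tryAcquire never checks the owner, a second lock() from the same thread would block forever; that is what "non-reentrant" means here, and it is the main thing ReentrantLock adds on top of this pattern.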

AQS synchronization components

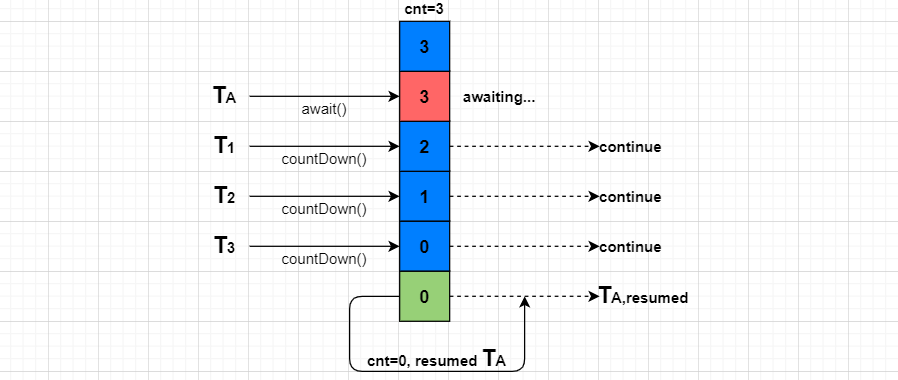

| Component | Description |
|---|---|
| CountDownLatch | Lets one or more threads wait until a given number of operations (equal to the count the latch was initialized with) have completed in other threads. A thread that finishes its work calls countDown(); the waiting threads call await(). |
| Semaphore | A counting semaphore that controls how many threads can access a resource at the same time. |
| CyclicBarrier | Literally a "cyclic barrier": a group of threads wait for each other at the barrier until all of them have arrived, and then all continue with the following actions together. |
| ReentrantLock | A reentrant mutual-exclusion lock. |
| Condition | A wait/notify mechanism bound to a Lock (await(), signal(), signalAll()). |
| Future | Represents the result of an asynchronous computation, which can be retrieved from another thread. |
| FutureTask | Wraps a Callable (or Runnable) so it can be run as a Runnable, and its result can be retrieved as a Future. |

CountDownLatch

The CountDownLatch class is a synchronization utility that allows one or more threads to wait until other threads have finished their work, and then continue executing the code that follows.

Implementation principle:

CountDownLatch is implemented with a counter whose initial value is the number of threads (more precisely, the number of countDown() calls to wait for). Each time a thread completes its task, the counter is decremented by 1. When the counter reaches 0, all the tasks have completed, and the threads waiting on the latch resume execution.

countDownLatch.await() blocks the calling thread until countDown() has decremented the counter to 0.
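To connect this to AQS, here is a simplified sketch of a count-down latch built on AQS shared mode. The class name SimpleCountDownLatch is hypothetical and this is not the JDK source, although it follows the same idea: the AQS state holds the count, countDown() decrements it with a CAS loop, and await() succeeds only once the state is 0.

```java
import java.util.concurrent.locks.AbstractQueuedSynchronizer;

// Hypothetical class: a simplified count-down latch on AQS shared mode.
class SimpleCountDownLatch {
    private static final class Sync extends AbstractQueuedSynchronizer {
        Sync(int count) { setState(count); } // the AQS state holds the remaining count

        int getCount() { return getState(); }

        @Override
        protected int tryAcquireShared(int ignored) {
            // A positive result lets waiters proceed; that happens only once the count is 0
            return getState() == 0 ? 1 : -1;
        }

        @Override
        protected boolean tryReleaseShared(int ignored) {
            for (;;) { // CAS loop: decrement the count by 1
                int c = getState();
                if (c == 0) return false; // already open, nothing to do
                int next = c - 1;
                if (compareAndSetState(c, next)) {
                    return next == 0;     // true makes AQS wake every waiting thread
                }
            }
        }
    }

    private final Sync sync;

    SimpleCountDownLatch(int count) {
        if (count < 0) throw new IllegalArgumentException("count < 0");
        sync = new Sync(count);
    }

    void await() throws InterruptedException { sync.acquireSharedInterruptibly(1); }
    void countDown() { sync.releaseShared(1); }
    long getCount() { return sync.getCount(); }
}
```

Shared mode fits here because every waiting thread, not just one, must be released when the count hits 0.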

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Semaphore;

public class CountDownLatchTest {

    public static int clientTotal = 5000; // Total number of requests

    public static int threadTotal = 100;  // Number of threads allowed to run concurrently

    public static int count = 0;

    public static void main(String[] args) throws InterruptedException {
        ExecutorService executorService = Executors.newCachedThreadPool();
        final Semaphore semaphore = new Semaphore(threadTotal);
        final CountDownLatch countDownLatch = new CountDownLatch(clientTotal);
        for (int i = 0; i < clientTotal; i++) {
            executorService.execute(() -> {
                try {
                    semaphore.acquire();
                    add();
                    semaphore.release();
                } catch (InterruptedException e) {
                    e.printStackTrace();
                } finally {
                    countDownLatch.countDown(); // Always decrement, even if the task failed
                }
            });
        }
        countDownLatch.await(); // Block until the counter reaches 0, i.e. every task has finished
        // countDownLatch.await(10, TimeUnit.MILLISECONDS); // Alternative: wait at most 10 milliseconds
        System.out.println(count); // May print less than 5000, because add() is not thread-safe
        executorService.shutdown();
    }

    private static void add() throws InterruptedException { // Not thread-safe
        Thread.sleep(100);
        count++;
    }
}
```

Semaphore

Semaphore is a counting semaphore. It is usually used to limit the number of threads that can access some resource, that is, to control the number of concurrent threads. Unlike a lock, a permit does not have to be released by the thread that acquired it.

Implementation principle:

A Semaphore protects access to one or more shared resources. Internally it maintains a counter whose value is the number of shared resources currently available. A thread that wants to access a shared resource first acquires the semaphore: if the counter is greater than 0, a resource is available, so the counter is decremented by 1 and the thread accesses the resource.

If the counter is 0, the thread sleeps (blocks). When a thread finishes using the shared resource, it releases the semaphore, which increments the counter by 1; a thread that was sleeping is woken up and tries to acquire the semaphore again.
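The sketch below illustrates this acquire/release cycle. The class SemaphoreLimitDemo, its method name and the 20 ms sleep are illustrative assumptions: several tasks compete for a Semaphore with a fixed number of permits, and the method records the highest number of tasks that were ever inside the guarded section at once, which should never exceed the permit count.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Semaphore;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical helper: runs `tasks` jobs that share `permits` permits and reports peak concurrency.
class SemaphoreLimitDemo {
    static int maxObservedConcurrency(int permits, int tasks) throws InterruptedException {
        Semaphore semaphore = new Semaphore(permits);
        AtomicInteger inside = new AtomicInteger();    // tasks currently holding a permit
        AtomicInteger maxInside = new AtomicInteger(); // peak value of `inside`
        ExecutorService pool = Executors.newFixedThreadPool(tasks);
        for (int i = 0; i < tasks; i++) {
            pool.execute(() -> {
                try {
                    semaphore.acquire();                 // counter - 1, or block while it is 0
                    int now = inside.incrementAndGet();
                    try {
                        maxInside.accumulateAndGet(now, Math::max);
                        Thread.sleep(20);                // simulate using the shared resource
                    } finally {
                        inside.decrementAndGet();
                        semaphore.release();             // counter + 1, wakes a blocked thread
                    }
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
        }
        pool.shutdown();
        pool.awaitTermination(10, TimeUnit.SECONDS);
        return maxInside.get();
    }
}
```

Calling maxObservedConcurrency(3, 12) starts 12 tasks, but at most 3 of them can be past acquire() at any moment.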

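```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Semaphore;
import lombok.extern.slf4j.Slf4j;

@Slf4j // Lombok generates the SLF4J "log" field used below
public class SemaphoreTest {

    private static final int threadCount = 20;

    public static void main(String[] args) throws InterruptedException {
        ExecutorService executorService = Executors.newCachedThreadPool();
        Semaphore semaphore = new Semaphore(3); // At most 3 permits, i.e. 3 concurrent executions
        for (int i = 0; i < threadCount; i++) {
            final int num = i;
            executorService.execute(() -> {
                try {
                    if (semaphore.tryAcquire()) { // Try to get a permit without blocking
                        try {
                            test(num);
                        } finally {
                            semaphore.release(); // Return the permit
                        }
                    } // Threads that fail to get a permit simply skip the work
                } catch (InterruptedException e) {
                    e.printStackTrace();
                }
            });
        }
        executorService.shutdown();
        log.info("finish");
    }

    private static void test(int threadNum) throws InterruptedException {
        log.info("{}", threadNum);
        Thread.sleep(1000);
    }
}
```

CyclicBarrier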

A synchronization aid that allows a set of threads to all wait for each other to reach a common barrier point, after which they all proceed with the following operations. The barrier is called cyclic because it can be reused after the waiting threads are released.

Implementation principle:

CyclicBarrier.await() calls the internal dowait() method. Each call to await() decrements the count by 1; when the count reaches 0, the barrier action (if any) runs and all waiting threads are woken up.

The await() method makes the calling thread wait until all parties have arrived at the barrier.
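A small sketch of the "cyclic" part, with a hypothetical class and method name: the same CyclicBarrier is used for several rounds, and the barrier action, which runs once each time the count reaches 0, is counted.

```java
import java.util.concurrent.BrokenBarrierException;
import java.util.concurrent.CyclicBarrier;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical demo: `parties` threads meet at the same barrier `rounds` times.
class CyclicBarrierReuseDemo {
    static int barrierActionRuns(int parties, int rounds) throws InterruptedException {
        AtomicInteger actionRuns = new AtomicInteger();
        // The barrier action runs once per trip, in the last thread to arrive
        CyclicBarrier barrier = new CyclicBarrier(parties, actionRuns::incrementAndGet);
        Thread[] workers = new Thread[parties];
        for (int p = 0; p < parties; p++) {
            workers[p] = new Thread(() -> {
                try {
                    for (int r = 0; r < rounds; r++) {
                        barrier.await(); // count - 1; the thread that brings it to 0 trips the barrier
                    }
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt(); // restore the interrupt status and stop
                } catch (BrokenBarrierException e) {
                    // another party failed, so this thread stops participating
                }
            });
            workers[p].start();
        }
        for (Thread t : workers) t.join();
        return actionRuns.get(); // one run per round, because the barrier resets after each trip
    }
}
```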

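```java
import java.util.concurrent.BrokenBarrierException;
import java.util.concurrent.CyclicBarrier;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import lombok.extern.slf4j.Slf4j;

@Slf4j // Lombok generates the SLF4J "log" field used below
public class CyclicBarrierTest {

    // 5 parties; the barrier action runs once each time all 5 have arrived
    private static CyclicBarrier cyclicBarrier = new CyclicBarrier(5, () -> {
        log.info("callback is running"); // Runs before the waiting threads are released
    });

    public static void main(String[] args) throws InterruptedException {
        ExecutorService executorService = Executors.newCachedThreadPool();
        for (int i = 0; i < 10; i++) { // 10 threads = two trips of the same barrier
            final int threadNum = i;
            Thread.sleep(1000);
            executorService.execute(() -> {
                try {
                    race(threadNum);
                } catch (InterruptedException e) {
                    e.printStackTrace();
                } catch (BrokenBarrierException e) {
                    log.error("exception", e);
                }
            });
        }
        executorService.shutdown();
    }

    private static void race(int threadNum) throws InterruptedException, BrokenBarrierException {
        Thread.sleep(1000);
        log.info("{} is ready", threadNum);
        cyclicBarrier.await(); // Wait until all 5 parties have called await()
        log.info("{} continue", threadNum);
    }
}
```

Differences between CountDownLatch and CyclicBarrier: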

| The counter of CountDownLatch can be used only once. The count of CyclicBarrier is restored each time the barrier trips (and can also be reset with the reset() method), so it can be reused. |

| CountDownLatch lets one or more threads wait for other threads to finish some operations before continuing (one or more threads wait for the others). CyclicBarrier lets multiple threads wait for each other until all of them reach the barrier before continuing (the threads wait for each other). |

| CyclicBarrier also accepts an optional Runnable (the barrier action), which runs once after all parties have arrived and before any of them is released. |

ReentrantLock

ReentrantLock is implemented mainly with CAS plus the AQS queue. It supports both fair and unfair locks, and the two implementations are similar.

| A reentrant mutual-exclusion lock with the same basic behavior and semantics as the implicit monitor lock accessed through synchronized methods and statements, but with extended capabilities. |

| A ReentrantLock is owned by the thread that last locked it successfully and has not yet unlocked it; it does not use the object monitor. |

| A lock acquired through ReentrantLock must be released manually by calling unlock(). |

Unique features of ReentrantLock:

| You can specify whether the lock is fair or unfair. |

| It provides Condition objects (newCondition()) for grouping waiting threads, so that only the threads in a particular group are woken up. |

| It lets a thread that is waiting for the lock be interrupted, via lock.lockInterruptibly(). |
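A sketch of lockInterruptibly() with a hypothetical class and method name: the calling thread holds the lock, a second thread waits for it with lockInterruptibly(), and interrupting that thread makes it give up with an InterruptedException instead of waiting indefinitely, as lock() would.

```java
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical demo: a thread blocked in lockInterruptibly() stops waiting when interrupted.
class InterruptibleLockDemo {
    static boolean waiterGaveUp() throws InterruptedException {
        ReentrantLock lock = new ReentrantLock();
        AtomicBoolean gaveUp = new AtomicBoolean(false);
        lock.lock(); // this thread holds the lock, so the waiter cannot get it
        try {
            Thread waiter = new Thread(() -> {
                try {
                    lock.lockInterruptibly(); // unlike lock(), this responds to interrupt()
                    lock.unlock();
                } catch (InterruptedException e) {
                    gaveUp.set(true);         // left the wait queue without the lock
                }
            });
            waiter.start();
            Thread.sleep(100); // give the waiter time to start waiting
            // Even if the interrupt arrived first, lockInterruptibly() checks the interrupt status on entry
            waiter.interrupt();
            waiter.join();
        } finally {
            lock.unlock();
        }
        return gaveUp.get();
    }
}
```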

Characteristics:

| A fair lock grants the lock to threads in the order in which they queued for it. |

| An unfair lock lets threads compete for the lock preemptively: a newly arriving thread may acquire the lock ahead of threads that are already waiting, so arriving first does not guarantee acquiring first. In this sense, synchronized is an unfair lock. |

With an unfair lock, some threads may fail to get the lock for a long time, which is why it is called unfair.

The ReentrantLock(boolean fair) constructor chooses the policy: pass true to create a fair lock. The no-argument constructor creates an unfair lock, which is the default.
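A brief sketch (the class name ReentrancyDemo is hypothetical) showing the fairness flag and reentrancy: the same thread locks twice, and getHoldCount() reports how deeply it is nested.

```java
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical demo class showing reentrancy and the fairness flag.
class ReentrancyDemo {
    private final ReentrantLock lock;

    ReentrancyDemo(boolean fair) {
        this.lock = new ReentrantLock(fair); // true = fair, false = unfair (the default)
    }

    boolean isFair() { return lock.isFair(); }

    // inner() re-acquires the lock that outer() already holds; a non-reentrant lock would deadlock here
    int outer() {
        lock.lock();
        try {
            return inner();
        } finally {
            lock.unlock();
        }
    }

    private int inner() {
        lock.lock();
        try {
            return lock.getHoldCount(); // 2: locked once in outer() and once here
        } finally {
            lock.unlock();
        }
    }

    boolean isLocked() { return lock.isLocked(); }
}
```

Each lock() must be matched by its own unlock(); the lock is free only after the hold count drops back to 0.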

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Semaphore;
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantLock;

public class ReentrantLockTest {

    public static int clientTotal = 5000; // Total number of requests

    public static int threadTotal = 100;  // Number of threads allowed to run concurrently

    public static int count = 0;

    private static Lock lock = new ReentrantLock();

    public static void main(String[] args) throws InterruptedException {
        ExecutorService executorService = Executors.newCachedThreadPool();
        final Semaphore semaphore = new Semaphore(threadTotal);
        final CountDownLatch countDownLatch = new CountDownLatch(clientTotal);
        for (int i = 0; i < clientTotal; i++) {
            executorService.execute(() -> {
                try {
                    semaphore.acquire();
                    add();
                    semaphore.release();
                } catch (InterruptedException e) {
                    e.printStackTrace();
                } finally {
                    countDownLatch.countDown();
                }
            });
        }
        countDownLatch.await();
        System.out.println(count); // Prints 5000: add() is guarded by the lock
        executorService.shutdown();
    }

    private static void add() {
        lock.lock();
        try {
            count++;
        } finally {
            lock.unlock(); // Always release in finally
        }
    }
}
```

ReentrantLock API:

| Method | Description |
|---|---|
| getHoldCount() | Returns the number of holds on this lock by the current thread, i.e. how many times the current thread has called lock() on this ReentrantLock without a matching unlock(). |
| getQueueLength() | Returns an estimate of the number of threads waiting to acquire this lock. |
| isFair() | Returns true if this lock is fair. |
| hasQueuedThread(Thread thread) | Queries whether the given thread is waiting to acquire this lock. |
| hasQueuedThreads() | Queries whether any threads are waiting to acquire this lock. |
| isHeldByCurrentThread() | Queries whether this lock is held by the current thread. |
| isLocked() | Queries whether this lock is held by any thread. |
| tryLock() | Acquires the lock only if it is not held by another thread at the time of the call and returns true; otherwise returns false immediately without waiting. On success the lock really is held and must be released with unlock(). |
| tryLock(long timeout, TimeUnit unit) | Acquires the lock if it becomes available within the given waiting time (and the thread is not interrupted) and returns true; returns false if the time elapses first. |
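A sketch of tryLock() behavior, with hypothetical class and method names: while one thread holds the lock, a timed tryLock() from another thread returns false; after the lock is released it returns true, and the lock it acquired must then be unlocked.

```java
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicBoolean;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical demo of tryLock(timeout) while the lock is held and after it is released.
class TryLockDemo {
    // Returns {tryLock result while held by another thread, tryLock result after release}
    static boolean[] tryLockResults() throws InterruptedException {
        ReentrantLock lock = new ReentrantLock();
        AtomicBoolean whileHeld = new AtomicBoolean();
        AtomicBoolean afterRelease = new AtomicBoolean();

        lock.lock(); // this thread holds the lock
        Thread t1 = new Thread(() -> whileHeld.set(tryAndRelease(lock)));
        t1.start();
        t1.join();   // t1 gives up after its timeout
        lock.unlock();

        Thread t2 = new Thread(() -> afterRelease.set(tryAndRelease(lock)));
        t2.start();
        t2.join();
        return new boolean[] {whileHeld.get(), afterRelease.get()};
    }

    private static boolean tryAndRelease(ReentrantLock lock) {
        try {
            if (lock.tryLock(50, TimeUnit.MILLISECONDS)) { // wait at most 50 ms
                lock.unlock(); // tryLock really acquired the lock, so release it
                return true;
            }
            return false;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }
}
```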

Read-write lock

ReentrantReadWriteLock

The write lock can be acquired only when no other thread holds the read lock or the write lock.

| A read/write lock is a pair of locks: the lock for read operations, which is shared, and the lock for write operations, which is exclusive. |

| Read and read are not mutually exclusive, because concurrent reads do not cause thread-safety problems. |

| Write and write are mutually exclusive, so that one write cannot interfere with another. |

| Read and write are mutually exclusive, so that a write cannot modify the data while a read is in progress. |

| In short, multiple threads can read at the same time, but only one thread can write at a time, and no thread can read while another thread is writing. |

If read locks are held continuously, a thread waiting for the write lock may starve.
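A sketch that checks these rules with the ReentrantReadWriteLock query methods (the class ReadWriteExclusionDemo and its fields are hypothetical): two readers hold the read lock at the same time, and while they do, an attempt to take the write lock with tryLock() fails.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.locks.ReentrantReadWriteLock;

// Hypothetical demo: readers share the read lock; the write lock is excluded while they hold it.
class ReadWriteExclusionDemo {
    final ReentrantReadWriteLock rw = new ReentrantReadWriteLock();
    int readersHolding;     // read holds observed while both readers are inside
    boolean writerAcquired; // did writeLock().tryLock() succeed at that moment?

    void run() throws InterruptedException {
        CountDownLatch bothReading = new CountDownLatch(2);
        CountDownLatch probed = new CountDownLatch(1);
        Runnable reader = () -> {
            rw.readLock().lock(); // shared: both readers get in
            try {
                bothReading.countDown();
                probed.await();   // keep holding the read lock until the probe is done
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            } finally {
                rw.readLock().unlock();
            }
        };
        Thread r1 = new Thread(reader);
        Thread r2 = new Thread(reader);
        r1.start();
        r2.start();
        bothReading.await();
        readersHolding = rw.getReadLockCount();    // read and read: not exclusive
        writerAcquired = rw.writeLock().tryLock(); // read and write: exclusive, so this fails
        if (writerAcquired) rw.writeLock().unlock();
        probed.countDown();
        r1.join();
        r2.join();
    }
}
```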

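```java
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;
import java.util.TreeSet;
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantReadWriteLock;

public class ReentrantReadWriteLockTest {

    private final Map<String, Data> map = new TreeMap<>();

    private final ReentrantReadWriteLock lock = new ReentrantReadWriteLock();

    private final Lock readLock = lock.readLock();

    private final Lock writeLock = lock.writeLock();

    public Data get(String key) {
        readLock.lock(); // Shared: many readers may hold it at once
        try {
            return map.get(key);
        } finally {
            readLock.unlock();
        }
    }

    public Set<String> getAllKeys() {
        readLock.lock();
        try {
            return new TreeSet<>(map.keySet()); // Return a copy; the live keySet view is unsafe outside the lock
        } finally {
            readLock.unlock();
        }
    }

    public Data put(String key, Data value) {
        writeLock.lock(); // Exclusive: waits until no other thread holds the read or write lock
        try {
            return map.put(key, value);
        } finally {
            writeLock.unlock();
        }
    }

    static class Data {
    }
}
```

StampedLock

StampedLock (added in Java 8) supports three modes: write, read and optimistic read. Each locking method returns a long stamp, which must be passed to the matching unlock or validate call.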

```java
import java.util.concurrent.locks.StampedLock;

public class StampedLockTest {

    class Point {
        private double x, y;
        private final StampedLock sl = new StampedLock();

        void move(double deltaX, double deltaY) { // An exclusively locked method
            long stamp = sl.writeLock();
            try {
                x += deltaX;
                y += deltaY;
            } finally {
                sl.unlockWrite(stamp);
            }
        }

        // Optimistic read
        double distanceFromOrigin() { // A read-only method
            long stamp = sl.tryOptimisticRead(); // Get an optimistic read stamp (no locking)
            double currentX = x, currentY = y;   // Copy the two fields into local variables
            if (!sl.validate(stamp)) {           // Was a write lock acquired after the stamp was issued?
                stamp = sl.readLock();           // If so, fall back to a pessimistic read lock
                try {
                    currentX = x;                // Re-read the two fields under the read lock
                    currentY = y;
                } finally {
                    sl.unlockRead(stamp);
                }
            }
            return Math.sqrt(currentX * currentX + currentY * currentY);
        }

        // Pessimistic read lock, upgraded to a write lock when needed
        void moveIfAtOrigin(double newX, double newY) { // upgrade
            // Could instead start with optimistic, not read mode
            long stamp = sl.readLock();
            try {
                while (x == 0.0 && y == 0.0) {               // Check whether the state needs changing
                    long ws = sl.tryConvertToWriteLock(stamp); // Try to convert the read lock to a write lock
                    if (ws != 0L) {                            // Conversion succeeded
                        stamp = ws;                            // Use the new write stamp
                        x = newX;                              // Change the state
                        y = newY;
                        break;
                    } else {                                   // Conversion failed
                        sl.unlockRead(stamp);                  // Explicitly release the read lock
                        stamp = sl.writeLock();                // Acquire the write lock, then re-check in the loop
                    }
                }
            } finally {
                sl.unlock(stamp);                              // Release whichever lock (read or write) is held
            }
        }
    }
}
```

Condition

synchronized can be combined with the wait() and notify()/notifyAll() methods to implement the wait/notify pattern. ReentrantLock can do the same through Condition objects, which are more flexible:

| Multiple Condition instances can be created from one Lock, enabling multi-channel notification. |

| When notify() is used, the thread to be woken is chosen arbitrarily by the Java virtual machine, whereas ReentrantLock combined with Condition allows selective notification, which is an important advantage. |

```java
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.ReentrantLock;
import lombok.extern.slf4j.Slf4j;

@Slf4j // Lombok generates the SLF4J "log" field used below
public class ConditionTest {

    private static boolean signaled = false; // Guarded by reentrantLock

    public static void main(String[] args) {
        ReentrantLock reentrantLock = new ReentrantLock();
        Condition condition = reentrantLock.newCondition();

        new Thread(() -> {
            reentrantLock.lock();
            try {
                log.info("wait signal"); // 1
                while (!signaled) {      // Guards against spurious wakeups and a signal sent before await()
                    condition.await();   // Releases the lock while waiting, re-acquires it before returning
                }
                log.info("get signal");  // 4
            } catch (InterruptedException e) {
                e.printStackTrace();
            } finally {
                reentrantLock.unlock();
            }
        }).start();

        new Thread(() -> {
            reentrantLock.lock();
            try {
                log.info("get lock");       // 2
                Thread.sleep(3000);
                signaled = true;
                condition.signalAll();      // The woken thread continues only after this thread unlocks
                log.info("send signal ~ "); // 3
            } catch (InterruptedException e) {
                e.printStackTrace();
            } finally {
                reentrantLock.unlock();
            }
        }).start();
    }
}
```

Note that if only one Condition is used, every thread that calls await() on it waits on that same Condition, and signalAll() wakes all of them.

To wake only some of the threads, create multiple Conditions; this also avoids unnecessary wakeups and can improve efficiency. Using multiple Conditions is very common: ArrayBlockingQueue, for example, uses one Condition for "not empty" and another for "not full".

In the simplest usage of Condition for the wait/notify pattern, as in the example above, a thread must acquire the lock with lock() before calling await() or signal(), and release it with unlock() in a finally block afterwards. This mirrors the rule that wait()/notify()/notifyAll() may be called only while holding the object's monitor.
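To illustrate multiple Conditions, here is a minimal bounded buffer in the spirit of ArrayBlockingQueue; it is a hypothetical sketch, not the JDK code. Producers wait on notFull and consumers on notEmpty, so each signal() wakes only the kind of thread that can make progress.

```java
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical minimal bounded buffer using two Conditions on one lock.
class BoundedBuffer<E> {
    private final Object[] items;
    private int putIndex, takeIndex, count;
    private final ReentrantLock lock = new ReentrantLock();
    private final Condition notFull = lock.newCondition();  // producers wait here
    private final Condition notEmpty = lock.newCondition(); // consumers wait here

    BoundedBuffer(int capacity) { items = new Object[capacity]; }

    void put(E e) throws InterruptedException {
        lock.lock();
        try {
            while (count == items.length) notFull.await(); // loop guards against spurious wakeups
            items[putIndex] = e;
            putIndex = (putIndex + 1) % items.length;
            count++;
            notEmpty.signal(); // wake one consumer, never a producer
        } finally {
            lock.unlock();
        }
    }

    @SuppressWarnings("unchecked")
    E take() throws InterruptedException {
        lock.lock();
        try {
            while (count == 0) notEmpty.await();
            E e = (E) items[takeIndex];
            items[takeIndex] = null;
            takeIndex = (takeIndex + 1) % items.length;
            count--;
            notFull.signal(); // wake one producer, never a consumer
            return e;
        } finally {
            lock.unlock();
        }
    }
}
```

With a single Condition, signal() might wake another producer when the buffer is full, which would just go back to waiting; two Conditions avoid that.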

Comparison between synchronized and ReentrantLock:

| synchronized is a keyword, a language-level construct like if...else, so acquiring and releasing the lock is handled by the Java virtual machine. ReentrantLock is implemented at the class (library) level, so the user must acquire and release the lock explicitly. To repeat: after using a ReentrantLock, you must call unlock() yourself, normally in a finally block. |

| synchronized is simple, and simplicity means less flexibility. ReentrantLock gives users much more control over locking. Hashtable and ConcurrentHashMap illustrate this well: Hashtable's synchronized methods lock the entire hash table, while ConcurrentHashMap in Java 7 and earlier used lock splitting, with Segment objects that extend ReentrantLock, so only one segment is locked rather than the whole table. |

| synchronized is an unfair lock, while ReentrantLock can be either fair or unfair. |

| With the wait/notify mechanism of synchronized, the thread woken by notify() is chosen arbitrarily; with ReentrantLock and Condition, notification can be selective. |

| Compared with synchronized, ReentrantLock offers a variety of methods for querying the lock, such as whether it is held by the current thread (isHeldByCurrentThread()), how many times the current thread holds it (getHoldCount()), and whether any thread holds it (isLocked()). |

| In short, if you only need to lock simple methods or code blocks, consider synchronized; in complex multithreaded scenarios, consider ReentrantLock. This is only a guideline, and each case should be analyzed on its own. |

Callable, Future, and FutureTask

The difference between Callable and Runnable

| Runnable has no return value; Callable returns the result of its execution. Callable is generic and can be combined with Future and FutureTask to obtain the result of an asynchronous execution. |

| Callable.call() may throw checked exceptions; Runnable.run() cannot throw checked exceptions, so they must be handled inside run(). |

Note: the result of a Callable is obtained by calling futureTask.get() (or Future.get()). This call blocks the calling thread (for example, the main thread) until the result is available; if get() is never called, nothing blocks.

Callable

Callable is similar to Runnable: both are designed for classes whose instances may be executed by another thread. The main difference is that a Runnable does not return a result, while a Callable does, so use Callable when the task needs to return a value.

Future

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import lombok.extern.slf4j.Slf4j;

@Slf4j // Lombok generates the SLF4J "log" field used below
public class FutureExample {

    static class MyCallable implements Callable<String> {
        @Override
        public String call() throws Exception {
            log.info("do something in callable");
            Thread.sleep(5000);
            return "Done";
        }
    }

    public static void main(String[] args) throws Exception {
        ExecutorService executorService = Executors.newCachedThreadPool();
        Future<String> future = executorService.submit(new MyCallable());
        log.info("do something in main");
        Thread.sleep(1000);
        String result = future.get(); // Blocks until call() returns
        log.info("result: {}", result);
        executorService.shutdown();
    }
}
```

Future is an interface that represents the result of an asynchronous computation. It provides methods to check whether the computation is complete, to wait for it to complete, and to retrieve its result. Its methods are get() (also available with a timeout), cancel(), isCancelled() and isDone(), which give Future three capabilities:

| Check whether the task has completed |

| Cancel (interrupt) the task |

| Get the result of the task |
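A sketch of these three capabilities on a running task (the class name is hypothetical, and the 10-second sleep just stands for long work): isDone() reports completion, cancel(true) interrupts the task, and afterwards isCancelled() and isDone() both return true.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Hypothetical demo of isDone(), cancel() and isCancelled() on a running task.
class FutureStatesDemo {
    // Returns {isDone() before cancel, cancel(true) result, isCancelled() after, isDone() after}
    static boolean[] cancelRunningTask() throws InterruptedException {
        ExecutorService pool = Executors.newSingleThreadExecutor();
        CountDownLatch started = new CountDownLatch(1);
        Future<String> future = pool.submit(() -> {
            started.countDown();
            Thread.sleep(10_000); // stands in for long work; cancel(true) interrupts it
            return "never returned";
        });
        started.await();                         // the task is now running
        boolean doneBefore = future.isDone();    // false: still running
        boolean cancelled = future.cancel(true); // true: interrupt the worker thread
        boolean[] states = {doneBefore, cancelled, future.isCancelled(), future.isDone()};
        pool.shutdown();
        return states;
    }
}
```

After a successful cancel, calling future.get() throws a CancellationException instead of returning a result.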

FutureTask

FutureTask is an implementation class of Future and provides its basic implementation. FutureTask can wrap a Callable or a Runnable object, and because FutureTask implements Runnable, it can be run by a Thread or submitted to an Executor.

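```java
import java.util.concurrent.Callable;
import java.util.concurrent.FutureTask;
import lombok.extern.slf4j.Slf4j;

@Slf4j // Lombok generates the SLF4J "log" field used below
public class FutureTaskExample {

    public static void main(String[] args) throws Exception {
        FutureTask<String> futureTask = new FutureTask<>(new Callable<String>() {
            @Override
            public String call() throws Exception {
                log.info("do something in callable");
                Thread.sleep(5000);
                return "Done";
            }
        });
        new Thread(futureTask).start(); // FutureTask is a Runnable, so a plain Thread can run it
        log.info("do something in main");
        Thread.sleep(1000);
        String result = futureTask.get(); // Blocks until call() returns
        log.info("result: {}", result);
    }
}
```

Usage: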

Callable, Future and FutureTask are usually used together with a thread pool, because AbstractExecutorService, the parent class of ThreadPoolExecutor, provides three submit methods:

| public Future<?> submit(Runnable task) {...} |

| public <T> Future<T> submit(Runnable task, T result) {...} |

| public <T> Future<T> submit(Callable<T> task) {...} |
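A sketch of what each submit overload yields (the class name is hypothetical): the Future from submit(Runnable) returns null, submit(Runnable, result) returns the supplied result, and submit(Callable) returns the value computed by call().

```java
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Hypothetical demo of the three AbstractExecutorService.submit overloads.
class SubmitOverloadsDemo {
    static Object[] run() throws InterruptedException, ExecutionException {
        ExecutorService pool = Executors.newFixedThreadPool(2);
        try {
            Runnable task = () -> { };                    // does nothing, returns nothing
            Future<?> f1 = pool.submit(task);             // get() returns null
            Future<String> f2 = pool.submit(task, "ok");  // get() returns the given result
            Future<Integer> f3 = pool.submit(() -> 6 * 7); // a Callable<Integer>; get() returns 42
            return new Object[] {f1.get(), f2.get(), f3.get()};
        } finally {
            pool.shutdown();
        }
    }
}
```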

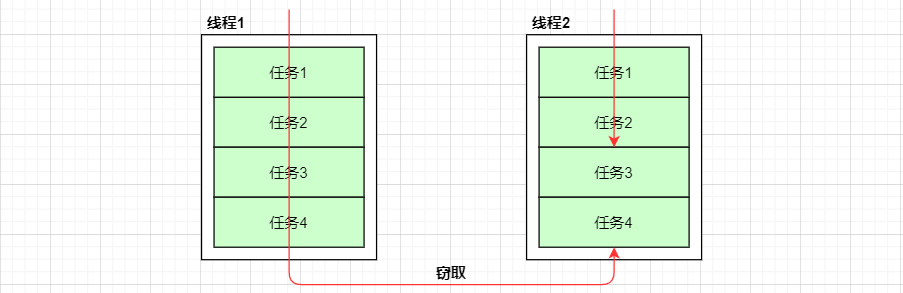

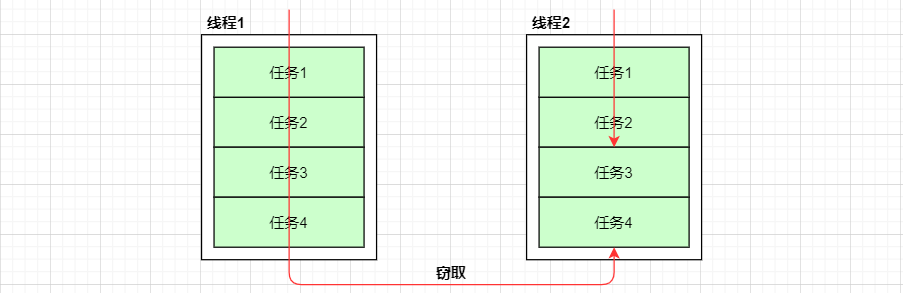

Fork/Join:

Work-stealing algorithm + double-ended queue (deque):

The Fork/Join framework splits a large task into several small tasks and then combines the results of the small tasks to obtain the result of the large task. The Fork/Join framework does two things:

1. Split the task: first, the framework divides the large task into sufficiently small subtasks; if a subtask is still too large, it keeps splitting it.

2. Execute the tasks and merge the results: the subtasks are placed in double-ended queues, and several worker threads take tasks from these deques and execute them. A worker whose own deque is empty steals tasks from the other end of another worker's deque. The results of the subtasks are then collected and merged.
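A variant of the idea as a sketch, with a hypothetical task class and an assumed threshold of 1,000 elements: instead of forking both halves, the task forks the left half onto the current worker's deque (where an idle worker may steal it), computes the right half in the current thread, and then joins.

```java
import java.util.concurrent.ForkJoinPool;
import java.util.concurrent.RecursiveTask;

// Hypothetical task: sums array[from, to) by recursive splitting.
class ArraySumTask extends RecursiveTask<Long> {
    private static final int THRESHOLD = 1_000; // below this size, sum sequentially (assumed value)
    private final long[] array;
    private final int from, to;

    ArraySumTask(long[] array, int from, int to) {
        this.array = array;
        this.from = from;
        this.to = to;
    }

    @Override
    protected Long compute() {
        if (to - from <= THRESHOLD) { // small enough: compute directly
            long sum = 0;
            for (int i = from; i < to; i++) sum += array[i];
            return sum;
        }
        int mid = (from + to) >>> 1;  // otherwise split into two subtasks
        ArraySumTask left = new ArraySumTask(array, from, mid);
        ArraySumTask right = new ArraySumTask(array, mid, to);
        left.fork();                  // push onto this worker's deque; idle workers may steal it
        long rightSum = right.compute(); // keep working on the other half in this thread
        return left.join() + rightSum;   // merge the results
    }

    static long sum(long[] array) {
        return ForkJoinPool.commonPool().invoke(new ArraySumTask(array, 0, array.length));
    }
}
```

Computing one half directly keeps the current worker busy instead of having it fork both halves and then immediately wait in join().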

public class ForkJoinTaskTest extends RecursiveTask<Integer> {

public static final int threshold = 2;

private int start;

private int end;

public ForkJoinTaskExample(int start, int end) {

this.start = start;

this.end = end;

}

@Override

protected Integer compute() {

int sum = 0;

boolean canCompute = (end - start) <= threshold;//If the task is small enough, calculate the task

if (canCompute) {

for (int i = start; i <= end; i++) {

sum += i;

}

} else {

int middle = (start + end) / 2; // If the task is greater than the threshold, it is split into two subtasks for calculation

ForkJoinTaskExample leftTask = new ForkJoinTaskExample(start, middle);

ForkJoinTaskExample rightTask = new ForkJoinTaskExample(middle + 1, end);

leftTask.fork();// Execute subtasks

rightTask.fork();

int leftResult = leftTask.join();// Wait for task execution to end and merge its results

int rightResult = rightTask.join();

sum = leftResult + rightResult;// Merge subtasks

}

return sum;

}

public static void main(String[] args) {

ForkJoinPool forkjoinPool = new ForkJoinPool();

ForkJoinTaskExample task = new ForkJoinTaskExample(1, 100);//Generate a calculation task to calculate 1 + 2 + 3 + 4

Future<Integer> result = forkjoinPool.submit(task);//Perform a task

try {

log.info("result:{}", result.get());

} catch (Exception e) {

log.error("exception", e);

}

}

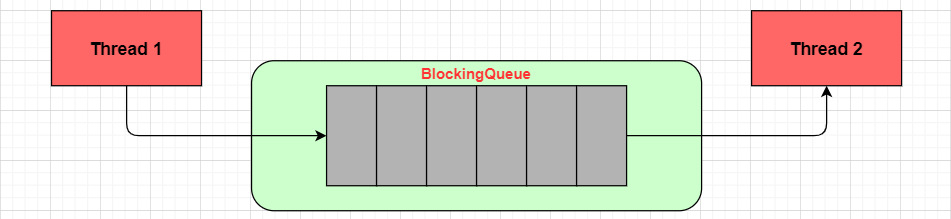

}BlockingQueue

BlockingQueue solves the problem of how to pass data between threads efficiently and safely. These efficient, thread-safe queue classes make it much easier to build high-quality multithreaded programs quickly.

API:

| Method | Description |
|---|---|
| boolean add(E e) | Inserts the element if space is available and returns true; if the queue is full, throws IllegalStateException. For a capacity-restricted queue, offer() is generally preferable. |
| boolean offer(E e) | Inserts the element if space is available and returns true; returns false if the queue is full. e must not be null, otherwise a NullPointerException is thrown. |
| void put(E e) throws InterruptedException | Inserts the element, blocking until space becomes available if the queue is full. |
| boolean offer(E e, long timeout, TimeUnit unit) throws InterruptedException | Inserts the element, waiting up to the given time for space; returns true on success and false if the time elapses first. |
| E take() throws InterruptedException | Retrieves and removes the head of the queue, blocking until an element becomes available. |
| E poll(long timeout, TimeUnit unit) throws InterruptedException | Retrieves and removes the head of the queue, waiting up to the given time; returns null if no element becomes available in time. |
| int remainingCapacity() | Returns the number of additional elements the queue can accept without blocking. |
| boolean remove(Object o) | Removes a single instance of the given element, if present. |
| boolean contains(Object o) | Returns true if the queue contains the given element. |
| int drainTo(Collection<? super E> c) | Removes all available elements and adds them to the given collection; returns the number of elements transferred. |
| int drainTo(Collection<? super E> c, int maxElements) | Removes at most maxElements available elements and adds them to the given collection. |
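A sketch exercising the blocking and non-blocking methods on an ArrayBlockingQueue (the class and method names are hypothetical): put() and take() hand elements from a producer to a consumer in FIFO order, while offer() on a full queue returns false instead of blocking.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.TimeUnit;

// Hypothetical demo of put/take versus offer on a bounded queue.
class BlockingQueueDemo {
    static List<Integer> transfer(int n) throws InterruptedException {
        BlockingQueue<Integer> queue = new ArrayBlockingQueue<>(2); // capacity 2: put() blocks when full
        Thread producer = new Thread(() -> {
            try {
                for (int i = 0; i < n; i++) queue.put(i);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();
        List<Integer> received = new ArrayList<>();
        for (int i = 0; i < n; i++) received.add(queue.take()); // take() blocks when empty
        producer.join();
        return received;
    }

    // Returns {first offer, second offer, timed offer, queue is full afterwards}
    static boolean[] nonBlockingOnFull() throws InterruptedException {
        BlockingQueue<String> queue = new ArrayBlockingQueue<>(1);
        boolean first = queue.offer("a");  // true: space available
        boolean second = queue.offer("b"); // false: full, returns immediately
        boolean timed = queue.offer("c", 10, TimeUnit.MILLISECONDS); // false after waiting 10 ms
        return new boolean[] {first, second, timed, queue.remainingCapacity() == 0};
    }
}
```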

Members of the blocking queue

| Queue | Boundedness | Locking | Data structure | Description |
|---|---|---|---|---|
| ArrayBlockingQueue | bounded | Lock (one ReentrantLock) | array | A bounded blocking queue backed by an array that orders elements FIFO (first in, first out). It supports fair and unfair access. (A lock is fair if the thread that has waited longest acquires it first, i.e. requests are satisfied in order of waiting time; otherwise it is unfair.) |
| LinkedBlockingQueue | optionally bounded | Lock (separate put and take locks) | linked list | A FIFO blocking queue backed by a linked list. If no capacity is given, the capacity defaults to Integer.MAX_VALUE. |
| PriorityBlockingQueue | unbounded | Lock | heap | An unbounded queue that orders elements by priority: natural ordering by default (elements implement compareTo()), or a Comparator passed to the constructor. The order of elements with equal priority is not guaranteed. |
| DelayQueue | unbounded | Lock | heap (internal PriorityQueue) | An unbounded queue of Delayed elements, ordered by delay; an element can be taken only after its delay has expired. Typical uses: 1. Cache systems: store cache entries with their validity period in a DelayQueue and let a thread poll it; once an element can be taken, that entry has expired. 2. Scheduled tasks: store the tasks to run and their execution times in a DelayQueue, and run each task as soon as it can be taken from the queue. For example, TimerQueue is implemented using DelayQueue. |
| SynchronousQueue | zero capacity | no lock (CAS) | none (direct hand-off) | A blocking queue that stores no elements: each put must wait for a matching take, and vice versa. Supports fair and unfair modes. Executors.newCachedThreadPool() uses a SynchronousQueue: the pool creates new threads as tasks arrive, reuses idle threads, and reclaims threads that have been idle for 60 seconds. |
| LinkedTransferQueue | unbounded | no lock (CAS) | linked list | An unbounded blocking queue backed by a linked list. Compared with the other queues, it adds the transfer() and tryTransfer() methods. |
| LinkedBlockingDeque | optionally bounded | Lock (one ReentrantLock) | doubly linked list | A blocking double-ended queue backed by a linked list: elements can be inserted and removed at both the head and the tail. If no capacity is given, the capacity defaults to Integer.MAX_VALUE. |
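A sketch of the cache-expiry scenario described for DelayQueue; the ExpiringEntry and DelayQueueDemo classes are hypothetical. Elements implement Delayed, and take() releases them in expiry order, not insertion order, and only after their delay has elapsed.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.DelayQueue;
import java.util.concurrent.Delayed;
import java.util.concurrent.TimeUnit;

// Hypothetical cache entry: can be taken from a DelayQueue only after ttlMillis has elapsed.
class ExpiringEntry implements Delayed {
    final String key;
    private final long expiresAtNanos;

    ExpiringEntry(String key, long ttlMillis) {
        this.key = key;
        this.expiresAtNanos = System.nanoTime() + TimeUnit.MILLISECONDS.toNanos(ttlMillis);
    }

    @Override
    public long getDelay(TimeUnit unit) { // <= 0 means expired, so take()/poll() may return it
        return unit.convert(expiresAtNanos - System.nanoTime(), TimeUnit.NANOSECONDS);
    }

    @Override
    public int compareTo(Delayed other) { // earliest expiry goes to the head of the internal heap
        if (other instanceof ExpiringEntry) {
            return Long.compare(expiresAtNanos, ((ExpiringEntry) other).expiresAtNanos);
        }
        return Long.compare(getDelay(TimeUnit.NANOSECONDS), other.getDelay(TimeUnit.NANOSECONDS));
    }
}

class DelayQueueDemo {
    // Returns the keys in the order take() hands them out
    static List<String> takeInExpiryOrder() throws InterruptedException {
        DelayQueue<ExpiringEntry> queue = new DelayQueue<>();
        queue.put(new ExpiringEntry("b", 60)); // inserted first, but expires later
        queue.put(new ExpiringEntry("a", 20));
        List<String> order = new ArrayList<>();
        order.add(queue.take().key); // blocks until "a" has expired
        order.add(queue.take().key); // blocks until "b" has expired
        return order;
    }
}
```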