title: Audio and Video Series 4: Acquiring Audio and Video Frame Data with FFmpeg

categories: [ffmpeg]

tags: [audio and video programming]

date: 2021/11/29

<div align='right'> Author: Hackett </div>

<div align='right'> WeChat official account: overtime apes </div>

1, Decoding audio and video into AVFrame

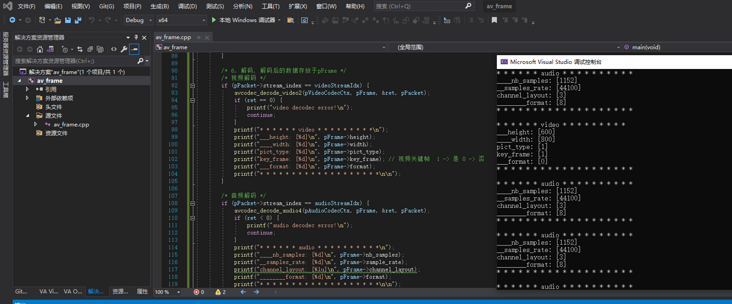

1. The screenshot above shows 20 frames of FLV file data parsed with FFmpeg. AVFrame is a structure that holds decoded frame data together with many stream parameters. It is defined in libavutil/frame.h.

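Full code (written against the FFmpeg 3.x/4.x API; av_register_all(), AVStream::codec and avcodec_decode_video2()/avcodec_decode_audio4() were removed in FFmpeg 5.0, where avcodec_send_packet()/avcodec_receive_frame() are used instead):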

```c
#include <inttypes.h>
#include <stdio.h>
#include <stdlib.h>

#ifdef __cplusplus
extern "C" {
#endif
#include <libavcodec/avcodec.h>
#include <libavformat/avformat.h>
#ifdef __cplusplus
}
#endif

/* Find the first stream of the given type, then find and open its decoder. */
int openCodecContext(const AVFormatContext* pFormatCtx, int* pStreamIndex, enum AVMediaType type, AVCodecContext** ppCodecCtx) {
    int streamIdx = -1;

    // Get the stream index
    for (unsigned int i = 0; i < pFormatCtx->nb_streams; i++) {
        if (pFormatCtx->streams[i]->codec->codec_type == type) {
            streamIdx = (int)i;
            break;
        }
    }
    if (streamIdx == -1) {
        printf("find %s stream failed!\n", av_get_media_type_string(type));
        exit(-1);
    }

    // Find the decoder
    AVCodecContext* pCodecCtx = pFormatCtx->streams[streamIdx]->codec;
    AVCodec* pCodec = avcodec_find_decoder(pCodecCtx->codec_id);
    if (NULL == pCodec) {
        printf("avcodec find decoder failed!\n");
        exit(-1);
    }

    // Open the decoder
    if (avcodec_open2(pCodecCtx, pCodec, NULL) < 0) {
        printf("avcodec open failed!\n");
        exit(-1);
    }

    *ppCodecCtx = pCodecCtx;
    *pStreamIndex = streamIdx;
    return 0;
}

int main(void)
{
    AVFormatContext* pInFormatCtx = NULL;
    AVCodecContext* pVideoCodecCtx = NULL;
    AVCodecContext* pAudioCodecCtx = NULL;
    AVPacket* pPacket = NULL;
    AVFrame* pFrame = NULL;
    int ret;

    /* Both local files and network URLs are supported */
    const char streamUrl[] = "./ouput_1min.flv";

    /* 1. Register (required before FFmpeg 4.0, deprecated since then) */
    av_register_all();
    pInFormatCtx = avformat_alloc_context();

    /* 2. Open the stream */
    if (avformat_open_input(&pInFormatCtx, streamUrl, NULL, NULL) != 0) {
        printf("Couldn't open input stream.\n");
        return -1;
    }

    /* 3. Get stream information */
    if (avformat_find_stream_info(pInFormatCtx, NULL) < 0) {
        printf("Couldn't find stream information.\n");
        return -1;
    }

    int videoStreamIdx = -1;
    int audioStreamIdx = -1;

    /* 4. Find and open the decoders */
    openCodecContext(pInFormatCtx, &videoStreamIdx, AVMEDIA_TYPE_VIDEO, &pVideoCodecCtx);
    openCodecContext(pInFormatCtx, &audioStreamIdx, AVMEDIA_TYPE_AUDIO, &pAudioCodecCtx);

    pPacket = av_packet_alloc();
    pFrame = av_frame_alloc();

    int cnt = 20; // Read 20 packets (audio and video combined)
    while (cnt--) {
        /* 5. Read one packet of still-compressed stream data into pPacket */
        ret = av_read_frame(pInFormatCtx, pPacket);
        if (ret < 0) {
            printf("av_read_frame error\n");
            break;
        }

        /* 6. Decode the packet; the decoded data is stored in pFrame */
        int gotFrame = 0; // Set to nonzero by the decoder when a frame was output

        /* Video decoding */
        if (pPacket->stream_index == videoStreamIdx) {
            ret = avcodec_decode_video2(pVideoCodecCtx, pFrame, &gotFrame, pPacket);
            if (ret < 0 || !gotFrame) {
                printf("video decode error or no frame output yet!\n");
                av_packet_unref(pPacket); // Release the packet before skipping it
                continue;
            }
            printf("* * * * * * video * * * * * * * * *\n");
            printf("___height: [%d]\n", pFrame->height);
            printf("____width: [%d]\n", pFrame->width);
            printf("pict_type: [%d]\n", pFrame->pict_type);
            printf("key_frame: [%d]\n", pFrame->key_frame); // 1 = keyframe, 0 = not a keyframe
            printf("___format: [%d]\n", pFrame->format);
            printf("* * * * * * * * * * * * * * * * * * *\n\n");
        }
        /* Audio decoding */
        else if (pPacket->stream_index == audioStreamIdx) {
            ret = avcodec_decode_audio4(pAudioCodecCtx, pFrame, &gotFrame, pPacket);
            if (ret < 0 || !gotFrame) {
                printf("audio decode error or no frame output yet!\n");
                av_packet_unref(pPacket); // Release the packet before skipping it
                continue;
            }
            printf("* * * * * * audio * * * * * * * * * *\n");
            printf("____nb_samples: [%d]\n", pFrame->nb_samples);
            printf("__samples_rate: [%d]\n", pFrame->sample_rate);
            printf("channel_layout: [%" PRIu64 "]\n", pFrame->channel_layout);
            printf("________format: [%d]\n", pFrame->format);
            printf("* * * * * * * * * * * * * * * * * * *\n\n");
        }

        /* Drop this packet's reference to its buffer (the buffer is freed when the
           reference count reaches 0) and reset the other packet fields to their defaults */
        av_packet_unref(pPacket);
    }

    /* Release resources */
    av_frame_free(&pFrame);
    av_packet_free(&pPacket);
    avcodec_close(pVideoCodecCtx);
    avcodec_close(pAudioCodecCtx);
    avformat_close_input(&pInFormatCtx);
    return 0;
}
```

2. Briefly, the role of each function in the process:

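av_register_all(): registers all of FFmpeg's muxers, demuxers, protocols and codecs (deprecated since FFmpeg 4.0, where registration is no longer needed).

avformat_open_input(): opens the input stream and fills in its AVFormatContext.

avformat_find_stream_info(): reads packets from the input to obtain information about each stream.

avcodec_find_decoder(): finds the decoder for a codec ID.

avcodec_open2(): opens (initializes) the decoder.

av_read_frame(): reads the next packet of compressed stream data.

avcodec_decode_video2() / avcodec_decode_audio4(): decodes one video / audio packet into an AVFrame.

av_write_frame(): writes an encoded packet to an output file; it is used when muxing and is not needed in this program.

av_packet_unref(): decrements the reference count of the packet's buffer by 1 and resets the other packet fields to their default values. When the reference count reaches 0, the buffer is freed automatically.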

2, AVFrame data structure

The AVFrame structure is generally used to store raw (uncompressed) data, such as YUV or RGB for video and PCM for audio, together with related metadata.

The source comments are lengthy, so they are omitted here. The definition below is from FFmpeg 4.x (libavutil/frame.h):

```c
typedef struct AVFrame {
#define AV_NUM_DATA_POINTERS 8
    uint8_t *data[AV_NUM_DATA_POINTERS];
    int linesize[AV_NUM_DATA_POINTERS];
    uint8_t **extended_data;
    int width, height;
    int nb_samples;
    int format;
    int key_frame;
    enum AVPictureType pict_type;
    AVRational sample_aspect_ratio;
    int64_t pts;
#if FF_API_PKT_PTS
    attribute_deprecated
    int64_t pkt_pts;
#endif
    int64_t pkt_dts;
    int coded_picture_number;
    int display_picture_number;
    int quality;
    void *opaque;
#if FF_API_ERROR_FRAME
    attribute_deprecated
    uint64_t error[AV_NUM_DATA_POINTERS];
#endif
    int repeat_pict;
    int interlaced_frame;
    int top_field_first;
    int palette_has_changed;
    int64_t reordered_opaque;
    int sample_rate;
    uint64_t channel_layout;
    AVBufferRef *buf[AV_NUM_DATA_POINTERS];
    AVBufferRef **extended_buf;
    int nb_extended_buf;
    AVFrameSideData **side_data;
    int nb_side_data;
#define AV_FRAME_FLAG_CORRUPT (1 << 0)
#define AV_FRAME_FLAG_DISCARD (1 << 2)
    int flags;
    enum AVColorRange color_range;
    enum AVColorPrimaries color_primaries;
    enum AVColorTransferCharacteristic color_trc;
    enum AVColorSpace colorspace;
    enum AVChromaLocation chroma_location;
    int64_t best_effort_timestamp;
    int64_t pkt_pos;
    int64_t pkt_duration;
    AVDictionary *metadata;
    int decode_error_flags;
#define FF_DECODE_ERROR_INVALID_BITSTREAM 1
#define FF_DECODE_ERROR_MISSING_REFERENCE 2
#define FF_DECODE_ERROR_CONCEALMENT_ACTIVE 4
#define FF_DECODE_ERROR_DECODE_SLICES 8
    int channels;
    int pkt_size;
#if FF_API_FRAME_QP
    attribute_deprecated
    int8_t *qscale_table;
    attribute_deprecated
    int qstride;
    attribute_deprecated
    int qscale_type;
    attribute_deprecated
    AVBufferRef *qp_table_buf;
#endif
    AVBufferRef *hw_frames_ctx;
    AVBufferRef *opaque_ref;
    size_t crop_top;
    size_t crop_bottom;
    size_t crop_left;
    size_t crop_right;
    AVBufferRef *private_ref;
} AVFrame;
```

Next, let's focus on some commonly used members:

2.1 data

```c
uint8_t *data[AV_NUM_DATA_POINTERS]; // Decoded raw data (YUV or RGB for video, PCM for audio)
```

data is an array of pointers. For planar formats, each element points to one plane: one image plane for video (for example Y, U and V for YUV420P) or one channel plane for audio. For packed formats, all of the data is interleaved in data[0].
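A minimal, FFmpeg-independent sketch of the two audio layouts: in a planar format such as AV_SAMPLE_FMT_FLTP each channel has its own data[] plane, while in a packed format such as AV_SAMPLE_FMT_FLT all channels are interleaved in data[0]. The helper `get_sample` is hypothetical and works on plain float arrays standing in for AVFrame data.

```c
#include <stddef.h>

/* Hypothetical helper: read sample i of channel ch from a float audio buffer.
 * planar != 0: data[ch] is that channel's own plane (like AV_SAMPLE_FMT_FLTP).
 * planar == 0: data[0] holds interleaved samples L R L R ... (like AV_SAMPLE_FMT_FLT). */
static float get_sample(float *const *data, int channels, int planar, int ch, size_t i)
{
    if (planar)
        return data[ch][i];
    /* Packed: frame i starts at i * channels, then offset by the channel index */
    return data[0][i * (size_t)channels + (size_t)ch];
}
```

With a real AVFrame you would point it at frame->extended_data and use av_sample_fmt_is_planar(frame->format) to decide which layout applies.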

2.2 linesize

```c
int linesize[AV_NUM_DATA_POINTERS]; // Size in bytes of one "row" of each plane in data; may exceed the visible row size
```

For video, each linesize element is the size in bytes of one row of the corresponding image plane, including any alignment padding.

For audio, only linesize[0] is set: it is the size in bytes of each plane (for planar audio, all channel planes have the same size).

For performance reasons (for example SIMD alignment), rows may be padded, so linesize can be larger than the size of the actual audio or video data.
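The effect of padding can be sketched with plain arithmetic. The hypothetical helpers below compute padded linesizes for a YUV420P image, assuming a 32-byte alignment (FFmpeg chooses its own alignment internally), and address a pixel as y * linesize + x rather than y * width + x.

```c
#include <stddef.h>
#include <stdint.h>

/* Round n up to a multiple of align (align must be a power of two). */
static int align_up(int n, int align)
{
    return (n + align - 1) & ~(align - 1);
}

/* Hypothetical helper: padded linesize of each YUV420P plane.
 * The alignment is an assumption, not the value FFmpeg necessarily uses. */
static void yuv420p_linesizes(int width, int align, int linesize[3])
{
    int chroma_w = (width + 1) / 2;  /* U and V are subsampled 2x horizontally */
    linesize[0] = align_up(width, align);
    linesize[1] = align_up(chroma_w, align);
    linesize[2] = align_up(chroma_w, align);
}

/* Read pixel (x, y) of one 8-bit plane: rows are linesize bytes apart, not width. */
static uint8_t get_pixel(const uint8_t *plane, int linesize, int x, int y)
{
    return plane[(size_t)y * (size_t)linesize + (size_t)x];
}
```

For a 1366-pixel-wide frame with 32-byte alignment, the Y linesize becomes 1376 rather than 1366, which is why code that copies a frame row by row must step by linesize.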

2.3 width, height;

```c
int width, height; // Video frame width and height (1920x1080, 1280x720, ...)
```
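As a rough guide to how width and height translate into memory, here is a sketch of the unpadded size of one frame for two common pixel formats. The helper names are hypothetical; FFmpeg's own av_image_get_buffer_size() (libavutil/imgutils.h) computes this for any pixel format.

```c
#include <stddef.h>

/* Hypothetical helper: tightly packed YUV420P frame size in bytes. */
static size_t yuv420p_frame_bytes(int width, int height)
{
    size_t luma   = (size_t)width * (size_t)height;
    size_t chroma = (size_t)((width + 1) / 2) * (size_t)((height + 1) / 2);
    return luma + 2 * chroma;  /* Y plane + U plane + V plane */
}

/* Hypothetical helper: tightly packed RGB24 frame size in bytes. */
static size_t rgb24_frame_bytes(int width, int height)
{
    return (size_t)width * (size_t)height * 3;  /* 3 bytes per pixel */
}
```

For 1920x1080, YUV420P needs 3,110,400 bytes (1.5 bytes per pixel), while RGB24 needs twice that.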

2.4 nb_samples

```c
int nb_samples; // Number of audio samples per channel in this frame
```
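nb_samples together with sample_rate gives the duration of an audio frame, and together with the channel count and sample size gives the amount of PCM data. A minimal sketch with hypothetical helper names; in FFmpeg, av_get_bytes_per_sample() and av_samples_get_buffer_size() provide the per-format values.

```c
#include <stddef.h>

/* Hypothetical helper: duration of one audio frame in milliseconds. */
static double frame_duration_ms(int nb_samples, int sample_rate)
{
    return 1000.0 * nb_samples / sample_rate;
}

/* Hypothetical helper: bytes of sample data in one frame across all channels,
 * ignoring padding. bytes_per_sample is 2 for S16/S16P, 4 for FLT/FLTP, etc. */
static size_t frame_data_bytes(int nb_samples, int channels, int bytes_per_sample)
{
    return (size_t)nb_samples * (size_t)channels * (size_t)bytes_per_sample;
}
```

For example, an MP3 frame of 1152 samples at 48 kHz lasts 24 ms, and an AAC frame of 1024 samples at 44.1 kHz lasts about 23.2 ms.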

2.5 format

```c
int format; // Format of the decoded data (pixel format for video, sample format for audio)
```

For video frames, this value corresponds to enum AVPixelFormat

```c
enum AVPixelFormat {
    AV_PIX_FMT_NONE = -1,
    AV_PIX_FMT_YUV420P,   ///< planar YUV 4:2:0, 12bpp, (1 Cr & Cb sample per 2x2 Y samples)
    AV_PIX_FMT_YUYV422,   ///< packed YUV 4:2:2, 16bpp, Y0 Cb Y1 Cr
    AV_PIX_FMT_RGB24,     ///< packed RGB 8:8:8, 24bpp, RGBRGB...
    AV_PIX_FMT_BGR24,     ///< packed RGB 8:8:8, 24bpp, BGRBGR...
    AV_PIX_FMT_YUV422P,   ///< planar YUV 4:2:2, 16bpp, (1 Cr & Cb sample per 2x1 Y samples)
    AV_PIX_FMT_YUV444P,   ///< planar YUV 4:4:4, 24bpp, (1 Cr & Cb sample per 1x1 Y samples)
    AV_PIX_FMT_YUV410P,   ///< planar YUV 4:1:0, 9bpp, (1 Cr & Cb sample per 4x4 Y samples)
    AV_PIX_FMT_YUV411P,   ///< planar YUV 4:1:1, 12bpp, (1 Cr & Cb sample per 4x1 Y samples)
    AV_PIX_FMT_GRAY8,     ///< Y , 8bpp
    AV_PIX_FMT_MONOWHITE, ///< Y , 1bpp, 0 is white, 1 is black, in each byte pixels are ordered from the msb to the lsb
    AV_PIX_FMT_MONOBLACK, ///< Y , 1bpp, 0 is black, 1 is white, in each byte pixels are ordered from the msb to the lsb
    AV_PIX_FMT_PAL8,      ///< 8 bit with PIX_FMT_RGB32 palette
    AV_PIX_FMT_YUVJ420P,  ///< planar YUV 4:2:0, 12bpp, full scale (JPEG), deprecated in favor of PIX_FMT_YUV420P and setting color_range
    /* ... (omitted) */
};
```

For audio frames, this value corresponds to enum AVSampleFormat:

```c
enum AVSampleFormat {
    AV_SAMPLE_FMT_NONE = -1,
    AV_SAMPLE_FMT_U8,   ///< unsigned 8 bits
    AV_SAMPLE_FMT_S16,  ///< signed 16 bits
    AV_SAMPLE_FMT_S32,  ///< signed 32 bits
    AV_SAMPLE_FMT_FLT,  ///< float
    AV_SAMPLE_FMT_DBL,  ///< double
    AV_SAMPLE_FMT_U8P,  ///< unsigned 8 bits, planar
    AV_SAMPLE_FMT_S16P, ///< signed 16 bits, planar
    AV_SAMPLE_FMT_S32P, ///< signed 32 bits, planar
    AV_SAMPLE_FMT_FLTP, ///< float, planar
    AV_SAMPLE_FMT_DBLP, ///< double, planar
    AV_SAMPLE_FMT_NB    ///< Number of sample formats. DO NOT USE if linking dynamically
};
```

2.6 key_frame

```c
int key_frame; // 1 if this is a keyframe, 0 otherwise
```

2.7 pict_type

```c
enum AVPictureType pict_type; // Picture type of the frame (I, B, P, ...)
```

Possible video frame types (I, B, P, etc.):

```c
enum AVPictureType {
    AV_PICTURE_TYPE_NONE = 0, ///< Undefined
    AV_PICTURE_TYPE_I,        ///< Intra
    AV_PICTURE_TYPE_P,        ///< Predicted
    AV_PICTURE_TYPE_B,        ///< Bi-dir predicted
    AV_PICTURE_TYPE_S,        ///< S(GMC)-VOP MPEG-4
    AV_PICTURE_TYPE_SI,       ///< Switching Intra
    AV_PICTURE_TYPE_SP,       ///< Switching Predicted
    AV_PICTURE_TYPE_BI,       ///< BI type
};
```

2.8 sample_aspect_ratio

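```c
AVRational sample_aspect_ratio; // Sample (pixel) aspect ratio, 0/1 if unknown
```

This is the aspect ratio of a single pixel, not of the whole picture. The display aspect ratio (16:9, 4:3, ...) is (width × sample_aspect_ratio) : height.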
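A sketch of turning sample_aspect_ratio into a display aspect ratio, assuming positive width and height. `Rational` is a hypothetical stand-in for AVRational; in real code av_reduce() or av_mul_q() from libavutil/rational.h can do the same job.

```c
#include <stdint.h>

typedef struct { int num, den; } Rational;  /* hypothetical stand-in for AVRational */

static int64_t gcd64(int64_t a, int64_t b)
{
    while (b != 0) { int64_t t = a % b; a = b; b = t; }
    return a < 0 ? -a : a;
}

/* Display aspect ratio = (width * sar.num) : (height * sar.den), reduced.
 * A SAR of 0/1 means "unknown"; it is treated here as square pixels (1:1). */
static Rational display_aspect(int width, int height, Rational sar)
{
    if (sar.num == 0 || sar.den == 0) { sar.num = 1; sar.den = 1; }
    int64_t n = (int64_t)width * sar.num;
    int64_t d = (int64_t)height * sar.den;
    int64_t g = gcd64(n, d);
    Rational dar = { (int)(n / g), (int)(d / g) };
    return dar;
}
```

For a 720x576 PAL frame with a SAR of 64:45, this yields 16:9, even though 720:576 itself is 5:4.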

2.9 pts

```c
int64_t pts; // Presentation timestamp, in time_base units
```
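Converting pts to seconds means multiplying by the time base, which is what pts * av_q2d(time_base) does in FFmpeg. A minimal sketch with a hypothetical `TimeBase` struct standing in for AVRational:

```c
#include <stdint.h>

typedef struct { int num, den; } TimeBase;  /* hypothetical stand-in for AVRational */

/* Convert a timestamp in time_base units to seconds: ts * num / den. */
static double ts_to_seconds(int64_t ts, TimeBase tb)
{
    return (double)ts * tb.num / tb.den;
}
```

FLV streams typically use a time base of 1/1000, so a pts of 2000 corresponds to 2 seconds. The same conversion applies to pkt_duration. Real code should first check that the timestamp is not AV_NOPTS_VALUE.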

2.10 pkt_dts

```c
int64_t pkt_dts;
```

The decoding timestamp (DTS) copied from the packet that was decoded to produce this frame.

If the packet has only a dts and no pts, this value is also the presentation time of this frame. The older pkt_pts field listed in the struct is deprecated in favor of pts.

2.11 coded_picture_number

```c
int coded_picture_number; // Picture number in bitstream (coding) order
```

2.12 display_picture_number

```c
int display_picture_number; // Picture number in display order
```

2.13 interlaced_frame

```c
int interlaced_frame; // 1 if the picture is interlaced, 0 if progressive
```

2.14 sample_rate

```c
int sample_rate; // Audio sample rate (Hz)
```

2.15 buf

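```c
AVBufferRef *buf[AV_NUM_DATA_POINTERS];
```

If the frame data is reference-counted, buf holds the AVBufferRefs that back it: every pointer in data must point inside one of these buffers (or one in extended_buf).

An AVBufferRef is a reference to an AVBuffer, FFmpeg's general-purpose reference-counted buffer. When the last reference is released (for example by av_frame_unref()), the underlying memory is freed.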
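The reference-counting idea behind AVBufferRef can be modeled in a few lines. This is a hypothetical, single-threaded sketch, not FFmpeg's code (the real av_buffer_alloc(), av_buffer_ref() and av_buffer_unref() use atomic counters and more bookkeeping): several references share one buffer, and the memory is freed only when the last reference is dropped.

```c
#include <stdlib.h>

/* Hypothetical shared buffer with a reference count (single-threaded model). */
typedef struct {
    unsigned char *data;
    size_t size;
    int refcount;
} SharedBuf;

/* Hypothetical reference: a small handle pointing at the shared buffer. */
typedef struct {
    SharedBuf *buffer;
} BufRef;

/* Allocate a zeroed buffer and return the first reference to it. */
static BufRef *bufref_alloc(size_t size)
{
    BufRef *ref = malloc(sizeof(*ref));
    SharedBuf *buf = malloc(sizeof(*buf));
    unsigned char *data = calloc(size, 1);
    if (!ref || !buf || !data) { free(ref); free(buf); free(data); return NULL; }
    buf->data = data;
    buf->size = size;
    buf->refcount = 1;
    ref->buffer = buf;
    return ref;
}

/* New reference to the same memory: no copy, just bump the count. */
static BufRef *bufref_ref(const BufRef *src)
{
    BufRef *ref = malloc(sizeof(*ref));
    if (!ref) return NULL;
    ref->buffer = src->buffer;
    ref->buffer->refcount++;
    return ref;
}

/* Drop one reference and set *pref to NULL; free the memory at count 0. */
static void bufref_unref(BufRef **pref)
{
    if (!pref || !*pref) return;
    SharedBuf *buf = (*pref)->buffer;
    free(*pref);
    *pref = NULL;
    if (--buf->refcount == 0) {
        free(buf->data);
        free(buf);
    }
}
```

This is the mechanism that lets av_frame_ref() share a decoded picture between two AVFrames without copying the pixel data.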

2.16 pkt_pos

```c
int64_t pkt_pos; // Byte offset in the input file of the last packet fed to the decoder
```

2.17 pkt_duration

```c
int64_t pkt_duration; // Duration of the corresponding packet, in AVStream->time_base units (0 if unknown)
```

2.18 channels

```c
int channels; // Number of audio channels
```
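When channel_layout is set, each bit in it marks one speaker position, so channels equals the number of set bits. A minimal sketch; the CH_* constants are local copies that mirror the values of FFmpeg's AV_CH_* macros in libavutil/channel_layout.h, and FFmpeg 4.x provides av_get_channel_layout_nb_channels() for the same purpose.

```c
#include <stdint.h>

/* Local copies mirroring FFmpeg's AV_CH_* bit values. */
#define CH_FRONT_LEFT    0x00000001ULL
#define CH_FRONT_RIGHT   0x00000002ULL
#define CH_FRONT_CENTER  0x00000004ULL
#define CH_LOW_FREQUENCY 0x00000008ULL
#define CH_SIDE_LEFT     0x00000200ULL
#define CH_SIDE_RIGHT    0x00000400ULL

/* Each set bit in a channel layout is one channel: count the set bits. */
static int layout_channel_count(uint64_t layout)
{
    int n = 0;
    while (layout) {
        layout &= layout - 1;  /* clear the lowest set bit */
        n++;
    }
    return n;
}
```

channel_layout can be 0 when the layout is unknown, in which case only channels is meaningful.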

2.19 pkt_size

```c
int pkt_size; // Size in bytes of the corresponding packet
```

2.20 crop_top, crop_bottom, crop_left, crop_right

```c
size_t crop_top;
size_t crop_bottom;
size_t crop_left;
size_t crop_right;
```

Used for cropping video frames: the four values are the number of pixels to discard from the top, bottom, left and right edges of the frame.
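A sketch of how the crop fields map to the visible picture size, assuming positive width and height. `Crop` and `cropped_size` are hypothetical names mirroring the four AVFrame fields; FFmpeg's own av_frame_apply_cropping() applies the crop to a real frame.

```c
#include <stddef.h>

typedef struct { size_t top, bottom, left, right; } Crop;  /* mirrors AVFrame.crop_* */

/* Compute the visible size after cropping.
 * Returns 1 on success, 0 if the crop would remove the whole picture. */
static int cropped_size(int width, int height, Crop c, int *out_w, int *out_h)
{
    if (c.left + c.right >= (size_t)width || c.top + c.bottom >= (size_t)height)
        return 0;
    *out_w = width - (int)(c.left + c.right);
    *out_h = height - (int)(c.top + c.bottom);
    return 1;
}
```

For example, 1080p H.264 streams are often coded as 1920x1088, with 8 rows cropped at the bottom to give 1920x1080.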

If you found this article helpful, please like, bookmark and share it.

I'm Overtime Ape. See you next time!