Audio Open Output business logic

As the name suggests, openOutput opens an output channel that carries audio data with a specific configuration, such as a given format and sampling rate. The far end of the channel is an audio device used for playback. The job of the openOutput flow is therefore to initialize this channel and set up everything it needs to work.

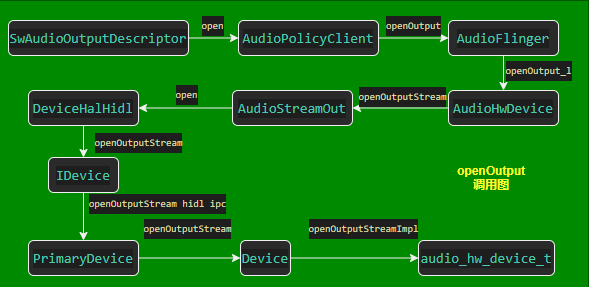

General flow chart of openOutput

Key points in openOutput process

Process start point

This analysis starts with the initialize function of the AudioPolicyManager class. After AudioPolicyManager has loaded and parsed the audio configuration file audio_policy_configuration.xml, it loads each module listed in that file through loadHwModule, traverses all streams in the module, and finally opens each stream together with a device it supports, as the following code shows:

status_t AudioPolicyManager::initialize() {

for (const auto& hwModule : mHwModulesAll) {

// The configuration file has been parsed into modules; now the matching shared library (.so) must be loaded. mpClientInterface is the AudioPolicyService side, which is responsible for loading it

hwModule->setHandle(mpClientInterface->loadHwModule(hwModule->getName()));

if (hwModule->getHandle() == AUDIO_MODULE_HANDLE_NONE) {

ALOGW("could not open HW module %s", hwModule->getName());

continue;

}

mHwModules.push_back(hwModule);

for (const auto& outProfile : hwModule->getOutputProfiles()) {

// Pick the highest-priority device supported by this output profile: mDefaultOutputDevice first, otherwise the first available supported device

const DeviceVector &supportedDevices = outProfile->getSupportedDevices();

// mAvailableOutputDevices holds the devices listed in the attachedDevices tag

DeviceVector availProfileDevices = supportedDevices.filter(mAvailableOutputDevices);

sp<DeviceDescriptor> supportedDevice = 0;

if (supportedDevices.contains(mDefaultOutputDevice)) {

//The default bound device is preferred

supportedDevice = mDefaultOutputDevice;

} else {

// choose first device present in profile's SupportedDevices also part of

// mAvailableOutputDevices.

if (availProfileDevices.isEmpty()) {

continue;

}

// Otherwise take the first device in the filtered list

supportedDevice = availProfileDevices.itemAt(0);

}

if (!mAvailableOutputDevices.contains(supportedDevice)) {

continue;

}

// At this point supportedDevice is a DeviceDescriptor listed in the attachedDevices or defaultOutputDevice tag

sp<SwAudioOutputDescriptor> outputDesc = new SwAudioOutputDescriptor(outProfile,

mpClientInterface);

audio_io_handle_t output = AUDIO_IO_HANDLE_NONE;

status_t status = outputDesc->open(nullptr, DeviceVector(supportedDevice),

AUDIO_STREAM_DEFAULT,

AUDIO_OUTPUT_FLAG_NONE, &output);

......

// The stream was opened successfully, so register it

addOutput(output, outputDesc);

setOutputDevices(outputDesc,

DeviceVector(supportedDevice),

true,

0,

NULL);

} // end of the output profile loop

} // end of the hwModule loop

updateDevicesAndOutputs();

return status;

}

The code above executes as follows:

- It traverses each HwModule, and within each module all of its IOProfiles.

- An IOProfile can be understood as a data stream. It may support multiple formats, sample rates, channel masks and so on, and these parameters must be passed when the stream is opened later. If an IOProfile supports multiple formats, which one is chosen?

- If the IOProfile is an output stream, its data eventually flows to an audio device. Several devices may be able to play this stream, so which device is chosen?

The code above already shows how a device is selected for an IOProfile. The rules are as follows:

a. Get all devices supported by the IOProfile.

b. Filter the devices from the previous step with mAvailableOutputDevices, that is, take the intersection. mAvailableOutputDevices holds the devices listed in the attachedDevices tag of audio_policy_configuration.xml.

c. If the IOProfile's supported devices include the device specified in the defaultOutputDevice tag of the configuration file, select it; otherwise select the first device in the filtered set. In either case the selected device must also be in mAvailableOutputDevices, or the profile is skipped.

This determines which device to open. After the output has been opened successfully, it is stored in the mOutputs member variable via addOutput.

If the tags and the configuration file mentioned above are unfamiliar, it is worth reading up on the audio policy configuration file first.
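The device selection rules can be condensed into a small standalone sketch. It is not the AOSP implementation: `Device`, `contains` and `pickDeviceForProfile` are hypothetical names introduced here, devices are reduced to plain strings, and the filtered list is assumed to keep the order of the supported list.

```cpp
#include <algorithm>
#include <string>
#include <vector>

// Hypothetical stand-in for a DeviceDescriptor: a device is just its name here.
using Device = std::string;

static bool contains(const std::vector<Device>& devices, const Device& d) {
    return std::find(devices.begin(), devices.end(), d) != devices.end();
}

// Applies rules a-c to one output profile. Returns false if the profile
// should be skipped; otherwise writes the device to open into *chosen.
bool pickDeviceForProfile(const std::vector<Device>& supported,   // rule a
                          const std::vector<Device>& available,   // attached devices
                          const Device& defaultDevice,
                          Device* chosen) {
    // Rule b: intersect the supported devices with the available ones.
    std::vector<Device> filtered;
    for (const Device& d : supported) {
        if (contains(available, d)) filtered.push_back(d);
    }

    Device candidate;
    if (contains(supported, defaultDevice)) {
        candidate = defaultDevice;      // rule c: the default device is preferred
    } else if (!filtered.empty()) {
        candidate = filtered.front();   // rule c: otherwise the first filtered device
    } else {
        return false;                   // nothing usable in this profile
    }

    // Final guard, as in the listing: the candidate must be available.
    if (!contains(available, candidate)) return false;
    *chosen = candidate;
    return true;
}
```

With the attached devices "Speaker" and "Earpiece" and "Speaker" as the default, a profile supporting both resolves to "Speaker", while a profile supporting only an unattached device is skipped.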

Which format does IOProfile choose?

The format determination logic is in the open function of SwAudioOutputDescriptor, as follows:

status_t SwAudioOutputDescriptor::open(const audio_config_t *config,

const DeviceVector &devices,

audio_stream_type_t stream,

audio_output_flags_t flags,

audio_io_handle_t *output)

{

mDevices = devices;

// address is an empty string for most devices

const String8& address = devices.getFirstValidAddress();

/* devices is a collection. types() returns the bitwise OR of the types of all devices in it.

* The type value encodes both the direction and the kind of device; for example, AUDIO_DEVICE_OUT_EARPIECE

* is an output device called the earpiece.

*/

audio_devices_t device = devices.types();

audio_config_t lConfig;

if (config == nullptr) {

lConfig = AUDIO_CONFIG_INITIALIZER;

/* The IOProfile behind a SwAudioOutputDescriptor may hold several profiles, so which format is used?

* The valid one is taken, that is, the one whose sample rate, channel mask and format are all set and whose format ranks highest.

* mSamplingRate, mChannelMask and mFormat are determined in the constructor. */

lConfig.sample_rate = mSamplingRate;

lConfig.channel_mask = mChannelMask;

lConfig.format = mFormat;

} else {

lConfig = *config;

}

......

ALOGV("opening output for device %s profile %p name %s",

mDevices.toString().c_str(), mProfile.get(), mProfile->getName().string());

// This call goes down through AudioFlinger to the lower layers and opens an output. AudioFlinger creates a thread for it and stores the thread as a key-value pair whose key is the returned output handle; the handle is also saved locally

status_t status = mClientInterface->openOutput(mProfile->getModuleHandle(),

output,

&lConfig,

&device,

address,

&mLatency,

mFlags);

.....

return status;

}

The code above also fixes the specific device to use. Did you spot it?

It is the line audio_devices_t device = devices.types(). devices is a collection, and types() returns the bitwise OR of the types of all devices in it. The type value reflects both the direction and the kind of device; for example, AUDIO_DEVICE_OUT_EARPIECE is an output device called the earpiece.
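To make the "OR of all device types" idea concrete, here is a minimal sketch. The constants and the helpers `combinedTypes` and `hasType` are hypothetical and exist only for this illustration; the real device type values are defined in Android's audio headers.

```cpp
#include <cstdint>
#include <vector>

// Illustrative single-bit device types (assumed values for this sketch).
constexpr std::uint32_t kOutEarpiece     = 0x1u;
constexpr std::uint32_t kOutSpeaker      = 0x2u;
constexpr std::uint32_t kOutWiredHeadset = 0x4u;

// Folds the type of every device in a collection into one bit mask,
// which is the role devices.types() plays in the listing above.
std::uint32_t combinedTypes(const std::vector<std::uint32_t>& deviceTypes) {
    std::uint32_t mask = 0;
    for (std::uint32_t type : deviceTypes) {
        mask |= type;
    }
    return mask;
}

// Tests whether a given device type is present in the combined mask.
bool hasType(std::uint32_t mask, std::uint32_t type) {
    return (mask & type) == type;
}
```

A single mask can therefore describe several devices at once, and the receiver can test for each device type with a bitwise AND.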

In addition, the member variables mSamplingRate, mChannelMask and mFormat are assigned directly to the configuration passed to openOutput. When are these members given their initial values?

The answer is in the constructor:

AudioOutputDescriptor::AudioOutputDescriptor(const sp<AudioPort>& port,

AudioPolicyClientInterface *clientInterface)

: mPort(port), mClientInterface(clientInterface)

{

if (mPort.get() != nullptr) {

// mPort may contain several profiles that support different formats and sample rates; the valid profile with the highest-ranked format is selected

mPort->pickAudioProfile(mSamplingRate, mChannelMask, mFormat);

....

}

}

void AudioPort::pickAudioProfile(uint32_t &samplingRate,

audio_channel_mask_t &channelMask,

audio_format_t &format) const

{

format = AUDIO_FORMAT_DEFAULT;

samplingRate = 0;

channelMask = AUDIO_CHANNEL_NONE;

// Check that at least one profile in the collection is valid, that is, its sample rate, channel mask and format are all set

if (!mProfiles.hasValidProfile()) {

return;

}

// sPcmFormatCompareTable is a static array that ranks the supported PCM formats. Its last entry, the highest-ranked format, is taken here as the upper bound

audio_format_t bestFormat = sPcmFormatCompareTable[ARRAY_SIZE(sPcmFormatCompareTable) - 1];

......

// Traverse the sample rates, format and other information of each profile, and keep the valid one with the highest-ranked format

for (size_t i = 0; i < mProfiles.size(); i ++) {

......

audio_format_t formatToCompare = mProfiles[i]->getFormat();

// Keep a format only if it ranks higher than the current pick and no higher than the last entry of sPcmFormatCompareTable,

// that is, it must be a format covered by sPcmFormatCompareTable

if ((compareFormats(formatToCompare, format) > 0) &&

(compareFormats(formatToCompare, bestFormat) <= 0)) {

uint32_t pickedSamplingRate = 0;

audio_channel_mask_t pickedChannelMask = AUDIO_CHANNEL_NONE;

pickChannelMask(pickedChannelMask, mProfiles[i]->getChannels());

pickSamplingRate(pickedSamplingRate, mProfiles[i]->getSampleRates());

// If the selection is valid, write the values to the reference parameters; this is what sets the member variables of AudioOutputDescriptor

if (formatToCompare != AUDIO_FORMAT_DEFAULT && pickedChannelMask != AUDIO_CHANNEL_NONE

&& pickedSamplingRate != 0) {

format = formatToCompare;

channelMask = pickedChannelMask;

samplingRate = pickedSamplingRate;

// TODO: shall we return on the first one or still trying to pick a better Profile?

}

}

}

}

To sum up, the profile with the highest-ranked format that also has valid values for sample rate, format and channel mask is selected.
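The selection can be sketched in isolation as follows. This is a simplification under stated assumptions, not the AOSP code: `Profile`, `kFormatRank`, `formatRank` and `pickProfile` are hypothetical names, formats are plain strings with an illustrative ranking, and each profile carries a single sample rate and channel mask instead of a list of candidates.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical, simplified profile: one format, one rate, one channel mask.
struct Profile {
    std::string format;      // empty or unknown means "no usable format"
    unsigned sampleRate;     // 0 means "not set"
    unsigned channelMask;    // 0 means "not set"
};

// Illustrative ranking from least to most preferred format.
static const std::vector<std::string> kFormatRank = {
    "PCM_16", "PCM_24", "PCM_32", "PCM_FLOAT"};

// Returns the position of a format in the ranking, or -1 if it is unknown.
static int formatRank(const std::string& format) {
    for (std::size_t i = 0; i < kFormatRank.size(); ++i) {
        if (kFormatRank[i] == format) return static_cast<int>(i);
    }
    return -1;
}

// Keeps the valid profile whose format ranks highest. Returns false if no
// profile qualifies, in which case *picked is left untouched.
bool pickProfile(const std::vector<Profile>& profiles, Profile* picked) {
    int bestRank = -1;
    bool found = false;
    for (const Profile& p : profiles) {
        const int rank = formatRank(p.format);
        if (rank <= bestRank) continue;                          // unknown or not better
        if (p.sampleRate == 0 || p.channelMask == 0) continue;   // incomplete profile
        *picked = p;
        bestRank = rank;
        found = true;
    }
    return found;
}
```

A profile with a better format but no sample rate is passed over, so the result is the best format among the complete profiles rather than the best format overall.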

From this we can see that the essence of openOutput is to open an output channel and make it usable. The audio data stream flows through this channel to a device. The channel supports a specific sample rate, format and channel mask, and is bound to its audio device, so that the device can finally receive the audio data and play it directly.

AudioFlinger creates a thread

In AudioFlinger's openOutput_l method, once openOutputStream succeeds, a playback thread is created for the output. But why create a thread for a channel as soon as it is opened?

My understanding is that once the channel exists, audio data will keep being poured into it, and audio playback sometimes has real-time requirements. A dedicated thread serves this need: it does not interfere with AudioFlinger's other tasks, and it guarantees that the channel's data is handled exclusively by one thread.

That is my reading; I am not certain it is correct.

sp<AudioFlinger::ThreadBase> AudioFlinger::openOutput_l(audio_module_handle_t module,

audio_io_handle_t *output,

audio_config_t *config,

audio_devices_t devices,

const String8& address,

audio_output_flags_t flags)

{

AudioHwDevice *outHwDev = findSuitableHwDev_l(module, devices);

.......

AudioStreamOut *outputStream = NULL;

status_t status = outHwDev->openOutputStream(

&outputStream,

*output,

devices,

flags,

config,

address.string());

mHardwareStatus = AUDIO_HW_IDLE;

/**

* After an output is opened successfully, a thread is created for it. The thread type depends on the flags: MmapPlaybackThread,

* OffloadThread, DirectOutputThread or MixerThread. The thread is finally saved in mMmapThreads or mPlaybackThreads,

* where the key is the output handle and the value is the thread just created.

*/

if (status == NO_ERROR) {

if (flags & AUDIO_OUTPUT_FLAG_MMAP_NOIRQ) {

sp<MmapPlaybackThread> thread =

new MmapPlaybackThread(this, *output, outHwDev, outputStream,

devices, AUDIO_DEVICE_NONE, mSystemReady);

mMmapThreads.add(*output, thread);

.......

} else {

sp<PlaybackThread> thread;

if (flags & AUDIO_OUTPUT_FLAG_COMPRESS_OFFLOAD) {

thread = new OffloadThread(this, outputStream, *output, devices, mSystemReady);

......

} else if ((flags & AUDIO_OUTPUT_FLAG_DIRECT)

|| !isValidPcmSinkFormat(config->format)

|| !isValidPcmSinkChannelMask(config->channel_mask)) {

thread = new DirectOutputThread(this, outputStream, *output, devices, mSystemReady);

........

} else {

thread = new MixerThread(this, outputStream, *output, devices, mSystemReady);

........

}

mPlaybackThreads.add(*output, thread);

mPatchPanel.notifyStreamOpened(outHwDev, *output);

return thread;

}

}

return 0;

}

After the output stream is opened successfully, AudioFlinger creates a thread whose type depends on the flags passed to openOutput, and stores it as a key-value pair (output, thread). With the output handle, data can later be routed to the corresponding thread. There are four main thread types:

| Thread name | Meaning |
|---|---|
| MmapPlaybackThread | Not analyzed here; to be covered later |
| OffloadThread | Inherits from DirectOutputThread and plays tracks in non-PCM formats. The data on this thread is passed to a hardware decoder for decoding and playback |
| DirectOutputThread | Direct output without mixing; the thread carries only one track |
| MixerThread | Mixing thread; can carry multiple tracks |

Each of these threads corresponds to a flag, so during playback the audio data must be sent to the matching thread, which is selected by passing in that flag.
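The flag-to-thread mapping and the (output, thread) bookkeeping can be sketched as below. The flag constants, `threadKindFor` and `OutputRegistry` are hypothetical names for this illustration; the sketch stores a thread's name rather than a real thread, and it uses one map where AudioFlinger keeps mMmapThreads and mPlaybackThreads separately.

```cpp
#include <cstdint>
#include <map>
#include <string>

// Illustrative flag bits (assumed values for this sketch).
constexpr std::uint32_t kFlagDirect          = 0x1u;
constexpr std::uint32_t kFlagCompressOffload = 0x10u;
constexpr std::uint32_t kFlagMmapNoIrq       = 0x4000u;

// Mirrors the order of the checks in openOutput_l: MMAP first, then offload,
// then direct (or a configuration the mixer cannot handle), else the mixer.
std::string threadKindFor(std::uint32_t flags, bool pcmSinkCompatible) {
    if (flags & kFlagMmapNoIrq) return "MmapPlaybackThread";
    if (flags & kFlagCompressOffload) return "OffloadThread";
    if ((flags & kFlagDirect) || !pcmSinkCompatible) return "DirectOutputThread";
    return "MixerThread";
}

// Keeps one entry per opened output, keyed by the output handle.
class OutputRegistry {
public:
    // "Opens" an output and returns its handle.
    int open(std::uint32_t flags, bool pcmSinkCompatible) {
        const int handle = mNextHandle++;
        mThreads[handle] = threadKindFor(flags, pcmSinkCompatible);
        return handle;
    }

    // Looks up the thread kind for a handle; returns nullptr if unknown.
    const std::string* find(int handle) const {
        std::map<int, std::string>::const_iterator it = mThreads.find(handle);
        return it == mThreads.end() ? nullptr : &it->second;
    }

private:
    int mNextHandle = 1;
    std::map<int, std::string> mThreads;
};
```

The order of the checks matters: an output opened with both the MMAP and the direct flag ends up on the MMAP thread, because that branch is tested first.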

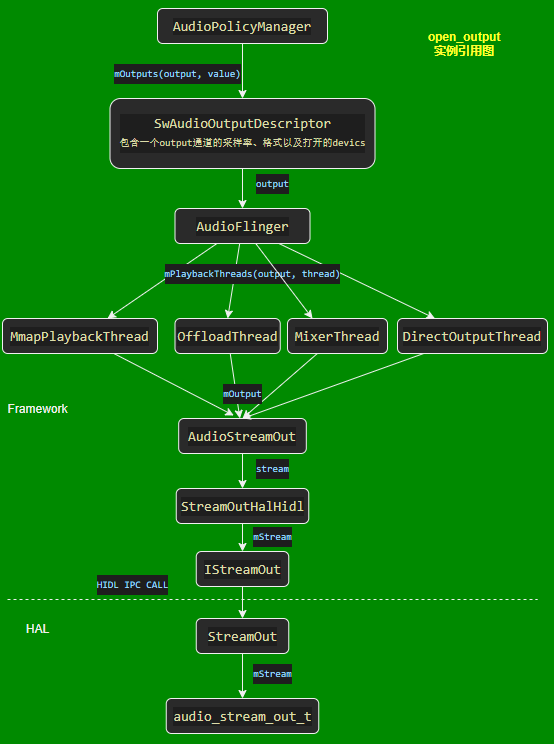

In addition to the above key points, other processes of openOutput are logical call and parameter conversion in turn, and there are no other important things to do; The following figure summarizes the reference diagram between various objects after the openOutput process, as follows:

There are several points worth noting:

- After the channel is opened successfully, SwAudioOutputDescriptor saves the parameters used for this open, such as sample rate, channel mask, format and the device's DeviceDescriptor. It also caches the policy; the policy part is not yet well understood and will be supplemented later.

- The flags used to open the channel are very important. Different flags create different threads in AudioFlinger, and the thread used during later playback is chosen according to the flags.