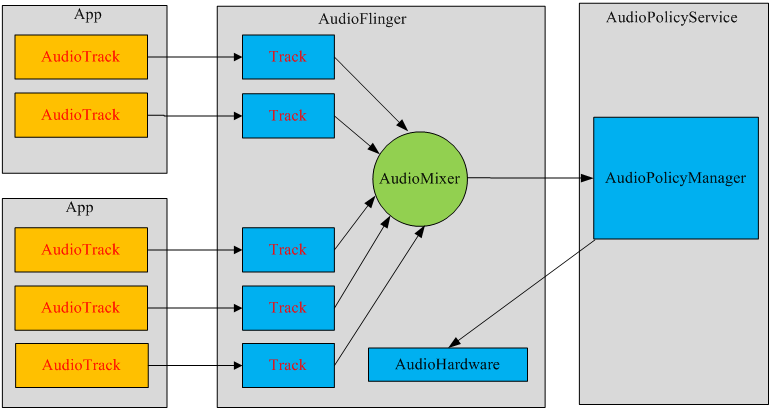

In the previous article, we introduced the startup and initialization of the AudioPolicyService and AudioFlinger services. This article looks at what AudioTrack and AudioFlinger do when an AudioTrack is created.

MediaPlayer creates the corresponding audio decoder in the framework layer. AudioTrack can only play decoded PCM data, so MediaPlayer also creates an AudioTrack in the framework layer and passes the decoded PCM stream to it. AudioTrack hands the data to AudioFlinger for mixing, and AudioFlinger then passes the mixed data to the hardware for playback. In other words, MediaPlayer contains an AudioTrack.

Example:

AudioTrack audio = new AudioTrack(

AudioManager.STREAM_MUSIC, // Stream type

32000, // Sample rate of the audio data: 32 kHz (for 44.1 kHz, pass 44100)

AudioFormat.CHANNEL_OUT_STEREO, // Output channels: stereo (CHANNEL_OUT_MONO is mono)

AudioFormat.ENCODING_PCM_16BIT, // Sample size: 16-bit PCM (ENCODING_PCM_8BIT is 8-bit); most audio today seems to be 16-bit

AudioTrack.getMinBufferSize(32000, AudioFormat.CHANNEL_OUT_STEREO, AudioFormat.ENCODING_PCM_16BIT), // Buffer size in bytes: the minimum for this configuration

AudioTrack.MODE_STREAM // Data loading mode: stream (the other mode, MODE_STATIC, is described below)

);

audio.play(); // Start the track; data written from now on is played

// Opening the MP3 file, reading and decoding are omitted; pcmStream is a hypothetical InputStream that yields the decoded PCM data

byte[] buffer = new byte[4096];

int count;

while ((count = pcmStream.read(buffer)) > 0) {

// The key step: write the decoded data from the buffer to the AudioTrack object

audio.write(buffer, 0, count);

}

// Stop playback and release resources

audio.stop();

audio.release();

Each audio stream creates its own AudioTrack instance, and each AudioTrack registers itself with AudioFlinger when it is created. AudioFlinger mixes all AudioTracks and sends the result to AudioHardware for playback. At present, Android can create up to 32 audio streams at the same time, which means the mixer can process up to 32 AudioTrack data streams at once. (online resources)
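The mixing step can be pictured with a simplified sketch: sum the 16-bit PCM samples of all tracks and clamp the result to the 16-bit range. This is an assumption-level illustration, not AudioFlinger's mixer, which also handles resampling, per-track volume and format conversion; the helper name mixTracks is hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper: mixes several interleaved 16-bit PCM tracks into one.
// A track shorter than the longest one is treated as silence past its end.
std::vector<int16_t> mixTracks(const std::vector<std::vector<int16_t>>& tracks) {
    size_t length = 0;
    for (const auto& t : tracks) length = std::max(length, t.size());

    std::vector<int16_t> out(length);
    for (size_t i = 0; i < length; ++i) {
        int32_t sum = 0;  // 32-bit accumulator so the intermediate sum cannot overflow
        for (const auto& t : tracks) {
            if (i < t.size()) sum += t[i];
        }
        // Saturate to the int16_t range instead of letting the value wrap around.
        sum = std::max<int32_t>(INT16_MIN, std::min<int32_t>(INT16_MAX, sum));
        out[i] = static_cast<int16_t>(sum);
    }
    return out;
}
```

Clamping keeps each track at its original level but clips when loud tracks overlap; averaging instead would avoid clipping at the cost of making every track quieter.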

Let's analyze the source code:

AudioTrack.java

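/**

* attributes: audio attributes (usage, content type, flags); they take over the role of the legacy streamType

* format: audio format, carrying the sample rate, channel mask and encoding

* bufferSizeInBytes: buffer size in bytes

* mode: audio data loading mode (MODE_STREAM or MODE_STATIC)

* sessionId: session id

*/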

private AudioTrack(AudioAttributes attributes, AudioFormat format, int bufferSizeInBytes,

int mode, int sessionId, boolean offload, int encapsulationMode,

@Nullable TunerConfiguration tunerConfiguration)

throws IllegalArgumentException {

super(attributes, AudioPlaybackConfiguration.PLAYER_TYPE_JAM_AUDIOTRACK);

// mState already == STATE_UNINITIALIZED

mConfiguredAudioAttributes = attributes; // object copy not needed, immutable.

if (format == null) {

throw new IllegalArgumentException("Illegal null AudioFormat");

}

// Check if we should enable deep buffer mode

if (shouldEnablePowerSaving(mAttributes, format, bufferSizeInBytes, mode)) {

mAttributes = new AudioAttributes.Builder(mAttributes)

.replaceFlags((mAttributes.getAllFlags()

| AudioAttributes.FLAG_DEEP_BUFFER)

& ~AudioAttributes.FLAG_LOW_LATENCY)

.build();

}

// remember which looper is associated with the AudioTrack instantiation

Looper looper;

if ((looper = Looper.myLooper()) == null) {

looper = Looper.getMainLooper();

}

int rate = format.getSampleRate();

if (rate == AudioFormat.SAMPLE_RATE_UNSPECIFIED) {

rate = 0;

}

int channelIndexMask = 0;

if ((format.getPropertySetMask()

& AudioFormat.AUDIO_FORMAT_HAS_PROPERTY_CHANNEL_INDEX_MASK) != 0) {

channelIndexMask = format.getChannelIndexMask();

}

int channelMask = 0;

if ((format.getPropertySetMask()

& AudioFormat.AUDIO_FORMAT_HAS_PROPERTY_CHANNEL_MASK) != 0) {

channelMask = format.getChannelMask();

} else if (channelIndexMask == 0) { // if no masks at all, use stereo

channelMask = AudioFormat.CHANNEL_OUT_FRONT_LEFT

| AudioFormat.CHANNEL_OUT_FRONT_RIGHT;

}

int encoding = AudioFormat.ENCODING_DEFAULT;

if ((format.getPropertySetMask() & AudioFormat.AUDIO_FORMAT_HAS_PROPERTY_ENCODING) != 0) {

encoding = format.getEncoding();

}

/**

* Parameter check

* 1.Check that streamType is one of STREAM_ALARM, STREAM_MUSIC, STREAM_RING, STREAM_SYSTEM, STREAM_VOICE_CALL,

* STREAM_NOTIFICATION, STREAM_BLUETOOTH_SCO, and assign it to mStreamType

* 2.Check that sampleRateInHz is between 4000 and 48000, and assign it to mSampleRate

* 3.Set mChannels:

* CHANNEL_OUT_DEFAULT,CHANNEL_OUT_MONO,CHANNEL_CONFIGURATION_MONO ---> CHANNEL_OUT_MONO

* CHANNEL_OUT_STEREO,CHANNEL_CONFIGURATION_STEREO ---> CHANNEL_OUT_STEREO

* 4.Set mAudioFormat:

* ENCODING_PCM_16BIT,ENCODING_DEFAULT ---> ENCODING_PCM_16BIT

* ENCODING_PCM_8BIT ---> ENCODING_PCM_8BIT

* 5.Set mDataLoadMode:

* MODE_STREAM

* MODE_STATIC

*/

audioParamCheck(rate, channelMask, channelIndexMask, encoding, mode);

mOffloaded = offload;

mStreamType = AudioSystem.STREAM_DEFAULT;

/**

* Buffer size check: compute the size of one frame in bytes (for ENCODING_PCM_16BIT this is mChannelCount * 2)

* and derive mNativeBufferSizeInFrames, the buffer size in frames

*/

audioBuffSizeCheck(bufferSizeInBytes);

mInitializationLooper = looper;

if (sessionId < 0) {

throw new IllegalArgumentException("Invalid audio session ID: "+sessionId);

}

//Enter native layer initialization

int[] sampleRate = new int[] {mSampleRate};

int[] session = new int[1];

session[0] = sessionId;

// native initialization

int initResult = native_setup(new WeakReference<AudioTrack>(this), mAttributes,

sampleRate, mChannelMask, mChannelIndexMask, mAudioFormat,

mNativeBufferSizeInBytes, mDataLoadMode, session, 0 /*nativeTrackInJavaObj*/,

offload, encapsulationMode, tunerConfiguration);

if (initResult != SUCCESS) {

loge("Error code "+initResult+" when initializing AudioTrack.");

return; // with mState == STATE_UNINITIALIZED

}

mSampleRate = sampleRate[0];

mSessionId = session[0];

// TODO: consider caching encapsulationMode and tunerConfiguration in the Java object.

if ((mAttributes.getFlags() & AudioAttributes.FLAG_HW_AV_SYNC) != 0) {

int frameSizeInBytes;

if (AudioFormat.isEncodingLinearFrames(mAudioFormat)) {

frameSizeInBytes = mChannelCount * AudioFormat.getBytesPerSample(mAudioFormat);

} else {

frameSizeInBytes = 1;

}

mOffset = ((int) Math.ceil(HEADER_V2_SIZE_BYTES / frameSizeInBytes)) * frameSizeInBytes;

}

if (mDataLoadMode == MODE_STATIC) {

mState = STATE_NO_STATIC_DATA;

} else {

mState = STATE_INITIALIZED;

}

baseRegisterPlayer();

}

AudioTrack has two data loading modes:

MODE_STREAM: the application keeps writing the audio data stream to the AudioTrack. Each write() blocks until the data has been transferred from the Java layer to the native layer and queued for playback. This mode suits large amounts of audio data but introduces some latency. It is generally used for online music or large audio files.

MODE_STATIC: all data is written to the AudioTrack's internal buffer in one go before playback starts. It suits short clips that take little memory and need low latency. It is generally used for notification tones, key clicks and similar sounds.
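The blocking behavior of MODE_STREAM can be modeled with a bounded FIFO: write() waits whenever the buffer is full and resumes once the consumer (the mixer, in AudioFlinger's case) has read some data. The StreamBuffer class below is a hypothetical sketch built on a mutex and condition variables; the real AudioTrack exchanges data with AudioFlinger through shared memory coordinated by audio_track_cblk_t. In MODE_STATIC, by contrast, all data is written once before playback, so playback does not depend on further write() calls.

```cpp
#include <cassert>
#include <condition_variable>
#include <cstddef>
#include <cstdint>
#include <deque>
#include <mutex>
#include <thread>
#include <vector>

// Hypothetical model of MODE_STREAM: a bounded FIFO between the writer
// (the app calling write()) and the reader (the mixer consuming data).
class StreamBuffer {
public:
    explicit StreamBuffer(size_t capacity) : capacity_(capacity) {
        assert(capacity > 0);  // a zero-capacity buffer would block forever
    }

    // Returns only after every sample has been queued, like a blocking write().
    void write(const std::vector<int16_t>& data) {
        std::unique_lock<std::mutex> lock(mutex_);
        for (int16_t sample : data) {
            // Wait while the buffer is full; the reader will make room.
            notFull_.wait(lock, [this] { return queue_.size() < capacity_; });
            queue_.push_back(sample);
            notEmpty_.notify_one();
        }
    }

    // Returns only after `count` samples have been consumed.
    std::vector<int16_t> read(size_t count) {
        std::vector<int16_t> out;
        std::unique_lock<std::mutex> lock(mutex_);
        while (out.size() < count) {
            notEmpty_.wait(lock, [this] { return !queue_.empty(); });
            out.push_back(queue_.front());
            queue_.pop_front();
            notFull_.notify_one();  // space freed: wake a waiting writer
        }
        return out;
    }

private:
    size_t capacity_;
    std::deque<int16_t> queue_;
    std::mutex mutex_;
    std::condition_variable notFull_;
    std::condition_variable notEmpty_;
};
```

If a reader thread calls read() while the main thread writes 100 samples into a 4-sample StreamBuffer, write() repeatedly pauses until the reader frees space, which is the same back-pressure a blocking write() applies to the application.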

The AudioTrack constructor above first checks its parameters; it then calls native_setup(), which creates an AudioTrack in the C++ layer through JNI.
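Among these checks, audioBuffSizeCheck() converts the byte size into frames. A frame holds one sample per channel, so for 16-bit PCM the frame size is the channel count times 2 bytes (the same formula appears in the FLAG_HW_AV_SYNC branch of the constructor). In AOSP the check also rejects a size that is not a positive multiple of the frame size. The sketch below reproduces that arithmetic in a hypothetical helper, bufferSizeInFrames(); it returns std::nullopt for an invalid size, whereas the Java method reports the error with an exception.

```cpp
#include <cassert>
#include <cstddef>
#include <optional>

// Hypothetical sketch of the frame arithmetic behind audioBuffSizeCheck().
// Returns the buffer size in frames, or std::nullopt if the size is invalid.
std::optional<size_t> bufferSizeInFrames(size_t bufferSizeInBytes,
                                         size_t channelCount,
                                         size_t bytesPerSample) {
    assert(channelCount > 0 && bytesPerSample > 0);
    // One frame = one sample for every channel.
    const size_t frameSizeInBytes = channelCount * bytesPerSample;
    // The buffer must be non-empty and hold a whole number of frames.
    if (bufferSizeInBytes < 1 || bufferSizeInBytes % frameSizeInBytes != 0) {
        return std::nullopt;
    }
    return bufferSizeInBytes / frameSizeInBytes;
}
```

For example, a 4096-byte buffer of 16-bit stereo PCM holds 4096 / (2 * 2) = 1024 frames.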

android_media_AudioTrack.cpp

static const JNINativeMethod gMethods[] = {

// name, signature, funcPtr

{"native_is_direct_output_supported", "(IIIIIII)Z",

(void *)android_media_AudioTrack_is_direct_output_supported},

{"native_start", "()V", (void *)android_media_AudioTrack_start},

{"native_stop", "()V", (void *)android_media_AudioTrack_stop},

{"native_pause", "()V", (void *)android_media_AudioTrack_pause},

{"native_flush", "()V", (void *)android_media_AudioTrack_flush},

{"native_setup", "(Ljava/lang/Object;Ljava/lang/Object;[IIIIII[IJZILjava/lang/Object;)I",

(void *)android_media_AudioTrack_setup},

...

...

};

The native methods are registered dynamically through this gMethods table, which maps each Java method name and signature to a C++ function. JNI itself is not covered here.
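Each gMethods entry pairs a Java method name with its JNI type signature. To show how the native_setup signature reads, the hypothetical helper below splits a method descriptor into parameter types using the standard JNI type codes (Z boolean, I int, J long, [ array, Lclass/Name; object). It is a sketch, not part of the JNI API, and it does not validate the primitive codes.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical result of splitting a JNI method descriptor such as "(I[IJ)Z".
struct MethodSignature {
    std::vector<std::string> params;
    std::string returnType;
};

// Reads one type starting at pos and advances pos past it.
static std::string readType(const std::string& desc, size_t& pos) {
    const size_t start = pos;
    while (pos < desc.size() && desc[pos] == '[') ++pos;  // array dimensions
    if (pos >= desc.size()) throw std::invalid_argument("truncated descriptor");
    if (desc[pos] == 'L') {
        const size_t end = desc.find(';', pos);  // class types end with ';'
        if (end == std::string::npos) throw std::invalid_argument("missing ';'");
        pos = end + 1;
    } else {
        ++pos;  // primitive codes are one character (not validated here)
    }
    return desc.substr(start, pos - start);
}

MethodSignature parseDescriptor(const std::string& desc) {
    if (desc.empty() || desc[0] != '(') throw std::invalid_argument("expected '('");
    MethodSignature sig;
    size_t pos = 1;
    while (pos < desc.size() && desc[pos] != ')') {
        sig.params.push_back(readType(desc, pos));
    }
    if (pos >= desc.size()) throw std::invalid_argument("missing ')'");
    ++pos;  // skip ')'
    sig.returnType = readType(desc, pos);
    return sig;
}
```

Applied to the native_setup descriptor, this yields 13 parameter types and the return type I. Those 13 types line up, in order, with the arguments after JNIEnv* env and jobject thiz in android_media_AudioTrack_setup() below (weak_this, jaa, jSampleRate, ..., tunerConfiguration).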

static jint android_media_AudioTrack_setup(JNIEnv *env, jobject thiz, jobject weak_this,

jobject jaa, jintArray jSampleRate,

jint channelPositionMask, jint channelIndexMask,

jint audioFormat, jint buffSizeInBytes, jint memoryMode,

jintArray jSession, jlong nativeAudioTrack,

jboolean offload, jint encapsulationMode,

jobject tunerConfiguration) {

...

...

...

// create the native AudioTrack object

lpTrack = new AudioTrack();

// read the AudioAttributes values

auto paa = JNIAudioAttributeHelper::makeUnique();

jint jStatus = JNIAudioAttributeHelper::nativeFromJava(env, jaa, paa.get());

if (jStatus != (jint)AUDIO_JAVA_SUCCESS) {

return jStatus;

}

ALOGV("AudioTrack_setup for usage=%d content=%d flags=0x%#x tags=%s",

paa->usage, paa->content_type, paa->flags, paa->tags);

// initialize the callback information:

// this data will be passed with every AudioTrack callback

// Create a container for storing audio data

lpJniStorage = new AudioTrackJniStorage();

//Save the AudioTrack reference of the Java layer to AudioTrackJniStorage

lpJniStorage->mCallbackData.audioTrack_class = (jclass)env->NewGlobalRef(clazz);

// we use a weak reference so the AudioTrack object can be garbage collected.

lpJniStorage->mCallbackData.audioTrack_ref = env->NewGlobalRef(weak_this);

lpJniStorage->mCallbackData.isOffload = offload;

lpJniStorage->mCallbackData.busy = false;

audio_offload_info_t offloadInfo;

if (offload == JNI_TRUE) {

offloadInfo = AUDIO_INFO_INITIALIZER;

offloadInfo.format = format;

offloadInfo.sample_rate = sampleRateInHertz;

offloadInfo.channel_mask = nativeChannelMask;

offloadInfo.has_video = false;

offloadInfo.stream_type = AUDIO_STREAM_MUSIC; //required for offload

}

// initialize the native AudioTrack object

//Initialize native AudioTrack objects in different modes

status_t status = NO_ERROR;

switch (memoryMode) { //stream mode

case MODE_STREAM:

status = lpTrack->set(

AUDIO_STREAM_DEFAULT,// stream type, but more info conveyed in paa (last argument)

sampleRateInHertz,

format,// word length, PCM

nativeChannelMask,

offload ? 0 : frameCount,

offload ? AUDIO_OUTPUT_FLAG_COMPRESS_OFFLOAD : AUDIO_OUTPUT_FLAG_NONE,

audioCallback, &(lpJniStorage->mCallbackData),//callback, callback data (user)

0,// notificationFrames == 0 since not using EVENT_MORE_DATA to feed the AudioTrack

0,//Shared memory in stream mode is created in AudioFlinger

true,// thread can call Java

sessionId,// audio session ID

offload ? AudioTrack::TRANSFER_SYNC_NOTIF_CALLBACK : AudioTrack::TRANSFER_SYNC,

offload ? &offloadInfo : NULL,

-1, -1, // default uid, pid values

paa.get());

break;

case MODE_STATIC: //static mode

// AudioTrack is using shared memory

// Allocate shared memory area for AudioTrack

if (!lpJniStorage->allocSharedMem(buffSizeInBytes)) {

ALOGE("Error creating AudioTrack in static mode: error creating mem heap base");

goto native_init_failure;

}

status = lpTrack->set(

AUDIO_STREAM_DEFAULT,// stream type, but more info conveyed in paa (last argument)

sampleRateInHertz,

format,// word length, PCM

nativeChannelMask,

frameCount,

AUDIO_OUTPUT_FLAG_NONE,

audioCallback, &(lpJniStorage->mCallbackData),//callback, callback data (user));

0,// notificationFrames == 0 since not using EVENT_MORE_DATA to feed the AudioTrack

lpJniStorage->mMemBase,// shared mem

true,// thread can call Java

sessionId,// audio session ID

AudioTrack::TRANSFER_SHARED,

NULL, // default offloadInfo

-1, -1, // default uid, pid values

paa.get());

break;

default:

ALOGE("Unknown mode %d", memoryMode);

goto native_init_failure;

}

if (status != NO_ERROR) {

ALOGE("Error %d initializing AudioTrack", status);

goto native_init_failure;

}

// Set caller name so it can be logged in destructor.

// MediaMetricsConstants.h: AMEDIAMETRICS_PROP_CALLERNAME_VALUE_JAVA

lpTrack->setCallerName("java");

} else { // end if (nativeAudioTrack == 0)

lpTrack = (AudioTrack*)nativeAudioTrack;

// TODO: We need to find out which members of the Java AudioTrack might

// need to be initialized from the Native AudioTrack

// these are directly returned from getters:

// mSampleRate

// mAudioFormat

// mStreamType

// mChannelConfiguration

// mChannelCount

// mState (?)

// mPlayState (?)

// these may be used internally (Java AudioTrack.audioParamCheck():

// mChannelMask

// mChannelIndexMask

// mDataLoadMode

// initialize the callback information:

// this data will be passed with every AudioTrack callback

// Create a container for storing audio data

lpJniStorage = new AudioTrackJniStorage();

lpJniStorage->mCallbackData.audioTrack_class = (jclass)env->NewGlobalRef(clazz);

// we use a weak reference so the AudioTrack object can be garbage collected.

lpJniStorage->mCallbackData.audioTrack_ref = env->NewGlobalRef(weak_this);

lpJniStorage->mCallbackData.busy = false;

}

lpJniStorage->mAudioTrackCallback =

new JNIAudioTrackCallback(env, thiz, lpJniStorage->mCallbackData.audioTrack_ref,

javaAudioTrackFields.postNativeEventInJava);

lpTrack->setAudioTrackCallback(lpJniStorage->mAudioTrackCallback);

nSession = (jint *) env->GetPrimitiveArrayCritical(jSession, NULL);

if (nSession == NULL) {

ALOGE("Error creating AudioTrack: Error retrieving session id pointer");

goto native_init_failure;

}

// read the audio session ID back from AudioTrack in case we create a new session

...

...

...

...

return (jint) AUDIOTRACK_ERROR_SETUP_NATIVEINITFAILED;

}

Several important things happen in android_media_AudioTrack_setup():

1. Create the native-layer AudioTrack.

2. Create AudioTrackJniStorage, a container for storing audio data (in static mode it allocates the shared memory that holds the data).

3. Save a reference to the Java-layer AudioTrack in AudioTrackJniStorage.

struct audiotrack_callback_cookie {

jclass audioTrack_class;

jobject audioTrack_ref;

bool busy;

Condition cond;

bool isOffload;

};

class AudioTrackJniStorage {

public:

sp<MemoryHeapBase> mMemHeap;

sp<MemoryBase> mMemBase;

audiotrack_callback_cookie mCallbackData{};

sp<JNIDeviceCallback> mDeviceCallback;

sp<JNIAudioTrackCallback> mAudioTrackCallback;

bool allocSharedMem(int sizeInBytes) {

mMemHeap = new MemoryHeapBase(sizeInBytes, 0, "AudioTrack Heap Base");

if (mMemHeap->getHeapID() < 0) {

return false;

}

mMemBase = new MemoryBase(mMemHeap, 0, sizeInBytes);

return true;

}

};

//Create anonymous shared memory area

MemoryHeapBase::MemoryHeapBase(size_t size, uint32_t flags, char const * name)

: mFD(-1), mSize(0), mBase(MAP_FAILED), mFlags(flags),

mDevice(nullptr), mNeedUnmap(false), mOffset(0)

{

//Get memory page size

const size_t pagesize = getpagesize();

// Round size up to a whole number of pages

size = ((size + pagesize-1) & ~(pagesize-1));

// Create the shared memory: open the /dev/ashmem device and get a file descriptor

int fd = ashmem_create_region(name == nullptr ? "MemoryHeapBase" : name, size);

ALOGE_IF(fd<0, "error creating ashmem region: %s", strerror(errno));

if (fd >= 0) {

//Map anonymous shared memory to the current process address space through mmap

if (mapfd(fd, size) == NO_ERROR) {

if (flags & READ_ONLY) {

ashmem_set_prot_region(fd, PROT_READ);

}

}

}

}

4. Initialize the native AudioTrack object according to the data loading mode by calling lpTrack->set().
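The size rounding in the MemoryHeapBase constructor above works because the page size is a power of two: adding pagesize - 1 and then clearing the low bits rounds any size up to the next page boundary. A small standalone sketch, with the page size passed in instead of queried through getpagesize():

```cpp
#include <cassert>
#include <cstddef>

// Rounds size up to a multiple of pageSize using the same bit trick as
// size = ((size + pagesize-1) & ~(pagesize-1)) in MemoryHeapBase.
// Only valid when pageSize is a power of two.
constexpr size_t roundUpToPage(size_t size, size_t pageSize) {
    return (size + pageSize - 1) & ~(pageSize - 1);
}

static_assert(roundUpToPage(1, 4096) == 4096, "a partial page becomes a full page");
static_assert(roundUpToPage(4096, 4096) == 4096, "exact multiples are unchanged");
static_assert(roundUpToPage(4097, 4096) == 8192, "one byte over needs another page");
```

So a request for a 10000-byte AudioTrack buffer on a system with 4 KiB pages actually maps 12288 bytes of ashmem.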

AudioTrack.cpp

status_t AudioTrack::set(

audio_stream_type_t streamType,

uint32_t sampleRate,

audio_format_t format,

audio_channel_mask_t channelMask,

size_t frameCount,

audio_output_flags_t flags,

callback_t cbf,

void* user,

int32_t notificationFrames,

const sp<IMemory>& sharedBuffer,

bool threadCanCallJava,

audio_session_t sessionId,

transfer_type transferType,

const audio_offload_info_t *offloadInfo,

uid_t uid,

pid_t pid,

const audio_attributes_t* pAttributes,

bool doNotReconnect,

float maxRequiredSpeed,

audio_port_handle_t selectedDeviceId)

{

status_t status;

uint32_t channelCount;

pid_t callingPid;

pid_t myPid;

...

...

mThreadCanCallJava = threadCanCallJava;

mSelectedDeviceId = selectedDeviceId;

mSessionId = sessionId;

// Determine the data transfer type

switch (transferType) {

case TRANSFER_DEFAULT:

if (sharedBuffer != 0) {

transferType = TRANSFER_SHARED;

} else if (cbf == NULL || threadCanCallJava) {

transferType = TRANSFER_SYNC;

} else {

transferType = TRANSFER_CALLBACK;

}

break;

case TRANSFER_CALLBACK:

case TRANSFER_SYNC_NOTIF_CALLBACK:

if (cbf == NULL || sharedBuffer != 0) {

ALOGE("%s(): Transfer type %s but cbf == NULL || sharedBuffer != 0",

__func__, convertTransferToText(transferType));

status = BAD_VALUE;

goto exit;

}

break;

case TRANSFER_OBTAIN:

case TRANSFER_SYNC:

if (sharedBuffer != 0) {

ALOGE("%s(): Transfer type TRANSFER_OBTAIN but sharedBuffer != 0", __func__);

status = BAD_VALUE;

goto exit;

}

break;

case TRANSFER_SHARED:

if (sharedBuffer == 0) {

ALOGE("%s(): Transfer type TRANSFER_SHARED but sharedBuffer == 0", __func__);

status = BAD_VALUE;

goto exit;

}

break;

default:

ALOGE("%s(): Invalid transfer type %d",

__func__, transferType);

status = BAD_VALUE;

goto exit;

}

mSharedBuffer = sharedBuffer;

mTransfer = transferType;

mDoNotReconnect = doNotReconnect;

ALOGV_IF(sharedBuffer != 0, "%s(): sharedBuffer: %p, size: %zu",

__func__, sharedBuffer->unsecurePointer(), sharedBuffer->size());

ALOGV("%s(): streamType %d frameCount %zu flags %04x",

__func__, streamType, frameCount, flags);

// invariant that mAudioTrack != 0 is true only after set() returns successfully

if (mAudioTrack != 0) {

ALOGE("%s(): Track already in use", __func__);

status = INVALID_OPERATION;

goto exit;

}

// handle default values first.

// Stream type and audio attributes setup

if (streamType == AUDIO_STREAM_DEFAULT) {

streamType = AUDIO_STREAM_MUSIC;

}

if (pAttributes == NULL) {

if (uint32_t(streamType) >= AUDIO_STREAM_PUBLIC_CNT) {

ALOGE("%s(): Invalid stream type %d", __func__, streamType);

status = BAD_VALUE;

goto exit;

}

mStreamType = streamType;

} else {

// stream type shouldn't be looked at, this track has audio attributes

memcpy(&mAttributes, pAttributes, sizeof(audio_attributes_t));

ALOGV("%s(): Building AudioTrack with attributes:"

" usage=%d content=%d flags=0x%x tags=[%s]",

__func__,

mAttributes.usage, mAttributes.content_type, mAttributes.flags, mAttributes.tags);

mStreamType = AUDIO_STREAM_DEFAULT;

audio_flags_to_audio_output_flags(mAttributes.flags, &flags);

}

...

...

...

// If a callback for supplying audio data is set, start the AudioTrackThread thread to supply the audio data

if (cbf != NULL) {

mAudioTrackThread = new AudioTrackThread(*this);

mAudioTrackThread->run("AudioTrack", ANDROID_PRIORITY_AUDIO, 0 /*stack*/);

// thread begins in paused state, and will not reference us until start()

}

// create the IAudioTrack

{

AutoMutex lock(mLock);

status = createTrack_l();//continue

}

if (status != NO_ERROR) {

if (mAudioTrackThread != 0) {

mAudioTrackThread->requestExit(); // see comment in AudioTrack.h

mAudioTrackThread->requestExitAndWait();

mAudioTrackThread.clear();

}

goto exit;

}

...

...

...

mStatus = status;

return status;

}

The set() function mainly validates and normalizes its parameters and then calls createTrack_l(). Let's continue with createTrack_l().
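Before moving on, the transfer-type handling at the top of set() can be summarized in a small, self-contained sketch. The enum and the two helper functions are hypothetical simplifications of the TRANSFER_* constants and the switch statement; hasSharedBuffer stands for sharedBuffer != 0 and hasCallback for cbf != NULL.

```cpp
#include <cassert>

// Simplified stand-in for AudioTrack's transfer_type values.
enum class TransferType { Default, Callback, Obtain, Sync, Shared, SyncNotifCallback };

// Mirrors the TRANSFER_DEFAULT branch of set().
TransferType resolveDefaultTransfer(bool hasSharedBuffer, bool hasCallback,
                                    bool threadCanCallJava) {
    if (hasSharedBuffer) return TransferType::Shared;  // static mode
    if (!hasCallback || threadCanCallJava) return TransferType::Sync;
    return TransferType::Callback;  // the callback thread pulls data
}

// Mirrors the BAD_VALUE checks for explicitly requested transfer types.
bool isValidTransfer(TransferType type, bool hasSharedBuffer, bool hasCallback) {
    switch (type) {
        case TransferType::Default:
            return true;  // resolved by resolveDefaultTransfer()
        case TransferType::Callback:
        case TransferType::SyncNotifCallback:
            return hasCallback && !hasSharedBuffer;
        case TransferType::Obtain:
        case TransferType::Sync:
            return !hasSharedBuffer;
        case TransferType::Shared:
            return hasSharedBuffer;
    }
    return false;  // not reached for valid enum values
}
```

On the Java path, the JNI code passes TRANSFER_SYNC (or TRANSFER_SYNC_NOTIF_CALLBACK when offloading) in stream mode and TRANSFER_SHARED in static mode, so only the validation branch applies there.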

status_t AudioTrack::createTrack_l()

{

status_t status;

bool callbackAdded = false;

//Get the proxy object of AudioFlinger

const sp<IAudioFlinger>& audioFlinger = AudioSystem::get_audio_flinger();

if (audioFlinger == 0) {

ALOGE("%s(%d): Could not get audioflinger",

__func__, mPortId);

status = NO_INIT;

goto exit;

}

{

...

...

...

...

IAudioFlinger::CreateTrackInput input;

if (mStreamType != AUDIO_STREAM_DEFAULT) {

input.attr = AudioSystem::streamTypeToAttributes(mStreamType);

} else {

input.attr = mAttributes;

}

input.config = AUDIO_CONFIG_INITIALIZER;

input.config.sample_rate = mSampleRate;

input.config.channel_mask = mChannelMask;

input.config.format = mFormat;

input.config.offload_info = mOffloadInfoCopy;

input.clientInfo.clientUid = mClientUid;

input.clientInfo.clientPid = mClientPid;

input.clientInfo.clientTid = -1;

if (mFlags & AUDIO_OUTPUT_FLAG_FAST) {

// It is currently meaningless to request SCHED_FIFO for a Java thread. Even if the

// application-level code follows all non-blocking design rules, the language runtime

// doesn't also follow those rules, so the thread will not benefit overall.

if (mAudioTrackThread != 0 && !mThreadCanCallJava) {

input.clientInfo.clientTid = mAudioTrackThread->getTid();

}

}

input.sharedBuffer = mSharedBuffer;

input.notificationsPerBuffer = mNotificationsPerBufferReq;

input.speed = 1.0;

if (audio_has_proportional_frames(mFormat) && mSharedBuffer == 0 &&

(mFlags & AUDIO_OUTPUT_FLAG_FAST) == 0) {

input.speed = !isPurePcmData_l() || isOffloadedOrDirect_l() ? 1.0f :

max(mMaxRequiredSpeed, mPlaybackRate.mSpeed);

}

input.flags = mFlags;

input.frameCount = mReqFrameCount;

input.notificationFrameCount = mNotificationFramesReq;

input.selectedDeviceId = mSelectedDeviceId;

input.sessionId = mSessionId;

input.audioTrackCallback = mAudioTrackCallback;

IAudioFlinger::CreateTrackOutput output;

sp<IAudioTrack> track = audioFlinger->createTrack(input,

output,

&status);

...

...

...

...

...

// FIXME compare to AudioRecord

sp<IMemory> iMem = track->getCblk();

if (iMem == 0) {

ALOGE("%s(%d): Could not get control block", __func__, mPortId);

status = NO_INIT;

goto exit;

}

// TODO: Using unsecurePointer() has some associated security pitfalls

// (see declaration for details).

// Either document why it is safe in this case or address the

// issue (e.g. by copying).

void *iMemPointer = iMem->unsecurePointer();

if (iMemPointer == NULL) {

ALOGE("%s(%d): Could not get control block pointer", __func__, mPortId);

status = NO_INIT;

goto exit;

}

// invariant that mAudioTrack != 0 is true only after set() returns successfully

if (mAudioTrack != 0) {

IInterface::asBinder(mAudioTrack)->unlinkToDeath(mDeathNotifier, this);

mDeathNotifier.clear();

}

//Save the created Track proxy object and anonymous shared memory proxy object to the member variable of AudioTrack

mAudioTrack = track;

mCblkMemory = iMem;

IPCThreadState::self()->flushCommands();

// Save the start address of the anonymous shared memory; an audio_track_cblk_t object is stored at its head

audio_track_cblk_t* cblk = static_cast<audio_track_cblk_t*>(iMemPointer);

mCblk = cblk;

mAwaitBoost = false;

if (mFlags & AUDIO_OUTPUT_FLAG_FAST) {

if (output.flags & AUDIO_OUTPUT_FLAG_FAST) {

ALOGI("%s(%d): AUDIO_OUTPUT_FLAG_FAST successful; frameCount %zu -> %zu",

__func__, mPortId, mReqFrameCount, mFrameCount);

if (!mThreadCanCallJava) {

mAwaitBoost = true;

}

} else {

ALOGD("%s(%d): AUDIO_OUTPUT_FLAG_FAST denied by server; frameCount %zu -> %zu",

__func__, mPortId, mReqFrameCount, mFrameCount);

}

}

mFlags = output.flags;

//mOutput != output includes the case where mOutput == AUDIO_IO_HANDLE_NONE for first creation

if (mDeviceCallback != 0) {

if (mOutput != AUDIO_IO_HANDLE_NONE) {

AudioSystem::removeAudioDeviceCallback(this, mOutput, mPortId);

}

AudioSystem::addAudioDeviceCallback(this, output.outputId, output.portId);

callbackAdded = true;

}

mPortId = output.portId;

// We retain a copy of the I/O handle, but don't own the reference

mOutput = output.outputId;

mRefreshRemaining = true;

// Starting address of buffers in shared memory. If there is a shared buffer, buffers

// is the value of pointer() for the shared buffer, otherwise buffers points

// immediately after the control block. This address is for the mapping within client

// address space. AudioFlinger::TrackBase::mBuffer is for the server address space.

void* buffers;

if (mSharedBuffer == 0) {

buffers = cblk + 1;

} else {

// TODO: Using unsecurePointer() has some associated security pitfalls

// (see declaration for details).

// Either document why it is safe in this case or address the

// issue (e.g. by copying).

buffers = mSharedBuffer->unsecurePointer();

if (buffers == NULL) {

ALOGE("%s(%d): Could not get buffer pointer", __func__, mPortId);

status = NO_INIT;

goto exit;

}

}

mAudioTrack->attachAuxEffect(mAuxEffectId);

// If IAudioTrack is re-created, don't let the requested frameCount

// decrease. This can confuse clients that cache frameCount().

if (mFrameCount > mReqFrameCount) {

mReqFrameCount = mFrameCount;

}

// reset server position to 0 as we have new cblk.

mServer = 0;

// update proxy

if (mSharedBuffer == 0) {

mStaticProxy.clear();

mProxy = new AudioTrackClientProxy(cblk, buffers, mFrameCount, mFrameSize);

} else {

mStaticProxy = new StaticAudioTrackClientProxy(cblk, buffers, mFrameCount, mFrameSize);

mProxy = mStaticProxy;

}

mProxy->setVolumeLR(gain_minifloat_pack(

gain_from_float(mVolume[AUDIO_INTERLEAVE_LEFT]),

gain_from_float(mVolume[AUDIO_INTERLEAVE_RIGHT])));

mProxy->setSendLevel(mSendLevel);

const uint32_t effectiveSampleRate = adjustSampleRate(mSampleRate, mPlaybackRate.mPitch);

const float effectiveSpeed = adjustSpeed(mPlaybackRate.mSpeed, mPlaybackRate.mPitch);

const float effectivePitch = adjustPitch(mPlaybackRate.mPitch);

mProxy->setSampleRate(effectiveSampleRate);

AudioPlaybackRate playbackRateTemp = mPlaybackRate;

playbackRateTemp.mSpeed = effectiveSpeed;

playbackRateTemp.mPitch = effectivePitch;

mProxy->setPlaybackRate(playbackRateTemp);

mProxy->setMinimum(mNotificationFramesAct);

mDeathNotifier = new DeathNotifier(this);

IInterface::asBinder(mAudioTrack)->linkToDeath(mDeathNotifier, this);

// This is the first log sent from the AudioTrack client.

// The creation of the audio track by AudioFlinger (in the code above)

// is the first log of the AudioTrack and must be present before

// any AudioTrack client logs will be accepted.

...

...

...

...

...

mStatus = status;

// sp<IAudioTrack> track destructor will cause releaseOutput() to be called by AudioFlinger

return status;

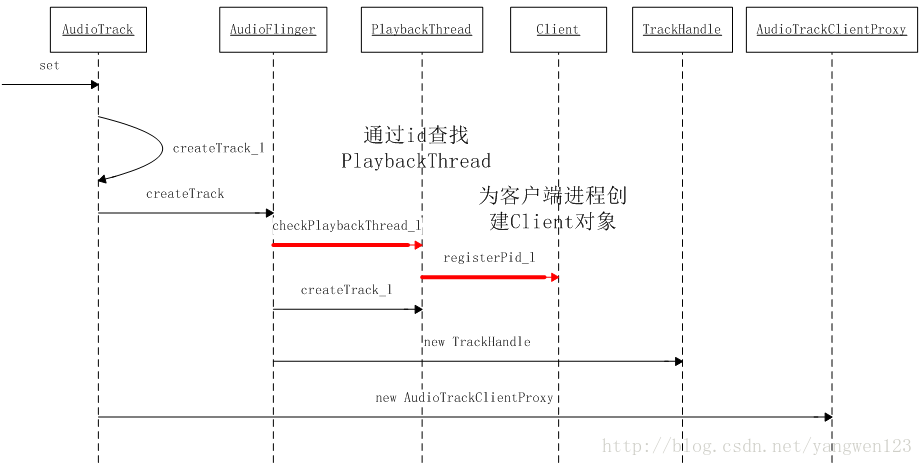

}As mentioned in the previous article, during the initialization of AudioPolicyService, the AudioPolicyManager is created and the AudioFlinger::openOutput() function is called to open a default audio output PlaybackThread thread and assign a globally unique audio to the thread_ io_ handle_ T value and saved as a key value pair in the member variable mPlaybackThreads of AudioFlinger. Here, first, according to the audio parameters, get the PlaybackThread thread thread id number of the current audio output interface by calling the AudioSystem::getOutput() function. It is also passed to the createTrack function to create a Track.
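The bookkeeping described here, where each opened output thread gets a unique handle and is stored in mPlaybackThreads, can be modeled with an ordered map. ThreadRegistry and PlaybackThreadStub are hypothetical stand-ins; AudioFlinger allocates its handles through its own unique-ID mechanism rather than, as assumed here, a plain counter, and its map holds real PlaybackThread objects.

```cpp
#include <cassert>
#include <map>
#include <memory>
#include <string>

// Hypothetical stand-ins for audio_io_handle_t and PlaybackThread.
using IoHandle = int;
struct PlaybackThreadStub {
    std::string name;
};

class ThreadRegistry {
public:
    // Like openOutput(): create a thread, give it a unique handle, store it.
    IoHandle openOutput(const std::string& name) {
        const IoHandle handle = nextHandle_++;
        threads_[handle] = std::make_shared<PlaybackThreadStub>(PlaybackThreadStub{name});
        return handle;
    }

    // Like checkPlaybackThread_l(): look up by handle, nullptr if unknown.
    PlaybackThreadStub* checkPlaybackThread(IoHandle handle) const {
        auto it = threads_.find(handle);
        return it == threads_.end() ? nullptr : it->second.get();
    }

private:
    IoHandle nextHandle_ = 1;  // 0 is reserved as "no output" in this sketch
    std::map<IoHandle, std::shared_ptr<PlaybackThreadStub>> threads_;
};
```

A handle that was never opened simply yields nullptr, which corresponds to the "no playback thread found" error path in createTrack().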

Audio playback requires AudioTrack to write audio data and AudioFlinger to mix it, so a data channel must be established between the two. Because AudioTrack and AudioFlinger run in different processes, Android uses Binder for communication between them.

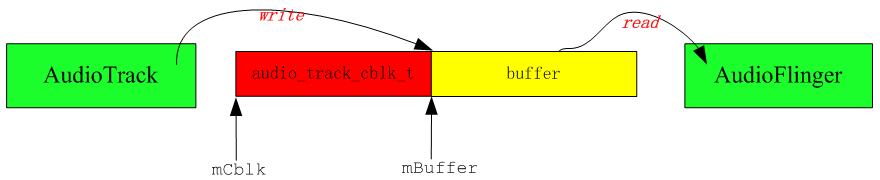

IAudioTrack links AudioTrack with AudioFlinger. In static mode, the anonymous shared memory that stores the audio data is created on the AudioTrack side; in stream mode, it is created on the AudioFlinger side, so the two modes use different shared memory. In stream mode, an audio_track_cblk_t object is placed at the head of the anonymous shared memory to coordinate the pace between the producer (AudioTrack) and the consumer (AudioFlinger). createTrack() creates a Track object in AudioFlinger.
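To make the control-block idea concrete, here is a heavily simplified single-producer/single-consumer model: a control block with write (rear) and read (front) counters sits at the start of one memory region, and the sample buffer starts right after it, just as createTrack_l() computes buffers = cblk + 1 in stream mode. ControlBlock, SharedRegion and their fields are assumptions for illustration; the real audio_track_cblk_t and its client/server proxy classes carry much more state.

```cpp
#include <atomic>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <new>
#include <vector>

// Hypothetical control block; the real audio_track_cblk_t has different fields.
struct ControlBlock {
    std::atomic<uint32_t> rear{0};   // frames written so far by the producer (AudioTrack)
    std::atomic<uint32_t> front{0};  // frames read so far by the consumer (AudioFlinger)
};

// One memory region: control block at the head, sample buffer right after it.
class SharedRegion {
public:
    explicit SharedRegion(uint32_t frameCount)
        : memory_(sizeof(ControlBlock) + frameCount * sizeof(int16_t)),
          frameCount_(frameCount) {
        // A power-of-two size keeps (counter % frameCount_) consistent when
        // the 32-bit counters wrap around.
        assert(frameCount > 0 && (frameCount & (frameCount - 1)) == 0);
        cblk_ = new (memory_.data()) ControlBlock();
        buffers_ = reinterpret_cast<int16_t*>(cblk_ + 1);  // like buffers = cblk + 1
    }
    ~SharedRegion() { cblk_->~ControlBlock(); }
    SharedRegion(const SharedRegion&) = delete;
    SharedRegion& operator=(const SharedRegion&) = delete;

    // Bytes between the start of the region and the first sample.
    size_t headerBytes() const {
        return static_cast<size_t>(reinterpret_cast<const unsigned char*>(buffers_) - memory_.data());
    }

    // Producer: copies up to n frames in and returns how many fit.
    uint32_t write(const int16_t* data, uint32_t n) {
        const uint32_t rear = cblk_->rear.load(std::memory_order_relaxed);
        const uint32_t front = cblk_->front.load(std::memory_order_acquire);
        const uint32_t space = frameCount_ - (rear - front);
        const uint32_t count = n < space ? n : space;
        for (uint32_t i = 0; i < count; ++i) buffers_[(rear + i) % frameCount_] = data[i];
        cblk_->rear.store(rear + count, std::memory_order_release);  // publish to the consumer
        return count;
    }

    // Consumer: copies up to n frames out and returns how many were available.
    uint32_t read(int16_t* out, uint32_t n) {
        const uint32_t front = cblk_->front.load(std::memory_order_relaxed);
        const uint32_t rear = cblk_->rear.load(std::memory_order_acquire);
        const uint32_t filled = rear - front;
        const uint32_t count = n < filled ? n : filled;
        for (uint32_t i = 0; i < count; ++i) out[i] = buffers_[(front + i) % frameCount_];
        cblk_->front.store(front + count, std::memory_order_release);  // hand space back
        return count;
    }

private:
    std::vector<unsigned char> memory_;  // stands in for the ashmem region
    uint32_t frameCount_;
    ControlBlock* cblk_ = nullptr;
    int16_t* buffers_ = nullptr;
};
```

Because both sides only ever advance their own counter and read the other's, the producer and consumer can run in different processes without exchanging the data itself over Binder.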

AudioFlinger.cpp

sp<IAudioTrack> AudioFlinger::createTrack(const CreateTrackInput& input,

CreateTrackOutput& output,

status_t *status)

{

sp<PlaybackThread::Track> track; //Create a track object

sp<TrackHandle> trackHandle;

sp<Client> client;

status_t lStatus;

audio_stream_type_t streamType;

audio_port_handle_t portId = AUDIO_PORT_HANDLE_NONE;

std::vector<audio_io_handle_t> secondaryOutputs;

bool updatePid = (input.clientInfo.clientPid == -1);

const uid_t callingUid = IPCThreadState::self()->getCallingUid();

uid_t clientUid = input.clientInfo.clientUid;

audio_io_handle_t effectThreadId = AUDIO_IO_HANDLE_NONE;

std::vector<int> effectIds;

audio_attributes_t localAttr = input.attr;

if (!isAudioServerOrMediaServerUid(callingUid)) {

ALOGW_IF(clientUid != callingUid,

"%s uid %d tried to pass itself off as %d",

__FUNCTION__, callingUid, clientUid);

clientUid = callingUid;

updatePid = true;

}

pid_t clientPid = input.clientInfo.clientPid;

const pid_t callingPid = IPCThreadState::self()->getCallingPid();

if (updatePid) {

ALOGW_IF(clientPid != -1 && clientPid != callingPid,

"%s uid %d pid %d tried to pass itself off as pid %d",

__func__, callingUid, callingPid, clientPid);

clientPid = callingPid;

}

audio_session_t sessionId = input.sessionId;

if (sessionId == AUDIO_SESSION_ALLOCATE) {

sessionId = (audio_session_t) newAudioUniqueId(AUDIO_UNIQUE_ID_USE_SESSION);

} else if (audio_unique_id_get_use(sessionId) != AUDIO_UNIQUE_ID_USE_SESSION) {

lStatus = BAD_VALUE;

goto Exit;

}

output.sessionId = sessionId;

output.outputId = AUDIO_IO_HANDLE_NONE;

output.selectedDeviceId = input.selectedDeviceId;

//Get the output (this call is ultimately implemented in AudioPolicyManager.cpp, which gets the device directly through mEngine)

lStatus = AudioSystem::getOutputForAttr(&localAttr, &output.outputId, sessionId, &streamType,

clientPid, clientUid, &input.config, input.flags,

&output.selectedDeviceId, &portId, &secondaryOutputs);

if (lStatus != NO_ERROR || output.outputId == AUDIO_IO_HANDLE_NONE) {

ALOGE("createTrack() getOutputForAttr() return error %d or invalid output handle", lStatus);

goto Exit;

}

....

....

....

....

....

{

Mutex::Autolock _l(mLock);

//Find the PlaybackThread by its ID. When openOutput() ran, the playback thread was saved in AudioFlinger's mPlaybackThreads as a key/value pair

PlaybackThread *thread = checkPlaybackThread_l(output.outputId);

if (thread == NULL) {

ALOGE("no playback thread found for output handle %d", output.outputId);

lStatus = BAD_VALUE;

goto Exit;

}

//Look up by client pid whether a Client object already exists for the client process; if not, create one

client = registerPid(clientPid);

PlaybackThread *effectThread = NULL;

// check if an effect chain with the same session ID is present on another

// output thread and move it here.

//Traverse all playback threads other than the current output thread. If one of them has an effect chain for the same sessionId, use it as the effectThread of the current Track.

for (size_t i = 0; i < mPlaybackThreads.size(); i++) {

sp<PlaybackThread> t = mPlaybackThreads.valueAt(i);

if (mPlaybackThreads.keyAt(i) != output.outputId) {

uint32_t sessions = t->hasAudioSession(sessionId);

if (sessions & ThreadBase::EFFECT_SESSION) {

effectThread = t.get();

break;

}

}

}

ALOGV("createTrack() sessionId: %d", sessionId);

output.sampleRate = input.config.sample_rate;

output.frameCount = input.frameCount;

output.notificationFrameCount = input.notificationFrameCount;

output.flags = input.flags;

//Create a Track in the PlaybackThread found above

track = thread->createTrack_l(client, streamType, localAttr, &output.sampleRate,

input.config.format, input.config.channel_mask,

&output.frameCount, &output.notificationFrameCount,

input.notificationsPerBuffer, input.speed,

input.sharedBuffer, sessionId, &output.flags,

callingPid, input.clientInfo.clientTid, clientUid,

&lStatus, portId, input.audioTrackCallback);

LOG_ALWAYS_FATAL_IF((lStatus == NO_ERROR) && (track == 0));

// we don't abort yet if lStatus != NO_ERROR; there is still work to be done regardless

output.afFrameCount = thread->frameCount();

output.afSampleRate = thread->sampleRate();

output.afLatencyMs = thread->latency();

output.portId = portId;

if (lStatus == NO_ERROR) {

// Connect secondary outputs. Failure on a secondary output must not impede the primary

// Any secondary output setup failure will lead to a desync between the AP and AF until

// the track is destroyed.

TeePatches teePatches;

for (audio_io_handle_t secondaryOutput : secondaryOutputs) {

PlaybackThread *secondaryThread = checkPlaybackThread_l(secondaryOutput);

if (secondaryThread == NULL) {

ALOGE("no playback thread found for secondary output %d", output.outputId);

continue;

}

....

....

....

....

....

....

// At this point the Track has been created successfully. A TrackHandle proxy object must also be created for it

// return handle to client

trackHandle = new TrackHandle(track);

Exit:

if (lStatus != NO_ERROR && output.outputId != AUDIO_IO_HANDLE_NONE) {

AudioSystem::releaseOutput(portId);

}

*status = lStatus;

return trackHandle;

}

AudioFlinger first creates (or reuses) a Client object for the application process, which it uses to communicate with that process. It then looks up the PlaybackThread by the output handle and hands the job of creating the Track over to that thread. Because the Track itself has no Binder communication capability, once it has been created AudioFlinger also wraps it in a TrackHandle object, which serves as the Binder proxy the client talks to.

(online resources)
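The control flow of createTrack() described above can be modelled in a few lines. The model has three steps: look up the thread by output handle, let the thread build the Track, then wrap the Track in a handle for the client. This is a toy sketch. MiniFlinger, Status, IoHandle and the simplified Track, TrackHandle and PlaybackThread types are hypothetical stand-ins, with no Binder, locking or Client bookkeeping.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <memory>
#include <utility>
#include <vector>

// Hypothetical stand-ins, not the AOSP types.
enum class Status { kOk, kBadValue };
using IoHandle = int;

struct Track { int sessionId; };

// The real TrackHandle is a Binder object; here it only holds the Track.
struct TrackHandle {
    explicit TrackHandle(std::shared_ptr<Track> t) : track(std::move(t)) {}
    std::shared_ptr<Track> track;
};

struct PlaybackThread {
    std::vector<std::shared_ptr<Track>> tracks;  // plays the role of mTracks

    // The thread, not AudioFlinger itself, builds and registers the Track.
    std::shared_ptr<Track> createTrack_l(int sessionId) {
        auto t = std::make_shared<Track>(Track{sessionId});
        tracks.push_back(t);
        return t;
    }
};

class MiniFlinger {
public:
    void addOutput(IoHandle id) { mPlaybackThreads[id] = std::make_unique<PlaybackThread>(); }

    size_t trackCount(IoHandle id) const {
        auto it = mPlaybackThreads.find(id);
        return it == mPlaybackThreads.end() ? 0 : it->second->tracks.size();
    }

    std::unique_ptr<TrackHandle> createTrack(IoHandle outputId, int sessionId, Status* status) {
        auto it = mPlaybackThreads.find(outputId);  // like checkPlaybackThread_l()
        if (it == mPlaybackThreads.end()) {
            *status = Status::kBadValue;            // like lStatus = BAD_VALUE; goto Exit
            return nullptr;
        }
        std::shared_ptr<Track> track = it->second->createTrack_l(sessionId);
        *status = Status::kOk;
        return std::make_unique<TrackHandle>(track);  // wrap the Track for the client
    }

private:
    std::map<IoHandle, std::unique_ptr<PlaybackThread>> mPlaybackThreads;
};
```

An unknown output handle yields kBadValue and no handle, mirroring the early `goto Exit` path in the real function.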

// Create a Client object for the process requesting audio playback, keyed by its pid (or reuse the existing one).

sp<AudioFlinger::Client> AudioFlinger::registerPid(pid_t pid)

{

Mutex::Autolock _cl(mClientLock);

// If pid is already in the mClients wp<> map, then use that entry

// (for which promote() is always != 0), otherwise create a new entry and Client.

sp<Client> client = mClients.valueFor(pid).promote();

if (client == 0) {

client = new Client(this, pid);

mClients.add(pid, client);

}

return client;

}

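AudioFlinger's mClients member maps each pid to a weak reference (wp<>) to its Client object. registerPid() first tries to promote the stored entry to a strong reference. If that fails, either because there is no entry or because the Client has already been destroyed, it creates a new Client for the process and stores it.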
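registerPid() is essentially a cache of weak references. Below is a minimal sketch using standard C++ smart pointers, assuming std::weak_ptr::lock() as the analogue of wp<>::promote(). ClientRegistry and this Client struct are hypothetical.

```cpp
#include <cassert>
#include <map>
#include <memory>
#include <mutex>
#include <sys/types.h>  // pid_t

// Hypothetical, minimal Client: the real one also owns a MemoryDealer.
struct Client {
    explicit Client(pid_t p) : pid(p) {}
    pid_t pid;
};

class ClientRegistry {
public:
    std::shared_ptr<Client> registerPid(pid_t pid) {
        std::lock_guard<std::mutex> lock(mClientLock);
        // Try to turn the weak entry into a strong reference (promote()).
        std::shared_ptr<Client> client = mClients[pid].lock();
        if (!client) {
            // No entry, or the previous Client is gone: create a new one.
            client = std::make_shared<Client>(pid);
            mClients[pid] = client;  // the registry keeps only a weak reference
        }
        return client;
    }

private:
    std::mutex mClientLock;
    std::map<pid_t, std::weak_ptr<Client>> mClients;
};
```

Because the registry holds only weak references, a Client lives only as long as something else keeps a strong reference to it. This sketch leaves expired entries in the map and overwrites them on the next registerPid() call.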

AudioFlinger::Client::Client(const sp<AudioFlinger>& audioFlinger, pid_t pid)

: RefBase(),

mAudioFlinger(audioFlinger),

mPid(pid)

{

mMemoryDealer = new MemoryDealer(

audioFlinger->getClientSharedHeapSize(),

(std::string("AudioFlinger::Client(") + std::to_string(pid) + ")").c_str());

}

The Client constructor creates a MemoryDealer object, which is used to allocate shared memory for this client.

MemoryDealer::MemoryDealer(size_t size, const char* name, uint32_t flags)

: mHeap(new MemoryHeapBase(size, flags, name)), //Creates shared memory of the specified size

mAllocator(new SimpleBestFitAllocator(size)) //Create memory allocator

{

}

SimpleBestFitAllocator::SimpleBestFitAllocator(size_t size)

{

size_t pagesize = getpagesize();

mHeapSize = ((size + pagesize-1) & ~(pagesize-1));

chunk_t* node = new chunk_t(0, mHeapSize / kMemoryAlign);

mList.insertHead(node);

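}

When an AudioTrack in the application process asks AudioFlinger to create a Track in a PlaybackThread, AudioFlinger first creates a Client object for that process. Along with it, AudioFlinger creates a shared-memory heap, which this article puts at 2 MB; the actual size is whatever getClientSharedHeapSize() returns. SimpleBestFitAllocator rounds the heap size up to a whole number of pages and starts with a single free chunk covering the entire heap, measured in units of kMemoryAlign. When the Track is created, it allocates its playback buffer from this heap.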
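The page rounding in the SimpleBestFitAllocator constructor uses the usual power-of-two mask trick. The sketch below shows the arithmetic. It assumes kMemoryAlign = 32 and a 4096-byte page; both values are assumptions here, not taken from the article.

```cpp
#include <cassert>
#include <cstddef>

// Assumed allocation unit, standing in for SimpleBestFitAllocator::kMemoryAlign.
constexpr size_t kMemoryAlign = 32;

// Round size up to a multiple of pagesize; only valid when pagesize is a power of two.
size_t roundUpToPage(size_t size, size_t pagesize) {
    assert(pagesize != 0 && (pagesize & (pagesize - 1)) == 0);
    return (size + pagesize - 1) & ~(pagesize - 1);
}

// Size, in kMemoryAlign units, of the single free chunk the allocator starts with.
size_t initialChunkUnits(size_t heapBytes, size_t pagesize) {
    return roundUpToPage(heapBytes, pagesize) / kMemoryAlign;
}
```

Under those assumptions, a 2 MB heap is already page-aligned and starts out as one free chunk of 65536 units.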

Threads.cpp

// PlaybackThread::createTrack_l() must be called with AudioFlinger::mLock held

sp<AudioFlinger::PlaybackThread::Track> AudioFlinger::PlaybackThread::createTrack_l(

const sp<AudioFlinger::Client>& client,

audio_stream_type_t streamType,

const audio_attributes_t& attr,

uint32_t *pSampleRate,

audio_format_t format,

audio_channel_mask_t channelMask,

size_t *pFrameCount,

size_t *pNotificationFrameCount,

uint32_t notificationsPerBuffer,

float speed,

const sp<IMemory>& sharedBuffer,

audio_session_t sessionId,

audio_output_flags_t *flags,

pid_t creatorPid,

pid_t tid,

uid_t uid,

status_t *status,

audio_port_handle_t portId,

const sp<media::IAudioTrackCallback>& callback)

{

size_t frameCount = *pFrameCount;

size_t notificationFrameCount = *pNotificationFrameCount;

sp<Track> track;

status_t lStatus;

audio_output_flags_t outputFlags = mOutput->flags;

audio_output_flags_t requestedFlags = *flags;

uint32_t sampleRate;

if (sharedBuffer != 0 && checkIMemory(sharedBuffer) != NO_ERROR) {

lStatus = BAD_VALUE;

goto Exit;

}

if (*pSampleRate == 0) {

*pSampleRate = mSampleRate;

}

sampleRate = *pSampleRate;

// special case for FAST flag considered OK if fast mixer is present

if (hasFastMixer()) {

outputFlags = (audio_output_flags_t)(outputFlags | AUDIO_OUTPUT_FLAG_FAST);

}

....

....

....

....

....

track = new Track(this, client, streamType, attr, sampleRate, format,

channelMask, frameCount,

nullptr /* buffer */, (size_t)0 /* bufferSize */, sharedBuffer,

sessionId, creatorPid, uid, *flags, TrackBase::TYPE_DEFAULT, portId);

lStatus = track != 0 ? track->initCheck() : (status_t) NO_MEMORY;

if (lStatus != NO_ERROR) {

ALOGE("createTrack_l() initCheck failed %d; no control block?", lStatus);

// track must be cleared from the caller as the caller has the AF lock

goto Exit;

}

mTracks.add(track);

{

Mutex::Autolock _atCbL(mAudioTrackCbLock);

if (callback.get() != nullptr) {

mAudioTrackCallbacks.emplace(callback);

}

}

sp<EffectChain> chain = getEffectChain_l(sessionId);

if (chain != 0) {

ALOGV("createTrack_l() setting main buffer %p", chain->inBuffer());

track->setMainBuffer(chain->inBuffer());

chain->setStrategy(AudioSystem::getStrategyForStream(track->streamType()));

chain->incTrackCnt();

}

if ((*flags & AUDIO_OUTPUT_FLAG_FAST) && (tid != -1)) {

pid_t callingPid = IPCThreadState::self()->getCallingPid();

// we don't have CAP_SYS_NICE, nor do we want to have it as it's too powerful,

// so ask activity manager to do this on our behalf

sendPrioConfigEvent_l(callingPid, tid, kPriorityAudioApp, true /*forApp*/);

}

}

lStatus = NO_ERROR;

Exit:

*status = lStatus;

return track;

}

The function above creates a Track. Since Track inherits from TrackBase, let's look at the TrackBase constructor first.

// TrackBase constructor must be called with AudioFlinger::mLock held

AudioFlinger::ThreadBase::TrackBase::TrackBase(

ThreadBase *thread,

const sp<Client>& client,

const audio_attributes_t& attr,

uint32_t sampleRate,

audio_format_t format,

audio_channel_mask_t channelMask,

size_t frameCount,

void *buffer,

size_t bufferSize,

audio_session_t sessionId,

pid_t creatorPid,

uid_t clientUid,

bool isOut,

alloc_type alloc,

track_type type,

audio_port_handle_t portId,

std::string metricsId)

: RefBase(),

mThread(thread),

mClient(client),

mCblk(NULL),

// mBuffer, mBufferSize

mState(IDLE),

mAttr(attr),

mSampleRate(sampleRate),

mFormat(format),

mChannelMask(channelMask),

mChannelCount(isOut ?

audio_channel_count_from_out_mask(channelMask) :

audio_channel_count_from_in_mask(channelMask)),

mFrameSize(audio_has_proportional_frames(format) ?

mChannelCount * audio_bytes_per_sample(format) : sizeof(int8_t)),

mFrameCount(frameCount),

mSessionId(sessionId),

mIsOut(isOut),

mId(android_atomic_inc(&nextTrackId)),

mTerminated(false),

mType(type),

mThreadIoHandle(thread ? thread->id() : AUDIO_IO_HANDLE_NONE),

mPortId(portId),

mIsInvalid(false),

mTrackMetrics(std::move(metricsId), isOut),

mCreatorPid(creatorPid)

{

const uid_t callingUid = IPCThreadState::self()->getCallingUid();

if (!isAudioServerOrMediaServerUid(callingUid) || clientUid == AUDIO_UID_INVALID) {

ALOGW_IF(clientUid != AUDIO_UID_INVALID && clientUid != callingUid,

"%s(%d): uid %d tried to pass itself off as %d",

__func__, mId, callingUid, clientUid);

clientUid = callingUid;

}

// clientUid contains the uid of the app that is responsible for this track, so we can blame

// battery usage on it.

//Get application process uid

mUid = clientUid;

// ALOGD("Creating track with %d buffers @ %d bytes", bufferCount, bufferSize);

size_t minBufferSize = buffer == NULL ? roundup(frameCount) : frameCount;

// check overflow when computing bufferSize due to multiplication by mFrameSize.

if (minBufferSize < frameCount // roundup rounds down for values above UINT_MAX / 2

|| mFrameSize == 0 // format needs to be correct

|| minBufferSize > SIZE_MAX / mFrameSize) {

android_errorWriteLog(0x534e4554, "34749571");

return;

}

minBufferSize *= mFrameSize;

if (buffer == nullptr) {

bufferSize = minBufferSize; // allocated here.

} else if (minBufferSize > bufferSize) {

android_errorWriteLog(0x534e4554, "38340117");

return;

}

size_t size = sizeof(audio_track_cblk_t);

if (buffer == NULL && alloc == ALLOC_CBLK) {

// check overflow when computing allocation size for streaming tracks.

if (size > SIZE_MAX - bufferSize) {

android_errorWriteLog(0x534e4554, "34749571");

return;

}

size += bufferSize;

}

if (client != 0) {

//Request the MemoryDealer tool class in the Client to allocate the buffer

mCblkMemory = client->heap()->allocate(size);

if (mCblkMemory == 0 ||

(mCblk = static_cast<audio_track_cblk_t *>(mCblkMemory->unsecurePointer())) == NULL) {

ALOGE("%s(%d): not enough memory for AudioTrack size=%zu", __func__, mId, size);

client->heap()->dump("AudioTrack");

mCblkMemory.clear();

return;

}

} else {

mCblk = (audio_track_cblk_t *) malloc(size);

if (mCblk == NULL) {

ALOGE("%s(%d): not enough memory for AudioTrack size=%zu", __func__, mId, size);

return;

}

}

....

....

....

....

mBufferSize = bufferSize;

#ifdef TEE_SINK

mTee.set(sampleRate, mChannelCount, format, NBAIO_Tee::TEE_FLAG_TRACK);

#endif

}

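}

The main job of the TrackBase constructor is to allocate the shared memory used for playback. This memory holds an audio_track_cblk_t control block. When buffer is NULL and alloc is ALLOC_CBLK, it also holds the data buffer itself, allocated right after the control block. Next, let's analyze the Track constructor.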
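The buffer-size checks in the TrackBase constructor are easier to follow with concrete numbers. The sketch below reproduces them for a streaming track. roundupPow2() is a hypothetical stand-in for roundup(), assumed to round up to the next power of two. cblkSize stands in for sizeof(audio_track_cblk_t).

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <optional>

// Round v up to the next power of two; returns 0 if that would overflow size_t.
// (Hypothetical stand-in for roundup().)
size_t roundupPow2(size_t v) {
    size_t p = 1;
    while (p < v) {
        if (p > SIZE_MAX / 2) return 0;  // doubling again would overflow
        p <<= 1;
    }
    return p;
}

// Total bytes a streaming track (buffer == nullptr, alloc == ALLOC_CBLK) would
// request from the client heap: control block + frame buffer.
// Returns std::nullopt wherever TrackBase's constructor would bail out.
std::optional<size_t> streamingAllocSize(size_t frameCount, size_t frameSize, size_t cblkSize) {
    size_t minBufferSize = roundupPow2(frameCount);
    if (minBufferSize < frameCount                  // rounding overflowed
        || frameSize == 0                           // format must give a valid frame size
        || minBufferSize > SIZE_MAX / frameSize) {  // multiplication would overflow
        return std::nullopt;
    }
    size_t bufferSize = minBufferSize * frameSize;
    if (cblkSize > SIZE_MAX - bufferSize) {         // adding the control block would overflow
        return std::nullopt;
    }
    return cblkSize + bufferSize;
}
```

For example, 1000 frames of 16-bit stereo PCM (2 channels x 2 bytes = 4 bytes per frame) round up to 1024 frames. That gives a 4096-byte data buffer, allocated after the control block.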

// Track constructor must be called with AudioFlinger::mLock and ThreadBase::mLock held

AudioFlinger::PlaybackThread::Track::Track(

PlaybackThread *thread,

const sp<Client>& client,

audio_stream_type_t streamType,

const audio_attributes_t& attr,

...

...

...

mVolumeHandler(new media::VolumeHandler(sampleRate)),

mOpPlayAudioMonitor(OpPlayAudioMonitor::createIfNeeded(uid, attr, id(), streamType)),

// mSinkTimestamp

mFrameCountToBeReady(frameCountToBeReady),

mFastIndex(-1),

mCachedVolume(1.0),

/* The track might not play immediately after being active, similarly as if its volume was 0.

* When the track starts playing, its volume will be computed. */

mFinalVolume(0.f),

mResumeToStopping(false),

mFlushHwPending(false),

mFlags(flags)

{

// client == 0 implies sharedBuffer == 0

ALOG_ASSERT(!(client == 0 && sharedBuffer != 0));

ALOGV_IF(sharedBuffer != 0, "%s(%d): sharedBuffer: %p, size: %zu",

__func__, mId, sharedBuffer->unsecurePointer(), sharedBuffer->size());

if (mCblk == NULL) {

return;

}

if (!thread->isTrackAllowed_l(channelMask, format, sessionId, uid)) {

ALOGE("%s(%d): no more tracks available", __func__, mId);

releaseCblk(); // this makes the track invalid.

return;

}

if (sharedBuffer == 0) { //stream mode

mAudioTrackServerProxy = new AudioTrackServerProxy(mCblk, mBuffer, frameCount,

mFrameSize, !isExternalTrack(), sampleRate);

} else { //static mode

mAudioTrackServerProxy = new StaticAudioTrackServerProxy(mCblk, mBuffer, frameCount,

mFrameSize, sampleRate);

}

mServerProxy = mAudioTrackServerProxy;

...

...

...

...

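}

The Track constructor creates a different server-side proxy object depending on the playback mode. In stream mode (no sharedBuffer) it creates an AudioTrackServerProxy. In static mode (the client supplies sharedBuffer) it creates a StaticAudioTrackServerProxy.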
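The mode selection boils down to a single null check on sharedBuffer. In the Java example at the start, AudioTrack.MODE_STREAM corresponds to the no-shared-buffer case. The sketch below is illustrative only. ServerProxy, StreamServerProxy, StaticServerProxy and makeServerProxy() are hypothetical types, not the real AudioTrackServerProxy classes, which also manage the control block and buffer.

```cpp
#include <cassert>
#include <memory>
#include <string>

// Hypothetical proxy hierarchy; only the mode label is modelled.
struct ServerProxy {
    virtual ~ServerProxy() = default;
    virtual std::string mode() const = 0;
};

struct StreamServerProxy : ServerProxy {
    std::string mode() const override { return "stream"; }
};

struct StaticServerProxy : ServerProxy {
    std::string mode() const override { return "static"; }
};

// Mirrors the branch in the Track constructor: no client-supplied shared
// buffer means stream mode, otherwise static mode.
std::unique_ptr<ServerProxy> makeServerProxy(const void* sharedBuffer) {
    if (sharedBuffer == nullptr) {
        return std::make_unique<StreamServerProxy>();
    }
    return std::make_unique<StaticServerProxy>();
}
```

Because both proxies share one base class, the Track keeps a single mServerProxy pointer and does not need to know which mode it is in afterwards.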

When the Client object is constructed, it creates a MemoryDealer allocator together with a block of anonymous shared memory (the 2 MB heap mentioned earlier). TrackBase then calls client->heap()->allocate(size). This uses the MemoryDealer to carve a buffer of the requested size out of that shared memory.

sp<IMemory> MemoryDealer::allocate(size_t size)

{

sp<IMemory> memory;

// Allocate `size` bytes from the shared heap; returns the buffer's offset within the heap

const ssize_t offset = allocator()->allocate(size);

if (offset >= 0) {

// Wrap the allocated buffer in an Allocation object

memory = new Allocation(this, heap(), offset, size);

}

return memory;

}

size_t SimpleBestFitAllocator::allocate(size_t size, uint32_t flags)

{

Mutex::Autolock _l(mLock);

ssize_t offset = alloc(size, flags);

return offset;

}

ssize_t SimpleBestFitAllocator::alloc(size_t size, uint32_t flags)

{

if (size == 0) {

return 0;

}

size = (size + kMemoryAlign-1) / kMemoryAlign;

chunk_t* free_chunk = nullptr;

chunk_t* cur = mList.head();

size_t pagesize = getpagesize();

while (cur) {

int extra = 0;

if (flags & PAGE_ALIGNED)

extra = ( -cur->start & ((pagesize/kMemoryAlign)-1) ) ;

// best fit

if (cur->free && (cur->size >= (size+extra))) {

if ((!free_chunk) || (cur->size < free_chunk->size)) {

free_chunk = cur;

}

if (cur->size == size) {

break;

}

}

cur = cur->next;

}

if (free_chunk) {

const size_t free_size = free_chunk->size;

free_chunk->free = 0;

free_chunk->size = size;

if (free_size > size) {

int extra = 0;

if (flags & PAGE_ALIGNED)

extra = ( -free_chunk->start & ((pagesize/kMemoryAlign)-1) ) ;

if (extra) {

chunk_t* split = new chunk_t(free_chunk->start, extra);

free_chunk->start += extra;

mList.insertBefore(free_chunk, split);

}

ALOGE_IF((flags&PAGE_ALIGNED) &&

((free_chunk->start*kMemoryAlign)&(pagesize-1)),

"PAGE_ALIGNED requested, but page is not aligned!!!");

const ssize_t tail_free = free_size - (size+extra);

if (tail_free > 0) {

chunk_t* split = new chunk_t(

free_chunk->start + free_chunk->size, tail_free);

mList.insertAfter(free_chunk, split);

}

}

return (free_chunk->start)*kMemoryAlign;

}

return NO_MEMORY;

}
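The best-fit search in alloc() can be reduced to a few lines. The following is a simplified sketch, not the AOSP code. The BestFit class, its release() helper and the 32-byte unit are assumptions for illustration. PAGE_ALIGNED handling is omitted, and freed chunks are never merged.

```cpp
#include <cassert>
#include <cstddef>
#include <iterator>
#include <list>
#include <sys/types.h>  // ssize_t

class BestFit {
public:
    static constexpr size_t kAlign = 32;  // assumed unit, stands in for kMemoryAlign

    explicit BestFit(size_t heapBytes) { mChunks.push_back(Chunk{0, heapBytes / kAlign, true}); }

    // Returns the byte offset of the allocation, or -1 if no free chunk is large enough.
    ssize_t alloc(size_t bytes) {
        if (bytes == 0) return 0;
        size_t units = (bytes + kAlign - 1) / kAlign;  // round up to whole units
        auto best = mChunks.end();
        for (auto it = mChunks.begin(); it != mChunks.end(); ++it) {
            if (it->free && it->size >= units &&
                (best == mChunks.end() || it->size < best->size)) {
                best = it;                             // smallest chunk that still fits
                if (it->size == units) break;          // exact fit: stop searching
            }
        }
        if (best == mChunks.end()) return -1;
        size_t tail = best->size - units;
        best->free = false;
        best->size = units;
        if (tail > 0) {                                // split off the unused tail
            mChunks.insert(std::next(best), Chunk{best->start + units, tail, true});
        }
        return static_cast<ssize_t>(best->start * kAlign);
    }

    // Marks the chunk at `offset` free again (no coalescing with neighbours).
    bool release(ssize_t offset) {
        for (auto& c : mChunks) {
            if (!c.free && static_cast<ssize_t>(c.start * kAlign) == offset) {
                c.free = true;
                return true;
            }
        }
        return false;
    }

private:
    struct Chunk { size_t start; size_t size; bool free; };  // start/size in kAlign units
    std::list<Chunk> mChunks;
};
```

After two holes of 4 and 8 units are freed, a 3-unit request takes the 4-unit hole rather than the larger one. Minimizing leftover space this way is the point of best fit.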

When a track is created, AudioFlinger allocates the corresponding memory and hands it back to AudioTrack through the IMemory interface. AudioTrack and AudioFlinger therefore share the same audio_track_cblk_t, and through it they implement a ring buffer in the shared data area. AudioTrack writes audio data into the shared area. AudioFlinger reads it, mixes it in the Mixer, and sends it to the audio hardware for playback. AudioTrack is the producer and AudioFlinger is the consumer.
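The producer/consumer relationship can be illustrated with a lock-free single-producer/single-consumer ring buffer that uses free-running front and rear counters. This is a generic sketch, not the real audio_track_cblk_t layout or the client/server proxy logic. FrameRing is hypothetical, holds 16-bit samples, and requires a power-of-two capacity.

```cpp
#include <atomic>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

class FrameRing {
public:
    explicit FrameRing(size_t capacityPow2) : mBuf(capacityPow2), mMask(capacityPow2 - 1) {
        assert(capacityPow2 != 0 && (capacityPow2 & mMask) == 0);
    }

    // Producer side (the AudioTrack role): copy up to n samples in, return how many fit.
    size_t write(const int16_t* src, size_t n) {
        uint32_t rear = mRear.load(std::memory_order_relaxed);
        uint32_t front = mFront.load(std::memory_order_acquire);
        size_t avail = mBuf.size() - static_cast<uint32_t>(rear - front);
        size_t count = n < avail ? n : avail;
        for (size_t i = 0; i < count; ++i) mBuf[(rear + i) & mMask] = src[i];
        mRear.store(rear + static_cast<uint32_t>(count), std::memory_order_release);
        return count;
    }

    // Consumer side (the AudioFlinger role): copy up to n samples out, return how many were read.
    size_t read(int16_t* dst, size_t n) {
        uint32_t front = mFront.load(std::memory_order_relaxed);
        uint32_t rear = mRear.load(std::memory_order_acquire);
        size_t filled = static_cast<uint32_t>(rear - front);
        size_t count = n < filled ? n : filled;
        for (size_t i = 0; i < count; ++i) dst[i] = mBuf[(front + i) & mMask];
        mFront.store(front + static_cast<uint32_t>(count), std::memory_order_release);
        return count;
    }

private:
    std::vector<int16_t> mBuf;
    size_t mMask;                       // capacity - 1, used instead of a modulo
    std::atomic<uint32_t> mFront{0};    // advanced only by the consumer
    std::atomic<uint32_t> mRear{0};     // advanced only by the producer
};
```

write() only ever advances mRear and read() only advances mFront, so each side owns one counter. The acquire/release pairs make the copied samples visible to the other side before it sees the updated counter.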

To summarize the playback process:

1. The Java-layer AudioTrack creates the native AudioTrack object through JNI.

2. An already-open audio output is looked up based on parameters such as streamType. If no matching output is found, AudioFlinger is asked to open a new audio output device.

3. AudioFlinger creates a mixer thread (MixerThread) for the output device and returns the thread's id to AudioTrack as the return value of getOutput().

4. AudioTrack calls AudioFlinger's createTrack() over Binder to create a Track. AudioFlinger also creates a TrackHandle Binder local object, and an IAudioTrack proxy object for it is returned to AudioTrack.

5. AudioFlinger registers the Track into MixerThread.

6. Through the IAudioTrack interface, AudioTrack obtains the shared data area created in AudioFlinger.

7. AudioTrack calls start() and begins writing data; AudioFlinger reads the data and plays it.