1. Introduction to big data

1.1 big data concept

big data refers to a data set that cannot be captured, managed and processed by conventional software tools within a certain time range. It is a massive, high growth rate and diversified information asset that requires a new processing mode to have stronger decision-making power, insight and discovery power and process optimization ability. It mainly solves the problems of massive data storage and massive data analysis and calculation.

The smallest basic unit of data storage is bit, and all units are given in order: bit,Byte,KB,MB,GB,TB,PB,EB,ZB,YB,BB,NB,DB.

1Byte= 8bit 1K = 1024Byte 1MB = 1024K 1G = 1024M

1T = 1024G 1P = 1024T 1E = 1024P 1Z = 1024E

1Y = 1024Z 1B = 1024Y 1N = 1024B 1D = 1024N

In 1986, there was only 0.02EB, or about 21000TB of data in the world, while in 2007, there were 280EB, or about 300000000TB of data in the world, a 14000 fold increase.

Recently, due to the emergence of mobile Internet and Internet of things, the access of various terminal devices and the popularity of various business forms, the global data volume will double every 40 months! If you don't have any impression, you can take another simple example. In 2012, 2.5EB data will be generated every day. According to IDC's report, the global data volume will soar from 4.4ZB to 44ZB from 2013 to 2020! By 2025, there will be 163ZB data in the world!

It can be seen that up to now, the amount of data in the world has been explosive! The traditional relational database can't handle such a large amount of data at all!

1.2 big data features

1) Volume:

Up to now, the data volume of all printing materials produced by mankind is 200PB, while the total data volume of words spoken by mankind in history is about 5EB. At present, the capacity of typical personal computer hard disk is TB, while the data volume of some large enterprises is close to EB.

2) Velocity:

This is the most significant feature that distinguishes big data from traditional data mining. According to IDC's "Digital Universe" report, the global data usage is expected to reach 35.2ZB by 2020. In the face of such a large amount of data, the efficiency of data processing is the life of the enterprise.

3) Variety:

This type of diversity also allows data to be divided into structured data and unstructured data. Compared with the structured data based on database / text, which is easy to store in the past, there are more and more unstructured data, including network log, audio, video, picture, geographic location information, etc. these multiple types of data put forward higher requirements for data processing ability.

4) Value (low value density):

The value density is inversely proportional to the total amount of data.

1.3 big data application scenarios

1) O2O: Baidu big data + platform helps businesses refine operation and improve sales through advanced online and offline communication technology and passenger flow analysis ability.

2) Retail: explore user value and provide personalized service solutions; Run through the network and physical retail, and work together to create the ultimate experience. Classic case, diaper + beer.

3) Commodity advertisement recommendation: recommend the types of commodity advertisements visited to users

4) Real estate: big data comprehensively helps the real estate industry to create accurate investment strategies and marketing, select more suitable places, build more suitable buildings and sell them to more suitable people.

5) Insurance: massive data mining and risk prediction help the insurance industry with precision marketing and improve its fine pricing ability.

6) Finance: multi dimension reflects the characteristics of users, helps financial institutions recommend high-quality customers and prevent fraud risks.

7) Artificial intelligence

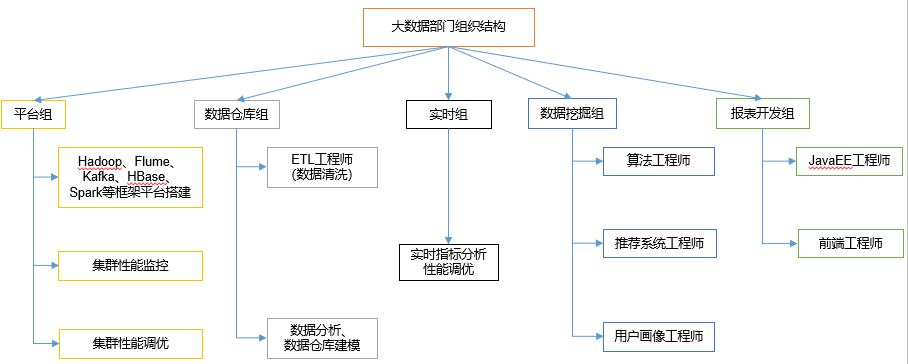

1.4 organizational structure of big data Department

2. Hadoop introduction and big data ecology

2.1 introduction to Hadoop

Hadoop originated from nutch. Nutch's design goal is to build a large-scale whole network search engine, including web page capture, index, query and other functions. However, with the increase of the number of web pages captured, it has encountered a serious scalability problem - how to solve the problem of storage and index of billions of web pages.

Two papers published by Google in 2003 and 2004 provide a feasible solution to this problem.

Distributed file system (GFS) can be used to handle the storage of massive web pages

MAPREDUCE, a distributed computing framework, can be used to deal with the index calculation of massive web pages.

Nutch's developers completed the corresponding open source implementation of HDFS and MAPREDUCE, and split it from nutch into an independent project HADOOP. By January 2008, HADOOP became the top project of Apache

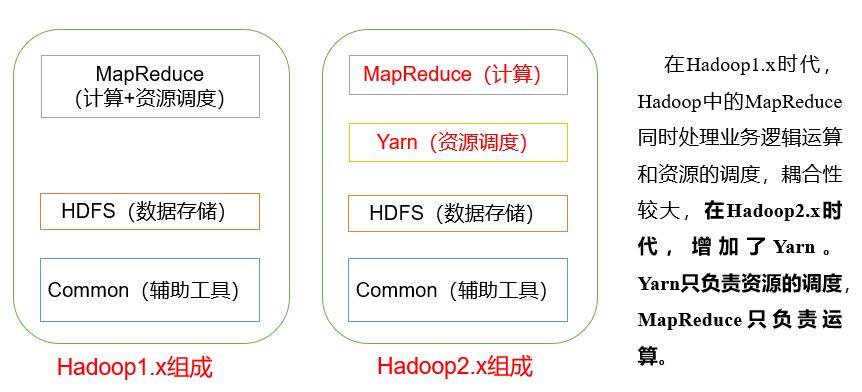

In a narrow sense, hadoop refers to hadoop software alone,

- HDFS: distributed file system

- MapReduce: distributed computing system

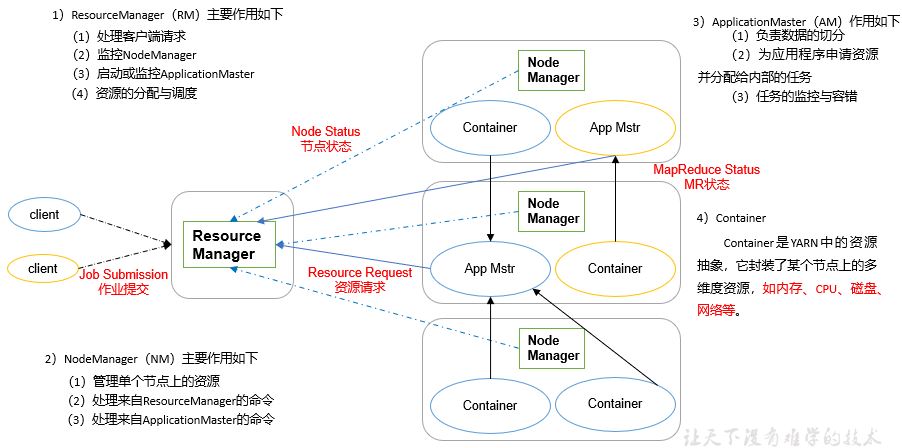

- Yarn: distributed cluster resource management

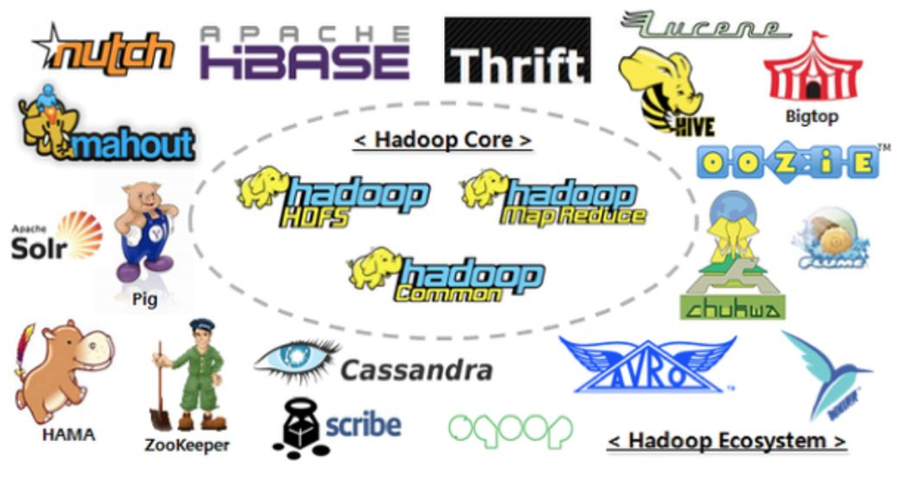

Broadly speaking, hadoop refers to an ecosystem of big data, including many other software

2.2 three major releases of Hadoop

Hadoop has three major distributions: Apache, Cloudera and Hortonworks.

Apache version is the most original (basic) version, which is best for introductory learning.

Cloudera integrates many big data frameworks internally. Corresponding product CDH.

Hortonworks documentation is good. Corresponding product HDP.

1)Apache Hadoop

Official website address: http://hadoop.apache.org/releases.html

Download address: https://archive.apache.org/dist/hadoop/common/

2)Cloudera Hadoop

Official website address: https://www.cloudera.com/downloads/cdh/5-10-0.html

Download address: http://archive-primary.cloudera.com/cdh5/cdh/5/

(1) Cloudera, founded in 2008, is the first company to commercialize Hadoop. It provides partners with Hadoop commercial solutions, mainly including support, consulting services and training.

(2) In 2009, Doug Cutting, the founder of Hadoop, also joined cloudera. Cloudera products mainly include CDH, Cloudera Manager and Cloudera Support

(3) CDH is the Hadoop distribution of cloudera. It is completely open source and has enhanced compatibility, security and stability compared with Apache Hadoop. Cloudera is priced at $10000 per node per year.

(4) Cloudera Manager is the software distribution, management and monitoring platform of the cluster. It can deploy a Hadoop cluster within a few hours and monitor the nodes and services of the cluster in real time.

3)Hortonworks Hadoop

Official website address: https://hortonworks.com/products/data-center/hdp/

Download address: https://hortonworks.com/downloads/#data-platform

(1) Hortonworks, founded in 2011, is a joint venture between Yahoo and Benchmark Capital, a Silicon Valley venture capital company.

(2) At the beginning of its establishment, the company absorbed about 25 to 30 Yahoo engineers specializing in Hadoop. The above engineers began to assist Yahoo in developing Hadoop in 2005, contributing 80% of Hadoop code.

(3) Hortonworks' main product is the Hortonworks Data Platform (HDP), which is also a 100% open source product. In addition to common projects, HDP also includes Ambari, an open source installation and management system.

(4) Hortonworks has been acquired by Cloudera.

2.3 Hadoop composition

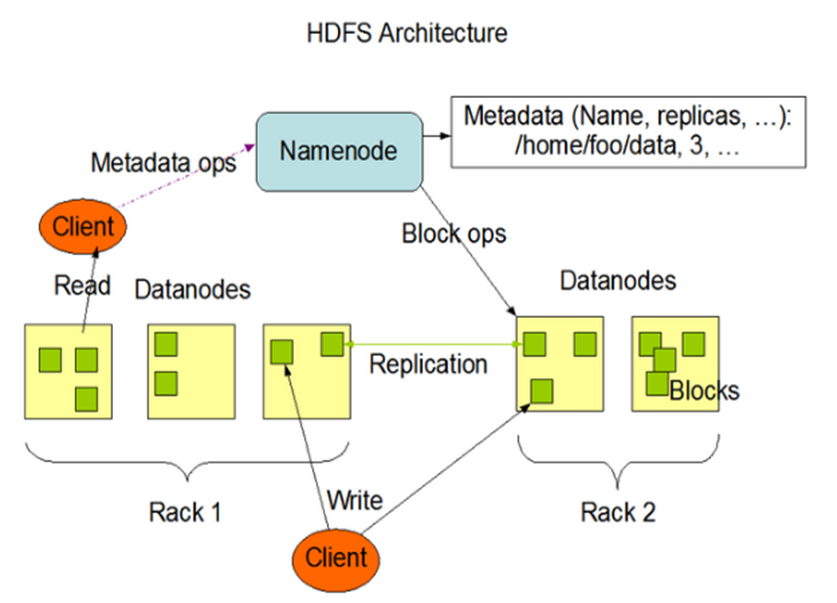

2.3.1 overview of HDFS architecture

1) NameNode (nn): stores the metadata of the file, such as file name, file directory structure, file attributes (generation time, number of copies, file permissions), as well as the block list of each file and the DataNode where the block is located.

2) DataNode(dn): stores file block data and the checksum of block data in the local file system.

3) Secondary NameNode(2nn): Backup metadata of NameNode at regular intervals.

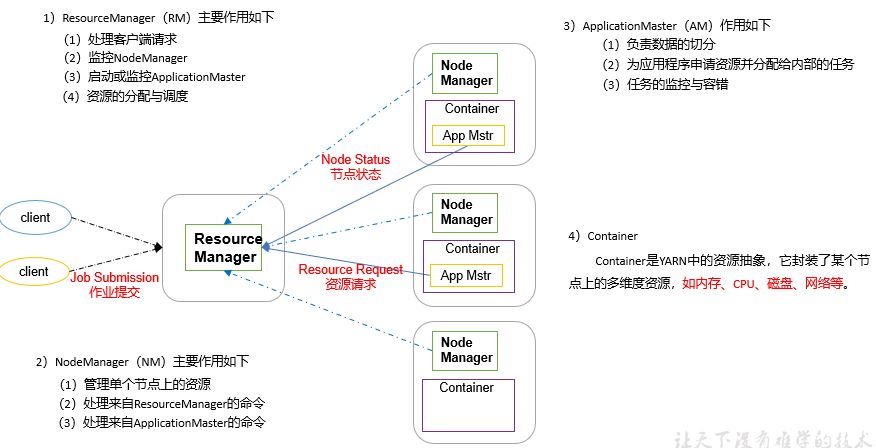

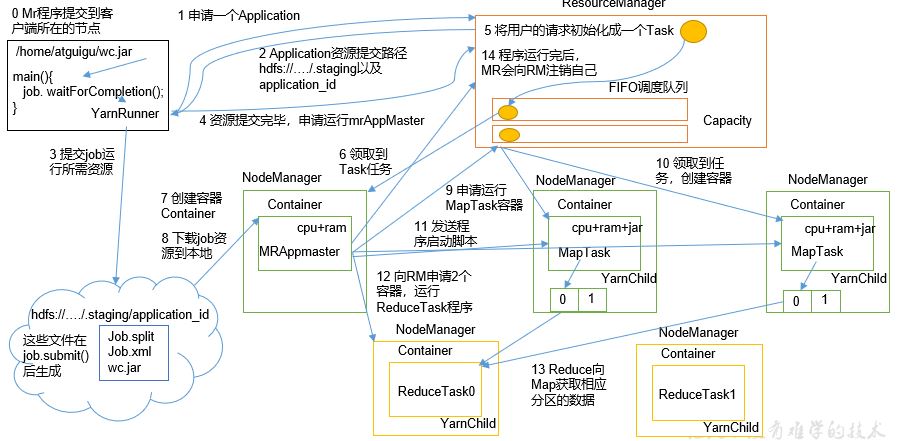

2.3.2 overview of yarn architecture

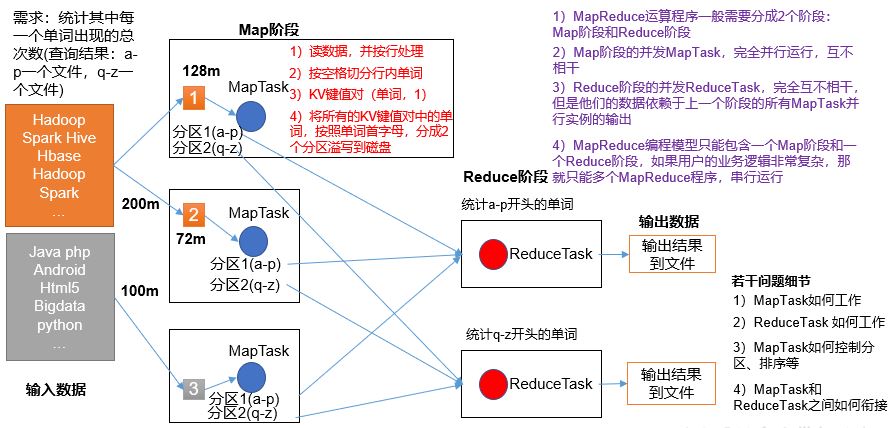

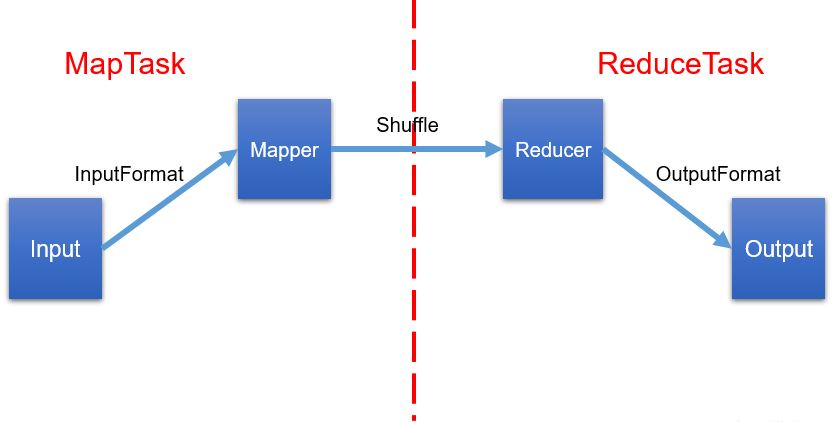

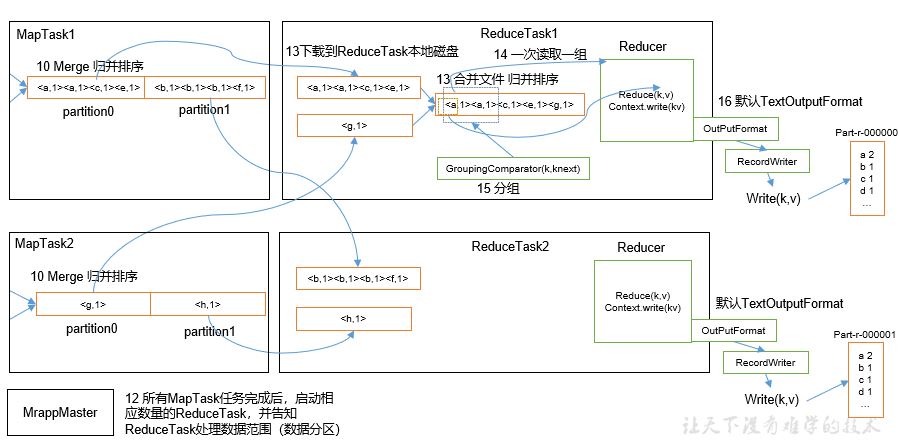

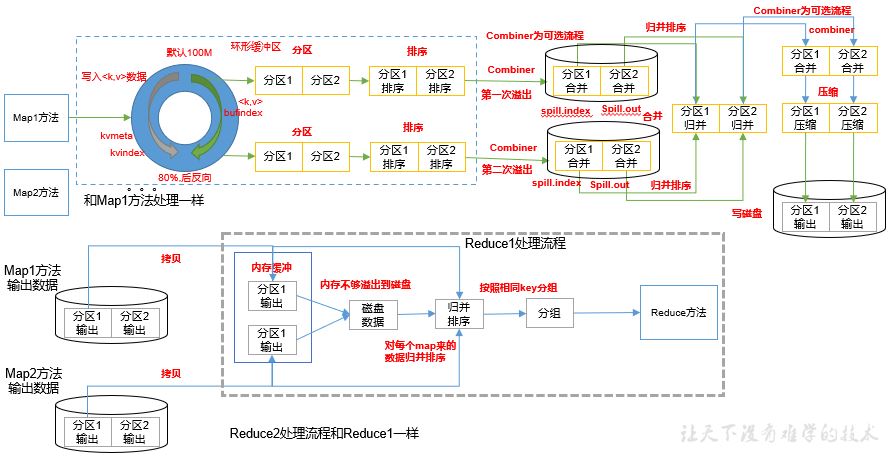

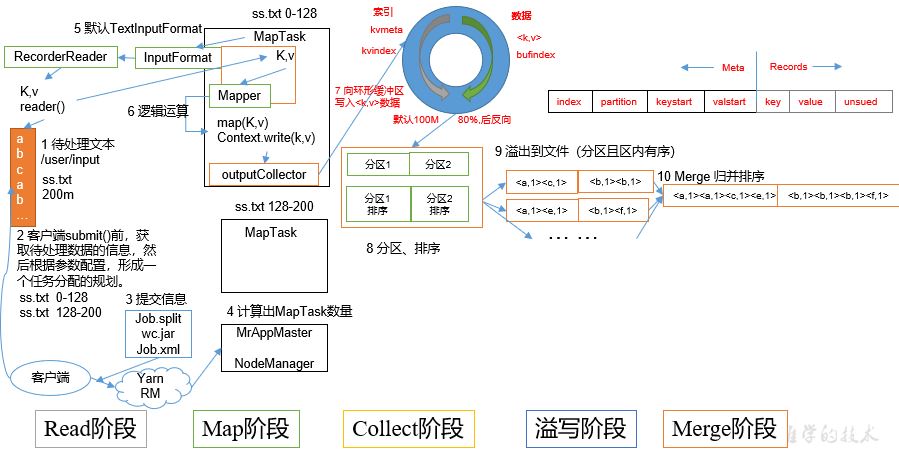

2.3.3 overview of MapReduce architecture

MapReduce divides the calculation process into two stages: Map and Reduce

1) The Map stage processes the input data in parallel

2) In the Reduce phase, the Map results are summarized

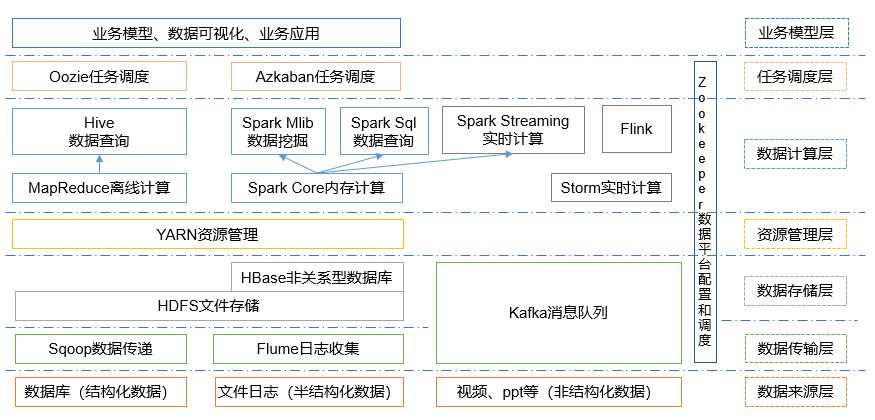

2.4 big data technology ecosystem

1) Sqoop: sqoop is an open source tool, which is mainly used to transfer data between Hadoop, Hive and traditional database (MySQL). It can import the data in a relational database (such as mysql, Oracle, etc.) into Hadoop HDFS or HDFS into relational database.

2) Flume: flume is a highly available, highly reliable and distributed system for massive log collection, aggregation and transmission. Flume supports customization of various data senders in the log system for data collection;

3) Kafka: Kafka is a high-throughput distributed publish subscribe message system;

4) Spark: spark is currently the most popular open source big data memory computing framework. It can be calculated based on the big data stored on Hadoop.

5) Flink: Flink is currently the most popular open source big data memory computing framework. There are many scenarios for real-time computing.

6) Oozie: oozie is a workflow scheduling management system that manages Hdoop job s.

7) HBase: HBase is a distributed, column oriented open source database. HBase is different from the general relational database. It is a database suitable for unstructured data storage.

8) Hive: hive is a data warehouse tool based on Hadoop. It can map structured data files into a database table and provide simple SQL query function. It can convert SQL statements into MapReduce tasks for operation. Its advantage is low learning cost. It can quickly realize simple MapReduce statistics through SQL like statements without developing special MapReduce applications. It is very suitable for statistical analysis of data warehouse.

9) ZooKeeper: it is a reliable coordination system for large-scale distributed systems. Its functions include configuration maintenance, name service, distributed synchronization, group service, etc.

3. Build Hadoop operating environment

3.1 template virtual machine environment preparation

1) Prepare a template virtual machine. The virtual machine configuration requirements are as follows:

Note: the Linux system environment in this paper is illustrated by CentOS-7.5-x86-1804

Windows version: Windows 10, 64 bit (build 19043.1466) 10.0.19043

VMWare WorkStation version: VMWare ® Workstation 15 Pro

Template virtual machine: CentOS-7.5-x86-1804, 4G memory and 50G hard disk. Install the necessary environment to prepare for the installation of hadoop (the memory size of virtual machine is determined by your own computer, and it is recommended to learn 16G or above of big data computer memory)

yum install -y epel-release yum install -y psmisc nc net-tools rsync vim lrzsz ntp libzstd openssl-static tree iotop git

Using Yum to install requires that the virtual machine can access the Internet normally. You can test the virtual machine networking before installing yum

ping www.baidu.com

2) Turn off the firewall. Turn off the firewall and start it automatically

systemctl stop firewalld systemctl disable firewalld

3) Create wangxin user and modify the password of wangxin user (user name and password can be specified by yourself, here is an example)

useradd wangxin passwd ******

4) Configure wangxin user to have root permission, which is convenient for sudo to execute the command with root permission later

[root@hadoop100 ~]$ vim /etc/sudoers modify/etc/sudoers File, find the following line (line 91), in root Add a line below as follows: ## Allow root to run any commands anywhere root ALL=(ALL) ALL wangxin ALL=(ALL) NOPASSWD:ALL

5) Create a folder in the / opt directory and modify the owner and group

(1) Create the module and software folders in the / opt directory

[root@hadoop100 ~]$ mkdir /opt/module [root@hadoop100 ~]$ mkdir /opt/software

(2) Modify that the owner and group of the module and software folders are wangxin users

[root@hadoop100 ~]$ chown wangxin:wangxin /opt/module [root@hadoop100 ~]$ chown wangxin:wangxin /opt/software

(3) View the owner and group of the module and software folders

[root@hadoop100 ~]$ cd /opt/ [root@hadoop100 opt]$ ll

6) Uninstall the open JDK of the virtual machine

[root@hadoop100 ~]$ rpm -qa | grep -i java | xargs -n1 rpm -e --nodeps

7) Restart the virtual machine

[root@hadoop100 ~]$ reboot

3.2 cloning virtual machines

1) Using the template machine Hadoop 100, clone three virtual machines: Hadoop 102, Hadoop 103, Hadoop 104

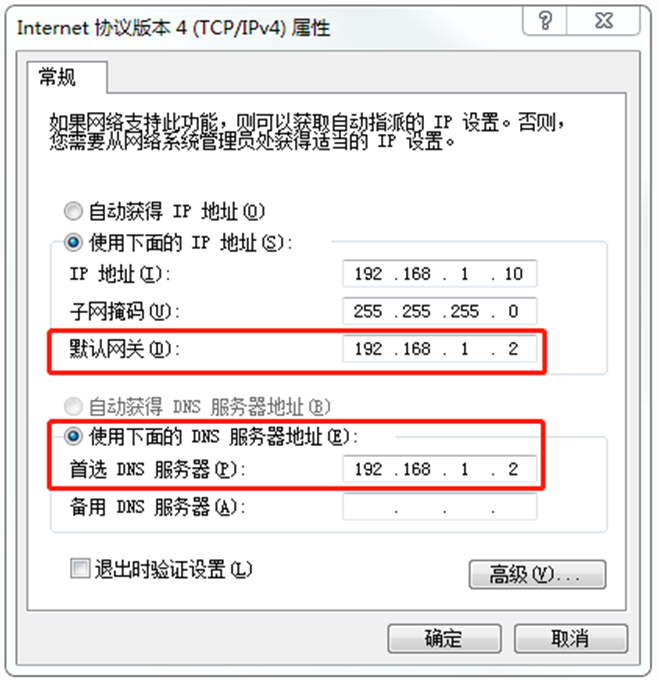

2) Modify the clone machine IP, which is illustrated by Hadoop 102 below

(1) Modify the static IP of the cloned virtual machine

[root@hadoop100 ~]$ vim /etc/sysconfig/network-scripts/ifcfg-ens33

Change to

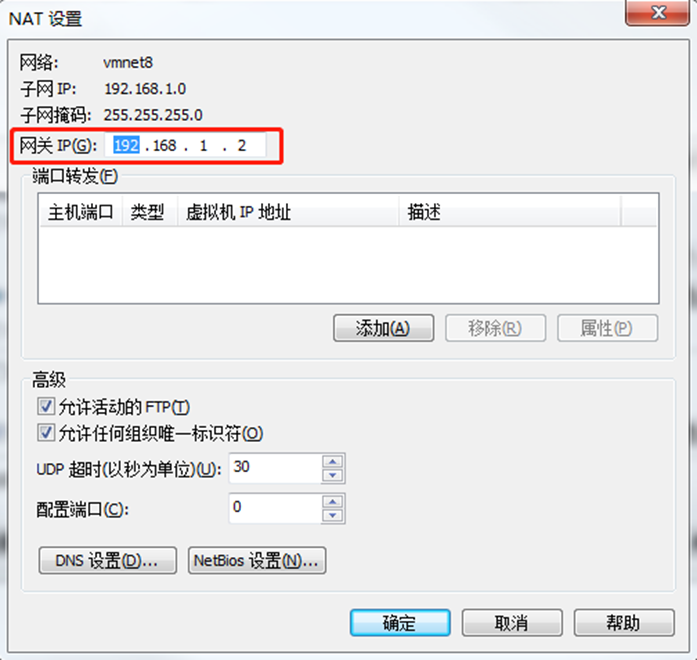

DEVICE=ens33 TYPE=Ethernet ONBOOT=yes BOOTPROTO=static NAME="ens33" IPADDR=192.168.1.102 PREFIX=24 GATEWAY=192.168.1.2 DNS1=192.168.1.2

Note: the above configuration is just an example. Other configurations such as IP address can be set by yourself

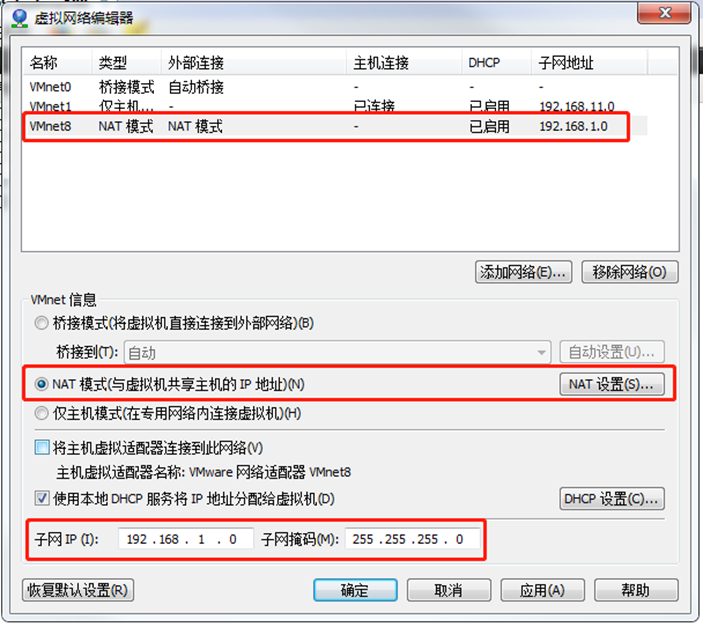

(2) View the virtual network editor of Linux virtual machine, edit - > virtual network editor - > VMnet8

(3) View the IP address of Windows system adapter VMware Network Adapter VMnet8

(4) Ensure that the IP address and virtual network editor address in the ifcfg-ens33 file of Linux system are the same as the VM8 network IP address of Windows system.

3) Modify the host name of the clone machine. The following is an example of Hadoop 102

(1) To modify the host name, choose one of two methods

[root@hadoop100 ~]$ hostnamectl --static set-hostname hadoop102

Or modify the / etc/hostname file

[root@hadoop100 ~]$ vim /etc/hostname hadoop102

(2) Configure the linux clone host name mapping hosts file and open / etc/hosts

[root@hadoop100 ~]$ vim /etc/hosts

Add the following

192.168.1.100 hadoop100 192.168.1.101 hadoop101 192.168.1.102 hadoop102 192.168.1.103 hadoop103 192.168.1.104 hadoop104 192.168.1.105 hadoop105 192.168.1.106 hadoop106 192.168.1.107 hadoop107 192.168.1.108 hadoop108

4) Restart the clone machine Hadoop 102

[root@hadoop100 ~]$ reboot

5) Modify the host mapping file (hosts file) of windows

(1) If the operating system is Windows 7, you can modify it directly

(a) Enter the path C:\Windows\System32\drivers\etc

(b) Open the hosts file, add the following contents, and then save

192.168.1.100 hadoop100 192.168.1.101 hadoop101 192.168.1.102 hadoop102 192.168.1.103 hadoop103 192.168.1.104 hadoop104 192.168.1.105 hadoop105 192.168.1.106 hadoop106 192.168.1.107 hadoop107 192.168.1.108 hadoop108

(2) If the operating system is windows10, copy it first, modify and save it, and then overwrite it

(a) Enter the path C:\Windows\System32\drivers\etc

(b) Copy hosts file to desktop

(c) Open the desktop hosts file and add the following

192.168.1.100 hadoop100 192.168.1.101 hadoop101 192.168.1.102 hadoop102 192.168.1.103 hadoop103 192.168.1.104 hadoop104 192.168.1.105 hadoop105 192.168.1.106 hadoop106 192.168.1.107 hadoop107 192.168.1.108 hadoop108

(d) Overwrite the desktop hosts file with the C:\Windows\System32\drivers\etc path hosts file

3.3 installing JDK

Operate under virtual machine Hadoop 102

1) Uninstall existing JDK

[wangxin@hadoop102 ~]$ rpm -qa | grep -i java | xargs -n1 sudo rpm -e --nodeps

2) Import the JDK into the software folder under the opt directory with the XShell tool

3) Check whether the software package is imported successfully in opt directory under Linux system

[wangxin@hadoop102 ~]$ ls /opt/software/

See the following results:

hadoop-3.1.3.tar.gz jdk-8u212-linux-x64.tar.gz

4) Unzip the JDK to the / opt/module directory

[wangxin@hadoop102 software]$ tar -zxvf jdk-8u212-linux-x64.tar.gz -C /opt/module/

5) Configure JDK environment variables

(1) Create a new / etc / profile d/my_ env. SH file

[wangxin@hadoop102 ~]$ sudo vim /etc/profile.d/my_env.sh

Add the following

#JAVA_HOME export JAVA_HOME=/opt/module/jdk1.8.0_212 export PATH=$PATH:$JAVA_HOME/bin

(2) Exit after saving

:wq

(3) source click the / etc/profile file to make the new environment variable PATH effective

[wangxin@hadoop102 ~]$ source /etc/profile

6) Test whether the JDK is installed successfully

[wangxin@hadoop102 ~]$ java -version

If you can see the following results, the Java installation is successful.

java version "1.8.0_212"

Note: restart (if java version can be used, there is no need to restart)

[wangxin@hadoop102 ~]$ sudo reboot

3.4 installing Hadoop

Operate under the virtual machine Hadoop 102

Hadoop download address: https://archive.apache.org/dist/hadoop/common/hadoop-3.1.3/

1) Add hadoop-3.1.3. With XShell tool tar. GZ is imported into the software folder under the opt directory

2) Enter the Hadoop installation package path

[wangxin@hadoop102 ~]$ cd /opt/software/

3) Unzip the installation file under / opt/module

[wangxin@hadoop102 software]$ tar -zxvf hadoop-3.1.3.tar.gz -C /opt/module/

4) Check whether the decompression is successful

[wangxin@hadoop102 software]$ ls /opt/module/ hadoop-3.1.3

5) Add Hadoop to environment variable

(1) Get Hadoop installation path

[wangxin@hadoop102 hadoop-3.1.3]$ pwd /opt/module/hadoop-3.1.3

(2) Open / etc / profile d/my_ env. SH file

sudo vim /etc/profile.d/my_env.sh

In my_ env. Add the following at the end of the SH file: (shift+g)

#HADOOP_HOME export HADOOP_HOME=/opt/module/hadoop-3.1.3 export PATH=$PATH:$HADOOP_HOME/bin export PATH=$PATH:$HADOOP_HOME/sbin

(3) Exit after saving

:wq

(4) Make the modified document effective

[wangxin@hadoop102 hadoop-3.1.3]$ source /etc/profile

6) Test whether the installation is successful

[wangxin@hadoop102 hadoop-3.1.3]$ hadoop version Hadoop 3.1.3

7) Restart (restart if Hadoop command cannot be used)

[wangxin@hadoop102 hadoop-3.1.3]$ sync [wangxin@hadoop102 hadoop-3.1.3]$ sudo reboot

3.5 Hadoop directory structure

1) View Hadoop directory structure

[wangxin@hadoop102 hadoop-3.1.3]$ ll Total consumption 52 drwxr-xr-x. 2 wangxin wangxin 4096 5 June 22, 2017 bin drwxr-xr-x. 3 wangxin wangxin 4096 5 June 22, 2017 etc drwxr-xr-x. 2 wangxin wangxin 4096 5 June 22, 2017 include drwxr-xr-x. 3 wangxin wangxin 4096 5 June 22, 2017 lib drwxr-xr-x. 2 wangxin wangxin 4096 5 June 22, 2017 libexec -rw-r--r--. 1 wangxin wangxin 15429 5 June 22, 2017 LICENSE.txt -rw-r--r--. 1 wangxin wangxin 101 5 June 22, 2017 NOTICE.txt -rw-r--r--. 1 wangxin wangxin 1366 5 June 22, 2017 README.txt drwxr-xr-x. 2 wangxin wangxin 4096 5 June 22, 2017 sbin drwxr-xr-x. 4 wangxin wangxin 4096 5 June 22, 2017 share

2) Important catalogue

(1) bin directory: stores scripts for operating Hadoop related services (HDFS,YARN)

(2) etc Directory: Hadoop configuration file directory, which stores Hadoop configuration files

(3) lib Directory: the local library where Hadoop is stored (the function of compressing and decompressing data)

(4) sbin Directory: stores scripts for starting or stopping Hadoop related services

(5) share Directory: stores the dependent jar packages, documents, and official cases of Hadoop

4. Hadoop operation mode

Hadoop operation modes include: local mode, pseudo distributed mode and fully distributed mode.

Hadoop official website: http://hadoop.apache.org/

4.1 local operation mode (official wordcount)

1) Create a wcinput folder under the hadoop-3.1.3 file

[wangxin@hadoop102 hadoop-3.1.3]$ mkdir wcinput

2) Create a word under the wcinput file Txt file

[wangxin@hadoop102 hadoop-3.1.3]$ cd wcinput

3) Edit word Txt file

[wangxin@hadoop102 wcinput]$ vim word.txt

Enter the following in the file

hadoop yarn hadoop mapreduce wangxin wangxin

Save exit:: wq

4) Go back to Hadoop directory / opt/module/hadoop-3.1.3

5) Execution procedure

[wangxin@hadoop102 hadoop-3.1.3]$ hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.1.3.jar wordcount wcinput wcoutput

6) View results

[wangxin@hadoop102 hadoop-3.1.3]$ cat wcoutput/part-r-00000

See the following results:

wangxin 2 hadoop 2 mapreduce 1 yarn 1

4.2 fully distributed operation mode

4.2.1 virtual machine preparation

See Chapter 3.1 and 3.2 for details.

4.2.2 writing cluster distribution script xsync

1) scp (secure copy)

(1) scp definition:

scp can copy data between servers. (from server1 to server2)

(2) Basic grammar

scp -r $pdir/$fname $user@hadoop$host:$pdir/$fname Command recursion File path to copy/name Target user@host:Destination path/name

(3) Case practice

Premise: in Hadoop 102, Hadoop 103 and Hadoop 104, the / opt/module has been created

/opt/software two directories, and these two directories have been modified to wangxin:wangxin

sudo chown wangxin:wangxin -R /opt/module

(a) On Hadoop 102, add / opt / module / jdk1 8.0_ 212 directory to Hadoop 103.

[wangxin@hadoop102 ~]$ scp -r /opt/module/jdk1.8.0_212 wangxin@hadoop103:/opt/module

(b) On Hadoop 103, copy the / opt/module/hadoop-3.1.3 directory in Hadoop 102 to Hadoop 103.

[wangxin@hadoop103 ~]$ scp -r wangxin@hadoop102:/opt/module/hadoop-3.1.3 /opt/module/

(c) Operate on Hadoop 103 and copy all directories under / opt/module directory in Hadoop 102 to Hadoop 104.

[wangxin@hadoop103 opt]$ scp -r wangxin@hadoop102:/opt/module/* wangxin@hadoop104:/opt/module

2) rsync remote synchronization tool

rsync is mainly used for backup and mirroring. It has the advantages of high speed, avoiding copying the same content and supporting symbolic links.

Difference between rsync and scp: copying files with rsync is faster than scp. rsync only updates the difference files. scp is to copy all the files.

(1) Basic grammar

rsync -av $pdir/$fname $user@hadoop$host:$pdir/$fname The command option parameter is the path of the file to be copied/name Target user@host:Destination path/name

Option parameter description

Option function

-a archive copy

-v displays the copy process

(2) Case practice

(a) Synchronize the / opt/software directory on Hadoop 102 to the / opt/software directory on Hadoop 103 server

[wangxin@hadoop102 opt]$ rsync -av /opt/software/* wangxin@hadoop103:/opt/software

3) xsync cluster distribution script

(1) Requirement: copy files to the same directory of all nodes in a circular way

(2) Demand analysis:

(a) Original copy of rsync command:

rsync -av /opt/module root@hadoop103:/opt/

(b) Expected script:

xsync name of the file to synchronize

(c) Note: the script stored in the directory / home/wangxin/bin can be directly executed by wangxin users anywhere in the system.

(3) Script implementation

(a) Create an xsync file in the / home/wangxin/bin directory

[wangxin@hadoop102 opt]$ cd /home/wangxin [wangxin@hadoop102 ~]$ mkdir bin [wangxin@hadoop102 ~]$ cd bin [wangxin@hadoop102 bin]$ vim xsync

Write the following code in this file

#!/bin/bash

#1. Number of judgment parameters

if [ $# -lt 1 ]

then

echo Not Enough Arguement!

exit;

fi

#2. Traverse all machines in the cluster

for host in hadoop102 hadoop103 hadoop104

do

echo ==================== $host ====================

#3. Traverse all directories and send them one by one

for file in $@

do

#4. Judge whether the document exists

if [ -e $file ]

then

#5. Get parent directory

pdir=$(cd -P $(dirname $file); pwd)

#6. Get the name of the current file

fname=$(basename $file)

ssh $host "mkdir -p $pdir"

rsync -av $pdir/$fname $host:$pdir

else

echo $file does not exists!

fi

done

done

(b) The modified script xsync has execution permission

[wangxin@hadoop102 bin]$ chmod +x xsync

(c) Copy the script to / bin for global invocation

[wangxin@hadoop102 bin]$ sudo cp xsync /bin/

(d) Test script

[wangxin@hadoop102 ~]$ xsync /home/wangxin/bin [wangxin@hadoop102 bin]$ sudo xsync /bin/xsync

4.2.3 SSH non secret login configuration

1) Configure ssh

(1) Basic grammar

ssh ip address of another computer

(2) Solution to Host key verification failed during ssh connection

[wangxin@hadoop102 ~]$ ssh hadoop103

appear:

The authenticity of host '192.168.1.103 (192.168.1.103)' can't be established. RSA key fingerprint is cf:1e:de:d7:d0:4c:2d:98:60:b4:fd:ae:b1:2d:ad:06. Are you sure you want to continue connecting (yes/no)?

(3) The solution is as follows: enter yes directly

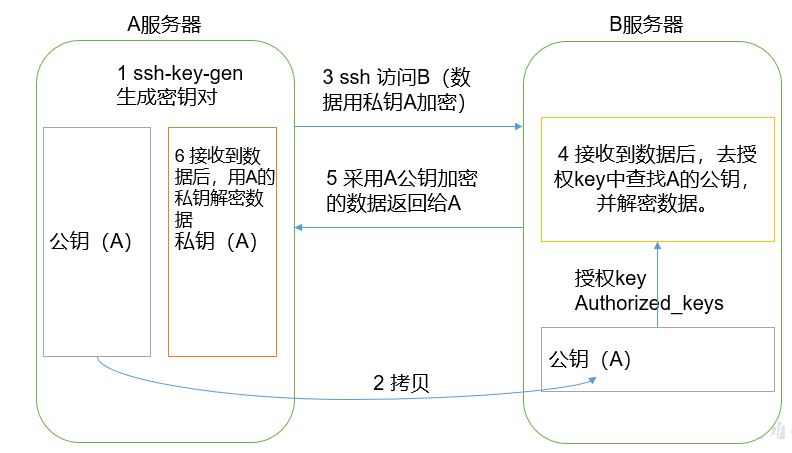

2) No key configuration

(1) Secret free login principle

(2) Generate public and private keys:

[wangxin@hadoop102 .ssh]$ ssh-keygen -t rsa

Then click (three carriage returns) and two file IDS will be generated_ RSA (private key), id_rsa.pub (public key)

(3) Copy the public key to the target machine for password free login

[wangxin@hadoop102 .ssh]$ ssh-copy-id hadoop102 [wangxin@hadoop102 .ssh]$ ssh-copy-id hadoop103 [wangxin@hadoop102 .ssh]$ ssh-copy-id hadoop104

be careful:

You also need to configure wangxin account on Hadoop 103 to log in to Hadoop 102, Hadoop 103 and Hadoop 104 servers without secret.

You also need to configure wangxin account on Hadoop 104 to log in to Hadoop 102, Hadoop 103 and Hadoop 104 servers without secret.

You also need to use the root account on Hadoop 102 to configure non secret login to Hadoop 102, Hadoop 103 and Hadoop 104;

3). Explanation of file functions under the ssh folder (~ /. ssh)

known_hosts records the public key of the computer that ssh has accessed

id_rsa generated private key

id_ rsa. Public key generated by pub

authorized_keys stores the authorized secret free login server public key

4.2.4 cluster configuration

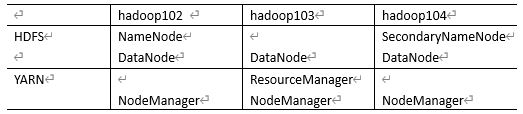

1) Cluster deployment planning

Note: NameNode and SecondaryNameNode should not be installed on the same server

Note: resource manager also consumes a lot of memory and should not be configured on the same machine as NameNode and SecondaryNameNode.

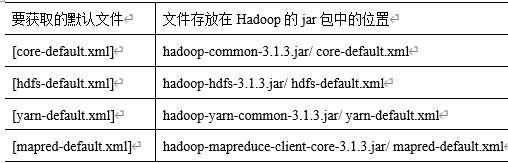

2) Profile description

Hadoop configuration files are divided into two types: default configuration files and user-defined configuration files. Only when users want to modify a default configuration value, they need to modify the user-defined configuration file and change the corresponding attribute value.

(1) Default profile:

The default file to be obtained is stored in the jar package of Hadoop

(2) Custom profile:

core-site.xml,hdfs-site.xml,yarn-site.xml,mapred-site.xml four configuration files are stored in $Hadoop_ On the path of home / etc / Hadoop, users can modify the configuration again according to the project requirements.

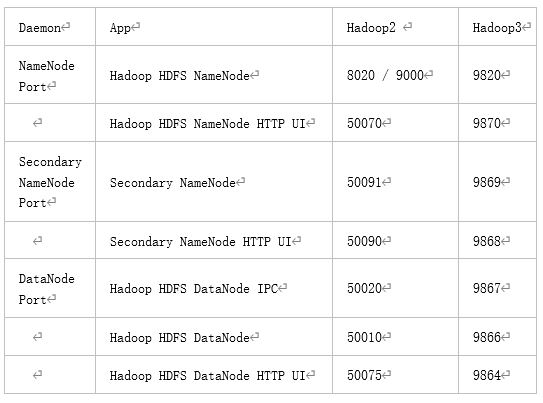

(3) Common port number Description

3) Configure cluster

(1) Core profile

Configure core site xml

[wangxin@hadoop102 ~]$ cd $HADOOP_HOME/etc/hadoop [wangxin@hadoop102 hadoop]$ vim core-site.xml

The contents of the document are as follows:

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<!-- appoint NameNode Address of -->

<property>

<name>fs.defaultFS</name>

<value>hdfs://hadoop102:9820</value>

</property>

<!-- appoint hadoop Storage directory of data -->

<property>

<name>hadoop.tmp.dir</name>

<value>/opt/module/hadoop-3.1.3/data</value>

</property>

<!-- to configure HDFS The static user used for web page login is wangxin -->

<property>

<name>hadoop.http.staticuser.user</name>

<value>wangxin</value>

</property>

<!-- Configure this wangxin(superUser)Host nodes that are allowed to be accessed through proxy -->

<property>

<name>hadoop.proxyuser.wangxin.hosts</name>

<value>*</value>

</property>

<!-- Configure this wangxin(superUser)Allow groups to which users belong through proxy -->

<property>

<name>hadoop.proxyuser.wangxin.groups</name>

<value>*</value>

</property>

<!-- Configure this wangxin(superUser)Allow users through proxy-->

<property>

<name>hadoop.proxyuser.wangxin.groups</name>

<value>*</value>

</property>

</configuration>

(2) HDFS profile

Configure HDFS site xml

[wangxin@hadoop102 hadoop]$ vim hdfs-site.xml

The contents of the document are as follows:

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<!-- nn web End access address-->

<property>

<name>dfs.namenode.http-address</name>

<value>hadoop102:9870</value>

</property>

<!-- 2nn web End access address-->

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>hadoop104:9868</value>

</property>

</configuration>

(3) YARN profile

Configure yarn site xml

[wangxin@hadoop102 hadoop]$ vim yarn-site.xml

The contents of the document are as follows:

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<!-- appoint MR go shuffle -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<!-- appoint ResourceManager Address of-->

<property>

<name>yarn.resourcemanager.hostname</name>

<value>hadoop103</value>

</property>

<!-- Inheritance of environment variables -->

<property>

<name>yarn.nodemanager.env-whitelist</name>

<value>JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_MAPRED_HOME</value>

</property>

<!-- yarn Maximum and minimum memory allowed to be allocated by the container -->

<property>

<name>yarn.scheduler.minimum-allocation-mb</name>

<value>512</value>

</property>

<property>

<name>yarn.scheduler.maximum-allocation-mb</name>

<value>4096</value>

</property>

<!-- yarn The amount of physical memory the container allows to manage -->

<property>

<name>yarn.nodemanager.resource.memory-mb</name>

<value>4096</value>

</property>

<!-- close yarn Limit check on physical memory and virtual memory -->

<property>

<name>yarn.nodemanager.pmem-check-enabled</name>

<value>false</value>

</property>

<property>

<name>yarn.nodemanager.vmem-check-enabled</name>

<value>false</value>

</property>

</configuration>

(4) MapReduce profile

Configure mapred site xml

[wangxin@hadoop102 hadoop]$ vim mapred-site.xml

The contents of the document are as follows:

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<!-- appoint MapReduce The program runs on Yarn upper -->

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

4) Distribute the configured Hadoop configuration file on the cluster

[wangxin@hadoop102 hadoop]$ xsync /opt/module/hadoop-3.1.3/etc/hadoop/

5) Go to 103 and 104 to check the distribution of documents

[wangxin@hadoop103 ~]$ cat /opt/module/hadoop-3.1.3/etc/hadoop/core-site.xml [wangxin@hadoop104 ~]$ cat /opt/module/hadoop-3.1.3/etc/hadoop/core-site.xml

4.2.5 cluster

1) Configure workers

[wangxin@hadoop102 hadoop]$ vim /opt/module/hadoop-3.1.3/etc/hadoop/workers

Add the following contents to the document:

hadoop102 hadoop103 hadoop104

Note: no space is allowed at the end of the content added in the file, and no blank line is allowed in the file.

Synchronize all node profiles

[wangxin@hadoop102 hadoop]$ xsync /opt/module/hadoop-3.1.3/etc

2) Start cluster

(1) If the cluster is started for the first time, The namenode needs to be formatted in the Hadoop 102 node (note that formatting namenode will generate a new cluster id, resulting in inconsistent cluster IDS between namenode and datanode, and the cluster cannot find past data. If the cluster reports an error during operation and needs to reformat namenode, be sure to stop the namenode and datanode processes first, and delete the data and logs directories of all machines before formatting.)

[wangxin@hadoop102 ~]$ hdfs namenode -format

(2) Start HDFS

[wangxin@hadoop102 hadoop-3.1.3]$ sbin/start-dfs.sh

(3) Start YARN on the node (Hadoop 103) where the resource manager is configured

[wangxin@hadoop103 hadoop-3.1.3]$ sbin/start-yarn.sh

(4) View the NameNode of HDFS on the Web side

(a) Enter in the browser: http://hadoop102:9870

(b) View data information stored on HDFS

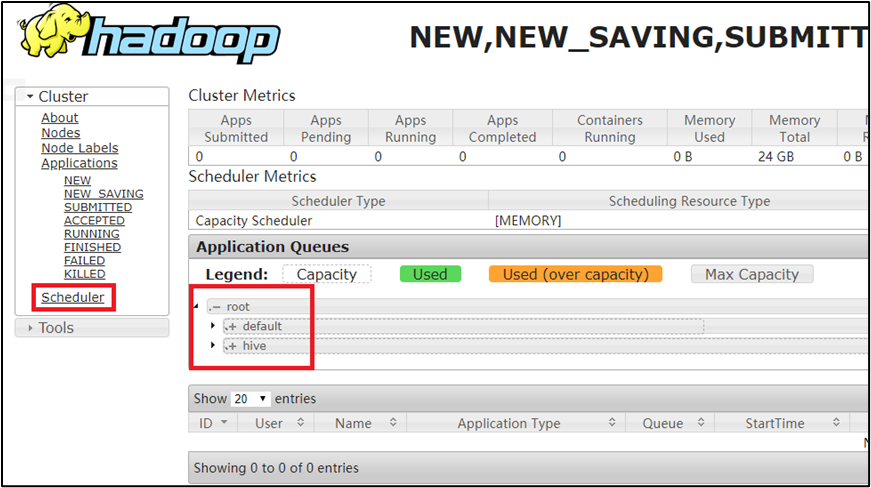

(5) View YARN's ResourceManager on the Web

(a) Enter in the browser: http://hadoop103:8088

(b) View Job information running on YARN

3) Cluster Basic test

(1) Upload files to cluster

Upload small files

[wangxin@hadoop102 ~]$ hadoop fs -mkdir /input [wangxin@hadoop102 ~]$ hadoop fs -put $HADOOP_HOME/wcinput/word.txt /input

Upload large files

[wangxin@hadoop102 ~]$ hadoop fs -put /opt/software/jdk-8u212-linux-x64.tar.gz /

(2) After uploading the file, check where the file is stored

(a) View HDFS file storage path

[wangxin@hadoop102 subdir0]$ pwd /opt/module/hadoop-3.1.3/data/dfs/data/current/BP-938951106-192.168.10.107-1495462844069/current/finalized/subdir0/subdir0

(b) View the contents of files stored on disk by HDFS

[wangxin@hadoop102 subdir0]$ cat blk_1073741825 hadoop yarn hadoop mapreduce wangxin wangxin

(3) Splicing

-rw-rw-r--. 1 wangxin wangxin 134217728 5 June 23-16:01 blk_1073741836 -rw-rw-r--. 1 wangxin wangxin 1048583 5 June 23-16:01 blk_1073741836_1012.meta -rw-rw-r--. 1 wangxin wangxin 63439959 5 June 23-16:01 blk_1073741837 -rw-rw-r--. 1 wangxin wangxin 495635 5 June 23-16:01 blk_1073741837_1013.meta [wangxin@hadoop102 subdir0]$ cat blk_1073741836>>tmp.tar.gz [wangxin@hadoop102 subdir0]$ cat blk_1073741837>>tmp.tar.gz [wangxin@hadoop102 subdir0]$ tar -zxvf tmp.tar.gz

(4) Download

[wangxin@hadoop104 software]$ hadoop fs -get /jdk-8u212-linux-x64.tar.gz ./

(5) Execute the wordcount program

[wangxin@hadoop102 hadoop-3.1.3]$ hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.1.3.jar wordcount /input /output

4.2.6 summary of cluster start / stop mode

1) Each service component starts / stops one by one

(1) Start / stop HDFS components respectively

hdfs --daemon start/stop namenode/datanode/secondarynamenode

(2) Start / stop YARN

yarn --daemon start/stop resourcemanager/nodemanager

2) Each module starts / stops separately (ssh configuration is the premise)

(1) Overall start / stop HDFS

start-dfs.sh/stop-dfs.sh

(2) Overall start / stop of YARN

start-yarn.sh/stop-yarn.sh

4.2.7 configuring the history server

In order to view the historical operation of the program, you need to configure the history server. The specific configuration steps are as follows:

1) Configure mapred site xml

[wangxin@hadoop102 hadoop]$ vim mapred-site.xml

Add the following configuration to this file.

<!-- Historical server address -->

<property>

<name>mapreduce.jobhistory.address</name>

<value>hadoop102:10020</value>

</property>

<!-- History server web End address -->

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>hadoop102:19888</value>

</property>

2) Distribution configuration

[wangxin@hadoop102 hadoop]$ xsync $HADOOP_HOME/etc/hadoop/mapred-site.xml

3) Start the history server in Hadoop 102

[wangxin@hadoop102 hadoop]$ mapred --daemon start historyserver

4) Check whether the history server is started

[atguigu@hadoop102 hadoop]$ jps

5) View JobHistory

http://hadoop102:19888/jobhistory

4.2.8 configuring log aggregation

Log aggregation concept: after the application runs, upload the program running log information to the HDFS system.

Benefits of log aggregation function: you can easily view the details of program operation, which is convenient for development and debugging.

Note: to enable the log aggregation function, you need to restart NodeManager, ResourceManager and HistoryServer.

To enable the log aggregation function, the specific steps are as follows:

1) Configure yarn site xml

[wangxin@hadoop102 hadoop]$ vim yarn-site.xml

Add the following configuration to this file.

<!-- Enable log aggregation -->

<property>

<name>yarn.log-aggregation-enable</name>

<value>true</value>

</property>

<!-- Set log aggregation server address -->

<property>

<name>yarn.log.server.url</name>

<value>http://hadoop102:19888/jobhistory/logs</value>

</property>

<!-- Set the log retention time to 7 days -->

<property>

<name>yarn.log-aggregation.retain-seconds</name>

<value>604800</value>

</property>

2) Distribution configuration

[wangxin@hadoop102 hadoop]$ xsync $HADOOP_HOME/etc/hadoop/yarn-site.xml

3) Close NodeManager, ResourceManager, and HistoryServer

[wangxin@hadoop103 ~]$ stop-yarn.sh [wangxin@hadoop102 ~]$ mapred --daemon stop historyserver

4) Start NodeManager, ResourceManage, and HistoryServer

[wangxin@hadoop103 ~]$ start-yarn.sh [wangxin@hadoop102 ~]$ mapred --daemon start historyserver

5) Delete existing output files on HDFS

[wangxin@hadoop102 ~]$ hadoop fs -rm -r /output

6) Execute WordCount program

[wangxin@hadoop102 ~]$ hadoop jar $HADOOP_HOME/share/hadoop/mapreduce/hadoop-mapreduce-examples-3.1.3.jar wordcount /input /output

7) Check the log,

http://hadoop102:19888/jobhistory

4.2.9 writing common scripts for hadoop clusters

1) View the java process script of three servers: jpsall

[wangxin@hadoop102 ~]$ cd /home/wangxin/bin [wangxin@hadoop102 ~]$ vim jpsall

Then enter

#!/bin/bash

for host in hadoop102 hadoop103 hadoop104

do

echo =============== $host ===============

ssh $host jps $@ | grep -v Jps

done

Exit after saving, and then grant script execution permission

[wangxin@hadoop102 bin]$ chmod +x jpsall

2) hadoop cluster startup and shutdown script (including hdfs, yarn and historyserver): myhadoop sh

[wangxin@hadoop102 ~]$ cd /home/wangxin/bin [wangxin@hadoop102 ~]$ vim myhadoop.sh

Then enter

#!/bin/bash

if [ $# -lt 1 ]

then

echo "No Args Input..."

exit ;

fi

case $1 in

"start")

echo " =================== start-up hadoop colony ==================="

echo " --------------- start-up hdfs ---------------"

ssh hadoop102 "/opt/module/hadoop-3.1.3/sbin/start-dfs.sh"

echo " --------------- start-up yarn ---------------"

ssh hadoop103 "/opt/module/hadoop-3.1.3/sbin/start-yarn.sh"

echo " --------------- start-up historyserver ---------------"

ssh hadoop102 "/opt/module/hadoop-3.1.3/bin/mapred --daemon start historyserver"

;;

"stop")

echo " =================== close hadoop colony ==================="

echo " --------------- close historyserver ---------------"

ssh hadoop102 "/opt/module/hadoop-3.1.3/bin/mapred --daemon stop historyserver"

echo " --------------- close yarn ---------------"

ssh hadoop103 "/opt/module/hadoop-3.1.3/sbin/stop-yarn.sh"

echo " --------------- close hdfs ---------------"

ssh hadoop102 "/opt/module/hadoop-3.1.3/sbin/stop-dfs.sh"

;;

*)

echo "Input Args Error..."

;;

esac

Exit after saving, and then grant script execution permission

[wangxin@hadoop102 bin]$ chmod +x myhadoop.sh

3) Distribute the / home/wangxin/bin directory to ensure that custom scripts can be used on all three machines

[wangxin@hadoop102 ~]$ xsync /home/wangxin/bin/

4.2.10 cluster time synchronization

Time synchronization method: find a machine as a time server, and all machines will synchronize with the cluster time regularly. For example, synchronize the time every ten minutes.

Specific operation of configuring time synchronization:

1) Time server configuration (must be root)

(0) view ntpd service status and startup and self startup status of all nodes

[wangxin@hadoop102 ~]$ sudo systemctl status ntpd [wangxin@hadoop102 ~]$ sudo systemctl is-enabled ntpd

(1) Turn off ntpd service and self start on all nodes

[wangxin@hadoop102 ~]$ sudo systemctl stop ntpd [wangxin@hadoop102 ~]$ sudo systemctl disable ntpd

(2) Modify NTP of Hadoop 102 Conf configuration file (Hadoop 102 should be used as the time server)

[wangxin@hadoop102 ~]$ sudo vim /etc/ntp.conf

The amendments are as follows

a) Modify 1 (authorize all machines in the 192.168.1.0-192.168.1.255 network segment to query and synchronize time from this machine)

#restrict 192.168.1.0 mask 255.255.255.0 nomodify notrap

restrict 192.168.1.0 mask 255.255.255.0 nomodify notrap

b) Modification 2 (cluster in LAN, do not use time on other Internet)

server 0.centos.pool.ntp.org iburst

server 1.centos.pool.ntp.org iburst

server 2.centos.pool.ntp.org iburst

server 3.centos.pool.ntp.org iburst

by

#server 0.centos.pool.ntp.org iburst

#server 1.centos.pool.ntp.org iburst

#server 2.centos.pool.ntp.org iburst

#server 3.centos.pool.ntp.org iburst

c) Add 3 (when the node loses network connection, the local time can still be used as the time server to provide time synchronization for other nodes in the cluster)

server 127.127.1.0

fudge 127.127.1.0 stratum 10

(3) Modify the / etc/sysconfig/ntpd file of Hadoop 102

[wangxin@hadoop102 ~]$ sudo vim /etc/sysconfig/ntpd

Add the following contents (synchronize the hardware time with the system time)

SYNC_HWCLOCK=yes

(4) Restart ntpd service

[wangxin@hadoop102 ~]$ sudo systemctl start ntpd

(5) Set ntpd service startup

[wangxin@hadoop102 ~]$ sudo systemctl enable ntpd

2) Other machine configurations (must be root)

(1) Configure other machines to synchronize with the time server once every 10 minutes

[wangxin@hadoop103 ~]$ sudo crontab -e

The scheduled tasks are as follows:

*/10 * * * * /usr/sbin/ntpdate hadoop102

(2) Modify any machine time

[wangxin@hadoop103 ~]$ sudo date -s "2017-9-11 11:11:11"

(3) Check whether the machine is synchronized with the time server in ten minutes

[wangxin@hadoop103 ~]$ sudo date

5. HDFS

5.1 HDFS overview

5.1.1 HDFS output background and definition

HDFS generation background

With the increasing amount of data, if there is not enough data in one operating system, it will be allocated to more disks managed by the operating system, but it is inconvenient to manage and maintain. There is an urgent need for a system to manage the files on multiple machines, which is the distributed file management system. HDFS is just one kind of distributed file management system.

HDFS definition

HDFS (Hadoop Distributed File System), which is a file system used to store files and locate files through the directory tree; Secondly, it is distributed, and many servers unite to realize its functions. The servers in the cluster have their own roles.

HDFS usage scenario: it is suitable for the scenario of one write and multiple read, and does not support file modification. Suitable for data analysis, not suitable for network disk application.

5.1.2 advantages and disadvantages of HDFS

advantage:

1) High fault tolerance

(1) Data is automatically saved in multiple copies. It improves fault tolerance by adding copies

(2) After a copy is lost, it can be recovered automatically

2) Suitable for handling big data

(1) Data scale: it can handle data with data scale of GB, TB or even PB

(2) File size: it can handle a large number of files with a size of more than one million

3) It can be built on cheap machines and improve reliability through multi copy mechanism

Disadvantages:

1) It is not suitable for low latency data access, such as millisecond data storage

2) Unable to efficiently store a large number of small files

(1) If a large number of small files are stored, it will occupy a lot of memory of NameNode to store file directory and block information. This is not desirable because NameNode memory is always limited

(2) The addressing time of small file storage will exceed the reading time, which violates the design goal of HDFS.

3) Concurrent writing and random file modification are not supported

(1) A file can only have one write, and multiple threads are not allowed to write at the same time;

(2) Only data append is supported, and random modification of files is not supported.

5.1.3 HDFS composition architecture

1) NameNode (nn): Master, which is a supervisor and manager.

(1) Manage the namespace of HDFS;

(2) Configure replica policy;

(3) Managing Block mapping information;

(4) Handle client read and write requests.

2) DataNode: Slave. The NameNode issues a command and the DataNode performs the actual operation.

(1) Store actual data blocks

(2) Perform read / write operations on data blocks.

3) Client: the client.

(1) File segmentation. When uploading files to HDFS, the Client divides the files into blocks one by one, and then uploads them;

(2) Interact with NameNode to obtain the location information of the file;

(3) Interact with DataNode to read or write data;

(4) The Client provides some commands to manage HDFS, such as NameNode formatting;

(5) The Client can access HDFS through some commands, such as adding, deleting, checking and modifying HDFS;

4) Secondary NameNode: not a hot standby of NameNode. When the NameNode hangs, it cannot immediately replace the NameNode and provide services.

(1) Assist NameNode to share its workload, such as regularly merging Fsimage and Edits and pushing them to NameNode;

(2) In case of emergency, NameNode can be recovered.

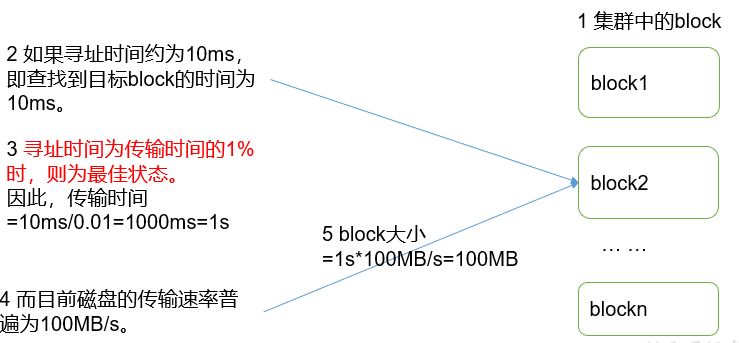

5.1.4 HDFS file block size

The files in HDFS are physically stored in blocks. The size of blocks can be specified through the configuration parameter (DFS. Block size). The default size is Hadoop 2 128M in the X version and 64M in the old version.

Why can't the size of the block be set too small or too large?

(1) The block setting of HDFS is too small, which will increase the addressing time. The program has been looking for the starting position of the block

(2) If the block is set too large, the time to transfer data from the disk will be significantly greater than the time required to locate the start position of the block. As a result, the program will be very slow in processing this data.

Summary: the size setting of HDFS block mainly depends on the disk transfer rate.

5.2 Shell operation of HDFS

5.2.1 basic grammar

hadoop fs specific command OR hdfs dfs specific command

The two are identical.

5.2.2 complete command

[wangxin@hadoop102 hadoop-3.1.3]$ bin/hadoop fs

[-appendToFile <localsrc> ... <dst>]

[-cat [-ignoreCrc] <src> ...]

[-checksum <src> ...]

[-chgrp [-R] GROUP PATH...]

[-chmod [-R] <MODE[,MODE]... | OCTALMODE> PATH...]

[-chown [-R] [OWNER][:[GROUP]] PATH...]

[-copyFromLocal [-f] [-p] <localsrc> ... <dst>]

[-copyToLocal [-p] [-ignoreCrc] [-crc] <src> ... <localdst>]

[-count [-q] <path> ...]

[-cp [-f] [-p] <src> ... <dst>]

[-createSnapshot <snapshotDir> [<snapshotName>]]

[-deleteSnapshot <snapshotDir> <snapshotName>]

[-df [-h] [<path> ...]]

[-du [-s] [-h] <path> ...]

[-expunge]

[-get [-p] [-ignoreCrc] [-crc] <src> ... <localdst>]

[-getfacl [-R] <path>]

[-getmerge [-nl] <src> <localdst>]

[-help [cmd ...]]

[-ls [-d] [-h] [-R] [<path> ...]]

[-mkdir [-p] <path> ...]

[-moveFromLocal <localsrc> ... <dst>]

[-moveToLocal <src> <localdst>]

[-mv <src> ... <dst>]

[-put [-f] [-p] <localsrc> ... <dst>]

[-renameSnapshot <snapshotDir> <oldName> <newName>]

[-rm [-f] [-r|-R] [-skipTrash] <src> ...]

[-rmdir [--ignore-fail-on-non-empty] <dir> ...]

[-setfacl [-R] [{-b|-k} {-m|-x <acl_spec>} <path>]|[--set <acl_spec> <path>]]

[-setrep [-R] [-w] <rep> <path> ...]

[-stat [format] <path> ...]

[-tail [-f] <file>]

[-test -[defsz] <path>]

[-text [-ignoreCrc] <src> ...]

[-touchz <path> ...]

[-usage [cmd ...]]

5.2.3 practical operation of common commands

2.3.1 preparation

1) Start Hadoop cluster (convenient for subsequent testing)

[wangxin@hadoop102 hadoop-3.1.3]$ sbin/start-dfs.sh [wangxin@hadoop103 hadoop-3.1.3]$ sbin/start-yarn.sh

2) - help: output this command parameter

[wangxin@hadoop102 hadoop-3.1.3]$ hadoop fs -help rm

2.3.2 upload

1) - moveFromLocal: cut and paste from local to HDFS

[wangxin@hadoop102 hadoop-3.1.3]$ touch a.txt [wangxin@hadoop102 hadoop-3.1.3]$ hadoop fs -moveFromLocal ./a.txt /test

2) - copyFromLocal: copy files from the local file system to the HDFS path

[wangxin@hadoop102 hadoop-3.1.3]$ hadoop fs -copyFromLocal README.txt /

3) - appendToFile: append a file to the end of an existing file

[wangxin@hadoop102 hadoop-3.1.3]$ touch b.txt [wangxin@hadoop102 hadoop-3.1.3]$ vi b.txt

input

Hello, Hadoop!

[wangxin@hadoop102 hadoop-3.1.3]$ hadoop fs -appendToFile b.txt /test/a.txt

4) - put: equivalent to copyFromLocal

[wangxin@hadoop102 hadoop-3.1.3]$ hadoop fs -put ./b.txt /user/wangxin/test/

2.3.3 Download

1) - copyToLocal: copy from HDFS to local

[wangxin@hadoop102 hadoop-3.1.3]$ hadoop fs -copyToLocal /test/a.txt ./

2) - get: equivalent to copyToLocal, which means downloading files from HDFS to local

[wangxin@hadoop102 hadoop-3.1.3]$ hadoop fs -get /test/a.txt ./

3) - getmerge: merge and download multiple files. For example, there are multiple files in HDFS directory / user/wangxin/test: log 1, log. 2,log. 3,…

[wangxin@hadoop102 hadoop-3.1.3]$ hadoop fs -getmerge /user/wangxin/test/* ./logs.txt

2.3.4 HDFS direct operation

1) - ls: display directory information

[wangxin@hadoop102 hadoop-3.1.3]$ hadoop fs -ls /

2) - mkdir: create directory on HDFS

[wangxin@hadoop102 hadoop-3.1.3]$ hadoop fs -mkdir -p /monash/FIT

3) - cat: displays the contents of the file

[wangxin@hadoop102 hadoop-3.1.3]$ hadoop fs -cat /test/b.txt

4) - chgrp, - chmod, - chown: the same as in Linux file system. Modify the permissions of the file

[wangxin@hadoop102 hadoop-3.1.3]$ hadoop fs -chmod 666 /test/a.txt [wangxin@hadoop102 hadoop-3.1.3]$ hadoop fs -chown wangxin:wangxin /test/b.txt

5) - cp: copy from one path of HDFS to another path of HDFS

[wangxin@hadoop102 hadoop-3.1.3]$ hadoop fs -cp /test/b.txt /copys.txt

6) - mv: move files in HDFS directory

[wangxin@hadoop102 hadoop-3.1.3]$ hadoop fs -mv /copys.txt /monash/FIT

7) - tail: displays data at the end 1kb of a file

[wangxin@hadoop102 hadoop-3.1.3]$ hadoop fs -tail /sanguo/shuguo/kongming.txt

8) - rm: delete a file or folder

[wangxin@hadoop102 hadoop-3.1.3]$ hadoop fs -rm /test/a.txt

9) - rmdir: delete empty directory

[wangxin@hadoop102 hadoop-3.1.3]$ hadoop fs -mkdir /test1 [wangxin@hadoop102 hadoop-3.1.3]$ hadoop fs -rmdir /test2

10) - du statistics folder size information

[wangxin@hadoop102 hadoop-3.1.3]$ hadoop fs -du -s -h /user/wangxin/test 2.7 K /user/wangxin/test [wangxin@hadoop102 hadoop-3.1.3]$ hadoop fs -du -h /user/wangxin/test 1.3 K /user/wangxin/test/README.txt 15 /user/wangxin/test/hello.txt 1.4 K /user/wangxin/test/world.txt

11) - setrep: sets the number of copies of files in HDFS

[wangxin@hadoop102 hadoop-3.1.3]$ hadoop fs -setrep 10 /test/b.txt

The number of replicas set here is only recorded in the metadata of NameNode. Whether there will be so many replicas depends on the number of datanodes. At present, there are only three devices, and there are only three replicas at most. Only when the number of nodes increases to 10, the number of replicas can reach 10.

5.3 HDFS client operation

5.3.1 prepare Windows Hadoop development environment

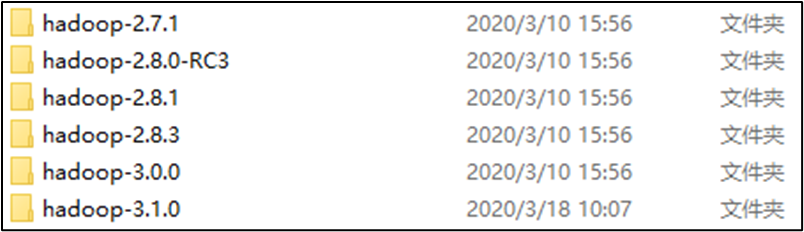

1) Find the Windows dependency directory of the downloaded Hadoop and open it:

Select Hadoop-3.1.0 and copy it to other places (such as disk D).

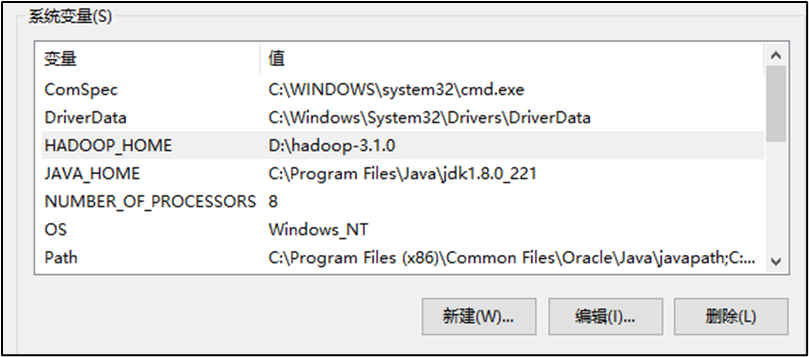

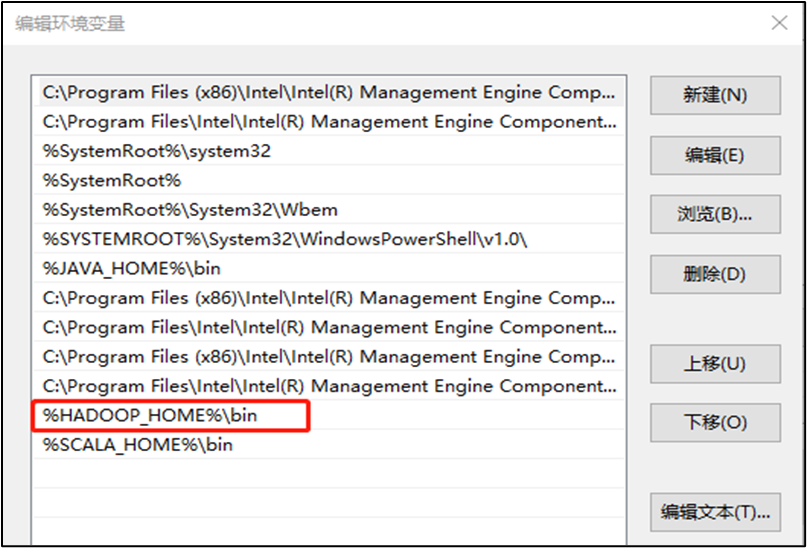

2) Configure HADOOP_HOME environment variable.

3) Configure the Path environment variable. Then restart the computer

4) Open Intellij, create a Maven project HdfsClientDemo, and import the corresponding dependent coordinates + log addition

<dependencies>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>4.12</version>

</dependency>

<dependency>

<groupId>org.apache.logging.log4j</groupId>

<artifactId>log4j-slf4j-impl</artifactId>

<version>2.12.0</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-client</artifactId>

<version>3.1.3</version>

</dependency>

</dependencies>

In the src/main/resources directory of the project, create a new file named "log4j2.xml", and fill in the file

<?xml version="1.0" encoding="UTF-8"?>

<Configuration status="error" strict="true" name="XMLConfig">

<Appenders>

<!-- The type name is Console,The name must be an attribute -->

<Appender type="Console" name="STDOUT">

<!-- Layout as PatternLayout In a way,

The output style is[INFO] [2018-01-22 17:34:01][org.test.Console]I'm here -->

<Layout type="PatternLayout"

pattern="[%p] [%d{yyyy-MM-dd HH:mm:ss}][%c{10}]%m%n" />

</Appender>

</Appenders>

<Loggers>

<!-- Additivity is false -->

<Logger name="test" level="info" additivity="false">

<AppenderRef ref="STDOUT" />

</Logger>

<!-- root loggerConfig set up -->

<Root level="info">

<AppenderRef ref="STDOUT" />

</Root>

</Loggers>

</Configuration>

5) Create package name: com wangxin. hdfs

6) Create HdfsClient class

public class HdfsClient{

@Test

public void testMkdirs() throws IOException, InterruptedException, URISyntaxException{

// 1 get file system

Configuration configuration = new Configuration();

// Configure to run on a cluster

// configuration.set("fs.defaultFS", "hdfs://hadoop102:9820");

// FileSystem fs = FileSystem.get(configuration);

FileSystem fs = FileSystem.get(new URI("hdfs://hadoop102:9820"), configuration, "wangxin");

// 2 create directory

fs.mkdirs(new Path("/test/hadoop-api"));

// 3 close resources

fs.close();

}

}

7) Execution procedure

The user name needs to be configured at runtime

When the client operates HDFS, it has a user identity. By default, the HDFS client API will obtain a parameter from the JVM as its user identity: - dhaoop_ USER_ Name = wangxin, wangxin is the user name.

5.3.2 API operation of HDFS

HDFS file upload (test parameter priority)

1) Write source code

@Test

public void testCopyFromLocalFile() throws IOException, InterruptedException, URISyntaxException {

// 1 get file system

Configuration configuration = new Configuration();

configuration.set("dfs.replication", "2");

FileSystem fs = FileSystem.get(new URI("hdfs://hadoop102:8020"), configuration, "wangxin");

// 2 upload files

fs.copyFromLocalFile(new Path("E:/hello.txt"), new Path("/hello.txt"));

// 3 close resources

fs.close();

System.out.println("over");

}

2) HDFS site Copy the XML to the root directory of the project

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

</configuration>

3) Parameter priority

Parameter priority: (1) value set in client code > (2) user defined configuration file under ClassPath > (3) then custom configuration of server (xxx site. XML) > (4) default configuration of server (XXX default. XML)

HDFS file download

@Test

public void testCopyToLocalFile() throws IOException, InterruptedException, URISyntaxException{

// 1 get file system

Configuration configuration = new Configuration();

FileSystem fs = FileSystem.get(new URI("hdfs://hadoop102:9820"), configuration, "wangxin");

// 2. Perform the download operation

// boolean delSrc refers to whether to delete the original file

// Path src refers to the path of the file to be downloaded

// Path dst refers to the path to download the file to

// boolean useRawLocalFileSystem whether to enable file verification

fs.copyToLocalFile(false, new Path("/hello.txt"), new Path("e:/helloworld.txt"), true);

// 3 close resources

fs.close();

}

HDFS delete files and directories

@Test

public void testDelete() throws IOException, InterruptedException, URISyntaxException{

// 1 get file system

Configuration configuration = new Configuration();

FileSystem fs = FileSystem.get(new URI("hdfs://hadoop102:9820"), configuration, "wangxin");

// 2 execute deletion

fs.delete(new Path("/test1/"), true);

// 3 close resources

fs.close();

}

Renaming and moving HDFS files

@Test

public void testRename() throws IOException, InterruptedException, URISyntaxException{

// 1 get file system

Configuration configuration = new Configuration();

FileSystem fs = FileSystem.get(new URI("hdfs://hadoop102:9820"), configuration, "wangxin");

// 2. Modify the file name

fs.rename(new Path("/hello.txt"), new Path("/test.txt"));

// 3 close resources

fs.close();

}

HDFS file details view

View file name, permission, length and block information

@Test

public void testListFiles() throws IOException, InterruptedException, URISyntaxException{

// 1 get file system

Configuration configuration = new Configuration();

FileSystem fs = FileSystem.get(new URI("hdfs://hadoop102:9820"), configuration, "wangxin");

// 2 obtain document details

RemoteIterator<LocatedFileStatus> listFiles = fs.listFiles(new Path("/"), true);

while(listFiles.hasNext()){

LocatedFileStatus status = listFiles.next();

// Output details

// File name

System.out.println(status.getPath().getName());

// length

System.out.println(status.getLen());

// jurisdiction

System.out.println(status.getPermission());

// grouping

System.out.println(status.getGroup());

// Get stored block information

BlockLocation[] blockLocations = status.getBlockLocations();

for (BlockLocation blockLocation : blockLocations) {

// Gets the host node of the block storage

String[] hosts = blockLocation.getHosts();

for (String host : hosts) {

System.out.println(host);

}

}

System.out.println("-----------Division line of monitor----------");

}

// 3 close resources

fs.close();

}

HDFS file and folder judgment

@Test

public void testListStatus() throws IOException, InterruptedException, URISyntaxException{

// 1 get file configuration information

Configuration configuration = new Configuration();

FileSystem fs = FileSystem.get(new URI("hdfs://hadoop102:9820"), configuration, "wangxin");

// 2. Judge whether it is a file or a folder

FileStatus[] listStatus = fs.listStatus(new Path("/"));

for (FileStatus fileStatus : listStatus) {

// If it's a file

if (fileStatus.isFile()) {

System.out.println("f:"+fileStatus.getPath().getName());

}else {

System.out.println("d:"+fileStatus.getPath().getName());

}

}

// 3 close resources

fs.close();

}

5.4 data flow of HDFS

5.4.1 HDFS data writing process

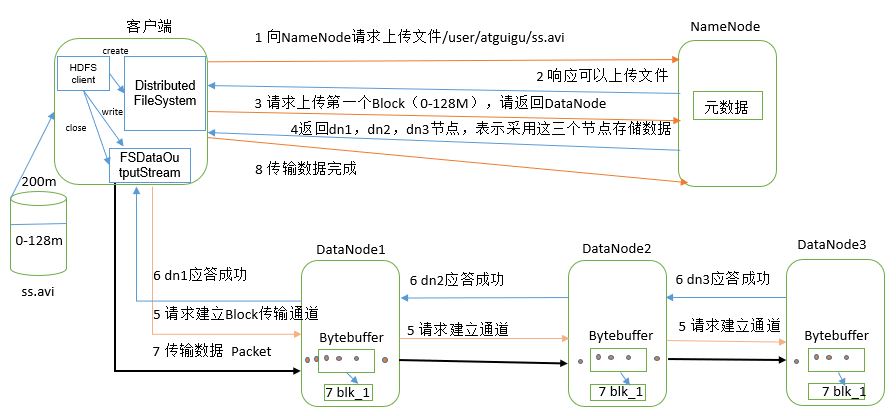

(1) The client requests the NameNode to upload files through the distributed file system module. The NameNode checks whether the target file exists and whether the parent directory exists.

(2) NameNode returns whether it can be uploaded.

(3) The client requests which DataNode servers the first Block is uploaded to.

(4) NameNode returns three DataNode nodes: dn1, dn2 and dn3.

(5) The client requests dn1 to upload data through FSDataOutputStream module. dn1 will continue to call dn2 after receiving the request, and then dn2 will call dn3 to complete the establishment of this communication pipeline.

(6) dn1, dn2 and dn3 answer the client level by level.

(7) The client starts to upload the first Block to dn1 (first read the data from the disk and put it into a local memory cache). With packets as the unit, dn1 will send a packet to dn2 and dn2 to dn3; Every packet transmitted by dn1 will be put into a reply queue to wait for a reply.

(8) After the transmission of a Block is completed, the client again requests the NameNode to upload the server of the second Block. (repeat steps 3-7).

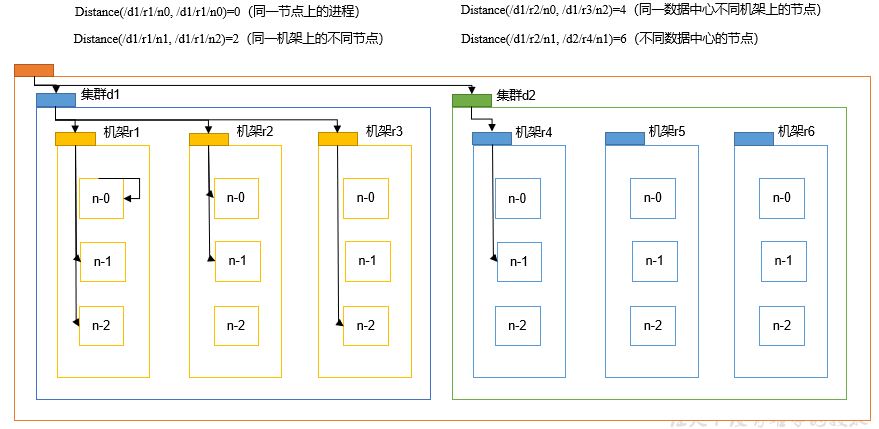

5.4.2 network topology - node distance calculation

In the process of HDFS writing data, NameNode will select the DataNode closest to the data to be uploaded to receive the data. So how to calculate the nearest distance?

Node distance: the sum of the distances from two nodes to the nearest common ancestor.

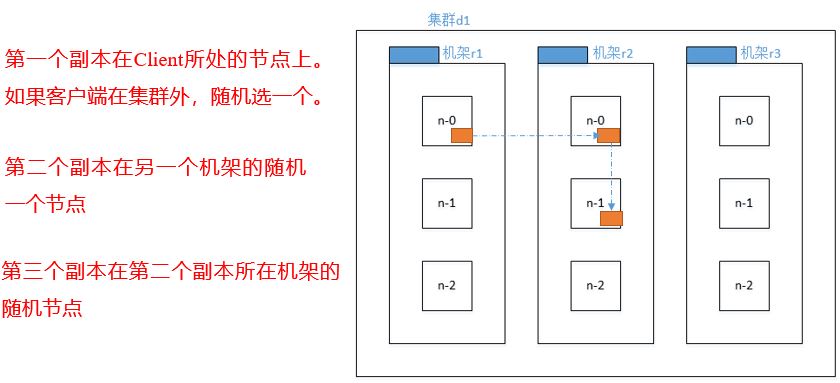

5.4.3 rack awareness (replica storage node selection)

1) Official IP address

Rack sensing description

http://hadoop.apache.org/docs/r3.1.3/hadoop-project-dist/hadoop-hdfs/HdfsDesign.html#Data_Replication

For the common case, when the replication factor is three, HDFS's placement policy is to put one replica on the local machine if the writer is on a datanode, otherwise on a random datanode, another replica on a node in a different (remote) rack, and the last on a different node in the same remote rack. This policy cuts the inter-rack write traffic which generally improves write performance. The chance of rack failure is far less than that of node failure; this policy does not impact data reliability and availability guarantees. However, it does reduce the aggregate network bandwidth used when reading data since a block is placed in only two unique racks rather than three. With this policy, the replicas of a file do not evenly distribute across the racks. One third of replicas are on one node, two thirds of replicas are on one rack, and the other third are evenly distributed across the remaining racks. This policy improves write performance without compromising data reliability or read performance.

Hadoop3.1.3 replica node selection

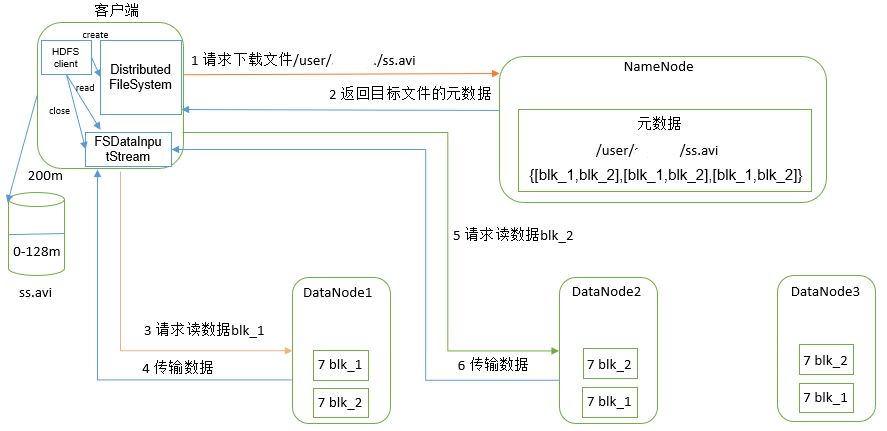

5.4.4 HDFS data reading process

(1) The client requests the NameNode to download the file through the distributedfile system. The NameNode finds the DataNode address where the file block is located by querying the metadata.

(2) Select a DataNode (proximity principle, then random) server and request to read data.

(3) DataNode starts to transmit data to the client (read the data input stream from the disk and verify it in packets).

(4) The client receives in packets, caches them locally, and then writes them to the target file.

5.5 NameNode and SecondaryNameNode

5.5.1 working mechanism of NN and 2NN

Where is the metadata stored in NameNode?

First of all, let's assume that if it is stored in the disk of the NameNode node, it must be inefficient because it often needs random access and responds to customer requests. Therefore, metadata needs to be stored in memory. However, if it only exists in memory, once the power is cut off and the metadata is lost, the whole cluster will not work. Therefore, an FsImage that backs up metadata on disk is generated.

This will bring new problems. When the metadata in memory is updated, if FsImage is updated at the same time, the efficiency will be too low. However, if it is not updated, consistency problems will occur. Once the NameNode node is powered off, data loss will occur. Therefore, the edit file is introduced (only additional operation is performed, which is very efficient). Whenever metadata is updated or added, the metadata in memory is modified and appended to Edits. In this way, once the NameNode node is powered off, metadata can be synthesized through the combination of FsImage and Edits.

However, if data is added to Edits for a long time, the file data will be too large, the efficiency will be reduced, and once the power is off, the time required to recover metadata will be too long. Therefore, it is necessary to merge FsImage and Edits regularly. If this operation is completed by NameNode node, the efficiency will be too low. Therefore, a new node, SecondaryNamenode, is introduced to merge FsImage and Edits.

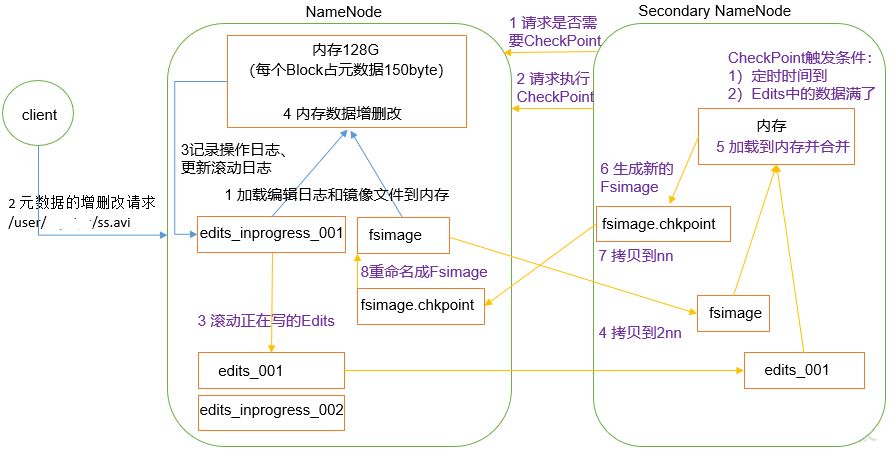

NameNode working mechanism

1) Phase 1: NameNode startup

(1) After the NameNode formatting is started for the first time, the Fsimage and Edits files are created. If it is not the first time to start, directly load the editing log and image file into memory.

(2) The request of the client to add, delete or modify metadata.

(3) NameNode records the operation log and updates the rolling log.

(4) NameNode adds, deletes and modifies metadata in memory.

2) The second stage: the second namenode works

(1) The Secondary NameNode asks if the NameNode needs a CheckPoint. Directly bring back the NameNode check result.

(2) Secondary NameNode requests CheckPoint.

(3) NameNode scrolls the Edits log being written.

(4) Copy the editing log and image file before scrolling to the Secondary NameNode.

(5) The Secondary NameNode loads the editing log and image files into memory and merges them.

(6) Generate a new image file fsimage chkpoint.

(7) Copy fsimage Chkpoint to NameNode.

(8) NameNode will fsimage Rename chkpoint to fsimage.

Detailed explanation of working mechanism of NN and 2NN:

Fsimage: file formed after metadata in NameNode memory is serialized.

Edits: records every step of the client's operation to update metadata information (metadata can be calculated through edits).

When the NameNode starts, first scroll through the Edits and generate an empty Edits Inprogress, and then load Edits and Fsimage into memory. At this time, NameNode memory holds the latest metadata information. The Client starts sending metadata addition, deletion and modification requests to the NameNode, and the operations of these requests will be recorded in Edits In progress (the operation of querying metadata will not be recorded in Edits, because the query operation will not change the metadata information). If NameNode hangs up at this time, the metadata information will be read from Edits after restart. Then, NameNode will add, delete and modify metadata in memory.

Since there will be more and more operations recorded in Edits and the edit file will be larger and larger, the NameNode will be very slow when starting to load Edits, so it is necessary to merge Edits and Fsimage (the so-called merging is to load Edits and Fsimage into memory, follow the operations in Edits step by step, and finally form a new Fsimage). The function of secondary NameNode is to help NameNode merge Edits and Fsimage.

The SecondaryNameNode will first ask whether the NameNode needs a CheckPoint (trigger the CheckPoint by satisfying either of the two conditions: the timing time is up and the data in the Edits is full). Directly bring back the NameNode check result. When the SecondaryNameNode performs the CheckPoint operation, it will first make the NameNode scroll the Edits and generate an empty Edits In progress, the purpose of scrolling Edits is to mark Edits, and all new operations in the future will be written to Edits In progress, other unconsolidated Edits and Fsimage will be copied to the local of the SecondaryNameNode, and then the copied Edits and Fsimage will be loaded into memory for merging to generate Fsimage Chkpoint, and then Fsimage Copy chkpoint to NameNode, rename it to Fsimage and replace the original Fsimage. When the NameNode starts, it only needs to load the previously unconsolidated Edits and Fsimage, because the metadata information in the merged Edits has been recorded in Fsimage.

5.5.2 analysis of fsimage and Edits

Fsimage and Edits concepts

After the NameNode is formatted, the following files will be generated in the / opt/module/hadoop-3.1.3/data/tmp/dfs/name/current directory

(1) Fsimage file: a permanent checkpoint of HDFS file system metadata, which contains the serialization information of all directories and file inode s of HDFS file system.

(2) Edits file: the path to store all update operations of the HDFS file system. All write operations performed by the file system client will first be recorded in the edits file.

(3)seen_ The txid file holds a number, which is the last edit_ Number of

(4) Each time the NameNode starts, it will read the Fsimage file into the memory and load the update operation in the Edits to ensure that the metadata information in the memory is up-to-date and synchronous. It can be seen that the Fsimage and Edits files are merged when the NameNode starts.

1) oiv view Fsimage file

(1) View oiv and oev commands

[wangxin@hadoop102 current]$ hdfs oiv apply the offline fsimage viewer to an fsimage oev apply the offline edits viewer to an edits file

(2) Basic grammar

hdfs oiv -p file type -i Mirror file -o Converted file output path

(3) Case practice

[wangxin@hadoop102 current]$ pwd /opt/module/hadoop-3.1.3/data/dfs/name/current [wangxin@hadoop102 current]$ hdfs oiv -p XML -i fsimage_0000000000000000025 -o /opt/module/hadoop-3.1.3/fsimage.xml [wangxin@hadoop102 current]$ cat /opt/module/hadoop-3.1.3/fsimage.xml

Thinking: it can be seen that there is no DataNode corresponding to the record block in Fsimage. Why?

After the cluster starts, the DataNode is required to report the data block information and report it again after an interval of time.

2) oev view Edits file

(1) Basic grammar

hdfs oev -p file type -i Edit log -o Converted file output path

(2) Case practice

[wangxin@hadoop102 current]$ hdfs oev -p XML -i edits_0000000000000000012-0000000000000000013 -o /opt/module/hadoop-3.1.3/edits.xml [wangxin@hadoop102 current]$ cat /opt/module/hadoop-3.1.3/edits.xml

5.5.3 CheckPoint time setting

1) Typically, the SecondaryNameNode executes every hour.

[hdfs-default.xml]

<property> <name>dfs.namenode.checkpoint.period</name> <value>3600s</value> </property>

2) Check the number of operations once a minute. When the number of operations reaches 1 million, the SecondaryNameNode executes once.

<property> <name>dfs.namenode.checkpoint.txns</name> <value>1000000</value> <description>Operation action times</description> </property> <property> <name>dfs.namenode.checkpoint.check.period</name> <value>60s</value> <description> 1 Number of operations per minute</description> </property >

5.5.4 NameNode fault handling

After the NameNode fails, the following two methods can be used to recover the data.

1) Copy the data in the SecondaryNameNode to the directory where the NameNode stores the data;

(1) kill -9 NameNode process

(2) Delete the data stored in NameNode (/ opt/module/hadoop-3.1.3/data/tmp/dfs/name)

[wangxin@hadoop102 hadoop-3.1.3]$ rm -rf /opt/module/hadoop-3.1.3/data/dfs/name/*

(3) Copy the data in the SecondaryNameNode to the original NameNode storage data directory

[wangxin@hadoop102 dfs]$ scp -r wangxin@hadoop104:/opt/module/hadoop-3.1.3/data/dfs/namesecondary/* ./name/

(4) Restart NameNode

[wangxin@hadoop102 hadoop-3.1.3]$ hdfs --daemon start namenode

2) Use the - importCheckpoint option to start the NameNode daemon to copy the data in the SecondaryNameNode to the NameNode directory.

(1) Modify HDFS site In XML

<property>

<name>dfs.namenode.checkpoint.period</name>

<value>120</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>/opt/module/hadoop-3.1.3/data/dfs/name</value>

</property>

(2) kill -9 NameNode process

(3) Delete the data stored in NameNode (/ opt/module/hadoop-3.1.3/data/dfs/name)

[wangxin@hadoop102 hadoop-3.1.3]$ rm -rf /opt/module/hadoop-3.1.3/data/dfs/name/*

(4) If the SecondaryNameNode is not on the same host node as the NameNode, you need to copy the directory in which the SecondaryNameNode stores data to the same level directory in which the NameNode stores data, and delete in_use.lock file

[wangxin@hadoop102 dfs]$ scp -r wangxin@hadoop104:/opt/module/hadoop-3.1.3/data/dfs/namesecondary ./ [wangxin@hadoop102 namesecondary]$ rm -rf in_use.lock [wangxin@hadoop102 dfs]$ pwd /opt/module/hadoop-3.1.3/data/dfs [wangxin@hadoop102 dfs]$ ls data name namesecondary

(5) Import checkpoint data (wait for a while and ctrl+c ends)

[wangxin@hadoop102 hadoop-3.1.3]$ bin/hdfs namenode -importCheckpoint

(6) Start NameNode

[wangxin@hadoop102 hadoop-3.1.3]$ hdfs --daemon start namenode

5.5.5 cluster security mode

1. NameNode startup

When NameNode starts, first load the image file (Fsimage) into memory and perform various operations in the edit log (Edits). Once the image of the file system metadata is successfully created in memory, an empty edit log is created. At this point, NameNode starts listening to DataNode requests. During this process, NameNode has been running in safe mode, that is, the file system of NameNode is read-only to the client.

2. DataNode startup

The location of data blocks in the system is not maintained by NameNode, but stored in DataNode in the form of block list. During the normal operation of the system, NameNode will keep the mapping information of all block positions in memory. In the safe mode, each DataNode will send the latest block list information to the NameNode. After the NameNode knows enough block location information, it can run the file system efficiently.

3. Safe mode exit judgment

If the minimum replica condition is met, the NameNode exits safe mode after 30 seconds. The so-called minimum replica condition means that 99.9% of the blocks in the entire file system meet the minimum replica level (default: dfs.replication.min=1). When starting a newly formatted HDFS cluster, because there are no blocks in the system, the NameNode will not enter safe mode.

1) Basic grammar

The cluster is in safe mode and cannot perform important operations (write operations). After the cluster is started, it will automatically exit the safe mode.

(1)bin/hdfs dfsadmin -safemode get (Function Description: View safe mode status) (2)bin/hdfs dfsadmin -safemode enter (Function Description: enter safe mode state) (3)bin/hdfs dfsadmin -safemode leave (Function Description: leave safe mode state) (4)bin/hdfs dfsadmin -safemode wait (Function Description: wait for safe mode status)

2) Case

Simulate wait safe mode

3) View current mode

[wangxin@hadoop102 hadoop-3.1.3]$ hdfs dfsadmin -safemode get Safe mode is OFF

4) First in security mode

[wangxin@hadoop102 hadoop-3.1.3]$ bin/hdfs dfsadmin -safemode enter

5.5.6 NameNode multi directory configuration

1) The local directory of NameNode can be configured into multiple directories, and the contents of each directory are the same, which increases the reliability

2) The specific configuration is as follows

(1) At HDFS site Add the following content to the XML file

<property>

<name>dfs.namenode.name.dir</name>

<value>file://${hadoop.tmp.dir}/dfs/name1,file://${hadoop.tmp.dir}/dfs/name2</value>

</property>

(2) Stop the cluster and delete all data in the data and logs of the three nodes.

[wangxin@hadoop102 hadoop-3.1.3]$ rm -rf data/ logs/ [wangxin@hadoop103 hadoop-3.1.3]$ rm -rf data/ logs/ [wangxin@hadoop104 hadoop-3.1.3]$ rm -rf data/ logs/

(3) Format the cluster and start it.

[wangxin@hadoop102 hadoop-3.1.3]$ bin/hdfs namenode –format [wangxin@hadoop102 hadoop-3.1.3]$ sbin/start-dfs.sh

(4) View results

[wangxin@hadoop102 dfs]$ ll Total consumption 12 drwx------. 3 wangxin wangxin 4096 12 November 8:03 data drwxrwxr-x. 3 wangxin wangxin 4096 12 November 8:03 name1 drwxrwxr-x. 3 wangxin wangxin 4096 12 November 8:03 name2

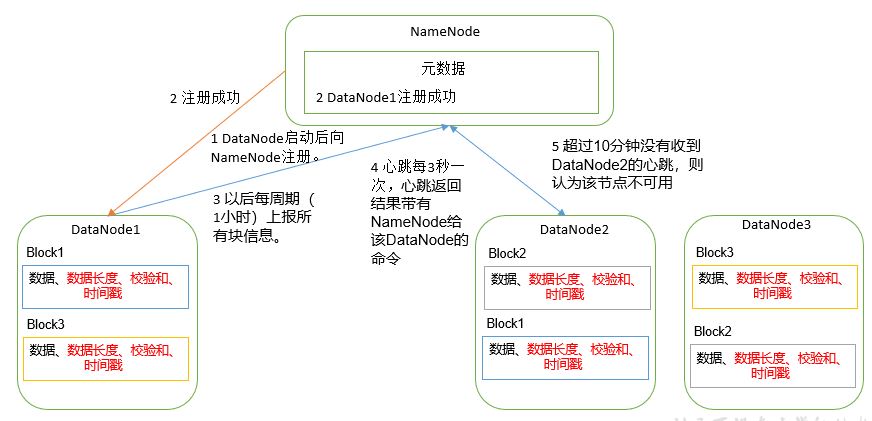

5.6 DataNode

5.6.1 working mechanism of datanode

(1) A data block is stored on the disk in the form of a file on the DataNode, including two files, one is the data itself, and the other is metadata, including the length of the data block, the checksum of the block data, and the timestamp.

(2) After the DataNode is started, it registers with the NameNode. After passing the registration, it periodically reports all block information to the NameNode (1 hour).

(3) The heartbeat occurs every 3 seconds. The result of the heartbeat is accompanied by the command given by the NameNode to the DataNode, such as copying block data to another machine or deleting a data block. If the heartbeat of a DataNode is not received for more than 10 minutes, the node is considered unavailable.

(4) Some machines can be safely joined and exited during cluster operation.

5.6.2 data integrity