Blinn-Phong reflection model practice (web implementation)

GAMES101, fourth assignment

The end result is a texture-mapped Blinn-Phong model that produces a lighting effect.

Completed so far:

- Blinn-Phong reflection model without a texture map

- A model with a texture map, where the texture mapping is applied in the vertex shader

- A model with a texture map, where the texture is mapped in the fragment shader

Blinn-Phong lighting model

There are three kinds of lighting: ambient light, diffuse light and specular highlights, each corresponding to one kind of reflection. When light hits an object's surface, the surface reflects some of it toward the eye, which is how the object becomes visible.

Ambient light can be thought of simply as light that is present everywhere with the same color and intensity; this is not true in reality, but it is a useful approximation. Diffuse reflection is familiar from junior high school physics: once light is diffusely reflected at a point on a surface, that point can be seen from any direction with the same color and intensity. Specular reflection is the highlight seen when light shines on a mirror-like surface. Let L be the light entering the eye, Ld the diffuse reflection, Ls the specular reflection and La the ambient reflection. Then

\begin{equation}

L = L_a + L_d + L_s

\end{equation}

Ambient reflection

Because the intensity of ambient light is the same everywhere, the formula is

$L_a = k_a \times I_a$

ka is the ambient coefficient, which can be a color, and Ia is the ambient light intensity, which is the same at every point.

The figure shows the result of using only ambient light and the texture map. Ia is set to 0.39 and ka is set to vec3(1.0, 1.0, 1.0).

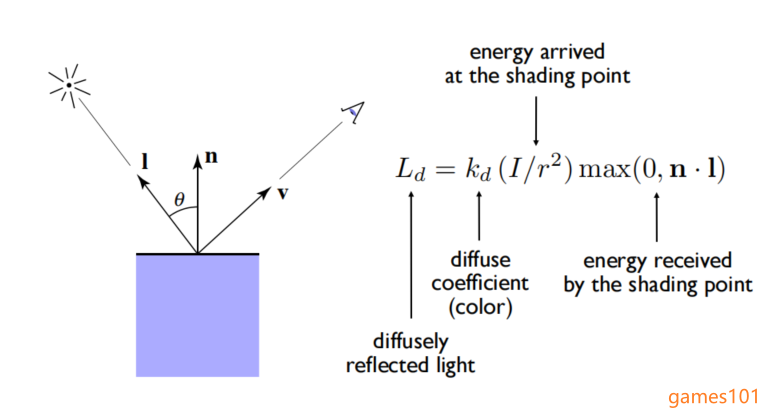

Diffuse reflection

As junior high school physics teaches, diffusely reflected light has the same intensity in every direction. Here that means the diffuse intensity at a reflecting point is the same from any viewing angle.

As the figure below shows, I is the original light intensity. In three-dimensional space light spreads outward as a sphere, so its attenuation depends on the sphere's surface area: if the distance between the light and a point on the surface is r, the intensity arriving at that point is I/r^2. Light striking a surface head-on also delivers more energy than light arriving at a grazing angle; the intensity is greatest head-on, and the effect of the incidence angle at the current point is n·l. If n·l is negative, the light is behind the surface and contributes nothing, so the value is clamped to zero. This gives the formula shown in the following picture, $L_d = k_d \, (I / r^2) \, \max(0, n \cdot l)$.

Ld is the diffuse reflection intensity, kd is the diffuse coefficient (which can be a color), I is the initial light intensity, r is the distance between the light source and the surface point, n is the normal vector at the point, and l is the direction vector from the point to the light source. Ambient and diffuse reflection are then added to the program.

Compared with ambient reflection alone, the contours of the cow are now much more visible.

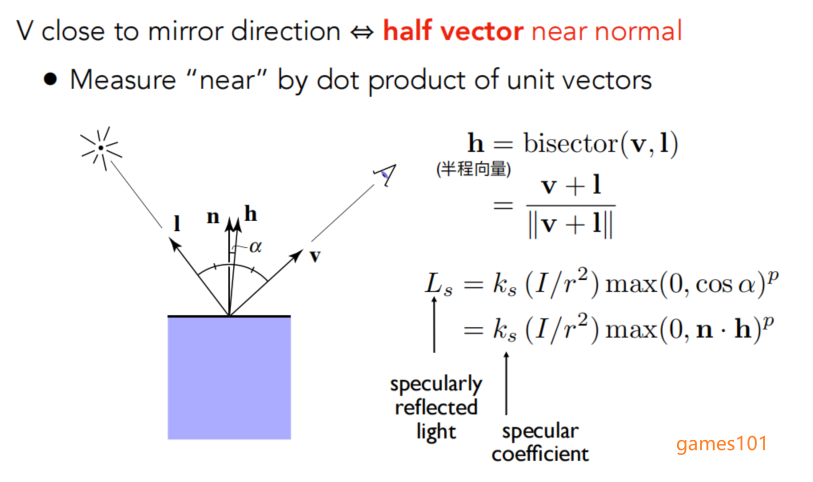
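To make the diffuse formula concrete, here is a minimal CPU-side sketch in plain JavaScript. It is only an illustration of the formula as stated in the text (with the 1/r^2 falloff), not the shader used for the images; the helper names `sub`, `dot`, `length`, `normalize` and `diffuse` are made up for this example.

```javascript
// Minimal 3-component vector helpers on plain arrays (no library).
const sub = (a, b) => [a[0] - b[0], a[1] - b[1], a[2] - b[2]];
const dot = (a, b) => a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
const length = (a) => Math.sqrt(dot(a, a));
const normalize = (a) => {
  const len = length(a);
  return [a[0] / len, a[1] / len, a[2] / len];
};

// Diffuse term: L_d = k_d * (I / r^2) * max(0, n . l)
// kd: RGB coefficient, intensity: scalar I,
// lightPos / point: positions, n: unit normal at the point.
function diffuse(kd, intensity, lightPos, point, n) {
  const toLight = sub(lightPos, point);
  const r = length(toLight);          // distance to the light
  const l = normalize(toLight);       // unit direction to the light
  const factor = (intensity / (r * r)) * Math.max(0, dot(n, l));
  return kd.map((c) => c * factor);
}
```

With a light two units directly above the point and I = 4, the falloff I/r^2 is 1 and n·l is 1, so `diffuse([1, 0.5, 0.25], 4, [0, 0, 2], [0, 0, 0], [0, 0, 1])` returns the coefficient unchanged. Flipping the normal to `[0, 0, -1]` makes n·l negative, and the clamp returns black.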

Specular reflection

Specular reflection is the most complex and most important part. The reflected intensity is greatest along the mirror direction, where the reflection angle equals the incident angle. Several variables are defined as shown in the figure below: l is the direction vector from the point to the light, n is the normal vector at the point, and v is the direction vector from the point to the viewer. The larger the angle between v and the mirror-reflected ray, the more light is lost, and that angle can be replaced by the angle between n and h.

h is called the half vector; it is the direction of the angle bisector of l and v, that is, $h = (l + v) / |l + v|$. The falloff caused by the angle between n and h is n·h, and the remaining factors match diffuse reflection: I/r^2 is the attenuation with distance and ks is the specular coefficient. In the shader code below, max(0, n·h) is additionally raised to a power p (30.0), which narrows the highlight.

Combining the three reflections gives the Blinn-Phong reflection model, which shows a clear highlight compared with the previous two results.
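The half vector and the specular term can be sketched on the CPU in the same way. This is an illustrative sketch under the definitions above, using the 1/r^2 falloff from the text and an exponent p like the one in the shaders; `halfVector` and `specular` are hypothetical names, and the case where l and v point in exactly opposite directions (zero-length sum) is not handled.

```javascript
// Minimal 3-component vector helpers on plain arrays (no library).
const add = (a, b) => [a[0] + b[0], a[1] + b[1], a[2] + b[2]];
const dot = (a, b) => a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
const normalize = (a) => {
  const len = Math.sqrt(dot(a, a));
  return [a[0] / len, a[1] / len, a[2] / len];
};

// Half vector: unit bisector of l (to light) and v (to viewer).
function halfVector(l, v) {
  return normalize(add(l, v));
}

// Specular term: L_s = k_s * (I / r^2) * max(0, n . h)^p
// ks: RGB coefficient, intensity: scalar I, r: distance to the light,
// n, l, v: unit vectors, p: shininess exponent.
function specular(ks, intensity, r, n, l, v, p) {
  const h = halfVector(l, v);
  const factor = (intensity / (r * r)) * Math.pow(Math.max(0, dot(n, h)), p);
  return ks.map((c) => c * factor);
}
```

When v is the mirror image of l about n, h coincides with n and the highlight is at full strength. As v moves away from the mirror direction, n·h drops below 1 and the power p makes the contribution fall off very quickly, which is why a large p gives a small, sharp highlight.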

Vertex shader and fragment shader

The vertex shader runs once per vertex and processes that vertex's attributes, so at this stage the work is done on the triangle's vertices. WebGL handles loading the shaders. A varying variable declared in the vertex shader can be written there and read in the fragment shader, where it arrives interpolated across the triangle:

- If vertex coordinates are passed, the fragment shader receives the interpolated coordinates of each pixel inside the triangle

- If normal vectors are passed, the fragment shader receives the interpolated normal inside the triangle

- If vertex colors are passed, the fragment shader receives the interpolated color

- Any other value behaves the same way: it is interpolated and arrives in the fragment shader as a per-pixel value
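The interpolation described in the list above can be illustrated with barycentric weights on a 2D triangle. This is a simplified sketch of the idea, not what the GPU literally runs: real hardware also applies perspective correction, which is ignored here, and the names `barycentric` and `interpolate` are invented for the example.

```javascript
// Barycentric weights of point p with respect to the 2D triangle (a, b, c).
// Each weight is the signed area of the sub-triangle opposite a vertex,
// divided by the signed area of the whole triangle.
function barycentric(p, a, b, c) {
  const area = (b[0] - a[0]) * (c[1] - a[1]) - (c[0] - a[0]) * (b[1] - a[1]);
  const wa = ((b[0] - p[0]) * (c[1] - p[1]) - (c[0] - p[0]) * (b[1] - p[1])) / area;
  const wb = ((c[0] - p[0]) * (a[1] - p[1]) - (a[0] - p[0]) * (c[1] - p[1])) / area;
  return [wa, wb, 1 - wa - wb];
}

// Blend one per-vertex attribute (color, normal, uv, ...) with the weights.
function interpolate(weights, attrA, attrB, attrC) {
  return attrA.map(
    (_, i) => weights[0] * attrA[i] + weights[1] * attrB[i] + weights[2] * attrC[i]
  );
}

// Example: red, green and blue vertex colors blended at an interior pixel.
const w = barycentric([0.25, 0.25], [0, 0], [1, 0], [0, 1]);
const color = interpolate(w, [1, 0, 0], [0, 1, 0], [0, 0, 1]);
```

For this example the weights come out as 0.5, 0.25 and 0.25, so the pixel gets the color (0.5, 0.25, 0.25). The same blending applies to any varying, which is why a normal passed from the vertex shader arrives in the fragment shader already interpolated (and usually needs renormalizing before use).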

Reflection computed per vertex

```javascript
// Blinn-Phong reflection with a custom color, computed per vertex
const vertexShader = `
  // three.js supplies the attributes: vec3 position, vec3 normal, vec2 uv
  varying vec3 vColor;

  void main() {
    // Ld: diffuse reflection
    vec3 lightPoint = vec3(3.0, 4.0, -3.0);
    vec3 l = normalize(lightPoint - position);
    float radius1 = distance(lightPoint, position);
    // kd
    vec3 LdColor = vec3(1.0, 0.5, 0.7);
    float Id = 1.0;
    // Note: the falloff used here is Id / r, not the I / r^2 of the formula
    vec3 Ld = LdColor * (Id / radius1) * max(0.0, dot(normal, l));

    // La: ambient light
    vec3 LaColor = vec3(1.0, 1.0, 1.0);
    float Ia = 0.2;
    // vec3 La = LaColor * Ia;
    // Use the object's own color instead
    vec3 La = LdColor * Ia;

    // Ls: specular light
    vec3 LsColor = vec3(1.0, 1.0, 1.0);
    float Is = 1.0;
    vec3 vs = normalize(cameraPosition - position); // direction to the viewer
    vec3 hs = normalize(vs + l);                    // half vector
    float p = 30.0;                                 // shininess exponent
    vec3 Ls = LsColor * (Is / radius1) * pow(max(0.0, dot(normal, hs)), p);

    // The color is computed once per vertex and interpolated across the triangle
    vColor = La + Ld + Ls;
    gl_Position = projectionMatrix * modelViewMatrix * vec4(position, 1.0);
  }
`;

const fragmentShader = `
  varying vec3 vColor;

  void main() {
    // Output the interpolated per-vertex color unchanged
    gl_FragColor = vec4(vColor, 1.0);
  }
`;
```

Reflection computed per fragment

```javascript
// Normal map: the vertex shader only forwards position, normal and uv
const vertexShader3 = `
  // three.js supplies the attributes: vec3 position, vec3 normal, vec2 uv
  varying vec3 vPosition;
  varying vec3 vNormal;
  varying vec2 vUv;

  void main() {
    vPosition = position;
    vUv = uv;
    vNormal = normal;
    gl_Position = projectionMatrix * modelViewMatrix * vec4(position, 1.0);
  }
`;

// Normal mapping and lighting, evaluated per fragment
const fragmentShader3 = `
  uniform sampler2D cowTexture;
  uniform sampler2D cowNormalTexture;
  varying vec3 vPosition;
  varying vec3 vNormal;
  varying vec2 vUv;

  void main() {
    vec4 map1 = texture2D(cowTexture, vUv);
    vec3 selfColor = map1.rgb;

    // The normal map changes the normal (unfinished: the samples below are not used yet)
    // Neighboring texel coordinates, one step along u and v in a 1024-texel texture
    float uvAfterU = (vUv.x * 1024.0 + 1.0) / 1024.0;
    float uvAfterV = (vUv.y * 1024.0 + 1.0) / 1024.0;
    float c1 = 1.0;
    float c2 = 1.0;
    vec4 currentDepth = texture2D(cowNormalTexture, vUv);
    vec4 currentDepthU = texture2D(cowNormalTexture, vec2(uvAfterU, vUv.y));
    vec4 currentDepthV = texture2D(cowNormalTexture, vec2(vUv.x, uvAfterV));
    // float dp_u = c1 *
    if (uvAfterU <= 1.0 && uvAfterV <= 1.0) {
    }

    // Ld: diffuse reflection
    vec3 lightPoint = vec3(3.0, 4.0, -3.0);
    vec3 l = normalize(lightPoint - vPosition);
    float radius1 = distance(lightPoint, vPosition);
    // kd (unused: the texture color selfColor is used instead)
    vec3 LdColor = vec3(1.0, 1.0, 1.0);
    float Id = 1.0;
    // Note: the falloff used here is Id / r, not the I / r^2 of the formula
    vec3 Ld = selfColor * (Id / radius1) * max(0.0, dot(vNormal, l));

    // La: ambient light
    vec3 LaColor = vec3(1.0, 1.0, 1.0);
    float Ia = 0.39;
    // vec3 La = LaColor * Ia;
    // Use the object's own color instead
    vec3 La = selfColor * Ia;

    // Ls: specular light
    vec3 LsColor = vec3(1.0, 1.0, 1.0);
    float Is = 1.0;
    vec3 vs = normalize(cameraPosition - vPosition); // direction to the viewer
    vec3 hs = normalize(vs + l);                     // half vector
    float p = 30.0;                                  // shininess exponent
    vec3 Ls = selfColor * (Is / radius1) * pow(max(0.0, dot(vNormal, hs)), p);

    // Overall color
    vec3 vColor = La + Ld + Ls;
    // vec3 vColor = La + Ld;
    // vec3 vColor = vec3(1.0, 0.6, 0.8);
    gl_FragColor = vec4(vColor, 1.0);
  }
`;
```
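The post does not show how the two textures reach the fragment shader, so the following is only a guess at the wiring, assuming the shaders are used with three.js's `ShaderMaterial`. The helper `makeCowMaterialParams` and the texture file names in the comment are hypothetical; the `{ value: ... }` uniform shape is the one `ShaderMaterial` expects.

```javascript
// Builds the parameter object accepted by the THREE.ShaderMaterial constructor.
// The uniform names must match the "uniform sampler2D" declarations in the shader.
function makeCowMaterialParams(vertexShader, fragmentShader, cowTexture, cowNormalTexture) {
  return {
    uniforms: {
      cowTexture: { value: cowTexture },
      cowNormalTexture: { value: cowNormalTexture },
    },
    vertexShader,
    fragmentShader,
  };
}

// Possible usage inside a three.js scene (commented out so the sketch
// runs without the library; the file paths are placeholders):
// const loader = new THREE.TextureLoader();
// const material = new THREE.ShaderMaterial(
//   makeCowMaterialParams(
//     vertexShader3,
//     fragmentShader3,
//     loader.load("cow.png"),
//     loader.load("cow_normal.png")
//   )
// );
// const mesh = new THREE.Mesh(cowGeometry, material); // cowGeometry: the loaded model
```

Keeping the parameter object separate from the `THREE` calls makes it easy to check that every sampler declared in the shader has a matching uniform entry.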

Earlier exercises did all the computation on the CPU and rendered with the native canvas API, which was too slow. The later experiments therefore use the three.js framework to speed things up.